Abstract

Quantum computers provide a valuable resource to solve computational problems. The maximization of the objective function of a computational problem is a crucial problem in gate-model quantum computers. The objective function estimation is a high-cost procedure that requires several rounds of quantum computations and measurements. Here, we define a method for objective function estimation of arbitrary computational problems in gate-model quantum computers. The proposed solution significantly reduces the costs of the objective function estimation and provides an optimized estimate of the state of the quantum computer for solving optimization problems.


Introduction

Quantum computers exploit the fundamentals of quantum mechanics to solve computational problems more efficiently than traditional computers [1–20]. Quantum computers can solve computational problems by exploiting the phenomena of quantum superposition and quantum entanglement [5,7–9,18–57]. In a quantum computer, computations are performed on quantum states that carry the information. Gate-model [5,13–18,21,25,43] quantum computations provide a flexible framework for the realization of quantum computations in practice. In a gate-model quantum computer, computations are realized by quantum gates (unitary operators), and the quantum-gate architecture integrates a different number of levels and application rounds [5] to realize gate-model quantum computations [5,18,21,25,36–39,43,58–61]. The output quantum state of the quantum computer is measured by a physical measurement apparatus [62–68] that produces a classical string. In gate-model quantum computers, the quantum states are represented by qubits, the unitaries are realized by qubit gates, and the measurement apparatus is designed for the measurement of qubit systems [13–17,19,69–74]. Another fundamental application scenario of gate-model quantum computations is the small and medium-scale near-term quantum devices of the quantum Internet [69–128].

An important application scenario of gate-model quantum computers is the maximization of the objective function of computational problems [5,18,21,25,43]. The quantum computer produces a quantum state that yields a high value of the objective function. (The objective function to be maximized is the objective function of an arbitrary computational problem fed into the quantum computer; examples can be found in [9,24].) The output state of the quantum computer is measured in a computational basis, and from the measurement result, a classical objective function is evaluated. To get a high-precision estimate of the objective function of the quantum computer, the measurements have to be repeated several times in the physical layer. In each measurement round, a given number of measurement units are applied to measure the output state of the quantum computer. This state represents an objective function value via the quantum-gate attributes in the gate structure of the quantum computer. The objective function values obtained in the measurement rounds are averaged to estimate the objective function of the quantum computer. Since each round requires the preparation of a new quantum state and the application of a high number of measurement units, a high-precision approximation of the objective function value of the quantum computer is a costly procedure. The resource requirements include not only the preparation of the initial and final states of the quantum computer and the application of the unitaries in several rounds, but also the physical apparatus required to measure the output state of the quantum computer. The procedure of the objective function estimation in gate-model quantum computers is therefore a subject of optimization.

Here, we propose a method for the optimized objective function estimation of the quantum computer and for the optimized preparation of the new quantum state of the quantum computer. (The term “quantum state of the quantum computer” refers to the actual gate parameter values of the unitaries of the quantum computer [5]. Preparation of the target quantum state of the quantum computer refers to the determination of the target gate parameters of the unitaries of the quantum computer.) The framework integrates an objective function extension procedure, a quantum-gate structure segmentation stage, and a machine-learning [11,12,19,50,129–135] unit called quantum-gate parameter randomization machine learning (QGPR-ML), which outputs the prediction of the new quantum computer state. The aim of the objective function extension is to increase the precision of the objective function estimation procedure. An imaginary measurement round refers to a logical measurement round yielded by the post-processing. An imaginary measurement round requires no physical-layer measurement round, since it results from logical-layer procedures and methods in the post-processing stage. The imaginary measurement round also characterizes the performance of the framework. At a particular number of imaginary rounds, the post-processed objective function becomes equal to an objective function yielded from the same number of “real” (i.e., physically implemented) measurement rounds. An initial objective function is calculated from an arbitrarily low number of physical measurement rounds, which is then fed into the objective function extension algorithm of the framework. The extended objective function is then fed into a segmentation procedure that decomposes the quantum-gate structure of the quantum computer with respect to the properties of the quantum gates in the quantum circuit. The gate-based segmentation is rooted in the fact that the gate structure unitaries of the quantum computer determine the objective function and therefore the particular output state of the quantum computer. The results are then forwarded into the QGPR-ML block, which performs a randomization and rule-learning stage. The aim of the randomization is to construct an optimal set for the learning set and test set selections in rule learning. The rule-learning method outputs a set of optimal rules learned from the input. Finally, a prediction stage is applied to the results to determine a new state of the quantum computer for the next iterations.

The novel contributions of our manuscript are as follows:

1. We define a method for objective function estimation for arbitrary computational problems in gate-model quantum computers.

2. The method reduces the costs of quantum state preparations, quantum computational steps and measurements. The proposed algorithms utilize the measurement results and increase the precision of objective function estimation and maximization via computational steps.

3. The results are convenient for solving optimization problems in experimental gate-model quantum computers and for the near-term quantum devices of the quantum Internet.

This paper is organized as follows. In the “Related works” section, the related works are discussed. In the “System model and problem statement” section, the machine-learning-based objective function optimization framework is proposed. In the “Objective function extension and gate structure decomposition” section, the procedures of the framework are discussed. In Section 5, we study the learning model and the quantum computer state prediction method. A performance evaluation is given in the “Performance evaluation” section. Finally, the “Conclusion” section concludes the paper. Supplemental material is included in the Appendix.

Related works

The related works are summarized as follows.

On the utilized gate-model quantum computer environment, see [5,18] and [36,38].

In [5], the authors studied the problem of objective function estimation of computational problems fed into the quantum computer. The authors focused on a qubit system with a fixed hardware structure in the physical layer. The input quantum system of the quantum circuit is transformed via a sequence of unitaries, and the qubits of the output quantum system are measured by a measurement array. The result of the measurement produces a classical bitstring that is processed further to estimate the objective function of the quantum computer.

Examples of objective functions for quantum computers can be found in [9].

A quantum circuit design method for gate-model quantum computers has been defined in [36]. In [37], a method has been defined for the stabilization of the optimal quantum state of the quantum computer.

A method for the evaluation of objective function connectivity in gate-model quantum computers has been proposed in [33]. An unsupervised machine learning method for quantum gate control in gate-model quantum computers has been defined in [34]. In [35], a framework has been defined for the circuit depth reduction of gate-model quantum computers.

The technique of dense quantum measurement has been defined in [38]. As has been proven there, the method can significantly reduce the number of physical measurement rounds in a gate-model quantum computer environment. In [39], a training optimization method has been defined for gate-model quantum neural networks.

For some related works on quantum machine learning, see [12,13,43,46,136–143]. For a detailed summary of these references, we also suggest [39].

Optimization algorithms have also proved useful in various applications. In [144], the authors proposed a neural network ensemble procedure. The aim of the optimization process is to improve the quality of the neural-network-based prediction intervals. The prediction intervals are used to quantify uncertainties and disturbances in neural-network-based forecasting. The optimization model utilizes the fundamentals of simulated annealing and genetic algorithms.

An overview of experimental optimization approaches was given in [145]. In this work, the authors provide an overview of recent developments in fault diagnosis and nature-inspired optimal control of industrial process applications. The fields of fault detection and optimal control have produced various successful theoretical results and industrial applications. This work also contains a review of the recent results in machine learning, data mining, and soft computing techniques connected to the particular research fields.

In [146], the authors studied the problem of training echo state networks (ESNs), which are a special form of recurrent neural networks (RNNs). As an important attribute, the ESN structures can be used for black-box modeling of nonlinear dynamical systems. The authors defined a training method that uses a harmony search algorithm, and analyzed the performance of their approach.

In [147], the authors defined model-free sliding mode and fuzzy controllers for reverse osmosis desalination plants. The paper defines an optimization problem in terms of process control and fuzzy methods. The authors also studied the performance of their solution.

On genetic algorithms for digital quantum simulations, see [148]. In [149], a method for the learning of an unknown transformation via a genetic approach was defined. In [150], the authors gave an overview of existing approaches to quantum computation.

System model and problem statement

System model

In the modeled scenario, the goal is the maximization of an objective function C via the quantum computer. The aim of the quantum computer run is to produce a quantum state \({\left| \theta \right\rangle } \) dominated by computational basis states with a high value of an objective function C [5,18] of a computational problem. The quantum computer has a total of \(N_{tot} \) quantum gates (unitaries) that form a QG (quantum gate) structure. Using the \(N_{tot} \) unitaries \(U_{1} ,\ldots ,U_{N_{tot} } \), the QG structure of the quantum computer produces an output quantum state \({\left| \theta \right\rangle } \) as [5]

\[ {\left| \theta \right\rangle } =U_{N_{tot} } \left( \theta _{N_{tot} } \right) U_{N_{tot} -1} \left( \theta _{N_{tot} -1} \right) \cdots U_{1} \left( \theta _{1} \right) {\left| \psi _{0} \right\rangle } , \tag{1} \]

where \({\left| \psi _{0} \right\rangle } \) is an initial state and \(\theta \) is the gate-parameter vector

\[ \theta =\left( \theta _{1} ,\ldots ,\theta _{N_{tot} } \right) ^{T} . \tag{2} \]

The aim is to select the \(\theta \) parameter vector such that the expected value of C is maximized; thus, the value of quantum objective function

\[ f\left( \theta \right) ={\left\langle \theta \right| } C{\left| \theta \right\rangle } \tag{3} \]

is high [5].

A unitary \(U_{j} \left( \theta _{j} \right) \) can be written as [5]

where \(B_{j} \) is a set of Pauli operators associated with the jth unitary \(U_{j} \) of the quantum computer, \(j=1,\ldots ,N_{tot} \), while \(\varphi _{j} \) is a continuous parameter, \(\varphi _{j} \ge 0\), referred to as the gate parameter of unitary \(U_{j}\).
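The relation between gate parameters, output state and objective function can be illustrated numerically. The following is a minimal single-qubit sketch, not the authors' implementation. It assumes that each unitary has the form \(\exp \left( -i\varphi _{j} B_{j} \right) \) with \(B_{j} \) a single Pauli matrix, uses the identity \(\exp \left( -i\varphi P\right) =\cos \varphi \, I-i\sin \varphi \, P\) that holds for any Pauli matrix P, and takes the Pauli-Z operator as a hypothetical objective C. All function names are illustrative.

```python
import math

# Pauli matrices and the identity as 2x2 nested lists.
PAULI_X = [[0, 1], [1, 0]]
PAULI_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]


def pauli_rotation(phi, pauli):
    """Return exp(-i*phi*P) = cos(phi)*I - i*sin(phi)*P for a Pauli matrix P."""
    c, s = math.cos(phi), math.sin(phi)
    return [[c * IDENTITY[r][k] - 1j * s * pauli[r][k] for k in range(2)]
            for r in range(2)]


def apply(gate, state):
    """Multiply a 2x2 gate into a length-2 state vector."""
    return [sum(gate[r][k] * state[k] for k in range(2)) for r in range(2)]


def expectation(observable, state):
    """Return the real expectation value <state|observable|state>."""
    transformed = apply(observable, state)
    return sum(a.conjugate() * b for a, b in zip(state, transformed)).real


def objective(phis, paulis, observable, psi0=(1, 0)):
    """Apply U_1, ..., U_N in order to psi0 and return f(theta)."""
    state = [complex(a) for a in psi0]
    for phi, pauli in zip(phis, paulis):
        state = apply(pauli_rotation(phi, pauli), state)
    return expectation(observable, state)


# One X-rotation with gate parameter pi/8, objective C = Z (assumed example).
f_value = objective([math.pi / 8], [PAULI_X], PAULI_Z)
```

For a single X-rotation acting on the state \({\left| 0 \right\rangle } \), the value returned is \(\cos \left( 2\varphi \right) \), so varying \(\varphi \) directly changes the objective value, which is the dependence the gate-parameter vector is meant to capture.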

Let \(N_{G} \left( U_{j} \right) \) refer to the qubit number associated with gate \(U_{j} \). Then, the \(\varphi _{j} \) parameter of an \(N_{G} \left( U_{j} \right) \)-qubit unitary \(U_{j}\) can be classified with respect to \(N_{G} \left( U_{j} \right) \) as

where \(N_{G} \left( U_{j} \right) =1\) identifies a 1-qubit gate \(U_{j} \) while \(N_{G} \left( U_{j} \right) =N\) refers to an N-qubit gate \(U_{j} \).

Without loss of generality, at a given \(B_{j} \), a particular \(U_{j} \) is approachable via \(\theta _{j} \), where

Therefore, the \({\left| \theta \right\rangle } \) state of the quantum computer depends on the gate parameters of the unitaries of the quantum computer, and (4) can also be written as

where \(\varphi _{j} \) is determined as in (5).

Let \(N\left( \varphi _{j} \right) \) refer to the total number of occurrences of gate parameter value \(\varphi _{j} \) in the quantum computer (i.e., the number of quantum gates with a particular \(N_{G} \) qubit number). Then the state \({\left| \theta \right\rangle } \) of QG (see (1)) is evaluated as

where \({\left| s \right\rangle } ={\textstyle \frac{1}{\sqrt{2^{n} } }} \sum _{z}{\left| z \right\rangle } \), and n is the length of the string z resulting from the physical measurement procedure M [5].

Using (4), the function of (3) can be rewritten as

The schematic model of the objective function optimization framework \({{\mathscr {F}}}\) is depicted in Fig. 1. The notations of the system model are summarized in Table A.1 of the Supplemental Information.

Fig. 1. Framework \({{{\mathscr {F}}}}\) of objective function optimization for gate-model quantum computers. The output \({\left| \theta \right\rangle } \) of the quantum computer is measured by the M measurement that consists of n measurement units and yields string z and the initial estimate \(f^{\left( 0\right) } \left( \theta \right) \). At \(R^{*} \) measurement rounds, the total number of measurements is \(R^{*} n\). From the measured objective function \({\tilde{C}}^{0} \left( z\right) \), algorithm \({{{\mathscr {A}}}}_{E} \) achieves an objective function extension and estimation and outputs \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \), followed by a feature extraction via algorithm \({{{\mathscr {A}}}}_{D} \). The QGPR-ML block is decomposed into a randomizing method \({{{\mathscr {A}}}}_{f} \) applied L times (depicted by \({{{\mathscr {A}}}}_{f}^{L} \)) and the \({{{\mathscr {R}}}}\) rule-generation method. The output of the QGPR-ML block is the \({{{\mathscr {P}}}}\left( \theta \right) \) prediction of the new value \({{\theta }^{*}}\) of \(\theta \).

Problem statement

To get an estimate \(f^{\left( 0\right) } \left( \theta \right) \) of function \(f\left( \theta \right) \), a measurement M is required that yields the n-length string z, from which \(C\left( z\right) \) is calculated. Since R measurement rounds, with n measurements in each round, are required to get an average objective function \({\tilde{C}}\left( z\right) \),

\[ {\tilde{C}}\left( z\right) =\frac{1}{R} \sum _{i=0}^{R-1} C^{\left( i\right) } \left( z\right) , \]

where \(C^{\left( i\right) } \left( z\right) \), \(i=0,\ldots ,R-1\), is the objective function determined in the ith round and z is the n-length string resulting from the measurement of state \({\left| \theta \right\rangle } \) of the quantum computer, it follows that the \(\left| M\right| \) total number of required measurements to get the estimate \(f^{\left( 0\right) } \left( \theta \right) \) at R rounds is

\[ \left| M\right| =nR. \]

The problem connected to the objective function estimation is summarized in Problem 1.

Since each step of Problem 1 is a high-cost procedure, at a given R, the cost of the determination of the estimate \(f^{\left( 0\right) } \left( \theta \right) \) is high. Here, we show that by setting an arbitrarily low number R for the number of physical-layer measurement rounds, an arbitrarily high-precision estimate \(f^{\left( 0\right) } \left( \theta \right) \) can be produced by a well-constructed post-processing stage. Setting \(R=1\) represents the situation in which only one measurement round is required. The post-processing is referred to as optimization framework \({{{\mathscr {F}}}}\). The results indicate that the number of physical-layer measurements and the number of rounds required by the quantum computer to produce the output quantum state can be significantly decreased by a well-defined post-processing. However, after the R measurement rounds are completed, another problem exists, connected to the determination of the new output quantum state \(\left| {{\theta }^{*}} \right\rangle \) and summarized in Problem 2.
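The cost structure of the plain (non-post-processed) estimation can be made concrete with a small simulation. The sketch below is illustrative only: it assumes a hypothetical classical objective `count_ones` (the number of 1 bits in z) and samples measurement outcomes from the uniform distribution that corresponds to the state \({\left| s \right\rangle } \); neither choice is taken from the paper. It averages the per-round objective values over R rounds and reports the total number of measurements as n times R.

```python
import random


def sample_bitstring(probabilities, n, rng):
    """Draw one n-bit outcome z from a distribution over the 2**n basis states."""
    index = rng.choices(range(2 ** n), weights=probabilities, k=1)[0]
    return format(index, "0{}b".format(n))


def count_ones(z):
    """Hypothetical classical objective C(z): the number of 1 bits in z."""
    return z.count("1")


def estimate_objective(probabilities, n, rounds, objective, seed=0):
    """Average C(z) over `rounds` rounds; also return the measurement count n * rounds."""
    rng = random.Random(seed)
    values = [objective(sample_bitstring(probabilities, n, rng))
              for _ in range(rounds)]
    return sum(values) / rounds, n * rounds


n = 3
uniform = [1 / 2 ** n] * 2 ** n  # outcome distribution of the uniform superposition
estimate, total_measurements = estimate_objective(
    uniform, n, rounds=200, objective=count_ones)
```

Every additional round in this loop stands for a fresh state preparation plus n physical measurements, which is the cost the post-processing framework aims to avoid.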

For the solution of Problem 1, we propose algorithm \({{\mathscr {A}}}_{E} \) in the objective function optimization framework \({{{\mathscr {F}}}}\). For the solution of Problem 2, we propose the QGPR-ML procedure in \({{{\mathscr {F}}}}\), which yields the \({{{\mathscr {P}}}}\left( \theta \right) \) prediction for the selection of the new value of \(\theta \) for the quantum computer. Since the solution of Problem 1 also eliminates the relevance of Sub-problem 2 of Problem 2, only Sub-problem 1 of Problem 2 remains a challenge.

Optimization problems and problem resolutions

The optimization problems connected to the problem resolution are as follows.

1. Define a post-processing framework \({{{\mathscr {F}}}}\) to determine the new optimal state of the quantum computer from the measurement results and the parameters of the gate structure of the quantum computer. The problem is resolved via the framework \({{{\mathscr {F}}}}\), \({{{\mathscr {F}}}}:\left\{ {{{\mathscr {A}}}}_{E} ,{{{\mathscr {A}}}}_{D} ,{{{\mathscr {A}}}}_{f}^{L} ,{{\mathscr {R}}},{{{\mathscr {P}}}}\right\} \), that integrates data extension \({{{\mathscr {A}}}}_{E} \), data analytics \({{{\mathscr {A}}}}_{D} \), feature extraction and classification \({{{\mathscr {A}}}}_{f}^{L} \), learning rule generation \({{{\mathscr {R}}}}\) and predictive analytics \({{{\mathscr {P}}}}\).

2. At a given number of \(R^{*} \) physical measurement rounds, determine the \({\tilde{C}}\left( z\right) \) objective function that can be estimated after \(\kappa ^{2} R^{*} \) physical measurement rounds if no post-processing is applied, where \(\kappa \ge 1\) is a scaling coefficient. The number \(R^{*} \) of physical measurement rounds cannot be increased; only the measurement results and the available system parameterization of the quantum computer can be used. This optimization problem is resolved via algorithm \({{\mathscr {A}}}_{E} \) within \({{{\mathscr {F}}}}\).

3. Determine the \(\theta ^{*} \) novel gate-parameter vector via predictive analytics to set the \({\left| \theta ^{*} \right\rangle } \) new state of the quantum computer. This optimization problem is resolved via algorithms \({{{\mathscr {A}}}}_{D} ,{{\mathscr {A}}}_{f}^{L} ,{{{\mathscr {R}}}}\) and \({{{\mathscr {P}}}}\) within \({{{\mathscr {F}}}}\).

Objective function optimization framework

Proposition 1

\({{{\mathscr {F}}}}\) is a machine-learning-based objective function optimization framework that determines \(f\left( \theta \right) \) and a new state \({\left| {{\theta }^{*}} \right\rangle } \) of the quantum computer.

Proof

The input and output and the steps of the proposed machine-learning-based objective function optimization framework \({{{\mathscr {F}}}}\) are described in Procedure 1. The related algorithms and procedures are detailed in the next sections.

The optimization framework therefore yields Output 1 via Step 1 and Output 2 via Step 4 as follows.

Output 1 is the estimate \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \) of \(f\left( \theta \right) \) as

where \({\tilde{C}}\left( z\right) \) is the averaged objective function

where \(R^{\left( \kappa \right) } \) is the number of “imaginary” measurement rounds of the post-processing,

\[ R^{\left( \kappa \right) } =\kappa ^{2} R^{*} , \tag{16} \]

where \(\kappa \) is a scaling coefficient, defined as

while \(R^{*} \) is the total number of physical measurement rounds, \(R^{\left( \kappa \right) } \ge R^{*} \), and \(C^{\left( i\right) } \left( z\right) \) refers to the objective function of the ith round, \(i=0,\ldots ,R^{\left( \kappa \right) } -1\).

Output 2 is the \({{{\mathscr {P}}}}\left( \theta \right) \) prediction for the selection of the new value of \(\theta \) to produce new state \({\left| \theta \right\rangle } \) via the quantum computer.

In the \({{{\mathscr {A}}}}_{D} \) segmentation stage, the QG quantum circuit of the quantum computer is simplified while preserving the important characteristics of the state of the quantum computer. The segmented values are fed into the QGPR-ML block. The features, such as the objective function values, are computed from the segmented gate parameters. The classification of the \({\left| \theta \right\rangle } \) state of the quantum computer is based on the segmented quantum-gate structure. The output of the QGPR-ML block is a new value of \(\theta \).

The algorithms (\({{{\mathscr {A}}}}_{E} \), \({{{\mathscr {A}}}}_{D} \), \({{{\mathscr {A}}}}_{f}^{L} \), \({{{\mathscr {R}}}}\), \({{{\mathscr {P}}}}\)) defined within \({{{\mathscr {F}}}}\) are convergent and operate in an iterative manner such that the outputs converge to specific values. The output of \({{{\mathscr {F}}}}\) at a given initial \(\theta \) gate-parameter vector (see (2)) converges to the \(\theta ^{*} \) global optimum gate-parameter vector that maximizes the objective function of the quantum computer. \(\square \)

Objective function extension and gate structure decomposition

The post-processing framework \({{{\mathscr {F}}}}\) is applied to the results of the M measurement procedure that measures the \({\left| \theta \right\rangle } \) state produced by the quantum computer. First, the \({{{\mathscr {A}}}}_{E} \) objective function extension algorithm is applied, followed by the \({{{\mathscr {A}}}}_{D} \) decomposition algorithm. The results are then forwarded to the QGPR-ML machine-learning unit to predict the new state of the quantum computer.

Objective function extension

Theorem 1

The objective function of the quantum computer can be extended by the \({{{\mathscr {A}}}}_{E} \) objective function extension algorithm of \({{{\mathscr {F}}}}\).

Proof

Let \(C^{0} \left( z\right) \) refer to the cumulative objective function resulting from the physical measurement M at \(R^{*} \) rounds and n measurements in each round as

where \(C^{0} \left( x,y\right) \) identifies a component of \(C^{0} \left( z\right) \) obtainable by the measurement of the yth qubit, \(y=0,\ldots ,n-1\), in the xth measurement round, \(x=0,\ldots ,R^{*} -1\).

The \(d_{C^{0} \left( z\right) }\) dimension of \(C^{0} \left( z\right) \) (the \({{d}_{X}}\) dimension of X refers to the product of the measurement rounds and the measured quantum states per measurement round required for the evaluation of X) is

\[ d_{C^{0} \left( z\right) } =\left( R^{*} \times n\right) . \tag{19} \]

For the particular \(R^{*} \) physical measurement rounds, set \(R^{\left( \kappa \right) } \) as given in (16) with the \(\kappa \) scaling coefficient.

Since the physical measurement M consists of the measurements of n qubits, \({\tilde{C}}\left( z\right) \) from (15) can be rewritten as

where \(C^{E} \left( z\right) \) is the extended objective function defined as

where \(C\left( x,y\right) \) identifies a component of \(C^{\left( i\right) } \left( z\right) \) obtainable by the measurement of the yth qubit, \(y=0,\ldots ,n-1\), in the xth measurement round, \(x=0,\ldots ,R^{\left( \kappa \right) } -1\), \(d_{C^{\left( i\right) } \left( z\right) } =\left( 1 \times n\right) \).

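The dimension of \(C^{E} \left( z\right) \) is

\[ d_{C^{E} \left( z\right) } =\left( \kappa ^{2} R^{*} \times n\right) . \tag{22} \]

In our model, the number of “real” physical measurement rounds \(R^{*} \) is also referred to as the 0th level of “imaginary” measurement \(R^{\left( 0\right) } \) of the post-processing procedure; thus,

\[ R^{\left( 0\right) } =R^{*} . \tag{23} \]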

Therefore, at a particular \(\kappa \), the \(R^{\left( \kappa \right) } \) values of C are averaged to yield the estimate function \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \) via (14) using \({\tilde{C}}\left( z\right) \) as given in (20), which yields \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \) as

where \(C^{E} \left( z\right) \) is given in (21).

The discrete wavelet transform is a useful tool in image processing for noise reduction and for enhancing the resolution of low-resolution images to obtain high-resolution images [129,130]. Motivated by these features, we show that we can utilize the wavelet transform for the extension of the objective function of the quantum computer. However, in our application framework, both the environment and the aims of the procedure are completely different.

Let \({{{\mathscr {W}}}}\left( C^{\left( i\right) } \left( z\right) \right) \) be the discrete wavelet transform function of the \(\left( R^{*} \times n\right) \) dimensional function \(C^{\left( i\right) } \left( z\right) \) as

where \(f_{\phi } \left( \cdot \right) \) are wavelet basis functions, \(W^{\left( j\right) } \left( z\right) \) is the transformed objective function, \(j=0,\ldots ,w^{\left( l\right) } -1\), where \(w^{\left( l\right) } \) is the number of transformed objective function values at a given level l, \(l\ge 1\), \(w^{\left( l\right) } =4+3\left( l-1\right) \), which follows from the execution of \({{{\mathscr {W}}}}\) in (25). The dimension of \({{{\mathscr {W}}}}\left( C^{\left( i\right) } \left( z\right) \right) \) is \(d_{{{{\mathscr {W}}}}\left( C^{\left( i\right) } \left( z\right) \right) } =\left( R^{*} \times n\right) \).

Applying the inverse function \({{{\mathscr {W}}}}^{-1} \left( \cdot \right) \) on (25) at a particular \(f_{\phi } \left( \cdot \right) \), a given \(C^{\left( i\right) } \left( z\right) \) can be expressed as
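The forward and inverse transform pair can be illustrated on a small matrix of objective function components. The sketch below assumes the Haar wavelet as the basis, which is a choice made here for simplicity since the text does not fix a particular \(f_{\phi } \left( \cdot \right) \), and it requires an even number of rows and columns. It shows only the single-level transform and its exact inverse; it is not an implementation of Algorithm 1.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_rows(matrix):
    """One Haar step along each row: returns (scaled sums, scaled differences)."""
    low = [[(row[2 * k] + row[2 * k + 1]) / SQRT2 for k in range(len(row) // 2)]
           for row in matrix]
    high = [[(row[2 * k] - row[2 * k + 1]) / SQRT2 for k in range(len(row) // 2)]
            for row in matrix]
    return low, high


def inverse_haar_rows(low, high):
    """Invert haar_rows by rebuilding and interleaving each sample pair."""
    rows = []
    for low_row, high_row in zip(low, high):
        row = []
        for a, d in zip(low_row, high_row):
            row.extend([(a + d) / SQRT2, (a - d) / SQRT2])
        rows.append(row)
    return rows


def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def haar_dwt2(matrix):
    """Single-level 2D Haar transform: one approximation and three detail sub-bands."""
    low, high = haar_rows(matrix)
    approx, detail_a = (transpose(m) for m in haar_rows(transpose(low)))
    detail_b, detail_c = (transpose(m) for m in haar_rows(transpose(high)))
    return approx, detail_a, detail_b, detail_c


def haar_idwt2(approx, detail_a, detail_b, detail_c):
    """Inverse of haar_dwt2: rebuild the original matrix from the four sub-bands."""
    low = transpose(inverse_haar_rows(transpose(approx), transpose(detail_a)))
    high = transpose(inverse_haar_rows(transpose(detail_b), transpose(detail_c)))
    return inverse_haar_rows(low, high)


def subband_count(level):
    """Number of sub-bands after `level` decomposition levels: 4 + 3 * (level - 1)."""
    return 4 + 3 * (level - 1)


# Hypothetical 4 x 4 matrix of components C(x, y): 4 rounds, 4 qubits.
components = [[1.0, 0.0, 1.0, 1.0],
              [0.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0, 1.0]]
subbands = haar_dwt2(components)
reconstructed = haar_idwt2(*subbands)
```

A single decomposition level yields four sub-bands, and each further level splits the approximation sub-band into four, replacing one sub-band by four. This matches the count \(w^{\left( l\right) } =4+3\left( l-1\right) \) quoted above.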

The proposed method for the objective function extension is given in Algorithm 1 (\({{{\mathscr {A}}}}_{E} \)). Algorithm 1 integrates Sub-procedure 1 (\(P_{E} \)) for the objective function extension.

The description of Sub-Procedure 1 (\(P_{E} \)) is as follows.

These results conclude the proof. \(\square \)

Lemma 1

The precision of the estimation of the objective function yielded from a physical-layer measurement M can be improved via the \({{{\mathscr {A}}}}_{E} \) objective function extension algorithm of \({{{\mathscr {F}}}}\).

Proof

In algorithm \({{{\mathscr {A}}}}_{E} \), function \({{\mathscr {W}}}^{-1} \left( \cdot \right) \) applied on \(W^{E} \left( z\right) \) yields the extended objective function \(C^{E} \left( z\right) \), from which estimate \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \) of \(f\left( \theta \right) \) can be determined at \(R^{*} \) physical measurement rounds. The produced estimate \({\tilde{f}}^{\left( \kappa \right) } \left( \theta \right) \) is equivalent to the estimate \(f^{\left( 0\right) } \left( \theta \right) \) obtainable at \(R^{\left( \kappa \right) } =\kappa ^{2} R^{*} \) physical measurement rounds, with \(\left| M\right| =n\kappa ^{2} R^{*} \) total measurements. The details are as follows. Since the dimension of \(W^{E} \left( z\right) \) is \(d_{W^{E} \left( z\right) } =\left( \kappa ^{2} R^{*} \times n\right) \), the \(C^{E} \left( z\right) \) extended objective function contains \(R^{\left( \kappa \right) } =\kappa ^{2} R^{*} \) (see (16)) objective functions evaluated for each measurement round. The estimate \({\tilde{f}}\left( \theta \right) \) yielded by the application of \({{{\mathscr {W}}}}^{-1} \left( \cdot \right) \) on \(W^{E} \left( z\right) \) is analogous to the estimate \(f^{\left( 0\right) } \left( \theta \right) \) that can be extracted by \(\left| M\right| \) measurements in the physical-layer measurement apparatus M via \(R^{\left( \kappa \right) } \) measurement rounds as

\[ \left| M\right| =nR^{\left( \kappa \right) } =n\kappa ^{2} R^{*} =\kappa ^{2} \left| M^{*} \right| , \]

where \(\left| M^{*} \right| =nR^{*} \) is the total number of physical-layer measurements. The proof is concluded here. \(\square \)
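The counting argument of the lemma can be checked with simple arithmetic. The helper below is an illustrative sketch (the function and key names are not from the paper) that evaluates \(R^{\left( \kappa \right) } =\kappa ^{2} R^{*} \), \(\left| M^{*} \right| =nR^{*} \) and \(\left| M\right| =n\kappa ^{2} R^{*} \) for given n, \(R^{*} \) and \(\kappa \).

```python
def measurement_budget(n, physical_rounds, kappa):
    """Compare physical and imaginary measurement counts for scaling coefficient kappa."""
    if kappa < 1:
        raise ValueError("kappa must be at least 1")
    imaginary_rounds = kappa ** 2 * physical_rounds   # R^(kappa) = kappa^2 * R*
    physical_total = n * physical_rounds              # |M*| = n * R*
    equivalent_total = n * imaginary_rounds           # |M| = n * kappa^2 * R*
    return {
        "imaginary_rounds": imaginary_rounds,
        "physical_measurements": physical_total,
        "equivalent_measurements": equivalent_total,
        "measurements_avoided": equivalent_total - physical_total,
    }


# Example values chosen for illustration: n = 10 qubits, R* = 5 rounds, kappa = 4.
budget = measurement_budget(n=10, physical_rounds=5, kappa=4)
```

With these example values, 50 physical measurements stand in for the 800 measurements that 80 physical rounds would require.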

Objective function extension factor

Let \(C^{0} \left( z\right) \) be the objective function resulting from the \(R^{*} \) measurement rounds with dimension \(d_{C^{0} \left( z\right) } =\left( R^{*} \times n\right) \), where \(C^{0} \left( z\right) \) is given in (18); let \({{\mathscr {W}}}\left( C^{0} \left( z\right) \right) \) and \(W^{E} \left( z\right) ={{{\mathscr {W}}}}^{-1} \left( {{{\mathscr {W}}}}\left( C^{0} \left( z\right) \right) \right) \) be the transformed and the extended transformed objective functions with dimensions \(d_{W^{0} \left( z\right) } =\left( R^{*} \times n\right) \) and \(d_{W^{E} \left( z\right) } =\left( \kappa ^{2} R^{*} \times n\right) \), as given in (28) and (30); and let \(C^{E} \left( z\right) \) be the extended objective function (see (31)) with dimension \(d_{C^{E} \left( z\right) } =\left( \kappa ^{2} R^{*} \times n\right) \).

Then let \(\lambda _{E} \left( \cdot \right) \) be the objective function extension factor, defined as

The quantity in (41) therefore identifies the ratio between two differences: the difference of the extended objective function and the extended transformed objective function, and the difference of the initial objective function and the initial extended objective function.

Quantum-gate structure decomposition

Theorem 2

The \({\left| \theta \right\rangle } \) state of the quantum computer is decomposable by the \(\varphi \) gate parameters of the quantum computer.

Proof

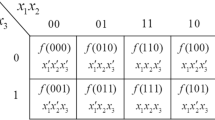

The proposed scheme can be applied for an arbitrary d-dimensional quantum-gate structure; however, for simplicity, we assume the use of qubit gates. Thus, in the QG structure of the quantum computer, we set \(d=2\) for the dimension of the quantum gates. Since the \(\varphi \) gate parameters determine the state \({\left| \theta \right\rangle } \) of the quantum computer (8), the segmentation of the quantum-gate structure is based on the \(\varphi \) gate parameters.

Let \(N_{G} \left( U_{j} \right) \) refer to the qubit number associated with gate \(U_{j} \), and let \(\varphi _{j} \) be a gate parameter of an \(N_{G} \left( U_{j} \right) \)-qubit gate unitary \(U_{j} \left( \varphi _{j} \right) \) as given in (5).

Let \(n_{t} \) be the number of classes selected for the segmentation of the \(\varphi \) gate parameters of the QG structure of the quantum computer. Let \(H_{k} \) be the entropy function associated with the kth class, \(k=1,\ldots ,n_{t} \), and \(f(\vec {\phi })\) be the objective function of the segmentation of the QG structure as

where \(\vec {\phi }\) is a \(d_{\vec {\phi }} =\left( n_{t} -1\right) \)-dimensional vector \(\vec {\phi }=\left[ \phi _{1} ,\ldots ,\phi _{n_{t} -1} \right] \), where \(\phi _{l} \) is the gate segmentation parameter that separates the \(\varphi \) gate parameters of the lth class from those of the \(\left( l+1\right) \)-th class, such that

where \(\chi \) is an upper bound on the \(\varphi _{i} \) gate parameters of the quantum computer,

Let \(\vec {\phi }^{*} \) be the optimal vector that maximizes the overall entropy in (42),

with \(\left( n_{t} -1\right) \) optimal parameters, \(0\le \phi _{l}^{*} \le \chi \), \(l=1,\ldots ,n_{t} -1\), to be determined as

which yields the maximization of the \(f\left( \vec {\phi }^{*} \right) \) objective function (42).

The \(H_{k} \) entropies in (42) are defined as

where \(N\left( \varphi _{i} \right) \) is the number of occurrences of gate parameter \(\varphi _{i} \) in the QG structure, with probability distribution \(\Pr \left( N\left( \varphi _{i} \right) \right) \) as

where \(N_{tot} \) is the total number of quantum gates in the quantum computer,

while the \(\omega _{i} \) terms are sum-of-probability distributions, as

Using (48) and (50), the QG structure can be segmented into \(n_{t} \) classes, \(\mathscr {C}{{}_{QG}}:\left\{ \mathscr {C}{{}_{1}},\ldots ,\mathscr {C}{{}_{{{n}_{t}}}} \right\} \) as

with class mean values \(\mu {{}_{QG}}:\left\{ \mu {{}_{1}},\ldots ,{{\mu }_{{{n}_{t}}}} \right\} \) as

As the objective function and the related quantities are determined by Algorithm 2 (\({{{\mathscr {A}}}}_{D} \)), a particular gate parameter \(\varphi _{j} \) is therefore classified as

Motivated by the multilevel segmentation procedures131,132, the steps of \({{{\mathscr {A}}}}_{D} \) are given in Algorithm 2.

According to Algorithm 2, the \(\phi '_{i,j} \) gate classification parameter is evaluated via events \({{E}_{i}}\) as

with the related probabilities132

The proof is concluded here. \(\square \)
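The entropy-maximizing segmentation used in the proof follows the pattern of multilevel thresholding. The equation bodies of (42)-(56) are not reproduced in this text, so the following Python sketch rests on assumptions: it takes the standard Kapur-type form, with \(\Pr \left( N\left( \varphi _{i} \right) \right) =N\left( \varphi _{i} \right) /N_{tot} \), a class probability mass \(\omega _{k} \), class entropies \(H_{k} \) computed from probabilities renormalized by \(\omega _{k} \), and \(f(\vec {\phi })\) as the sum of the \(H_{k} \). An exhaustive search stands in for Algorithm 2, and the function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def class_entropy(probs):
    """Entropy H_k of one class, with probabilities renormalized by omega_k."""
    omega = sum(probs)
    if omega == 0:
        return 0.0
    return -sum((p / omega) * math.log(p / omega) for p in probs if p > 0)


def segment_gate_parameters(gate_params, n_t):
    """Return (thresholds, f) maximizing the summed class entropy.

    Assumption: classes are contiguous ranges of the sorted distinct
    parameter values, and threshold phi_l is the largest value in class l.
    """
    counts = Counter(gate_params)
    values = sorted(counts)
    if n_t < 1 or n_t > len(values):
        raise ValueError("n_t must be between 1 and the number of distinct values")
    n_tot = len(gate_params)
    probs = [counts[v] / n_tot for v in values]  # N(phi_i) / N_tot

    best_f, best_cuts = -math.inf, ()
    # Try every placement of n_t - 1 cuts between the sorted distinct values.
    for cuts in combinations(range(1, len(values)), n_t - 1):
        bounds = (0, *cuts, len(values))
        f = sum(class_entropy(probs[a:b]) for a, b in zip(bounds, bounds[1:]))
        if f > best_f:
            best_f, best_cuts = f, cuts
    thresholds = [values[c - 1] for c in best_cuts]
    return thresholds, best_f


def classify(phi_j, thresholds):
    """1-based index of the class that gate parameter phi_j falls into."""
    for k, t in enumerate(thresholds, start=1):
        if phi_j <= t:
            return k
    return len(thresholds) + 1
```

For example, `segment_gate_parameters([0.1, 0.1, 0.2, 0.2, 0.8, 0.8, 0.9, 0.9], 2)` places the single threshold at 0.2, since that cut splits the four equally likely values into two balanced classes. The exhaustive search is only practical for a small number of distinct gate parameters; it is used here for clarity, not efficiency.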

Error of gate-parameter decomposition

The error \(\varepsilon _{\vec {\phi }^{*} } \) associated with the gate-parameter segmentation algorithm \({{{\mathscr {A}}}}_{D} \) at a given \(\vec {\phi }^{*} \) is defined as

where \(D_{QG} \) is the depth of the quantum circuit QG of the quantum computer, n is the number of measurement blocks at the QG circuit output, \(\varphi _{QG_{R} } \left( i,j\right) \) is the \(\varphi \) gate parameter associated with the \(\left( i,j\right) \)-th gate of a reference quantum circuit \(QG_{R} \), \(i=0,\ldots ,D_{QG} -1\), \(j=0,\ldots ,n-1\), (\(\varphi _{QG}^{R} \left( i,j\right) =0\) if there is no gate at \(\left( i,j\right) \) in QG), and \(\varphi _{QG}^{\vec {\phi }^{*} } \left( i,j\right) \) is the \(\varphi \) gate parameter associated with the \(\left( i,j\right) \)-th gate of the segmented QG circuit (\(\varphi _{QG}^{\vec {\phi }^{*} } \left( i,j\right) =0\) if there is no gate at \(\left( i,j\right) \) in QG).

Gate parameter randomization machine learning

The QGPL-ML block further splits the results of \({{\mathscr {A}}}_{D} \) to achieve a randomized data partitioning and to generate rules. The QGPL-ML method integrates algorithms \({{\mathscr {A}}}_{f}^{L} \) and \({{{\mathscr {R}}}}\). Algorithm \({{\mathscr {A}}}_{f}^{L} \) handles the data randomization and selection for learning, while algorithm \({{{\mathscr {R}}}}\) handles the rule learning.

Motivated by granulated computing133,134, the data randomization of \({{{\mathscr {A}}}}_{f}^{L} \) in the QGPL-ML block is based on the gate parameters of the quantum gates. The algorithm selects the best training and test instances for the rule-learning block via a ratio parameter \(r\in \left[ 0,1\right] \) in a multilevel structure. As a corollary, \({{{\mathscr {A}}}}_{f}^{L} \) avoids class imbalance and sample representativeness issues133,134. Using the results of \({{{\mathscr {A}}}}_{f}^{L} \), the rule-generation procedure \({{{\mathscr {R}}}}\) uses rule-quality metrics (leverage133,134,135) to identify the best rules in each iteration step. The result of \({{\mathscr {R}}}\) is L optimal rules, where L is the application number (level) of \({{{\mathscr {A}}}}_{f} \).

Randomization and probability distribution

The benefits of the proposed randomization in \({{\mathscr {A}}}_{f}^{L} \) are as follows. The randomization applied in \({{{\mathscr {A}}}}_{f}^{L} \) allows us to create an optimal learning set \({{{\mathscr {S}}}}_{l} \) and an optimal test set \({{{\mathscr {S}}}}_{t} \) for the \({{{\mathscr {R}}}}\) rule-learning stage. Optimality here means that the input data is partitioned into a learning set and a test set in a semi-randomized (granulated133,134,151,152) way, i.e., not fully randomized, to avoid the issues of class imbalance and sample representativeness. These problems are associated with full randomization151,152.

The problem of class imbalance means that the ratio of classes in the constructed learning set and test set does not represent the ratio of classes in the input data. This problem can occur with a non-optimal random partitioning of the input data, and can arise in both the training set and the test set133,134,151,152.

The problem of sample representativeness is an integrity problem: it refers to the case in which the training and test instances have no connection to each other, which can lead to inconsistency in the learning process151,152.

The procedure of \({{{\mathscr {A}}}}_{f}^{L} \) applies a semi-randomization to the input data to avoid these issues. The probability distribution of the randomization in \({{{\mathscr {A}}}}_{f}^{L} \) determines the precision of the construction of the training and test sets. The \({{{\mathscr {A}}}}_{f}^{L} \) procedure allows us to keep the class consistency of the input data in the training and test sets, and also to keep the integrity of the instances of the training and test sets. To measure the precision of \({{{\mathscr {A}}}}_{f}^{L} \), we utilized the \({{{\mathscr {L}}}}\) leverage metric135, \({{{\mathscr {L}}}}\in \left[ 0,1\right] \), in the \({{{\mathscr {R}}}}\) rule-learning stage. The probability distribution in \({{{\mathscr {A}}}}_{f}^{L} \) affects the precision of the rules generated by \({{{\mathscr {R}}}}\), since \({{{\mathscr {R}}}}\) uses the outputs of \({{{\mathscr {A}}}}_{f}^{L} \). At a full randomization in \({{{\mathscr {A}}}}_{f}^{L} \), the \({{\mathscr {L}}}\) value in \({{{\mathscr {R}}}}\) is low, \({{{\mathscr {L}}}}\rightarrow 0\), while for a semi-randomization in \({{{\mathscr {A}}}}_{f}^{L} \), \({{{\mathscr {L}}}}\) takes high values, \({{{\mathscr {L}}}}\rightarrow 1\), in \({{{\mathscr {R}}}}\).
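The idea of keeping the class ratios of the input data in both the training and test sets can be illustrated with a generic stratified split. The Python sketch below is not the \({{{\mathscr {A}}}}_{f}^{L} \) procedure itself (Algorithm 3 is not reproduced in this text); it is a common stand-in technique, and it assumes that the ratio parameter r is the fraction of each class assigned to the training set. The function name and the seed argument are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, r, seed=0):
    """Split instance indices into (train, test), class by class.

    Assumption: r in [0, 1] is the share of every class that goes to the
    training set, so both sets keep roughly the input class ratios.
    """
    if not 0.0 <= r <= 1.0:
        raise ValueError("r must lie in [0, 1]")
    rng = random.Random(seed)

    # Group instance indices by their class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, test = [], []
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)              # randomize only within the class
        cut = round(r * len(members))
        train.extend(members[:cut])
        test.extend(members[cut:])
    return sorted(train), sorted(test)
```

Shuffling only within each class is what makes the partition semi-randomized rather than fully randomized: which instances land in each set is random, but the class proportions are fixed by construction.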

Procedures

The procedure \({{{\mathscr {A}}}}_{f}^{L} \) of the QGPL-ML block is detailed in Algorithm 3.

The procedure \({{{\mathscr {R}}}}\) of the QGPL-ML block is detailed in Algorithm 4.

State of the quantum computer

Theorem 3

The state \({\left| {{\theta }^{*}} \right\rangle } \) of the quantum computer can be set up from the output \({{{\mathscr {P}}}}\left( \theta \right) \) of the QGPL-ML procedure.

Proof

The new gate parameter vector \(\theta ^{*} \) is determined via a predictive analytics unit \({{{\mathscr {P}}}}\). The \({{{\mathscr {P}}}}\) unit utilizes the outputs generated by the units \({{\mathscr {A}}}_{E} \), \({{{\mathscr {A}}}}_{D} \), \({{{\mathscr {A}}}}_{f}^{L} \) and \({{{\mathscr {R}}}}\) of \({{{\mathscr {F}}}}\). The input of \({{{\mathscr {A}}}}_{f}^{L} \) is provided by \({{{\mathscr {A}}}}_{D} \) (Algorithm 2), and the input of \({{{\mathscr {A}}}}_{D} \) is the extended set of gate parameters determined by the extension algorithm \({{{\mathscr {A}}}}_{E} \) (Algorithm 1). The prediction of \(\theta ^{*} \) can be made at an initial \(\theta \) as

where \(\rho \) is the gate parameter modification vector

where \(\alpha _{i} \) calibrates the gate parameter \(\theta _{i} \) of the ith unitary, \(i=1,\ldots ,N_{tot} \). The actual value of \(\alpha _{i} \) depends on the error \(\varepsilon _{\vec {\phi }^{*} } \) (57) associated with \({{{\mathscr {A}}}}_{D} \).

The precision of the prediction is also controlled by a parameter \(\tau \), which quantifies the minimum number of classes (\(n_{t} \)) selected for the classification of the quantum-gate parameters in the \({{{\mathscr {A}}}}_{f}^{L} \) procedure.

As the new gate parameter vector

is determined, the quantum computer can set up the state \({\left| \theta ^{*} \right\rangle } \).

The prediction of the new state \({\left| {{\theta }^{*}} \right\rangle } \) of the quantum computer is given in Procedure 2.

These results conclude the proof. \(\square \)

Performance evaluation

This section presents a performance evaluation for method validation and comparison. We study the precision of the objective function estimation, the estimation error, and the cost reduction in the objective function estimation process.

Objective function estimation

Let \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \) be the \({{{\mathscr {R}}}}\) reference objective function that can be estimated at \(R_{{{{\mathscr {R}}}}}^{*} \) reference physical measurement rounds,

as

where \(C^{r,\left( i\right) } \) is the reference objective function evaluated in the ith physical measurement round, \(i=0,\ldots ,R_{{{{\mathscr {R}}}}}^{*} -1,\)\(d_{C^{r,\left( i\right) } } =1\times n\), and \(C^{r} \left( z\right) \) is the sum of the \(\kappa ^{2} R^{*} \) reference objective functions, with dimension \(d_{C^{r} \left( z\right) } =d_{C_{E} \left( z\right) } \), where \(d_{C^{E} \left( z\right) } \) is as given in (22).

The number of measurement rounds \(R_{{{{\mathscr {R}}}}}^{*} \) also serves as a reference for the comparison, in the performance evaluation, with the scheme of ref. 5, which utilizes only physical-layer measurements (i.e., the case in which no post-processing is applied).

Let \({\tilde{C}}\left( z\right) \) be the observed output objective function (see (20)) estimated via the \(C_{E} \left( z\right) \) extended objective function (see (21)) at \(R^{\left( \kappa \right) } \), as \({\tilde{C}}\left( z\right) ={\textstyle \frac{1}{R^{\left( \kappa \right) } }} \sum _{i=0}^{R^{\left( \kappa \right) } -1}C^{\left( i\right) } \left( z\right) ={\textstyle \frac{1}{R^{\left( \kappa \right) } }} C^{E} \left( z\right) \).

Then, let \(\sigma _{{\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) } \) be the standard deviation of \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \), defined as

and let \(\sigma _{{\tilde{C}}\left( z\right) } \) be the standard deviation of \({\tilde{C}}\left( z\right) \), defined as

while \({{\sigma }_{{{{{\tilde{C}}}}^{\mathscr {R}}}\left( z \right) }}_{{\tilde{C}}\left( z \right) }\) is defined153 as

Using (65), (66) and (67), we define the quantity \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\) to measure the precision of estimation \({\tilde{C}}\left( z\right) \) at a particular reference objective function \({\tilde{C}}^{{{\mathscr {R}}}} \left( z\right) \), as

where \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\in \left[ 0,1\right] \) such that at \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )=0\), \({\tilde{C}}\left( z\right) \) is completely uncorrelated with the reference objective function \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \), while at \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )=1\) the observed \({\tilde{C}}\left( z\right) \) coincides with \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \).

Note that from \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\) (see (68)) and \({\tilde{C}}\left( z\right) \) (see (20)), the value of \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \) can be evaluated as follows. Let

be a vector formulated from the elements of \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \), and let

be a vector formulated from the elements of \({\tilde{C}}\left( z\right) \).

Then, at a particular \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\), the reference \(v({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) )\) can be evaluated from \(v({\tilde{C}}\left( z\right) )\) in a convergent and iterative manner, as

where \(\chi \) is a coefficient153, \(\nabla _{{\tilde{C}}\left( z\right) } (\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) ))\) is the derivative of \(\Phi ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\), and \({{\mathscr {P}}}(v({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ),v({\tilde{C}}\left( z\right) ))\) is a projection

where I is the identity operator, while

Estimation error

Let us assume that the number of physical reference measurement rounds is set to \(R_{{{{\mathscr {R}}}}}^{*} =R^{\left( \kappa \right) } \) to evaluate \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \), and that \(R^{*} \) is the number of physical-layer measurement rounds actually performed to evaluate \({\tilde{C}}\left( z\right) \).

To measure the impacts of measurement rounds on the precision of the objective function estimation, we introduce the term \(\mu _{\kappa } ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\) that quantifies the mean squared error (MSE) at a particular scaling factor \(\kappa \) as

As the value of the \(\kappa \) scaling factor increases, the information about the reference objective function \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \) increases, and the \(\mu _{\kappa } ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\) value decreases.
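The trend described above, a mean squared error that shrinks as more rounds contribute to the estimate, can be illustrated numerically. Equation (74) is not reproduced in this text, so the Python sketch below assumes the usual element-wise MSE between two equal-length vectors. It also assumes that the estimate at scaling factor \(\kappa \) averages \(\kappa ^{2} R^{*} \) rounds, and it lets plain averaging of Gaussian-noisy samples stand in for the extension procedure of the paper. All names and the noise model are hypothetical.

```python
import random


def mean_squared_error(reference, observed):
    """Element-wise MSE between two equal-length vectors."""
    if len(reference) != len(observed) or not reference:
        raise ValueError("vectors must be non-empty and of equal length")
    return sum((r - o) ** 2 for r, o in zip(reference, observed)) / len(reference)


def averaged_estimate(reference, rounds, noise_std, rng):
    """Average `rounds` noisy observations of each reference value."""
    return [
        sum(rng.gauss(r, noise_std) for _ in range(rounds)) / rounds
        for r in reference
    ]


def mse_by_kappa(reference, base_rounds, kappas, noise_std=1.0, seed=0):
    """MSE of the averaged estimate when kappa**2 * base_rounds rounds are used."""
    rng = random.Random(seed)
    return {
        kappa: mean_squared_error(
            reference,
            averaged_estimate(reference, kappa ** 2 * base_rounds, noise_std, rng),
        )
        for kappa in kappas
    }
```

Calling `mse_by_kappa(reference, base_rounds=4, kappas=[1, 2, 5, 10])` on a fixed reference vector returns one MSE value per scaling factor. Under this noise model the expected MSE falls roughly in proportion to \(1/\kappa ^{2} \), which matches the qualitative behaviour stated in the text but is not a result taken from the paper.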

Then, let \(\mu _{1} ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )\) be the MSE value obtainable at \(R^{*} \) measurement rounds, i.e., \(\kappa =1\), evaluated via (74) as

For \(\kappa >1\), let

be a quantity that measures the squared difference of the objective function values. Assuming an optimal situation, the value of \(\xi _{\kappa } \) is close to zero, \(\xi _{\kappa } \approx 0\). For \(\xi _{\kappa } =0\), it can be concluded that

while for \(\xi _{\kappa } >0,\)

that is, for \(\xi _{\kappa } >0\), (78) coincides with (74). Additional results are included in the Appendix.

Cost reduction

The cost reduction is evaluated as follows. Let \(f_{0} \) be the cost function of the evaluation of the reference objective function \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \) via \(R_{{{{\mathscr {R}}}}}^{*} =R^{\left( \kappa \right) } \) physical measurement rounds (i.e., no post-processing is applied), defined as a reference cost with a unit value

At a given \(f_{0} \), the cost function \(f\left( \kappa ,\xi _{\kappa } \right) \) associated with the evaluation of \({\tilde{C}}\left( z\right) \) at a particular \(\kappa \) and \(\xi _{\kappa } \) is defined as

where \(\eta \left( \kappa ,\xi _{\kappa } \right) \) identifies the ratio of

It follows that, at \(\xi _{\kappa } =0\), the proposed post-processing method reduces the cost of objective function estimation by a factor

and for any \(\xi _{\kappa } >0\), the \(\Delta f\left( \kappa ,{{\xi }_{\kappa }} \right) \) increment in the \(f\left( \kappa ,0 \right) \) cost function is

In Fig. 2, the \(f\left( \kappa ,\xi _{\kappa } \right) \) cost function values are depicted for \(\kappa =\left\{ 1,\ldots ,10\right\} \), with \(f_{0} =1\). In Fig. 2(a), the \(\xi _{\kappa } =0\) scenario is depicted. In this case, the objective function estimation cost is reduced to \(f\left( \kappa ,0\right) ={\textstyle \frac{1}{\kappa ^{2} }} \). In Fig. 2(b), the \(\xi _{\kappa } >0\) scenario is illustrated for \(\mu _{1} ({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )=100\) and \({\textstyle \frac{1}{R^{*} }} \xi _{\kappa } =\left\{ 10,25,50,75,100\right\} \). The resulting cost is reduced to \(f\left( \kappa ,\xi _{\kappa } \right) =f_{0} \eta \left( \kappa ,\xi _{\kappa } \right) \), where \(\eta \left( \kappa ,\xi _{\kappa } \right) \) is as given in (81).

Cost reduction of objective function estimation. (a) The \(f\left( \kappa ,\xi _{\kappa } \right) \) cost function at \(\xi _{\kappa } =0\). The resulting cost is \(f\left( \kappa ,0\right) ={\textstyle \frac{1}{\kappa ^{2} }} \). The reference cost \(f_{0} =1\) associated with the evaluation of the reference objective function \({\tilde{C}}^{{{{\mathscr {R}}}}} \left( z\right) \) from \(R_{{{{\mathscr {R}}}}}^{*} =R^{\left( \kappa \right) } \) physical measurement rounds is depicted by a red dot. (b) The \(f\left( \kappa ,\xi _{\kappa } \right) \) cost function for the \(\xi _{\kappa } >0\) scenarios at \(\mu _{1} ({\tilde{C}}^{{{\mathscr {R}}}} \left( z\right) ,{\tilde{C}}\left( z\right) )=100\) and \({\textstyle \frac{1}{R^{*} }} \xi _{\kappa } =\left\{ 10,25,50,75,100\right\} \). The resulting cost is \(f\left( \kappa ,\xi _{\kappa } \right) =f_{0} \eta \left( \kappa ,\xi _{\kappa } \right) \).
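The \(\xi _{\kappa } =0\) curve of panel (a) can be tabulated directly from the stated relation \(f\left( \kappa ,0\right) ={\textstyle \frac{1}{\kappa ^{2} }} \) at \(f_{0} =1\). The short Python sketch below does this for \(\kappa =1,\ldots ,10\). Scaling by a general \(f_{0} \) is an assumption based on \(f=f_{0} \eta \); the \(\xi _{\kappa } >0\) case is omitted because the expression for \(\eta \left( \kappa ,\xi _{\kappa } \right) \) is not reproduced in this text. The function name is hypothetical.

```python
def cost_without_residual(kappa, f0=1.0):
    """Cost f(kappa, 0) = f0 / kappa**2 at xi_kappa = 0."""
    if kappa < 1:
        raise ValueError("kappa must be at least 1")
    return f0 / kappa ** 2


# Tabulate the curve for kappa = 1, ..., 10 with the unit reference cost.
costs = {kappa: cost_without_residual(kappa) for kappa in range(1, 11)}
for kappa, cost in costs.items():
    print(f"kappa={kappa:2d}  f(kappa, 0)={cost:.4f}")
```

At \(\kappa =1\) the cost equals the reference cost \(f_{0} \), and at \(\kappa =10\) it drops to one hundredth of it.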

Conclusion

Gate-model quantum computers provide an implementable architecture for experimental quantum computations. Here we studied the problem of objective function estimation in gate-model quantum computers. The proposed framework utilizes the measurement results and increases the precision of objective function estimation and maximization via computational steps. The method reduces the costs connected to the physical layer such as quantum state preparation, quantum computation rounds, and measurement rounds. We defined an objective function extension procedure, a segmentation algorithm that utilizes the gate parameters of the unitaries of the quantum computer, and a machine-learning unit for the system state prediction. The results are particularly convenient for the performance optimization of experimental gate-model quantum computers and near-term quantum devices of the quantum Internet.

Ethics statement

This work did not involve any active collection of human data.

Data availability

This work does not have any experimental data.

References

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature https://doi.org/10.1038/s41586-019-1666-5 (2019).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Harrow, A. W. & Montanaro, A. Quantum computational supremacy. Nature 549, 203–209 (2017).

Aaronson, S. & Chen, L. Complexity-theoretic foundations of quantum supremacy experiments. Proceedings of the 32nd Computational Complexity Conference, CCC ’17, 22:1–22:67 (2017).

Farhi, E., Goldstone, J., Gutmann, S. & Neven, H. Quantum Algorithms for Fixed Qubit Architectures. arXiv:1703.06199v1 (2017).

Alexeev, Y. et al. Quantum Computer Systems for Scientific Discovery. arXiv:1912.07577 (2019).

Loncar, M. et al. Development of Quantum InterConnects for Next-Generation Information Technologies. arXiv:1912.06642 (2019).

Foxen, B. et al. Demonstrating a Continuous Set of Two-qubit Gates for Near-term Quantum Algorithms. arXiv:2001.08343 (2020).

Ajagekar, A., Humble, T. & You, F. Quantum computing based hybrid solution strategies for large-scale discrete-continuous optimization problems. Comput. Chem. Eng. 132, 106630 (2020).

IBM. A new way of thinking: The IBM quantum experience. URL: http://www.research.ibm.com/quantum. (2017).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195–202 (2017).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum Algorithms for Supervised and Unsupervised Machine Learning. arXiv:1307.0411v2 (2013).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum principal component analysis. Nat. Phys. 10, 631 (2014).

Monz, T. et al. Realization of a scalable Shor algorithm. Science 351, 1068–1070 (2016).

Barends, R. et al. Superconducting quantum circuits at the surface code threshold for fault tolerance. Nature 508, 500–503 (2014).

Kielpinski, D., Monroe, C. & Wineland, D. J. Architecture for a large-scale ion-trap quantum computer. Nature 417, 709–711 (2002).

Ofek, N. et al. Extending the lifetime of a quantum bit with error correction in superconducting circuits. Nature 536, 441–445 (2016).

Farhi, E., Goldstone, J. & Gutmann, S. A Quantum Approximate Optimization Algorithm. arXiv:1411.4028 (2014).

Gyongyosi, L., Imre, S. & Nguyen, H. V. A survey on quantum channel capacities. IEEE Commun. Surv. Tutor. 99, 1. https://doi.org/10.1109/COMST.2017.2786748 (2018).

Gyongyosi, L. & Imre, S. A survey on quantum computing technology. Comput. Sci. Rev. https://doi.org/10.1016/j.cosrev.2018.11.002 (2018).

Farhi, E. & Neven, H. Classification with Quantum Neural Networks on Near Term Processors. arXiv:1802.06002v1 (2018).

Harrigan, M. et al. Quantum Approximate Optimization of Non-Planar Graph Problems on a Planar Superconducting Processor. arXiv:2004.04197v1 (2020).

Rubin, N. et al. Hartree-Fock on a Superconducting Qubit Quantum Computer. arXiv:2004.04174v1 (2020).

Ajagekar, A. & You, F. Quantum computing for energy systems optimization: Challenges and opportunities. Energy 179, 76–89 (2019).

Farhi, E., Goldstone, J. & Gutmann, S. A Quantum Approximate Optimization Algorithm Applied to a Bounded Occurrence Constraint Problem. arXiv:1412.6062 (2014).

Farhi, E. & Harrow, A. W. Quantum Supremacy Through the Quantum Approximate Optimization Algorithm. arXiv:1602.07674 (2016).

Farhi, E., Kimmel, S. & Temme, K. A Quantum Version of Schoning’s Algorithm Applied to Quantum 2-SAT. arXiv:1603.06985 (2016).

Farhi, E., Gamarnik, D. & Gutmann, S. The Quantum Approximate Optimization Algorithm Needs to See the Whole Graph: A Typical Case. arXiv:2004.09002v1 (2020).

Farhi, E., Gamarnik, D. & Gutmann, S. The Quantum Approximate Optimization Algorithm Needs to See the Whole Graph: Worst Case Examples. arXiv:2005.08747 (2020).

Lloyd, S. Quantum Approximate Optimization is Computationally Universal. arXiv:1812.11075 (2018).

Sax, I. et al. Approximate Approximation on a Quantum Annealer. arXiv:2004.09267 (2020).

Brown, K. A. & Roser, T. Towards storage rings as quantum computers. Phys. Rev. Accel. Beams 23, 054701 (2020).

Gyongyosi, L. Quantum state optimization and computational pathway evaluation for gate-model quantum computers. Sci. Rep. https://doi.org/10.1038/s41598-020-61316-4 (2020).

Gyongyosi, L. Unsupervised quantum gate control for gate-model quantum computers. Sci. Rep. https://doi.org/10.1038/s41598-020-67018-1 (2020).

Gyongyosi, L. Circuit depth reduction for gate-model quantum computers. Sci. Rep. https://doi.org/10.1038/s41598-020-67014-5 (2020).

Gyongyosi, L. & Imre, S. Quantum circuit design for objective function maximization in gate-model quantum computers. Quant. Inf. Process. https://doi.org/10.1007/s11128-019-2326-2 (2019).

Gyongyosi, L. & Imre, S. State stabilization for gate-model quantum computers. Quant. Inf. Process. https://doi.org/10.1007/s11128-019-2397-0 (2019).

Gyongyosi, L. & Imre, S. Dense quantum measurement theory. Sci. Rep. https://doi.org/10.1038/s41598-019-43250-2 (2019).

Gyongyosi, L. & Imre, S. Training optimization for gate-model quantum neural networks. Sci. Rep. https://doi.org/10.1038/s41598-019-48892-w (2019).

Gyongyosi, L. & Imre, S. Optimizing high-efficiency quantum memory with quantum machine learning for near-term quantum devices. Sci. Rep. https://doi.org/10.1038/s41598-019-56689-0 (2020).

Gyongyosi, L. & Imre, S. Theory of noise-scaled stability bounds and entanglement rate maximization in the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-020-58200-6 (2020).

Gyongyosi, L. & Imre, S. Decentralized base-graph routing for the quantum internet. Phys. Rev. A https://doi.org/10.1103/PhysRevA.98.022310 (2018).

Rebentrost, P., Mohseni, M. & Lloyd, S. Quantum support vector machine for big data classification. Phys. Rev. Lett. 113, 130503 (2014).

Lloyd, S. The Universe as Quantum Computer, A Computable Universe: Understanding and exploring Nature as computation, H. Zenil ed., World Scientific, Singapore, 2012. arXiv:1312.4455v1 (2013).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, Cambridge, 2016).

Lloyd, S., Garnerone, S. & Zanardi, P. Quantum algorithms for topological and geometric analysis of data. Nat. Commun. arXiv:1408.3106 (2016).

Lloyd, S. et al. Infrastructure for the quantum Internet. ACM SIGCOMM Comput. Commun. Rev. 34, 9–20 (2004).

Debnath, S. et al. Demonstration of a small programmable quantum computer with atomic qubits. Nature 536, 63–66 (2016).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Schuld, M., Sinayskiy, I. & Petruccione, F. An introduction to quantum machine learning. Contemp. Phys. 56, 172–185 (2015).

Van Meter, R. Quantum Networking (Wiley, Hoboken, 2014).

Imre, S. & Gyongyosi, L. Advanced Quantum Communications—An Engineering Approach (Wiley-IEEE Press, New York, 2012).

Van Meter, R. Architecture of a Quantum Multicomputer Optimized for Shor’s Factoring Algorithm, Ph.D Dissertation, Keio University. arXiv:quant-ph/0607065v1 (2006).

Shor, P. W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 52, R2493–R2496 (1995).

Petz, D. Quantum Information Theory and Quantum Statistics (Springer-Verlag, Heidelberg, 2008).

Bacsardi, L. On the way to quantum-based satellite communication. IEEE Commun. Mag. 51(08), 50–55 (2013).

Farhi, E., Goldstone, J., Gutmann, S. & Zhou, L. The quantum approximate optimization algorithm and the Sherrington-Kirkpatrick model at infinite size. arXiv:1910.08187 (2019).

Wan, K. H. et al. Quantum generalisation of feedforward neural networks. NPJ Quant. Inf. 3, 36 (2017).

Brandao, F. G. S. L., Broughton, M., Farhi, E., Gutmann, S. & Neven, H. For Fixed Control Parameters the Quantum Approximate Optimization Algorithm’s Objective Function Value Concentrates for Typical Instances. arXiv:1812.04170 (2018).

Zhou, L., Wang, S.-T., Choi, S., Pichler, H. & Lukin, M. D. Quantum Approximate Optimization Algorithm: Performance, Mechanism, and Implementation on Near-Term Devices. arXiv:1812.01041 (2018).

Lechner, W. Quantum Approximate Optimization with Parallelizable Gates. arXiv:1802.01157v2 (2018).

Wheeler, J. A. & Zurek, W. H. (eds) Quantum Theory and Measurement (Princeton University Press, Princeton, 1983).

Braginsky, V. B. & Khalili, F. Y. Quantum Measurement (Cambridge University Press, Cambridge, 1992).

Jacobs, K. Quantum Measurement Theory and Its Applications (Cambridge University Press, Cambridge, 2014).

Zurek, W. H. Decoherence, einselection, and the quantum origins of the classical. Rev. Mod. Phys. 75, 715 (2003).

Greenstein, G. S. & Zajonc, A. G. The Quantum Challenge: Modern Research on the Foundations Of Quantum Mechanics (2nd ed.). ISBN 978-0763724702 (2006).

Jaeger, G. Quantum randomness and unpredictability. Philos. Trans. R. Soc. Lond. A https://doi.org/10.1002/prop.201600053 (2016).

Jabs, A. A conjecture concerning determinism, reduction, and measurement in quantum mechanics. Quant. Stud. Math. Found. 3, 279–292. https://doi.org/10.1007/s40509-016-0077-7 (2016).

Pirandola, S., Laurenza, R., Ottaviani, C. & Banchi, L. Fundamental limits of repeaterless quantum communications. Nat. Commun. 8, 15043. https://doi.org/10.1038/ncomms15043 (2017).

Pirandola, S. et al. Theory of channel simulation and bounds for private communication. Quant. Sci. Technol. 3, 035009. https://doi.org/10.1088/2058-9565/aac394 (2018).

Pirandola, S. End-to-end capacities of a quantum communication network. Commun. Phys. 2, 51 (2019).

Laurenza, R. & Pirandola, S. General bounds for sender-receiver capacities in multipoint quantum communications. Phys. Rev. A 96, 032318 (2017).

Pirandola, S. & Braunstein, S. L. Unite to build a quantum internet. Nature 532, 169–171 (2016).

Wehner, S., Elkouss, D. & Hanson, R. Quantum internet: A vision for the road ahead. Science 362, 6412 (2018).

Gyongyosi, L. Services for the Quantum Internet, DSc Dissertation, Hungarian Academy of Sciences (MTA) (2020).

Pirandola, S. Bounds for multi-end communication over quantum networks. Quant. Sci. Technol. 4, 045006 (2019).

Pirandola, S. Capacities of Repeater-Assisted Quantum Communications. arXiv:1601.00966 (2016).

Pirandola, S. et al. Advances in Quantum Cryptography. arXiv:1906.01645 (2019).

Gyongyosi, L. & Imre, S. Dynamic topology resilience for quantum networks, Proc. SPIE 10547, Advances in Photonics of Quantum Computing, Memory, and Communication XI, 105470Z. https://doi.org/10.1117/12.2288707 (2018).

Gyongyosi, L. Topology adaption for the quantum internet. Quant. Inf. Process. 17, 295. https://doi.org/10.1007/s11128-018-2064-x (2018).

Gyongyosi, L. & Imre, S. Entanglement access control for the quantum internet. Quant. Inf. Process. 18, 107. https://doi.org/10.1007/s11128-019-2226-5 (2019).

Gyongyosi, L. & Imre, S. Opportunistic entanglement distribution for the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-019-38495-w (2019).

Gyongyosi, L. & Imre, S. Multilayer optimization for the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-018-30957-x (2018).

Gyongyosi, L. & Imre, S. Entanglement availability differentiation service for the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-018-28801-3 (2018).

Gyongyosi, L. & Imre, S. Entanglement-gradient routing for quantum networks. Sci. Rep. https://doi.org/10.1038/s41598-017-14394-w (2017).

Gyongyosi, L. & Imre, S. Adaptive routing for quantum memory failures in the quantum internet. Quant. Inf. Process. 18, 52. https://doi.org/10.1007/s11128-018-2153-x (2018).

Gyongyosi, L. & Imre, S. A Poisson model for entanglement optimization in the quantum internet. Quant. Inf. Process. 18, 233. https://doi.org/10.1007/s11128-019-2335-1 (2019).

Chakraborty, K., Rozpedek, F., Dahlberg, A. & Wehner, S. Distributed Routing in a Quantum Internet. arXiv:1907.11630v1 (2019).

Khatri, S., Matyas, C. T., Siddiqui, A. U. & Dowling, J. P. Practical figures of merit and thresholds for entanglement distribution in quantum networks. Phys. Rev. Res. 1, 023032 (2019).

Kozlowski, W. & Wehner, S. Towards Large-Scale Quantum Networks, Proc. of the Sixth Annual ACM International Conference on Nanoscale Computing and Communication, Dublin, Ireland. arXiv:1909.08396 (2019).

Pathumsoot, P. et al. Modeling of measurement-based quantum network coding on IBMQ devices. Phys. Rev. A 101, 052301 (2020).

Pal, S., Batra, P., Paterek, T. & Mahesh, T. S. Experimental Localisation of Quantum Entanglement Through Monitored Classical Mediator. arXiv:1909.11030v1 (2019).

Miguel-Ramiro, J. & Dur, W. Delocalized information in quantum networks. New J. Phys. https://doi.org/10.1088/1367-2630/ab784d (2020).

Miguel-Ramiro, J., Pirker, A. & Dur, W. Genuine Quantum Networks: Superposed Tasks and Addressing. arXiv:2005.00020v1 (2020).

Pirker, A. & Dur, W. A Quantum Network Stack and Protocols for Reliable Entanglement-Based Networks. arXiv:1810.03556v1 (2018).

Shannon, K., Towe, E. & Tonguz, O. On the Use of Quantum Entanglement in Secure Communications: A Survey. arXiv:2003.07907 (2020).

Amoretti, M. & Carretta, S. Entanglement verification in quantum networks with tampered nodes. IEEE J. Select. Areas Commun. https://doi.org/10.1109/JSAC.2020.2967955 (2020).

Cao, Y. et al. Multi-tenant provisioning for quantum key distribution networks with heuristics and reinforcement learning: A comparative study. IEEE Trans. Netw. Serv. Manage. https://doi.org/10.1109/TNSM.2020.2964003 (2020).

Cao, Y. et al. Key as a service (KaaS) over quantum key distribution (QKD)-integrated optical networks. IEEE Comm. Mag. https://doi.org/10.1109/MCOM.2019.1701375 (2019).

Liu, Y. Preliminary Study of Connectivity for Quantum Key Distribution Network. arXiv:2004.11374v1 (2020).

Amer, O., Krawec, W. O. & Wang, B. Efficient Routing for Quantum Key Distribution Networks. arXiv:2005.12404 (2020).

Sun, F. Performance analysis of quantum channels. Quant. Eng. https://doi.org/10.1002/que2.35 (2020).

Chai, G. et al. Blind channel estimation for continuous-variable quantum key distribution. Quant. Eng. e37. https://doi.org/10.1002/que2.37 (2020).

Ahmadzadegan, A. Learning to Utilize Correlated Auxiliary Classical or Quantum Noise. arXiv:2006.04863v1 (2020).

Bausch, J. Recurrent Quantum Neural Networks. arXiv:2006.14619v1 (2020).

Xin, T. Improved Quantum State Tomography for Superconducting Quantum Computing Systems. arXiv:2006.15872v1 (2020).

Dong, K. et al. Distributed subkey-relay-tree-based secure multicast scheme in quantum data center networks. Opt. Eng. 59(6), 065102. https://doi.org/10.1117/1.OE.59.6.065102 (2020).

Krisnanda, T. et al. Probing quantum features of photosynthetic organisms. NPJ Quant. Inf. 4, 60 (2018).

Krisnanda, T. et al. Revealing nonclassicality of inaccessible objects. Phys. Rev. Lett. 119, 120402 (2017).

Krisnanda, T. et al. Observable quantum entanglement due to gravity. NPJ Quant. Inf. 6, 12 (2020).

Krisnanda, T. et al. Detecting nondecomposability of time evolution via extreme gain of correlations. Phys. Rev. A 98, 052321 (2018).

Krisnanda, T. Distribution of Quantum Entanglement: Principles and Applications, PhD Dissertation, Nanyang Technological University. arXiv:2003.08657 (2020).

Ghosh, S. et al. Universal Quantum Reservoir Computing. arXiv:2003.09569 (2020).

Quantum Internet Research Group (QIRG). https://datatracker.ietf.org/rg/qirg/about/ (2018).

Caleffi, M. End-to-End Entanglement Rate: Toward a Quantum Route Metric. 2017 IEEE Globecom. https://doi.org/10.1109/GLOCOMW.2017.8269080 (2018).

Caleffi, M. Optimal routing for quantum networks. IEEE Access https://doi.org/10.1109/ACCESS.2017.2763325 (2017).

Caleffi, M., Cacciapuoti, A. S. & Bianchi, G. Quantum Internet: from Communication to Distributed Computing. arXiv:1805.04360 (2018).

Castelvecchi, D. The quantum internet has arrived (Nature, News and Comment, 2018).

Cacciapuoti, A. S., Caleffi, M., Tafuri, F., Cataliotti, F. S., Gherardini, S. & Bianchi, G. Quantum Internet: Networking Challenges in Distributed Quantum Computing. arXiv:1810.08421 (2018).

Cuomo, D., Caleffi, M. & Cacciapuoti, A. S. Towards a Distributed Quantum Computing Ecosystem. arXiv:2002.11808v1 (2020).

Gyongyosi, L. Dynamics of entangled networks of the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-020-68498-x (2020).

Gyongyosi, L. & Imre, S. Routing space exploration for scalable routing in the quantum internet. Sci. Rep. https://doi.org/10.1038/s41598-020-68354-y (2020).

Khatri, S. Policies for elementary link generation in quantum networks. arXiv:2007.03193 (2020).

Chessa, S. & Giovannetti, V. Multi-level amplitude damping channels: quantum capacity analysis. arXiv:2008.00477 (2020).

Pozzi, M. G. et al. Using reinforcement learning to perform qubit routing in quantum compilers. arXiv:2007.15957 (2020).

Bartkiewicz, K. et al. Experimental kernel-based quantum machine learning in finite feature space. Sci. Rep. 10, 12356. https://doi.org/10.1038/s41598-020-68911-5 (2020).

Gattuso, H. et al. Massively parallel classical logic via coherent dynamics of an ensemble of quantum systems with dispersion in size. ChemRxiv. https://doi.org/10.26434/chemrxiv.12370538.v1 (2020).

Komarova, K. et al. Quantum device emulates dynamics of two coupled oscillators. J. Phys. Chem. Lett. https://doi.org/10.1021/acs.jpclett.0c01880 (2020).

Syed, L., Jabeen, S. & Manimala, S. Telemammography: a novel approach for early detection of breast cancer through wavelets based image processing and machine learning techniques. In Advances in Soft Computing and Machine Learning in Image Processing, Studies in Computational Intelligence (eds Hassanien, A. E. & Oliva, D. A.) (Springer, New York, 2018).

Liyakathunisa, R. & Ravi Kumar, C. N. A novel and robust wavelet based super resolution reconstruction of low resolution images using efficient denoising and adaptive interpolation. Int. J. Image Process. 4(4), 441 (2010).

Hinojosa, S., Pajares, G., Cuevas, E. & Ortega-Sanchez, N. Thermal image segmentation using evolutionary computation techniques. In Advances in Soft Computing and Machine Learning in Image Processing, Studies in Computational Intelligence (eds Hassanien, A. E. & Oliva, D. A.) (2018).

Oliva, D., Cuevas, E., Pajares, G., Zaldivar, D. & Perez-Cisneros, M. Multilevel thresholding segmentation based on harmony search optimization. J. Appl. Math. 2013, 575414 (2013).

Liu, H. & Cocea, M. Granular Computing Based Machine Learning, a Big Data Processing Approach, Studies in Big Data Vol. 35 (Springer, New York, 2018).

Liu, H., Gegov, A. & Cocea, M. Collaborative rule generation: An ensemble learning approach. J. Intell. Fuzzy Syst. 30(4), 2277–2287 (2016).

Tan, P. N., Kumar, V. & Srivastava, J. Selecting the right objective measure for association analysis. Inf. Syst. 29, 293–313 (2004).

Cao, Y., Giacomo Guerreschi, G. & Aspuru-Guzik, A. Quantum Neuron: An Elementary Building Block for Machine Learning on Quantum Computers. arXiv:1711.11240 (2017).

Dunjko, V. et al. Super-Polynomial and Exponential Improvements for Quantum-Enhanced Reinforcement Learning. arXiv:1710.11160 (2017).

Riste, D. et al. Demonstration of Quantum Advantage in Machine Learning. arXiv:1512.06069 (2015).

Yoo, S. et al. A quantum speedup in machine learning: Finding an N-bit Boolean function for a classification. New J. Phys. 16(10), 103014 (2014).

Lloyd, S. & Weedbrook, C. Quantum generative adversarial learning. Phys. Rev. Lett. arXiv:1804.09139 (2018).

Wiebe, N., Kapoor, A. & Svore, K. M. Quantum Deep Learning. arXiv:1412.3489 (2015).

Dorozhinsky, V. I. & Pavlovsky, O. V. Artificial Quantum Neural Network: Quantum Neurons, Logical Elements and Tests of Convolutional Nets. arXiv:1806.09664 (2018).

Torrontegui, E. & Garcia-Ripoll, J. J. Universal Quantum Perceptron as Efficient unitary Approximators. arXiv:1801.00934 (2018).

Hosen, M. A., Khosravi, A., Nahavandi, S. & Creighton, D. IEEE Trans. Ind. Electron. 62, 4420–4429 (2015).

Precup, R.-E., Angelov, P., Costa, B. S. J. & Sayed-Mouchaweh, M. An overview on fault diagnosis and nature-inspired optimal control of industrial process applications. Comput. Ind. 74, 75–94 (2015).

Saadat, J., Moallem, P. & Koofigar, H. Training echo state neural network using harmony search algorithm. Int. J. Artif. Intell. 15(1), 163–179 (2017).

Vrkalovic, S., Lunca, E.-C. & Borlea, I.-D. Model-free sliding mode and fuzzy controllers for reverse osmosis desalination plants. Int. J. Artif. Intell. 16(2), 208–222 (2018).

Las Heras, U., Alvarez-Rodriguez, U., Solano, E. & Sanz, M. Genetic algorithms for digital quantum simulations. Phys. Rev. Lett. 116, 230504 (2016).

Spagnolo, N. et al. Learning an unknown transformation via a genetic approach. Sci. Rep. 7, 14316 (2017).

Gheorghiu, A., Kapourniotis, T. & Kashefi, E. Verification of Quantum Computation: An Overview of Existing Approaches, Theory of Computing Systems (Springer, New York, 2018).

Liu, H. & Cocea, M. Semi-random partitioning of data into training and test sets in granular computing context. Granul. Comput. 2, 357–386 (2017).

Liu, H., Gegov, A. & Cocea, M. Unified framework for control of machine learning tasks towards effective and efficient processing of big data. In Data Science and Big Data: An Environment of Computational Intelligence, 123–140 (Springer, Switzerland, 2017).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004).

Acknowledgements

Open access funding provided by Budapest University of Technology and Economics (BME). The research reported in this paper has been supported by the Hungarian Academy of Sciences (MTA Premium Postdoctoral Research Program 2019), by the National Research, Development and Innovation Fund (TUDFO/51757/2019-ITM, Thematic Excellence Program), by the National Research Development and Innovation Office of Hungary (Project No. 2017-1.2.1-NKP-2017-00001), by the Hungarian Scientific Research Fund - OTKA K-112125 and in part by the BME Artificial Intelligence FIKP grant of EMMI (Budapest University of Technology, BME FIKP-MI/SC).

Author information

Contributions

L.GY. designed the protocol, wrote the manuscript, and analyzed the results.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gyongyosi, L. Objective function estimation for solving optimization problems in gate-model quantum computers. Sci Rep 10, 14220 (2020). https://doi.org/10.1038/s41598-020-71007-9

DOI: https://doi.org/10.1038/s41598-020-71007-9
