Abstract

We propose a regression algorithm that utilizes a learned dictionary optimized for sparse inference on a D-Wave quantum annealer. In this regression algorithm, we concatenate the independent and dependent variables as a combined vector, and encode the high-order correlations between them into a dictionary optimized for sparse reconstruction. On a test dataset, the dependent variable is initialized to its average value and then a sparse reconstruction of the combined vector is obtained in which the dependent variable is typically shifted closer to its true value, as in a standard inpainting or denoising task. Here, a quantum annealer, which can presumably exploit a fully entangled initial state to better explore the complex energy landscape, is used to solve the highly non-convex sparse coding optimization problem. The regression algorithm is demonstrated for a lattice quantum chromodynamics simulation data using a D-Wave 2000Q quantum annealer and good prediction performance is achieved. The regression test is performed using six different values for the number of fully connected logical qubits, between 20 and 64. The scaling results indicate that a larger number of qubits gives better prediction accuracy.

Similar content being viewed by others

Introduction

Sparse coding refers to a class of unsupervised learning algorithms for finding an optimized set of basis vectors, or dictionary, for accurately reconstructing inputs drawn from any given dataset using the fewest number of non-zero coefficients. Sparse coding explains the self-organizing response properties of simple cells in the mammalian primary visual cortex1,2, and has been successfully applied in various fields including image classification3,4, image compression5, and compressed sensing6,7. Optimizing a dictionary \(\varvec{\phi }\in \mathbb {R}^{M\times N_q}\) for a given dataset and inferring optimal sparse representations \(\varvec{a}^{(k)}\in \mathbb {R}^{N_q}\) of input data \(\mathbf {X}^{(k)}\in \mathbb {R}^{M}\) involves finding solutions of the following minimization problem:

where k is the index of the input data, and \(\lambda\) is the sparsity penalty parameter. Note that the convergence of the solution is guaranteed only when the norm of column vectors of the dictionary \(\varvec{\phi }\) is constrained by an upper bound, which is unity in this study. Because of the \(L_0\)-norm, the minimization problem falls into an NP-hard complexity class with multiple local minima8 in the energy landscape.

Recently, we developed a mapping of the \(\varvec{a}^{(k)}\)-optimization in Eq. (1) to the quadratic unconstrained binary optimization (QUBO) problem that can be solved on a quantum annealer and demonstrated its feasibility on the D-Wave systems9,10,11. The quantum processing unit of the D-Wave systems realizes the quantum Ising spin system in a transverse field and finds the lowest or the near-lowest energy states of the classical Ising model,

using quantum annealing12,13,14. Here \(s_{i}=\pm 1\) is the binary spin variable, \(h_i\) and \(J_{ij}\) are the qubit biases and coupling strengths that can be controlled by a user, and optimization for the Ising model is isomorphic to a QUBO problem with \(a_i = (s_i+1)/2\). By mapping the sparse coding to a QUBO structure, the sparse coefficients are restricted to binary variables \(a_i\in \{0,1\}\), and it makes the \(L_0\)-norm equivalent to the \(L_1\)-norm. Despite this restriction, it was able to provide good sparse representation for the the MNIST9,11,15 and CIFAR-1010,16 images.

In this paper, we propose a regression algorithm using the sparse coding on D-Wave 2000Q in Sect. Regression algorithm using sparse coding on D-Wave 2000Q and apply the algorithm to a prediction of quantum chromodynamics (QCD) simulation observable in Sect. Application to lattice QCD.

Regression algorithm using sparse coding on D-Wave 2000Q

Regression model

Consider N sets of training data \(\{\mathbf {X}^{(i)}, y^{(i)}\}_{i=1}^N\), and M sets of the test data \(\{\mathbf {X}^{(j)}\}_{j=1}^M\), where \(\mathbf {X}^{(i)}\equiv \{x_1^{(i)}, x_2^{(i)}, \ldots , x_D^{(i)}\}\) is an input vector known as the independent variable, and \(y^{(i)}\) is an output variable known as the dependent variable. A regression model F can be built by learning correlations between the input and output variables on the training dataset, so that it can make predictions \(\hat{y}\) of y for an unseen input data \(\mathbf {X}\) as

Such a regression model can be built using the sparse coding learning implemented on a quantum annealer described below.

-

Pre-training

-

(1)

Normalize \(x_d^{(i)}\) and \(y^{(i)}\) so that their standard deviations become comparable. One possible choice is rescaling the data to have a zero mean and a unit variance using the sample mean and sample variance of the training dataset. This procedure is an essential step for the regression algorithm as it makes the reconstruction error for each component comparable.

-

(2)

Using \(\mathbf {X}\) in the test dataset (M) or those in the combined training and test datasets (\(N+M\)), perform sparse coding training and obtain the dictionary \(\varvec{\phi }\) for \(\mathbf {X}\).

-

(1)

-

Training

-

(3)

Concatenate the input and output variables of the training dataset and build the concatenated vectors \(\widetilde{\mathbf {X}}^{(i)}\equiv \{x_1^{(i)}, x_2^{(i)}, \ldots , x_D^{(i)}, y^{(i)}\}\). Extend the dictionary matrix \(\varvec{\phi } \in \mathbb {R}^{D\times N_q}\) obtained in the pre-training to \(\widetilde{\varvec{\phi }}_o \in \mathbb {R}^{(D+1)\times N_q}\), filling up the new elements by zeros.

-

(4)

Taking \(\widetilde{\mathbf {X}}^{(i)}\) as the input signal and \(\widetilde{\varvec{\phi }}_o\) as an initial guess of the dictionary, perform sparse coding training on the training dataset and obtain the dictionary \(\widetilde{\varvec{\phi }}\). Through this procedure, \(\widetilde{\varvec{\phi }}\) will encode the correlation between \(x_d^{(i)}\) and \(y^{(i)}\).

-

(3)

-

Prediction

-

(5)

For the test dataset, for which only \(\mathbf {X}^{(j)}\) is given, build a vector \(\widetilde{\mathbf {X}}_o^{(j)}\equiv \{x_1^{(j)}, x_2^{(j)}, \ldots , x_D^{(j)}, \bar{y}^{(j)}\}\), where \(\bar{y}^{(j)}\) is an initial guess of \(y^{(j)}\). One possible choice of \(\bar{y}^{(j)}\) is the average value of \(y^{(i)}\) in the training dataset.

-

(6)

Using the dictionary \(\widetilde{\varvec{\phi }}\) obtained in (4), find a sparse representation \(\varvec{a}^{(j)}\) for \(\widetilde{\mathbf {X}}^{(j)}_o\) and calculate reconstruction as \(\widetilde{\mathbf {X}}'^{(j)} = \widetilde{\varvec{\phi }}\varvec{a}^{(j)}\). This replaces the outlier components, including \(\bar{y}^{(j)}\), in \(\widetilde{\mathbf {X}}^{(j)}_o\) by the values that can be described by \(\widetilde{\varvec{\phi }}\).

-

(7)

After inverse-normalization, the \((D+1)\)’th component of \(\widetilde{\mathbf {X}}'^{(j)}\) is the prediction of \(y^{(j)}\): \({(\widetilde{\mathbf {X}}'^{(j)})_{D+1} = \hat{y}^{(j)} \approx y^{(j)}}\).

-

(5)

In this regression model, D should be sufficiently large so that the initial guess of the dependent variable \(\bar{y}_j\) does not bias the reconstruction. This procedure can be extended to predict multiple variables by increasing the dimension of y, in exchange for prediction accuracy.

Sparse coding on a D-Wave quantum annealer

The \(\varvec{a}^{(k)}\)-optimization of the sparse coding problem in Eq. (1), can be mapped onto the D-Wave problem in Eq. (2), by the following transformations9,10,11:

In this mapping, each neuron, the sparse coefficient, of the sparse coding model corresponds to a qubit. After a measurement, the quantum state of a qubit collapses to 0 or 1, which indicates that the neuron can have only two states of fire (1) or silent (0). Here the qubit–qubit coupling \(\varvec{J}\) shares similarity with the lateral neuron–neuron inhibition in the locally competitive algorithm17, and the constant \(\lambda\) makes the solution sparse by acting a constant field forcing the qubits to stay in \(a_i=0\) (\(s_i=-1\)) state. By performing the quantum annealing for a given dictionary \(\varvec{\phi }\) and input data vector \(\mathbf {X}\) with the transformations given in Eq. (4), one can obtain the optimal sparse representation \(\varvec{a}\).

An ideal D-Wave 2000Q consists of 2048 qubits, and the entire coupling graph of this 2048-qubit system is called the perfect Chimera 2000Q, whose 1/16 subset is illustrated in Fig. 1. However, the graph is sparsely-connected in which one qubit can couple to only up to 6 other qubits. With the limited connectivity between the qubits, a perfect 2048-qubit Chimera has 6016 couplers. To map a general Ising model problem with arbitrary bipartite couplings to a D-Wave Chimera, in many cases, one requires an additional step called the embedding. In the case of the sparse coding problem, the embedding translates a graph of a fully-connected logical qubits to the Chimera graph of the partially-connected physical qubits by chaining a group of physical qubits together with a certain chain strength \(\xi\). One example of such a mapping of fully-connected logical 6 qubits to the D-Wave 2000Q by chaining 14 physical qubits is described in Fig. 1. This embedding procedure results in a significant reduction of the total available logical qubits that represent the mapped problem; on a perfect D-Wave 2000Q QPU, only up to 65 fully-connected logical qubits can be mapped. In practice, however, some qubits on the QPU are inoperable after a calibration, and the maximum number of logical qubits that could be embedded decreases. For example, the D-Wave 2000Q quantum annealer at Los Alamos National Laboratory (LANL) has only 2032 active qubits with 5924 active couplers. We find that an arbitrary QUBO problem up to 64 fully-connected logical qubits can be embedded in the LANL D-Wave 2000Q.

A subset (1/64) of the Chimera structure of the D-Wave 2000Q consisting of 32 qubits (circles) arranged in a \(2\times 2\) matrix of unit cells of 8 qubits. The qubits within a unit cell have relatively dense connections, while the interactions between the unit cells can be made through the sparse connections in their edges. This figure also shows an example of embedding 6 fully-connected logical qubits (numbers from 1 to 6 inside 14 circles) onto the D-Wave chimera using 14 physical qubits, in which red edges indicate bipartite couplings between qubits while blue edges indicate chained qubits. After such embedding, for example, the logical qubit 1 is mapped to two physical qubits tiled from one qubit in the top right and one in the bottom right unit cell, while the logical qubit 2 mapped to three physical qubits tiled from two qubits in the top left and one qubit in the top right unit cell, and so forth.

Application to lattice QCD

QCD is a theory of quarks and gluons, which are the fundamental particles composing hadrons such as pions and protons, and their interactions. It is a part of the Standard Model of particle physics, and the theory has been demonstrated by a large class of experiments over the decades18,19. Lattice QCD is a discrete formulation of QCD on a Euclidean space time lattice, which allows us to solve low-energy QCD problems using computer simulations by carrying out the Feynman path integration using Monte Carlo methods20,21.

In lattice QCD simulations, a large number of observables are calculated over an ensemble of the Gibbs samples of gluon fields, called the lattices, and computational cost for calculating those observables is expensive in modern simulations. However, the observables’ fluctuations over the statistical samples of the lattices are correlated as they share the same background lattice. By exploiting the correlation between them, in Ref.22, Gradient Tree Boosting (GTB) regression algorithm was able to replace the computationally expensive direct calculation of some observables by the computationally cheap machine learning predictions of them from other observables.

In this section, we apply the regression algorithm proposed in Sect. Regression algorithm using sparse coding on D-Wave 2000Q to the lattice QCD simulation data used for the calculation of the charge-parity (CP) symmetry violating phase \(\alpha _{\text {CPV}}\) of the neutron23,24. Here we consider three types of observables: (1) two-point correlation functions of neutrons calculated without CP violating (CPV) interactions \(C_{\text {2pt}}\), (2) \(\gamma _5\)-projected two-point correlation functions of neutrons calculated without CPV interactions \(C_{\text {2pt}}^P\), and (3) \(\gamma _5\)-projected two-point correlation functions of neutrons calculated with CPV interactions \(C_{\text {2pt}}^{P,\text {CPV}}\), and the phase \(\alpha _{\text {CPV}}\) is extracted from the imaginary part of \(C_{\text {2pt}}^{P,\text {CPV}}\). Those observables are calculated at multiple values of the nucleon source and sink separations in Euclidean time direction t.

Method

Our goal of the regression problem is to predict the imaginary part of \(C_{\text {2pt}}^{P,\text {CPV}}\) at \(t=10a\) from the real and imaginary parts of the two-point correlation functions calculated without CPV interactions, \(C_{\text {2pt}}\) and \(C_{\text {2pt}}^P\), at \(t=8a, 9a, 10a, 11a,\) and 12a, where a is the lattice spacing. It forms a problem with single value of output variable (y) and 20 values (two observables, real/imag, 5 timeslices) of the input variables (\(\mathbf {X}\)). In this application, we use 15616 data points of of these observables measured in Refs.25,26 divided into 6976 training data and 8640 test data. Using these datasets, we follow the regression procedure proposed in Sect. Regression algorithm using sparse coding on D-Wave 2000Q to predict y of the test dataset that contains around 9 K data points.

The procedure can be summarized as follows. First, we standardize the total data using the mean and variance of the training dataset for normalization. Then, we perform the pre-training and obtained \(\varvec{\phi }\) for the 20 elements of \(\mathbf {X}\) only using the test dataset. After appending the y to \(\mathbf {X}\) as the 21st element in the training dataset, we perform the sparse coding dictionary learning and update \(\varvec{\phi }\) to encode correlation between \(\mathbf {X}\) and y. For prediction, input vectors \(\mathbf {X}\) in the test dataset are augmented to dimension of 21 vectors by appending the average value of y, which is 0 after standardization. Finally, sparse coefficients \(\varvec{a}\) for the augmented input vectors are calculated with the fixed dictionary \(\varvec{\phi }\) obtained above, and predictions of y are estimated by taking the 21st element of the reconstructed vectors on the test dataset.

Original, or ground truth, data (blue circles) and the reconstruction from the missing-21st-element data using D-Wave 2000Q with \(N_q = 64\) (red squares) for two randomly chosen data points. Here the 21st-element is the dependent variable of the prediction, whose initial value before the reconstruction is given by 0.

Note that a sparse coding problem solves for the sparsest representation \(\varvec{a}\) and the dictionary \(\varvec{\phi }\), simultaneously, by minimizing Eq. (1). First, our optimization for \(\varvec{a}\) is performed using the D-Wave 2000Q at a given \(\varvec{\phi }\), whose initial guess is given, in general, by random numbers or via imprinting technique. Then, the optimization for \(\varvec{\phi }\) is performed on classical CPUs. The latter step is an offline learning for the fixed values of \(\varvec{a}\) obtained using D-Wave 2000Q. In the offline learning procedure, \(\varvec{\phi }\) is learned using the batch stochastic gradient descent (SGD) algorithm:

where \(E_b = \frac{1}{n_b}\sum _{i=1}^{n_b}{E_i}\) with \(E_i\) is the sparse coding energy function for a given input data given in Eq. (1), and \(\eta\) is the learning rate. In this study, \(\eta\) is initially set to 0.01 and gradually decreased during the training procedure. Batch-learning is used with the batch size of \(n_b = 50\). We repeat the iterative update of the quantum D-Wave inference for \(\varvec{a}\) and SGD learning for \(\varvec{\phi }\) until a convergence is attained. On average, we find the convergence after 4 or 5 iterations. In this study, we use the SAPI2 python client libraries27 for implementing D-Wave operations.

The sparsity of the sparse representation \(\varvec{a}\) associated with the sparsity penalty parameter \(\lambda\) is calculated by the ratio of nonzero elements in \(\varvec{a}\). In this study, \(\lambda\) is tuned to the values that make the average sparsity about 20%, because we find that the 20% of sparsity provides an optimal prediction performance, after examining a few different values of \(\lambda\). This corresponds to \(\lambda\) = [0.06, 0.1], which we varied for different \(N_q\) studies in our experiments. Although the prediction performance could be further optimized by an extensive parameter search, such as that performed in Ref.28, the procedure is computationally expensive so ignored in this proof-of-principle study.

Note that the definition of the overcompleteness is not straightforward for the D-Wave inferred sparse coding because the input signal \(\mathbf {X}\) may have arbitrary real numbers, while the sparse coefficients \(\varvec{a}\) could have only binary numbers of 0 or 1. Ignoring the subtlety, for simplicity, the overcompleteness \(\gamma\) for the input signal of dimension 20 (or 21 for extended vectors) can be calculated by \(\gamma = N_q/20\).

Results

Examples of the reconstruction and prediction from the randomly chosen test data points are visualized in Fig. 2. In the plot, the first 20 elements are the input variables, and the element 21 is the output of the prediction algorithm. As one can see, the reconstruction of the 21st element, which was 0 in their initial guess, is successfully shifted close to their ground truth, as expected.

Distribution of the prediction error \(\Delta ^{(i)}\) of the 21st element plotted against the distribution of the ground truth for different numbers of qubits \(N_q=20, 29, 38, 47, 55\), and 64. The narrower width of the prediction error indicates the better prediction. Standard deviations of the prediction errors for \(N_q= 20, 29, 38, 47, 55\), and 64 are 0.41, 0.375, 0.319, 0.29, 0.273 and 0.254, respectively. Scaling of the prediction error is summarized in Fig. 4.

In order to investigate the prediction accuracy for different \(N_q\), we explore the prediction algorithm with six different numbers of qubits \(N_q=20, 29, 38, 47, 55\) and 64, which corresponds to \(\gamma \approx 1 \sim 3\). Note that the larger \(N_q\) implies the more difficult optimization problem, and \(N_q=64\) is the maximum number of logical qubits that can be embedded onto the D-Wave 2000Q. In the experiments with the D-Wave 2000Q, we use annealing time \(\tau = 20 \mu s\), which is a relatively short annealing time. In addition, we run the experiments with 10 different values of the chain strengths \(\xi\) for each input data point to obtain an optimal solution in exchange for longer wallclock time. We performed our D-Wave experiments using 20 reads for each value of \(\xi\) and take the lowest energy solution. In Fig. 3, we show the distribution of the normalized original data of the dependent variable \(y^{(i)}\) and its prediction error \(\Delta ^{(i)}\) defined by the difference between the ground truth \(y^{(i)}\) and its prediction \(\hat{y}^{(i)}\): \({\Delta ^{(i)} = y^{(i)} - \hat{y}^{(i)}}\). It is clearly demonstrated that (1) the prediction error is much smaller than the fluctuation of the original data, (2) the prediction error is sharply distributed near 0, which indicates no obvious bias in the prediction, and (3) the prediction error tends to be smaller when \(N_q\) becomes larger.

To evaluate the prediction quality, the recovery of the 21st element in the extended input vector, quantitatively, we calculate the ratio of the standard deviations of the prediction error and that of the original data: \({Q \equiv \sigma (\Delta ) / \sigma (y)}\). Q converges to 0 when the prediction is precise, and \(Q\ge 1\) indicates no prediction for a statistical data. Note that this definition of the prediction quality does not account for the bias of the prediction because the bias for the prediction of a statistical data can be removed by following the procedure introduced in Ref.22 based on the variance reduction technique for lattice QCD calculations29,30.

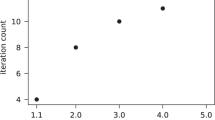

Figure 4 shows the prediction error Q as a function of the number of qubits. It is clearly demonstrated that the systematic decrease of the prediction error as \(N_q\) is increased. Although no theory explaining the scaling is known, we find that the scaling roughly follows the exponential decay ansatz \(Q_\infty +B\cdot \exp [-C\cdot N_q]\). By fitting the ansatz to the data points, an asymptotic value of the prediction quality is obtained as \(Q_\infty \approx 0.18\) or 0.23 for \(N_q \rightarrow \infty\), depending on whether we include \(N_q=20\) data point or not in the fit. For a comparison, regression algorithms provided by the scikit-learn library31 on a classical computer are investigated for the same dataset, and GTB regression algorithm32,33,34 showed the best prediction performance with \(Q=0.15(1)\).

Prediction error Q with the sparse coding regression algorithm implemented on D-Wave 2000Q applied to prediction of the lattice QCD simulation data as a function of \(N_q\) (red squares). An exponential ansatz is fitted to the data points for all \(N_q\) (blue solid line) and those excluding \(N_q=20\) (green dashed line).

The data points in Fig. 4 are obtained with a fixed training and test datasets without cross validation because of the limited D-Wave 2000Q resources. However, the classical regression algorithms we applied on the same datasets showed prediction quality Q between 0.15 and 0.33 with 2 and 8.6% uncertainties, where the uncertainties are estimated using the bootstrap resampling method following Ref.22. Based on this observation, we expect smaller than 10% uncertainties for the data points presented in Fig. 4.

Pre-training is demonstrated to lower the prediction error of this regression algorithm, significantly. When performed the prediction with \(N_q=64\) qubits without the pre-training procedure, we find that \(Q=0.34\), while it becomes \(Q=0.254\) with the pre-training. Without the pre-training, furthermore, we find that the required number of iterative updates of the D-Wave inference for \(\varvec{a}\) and SGD learning for \(\varvec{\phi }\) is increased to about 10 iterations.

Conclusion

In this paper, we proposed a regression algorithm using sparse coding dictionary learning that can be implemented on a quantum annealer, based on the formulation of a regression as an inpainting problem. A pre-training technique is introduced to improve the prediction quality. The procedure is described in Sect. Regression model. The regression algorithm was numerically demonstrated using a set of lattice QCD simulation observables and was able to predict the correlation function calculated in the presence of the CPV interactions from those calculated without the CPV interaction. The regression experiment is carried out using the D-Wave 2000Q quantum annealer with minor embedding technique in order to obtain fully-connected logical qubits. The study is performed for six different values of the number of qubits between 20 and 64, and it showed a systematic decrease of the prediction error as the number of qubits is increased (see Fig. 4). With a larger number of qubits and elaborately tuned the sparsity parameter, we expect further improved performance in the future.

References

Olshausen, B. & Field, D. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381, 607–609 (1996).

Olshausen, B. A. & Field, D. J. Sparse coding with an overcomplete basis set: A strategy employed by v1?. Vis. Res. 37, 3311–3325. https://doi.org/10.1016/S0042-6989(97)00169-7 (1997).

Yang, J., Yu, K., Gong, Y. & Huang, T. Linear spatial pyramid matching using sparse coding for image classification. In 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2009, pp. 1794–1801. https://doi.org/10.1109/CVPR.2009.5206757 (2009).

Coates, A. & Ng, A. Y. The importance of encoding versus training with sparse coding and vector quantization. In Proceedings of the 28th International Conference on International Conference on Machine Learning, ICML’11, pp. 921–928 (Omnipress, USA, 2011).

Watkins, Y., Sayeh, M., Iaroshenko, O. & Kenyon, G. T. Image compression: Sparse coding vs. bottleneck autoencoders. (2017). arXiv:1710.09926.

Candes, E. J., Romberg, J. & Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509. https://doi.org/10.1109/TIT.2005.862083 (2006).

Donoho, D. L. Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306. https://doi.org/10.1109/TIT.2006.871582 (2006).

Natarajan, B. K. Sparse approximate solutions to linear systems. SIAM J. Comput. 24, 227–234. https://doi.org/10.1137/S0097539792240406 (1995).

Nguyen, N. T. T. & Kenyon, G. T. Solving sparse representation for object classification using quantum D-wave 2x machine. In The First IEEE International Workshop on Post Moore’s Era Supercomputing, PMES, pp. 43–44 (2016).

Nguyen, N. T. T., Larson, A. E. & Kenyon, G. T. Generating sparse representations using quantum annealing: Comparison to classical algorithms. In 2017 IEEE International Conference on Rebooting Computing (ICRC), pp. 1–6. https://doi.org/10.1109/ICRC.2017.8123653 (2017).

Nguyen, N. T. T. & Kenyon, G. T. Image classification using quantum inference on the D-Wave 2x. In 2018 IEEE International Conference on Rebooting Computing (ICRC), pp. 1–7 (2018). arXiv:1905.13215.

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse ising model. Phys. Rev. E 58, 5355–5363. https://doi.org/10.1103/PhysRevE.58.5355 (1998).

Finnila, A., Gomez, M., Sebenik, C., Stenson, C. & Doll, J. Quantum annealing: A new method for minimizing multidimensional functions. Chem. Phys. Lett. 219, 343–348. https://doi.org/10.1016/0009-2614(94)00117-0 (1994).

D-Wave systems. http://www.dwavesys.com/.

LeCun, Y. & Cortes, C. MNIST Handwritten Digit Database. https://doi.org/10.1016/S0042-6989(97)00169-70 (2010).

Krizhevsky, A., Nair, V. & Hinton, G. Cifar-10 (Canadian Institute for Advanced Research).

Rozell, C., Johnson, D., Baraniuk, R. & Olshausen, B. Sparse coding via thresholding and local competition in neural circuits. Neural Comput. 20, 2526–2563 (2008).

Patrignani, C. et al. Review of particle physics. Chin. Phys. C40, 100001. https://doi.org/10.1016/S0042-6989(97)00169-71 (2016).

Greensite, J. An introduction to the confinement problem. Lect. Notes Phys. 821, 1–211. https://doi.org/10.1016/S0042-6989(97)00169-72 (2011).

Wilson, K. G. Confinement of quarks. Phys. Rev. D 10(319), 2445–2459. https://doi.org/10.1016/S0042-6989(97)00169-73 (1974).

Creutz, M. Monte Carlo study of quantized SU(2) gauge theory. Phys. Rev. D 21, 2308–2315. https://doi.org/10.1016/S0042-6989(97)00169-74 (1980).

Yoon, B., Bhattacharya, T. & Gupta, R. Machine learning estimators for lattice QCD observables. Phys. Rev. D 100, 014504. https://doi.org/10.1016/S0042-6989(97)00169-75 (2019) (arXiv:1807.05971).

Yoon, B., Bhattacharya, T. & Gupta, R. Neutron electric dipole moment on the lattice. EPJ Web Conf. 175, 01014. https://doi.org/10.1016/S0042-6989(97)00169-76 (2018) (arXiv:1712.08557).

Pospelov, M. & Ritz, A. Electric dipole moments as probes of new physics. Ann. Phys. 318, 119–169. https://doi.org/10.1016/S0042-6989(97)00169-77 (2005) (arXiv:hep-ph/0504231).

Bhattacharya, T., Cirigliano, V., Gupta, R., Mereghetti, E. & Yoon, B. Neutron Electric dipole moment from quark chromoelectric dipole moment. PoS LATTICE2015, 238 (2016) (arXiv:1601.02264).

Bhattacharya, T., Cirigliano, V., Gupta, R. & Yoon, B. Quark chromoelectric dipole moment contribution to the neutron electric dipole moment. PoS LATTICE2016, 225 (2016) (arXiv:1612.08438).

D-Wave Solver API. https://doi.org/10.1016/S0042-6989(97)00169-78.

Carroll, J., Carlson, N. & Kenyon, G. T. Phase Transitions in Image Denoising via Sparsely Coding Convolutional Neural Networks, pp. 1–4 (2017) https://doi.org/10.1016/S0042-6989(97)00169-79.

Bali, G. S., Collins, S. & Schafer, A. Effective noise reduction techniques for disconnected loops in Lattice QCD. Comput. Phys. Commun. 181, 1570–1583. arXiv:1710.099260 (2010) (arXiv:0910.3970).

Blum, T., Izubuchi, T. & Shintani, E. New class of variance-reduction techniques using lattice symmetries. Phys. Rev. D 88, 094503. arXiv:1710.099261 (2013) (arXiv:1208.4349).

Pedregosa, F. et al. Scikit-learn: Machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Breiman, L., Friedman, J., Stone, C. & Olshen, R. Classification and Regression Trees. The Wadsworth and Brooks–Cole Statistics-Probability Series (Taylor & Francis, Boca Raton, 1984).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29, 1189–1232 (2000).

Friedman, J. H. Stochastic gradient boosting. Comput. Stat. Data Anal. 38, 367–378. https://doi.org/10.1016/S0167-9473(01)00065-2 (2002).

Acknowledgements

The sparse coding optimizations were carried out using the D-Wave 2000Q at Los Alamos National Laboratory (LANL). Simulation data used for the numerical experiment were generated using the computer facilities at (1) the National Energy Research Scientific Computing Center, a DOE Office of Science User Facility supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231; and (2) the Oak Ridge Leadership Computing Facility at the Oak Ridge National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC05-00OR22725; (3) the USQCD Collaboration, which is funded by the Office of Science of the U.S. Department of Energy, (4) Institutional Computing at Los Alamos National Laboratory. This work was supported by the U.S. Department of Energy, Office of Science, Office of High Energy Physics under Contract No. 89233218CNA000001. BY also acknowledges support from the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research and Office of Nuclear Physics, Scientific Discovery through Advanced Computing (SciDAC) program, and the LANL LDRD program.

Author information

Authors and Affiliations

Contributions

B.Y., N.N., and G.K. designed the research, N.N. conducted the machine learning related experiments on D-Wave 2000Q, and B.Y. and N.N analyzed the results. B.Y., N.N. and G.K. contributed to writing the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nguyen, N.T.T., Kenyon, G.T. & Yoon, B. A regression algorithm for accelerated lattice QCD that exploits sparse inference on the D-Wave quantum annealer. Sci Rep 10, 10915 (2020). https://doi.org/10.1038/s41598-020-67769-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-67769-x

This article is cited by

-

Lossy compression of statistical data using quantum annealer

Scientific Reports (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.