Abstract

Natural and social multivariate systems are commonly studied through sets of simultaneous and time-spaced measurements of the observables that drive their dynamics, i.e., through sets of time series. Typically, this is done via hypothesis testing: the statistical properties of the empirical time series are tested against those expected under a suitable null hypothesis. This is a very challenging task in complex interacting systems, where statistical stability is often poor due to lack of stationarity and ergodicity. Here, we describe an unsupervised, data-driven framework to perform hypothesis testing in such situations. This consists of a statistical mechanical approach—analogous to the configuration model for networked systems—for ensembles of time series designed to preserve, on average, some of the statistical properties observed on an empirical set of time series. We showcase its possible applications with a case study on financial portfolio selection.

Introduction

Hypothesis testing lies at the very core of the scientific method. In its general formulation, it hinges upon contrasting the observed statistical properties of a system with those expected under a null hypothesis. In particular, hypothesis testing allows one to discard candidate models of a system when empirical measurements are made that would be exceedingly unlikely under them.

However, there is often no theory to guide the investigation of a system’s dynamics. What is worse, in many practical situations one may be given a single, and possibly unreproducible, set of experimental data. This is indeed the case when dealing with most complex systems, whose collective dynamics are often markedly non-stationary, ranging from climate1,2 to brain activity3 and financial markets4,5,6. This, in turn, makes hypothesis testing in complex systems a very challenging task, which can prevent one from assessing which properties observed in a given data sample are “untypical”, i.e., unlikely to be observed again in a sample collected at a different point in time.

This issue is usually tackled by constructing ensembles of artificial time series sharing some characteristics with those generated by the dynamics of the system under study. This can be done either via modelling or in a purely data-driven way. In the latter case, the technique most frequently used by both researchers and practitioners is bootstrapping7,8, which amounts to generating partially randomised versions of the available data via resampling that can then be used as a null benchmark to perform hypothesis testing. Depending on its specificities, bootstrapping can account for autocorrelations and cross-correlations in time series sampled from multivariate systems. However, it relies on assumptions, such as sample independence and some form of stationarity9, which limit its power when dealing with complex systems.

As far as model-driven approaches are concerned, the literature is extremely vast10. Broadly speaking, modelling approaches rely on a priori structural assumptions for the system’s dynamics, and on identifying the parameter values that best explain the available set of observations within a certain class of models (e.g., via Maximum Likelihood10). A widely used class for multivariate time series is that of autoregressive models, such as VAR11, ARMA12, and GARCH13, which indeed were originally introduced, among other reasons, to perform hypothesis testing12. In such models, the future values of each time series are given by a linear combination of past values of one or more time series, each characterised by its own idiosyncratic noise to capture the fluctuations of individual variables. Such a structure is most often dictated by its simplicity rather than by first principles. As a consequence, once calibrated, autoregressive models produce rather constrained ensembles of time series, which do not allow one to explore scenarios that differ substantially from those observed empirically.

Another modelling philosophy places more emphasis on capturing the structural collective properties of multivariate systems rather than their dynamical ones. Random Matrix models are a prime example in this direction. They usually rely on ansatzes on the correlation structure of the system under study, trying to strike a balance between the resulting models’ analytical tractability and their adherence to empirical observations. One of the first (and still most widely used) Random Matrix models is the Wishart Ensemble14,15, which in its simplest form leads to the much celebrated Marčenko–Pastur distribution16 for uncorrelated systems; more recent developments along this line tackle non-stationarities in financial data17.

Here we propose a maximum entropy approach, inspired by Statistical Mechanics, to perform hypothesis testing on sets of time series. Starting from the maximum entropy principle, we will introduce a (grand)canonical ensemble of correlated time series subject to constraints based on the properties of an empirically observed set of measurements. This, in turn, will result in a multivariate probability distribution which allows one to sample, without bias, values centred on such measurements; this distribution represents the main contribution of this paper. The theory we propose in the following shares some similarities with the canonical ensemble of complex networks18,19,20,21, and, as we will show, inherits its powerful calibration method based on Likelihood maximization22.

The paper is organized as follows. In the next section, we outline the general formalism of our approach. Then, as a formative example, we show how the methodology introduced can be used to reconstruct an unknown probability density function from repeated measurements over time. After that, we proceed to study the most general case of multivariate time series, showing how our approach recovers collective statistical properties of interacting systems without directly accounting for such interactions in the set of constraints imposed on the ensemble. Before concluding with some final remarks, we present an application to financial portfolio selection, and we briefly mention an interesting analogy between our approach and Jaynes’ Maximum Caliber principle23.

Figure 1. Schematic representation of the model. Starting from an empirical set of time series \({\overline{W}}\), we construct its unbiased randomization by finding the probability measure P(W) on the phase space \({{\fancyscript {W}}}\) which maximises Gibbs’ entropy while preserving the constraints \(\{{{\fancyscript {O}}}_l({\overline{W}}) \}_{l=1}^L\) as ensemble averages. The probability distribution P(W) depends on L parameters that can be found by maximising the likelihood of drawing \({\overline{W}}\) from the ensemble. In the figure, orange, turquoise and black are used to indicate positive, negative or empty values of the entries \(W_{i t}\), respectively, while brighter shades of each color are used to display higher absolute values. As can be seen, the distribution P(W) assigns higher probabilities to those sets of time series that are more consistent with the constraints and therefore more similar to \({\overline{W}}\). See20 for a similar chart in the case of the canonical ensemble of complex networks.

General framework description

Let \({{\fancyscript {W}}}\) be the set of all real-valued sets of N time series of length T (i.e., the set of real-valued matrices of size \(N \times T\)), and let \({\overline{W}} \in {{\fancyscript {W}}}\) be the empirical set of data we want to perform hypothesis testing on (i.e., \({\overline{W}}_{i t}\) stores the value of the ith variable in the system sampled at time t, so that \({\overline{W}}_{i t}\) for \(t = 1, \ldots , T\) represents the sampled time series of variable i). Our aim is to define a probability density function P(W) on \({{\fancyscript {W}}}\) such that the expectation values \(\langle {{\fancyscript {O}}}_\ell (W) \rangle\) of a set of observables (\(\ell = 1, \ldots , L\)) coincide with the value \({\overline{O}}_\ell\) of the corresponding quantities as empirically measured from \({\overline{W}}\).

Following Boltzmann and Gibbs, we can achieve the above by invoking the maximum entropy principle, i.e., by identifying P(W) as the distribution that maximises the entropy functional \(S(W) = - \sum _{W \in {{\fancyscript {W}}}} P(W) \, \ln P(W)\), while satisfying the L constraints \(\langle {{\fancyscript {O}}}_\ell (W) \rangle = \sum _W {{\fancyscript {O}}}_\ell (W) P(W) = {\overline{O}}_\ell\) and the normalisation condition \(\sum _W P(W) = 1\). As is well known24,25, this reads

\[ P(W) = \frac{e^{-H(W)}}{Z}, \]

where \(H(W) = \sum _\ell \beta _\ell \ {{\fancyscript {O}}}_\ell (W)\) is the Hamiltonian of the system, \(\beta _\ell\) (\(\ell = 1, \ldots , L\)) are Lagrange multipliers introduced to enforce the constraints, and \(Z =\sum _W e^{- H(W)}\) is the partition function of the ensemble, which verifies \(\langle {{\fancyscript {O}}}_\ell (W) \rangle = - \partial \ln Z / \partial \beta _\ell , \forall \, \ell\).

Figure 1 provides a sketch representation of the ensemble theory just introduced. The rationale for enforcing the aforementioned constraints is that of finding a distribution P(W) that assigns low probability to regions of the phase space \({{\fancyscript {W}}}\) where the observables associated with the Lagrange multipliers \(\beta _\ell\) take values that are exceedingly different from those measured in the empirical set \({\overline{W}}\), and high probability to regions where some degree of similarity with \({\overline{W}}\) is retained (it should be noted that in some cases this does not necessarily lead to the distribution P(W) being peaked around the values \({\overline{O}}_\ell\)). This, in turn, allows one to test whether other properties of \({\overline{W}}\) (not encoded in any of the constraints) are statistically significant by measuring how often they appear in instances drawn from the ensemble. The existence and uniqueness of the Lagrange multipliers ensuring the ensemble’s ability to preserve the constraints \({\overline{O}}_\ell\) is a well-known result, and these multipliers coincide with those obtained by maximising the Likelihood of drawing the empirical matrix \({\overline{W}}\) from the ensemble26.
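The calibration step described above can be illustrated on a deliberately small toy problem. The sketch below is not taken from the paper: it assumes a hypothetical six-state phase space with a single observable (the state value itself) and solves for the one Lagrange multiplier by bisection, so that the ensemble average matches a chosen target.

```python
import math

def maxent_distribution(states, beta):
    """P(w) = exp(-beta * w) / Z on a finite toy phase space, with O(w) = w."""
    weights = [math.exp(-beta * w) for w in states]
    z = sum(weights)  # partition function
    return [x / z for x in weights]

def ensemble_mean(states, beta):
    probs = maxent_distribution(states, beta)
    return sum(w * p for w, p in zip(states, probs))

def solve_multiplier(states, target, lo=-50.0, hi=50.0, tol=1e-12):
    """Bisection on beta; the ensemble mean decreases monotonically in beta."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if ensemble_mean(states, mid) > target:
            lo = mid  # mean still too large: beta must increase
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

# Hypothetical toy setting: six states, empirical average 4.5.
states = [1, 2, 3, 4, 5, 6]
target_mean = 4.5
beta = solve_multiplier(states, target_mean)
probs = maxent_distribution(states, beta)
```

For independent observations with empirical mean \({\overline{O}}\), the log-likelihood per observation in this toy ensemble is \(-\beta {\overline{O}} - \ln Z\), whose derivative with respect to \(\beta\) vanishes exactly when the ensemble mean equals \({\overline{O}}\). This is the equivalence between constraint matching and Likelihood maximisation mentioned in the text.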

In the two following Sections, we shall illustrate our general framework on two examples—one devoted to the single time series case, one to the multivariate case. In both examples, we shall make a selection of possible constraints that can be analytically captured by the approach, i.e., constraints for which the resulting ensemble’s partition function can be computed in closed form. In particular, since we will apply our approach to financial data in a later section, we will choose constraints that have a clear interpretation in the analysis of financial time series. However, it should be kept in mind that such constraints are by no means to be interpreted as general prescriptions, and all results presented in the following could be reobtained—depending on the applications of interest—with any other set of constraints allowing for analytical solutions.

Single time series

As a warm-up example to showcase our approach, we shall consider the simple case of a univariate and stationary system with no correlations over time. This amounts to a time series made of independent and identically distributed random draws from a probability density function, which we aim to reconstruct. In the next section we will then proceed to consider multivariate and correlated cases.

Let us then consider a \(1 \times T\) empirical data matrix \({\overline{W}}\) coming from T repeated samples of an observable of the system under consideration. If the process is stationary and time-independent, this is equivalent to sampling T times a random variable from its given, unknown, distribution, and therefore the task of the model can be translated into reconstructing the unknown distribution given the data. Let us consider a vector \(\xi \in [0,1]^d\) and the associated empirical \(\xi\)-quantiles \({\overline{q}}_{\xi }\) calculated on \({\overline{W}}\). In order to fully capture the information present in the data \({\overline{W}}\), we are going to constrain our ensemble to preserve, as averages, one or more quantities derived from \({\overline{q}}_{\xi }\). Possible choices may be:

The number of data points falling within each pair of empirically observed adjacent quantiles:

\({\overline{N}}_{\xi _i} = \sum _t \Theta ({\overline{W}}_t-{\overline{q}}_{\xi _{i-1}}) \; \Theta (-{\overline{W}}_t+{\overline{q}}_{\xi _{i}})\)

The cumulative values of the data points falling within each pair of adjacent quantiles:

\({\overline{M}}_{\xi _i} = \sum _t {\overline{W}}_t \; \Theta ({\overline{W}}_t-{\overline{q}}_{\xi _{i-1}}) \; \Theta (-{\overline{W}}_t+{\overline{q}}_{\xi _{i}})\)

The cumulative squared values of the data points falling within each pair of adjacent quantiles:

\({\overline{M}}_{\xi _i}^2 = \sum _t {\overline{W}}_t^2 \; \Theta ({\overline{W}}_t-{\overline{q}}_{\xi _{i-1}}) \; \Theta (-{\overline{W}}_t+{\overline{q}}_{\xi _{i}})\)

In each of the above constraints we assumed \(i=2,\ldots ,d\), and we have used \(\Theta (\cdot )\) to indicate Heaviside’s step function (i.e., \(\Theta (x) = 1\) for \(x > 0\), and \(\Theta (x) = 0\) otherwise). In general we are not required to use the same \(\xi\) for all constraints. For example, we can freely choose to impose on the ensemble the ability to preserve \({\overline{N}}_{\xi _i}\) for all i together with the total cumulative values \({\overline{M}} = \sum _i {\overline{M}}_{\xi _i}\) and the total cumulative squared values \({\overline{M}}^2 = \sum _i {\overline{M}}_{\xi _i}^2\), or to preserve each \({\overline{M}}_{\xi _i}\) and \({\overline{M}}_{\xi _i}^2\) separately. Note that the first constraint in the above list effectively amounts to constraining the ensemble’s quantiles.
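As a concrete illustration of the three constraint families, the following sketch computes the counts, cumulative values and cumulative squared values per pair of adjacent quantiles. The function names, the linear-interpolation quantile rule and the synthetic Gaussian data are assumptions made here for illustration; the outer edges are set to \(\pm \infty\), and the strict inequalities mirror the definition of \(\Theta\) given above.

```python
import random

def interior_quantile(sorted_data, xi):
    """Linear-interpolation quantile of pre-sorted data (one common convention)."""
    pos = xi * (len(sorted_data) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_data) - 1)
    return sorted_data[lo] + (pos - lo) * (sorted_data[hi] - sorted_data[lo])

def bin_constraints(data, xi_levels):
    """Return per-bin counts N, cumulative values M and cumulative squares M2."""
    s = sorted(data)
    edges = ([float("-inf")]
             + [interior_quantile(s, xi) for xi in xi_levels]
             + [float("inf")])
    counts, sums, sq_sums = [], [], []
    for left, right in zip(edges[:-1], edges[1:]):
        # Strict inequalities: Theta(x) = 1 only for x > 0.
        in_bin = [w for w in data if left < w < right]
        counts.append(len(in_bin))
        sums.append(sum(in_bin))
        sq_sums.append(sum(w * w for w in in_bin))
    return counts, sums, sq_sums

# Synthetic data, used only to exercise the function.
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(1000)]
counts, sums, sq_sums = bin_constraints(data, [0.25, 0.5, 0.75])
```

Summing the per-bin cumulative values recovers the total of the data, which is the sense in which the totals \({\overline{M}}\) and \({\overline{M}}^2\) are coarser constraints than their per-bin counterparts.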

As we will discuss more extensively later on, the above set of constraints is to some extent arbitrary. A possible strategy to remove such arbitrariness would be to partition the data with a binning procedure that strikes a compromise between resolution and relevance, as defined in Ref.27.

Each choice of constraints leads to a different Hamiltonian, to a different number of Lagrange multipliers, and therefore to a different statistical model. If we choose, for example, to adopt all the constraints specified above, the Hamiltonian H of the ensemble will depend on a total of \(3 (d-1)\) parameters:
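One Hamiltonian consistent with adopting all three constraint families can be written as follows. The multiplier names \(\alpha _i\), \(\beta _i\), \(\gamma _i\) are illustrative assumptions, not notation fixed by the text, and \(N_{\xi _i}(W)\), \(M_{\xi _i}(W)\), \(M^2_{\xi _i}(W)\) denote the quantities defined above evaluated on a generic W rather than on \({\overline{W}}\):

```latex
H(W) = \sum_{i=2}^{d} \left[ \alpha_i \, N_{\xi_i}(W)
     + \beta_i \, M_{\xi_i}(W)
     + \gamma_i \, M^{2}_{\xi_i}(W) \right]
```

With \(i = 2, \ldots , d\) there are \(d-1\) pairs of adjacent quantiles and three multipliers per pair, which gives the \(3(d-1)\) parameters quoted above.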

The freedom to choose the number of constraints of course comes at a cost. First of all, it must be noted that the Likelihood of the data matrix \({\overline{W}}\) will in general be a non-linear function of the Lagrange multipliers and therefore of the constraints. The latter can vary both in magnitude (by choosing different values for the entries of \(\xi\)) and in number (by choosing a different d). In general, finding the optimal positions for the constraints, given their number d, can become highly non-trivial and is beyond the scope of the present work. However, loosely speaking, the Likelihood of finding \({\overline{W}}\) after a random draw from the defined ensemble is an increasing function of the number of constraints, consistent with the idea that a larger number of parameters leads to a better fit of the data used to train the model. As a result, in order to avoid overfitting, given a set of constraints, we can compare different values of d by using standard model selection techniques such as the Bayesian28 or Akaike information criteria29.

In the following we are going to show how to apply the methodology just outlined to a synthetic dataset. For this example, let us assume that the data generating process follows a balanced mixture of a truncated standard Normal distribution and a truncated Student’s t-distribution with \(\nu =5\) degrees of freedom. The two models we are going to use to build the respective ensembles are specified by the following Hamiltonians:

The model resulting from \(H_1\) will have a total of \(2(d-1)\) parameters and will preserve the average number of data points contained within each pair of adjacent quantiles together with their cumulative values, while the model resulting from \(H_2\) will be characterised by \(d+1\) parameters and will preserve the average number of data points contained within each pair of adjacent quantiles together with the overall mean and variance calculated across all data points. In order to find the Lagrange multipliers able to preserve the chosen constraints, we first need to find the partition functions of the two ensembles \(Z_{1,2} = \sum _{W} e^{- H_{1,2}(W)}\). To do so, we must specify the sum over the phase space:

The above expression leads to the following partition functions (in the Supplementary Information we present a detailed derivation of the partition function shown in the next Section; the following result can be obtained with similar steps):

where \({\text {erf}}\) denotes the Gaussian error function \({\text {erf}}(z) = \frac{2}{\sqrt{\pi }} \int _{0}^{z} e^{-t^2} dt\).

In Fig. 2 we show how the models resulting from the partition functions \(Z_1\) and \(Z_2\) are able to reconstruct the underlying true distribution starting from different amounts of information (i.e., different sample sizes) and with the quantile vector set to \({\overline{q}} = [-\infty ,{\overline{q}}_{0.25},{\overline{q}}_{0.5},{\overline{q}}_{0.75},\infty ]\) (the steps to obtain the ensemble’s probability density function from its partition function are outlined in the Supplementary Information for the case discussed in the next Section). First of all we note that, as expected, estimating the unknown distribution from more data gives estimates that are closer to the real underlying distribution. Moreover, looking at Fig. 2, we can qualitatively see that the model described by \(Z_1\) does a better job than \(Z_2\) at inferring the unknown pdf. We can verify both statements more quantitatively by calculating the Kullback–Leibler divergence of the estimated distributions from the true one: for the case with 40 data points we observe \(D_{KL}(P_{Z_1} | P_{T}) = 0.10\) and \(D_{KL}(P_{Z_2} | P_{T}) = 0.19\), while for the case with 4000 samples we have \(D_{KL}(P_{Z_1} | P_{T}) = 0.01\) and \(D_{KL}(P_{Z_2} | P_{T}) = 0.08\). Of course, we cannot yet conclude that \(Z_1\) gives an overall better model for our reconstruction task than \(Z_2\), since the two are described by a different number of parameters. As mentioned above, in order to complete our model comparison exercise, we need to rely on a test able to assess the relative quality of the models for a given set of data. We choose the Akaike information criterion, which uses as its score function \({\text {AIC}} = 2 k - 2 \log {\hat{L}}\), where k is the number of estimated parameters and \({\hat{L}}\) is the maximum value of the likelihood function for the model. We end up with \({\text {AIC}}_{Z_1} = 130\) and \({\text {AIC}}_{Z_2} = 950\) for the 40 data points case, and \({\text {AIC}}_{Z_1} = 1.15 \times 10^4\) and \({\text {AIC}}_{Z_2} = 2.11 \times 10^{5}\) when 4000 data points are available. It is worth repeating that all the steps mentioned above are performed given a fixed vector \({\overline{q}}\) common to the two models.
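The two scores used in this comparison are simple to compute once densities and maximised log-likelihoods are available. The sketch below is a generic illustration rather than a reproduction of the figures quoted above: it approximates the Kullback–Leibler divergence by a Riemann sum on a uniform grid and evaluates the AIC formula, using two unit-variance Gaussians and arbitrary numbers as stand-ins.

```python
import math

def kl_divergence(p, q, dx):
    """Riemann-sum approximation of the integral of p * ln(p / q) on a uniform grid."""
    return sum(pi * math.log(pi / qi) * dx for pi, qi in zip(p, q) if pi > 0.0)

def aic(num_params, max_log_likelihood):
    """Akaike information criterion: 2k - 2 ln(L_hat)."""
    return 2 * num_params - 2 * max_log_likelihood

def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

# Stand-in densities (not the mixture used in the text): N(0, 1) versus N(1, 1).
dx = 0.001
grid = [-10.0 + i * dx for i in range(20001)]
p = [normal_pdf(x, 0.0, 1.0) for x in grid]
q = [normal_pdf(x, 1.0, 1.0) for x in grid]
kl_pq = kl_divergence(p, q, dx)  # analytical value for this pair is 0.5

# Arbitrary illustrative inputs: 3 parameters, maximised log-likelihood of -10.
score = aic(3, -10.0)
```

Lower AIC is preferred, so a model with more parameters is favoured only if its gain in log-likelihood exceeds the number of parameters it adds.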

Figure 2. Comparisons between empirical PDFs (shown as histograms), CDFs and survival functions (CCDFs) and their counterparts reconstructed with our ensemble approach from the Hamiltonians in Eq. (3), shown in yellow (\(H_1\)) and red (\(H_2\)), respectively. (a) Results on the PDF obtained by calibrating the models on 40 data points. (b) Results on the PDF obtained by calibrating the models on 4000 data points. (c) Results on the CDF (and associated survival function) with models calibrated on 40 data points. (d) Results on the CDF (and associated survival function) with models calibrated on 4000 data points. In all plots the dashed black lines marked as “True” correspond to the analytical PDF, CDF and survival function (depending on the panel) of the synthetic data generating process (given by a mixture of a Gaussian and a Student-t, see main text). Vertical dashed lines correspond to the 0.25, 0.5, and 0.75 quantiles employed to calibrate the models. The results from the ensemble have been obtained by pooling together \(10^6\) time series independently generated from the ensemble.

Multiple time series

In this Section we proceed to present the application of the approach introduced above to the multivariate case.

Let us consider an \(N \times T\) empirical data matrix \({\overline{W}}\) whose rows have been rescaled to have zero mean, so that \({\overline{W}}_{it} > 0\) (\({\overline{W}}_{it} < 0\)) will indicate that the time t value of the ith variable is higher (lower) than its empirical mean. Also, without loss of generality, let us assume that \({\overline{W}}_{it} \in {\mathbb {R}}_{\ne 0}\), and that \({\overline{W}}_{it} = 0\) indicates missing data. For later convenience, let us define \(A^{\pm } = \Theta (\pm W)\) and \(w^{\pm } = \pm W \Theta (\pm W)\) (we shall denote the corresponding quantities measured on the empirical set as \({\overline{A}}^{\pm }\) and \({\overline{w}}^{\pm }\)), and let us constrain the ensemble to preserve the values of the following observables:

The number of positive (above-average) and negative (below-average) values \({\overline{N}}_i^\pm = \sum _t {\overline{A}}^{\pm }_{it}\), and the number of missing values \({\overline{N}}_i^0 = T - {\overline{N}}_i^+ - {\overline{N}}_i^-\) recorded for each time series (\(i = 1, \ldots , N\)).

The cumulative positive and negative values recorded for each time series: \({\overline{S}}_i^\pm = \sum _t {\overline{w}}_{it}^\pm\) (\(i = 1, \ldots , N\)).

The number of positive, negative, and missing values recorded at each sampling time: \({\overline{M}}_t^\pm = \sum _i {\overline{A}}^\pm _{it}\), \({\overline{M}}_t^0 = N - {\overline{M}}_t^+ - {\overline{M}}_t^-\) (\(t = 1, \ldots , T\)).

The cumulative positive and negative values recorded at each sampling time: \({\overline{R}}_t^\pm = \sum _i {\overline{w}}^\pm _{it}\) (\(t = 1, \ldots , T\)).

Note that the second constraint in the above list indirectly constrains the mean of each time series. As mentioned in the General Framework section, we selected the above constraints inspired by potential financial applications (and indeed we will assess the ensemble’s ability to capture time series behaviour on financial data). In such a context, the above four constraints respectively correspond to: the number of positive and negative returns of a given financial stock, the total positive and negative return of a stock, the number of stocks with a positive or negative return on a given trading day, and the total positive and negative return across all stocks on a given trading day. Such constraints amount to some of the most fundamental “observables” associated with financial returns. As we will detail in the following, forcing the ensemble to preserve them on average also amounts to effectively preserving other quantities that are of paramount importance in financial analysis, such as the skewness and kurtosis of return distributions, and some of the correlation properties of a set of financial stocks (which are central to financial portfolio analysis and selection).
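The four families of observables can be read directly off the data matrix. The following sketch computes them for a small matrix stored as a list of rows; the function name, the dictionary keys and the toy values are assumptions made for illustration, and the toy rows are not rescaled to zero mean.

```python
def series_and_time_constraints(W):
    """W: list of N rows (variables), each a list of T values; 0 marks missing data."""
    n_vars, n_times = len(W), len(W[0])
    cols = list(zip(*W))  # column t collects all variables at sampling time t

    def count_pos(v):
        return sum(1 for x in v if x > 0)

    def count_neg(v):
        return sum(1 for x in v if x < 0)

    def total_pos(v):
        return sum(x for x in v if x > 0)

    def total_neg(v):
        return sum(-x for x in v if x < 0)  # w^- = -W * Theta(-W) is non-negative

    return {
        "N_pos": [count_pos(r) for r in W],
        "N_neg": [count_neg(r) for r in W],
        "N_zero": [n_times - count_pos(r) - count_neg(r) for r in W],
        "S_pos": [total_pos(r) for r in W],
        "S_neg": [total_neg(r) for r in W],
        "M_pos": [count_pos(c) for c in cols],
        "M_neg": [count_neg(c) for c in cols],
        "M_zero": [n_vars - count_pos(c) - count_neg(c) for c in cols],
        "R_pos": [total_pos(c) for c in cols],
        "R_neg": [total_neg(c) for c in cols],
    }

# Toy matrix with N = 2 variables and T = 3 sampling times.
W_toy = [[0.5, -1.0, 0.0],
         [2.0, 1.5, -0.5]]
obs = series_and_time_constraints(W_toy)
```

In the financial reading given above, the row-wise quantities describe each stock and the column-wise quantities describe each trading day.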

The above list amounts to \(8(N + T)\) constraints, and the Hamiltonian H depends on the very same number of parameters:

where we have introduced the Lagrange multipliers associated to all constraints. This choice for the Hamiltonian naturally generalizes the framework introduced in30.

Let us remark that none of the above constraints explicitly accounts for either cross-correlations between variables or for correlations in time. Accounting for these would amount to constraining products of the type \(\sum _{t=1}^T w_{it} w_{jt}\) (in the case of cross-correlations) and \(\sum _{i=1}^N w_{it} w_{it^\prime }\) (in the case of temporal correlations), which introduce a direct coupling between the entries of W, resulting in a considerable loss in terms of the approach’s analytical tractability. However, as we shall see in a moment, the combination of the above constraints is enough to indirectly capture some of the correlation properties in the data of interest.

In order to calculate the partition function \(Z = \sum _{W} e^{- H(W)}\), we first need to properly specify the sum over the phase space. Given the matrix representation we have chosen for the system, and the fact that \(w_{it}^\pm = w_{it} A^\pm _{it}\), this reads:

where the sum specifies whether the entry \(A_{it}\) stores a positive, negative or missing value, respectively. This signifies that positive and negative events, i.e., values above and below the empirical mean of each variable, cannot coexist in an entry \(W_{i t}\), which, once occupied, cannot hold any other event (this holds in general for any discretization of the distribution of the entries of W). In this respect, we can anticipate that negative and positive events will effectively be treated as different fermionic species populating the energy levels of a physical system. Following this line of reasoning, the role of \(w_{i t}^\pm\) is that of generalised coordinates for each of the two fermionic species. In principle, the integrals in Eq. (8) could have as upper limits some quantities \(U^\pm _{it}\) to incorporate any possible prior knowledge on the bounds of the variables of interest.

The above expression leads to the following partition function (explicitly derived in the Supplementary Information):

where the quantities \(\mu _{it}^{1,2}\), \(\epsilon _{it}\), and \(T_{it}\) are functions of the Lagrange multipliers (specified in the Supplementary Information).

Some considerations about Eq. (9) are now in order. First of all, the partition function factorises into the product of independent factors \(Z_{it}\), and therefore into a collection of \(N \times T\) statistically independent sub-systems. However, it is crucial to notice that their parameters (i.e., the Lagrange multipliers) are coupled through the system of equations specifying the constraints (\(\langle {{\fancyscript {O}}}_\ell (W) \rangle = - \partial \ln Z / \partial \beta _\ell , \forall \, \ell\)). As we shall demonstrate later, this ensures that part of the original system’s correlation structure is retained within the ensemble. Moreover, with the above definitions, the aforementioned physical analogy becomes clear: the system described by Eq. (9) can be interpreted as a system of \(N \times T\) orbitals with energies \(\epsilon _{it}\) and local temperatures \(T_{it}\) that can be populated by fermions belonging to two different species characterised by local chemical potentials \(\mu _{it}^1\) and \(\mu _{it}^2\), respectively.

From the partition function in Eq. (9) we can finally calculate the probability distribution P(W):

where \(P_{it}^\pm\) and \(Q_{it}^\pm (w^\pm _{it})\) are functions of the Lagrange multipliers (specified in the Supplementary Information) and correspond, respectively, to the probability of drawing a positive (negative) value for the ith variable at time t and to its probability distribution.

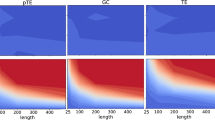

Figure 3. Comparisons between empirical statistical properties and ensemble averages. In these plots we demonstrate the model’s ability to partially reproduce non-trivial statistical properties of the original set of time series that are not explicitly encoded as ensemble constraints. (a) Empirical vs ensemble average values of the variances of the returns calculated for each stock (red dots) and each day (blue dots). (b) Same plot for the skewness of the returns. (c) Comparison between the ensemble and empirical cumulative distributions (and associated survival functions) for the returns of two randomly selected stocks (Microsoft and Pepsi Company). Dots correspond to the cumulative distribution and survival functions obtained from the empirical data. Dashed lines correspond to the equivalent functions obtained by pooling together \(10^6\) time series independently generated from the ensemble. Different colours refer to different stocks as reported in the legend. Remarkably, a Kolmogorov–Smirnov test (0.01 significance) shows that 92% of the empirical return distributions of the stocks are compatible with their ensemble counterparts. (d) Same plot for the returns of all stocks on two randomly chosen days. In this case, 82% of the empirical distributions of daily returns are compatible with their ensemble counterparts (K–S test at 0.01 significance).

Figure 4. Applications of the ensemble theory we propose to a system of stocks. (a) Anomaly detection performed on each single trading day of a randomly selected stock (Google). A return measured on a specific day for a specific stock is marked as anomalous if it falls outside the associated 95% confidence interval for that specific return (accounting for multiple hypothesis correction via False Coverage Rate31). (b) Comparison between the empirical spectrum of the estimated correlation matrix (black dashed line), its ensemble counterpart (orange line) and the one prescribed by the Marchenko–Pastur law (blue line). The inset shows the empirical largest eigenvalue (dashed line) against its ensemble distribution.

As an example application of the ensemble defined above, let us consider the daily returns of the \(N=100\) most capitalized NYSE stocks over \(T=560\) trading days (spanning October 2016–November 2018). In this example, the aforementioned constraints force the ensemble to preserve, on average, the number of positive and negative returns and the overall positive and negative return for each time series and for each trading day, leading to \(6 (N \times T)\) constraints. When these constraints are enforced, an explicit expression for the marginal distributions can be obtained (see the Supplementary Information):

where \(\lambda _{i t}^\pm\) are also functions of the Lagrange multipliers (specified in the Supplementary Information). The above distribution allows one both to sample the ensemble efficiently by numerical means and to obtain analytical results for several observables. Remarkably, it has been shown32 that sampling from a mixture-like density such as the one in Eq. (11) can result in heavy-tailed distributions, which is of crucial importance when dealing with financial data.
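Numerical sampling from a mixture-like marginal of this kind can be sketched as follows. The functional form used here is an assumption made purely for illustration: each entry is positive with probability `p_plus`, negative with probability `p_minus`, and missing otherwise, with exponentially distributed magnitudes of rates `lam_plus` and `lam_minus`. The parameter values are hypothetical and are not calibrated on any data.

```python
import random

def sample_entry(p_plus, p_minus, lam_plus, lam_minus, rng):
    """Draw one entry: sign chosen first, then magnitude (assumed exponential)."""
    u = rng.random()
    if u < p_plus:
        return rng.expovariate(lam_plus)    # positive branch
    if u < p_plus + p_minus:
        return -rng.expovariate(lam_minus)  # negative branch
    return 0.0                              # missing value

# Hypothetical parameters for a single entry (i, t).
rng = random.Random(0)
draws = [sample_entry(0.55, 0.45, 50.0, 40.0, rng) for _ in range(100_000)]

n_pos = sum(1 for d in draws if d > 0)
frac_pos = n_pos / len(draws)
mean_pos = sum(d for d in draws if d > 0) / n_pos
```

Under this assumed form, pooling draws over many entries with heterogeneous rates produces a scale mixture, which is one mechanism by which tails heavier than those of any single component can arise.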

Figure 3 and Tables 1 and 2 illustrate how the above first-moment constraints translate into explanatory power of higher-order statistical properties. Indeed, in the large majority of cases, the empirical return distributions of individual stocks and trading days and their higher-order moments (variance, skewness, and kurtosis) are statistically compatible with the corresponding ensemble distributions, i.e., with the distributions of such quantities computed over large numbers (\(10^6\) in all cases shown) of time series independently generated from the ensemble. Notably, this is the case without constraints explicitly aimed at enforcing such a level of agreement. This, in turn, further confirms that the ensemble can indeed be exploited to perform reliable hypothesis testing by sampling random scenarios that are nevertheless closely based on the empirically available data.
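The compatibility check between an empirical distribution and its ensemble counterpart can be sketched with a two-sample Kolmogorov–Smirnov test. The version below is a minimal standard-library illustration, assuming the usual large-sample critical value \(c(\alpha ) \sqrt{(n+m)/(nm)}\) with \(c(\alpha ) = \sqrt{-\ln (\alpha /2)/2}\); it is not necessarily the exact procedure used for the figures, and the sample data are synthetic.

```python
import math
import random
from bisect import bisect_right

def ks_statistic(a, b):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    sa, sb = sorted(a), sorted(b)
    d = 0.0
    for x in sa + sb:  # the supremum is attained at a sample point
        fa = bisect_right(sa, x) / len(sa)
        fb = bisect_right(sb, x) / len(sb)
        d = max(d, abs(fa - fb))
    return d

def ks_compatible(a, b, alpha=0.01):
    """True if the null of a common distribution is not rejected at level alpha."""
    n, m = len(a), len(b)
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2.0))
    return ks_statistic(a, b) <= c_alpha * math.sqrt((n + m) / (n * m))

# Synthetic stand-ins for an empirical sample and a clearly shifted one.
rng = random.Random(0)
empirical = [rng.gauss(0.0, 1.0) for _ in range(500)]
shifted = [rng.gauss(3.0, 1.0) for _ in range(500)]
rejects_shift = not ks_compatible(empirical, shifted)
```

In the setting of the text, `a` would hold the returns of one stock (or one day) and `b` the pooled values generated from the ensemble for the same stock (or day).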

In that spirit, in Fig. 4 we show an example of ex-post anomaly detection, where the original time series of a stock is plotted against the 95% confidence intervals obtained from the ensemble for each data point \(W_{i t}\). As can be seen, the results are non-trivial, since the returns flagged as anomalous are not necessarily the largest ones in absolute value. This is because the constraints imposed on the ensemble reflect the collective nature of financial market movements, thus resulting in the statistical validation of events that are anomalous with respect to the overall heterogeneity present in the market.
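The flagging rule can be sketched as a comparison of each observed value against an interval estimated from ensemble draws for the same entry. The code below is a simplified illustration: the function names are hypothetical, the ensemble draws are replaced by synthetic Gaussian samples with entry-specific scales, and the False Coverage Rate correction mentioned in the caption of Fig. 4 is deliberately omitted.

```python
import random

def percentile(sorted_vals, q):
    """Linear-interpolation percentile of pre-sorted values, with q in [0, 1]."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])

def flag_anomalies(observed, ensemble_draws, level=0.95):
    """observed[t]: empirical value; ensemble_draws[t]: samples for the same entry."""
    tail = (1.0 - level) / 2.0
    flags = []
    for x, draws in zip(observed, ensemble_draws):
        s = sorted(draws)
        low, high = percentile(s, tail), percentile(s, 1.0 - tail)
        flags.append(x < low or x > high)  # outside the central interval
    return flags

# Synthetic stand-in for ensemble draws: two entries with different scales.
rng = random.Random(1)
scales = [1.0, 0.1]
ensemble_draws = [[rng.gauss(0.0, s) for _ in range(5000)] for s in scales]
observed = [1.0, 0.5]
flags = flag_anomalies(observed, ensemble_draws)
```

With these toy scales the smaller observation is the one flagged, because its interval is much narrower. This mirrors the remark that anomalous returns need not be the largest in absolute value.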

Following the above line of reasoning, in Fig. 5 we show a comparison between the eigenvalue spectrum of the empirical correlation matrix of the data and the average eigenvalue spectrum of the ensemble. As is well known, the correlation matrix spectrum of most complex interacting systems normally features a large bulk of small eigenvalues, which is often approximated by the Marchenko–Pastur (MP) distribution33 of Random Matrix Theory (i.e., the average eigenvalue spectrum of the correlation matrix of a large system of uncorrelated variables with finite second moments)15,34,35, plus a few large and isolated eigenvalues that contain information about the relevant correlation structure of the system (e.g., they can be associated with clusters of strongly correlated variables36). As can be seen in the figure, the ensemble’s average eigenvalue spectrum qualitatively captures the same range as the empirical spectral bulk (for reference, we also plot the MP distribution), and the ensemble distribution for the largest eigenvalue is very close to the one empirically observed, demonstrating that the main source of correlation in the market is well captured by the ensemble. Conversely, the average distance between the empirically observed largest eigenvalue and its ensemble distribution can be interpreted as the portion of the market’s collective movement which cannot be explained by the constraints imposed on the ensemble.
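For readers who wish to reproduce the bulk-versus-outlier picture, the sketch below computes a correlation spectrum and the standard MP support edges \((1 \pm \sqrt{N/T})^2\) for unit-variance uncorrelated data. The synthetic one-factor returns are a placeholder assumption, not the NYSE data, and the function names are hypothetical.

```python
import numpy as np

def correlation_spectrum(returns):
    """Eigenvalues (ascending) of the N x N Pearson correlation matrix of an (N, T) array."""
    return np.linalg.eigvalsh(np.corrcoef(returns))

def marchenko_pastur_edges(n_series, n_obs):
    """Support edges of the MP law for a correlation matrix with ratio N / T."""
    ratio = n_series / n_obs
    return (1.0 - np.sqrt(ratio)) ** 2, (1.0 + np.sqrt(ratio)) ** 2

rng = np.random.default_rng(3)
N, T = 100, 560
market = rng.normal(size=T)                        # common factor ("market mode")
returns = 0.5 * market + rng.normal(size=(N, T))   # placeholder returns

eigenvalues = correlation_spectrum(returns)
lam_minus, lam_plus = marchenko_pastur_edges(N, T)
print(f"MP edges: [{lam_minus:.3f}, {lam_plus:.3f}]; largest eigenvalue: {eigenvalues[-1]:.2f}")
```

Averaging `correlation_spectrum` over many ensemble samples would give the ensemble counterpart of the empirical spectrum discussed above; eigenvalues above the upper edge signal correlation structure that pure noise cannot produce.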

In the Supplementary Information, we also apply the above ensemble approach to a dataset of weekly and hourly temperature time series recorded in North-American cities. We do this to showcase the approach’s ability to capture inherent time periodicities in empirical data—which would be very hard to capture directly—by means of the constraints already considered in the examples above.

Applications to financial risk management

In this section we push the examples of the previous section (where we demonstrated the ensemble’s ability to partially capture the collective nature of fluctuations in multivariate systems) towards real-world applications. Namely, we will illustrate a case study devoted to financial portfolio selection.

Financial portfolio selection is an optimization problem which entails allocating a fixed amount of capital across N financial stocks. Typically, the goal of an investor is to allocate their capital in order to maximise the portfolio’s expected return while minimising the portfolio’s expected risk. When the latter is quantified in terms of portfolio variance, the solution to the optimization problem amounts to computing portfolio weights \(\pi _i\) (\(i = 1, \ldots , N\)), where \(\pi _i\) is the amount of capital to be invested in stock i (note that \(\pi _i\) can be negative when short selling is allowed). As is well known, these are functions of the portfolio’s correlation matrix37, which reflects the intuitive notion that a well balanced portfolio should be well diversified, avoiding similar allocations of capital in stocks that are strongly correlated. The mathematical details of the problem and explicit expressions for the portfolio weights are provided in the Supplementary Information.
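As a point of reference, the simplest textbook instance of this problem is the global minimum-variance portfolio. The expression below is a sketch under assumptions introduced here: capital is normalized to one, no target return is imposed, and \(\Sigma\), \(\sigma_i\) and \(C_{ij}\) denote the covariance matrix, the volatilities and the correlation matrix, respectively. The formulation in the Supplementary Information may differ.

```latex
\min_{\boldsymbol{\pi}} \; \boldsymbol{\pi}^{\top} \Sigma \, \boldsymbol{\pi}
\quad \text{subject to} \quad \sum_{i=1}^{N} \pi_i = 1
\qquad \Longrightarrow \qquad
\boldsymbol{\pi}^{*} = \frac{\Sigma^{-1} \mathbf{1}}{\mathbf{1}^{\top} \Sigma^{-1} \mathbf{1}},
\qquad \Sigma_{ij} = \sigma_i \, \sigma_j \, C_{ij}
```

The dependence on \(\Sigma^{-1}\) makes explicit why noisy or nearly singular correlation estimates translate directly into unstable weights.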

The fundamental challenge posed by portfolio optimization is that portfolio weights have to be first computed “in-sample” and then retained “out-of-sample”. In practice, this means that portfolio weights are always computed based on the correlations between stocks observed over a certain period of time, after which one observes the portfolio’s realized risk based on such weights. This poses a problem, as financial correlations are known to be noisy34,35 and heavily non-stationary6, so there is no guarantee that portfolio weights that are optimal in-sample will perform well in terms of out-of-sample risk.

A number of solutions have been put forward in the literature to mitigate the above problem. Most of these amount to methods to “clean” portfolio correlation matrices38, i.e., procedures aimed at subtracting noise and unearthing the “true” correlations between stocks (at least over time windows where they can be reasonably assumed to be constant). Here, we propose to exploit our ensemble approach in order to apply the same philosophy directly on financial returns rather than on their correlation structure.

Using the same notation as in the previous section, let us assume that \({\overline{W}}_{it}\) represents the time-t return of stock i (\(i = 1, \ldots , N\); \(t = 1, \ldots , T\)). Let us then define detrended returns \({\tilde{W}}_{it} = {\overline{W}}_{it} - \langle W_{it} \rangle\), where \(\langle W_{it} \rangle\) denotes the ensemble average of the return computed from Eq. (11). The rationale for this is to mitigate the impact of returns that may be anomalously large (in absolute value), i.e., returns whose values are markedly distant from their typical values observed in the ensemble (as in the example shown in Fig. 4).

In Table 3 we show the results obtained by performing portfolio selection on the aforementioned detrended returns, and compare them to those obtained without detrending. Namely, we form four portfolios (two of size \(N=20\) and two of size \(N=50\)) with the returns of randomly selected S&P500 stocks in the period from September 2014 to October 2018, and compute their weights based on the correlations computed over non-overlapping periods of length \(T = N/q\), where \(q \in (0,1)\) is the portfolio’s “rectangularity ratio”, which provides a reasonable proxy for the noisiness of the portfolio’s correlation matrix (which indeed becomes singular for \(q \rightarrow 1\)). The numbers in the table represent the average and \(90\%\) confidence level intervals of the out-of-sample portfolio risk, quantified in terms of variance and computed over a number of non-overlapping time windows of 30 days in the aforementioned period (see caption for more details). The first two rows correspond to the raw returns and the two bottom rows to the detrended returns; in both cases out-of-sample risk is still computed on the raw returns, i.e., detrending is only performed in-sample to compute the weights. As can be seen, detrending by locally removing the ensemble average from each return reduces out-of-sample risk dramatically, despite the examples considered here being plagued by a number of well-known potential downsides, such as small portfolio size, small time windows, and exposure to outliers (the returns used here are well fitted by power law distributions, using the method of Ref. 39, whose median tail exponent across all stocks is \(\alpha = 3.9\)). In the Supplementary Information we report the equivalent of Table 3 for the portfolios’ Sharpe ratios (i.e., the ratio between portfolio returns and portfolio variance over a time window), with qualitatively very similar results.
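The in-sample/out-of-sample protocol can be sketched as follows. This is an illustration under stated assumptions rather than the procedure used for Table 3: weights are taken to be global minimum-variance weights summing to one (the exact expressions are in the Supplementary Information), the function names are hypothetical, the returns are synthetic, and a zero array stands in for the ensemble averages \(\langle W_{it} \rangle\), which would otherwise come from the calibrated ensemble.

```python
import numpy as np

def min_variance_weights(cov):
    """Weights summing to one that minimize portfolio variance for covariance `cov`."""
    x = np.linalg.solve(cov, np.ones(cov.shape[0]))
    return x / x.sum()

def out_of_sample_variance(in_sample, out_sample, ensemble_mean=None):
    """Fit weights in-sample (optionally on detrended returns), then measure
    the variance of the portfolio on raw out-of-sample returns.

    in_sample, out_sample: raw returns of shape (N, T_in) and (N, T_out).
    ensemble_mean: array of shape (N, T_in) with ensemble averages, or None.
    """
    fitted = in_sample if ensemble_mean is None else in_sample - ensemble_mean
    weights = min_variance_weights(np.cov(fitted))
    return np.var(weights @ out_sample, ddof=1)

rng = np.random.default_rng(4)
N, q = 20, 0.5
T_in, T_out = int(round(N / q)), 30          # T = N / q in-sample days, 30-day test window
in_sample = rng.normal(scale=0.01, size=(N, T_in))
out_sample = rng.normal(scale=0.01, size=(N, T_out))
placeholder_mean = np.zeros_like(in_sample)  # stands in for the ensemble averages

risk_raw = out_of_sample_variance(in_sample, out_sample)
risk_detrended = out_of_sample_variance(in_sample, out_sample, placeholder_mean)
print(f"raw: {risk_raw:.3e}, detrended (placeholder mean): {risk_detrended:.3e}")
```

Note that detrending only enters through `fitted`; the out-of-sample variance is always evaluated on raw returns, as stated in the text.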

In the Supplementary Information, we provide details of an additional application of our ensemble approach to financial risk management, where we compute and test the out-of-sample performance of estimates of Value-at-Risk (VaR), the most widely used financial risk measure40, based on our ensemble approach. This application is specifically aimed at demonstrating that the approach does not suffer from overfitting issues, despite the large number of Lagrange multipliers necessary to calibrate the more constrained versions of our ensembles. Quite to the contrary, and in line with the literature on configuration models for networked systems19,20 (which are a fairly close relative of the approach proposed here), we find the out-of-sample performance of our method to improve even when increasing the number of Lagrange multipliers quite substantially. The reason for this lies in the fact that in classic cases of overfitting one completely suppresses any in-sample variance of the model being used (e.g., when fitting n points with a polynomial of order \(n-1\)). This is not the case, instead, with the model at hand. Indeed, being based on maximum entropy, our approach still allows for substantial in-sample variance even when building highly constrained ensembles. We illustrate this in the aforementioned example in the Supplementary Information by obtaining progressively better out-of-sample VaR performance when increasing the number of constraints (and therefore of Lagrange multipliers) the ensemble is subjected to.
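The polynomial example of overfitting mentioned above is easy to verify numerically. The snippet below is a small illustration with arbitrary choices (six points of pure noise): a polynomial of order \(n-1\) reproduces all n points exactly, so that no in-sample variance is left.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 6
x = np.arange(n, dtype=float)
y = rng.normal(size=n)                  # pure noise: there is no structure to learn

coeffs = np.polyfit(x, y, deg=n - 1)    # polynomial of order n - 1 through n points
in_sample_residuals = y - np.polyval(coeffs, x)
max_abs_residual = np.max(np.abs(in_sample_residuals))
print(f"largest in-sample residual: {max_abs_residual:.2e}")
```

By contrast, a maximum entropy ensemble constrains averages only, so individual samples still fluctuate around the constrained values.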

Relationship with Maximum Caliber principle

Before concluding, let us point out an interesting connection between our approach and Jaynes’ Maximum Caliber principle23. It has recently been shown41 that the time-dependent probability distribution that maximizes the caliber of a two-state system evolving in discrete time can be calculated by mapping the time domain of the system as a spatial dimension of an Ising-like model. This is exactly equivalent to our mapping of a time-dependent system onto a data matrix, where the system’s time dimension is mapped onto a discrete spatial dimension of the lattice representing the matrix.

From this perspective, our ensemble approach represents a novel way to calculate and maximize the caliber of systems sampled in discrete time with a continuous set of states. This also allows one to interpret some recently published results on correlation matrices in a different light. Indeed, in Ref. 21 the authors obtain a probability distribution on the data matrix of sampled multivariate systems starting from a maximum entropy ensemble on their corresponding correlation matrices. Following the steps outlined in our paper, the same results could be achieved via the Maximum Caliber principle by first mapping the time dimension of the system onto a spatial dimension of a corresponding lattice, and by then imposing the proper constraints on it.

Discussion

In this paper we have put forward a novel formalism, grounded in the ensemble theory of statistical mechanics, to perform hypothesis testing on time series data. Whereas in physics and in the natural sciences hypothesis testing is carried out through repeated controlled experiments, this is rarely the case in complex interacting systems, where the lack of statistical stability and controllability often hampers the reproducibility of experimental results. This, in turn, prevents one from assessing whether the observations made are consistent with a given hypothesis on the dynamics of the system under study.

The framework introduced here tackles the above issues by means of a data-driven and assumption-free paradigm, which entails the generation of ensembles of randomized counterparts of a given data sample. The only guiding principle underpinning such a paradigm is that of entropy maximization, which allows one to interpret the ensemble’s partition function in terms of a precise physical analogy. Indeed, as we have shown, in our framework events in a data sample correspond to fermionic particles in a physical system with multiple energy levels. In this respect, our approach markedly differs from other known methods to generate synthetic data, such as the bootstrap. Notably, even though the Hamiltonians used throughout the paper correspond to non-interacting systems, and therefore the correlations in the original data are not captured in terms of interactions between particles (as is instead the case in Ising-like models), the ensemble introduced here is still capable of partially capturing properties typical of interacting systems through the ‘environment’ the particles are embedded in, i.e., a system of coupled local temperatures and chemical potentials.

All in all, our framework is rather flexible, and can be easily adapted to the data at hand by adding constraints to, or removing them from, the ensemble’s Hamiltonian. From this perspective, the number and type of constraints can be used to “interpolate” between very different applications. In fact, loosely constrained ensembles can serve as highly randomized counterparts of an empirical dataset of interest, and therefore can be used for statistical validation purposes, i.e., to determine which statistical properties of the empirical data can be explained away with a few basic constraints. The opposite situation is instead represented by a heavily constrained ensemble, designed to capture most of the statistical properties of the empirical data (the examples detailed in previous sections go in this direction). From a practical standpoint, this application of our approach can be particularly useful in situations where sensitive data cannot be shared between parties (e.g., due to privacy restrictions), and where sharing synthetic data whose statistical properties closely match those of the empirical data can be a very valuable alternative.

For example, the constraints in the applications showcased here (i.e., on the sums of above and below average values) result in two fermionic species of particles. More stringent constraints (e.g., on the data belonging to certain percentiles of the empirical distribution) would result in other species being added to the ensemble.

As we have shown, our framework is capable of capturing several non-trivial statistical properties of empirical data that are not necessarily associated with the constraints imposed on the ensemble. As such, it can provide valuable insight into a variety of complex systems, both by allowing one to test theoretical models for their structure and by uncovering new information in the statistical properties that are not fully captured by the ensemble. We have illustrated some of these aspects with a financial case study, where we demonstrated that a detrending of stock returns based on our ensemble approach yields a dramatic reduction in out-of-sample portfolio risk.

Once more, let us stress that the main limitation of our approach is that of not accounting explicitly for cross-correlations or temporal correlations. As mentioned above, these could in principle be tackled by following the same analytical framework presented here, but would result in a considerably less tractable model. We aim to explicitly deal with the case of temporal correlations in future work.

Data availability

The financial data employed in this paper are freely available to download from Yahoo Finance.

References

Vaze, J. et al. Climate non-stationarity-validity of calibrated rainfall-runoff models for use in climate change studies. J. Hydrol. 394, 447–457 (2010).

Drótos, G., Bódai, T. & Tél, T. Quantifying nonergodicity in nonautonomous dissipative dynamical systems: an application to climate change. Phys. Rev. E 94, 022214. https://doi.org/10.1103/PhysRevE.94.022214 (2016).

von Bünau, P., Meinecke, F. C., Király, F. C. & Müller, K.-R. Finding stationary subspaces in multivariate time series. Phys. Rev. Lett. 103, 214101. https://doi.org/10.1103/PhysRevLett.103.214101 (2009).

Tsallis, C., Anteneodo, C., Borland, L. & Osorio, R. Nonextensive statistical mechanics and economics. Physica A 324, 89–100 (2003).

Cont, R. Empirical properties of asset returns: stylized facts and statistical issues. Quant. Finance 1, 223–236 (2001).

Livan, G., Inoue, J.-I. & Scalas, E. On the non-stationarity of financial time series: impact on optimal portfolio selection. J. Stat. Mech. Theory Exp. 2012, P07025 (2012).

Davison, A. C. & Hinkley, D. V. Bootstrap Methods and Their Application Vol. 1 (Cambridge University Press, Cambridge, 1997).

Kuonen, D. An introduction to bootstrap methods and their application. WBL Angew. Stat. ETHZ 2017(19), 1–143 (2018).

Haukoos, J. S. & Lewis, R. J. Advanced statistics: bootstrapping confidence intervals for statistics with “difficult” distributions. Acad. Emerg. Med. 12, 360–365 (2005).

Lütkepohl, H. New Introduction to Multiple Time Series Analysis (Springer, New York, 2005).

Qin, D. Rise of VAR modelling approach. J. Econ. Surv. 25, 156–174 (2011).

Whittle, P. Tests of fit in time series. Biometrika 39, 309–318 (1952).

Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econ. 31, 307–327 (1986).

Wishart, J. Proofs of the distribution law of the second order moment statistics. Biometrika 35, 55–57 (1948).

Livan, G., Novaes, M. & Vivo, P. Introduction to Random Matrices: Theory and Practice Vol. 26 (Springer, New York, 2018).

Marčenko, V. A. & Pastur, L. A. Distribution of eigenvalues for some sets of random matrices. Math. USSR-Sb. 1, 457 (1967).

Schmitt, T. A., Chetalova, D., Schäfer, R. & Guhr, T. Non-stationarity in financial time series: Generic features and tail behavior. Europhys. Lett. EPL 103, 58003 (2013).

Park, J. & Newman, M. E. Statistical mechanics of networks. Phys. Rev. E 70, 066117 (2004).

Gabrielli, A., Mastrandrea, R., Caldarelli, G. & Cimini, G. Grand canonical ensemble of weighted networks. Phys. Rev. E 99, 030301. https://doi.org/10.1103/PhysRevE.99.030301 (2019).

Cimini, G. et al. The statistical physics of real-world networks. Nat. Rev. Phys. 1, 58 (2019).

Masuda, N., Kojaku, S. & Sano, Y. Configuration model for correlation matrices preserving the node strength. Phys. Rev. E 98, 012312 (2018).

Squartini, T. & Garlaschelli, D. Analytical maximum-likelihood method to detect patterns in real networks. N. J. Phys. 13, 083001. https://doi.org/10.1088/1367-2630/13/8/083001 (2011).

Jaynes, E. T. The minimum entropy production principle. Annu. Rev. Phys. Chem. 31, 579–601 (1980).

Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 620 (1957).

Jaynes, E. T. Information theory and statistical mechanics. II. Phys. Rev. 108, 171 (1957).

Garlaschelli, D. & Loffredo, M. I. Maximum likelihood: extracting unbiased information from complex networks. Phys. Rev. E 78, 015101 (2008).

Cubero, R. J., Jo, J., Marsili, M., Roudi, Y. & Song, J. Statistical criticality arises in most informative representations. J. Stat. Mech. Theory Exp. 2019, 063402 (2019).

Kass, R. E. & Wasserman, L. A reference Bayesian test for nested hypotheses and its relationship to the Schwarz criterion. J. Am. Stat. Assoc. 90, 928–934 (1995).

Akaike, H. Information theory and an extension of the maximum likelihood principle. In Selected Papers of Hirotugu Akaike (eds Parzen, E. et al.) 199–213 (Springer, New York, 1998).

Almog, A. & Garlaschelli, D. Binary versus non-binary information in real time series: empirical results and maximum-entropy matrix models. N. J. Phys. 16, 093015 (2014).

Benjamini, Y. & Yekutieli, D. False discovery rate-adjusted multiple confidence intervals for selected parameters. J. Am. Stat. Assoc. 100, 71–81 (2005).

Okada, M., Yamanishi, K. & Masuda, N. Long-tailed distributions of inter-event times as mixtures of exponential distributions. R. Soc. Open Sci. 7, 191643 (2020).

Marchenko, V. A. & Pastur, L. A. Distribution of eigenvalues for some sets of random matrices. Mat. Sb. 114, 507–536 (1967).

Plerou, V., Gopikrishnan, P., Rosenow, B., Amaral, L. A. N. & Stanley, H. E. Universal and nonuniversal properties of cross correlations in financial time series. Phys. Rev. Lett. 83, 1471 (1999).

Laloux, L., Cizeau, P., Bouchaud, J.-P. & Potters, M. Noise dressing of financial correlation matrices. Phys. Rev. Lett. 83, 1467 (1999).

Livan, G., Alfarano, S. & Scalas, E. Fine structure of spectral properties for random correlation matrices: an application to financial markets. Phys. Rev. E 84, 016113 (2011).

Merton, R. C. An analytic derivation of the efficient portfolio frontier. J. Financ. Quant. Anal. 7, 1851–1872 (1972).

Bun, J., Bouchaud, J.-P. & Potters, M. Cleaning correlation matrices. Risk Mag. 2015, 1–109 (2016).

Clauset, A., Shalizi, C. R. & Newman, M. E. Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009).

Jorion, P. Value at Risk (McGraw-Hill, New York, 2000).

Marzen, S., Wu, D., Inamdar, M. & Phillips, R. An equivalence between a maximum caliber analysis of two-state kinetics and the Ising model. arXiv preprint arXiv:1008.2726 (2010).

Acknowledgements

G.L. acknowledges support from an EPSRC Early Career Fellowship in Digital Economy (Grant No. EP/N006062/1).

Author information

Contributions

R.M. and G.L. designed research; R.M. performed research and analyzed data; R.M. and G.L. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marcaccioli, R., Livan, G. Maximum entropy approach to multivariate time series randomization. Sci Rep 10, 10656 (2020). https://doi.org/10.1038/s41598-020-67536-y

DOI: https://doi.org/10.1038/s41598-020-67536-y
