Abstract

The cochlear implant (CI) is the most widely used neuroprosthesis, recovering hearing for more than half a million severely-to-profoundly hearing-impaired people. However, CIs still have significant limitations, with users having severely impaired pitch perception. Pitch is critical to speech understanding (particularly in noise), to separating different sounds in complex acoustic environments, and to music enjoyment. In recent decades, researchers have attempted to overcome shortcomings in CIs by improving implant technology and surgical techniques, but with limited success. In the current study, we take a new approach of providing missing pitch information through haptic stimulation on the forearm, using our new mosaicOne_B device. The mosaicOne_B extracts pitch information in real-time and presents it via 12 motors that are arranged in ascending pitch along the forearm, with each motor representing a different pitch. In normal-hearing subjects listening to CI simulated audio, we showed that participants were able to discriminate pitch differences at a similar performance level to that achieved by normal-hearing listeners. Furthermore, the device was shown to be highly robust to background noise. This enhanced pitch discrimination has the potential to significantly improve music perception, speech recognition, and speech prosody perception in CI users.

Introduction

Cochlear implants (CIs) are neuroprostheses that allow hundreds of thousands of severely-to-profoundly hearing-impaired people to hear again. To recover auditory perception, an array of micro-electrodes that deliver electrical pulses to the auditory nerve is surgically implanted into the cochlea. Due to anatomical and physical limitations, modern implants use only 12–24 electrodes to transfer sound information to the brain, although only around 8 electrodes are thought to be effective when used together1,2. In contrast, in a healthy cochlea, sound information is transferred to the auditory nerve by around 3500 hair cells3. Remarkably, despite these limitations, CIs allow the majority of users to identify words in quiet listening environments at an accuracy similar to those with normal hearing4,5. However, CI users are typically very poor at detecting pitch changes, which impairs their ability to identify age, sex, and accent6,7, as well as perception of speech prosody8,9,10,11,12,13. Speech prosody allows a listener to distinguish statements from questions (e.g. “It's good”. from “It's good?”) and nouns from verbs (e.g. “Object” from “Object”). It also allows listeners to distinguish emotion (e.g. anger from sadness) and intention (e.g. whether the phrase “nice jumper” was meant as a genuine compliment or a sarcastic remark). Impaired pitch discrimination also limits music perception14, as pitch conveys crucial melody, harmony, and tonality information. CI users struggle to recognise simple melodies14,15,16,17 and to discriminate different instruments14,18,19, with only around 13% of adult CI users reporting that they enjoy listening to music after implantation20.

Traditionally, researchers and manufacturers have attempted to overcome the limitations of CIs by improving implant technology and surgical techniques. However, in recent decades, improvements in CI outcomes have slowed markedly5,21. In this study, we take a new approach. Rather than attempting to transfer more pitch information through the implant, we augment the electrical CI signal by delivering pitch information through haptic stimulation on the forearm (“electro-haptic stimulation”22). This approach is particularly appealing as this supplementary wearable neuroprosthetic is non-invasive and inexpensive.

The effectiveness of providing sensory information that is usually delivered through one sense using a different sense is well established. Seminal work by Paul Bach-y-Rita in the late 1960s showed that, using visual information presented through tactile stimulation on the back, blind people can recognise faces, judge the speed and direction of an object, and complete complex inspection-assembly tasks23,24. Later, researchers successfully delivered visual information using sound25,26 and basic speech information using haptic stimulation, either on the finger, forearm or wrist27,28. More recently, in addition to substituting haptic input for auditory input, it has been shown that it is possible to augment auditory input with haptic input; three recent studies have shown that CI users’ ability to recognise speech in background noise was enhanced when speech information was presented through haptic stimulation on the wrists22,29 or fingertips30. Two other recent studies have shown that haptic stimulation can improve melody identification in CI users, using a single-channel haptic stimulation device strapped to the wrist31 or fingertip32. In the current study, we evaluated the ability of our new mosaicOne_B device, which delivers pitch information through multi-channel haptic stimulation along the forearm, to provide accurate pitch information.

The mosaicOne_B extracts pitch information from audio in real-time and delivers it through haptic stimulation. The device uses 12 motors, with six along the top and six along the underside of the forearm (see Fig. 1). The motors are activated chromatically, like keys on a piano, with each motor representing a different pitch within a single octave. The mosaicOne_B delivers relative pitch information, meaning that sounds that are exactly an octave apart will produce the same pattern of stimulation. This approach allows for high relative pitch resolution, whilst discarding absolute pitch information (i.e. information on the pitch of a stimulus within the full scale of perceivable pitches). As even the poorest performing CI users are typically able to discriminate sounds that are an octave apart33,34,35,36, by using the CI in combination with the mosaicOne_B, CI users are expected to have access to absolute pitch information.

The first aim of this study was to test the limits of pitch discrimination with the mosaicOne_B. Studies using real musical instruments have estimated average pitch discrimination thresholds across CI users of around 80–90% (i.e. 10–11 semitones)35,36. Other studies using synthetic sounds (tone complexes) have found average pitch discrimination thresholds of around 10–20% (2–3 semitones)33,34. In all of these studies, the variance across subjects was considerable, with some participants only able to discriminate sounds with a pitch difference of slightly less than 100% (one octave) and the best individuals able to discriminate around 3% (0.5 semitones). The performance of the best CI users is similar to pitch discrimination thresholds with musical instruments for normal-hearing listeners35. In the current study, we aimed to achieve an average pitch discrimination threshold of 6% (1 semitone) or better. This would allow CI users to track musical melodies (the smallest musical interval for western melodies is typically 1 semitone) and give access to cues for emotion and intention in speech.
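The percentage and semitone figures quoted above are related by the equal-tempered scale, in which one octave (a doubling of F0) spans 12 semitones. The short sketch below illustrates the conversion; the function names are ours and are introduced only for illustration.

```python
import math


def percent_to_semitones(percent_change: float) -> float:
    """Convert a percentage increase in F0 to an interval in semitones."""
    ratio = 1.0 + percent_change / 100.0
    return 12.0 * math.log2(ratio)


def semitones_to_percent(semitones: float) -> float:
    """Convert an interval in semitones to a percentage increase in F0."""
    return (2.0 ** (semitones / 12.0) - 1.0) * 100.0


if __name__ == "__main__":
    # Thresholds mentioned in the text: best CI users, the study target, one octave.
    for pct in (3.0, 6.0, 100.0):
        print(f"{pct:5.1f}% -> {percent_to_semitones(pct):.2f} semitones")
```

Under this convention a 6% change in F0 is very close to one semitone, and a 100% change is exactly one octave.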

Another way in which the mosaicOne_B could aid CI listening is by making it more robust to background noise. CI user performance degrades quickly when there are competing sounds; for example, CI users struggle to discriminate musical instruments when multiple instruments are playing14,18,19 or to understand speech in noisy environments21,22,30, such as classrooms, busy workplaces, or cafes. A number of studies have shown that, in addition to impairing speech prosody perception, reduced access to information about changes in speech fundamental frequency (F0; an acoustic correlate of pitch) reduces speech recognition in noise37,38. The second aim of this study was to test whether the mosaicOne_B could provide accurate pitch information in the presence of background noise.

Finally, this study aimed to test whether pitch information from different modalities is combined effectively when delivered through audio and haptic stimulation, so that performance with audio and haptic stimulation together is better than with either alone. Alternatively, if one sense gives weak pitch information and the other strong pitch information, the weaker signal may create a distraction that impairs performance. There is a range of anatomical, physiological, and psychophysical evidence to suggest that audio and haptic signals are combined in the brain. Anatomical and physiological studies have revealed extensive connections between auditory and somatosensory neural pathways, from the periphery to the cortex39,40,41,42. Psychophysical studies have demonstrated both that auditory stimuli can affect haptic perception43 and that haptic stimuli can affect auditory perception44,45,46. In one study, it was shown that perception of the dryness of a surface could be modulated by manipulating the accompanying audio43. In another set of studies, tactile stimulation was shown to increase perceived loudness and facilitate detection of faint sounds45,46. It may therefore be expected that pitch discrimination performance will be better when audio and haptic stimulation are provided concurrently than when either is presented alone.

In the twelve normal-hearing listeners tested in the current study, pitch discrimination was measured with CI-simulated audio alone, haptic stimulation alone, or with audio and haptic stimulation together. The stimuli were harmonic tone complexes that were designed to differ only in pitch (see Methods), so that the results could be generalised to both speech and musical sounds. For each of the three conditions, measurements were made with no background noise, and with background noise at signal-to-noise ratios (SNRs) of either −5 dB or −7.5 dB. These background noise levels were selected because an assessment of the mosaicOne_B output showed that pitch estimation errors increased at these SNRs (see Methods). It should be noted that these SNRs are far more challenging than those at which CI users are typically able to perform speech-in-noise recognition tasks21,22,30.

Results

Figure 2 shows pitch discrimination thresholds with audio stimulation only, with haptic stimulation only, and with audio and haptic stimulation together. Results are shown without background noise and with white background noise at either −5 dB or −7.5 dB SNR. Friedman ANOVAs were conducted with stimulation type (audio only, haptic only, audio-haptic) and noise type (clean, −5 dB SNR, and −7.5 dB SNR) as factors. A significant overall effect of stimulation type was found (χ2(2) = 18.17, p < 0.001). A significant overall effect of noise was also found for audio only (χ2(2) = 18.17, p = 0.001) and haptic stimulation only (χ2(2) = 15.45, p = .001). For audio only, the median pitch discrimination threshold increased from a change in F0 of 43.4% without noise (ranging from 8.4% to 106.0% across participants) to 82.2% with noise at −5 dB SNR (ranging from 27.6% to 130%) and to 85.2% with noise at −7.5 dB SNR (ranging from 29.7% to 116.5%). For haptic only, the median pitch discrimination threshold was 1.4% without noise (ranging from 0.8% to 3.5%), 2.0% with noise at −5 dB SNR (ranging from 0.6% to 6.6%), and 5.0% with noise at −7.5 dB SNR (ranging from 1.1% to 10.8%). No effect of noise was found for audio-haptic stimulation (χ2(2) = 2.09, p = 0.35). In the audio-haptic condition, median pitch discrimination thresholds were 1.5% without noise (ranging from 0.8% to 4.1%), 2.5% with noise at −5 dB SNR (ranging from 0.8% to 5.5%), and 2.4% with noise at −7.5 dB SNR (ranging from 0.9% to 15.0%).

Box plot showing fundamental frequency (F0) discrimination thresholds across our 12 participants with CI simulated audio only, haptic stimulation only, and audio and haptic stimulation together. Conditions with no background noise and with background noise at either −5 dB or −7.5 dB signal-to-noise ratio (SNR) are shown. The central line on each box shows the median and the bottom and top edges of the box show the 25th and 75th percentiles. The whiskers extend to the most extreme data points that are not considered outliers. Outliers are shown individually as circular symbols.

Three post-hoc Wilcoxon signed-rank tests (with Holm-Bonferroni correction for multiple comparisons) were conducted to assess the effect of haptic stimulation on pitch discrimination thresholds (see Methods). Pitch discrimination was significantly better with audio-haptic stimulation than with audio alone (T = 78, p = .001, d = 3.76). Median discrimination thresholds improved by 42.0% without noise (ranging from 7.5% to 103.4% across participants), by 80.2% with noise at −5 dB SNR (ranging from 7.5% to 103.6%), and by 80.3% with noise at −7.5 dB SNR (ranging from 5.7% to 95.2%). Pitch discrimination was also significantly better with haptic alone than with audio alone (T = 78, p = .001, d = 3.75). Discrimination improved by 41.9% without noise (ranging from 7.2% to 104.9% across participants), by 79.8% with noise at −5 dB SNR (ranging from 7.0% to 101.0%), and by 80.8% with noise at −7.5 dB SNR (ranging from 7.1% to 91.0%). No difference in pitch discrimination was found between haptic alone and audio-haptic stimulation (T = 35, p = .791, d = −0.05).
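For readers unfamiliar with the Holm-Bonferroni procedure named above, the following minimal sketch shows how a set of raw p values can be adjusted. It is not the analysis code used in the study; the function name and the example p values are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def holm_bonferroni(p_values):
    """Return Holm-adjusted p values in the same order as the input.

    The smallest raw p value is multiplied by m, the next by m - 1, and so
    on; a running maximum keeps the adjusted values monotonic, and each
    value is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


if __name__ == "__main__":
    raw = [0.01, 0.04, 0.03]  # hypothetical raw p values for three comparisons
    for p_raw, p_adj in zip(raw, holm_bonferroni(raw)):
        print(f"raw p = {p_raw:.3f}, Holm-adjusted p = {p_adj:.3f}")
```

With three comparisons, the smallest raw p value is tested against the strictest criterion and the largest against the most lenient, which makes the procedure less conservative than a plain Bonferroni correction while still controlling the family-wise error rate.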

Discussion

In this study, we found that the mosaicOne_B substantially improved pitch discrimination for normal-hearing subjects listening to CI simulated audio. The median pitch discrimination threshold with haptic stimulation was 1.4% (without noise), which corresponds to markedly less than a quartertone, and is comfortably better than our target of 6% (1 semitone). Furthermore, even the worst performer in the current study was substantially better than our targeted average performance across subjects, achieving a pitch discrimination threshold of just 3.5%, comfortably less than a semitone (the minimum pitch change in most western melodies) and similar to pitch discrimination of the best performing CI users33,34. For both haptic alone and audio-haptic conditions, some participants achieved pitch discrimination thresholds as low as just 0.8%. This is similar to the performance of normal-hearing listeners for a similar auditory stimulus (although it should be noted that pitch discrimination thresholds for audio are highly sensitive to stimulus parameters)47. This enhanced pitch discrimination by the mosaicOne_B has the potential to significantly aid music perception in CI users, as well as speech recognition and speech prosody perception.

The excellent pitch discrimination performance found for the mosaicOne_B was more robust to background noise than may have been expected, with some participants achieving pitch discrimination thresholds of just 0.9% even when the noise was 7.5 dB louder than the signal. In fact, no effect of noise on pitch discrimination thresholds was found for audio-haptic stimulation. At −7.5 dB SNR (the lowest used in the current study), even the best CI users are unable to perform pitch48 or speech recognition tasks21,22,30. The absence of an effect of noise was surprising given the greater pitch estimation error by the mosaicOne_B at this low SNR, which led to a wider distribution of motors being activated for a single stimulus (see Methods). It is possible that discrimination was achieved by a comparison of the time-averaged distributions of active motors for each stimulus. A similar process is thought to underlie signal detection in the auditory system49,50. The robustness to noise that was achieved by our real-time signal processing strategy is particularly impressive as pitch extraction algorithms tend to be highly susceptible to background noise and often cannot be applied in real time51. It should be noted that, in the current study, the background noise used was non-harmonic (like environmental sounds such as rain or wind, but unlike competing talkers or background music). Future work is required to explore whether the current approach can also be successfully applied in environments with multiple harmonic sounds.

No difference in performance was found between the audio-haptic and haptic-alone conditions. The absence of a degradation in performance is encouraging, as it indicates that the poor-quality pitch information from auditory stimulation did not distract participants, even after only a small amount of familiarization. It is perhaps not surprising that performance with haptic stimulation was not enhanced by the addition of apparently much poorer pitch information provided through audio stimulation (as indicated by the better performance in the haptic-alone than audio-alone condition). Indeed, it has been observed in several previous studies that the greatest benefit from multisensory integration occurs when senses provide relatively low-quality information when used in isolation52,53,54 (the principle of inverse effectiveness), which was not the case for haptic stimulation. Another reason for the absence of audio-haptic integration may have been the lack of training given. In previous studies, it has been shown that training is critical for audio-haptic integration. This has been shown for haptic enhancement of spatial hearing in CI users55 and of speech recognition in noise both for CI users22 and for normal-hearing listeners listening to CI simulated audio29. It is possible that audio-haptic integration would have been observed in the current study if training had been provided.

It should be noted that the current findings do not demonstrate that the auditory percept of pitch was enhanced, but rather that participants were able to access higher-resolution pitch information through haptic stimulation. Participants in a previous study using haptic stimulation to enhance speech-in-noise performance22 gave subjective reports that, after training, the speech sounded louder or clearer when haptic stimulation was provided. This indicates that haptic stimulation was able to modulate the audio percept. This idea is supported by psychophysical evidence (discussed in the introduction) that haptic stimulation can modulate auditory perception of loudness and the perception of aspirated and non-aspirated syllables44,45,46. Further work is required to establish whether the auditory percept of pitch can be modulated by haptic stimulation.

The average performance of our participants in the audio-only condition was consistent with previous studies of pitch discrimination in CI users33,34,35,36. However, previous studies did not use the precise stimulus used in the current study and average performance ranges markedly across studies. In the current study, there was a wide range of performance (of around one octave) across participants. This is also consistent with previous studies with CI users. It should be noted that, while performance of normal-hearing listeners listening to CI simulated audio matched that of CI users, the way that sounds were perceived may have differed.

There are limitations of the current study that should be noted. One such limitation is that pitch discrimination was only demonstrated for a reference signal with an F0 close to 300 Hz (F0 was roved). To ensure that the current approach could be applied to signals with different F0s, mosaicOne_B outputs were assessed for several F0s (see Methods). The outputs showed good consistency, which indicates that the results of the current study can be generalised to a range of F0s. A second consideration is the age of the participants who took part (all of whom were under 32 years of age). A substantial portion of the CI user community is significantly older than the population tested in the current study. However, while spatial discrimination on the forearm is known to decline with age, the ability to distinguish between two stimulation points on the forearm remains less than the motor spacing for the mosaicOne_B (3 cm), even in older people56. Therefore, the findings of the current study are expected to be translatable to older populations. Finally, only a small amount of training was given in the current study, which may have led to pitch discrimination thresholds being underestimated. Previously, researchers have reported that, for normal-hearing listeners, auditory frequency discrimination performance continues to improve for around two weeks when two hours of training are given each day57. Furthermore, studies of enhancement of speech-in-noise performance with haptic stimulation for CI users have shown the importance of training for maximizing benefit22,29. Future work should assess whether training can lead to further enhancements in pitch discrimination with the mosaicOne_B.

Several steps are required to maximize the potential of the mosaicOne_B to bring real-world benefits to CI users. Firstly, it will be important to verify the findings of the current study in CI users. Future work should also seek to optimize the pitch extraction techniques used to reduce estimation errors in noise. Another important step, already discussed, is to assess the ability of the mosaicOne_B to effectively extract pitch cues in the presence of multiple harmonic sounds. Additionally, future studies should assess the effectiveness of the mosaicOne_B for improving speech perception, both in quiet and in noise, and music perception. Future developments to the mosaicOne_B could also include the exploitation of spatial hearing cues. CI users have poor access to spatial cues and are extremely poor at locating sounds58, which can lead to impaired threat detection and sound source segregation. For example, it is well established that access to spatial hearing cues can enhance detection of signals, such as speech, in noise59,60. A recent study in CI users has shown strong evidence that haptic stimulation can be used to enhance localisation of sounds55 and a similar approach might be implemented on the mosaicOne_B. Finally, a wearable neuroprosthetic like the mosaicOne_B could include additional aids to everyday activities. It could incorporate features such as a wake-up alarm (as CI users typically charge their implants during the night), and could link to smart devices in the Internet of Things, such as telephones, doorbells, baby monitors, ovens, and fire alarms.

The results of the current study demonstrate that the mosaicOne_B can extract and deliver precise pitch information through haptic stimulation. The device has been shown to be remarkably robust to non-harmonic background noise, which is common in real-world environments. The mosaicOne_B has several properties that make it suitable for a real-world application: stimulation was delivered to the forearm (a suitable site for a real-world use), the signal processing was performed in real-time, and the haptic signal was delivered using lightweight, low-powered, compact motors. The findings of the current study suggest that the mosaicOne_B could offer a non-invasive and inexpensive means to improve speech and music perception in CI users.

Methods

Participants

Twelve participants (3 male and 9 female, aged between 22 and 31 years old) were recruited from the staff and students of the University of Southampton, and from acquaintances of the researchers. Participants gave written informed consent and no payment was given for participation. All participants reported no hearing or touch issues, had received no musical training, and did not speak a tonal language. Vibrotactile detection thresholds were measured at the fingertips of the left and right index fingers. Thresholds were measured at 31.5 Hz and 125 Hz, following conditions and criteria specified in ISO 13091-1:200161 (the fingertip was used as there are no published standards for normal wrist or forearm sensitivity). All participants had vibrotactile detection thresholds within the normal range (<0.4 ms−2 RMS at 31.5 Hz, and <0.7 ms−2 RMS at 125 Hz61), indicating no touch perception issues. Participants were also assessed by otoscopy and pure-tone audiometry. Participants had hearing thresholds not exceeding 20 dB hearing level (HL) at any of the standard audiometric frequencies between 0.25 and 8 kHz in either ear.

Stimuli

In testing and task familiarisation (see Procedure), the reference stimulus was a harmonic complex, with an average F0 of 300 Hz (within the range of F0s found for many musical instruments, approximately central to the range of F0s found in human speech62, and the frequency at which pitch cues are reduced for CI users14). The F0 was roved by ±5% on each presentation (with a uniform distribution). The stimulus comprised equal-amplitude harmonics generated up to 24 kHz (the Nyquist frequency). This signal was band-pass filtered between 1 kHz and 4 kHz, with 12th order (72 dB per octave) zero-phase Butterworth filters, to remove non-pitch cues (such as differences in the brightness of the sound63, as discussed in Mehta and Oxenham64). The signal had a duration of 500 ms, with 20 ms quarter-sine and -cosine onset and offset ramps. The target and reference stimuli were separated by 300 ms. The target and reference stimuli were the same, except that the F0 of the target stimuli was adjusted following the adaptive track described in the procedure section. The level of the target and reference was nominally set to 65 dB SPL (RMS), but was roved on each presentation within a ±3 dB range (with a uniform distribution) to reduce potential loudness cues. The masking stimulus was a white noise, selected to equally mask each of the components of the harmonic complex.
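The stimulus construction described above can be approximated with the following sketch, which is not the MATLAB code used in the study. It assumes the 48 kHz sample rate given in the Apparatus section and, as a deliberate simplification, replaces the 12th-order zero-phase Butterworth band-pass filter with an idealised selection of only those harmonics that fall between 1 kHz and 4 kHz. Level roving and calibration are omitted, and all names are hypothetical.

```python
import math
import random

SAMPLE_RATE = 48_000          # Hz (playback rate reported in Apparatus)
DURATION_S = 0.5              # 500 ms stimulus
RAMP_S = 0.02                 # 20 ms onset and offset ramps
BAND_HZ = (1_000.0, 4_000.0)  # pass band; idealised stand-in for the Butterworth filter


def make_complex(f0_nominal=300.0, rove_fraction=0.05, rng=random):
    """Return (roved F0, samples) for an equal-amplitude harmonic complex."""
    # Rove F0 uniformly within +/- rove_fraction of the nominal value.
    f0 = f0_nominal * (1.0 + rng.uniform(-rove_fraction, rove_fraction))
    n_samples = int(round(DURATION_S * SAMPLE_RATE))
    n_ramp = int(round(RAMP_S * SAMPLE_RATE))
    # Keep only harmonics below Nyquist that lie inside the pass band.
    n_max = int((SAMPLE_RATE / 2) / f0)
    harmonics = [k for k in range(1, n_max + 1)
                 if BAND_HZ[0] <= k * f0 <= BAND_HZ[1]]
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE
        value = sum(math.sin(2.0 * math.pi * k * f0 * t) for k in harmonics)
        if n < n_ramp:                    # quarter-sine onset ramp
            gain = math.sin(0.5 * math.pi * n / n_ramp)
        elif n >= n_samples - n_ramp:     # quarter-cosine offset ramp
            gain = math.cos(0.5 * math.pi * (n - (n_samples - n_ramp)) / n_ramp)
        else:
            gain = 1.0
        samples.append(gain * value)
    return f0, samples


if __name__ == "__main__":
    f0, samples = make_complex(rng=random.Random(1))
    print(f"roved F0 = {f0:.1f} Hz, {len(samples)} samples")
```

A real filter would leave attenuated harmonics just outside the pass band, so this sketch should be read only as an illustration of the roving, harmonic summation, and ramping steps.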

The audio stimuli were processed using the SPIRAL vocoder to simulate CI listening. The SPIRAL vocoder is an advanced CI simulator that aims to bridge the gap between traditional tone- and noise-based simulations65. The SPIRAL was set to simulate 22 CI electrodes (with a current decay slope of 16 dB per octave) using 80 carrier tones. The test stimuli were delivered to the participants’ right ear only. In the audio alone and audio-haptic conditions, pink noise at a level of 55 dB SPL was delivered to the left ear to mask any audio cues from the mosaicOne_B. In the haptic-alone condition, the pink noise was delivered to both ears.

In the mosaicOne_B familiarization app (see Procedure), two stimulus types were used. In both of the app’s modules, CI simulation was not applied to the audio. In the pitch slider module, a constant tone was presented, and the frequency was adjusted between D3 and B3 on the chromatic scale based on the slider position. In the interval training module, two tones were presented. The tones were 500 ms long, with 20 ms quarter-sine and -cosine onset and offset ramps and were separated by a 100 ms gap. Frequencies were selected at random between D3 and B3 on the chromatic scale.

Tactile signal processing

Melody in music and prosody in speech are typically conveyed in sub-octave frequency shifts, with the absolute height of the pitch being largely irrelevant. In the current study, we used a pitch chroma analysis, which groups frequencies by octave to produce a spectral representation of relative pitch, discarding absolute pitch height information. A schematic representation of the signal processing chain that was used to convert audio to a tactile signal is illustrated in Fig. 3. The haptic signal was generated by first estimating the F0 and amplitude envelope of the input signal. F0 was estimated using YIN, implemented in the Max Sound Box toolbox (version 2018-3, IRCAM, Paris, FR). A 14 ms window size was used (giving a minimum possible F0 estimation of approximately 70 Hz) with no downsampling. The resulting F0 estimate was then used to activate one of the 12 shakers on the mosaicOne_B. This was achieved by first mapping the F0 to the MIDI scale, a commonly used scale for relating musical pitch to frequency. This representation was then assigned to one of 12 frequency channels. The full frequency mapping was defined as:

where \(f_{\rm wrap}\) is an integer in the range \(0 \le f_{\rm wrap} < 12\), and \(y_i\) is the channel at index \(i\).
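As a rough illustration of a mapping of this kind, the sketch below assumes the standard MIDI convention (A4 = 440 Hz = note 69), rounding to the nearest note, and wrapping modulo 12. These choices, the function names, and the assignment of index values to particular motors are assumptions made for illustration and may differ from the mapping used in the mosaicOne_B.

```python
import math

N_CHANNELS = 12  # one channel per semitone within an octave


def f0_to_midi(f0_hz: float) -> float:
    """Map frequency to a (possibly fractional) MIDI note number."""
    return 69.0 + 12.0 * math.log2(f0_hz / 440.0)


def f0_to_channel(f0_hz: float) -> int:
    """Round to the nearest MIDI note and wrap into the range 0..11."""
    return int(round(f0_to_midi(f0_hz))) % N_CHANNELS


def channel_vector(f0_hz: float) -> list:
    """One-hot vector y, where y[i] is 1 only for the active channel."""
    active = f0_to_channel(f0_hz)
    return [1.0 if i == active else 0.0 for i in range(N_CHANNELS)]


if __name__ == "__main__":
    for f0 in (220.0, 300.0, 440.0, 880.0):
        print(f"{f0:6.1f} Hz -> channel {f0_to_channel(f0)}")
```

Because of the modulo operation, frequencies exactly one octave apart (for example 220, 440, and 880 Hz) map to the same channel, which is the relative-pitch behaviour described in the Introduction.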

The RMS amplitude envelope of the input audio was calculated in parallel, using a 14 ms window. The activated channel was then multiplied by this envelope. Finally, a moving RMS average of each of the 12 channels was calculated using a 125 ms window. This per-channel averaging acted as a simple noise-reduction method. This helped reduce the effects of artefacts produced by the F0 estimation algorithm as background noise increased. The haptic output in response to the harmonic complexes (described in the Stimuli section) is illustrated in Fig. 4.
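The per-channel smoothing step can be sketched as a causal moving RMS. The code below is an illustration only: the helper names are hypothetical, the 48 kHz sample rate is taken from the Apparatus section, and the handling of the warm-up period (averaging over however many samples are available) is an assumption.

```python
import math
from collections import deque


def window_samples(window_ms: float, sample_rate_hz: float) -> int:
    """Number of samples in a window of the given duration (at least 1)."""
    return max(1, int(round(window_ms * 1e-3 * sample_rate_hz)))


def moving_rms(samples, window_len: int):
    """Running RMS over the most recent `window_len` samples."""
    window = deque()
    sum_sq = 0.0
    out = []
    for x in samples:
        window.append(x)
        sum_sq += x * x
        if len(window) > window_len:
            old = window.popleft()
            sum_sq -= old * old
        # Guard against tiny negative values caused by rounding.
        out.append(math.sqrt(max(sum_sq, 0.0) / len(window)))
    return out


if __name__ == "__main__":
    n = window_samples(125.0, 48_000)   # 125 ms per-channel smoothing window
    # A channel that is briefly activated by a spurious F0 estimate.
    channel = [0.0] * 1000 + [1.0] * 100 + [0.0] * 1000
    smoothed = moving_rms(channel, n)
    print(f"window = {n} samples, peak after smoothing = {max(smoothed):.3f}")
```

In this example a short burst on one channel is strongly attenuated by the long window, which illustrates why averaging of this kind can suppress brief F0 estimation errors in noise.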

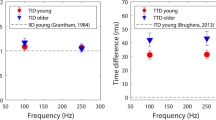

The performance of the algorithm was assessed for different stimulus frequencies, using sawtooth waves at 85 Hz (lowest average F0 of typical male speech62), 255 Hz (highest average F0 of typical female speech62) and 440 Hz (standard tuning pitch for western music). A sawtooth harmonic complex was used as it consists of equivalent odd and even harmonics (that decrease in amplitude with increasing frequency, as is typical of real-world stimuli, such as speech). Bandpass filtering was not applied as removal of non-pitch cues was not necessary. Figure 5 illustrates the algorithm’s performance. Performance at decreasing SNRs is comparable to the test stimulus, with marginally poorer performance at 85 Hz for the −7.5 dB SNR condition. Additionally, for the −5 and −7.5 dB SNR conditions at 85 Hz and 255 Hz, estimates are offset by 1–2 shakers relative to the clean condition. These errors are due to the inaccuracy of initial F0 estimation, and the non-linear mapping of frequency (which requires greater precision of F0 estimation at lower frequencies). Despite these errors, relative pitch differences appear largely unaffected.

Apparatus

During pure-tone audiometry, participants were seated in a sound-attenuated booth with a background noise level conforming to British Society of Audiology recommendations66. Audiometric measurements were conducted using a Grason-Stadler GSI 61 Clinical Audiometer and Telephonics 296 D200-2 headphones. Vibro-tactile threshold measurements were made using a HVLab Vibro-tactile Perception Meter with a 6-mm contactor that had a rigid surround and a constant upward force of 2 N (following International Organization for Standardization specifications61). This system was calibrated using a Bruel & Kjaer (B&K) calibration exciter (Type 4294).

The experiment took place in a quiet listening room. During testing, the experimenter sat behind a screen with no line of sight to the participant. The participants responded by pressing buttons on an iiyama ProLite T2454MSC-B1AG 24-inch touchscreen monitor. All stimuli were generated using custom MATLAB scripts (version R2019a, The MathWorks Inc., Natick, MA, USA) and controlled using Max 8 (version 8.0.8). Both audio and haptic signals were played out at a sample rate of 48 kHz via a MOTU 24Ao soundcard (MOTU, Cambridge, MA, USA). Audio was presented using ER-2 insert earphones (Etymotic, IL, USA) and the haptic signal was delivered through the mosaicOne_B via the mosaicOne_B haptic interface (for amplification of haptic signals). Audio stimuli were calibrated using a B&K G4 sound level meter, with a B&K 4157 occluded ear coupler (Royston, Hertfordshire, UK). Sound level meter calibration checks were carried out using a B&K Type 4231 sound calibrator.

Haptic stimulation was delivered using the mosaicOne_B (Fig. 1 shows a schematic representation of the device). The mosaicOne_B had twelve motors, with six strapped to the top and six to the bottom of the forearm. The motors were attached using six elastic straps, fastened with Velcro. Two motor types, the Precision Microdrives 304–116 5-mm vibration motor (labelled “Motor type 1” in Fig. 1) and the Precision Microdrives 306-10H 7-mm vibration motor (labelled “Motor type 2” in Fig. 1) were used in an interleaved fashion, with each motor separated by 3 cm. The bottom motors were arranged in reverse order to maximize the distance between motor types. The motors were calibrated so that the driving signal extrema corresponded to the output amplitude extrema (maximum amplitudes of 1 and 1.84 G, respectively). This maximised the dynamic range of the motors. The different motor types have different operating frequencies of 280 Hz for the 5 mm motor and 230 Hz for the 7 mm motor. This configuration was selected to maximize differentiation between motors by allowing the user to exploit both skin location and stimulation frequency cues. The different motors were expected to be discriminable in frequency based on frequency discrimination thresholds for vibrotactile stimulation67. The motors were also expected to be spatially discriminable, even in older users, based on two-point discrimination thresholds56. Note that it has been argued that two-point discrimination thresholds likely over-estimate the minimum location separation required to discriminate motors24. This suggestion was supported by informal testing during development of the mosaicOne_B.

Procedure

The experiment had three phases, all of which were completed in a single session lasting around two hours. The first phase was the screening phase. During screening, participants first completed a questionnaire to ensure that they (1) had no conditions or injuries that may affect their touch perception, (2) had not been exposed to sustained periods of intense hand or arm vibration at any time, (3) had no recent exposure to hand or arm vibration, (4) had no conditions or injuries that may affect their hearing perception, (5) had received no musical training at any time, and (6) did not speak a tonal language. Next, audiometric hearing thresholds were measured to ensure participants had normal hearing (thresholds <20 dB HL). Thresholds were measured following British Society of Audiology guidelines66. Following this, vibrotactile detection thresholds were measured at the fingertip, to check for normal touch perception (<0.4 m s⁻² RMS at 31.5 Hz, and <0.7 m s⁻² RMS at 125 Hz61). Thresholds were measured following the protocol recommended by the International Organization for Standardization61. Finally, otoscopy was performed to ensure insert earphones could safely be used. If the participant passed all screening stages, they continued to the familiarization phase.
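The screening criteria can be summarised as a simple pass/fail check. The following Python sketch is illustrative only and was not part of the study software; the `ScreeningResult` class, its field names, and the `passes_screening` function are hypothetical, and only the numerical limits are taken from the text above.

```python
from dataclasses import dataclass


@dataclass
class ScreeningResult:
    """Hypothetical record of one participant's screening measurements."""
    questionnaire_ok: bool          # all six questionnaire criteria met
    worst_audiometric_db_hl: float  # highest (worst) audiometric threshold, dB HL
    vibro_31_5_hz: float            # fingertip threshold at 31.5 Hz, m/s^2 RMS
    vibro_125_hz: float             # fingertip threshold at 125 Hz, m/s^2 RMS
    otoscopy_ok: bool               # insert earphones can safely be used


def passes_screening(r: ScreeningResult) -> bool:
    """Return True only if every screening stage described in the text is passed."""
    return (
        r.questionnaire_ok
        and r.worst_audiometric_db_hl < 20.0  # normal hearing: < 20 dB HL
        and r.vibro_31_5_hz < 0.4             # normal touch: < 0.4 m/s^2 RMS
        and r.vibro_125_hz < 0.7              # normal touch: < 0.7 m/s^2 RMS
        and r.otoscopy_ok
    )
```

All limits are applied as strict inequalities, matching the "<" signs in the criteria, so a participant measuring exactly 20 dB HL would not pass in this sketch.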

In the familiarization phase, participants first used an app developed to familiarize them with the mosaicOne_B. Participants used the app for 5–10 minutes and were invited to ask questions if anything was unclear. The app consisted of a pitch slider and an interval training module. For each module, participants could switch between haptic only, audio-haptic and audio only modes. In both modules, CI simulation was not applied to the audio. In the pitch slider module, a constant tone was played, and the frequency of the tone was adjusted based on slider position. In the interval training module, participants could select either a “Low → High” or “High → Low” button, which determined the pitches of two consecutive tones. The number of presentations was not limited, but any given presentation could not be repeated.

After using the app to familiarize themselves with the device, participants were familiarized with the task used in the testing phase. Participants completed a short practice session of 15 trials for each condition. In the testing and task familiarization, a two-alternative forced-choice task was used in which participants were asked to judge which interval contained the sound or vibration stimulus with the higher pitch. Participants used two buttons labelled “1” and “2” to select whether the first or second stimulus was higher in pitch. Visual feedback was given, indicating whether the response was correct or incorrect. The pitch difference between intervals was initially set at +80% of the reference pitch and was then varied using a one-up, two-down adaptive procedure, with percentage difference varying by 10% for the first two reversals, 5% for the third reversal, and 1% for the remaining four reversals. Thresholds for each track were calculated as the mean of the last four reversals. The order of conditions (audio only, audio-haptic, and haptic only) was counterbalanced across participants, and the noise conditions (no noise, noise at −5 dB SNR, and noise at −7.5 dB SNR) were presented in a random order for each condition.
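The adaptive track described above can be sketched in Python as follows. This is a minimal illustration rather than the study's own code (the experiment used MATLAB and Max 8). It assumes that the pitch difference increases after each incorrect response and decreases after two consecutive correct responses, that step sizes are in percentage points, that a track ends after seven reversals, and that the difference is held above a small positive floor. The names `step_size`, `run_track`, `respond`, and `floor` are hypothetical.

```python
def step_size(n_reversals):
    """Step size in percentage points, given the reversals recorded so far."""
    if n_reversals < 2:
        return 10.0   # first two reversals
    if n_reversals < 3:
        return 5.0    # third reversal
    return 1.0        # remaining four reversals


def run_track(respond, start=80.0, total_reversals=7, floor=0.1):
    """Run one adaptive track.

    respond(diff) must return True when the listener answers correctly at a
    pitch difference of `diff` percent. Returns (threshold, reversals).
    """
    diff = start
    correct_streak = 0
    last_direction = 0          # -1 = last change was down, +1 = up, 0 = none yet
    reversals = []
    while len(reversals) < total_reversals:
        if respond(diff):
            correct_streak += 1
            if correct_streak < 2:
                continue        # need two correct in a row before stepping down
            correct_streak = 0
            direction = -1
        else:
            correct_streak = 0
            direction = +1
        # A reversal occurs whenever the direction of change flips.
        if last_direction and direction != last_direction:
            reversals.append(diff)
        last_direction = direction
        diff = max(floor, diff + direction * step_size(len(reversals)))
    # Threshold is the mean of the last four reversals.
    return sum(reversals[-4:]) / 4, reversals
```

For example, `run_track(lambda d: d > 10.0)` simulates an idealised listener who is always correct above a 10% difference and always incorrect at or below it. A real listener would also guess correctly on half of the sub-threshold trials in a two-alternative task, which this deterministic example ignores.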

The experimental protocol was approved by the University of Southampton Faculty of Engineering and Physical Sciences Ethics Committee (ERGO ID: 47769). All research was performed in accordance with the relevant guidelines and regulations.

Statistics

The data were analysed using Friedman ANOVAs (with Bonferroni-Holm correction for multiple comparisons). Three planned post-hoc Wilcoxon signed-rank tests were also performed (also Bonferroni-Holm corrected). Non-parametric tests were used as the data were not normally distributed.
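For readers unfamiliar with the Bonferroni-Holm procedure, the step-down adjustment can be sketched in a few lines of Python. This is a generic illustration of the standard method, not the analysis code used in the study; the function name `holm_adjust` and the example p-values are hypothetical.

```python
def holm_adjust(p_values):
    """Return Bonferroni-Holm adjusted p-values, in the original order.

    The smallest p-value is multiplied by m, the next smallest by m - 1, and
    so on. A running maximum keeps the adjusted values monotonic, and each
    value is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Illustrative values only, not results from the study.
example = holm_adjust([0.01, 0.04, 0.03])
```

A comparison is then declared significant when its adjusted p-value falls below the chosen alpha level (for example, 0.05).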

Data availability

The dataset from the current study is publicly available through the University of Southampton’s Research Data Management Repository at: https://doi.org/10.5258/SOTON/D1401.

References

Wilson, B. S. & Dorman, M. F. Cochlear implants: a remarkable past and a brilliant future. Hear. Res. 242, 3–21 (2008).

Friesen, L. M., Shannon, R. V., Baskent, D. & Wang, X. Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. J. Acoust. Soc. Am. 110, 1150–1163 (2001).

Moller, A. R. Hearing: its physiology and pathophysiology. New York: Academic Press (2000).

Fetterman, B. L. & Domico, E. H. Speech recognition in background noise of cochlear implant patients. Otolaryngol. Head. Neck Surg. 126, 257–263 (2002).

Zeng, F. G., Rebscher, S., Harrison, W., Sun, X. & Feng, H. Cochlear implants: system design, integration, and evaluation. IEEE Rev. Biomed. Eng. 1, 115–142 (2008).

Abberton, E. & Fourcin, A. J. Intonation and speaker identification. Lang. Speech 21, 305–318 (1978).

Titze, I. R. Physiologic and acoustic differences between male and female voices. J. Acoust. Soc. Am. 85, 1699–1707 (1989).

Banse, R. & Scherer, K. R. Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636 (1996).

Murray, I. R. & Arnott, J. L. Toward the simulation of emotion in synthetic speech - a review of the literature on human vocal emotion. J. Acoust. Soc. Am. 93, 1097–1108 (1993).

Most, T. & Peled, M. Perception of suprasegmental features of speech by children with cochlear implants and children with hearing aids. J. Deaf. Stud. Deaf Edu. 12, 350–361 (2007).

Peng, S. C., Tomblin, J. B. & Turner, C. W. Production and perception of speech intonation in pediatric cochlear implant recipients and individuals with normal hearing. Ear Hear. 29, 336–351 (2008).

Meister, H., Landwehr, M., Pyschny, V., Walger, M. & von Wedel, H. The perception of prosody and speaker gender in normal-hearing listeners and cochlear implant recipients. Int. J. Audiol. 48, 38–48 (2009).

Xin, L., Fu, Q. J. & Galvin, J. J. III. Vocal emotion recognition by normal-hearing listeners and cochlear implant users. Trends Amplif. 11, 301–315 (2007).

McDermott, H. J. Music perception with cochlear implants: a review. Trends Amplif. 8, 49–82 (2004).

Kong, Y. Y., Cruz, R., Jones, J. A. & Zeng, F. G. Music perception with temporal cues in acoustic and electric hearing. Ear Hear. 25, 173–185 (2004).

Galvin, J. J. 3rd, Fu, Q. J. & Nogaki, G. Melodic contour identification by cochlear implant listeners. Ear Hear. 28, 302–319 (2007).

Zeng, F. G., Tang, Q. & Lu, T. Abnormal pitch perception produced by cochlear implant stimulation. Plos One 9 (2014).

Gfeller, K., Witt, S., Woodworth, G., Mehr, M. A. & Knutson, J. Effects of frequency, instrumental family, and cochlear implant type on timbre recognition and appraisal. Ann. Otol. Rhinol. Laryngol. 111, 349–356 (2002).

Prentiss, S. M. et al. Temporal and spectral contributions to musical instrument identification and discrimination among cochlear implant users. World J. Otorhinolaryngol. Head. Neck Surg. 2, 148–156 (2016).

Philips, B. et al. Characteristics and determinants of music appreciation in adult CI users. Eur. Arch. Otorhinolaryngol. 269, 813–821 (2012).

Wilson, B. S. Getting a decent (but sparse) signal to the brain for users of cochlear implants. Hear. Res. 322, 24–38 (2015).

Fletcher, M. D., Hadeedi, A., Goehring, T. & Mills, S. R. Electro-haptic enhancement of speech-in-noise performance in cochlear implant users. Sci. Rep. 9 (2019).

Bach-y-Rita, P., Collins, C. C., Saunders, F. A., White, B. & Scadden, L. Vision substitution by tactile image projection. Nature 221, 963–964 (1969).

Bach-y-Rita, P. Tactile sensory substitution studies. Ann. N. Y. Acad. Sci. 1013, 83–91 (2004).

Capelle, C., Trullemans, C., Arno, P. & Veraart, C. A real-time experimental prototype for enhancement of vision rehabilitation using auditory substitution. IEEE Trans. Biomed. Eng. 45, 1279–1293 (1998).

Meijer, P. B. L. An experimental system for auditory image representations. IEEE Trans. Biomed. Eng. 39, 112–121 (1992).

Hnath-Chisolm, T. & Kishon-Rabin, L. Tactile Presentation of Voice Fundamental-Frequency as an Aid to the Perception of Speech Pattern Contrasts. Ear Hear. 9, 329–334 (1988).

Thornton, A. R. D. & Phillips, A. J. A comparative trial of four vibrotactile aids. In Tactile Aids for the Hearing Impaired, edited by I. R. Summers, Whurr, London, pp. 231–251 (1992).

Fletcher, M. D., Mills, S. R. & Goehring, T. Vibro-tactile enhancement of speech intelligibility in multi-talker noise for simulated cochlear implant listening. Trends Hear. 22 (2018).

Huang, J., Sheffield, B., Lin, P. & Zeng, F. G. Electro-tactile stimulation enhances cochlear implant speech recognition in noise. Sci. Rep. 7 (2017).

Luo, X. & Hayes, L. Vibrotactile stimulation based on the fundamental frequency can improve melodic contour identification of normal-hearing listeners with a 4-channel cochlear implant simulation. Front. Neurosci. 13, 1145 (2019).

Huang, J., Lu, T., Sheffield, B. & Zeng, F.-G. Electro-tactile stimulation enhances cochlear-implant melody recognition. Ear Hear. (2019).

Drennan, W. R. et al. Clinical evaluation of music perception, appraisal and experience in cochlear implant users. Int. J. Audiol. 54, 114–123 (2015).

Kang, R. et al. Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear Hear. 30, 411–418 (2009).

Brockmeier, S. J. et al. The MuSIC perception test: a novel battery for testing music perception of cochlear implant users. Cochlear Implant Int. 12, 10–20 (2011).

Bruns, L., Murbe, D. & Hahne, A. Understanding music with cochlear implants. Sci. Rep. 6 (2016).

Laures, J. S. & Weismer, G. The effects of a flattened fundamental frequency on intelligibility at the sentence level. J. Speech Lang. Hear. Res. 42, 1148–1156 (1999).

Binns, C. & Culling, J. F. The role of fundamental frequency contours in the perception of speech against interfering speech. J. Acoust. Soc. Am. 122, 1765–1776 (2007).

Meredith, M. A. & Allman, B. L. Single-unit analysis of somatosensory processing in the core auditory cortex of hearing ferrets. Eur. J. Neurosci. 41, 686–698 (2015).

Shore, S. E., Vass, Z., Wys, N. L. & Altschuler, R. A. Trigeminal ganglion innervates the auditory brainstem. J. Comp. Neurol. 419, 271–285 (2000).

Aitkin, L. M., Kenyon, C. E. & Philpott, P. The representation of the auditory and somatosensory systems in the external nucleus of the cat inferior colliculus. J. Comp. Neurol. 196, 25–40 (1981).

Shore, S. E., El Kashlan, H. & Lu, J. Effects of trigeminal ganglion stimulation on unit activity of ventral cochlear nucleus neurons. Neuroscience 119, 1085–1101 (2003).

Jousmaki, V. & Hari, R. Parchment-skin illusion: sound-biased touch. Curr. Biol. 8 (1998).

Gick, B. & Derrick, D. Aero-tactile integration in speech perception. Nature 462, 502–504 (2009).

Gillmeister, H. & Eimer, M. Tactile enhancement of auditory detection and perceived loudness. Brain Res. 1160, 58–68 (2007).

Schurmann, M., Caetano, G., Jousmaki, V. & Hari, R. Hands help hearing: Facilitatory audiotactile interaction at low sound-intensity levels. J. Acoust. Soc. Am. 115, 830–832 (2004).

Kaernbach, C. & Bering, C. Exploring the temporal mechanism involved in the pitch of unresolved harmonics. J. Acoust. Soc. Am. 110, 1039–1048 (2001).

Kreft, H. A., Nelson, D. A. & Oxenham, A. J. Modulation frequency discrimination with modulated and unmodulated interference in normal hearing and in cochlear-implant users. J. Assoc. Res. Otolaryngol. 14, 591–601 (2013).

Verhey, J. L., Rennies, J. & Ernst, S. M. A. Influence of envelope distributions on signal detection. Acta Acust. U Ac 93, 115–121 (2007).

McDermott, J. H., Schemitsch, M. & Simoncelli, E. P. Summary statistics in auditory perception. Nat. Neurosci. 16, 493–498 (2013).

Jouvet, D. & Laprie, Y. Performance analysis of several pitch detection algorithms on simulated and real noisy speech data. Eur Signal Pr Conf, 1614–1618 (2017).

Laurienti, P. J., Burdette, J. H., Maldjian, J. A. & Wallace, M. T. Enhanced multisensory integration in older adults. Neurobiol. Aging 27, 1155–1163 (2006).

Hairston, W. D., Laurienti, P. J., Mishra, G., Burdette, J. H. & Wallace, M. T. Multisensory enhancement of localization under conditions of induced myopia. Exp. Brain Res. 152, 404–408 (2003).

Wallace, M. T., Wilkinson, L. K. & Stein, B. E. Representation and integration of multiple sensory inputs in primate superior colliculus. J. Neurophysiol. 76, 1246–1266 (1996).

Fletcher, M. D., Cunningham, R. O. & Mills, S. R. Electro-haptic enhancement of spatial hearing in cochlear implant users. Sci. Rep. 10, 1621 (2020).

Leveque, J. L., Dresler, J., Ribot-Ciscar, E., Roll, J. P. & Poelman, C. Changes in tactile spatial discrimination and cutaneous coding properties by skin hydration in the elderly. J. Invest. Dermatol. 115, 454–458 (2000).

Moore, B. C. Frequency difference limens for short-duration tones. J. Acoust. Soc. Am. 54, 610–619 (1973).

Dorman, M. F., Loiselle, L. H., Cook, S. J., Yost, W. A. & Gifford, R. H. Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiol. Neurootol. 21, 127–131 (2016).

Bronkhorst, A. W. & Plomp, R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. J. Acoust. Soc. Am. 83, 1508–1516 (1988).

Dirks, D. D. & Wilson, R. H. The effect of spatially separated sound sources on speech intelligibility. J. Speech Hear. Res. 12, 5–38 (1969).

International Organization for Standardization. Mechanical vibration–Vibrotactile perception thresholds for the assessment of nerve dysfunction–Part 1: Methods of measurement at the fingertips. ISO 13091-1:2001 (2001).

Hess, W. Pitch determination of speech signals: Algorithms and devices. Springer-Verlag (1983).

Shackleton, T. M. & Carlyon, R. P. The role of resolved and unresolved harmonics in pitch perception and frequency modulation discrimination. J. Acoust. Soc. Am. 95, 3529–3540 (1994).

Mehta, A. H. & Oxenham, A. J. Vocoder simulations explain complex pitch perception limitations experienced by cochlear implant users. J. Assoc. Res. Otolaryngol. 18, 789–802 (2017).

Grange, J. A., Culling, J. F., Harris, N. S. L. & Bergfeld, S. Cochlear implant simulator with independent representation of the full spiral ganglion. J. Acoust. Soc. Am. 142 (2017).

British Society of Audiology. Recommended procedure: Pure-tone air-conduction and bone-conduction threshold audiometry with and without masking. British Society of Audiology, 1–36, (2017).

Rothenberg, M., Verrillo, R. T., Zahorian, S. A., Brachman, M. L. & Bolanowski, S. J. Jr. Vibrotactile frequency for encoding a speech parameter. J. Acoust. Soc. Am. 62, 1003–1012 (1977).

Acknowledgements

Thank you to Carl Verschuur for his consistent support and encouragement. Thank you also to Anahita Mehta for extremely helpful input to the design of this study, to Eric Hamdan and P.T. Chaplin for technical support, to Jana Zgheib (Knárc Bön) for insightful comments during the piloting of this study, and to Ben Lineton for statistics support. Our deepest gratitude also goes to Alex Fletcher and Helen Fletcher, whose patience and calmness facilitated the writing of this manuscript. Finally, we are grateful to each of the participants who gave up their time to take part in this study. Salary support for author MDF was provided by The Oticon Foundation. Salary support for author SWP was provided by The Oticon Foundation and the Engineering and Physical Sciences Research Council (UK).

Author information

Contributions

M.D.F. and S.W.P. designed and implemented the experiment. N.T., M.D.F. and S.W.P. conducted the experiment. M.D.F. and S.W.P. wrote the manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fletcher, M.D., Thini, N. & Perry, S.W. Enhanced Pitch Discrimination for Cochlear Implant Users with a New Haptic Neuroprosthetic. Sci Rep 10, 10354 (2020). https://doi.org/10.1038/s41598-020-67140-0

DOI: https://doi.org/10.1038/s41598-020-67140-0
