Abstract

Ultrasonography (US) has been considered the imaging modality of choice for gallbladder (GB) polyps; however, it has limitations in differentiating between nonneoplastic and neoplastic polyps. We developed a deep learning-based decision support system (DL-DSS) for the differential diagnosis of GB polyps on US and investigated its usefulness. We retrospectively collected 535 patients, who were divided into a development dataset (n = 437) and a test dataset (n = 98). A binary classification convolutional neural network model was developed by transfer learning. Using the test dataset, three radiologists with different experience levels retrospectively graded the possibility of a neoplastic polyp using a 5-point confidence scale. The reviewers were then asked to re-evaluate their grades with the assistance of the DL-DSS. The areas under the curve (AUCs) of the three reviewers were 0.94, 0.78, and 0.87. The DL-DSS alone showed an AUC of 0.92. With the assistance of the DL-DSS, the reviewers’ AUCs improved to 0.95, 0.91, and 0.91. The specificity of the reviewers also improved (from 65.1–85.7% to 71.4–93.7%). The intraclass correlation coefficient (ICC) improved from 0.87 to 0.93. In conclusion, the DL-DSS could be used as an assistant tool to decrease the gap between reviewers and to reduce the false positive rate.

Introduction

Gallbladder (GB) polyps are commonly detected during ultrasonography (US), with a reported prevalence that ranges from 0.3 to 9.5%, and they present a tricky clinical question regarding their malignancy1,2,3,4,5,6,7,8,9. They can be divided into two groups: benign nonneoplastic and neoplastic polyps, which include adenomas and adenocarcinomas9,10,11. As adenomatous polyps can be malignant or can become malignant, it is important to differentiate and properly manage an adenomatous polyp9.

US has been considered the imaging modality of choice for GB polyps. For the diagnosis of neoplastic GB polyps, US has shown an 80% accuracy12. Although US provides relatively good performance for diagnosing GB polyps, it has limitations in differentiating between nonneoplastic and neoplastic polyps.

Recently, deep learning has been applied in various fields13. Deep learning models that take images as input have usually been developed to classify images as lesion or nonlesion, to group lesions by type, to detect lesions, or to segment lesions14. However, the US field has fewer deep learning studies than other modalities. Most studies have focused on lesion classification for the breast, liver, and thyroid; other, minor topics include automatic carotid ultrasound image analysis, myositis type classification, and spine level identification15.

Several studies have shown that deep learning could enhance the performance of the radiologist13. A deep learning model could help the radiologist in detecting pulmonary nodules on chest radiographs, interpreting knee magnetic resonance imaging (MRI), and detecting cerebral aneurysms on magnetic resonance angiography16,17,18. To our knowledge, no published study has applied deep learning to differentiate GB polyps on US. The purpose of our study was to determine whether a deep learning-based decision support system (DL-DSS) is helpful for the differential diagnosis of neoplastic GB polyps on US.

Results

Patient population

A total of 535 patients were included in our study group, including 357 patients with nonneoplastic polyps and 179 patients with neoplastic polyps. We divided selected patients into two groups to make two temporally independent datasets: the development dataset (n = 437) and test dataset (n = 98).

The pathologic diagnoses of GB polyps were as follows: nonneoplastic polyp (n = 357), including cholesterol polyp (n = 349); hyperplastic polyp (n = 1); inflammatory polyp (n = 7); neoplastic polyp (n = 179), including adenoma (n = 95); intracystic papillary neoplasm (n = 1); intracystic tubulopapillary neoplasm (n = 8); fibroepithelial polyp (n = 1); adenocarcinoma (n = 62); intracystic papillary neoplasm with an associated invasive carcinoma (n = 10); papillary carcinoma (n = 1); and adenosquamous carcinoma (n = 1).

From the 535 patients, we collected a total of 6,056 cropped polyp images: 3,629 images of nonneoplastic polyps and 2,427 images of neoplastic polyps. The development dataset consisted of 3,200 images of nonneoplastic polyps and 1,971 images of neoplastic polyps. The test dataset consisted of 429 images of nonneoplastic polyps and 456 images of neoplastic polyps.

Baseline characteristics of data sets

There was no significant difference in the population characteristics (age, man to woman ratio, proportion of nonneoplastic and neoplastic polyps, and size of polyps) between the development set and test set (p ≥ 0.05). The baseline characteristics of the data sets are described in Table 1.

In both the development set and the test set, patient age and polyp size showed statistically significant differences between nonneoplastic and neoplastic polyps (p < .001). The average sizes of nonneoplastic and neoplastic polyps were 9.4 ± 3.5 mm and 18.4 ± 8.4 mm, respectively. From the receiver operating characteristic (ROC) curve analysis, the optimal size cutoff for identifying a neoplastic polyp was over 13.1 mm (sensitivity 70.1%, specificity 87.1%). However, there was a substantial amount of size overlap between the nonneoplastic and neoplastic polyps. The size distribution histogram is shown in Fig. 1.
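The text does not state which criterion defined the "optimal" cutoff on the ROC curve. A common choice is the threshold that maximizes Youden's J (sensitivity + specificity − 1); the sketch below assumes that criterion, and the polyp sizes in it are invented purely for illustration.

```python
def youden_cutoff(sizes, labels):
    """Return (cutoff, sensitivity, specificity) maximizing J = sens + spec - 1.

    A polyp is called neoplastic when its size is strictly greater than the
    cutoff. labels: 1 = neoplastic, 0 = nonneoplastic.
    Assumption: Youden's J is the selection criterion (not stated in the paper).
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best = None
    for cutoff in sorted(set(sizes)):
        tp = sum(1 for s, y in zip(sizes, labels) if y == 1 and s > cutoff)
        tn = sum(1 for s, y in zip(sizes, labels) if y == 0 and s <= cutoff)
        sens, spec = tp / n_pos, tn / n_neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical sizes in mm (not study data).
toy_sizes = [6.0, 8.0, 9.0, 10.0, 12.0, 14.0, 11.0, 15.0, 18.0, 25.0]
toy_labels = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
cutoff, sens, spec = youden_cutoff(toy_sizes, toy_labels)
```

On real data the overlap between the two size distributions limits how well any single cutoff can perform, which is the point the paragraph above makes.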

Outcomes of clinical validation of DL-DSS

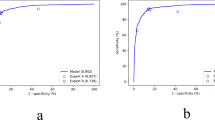

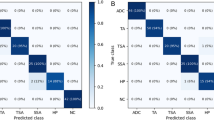

Step 1: Diagnostic performance of image analysis by three human reviewers

Table 2 summarizes the diagnostic performance of the three human reviewers. The reviewers’ areas under the receiver operating characteristic curve (AUCs) ranged from 0.78 to 0.94. Reviewer A showed relatively good sensitivity (31 of 35 [88.6%]) and specificity (54 of 63 [85.7%]) in classifying neoplastic polyps. However, reviewers B and C showed relatively low specificities of 43/63 (68.3%) and 41/63 (65.1%), respectively. The overall accuracy was between 68/98 (69.4%) and 85/98 (86.7%).

Table 3 summarizes the comparison of US image findings between neoplastic and nonneoplastic polyps. For all three reviewers, neoplastic polyps were characteristically single, larger, and sessile compared with nonneoplastic polyps. A majority of the reviewers (two of three) also found a lobulated surface contour, the presence of a vascular core, and heterogeneous internal echogenicity to be common in neoplastic polyps. Among the US findings, multiplicity, size, vascular core, and presence of gallstones showed good interobserver agreement (intraclass correlation coefficient (ICC) = 0.82–0.97). The shape, surface contour, and internal echo level showed moderate agreement (ICC = 0.65–0.79). In the multivariate logistic regression analysis, only the diameter of the polyp was a significant predictor of a neoplastic polyp. This result was consistent across all three reviewers (odds ratio (OR) 2.1, confidence interval (CI) 1.2–3.5, p < 0.01 for reviewer A; OR 1.5, CI 1.2–1.9, p < 0.001 for reviewer B; and OR 1.5, CI 1.1–2.2, p < 0.02 for reviewer C).

Step 2: Diagnostic performance of the DL-DSS

The diagnostic performance of the DL-DSS showed an AUC of 0.92, sensitivity of 26/35 (74.3%), specificity of 58/63 (92.1%), and an overall accuracy of 84/98 (85.7%) (Table 2). One radiologist with 11 years of abdominal ultrasound experience showed better performance than the DL-DSS. However, the system showed a better performance than two reviewers, who had 3 and 5 years of abdominal ultrasound experience. A statistically significant difference was observed between the DL-DSS and reviewer B (p < 0.01).
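As a quick check, the percentages reported for the DL-DSS follow directly from the stated counts. The sketch below recomputes them; the helper name `rate` is ours, not part of the study.

```python
def rate(numerator, denominator):
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100.0 * numerator / denominator, 1)


# Counts taken from the paragraph above (test dataset, n = 98).
true_positives, n_neoplastic = 26, 35
true_negatives, n_nonneoplastic = 58, 63

sensitivity = rate(true_positives, n_neoplastic)
specificity = rate(true_negatives, n_nonneoplastic)
accuracy = rate(true_positives + true_negatives,
                n_neoplastic + n_nonneoplastic)
```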

Step 3: Diagnostic performance of image analysis by three human reviewers with the aid of the DL-DSS

Table 2 shows that all three reviewers’ diagnostic performances increased when the DL-DSS was added. All three of the reviewers’ AUCs became higher than 0.9. A statistically significant improvement was shown for reviewer B, whose performance increased from 0.78 to 0.91 (p < 0.01). Among the sensitivity, specificity, and accuracy, the specificity showed marked growth in all three reviewers (Fig. 2). In addition, the interobserver agreement improved with the aid of the DL-DSS (ICC from 0.87 to 0.93).

Example cases showing the effectiveness of DL-DSS-aided diagnosis. (a) Three patients with a nonneoplastic polyp measuring over 10 mm. The majority of the reviewers regarded these polyps as neoplastic, with a confidence score of 3 or more. However, the patient-level probability values ranged from 0.1 to 0.3, suggesting that a nonneoplastic polyp was more likely. On re-evaluation, some of the reviewers downgraded their scores. (b) Three patients with a neoplastic polyp measuring 13 to 18 mm. Some of the reviewers classified these polyps as nonneoplastic, with a confidence score of 3 or less. By contrast, the patient-level probability values ranged from 0.7 to 0.9, favoring a neoplastic polyp. On re-evaluation, some of the reviewers upgraded their scores.

Diagnostic performance for GB polyps larger than 10 mm

Table 4 summarizes the diagnostic performance of the three human reviewers and the DL-DSS for GB polyps larger than 10 mm. When the analysis was restricted to GB polyps larger than 10 mm, the AUCs of all experiments decreased. However, the added value of the DL-DSS became more evident. For the human reviewers, the gap between sensitivity and specificity became more prominent: sensitivity ranged from 69.7% to 100% and specificity from 41.9% to 77.4%. The sensitivity and specificity of the DL-DSS were 78.8% and 87.1%, respectively. The maximum specificity gap between a human reviewer and the DL-DSS widened from 27.0 to 45.2 percentage points. The differences between the first and second reviews became more significant (p values from <0.001 to 0.16).

Discussion

The DL-DSS could be a useful assistant tool for the differential diagnosis of neoplastic GB polyps, decreasing the gap between reviewers with different experience levels and reducing the false positive rate. The initial AUCs of the reviewers for neoplastic GB polyps were 0.94, 0.78, and 0.87. With the assistance of the DL-DSS, the reviewers’ AUCs improved to 0.95, 0.91, and 0.91. The diagnostic performance of the less-experienced radiologists improved with the aid of the DL-DSS (0.78 to 0.91, p < 0.01 for Reviewer B; 0.87 to 0.91, p = 0.17 for Reviewer C), although the improvement was statistically significant only for Reviewer B. The ICC improved from 0.87 to 0.93 with the aid of the DL-DSS.

Among the important US findings for neoplastic polyps, such as a single, larger, and sessile polyp, larger size was the only independent factor in the multivariable regression. The optimal cutoff separating nonneoplastic from neoplastic polyps was 13.1 mm. The importance of size has also been reported in previous studies. According to Yeh C-N, et al.19, a size of 10 mm or larger was an independent risk factor for a neoplastic polyp. Cha BH, et al. studied 210 patients who had a GB polyp larger than 10 mm and found that a size of 15 mm or larger was an independent risk factor suggesting a neoplastic polyp20. Choi TW, et al. analyzed high-resolution US images of 136 patients and reported that a single polyp and a large diameter were meaningful factors predicting a neoplastic polyp21.

The DL-DSS showed a higher specificity than all of the human reviewers, and this characteristic helped to improve human performance. Although the human reviewers mainly relied on polyp size to identify neoplastic polyps, there was a substantial overlap between nonneoplastic and neoplastic polyps in the size distribution plot. In the subgroup analysis of large polyps (≥10 mm), the specificity of the human reviewers dropped markedly compared with that of the DL-DSS. The human reviewers overcalled neoplastic polyps when the polyp measured 10 mm or larger, a tendency that could lead to unnecessary cholecystectomies. The DL-DSS could reduce the false positive rate, thereby avoiding unnecessary cholecystectomy.

The DL-DSS could help to improve a less-experienced radiologist’s performance and narrow the gap between reviewers. Nam JG, et al. demonstrated that a deep learning algorithm for malignant pulmonary nodule detection improved the nodule detection performance of 18 physicians with different experience levels16. The performance gap between the thoracic radiologist group and the other physicians narrowed. Bien N, et al. developed and validated a deep learning model to detect general abnormalities, anterior cruciate ligament tears, and meniscal tears on knee MRI17. The model improved the interrater reliability among 7 general radiologists and 2 orthopedic surgeons. Our results likewise showed that the diagnostic performance of the less-experienced radiologists improved with the aid of the DL-DSS (0.78 to 0.91, p < .01 for Reviewer B; 0.87 to 0.91, p = 0.17 for Reviewer C), with a statistically significant gain for Reviewer B.

Our study has some limitations. First, it was performed in a single center, and only temporal external validation was done. Second, the DL-DSS differentiated between nonneoplastic and neoplastic polyps rather than diagnosing malignant polyps. Since malignant polyps are rare, a sufficiently large development dataset could not be collected. With a multicenter dataset, the system could also learn this function. Third, we used Inception v3 and did not compare it with more recent networks such as DenseNet or NASNet; further study is needed to optimize the DL-DSS. Finally, as a retrospective study, it could not reflect the real clinical setting. A multicenter prospective study is needed.

In conclusion, we developed a DL-DSS that showed higher specificity than the human reviewers in differentiating neoplastic polyps on transabdominal US. When it was used as an assistant tool, the gap between reviewers with different experience levels narrowed and the false positive rate decreased, especially for polyps 10 mm or larger. The DL-DSS could therefore serve as an assistant tool for the differential diagnosis of neoplastic GB polyps on US and help to avoid unnecessary cholecystectomies.

Materials and Methods

This study was approved by the Institutional Review Board of Seoul National University Hospital (IRB No. 1712–007–903). The Institutional Review Board granted a waiver of informed patient consent due to the retrospective nature of our study. All methods were performed in accordance with the relevant guidelines and regulations.

Patients

We reviewed our institution’s medical records from 2006 to 2017 and identified 8,452 patients who underwent cholecystectomy and 8,047 patients who underwent US of the GB. From these data, we collected 923 patients in whom a GB polyp was found on US and who subsequently underwent cholecystectomy. Among them, the following patients were excluded: polyp not identified on the pathologic report (n = 290); polyp smaller than 4 mm (n = 82); suboptimal image quality (n = 7); size and location of the polyp evidently different between US and the pathologic report (n = 6); and rare pathology such as lymphoma or metastasis (n = 3). Finally, a total of 535 patients were included in our study group, including 357 patients with nonneoplastic polyps and 179 patients with neoplastic polyps. We divided the selected patients into two temporally independent datasets: the development dataset and the test dataset. According to the study date of their latest US exam, patients who underwent US before January 2015 were assigned to the development dataset (n = 437), and patients who underwent US from January 2015 onward were assigned to the test dataset (n = 98). Figure 3 shows the flowchart of the study population.
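The exclusion arithmetic and the temporal split rule described above can be sketched as follows. The dictionary keys and the function name `assign_dataset` are our own labels, and we assume "before January 2015" means strictly before 1 January 2015.

```python
from datetime import date

# Patient-flow counts taken from the paragraph above.
screened = 923
exclusions = {
    "polyp not identified on pathologic report": 290,
    "polyp smaller than 4 mm": 82,
    "suboptimal image quality": 7,
    "size/location mismatch between US and pathology": 6,
    "rare pathology (lymphoma, metastasis)": 3,
}
included = screened - sum(exclusions.values())

# Assumption: the split boundary is 1 January 2015.
SPLIT_DATE = date(2015, 1, 1)


def assign_dataset(latest_us_exam):
    """Temporal split based on the date of the patient's latest US exam."""
    return "development" if latest_us_exam < SPLIT_DATE else "test"
```

Splitting by date rather than at random keeps the test patients temporally independent of the data used to build the model.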

Flow diagram of patient selection and dataset division. From our institution’s medical records, we collected 923 patients in whom a GB polyp was found on US and who subsequently underwent cholecystectomy. After the exclusion step, a total of 535 patients remained. We divided the patients into two temporally independent groups according to the study date of their latest US exam.

US examination

A US examination was performed by radiologists with approximately 10 to 20 years of abdominal ultrasound experience, using a US scanner (LOGIQ 9 or LOGIQ E9, GE Healthcare, Milwaukee, WI, USA). First, the GB was grossly observed through a subcostal or intercostal approach with a convex low-MHz transducer (C1–6 or 4C, GE Healthcare, Milwaukee, WI, USA). High-resolution images were then acquired with a linear transducer (9L or 7L, GE Healthcare, Milwaukee, WI, USA), which produced a high-frequency ultrasound wave with a bandwidth of 2–8 MHz21,22.

Development of DL-DSS

Labeling of dataset

The images were selected from each patient’s US studies according to the following criteria: only B-mode images captured with the linear transducer were used, the images did not contain any annotation such as a size measurement, and the captured polyp had to match the pathology report in terms of its size and location. The US image selection and labeling were performed in consensus by two radiologists (Kim JH and Jeong YB, with 23 years and two years of abdominal ultrasound experience, respectively). If a patient had serial US follow-up, all of the US studies were included when the patient was in the development dataset. By contrast, only the latest US study was included when the patient was in the test dataset. All of the selected images were converted into Portable Network Graphics (PNG) format and then manually cropped using an in-house program. In every image, we drew a free-size square box that contained one polyp and its attachment site. The box was drawn as small as possible while containing all of the following structures: the polyp, its stalk, and the focal portion of the GB wall to which the polyp was attached. The center of the box was placed as close as possible to the polyp’s center. If there were multiple polyps in one image, each meaningful polyp that was 4 mm or larger and matched the pathologic description was cropped separately. We evaluated the size of each polyp at the same time, using the size measured by the onsite radiologist when it was available. If not, the size was estimated retrospectively using the scale bar in the image.
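The cropping in the study was done by hand in an in-house program that is not described further. Purely as an illustration of the geometric rule (smallest square enclosing the polyp and its attachment site, centered as close as possible to the polyp), the following sketch computes such a box from a hypothetical rectangular outline; the function name and coordinate convention are assumptions.

```python
def square_crop_box(x_min, y_min, x_max, y_max, img_w, img_h):
    """Return (left, top, side) of the smallest square enclosing a rectangle.

    The rectangle (x_min, y_min, x_max, y_max) is a hypothetical outline of
    the polyp, stalk, and adjacent GB wall in pixel coordinates. The square is
    centered on the rectangle and shifted only as needed to stay in the image.
    """
    side = max(x_max - x_min, y_max - y_min)
    if side > min(img_w, img_h):
        raise ValueError("outline does not fit in a square inside the image")
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    left = int(round(center_x - side / 2))
    top = int(round(center_y - side / 2))
    # Clamp so that the square stays within the image bounds.
    left = max(0, min(left, img_w - side))
    top = max(0, min(top, img_h - side))
    return left, top, side


centered_box = square_crop_box(100, 120, 160, 150, img_w=640, img_h=480)
edge_box = square_crop_box(0, 10, 30, 90, img_w=640, img_h=480)
```

The second call shows the clamping case: the box cannot be centered on a polyp at the image edge, so it is shifted inward while still containing the whole outline.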

Training for the DL-DSS

We used a transfer learning method based on the GoogLeNet Inception v3 convolutional neural network (CNN) architecture23,24. The DL-DSS used both cropped images and nonimage information. All of the cropped images were resized to 299 × 299 pixels. Intensity normalization was used as a preprocessing step. Geometric image augmentation techniques such as vertical/horizontal flipping, rotation, and cropping were used to reduce overfitting. The image input was then processed with a pretrained Inception v3 model to extract high-level features. Nonimage inputs, including patient age, polyp size, and polyp multiplicity, were concatenated to the last fully connected layer of the network using a late fusion strategy25. Finally, the DL-DSS reports a probability value that represents the image-level probability of a neoplastic polyp. A schematic diagram of the DL-DSS is shown in Fig. 4.
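The late fusion step can be illustrated without a deep learning framework. In the sketch below, a short list stands in for the high-level image features, and a single sigmoid unit stands in for the final layer; the function name, the weights, and the absence of input scaling are all assumptions, and the actual layer sizes of the DL-DSS are not given in the text.

```python
import math


def late_fusion_probability(image_features, age_years, size_mm, is_multiple,
                            weights, bias):
    """Concatenate image features with nonimage inputs, then apply a sigmoid.

    Hypothetical stand-in for the fused final layer: `weights` must have one
    entry per image feature plus three entries for age, size, and multiplicity.
    """
    fused = list(image_features) + [
        float(age_years),
        float(size_mm),
        1.0 if is_multiple else 0.0,
    ]
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    logit = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# With all-zero weights the output is the uninformative probability 0.5.
neutral = late_fusion_probability([0.2, -0.1], 60, 12.0, False,
                                  weights=[0.0] * 5, bias=0.0)
# A positive weight on size pushes the probability toward "neoplastic".
size_driven = late_fusion_probability([0.2, -0.1], 60, 12.0, False,
                                      weights=[0.0, 0.0, 0.0, 0.1, 0.0],
                                      bias=-1.0)
```

In a real model the image features would come from the pretrained network and the weights would be learned during training rather than set by hand.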

Schematic diagram of the DL-DSS. (a) We used a transfer learning method based on the GoogLeNet Inception v3 CNN architecture. All cropped images were resized to 299 × 299 pixels and then processed with a pretrained Inception v3 model. Nonimage inputs were concatenated to the last fully connected layer of the network using a late fusion strategy. (b) As multiple images were included for one patient, the average of the image-level probability values was used as the patient-level probability value. It is a continuous value between 0 and 1: zero represents a definite nonneoplastic polyp, and one represents a definite neoplastic polyp.

The development dataset was randomly divided in an 8:2 ratio into images used for training (4,138 images) and images used for internal validation (1,033 images). Training was done with a learning rate of 0.001 and an RMSprop optimizer. We used an early stopping strategy to prevent overfitting, and training was performed for up to 400 epochs, until the validation loss reached a plateau (Fig. 5).
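The text does not give the exact early stopping rule. A common formulation stops when the validation loss has not improved for a fixed number of epochs; the sketch below assumes that rule, and the `patience` and `min_delta` values are illustrative, not the study's settings.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0,
                         max_epochs=400):
    """Return (stop_epoch, best_epoch) for a patience-based stopping rule.

    Assumption: training stops once the validation loss has failed to improve
    by more than `min_delta` for `patience` consecutive epochs, or when
    `max_epochs` is reached. Epochs are numbered from 1.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return min(len(val_losses), max_epochs), best_epoch


# Hypothetical validation losses that plateau after the third epoch.
stop_epoch, best_epoch = early_stopping_epoch(
    [1.0, 0.8, 0.7, 0.71, 0.72, 0.73], patience=3
)
```

The weights from `best_epoch` would normally be the ones kept, since later epochs only fit the training images more closely.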

Training curves of the DL-DSS. The orange and blue curves represent the (a) accuracy and (b) loss on the development and test datasets, respectively. We used an early stopping strategy to prevent overfitting, and training was performed for up to 400 epochs, until the validation loss reached a plateau. Final accuracy was 96.69% in the development set and 88.58% in the test set.

Clinical validation of the DL-DSS

Step 1: Image analysis by three human reviewers

Two abdominal radiologists (Reviewers A and B, with 11 and 5 years of abdominal ultrasound experience, respectively) and one trainee radiology resident (Reviewer C, with 3 years of abdominal ultrasound experience) independently reviewed the US exams of the 98 patients in the test dataset. They were blinded to the histologic diagnoses. First, they reviewed the following polyp characteristics: multiplicity (solitary or multiple), size of the largest polyp (mm), overall shape (pedunculated or sessile), surface contour (smooth or lobulated), presence or absence of a vascular core on color Doppler sonography, presence of GB stones, internal echogenicity level (hypoechoic or iso- to hyperechoic), internal echogenicity pattern (homogeneous or heterogeneous), and presence of hyper- or hypoechoic foci. When the patient had multiple polyps, the largest polyp was evaluated. The detailed method for evaluating the polyp characteristics was based on previous studies21,22. Second, the radiologists graded the possibility of a neoplastic polyp for each patient using a 5-point confidence scale (1: definitely a nonneoplastic polyp; 2: probably a nonneoplastic polyp; 3: borderline; 4: probably a neoplastic polyp; and 5: definitely a neoplastic polyp). The grading was based not only on the reviewers’ own experience but also on the polyp characteristics. All reviewers knew the relationship between the risk of a neoplastic polyp and the polyp characteristics, based on two previous studies21,22.

Step 2: Image analysis by DL-DSS

A temporal external validation of the DL-DSS was done with the 98 patients in the test set. Unlike the human reviewers’ 5-point confidence scale, the DL-DSS outputs a decimal probability value ranging from 0 to 1 (0: definitely a nonneoplastic polyp; 1: definitely a neoplastic polyp). As multiple images were included for one patient, the average of the image-level probability values was used as the patient-level probability value (Fig. 4). Using the patient-level probability value of each patient, we performed a ROC curve analysis and calculated the sensitivity, specificity, and accuracy of the DL-DSS.
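The patient-level aggregation is a plain average of the image-level outputs. The sketch below also returns the image count and standard deviation, since those values were shown to the reviewers in Step 3; whether the study used the sample or population standard deviation is not stated, so the sample form here is an assumption, as are the function and key names.

```python
from statistics import mean, stdev


def patient_level_summary(image_probabilities, cutoff=0.5):
    """Aggregate image-level probabilities into one patient-level result."""
    n_images = len(image_probabilities)
    probability = mean(image_probabilities)
    # Assumption: sample standard deviation; undefined for a single image.
    spread = stdev(image_probabilities) if n_images > 1 else 0.0
    return {
        "n_images": n_images,
        "probability": probability,
        "sd": spread,
        "neoplastic": probability > cutoff,
    }


# Hypothetical image-level outputs for two patients.
patient_a = patient_level_summary([0.9, 0.7, 0.8])
patient_b = patient_level_summary([0.2])
```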

Step 3: Image analysis by three human reviewers with the aid of the DL-DSS

To evaluate the added value of DL-DSS-aided diagnosis, a second review was conducted by the three reviewers 4 months after the first human review. They were still blinded to the histologic diagnoses. The following DL-DSS analysis data for each patient in the test dataset were provided to the reviewers: the number of cropped images analyzed by the DL-DSS, the patient-level probability value, and the standard deviation of the image-level probability values. The US images and the 5-point confidence scores that each reviewer had previously assigned were also provided. In addition, we presented the performance of the DL-DSS on the development dataset by providing the ROC curve, sensitivity, specificity, and accuracy (with a cutoff probability value of 0.5). Finally, considering all of this information, including the DL-DSS performance data and the DL-DSS analysis data, the reviewers rerated each patient on the 5-point confidence scale.

Statistical analysis

The population characteristics, such as the man to woman ratio and the proportion of nonneoplastic and neoplastic polyps, were compared between the development set and the test set using Pearson’s Chi-square test. Patient age and polyp size were compared using an independent t test. These methods were also used to evaluate differences in characteristics between the nonneoplastic polyp group and the neoplastic polyp group, in both the development set and the test set.

In step 1, polyp characteristics were compared between nonneoplastic and neoplastic polyps using Pearson’s Chi-square test and Fisher’s exact test. The sizes of the polyps were compared using an independent t test. A logistic regression analysis was performed to identify meaningful predictors of a neoplastic polyp. After the univariate analysis of the US findings, variables with p values less than 0.05 were chosen for the multivariate analysis. Among the three reviewers, interobserver agreement was calculated with the ICC for each US finding and for the confidence scale. ICC values were classified into three levels of agreement: 0.59 or less as poor agreement, 0.60–0.79 as moderate agreement, and 0.80–1.00 as good agreement.
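The three agreement levels translate into a simple lookup. In this sketch the function name is ours, and values falling between 0.59 and 0.60 are treated as poor, which is an assumption because the stated bands leave that gap undefined.

```python
def icc_agreement_level(icc):
    """Map an ICC value to the agreement level used in this study."""
    if icc > 1.0:
        raise ValueError("ICC cannot exceed 1.0")
    if icc >= 0.80:
        return "good"
    if icc >= 0.60:
        return "moderate"
    # Assumption: anything below 0.60 counts as poor agreement.
    return "poor"


# ICC values quoted in the Results section.
before_aid = icc_agreement_level(0.87)
after_aid = icc_agreement_level(0.93)
shape_agreement = icc_agreement_level(0.65)
```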

In steps 1 and 3, the diagnostic performance of the reviewers was evaluated by ROC curve analysis using the 5-point confidence scale. Sensitivity, specificity, accuracy, and F1 score were calculated with a confidence scale cutoff (>3). In step 2, the diagnostic performance of the DL-DSS was evaluated by ROC curve analysis using the patient-level probability value. Sensitivity, specificity, accuracy, and F1 score were calculated with a probability cutoff (>0.5). AUCs were compared using a pairwise comparison of ROC curves.
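The study computed these quantities in SPSS and MedCalc. As a rough illustration of what they mean for an ordinal 5-point scale, the sketch below computes an empirical AUC as the probability that a neoplastic case receives a higher score than a nonneoplastic one (ties counted as one half), plus the cutoff-based metrics. The scores are invented, and this is not a reimplementation of either software package.

```python
def auc_from_scores(scores, labels):
    """Empirical AUC: P(score_pos > score_neg) + 0.5 * P(score_pos == score_neg)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def metrics_at_cutoff(scores, labels, cutoff):
    """Sensitivity, specificity, accuracy, and F1 for the rule score > cutoff."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s > cutoff)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s <= cutoff)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s > cutoff)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s <= cutoff)
    f1_denominator = 2 * tp + fp + fn
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(labels),
        "f1": 2 * tp / f1_denominator if f1_denominator else 0.0,
    }


# Hypothetical 5-point confidence scores (1 = neoplastic, 0 = nonneoplastic).
toy_scores = [1, 2, 3, 4, 2, 4, 5, 5]
toy_labels = [0, 0, 0, 0, 1, 1, 1, 1]
toy_auc = auc_from_scores(toy_scores, toy_labels)
toy_metrics = metrics_at_cutoff(toy_scores, toy_labels, cutoff=3)
```

The same two functions apply to the DL-DSS output by passing patient-level probabilities as `scores` and 0.5 as the cutoff.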

All of the analyses were performed with software (SPSS version 25.0, IBM, Armonk, NY; and MedCalc version 18.2, MedCalc Software, Ostend, Belgium). For all of the tests, a P value less than 0.05 was considered to indicate statistical significance.

Data availability

The datasets generated during the current study are not publicly available because our institutional review board prohibits the publication of patients’ personal medical records.

References

Jørgensen, T. & Jensen, K. Polyps in the gallbladder. A prevalence study. Scandinavian Journal of Gastroenterology 25, 281–286 (1990).

Pandey, M., Khatri, A. K., Sood, B. P., Shukla, R. C. & Shukla, V. K. Cholecystosonographic evaluation of the prevalence of gallbladder diseases a university hospital experience. Clinical Imaging 20, 269–272 (1996).

Chen, C.-Y., Lu, C.-L., Chang, F.-Y. & Lee, S.-D. Risk factors for gallbladder polyps in the chinese population. American Journal of Gastroenterology 92, 2066–2068 (1997).

Okamoto, M. et al. Ultrasonographic evidence of association of polyps and stones with gallbladder cancer. The American Journal Of Gastroenterology 94, 446–450 (1999).

Park, J. K. et al. Management strategies for gallbladder polyps: Is it possible to predict malignant gallbladder polyps? Gut and Liver 2, 88–94 (2008).

Lin, W. R. et al. Prevalence of and risk factors for gallbladder polyps detected by ultrasonography among healthy chinese: Analysis of 34 669 cases. Journal of Gastroenterology and Hepatology 23, 965–969 (2008).

Kratzer, W. et al. Ultrasonographically detected gallbladder polyps: A reason for concern? A seven-year follow-up study. BMC Gastroenterology 8, 41 (2008).

Mellnick, V. M. et al. Polypoid lesions of the gallbladder: Disease spectrum with pathologic correlation. Radiographics 35, 387–399 (2015).

Wiles, R. et al. Management and follow-up of gallbladder polyps: Joint guidelines between the european society of gastrointestinal and abdominal radiology (esgar), european association for endoscopic surgery and other interventional techniques (eaes), international society of digestive surgery - european federation (efisds) and european society of gastrointestinal endoscopy (esge). European Radiology 27, 3856–3866 (2017).

Babu, B. I., Dennison, A. R. & Garcea, G. Management and diagnosis of gallbladder polyps: A systematic review. Langenbeck’s Archives of Surgery 400, 455–462 (2015).

Bhatt, N. R., Gillis, A., Smoothey, C. O., Awan, F. N. & Ridgway, P. F. Evidence based management of polyps of the gall bladder: A systematic review of the risk factors of malignancy. The Surgeon 14, 278–286 (2016).

Lee, J. S. et al. Diagnostic accuracy of transabdominal high-resolution us for staging gallbladder cancer and differential diagnosis of neoplastic polyps compared with eus. European Radiology 27, 3097–3103 (2017).

Choy, G. et al. Current applications and future impact of machine learning in radiology. Radiology 288, 318–328 (2018).

Suzuki, K. Overview of deep learning in medical imaging. Radiological Physics and Technology 10, 257–273 (2017).

Huang, Q., Zhang, F. & Li, X. Machine learning in ultrasound computer-aided diagnostic systems: A survey. Biomed Research International 2018, 5137904 (2018).

Nam, J. G. et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 290, 218–228 (2018).

Bien, N. et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of mrnet. PLoS Medicine 15, e1002699 (2018).

Ueda, D. et al. Deep learning for mr angiography: Automated detection of cerebral aneurysms. Radiology 290, 187–194 (2018).

Yeh, C.-N., Jan, Y.-Y., Chao, T.-C. & Chen, M.-F. Laparoscopic cholecystectomy for polypoid lesions of the gallbladder: A clinicopathologic study. Surgical Laparoscopy Endoscopy & Percutaneous Techniques 11, 176–181 (2001).

Cha, B. H. et al. Pre-operative factors that can predict neoplastic polypoid lesions of the gallbladder. World Journal of Gastroenterology: WJG 17, 2216–2222 (2011).

Choi, T. W. et al. Risk stratification of gallbladder polyps larger than 10 mm using high-resolution ultrasonography and texture analysis. European Radiology 28, 196–205 (2017).

Kim, J. H. et al. High-resolution sonography for distinguishing neoplastic gallbladder polyps and staging gallbladder cancer. American Journal of Roentgenology 204, W150–W159 (2015).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA. IEEE. (27-30 June 2016).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering 22, 1345–1359 (2010).

Akilan, T., Wu, Q. J., Safaei, A. & Jiang, W. A late fusion approach for harnessing multi-cnn model high-level features. The IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada. IEEE. (5-8 Oct. 2017).

Acknowledgements

This research was partially supported by the Interdisciplinary Research Initiatives Program from College of Engineering and College of Medicine, Seoul National University (No. 800-20170166) and by grant no 03-2019-0110 from the SNUH Research Fund.

Author information

Contributions

Y.J. and J.H.K. designed the study and wrote the main manuscript text. H.D.C. designed the study and coded the DL-DSS model. J.H.K. and H.D.C. contributed equally to this work. S.J.P., J.S.B., I.J. and J.K.H. collected the data and reviewed the images. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jeong, Y., Kim, J.H., Chae, HD. et al. Deep learning-based decision support system for the diagnosis of neoplastic gallbladder polyps on ultrasonography: Preliminary results. Sci Rep 10, 7700 (2020). https://doi.org/10.1038/s41598-020-64205-y

DOI: https://doi.org/10.1038/s41598-020-64205-y
