Abstract

The widespread applications of high-throughput sequencing technology have produced a large number of publicly available gene expression datasets. However, due to the gene expression datasets have the characteristics of small sample size, high dimensionality and high noise, the application of biostatistics and machine learning methods to analyze gene expression data is a challenging task, such as the low reproducibility of important biomarkers in different studies. Meta-analysis is an effective approach to deal with these problems, but the current methods have some limitations. In this paper, we propose the meta-analysis based on three nonconvex regularization methods, which are L1/2 regularization (meta-Half), Minimax Concave Penalty regularization (meta-MCP) and Smoothly Clipped Absolute Deviation regularization (meta-SCAD). The three nonconvex regularization methods are effective approaches for variable selection developed in recent years. Through the hierarchical decomposition of coefficients, our methods not only maintain the flexibility of variable selection and improve the efficiency of selecting important biomarkers, but also summarize and synthesize scientific evidence from multiple studies to consider the relationship between different datasets. We give the efficient algorithms and the theoretical property for our methods. Furthermore, we apply our methods to the simulation data and three publicly available lung cancer gene expression datasets, and compare the performance with state-of-the-art methods. Our methods have good performance in simulation studies, and the analysis results on the three publicly available lung cancer gene expression datasets are clinically meaningful. Our methods can also be extended to other areas where datasets are heterogeneous.

Similar content being viewed by others

Introduction

With the rapid development of biotechnology and its wide applications, many database repositories of high-throughput gene expression data have been created and published. For example, Gene Expression Omnibus (GEO) currently has stored more than 2.76 million samples over 105,000 studies1. The gene expression datasets have been widely used in the prediction and diagnosis of diseases, and their application prospects are increasingly promising.

It is desirable to consider variable selection into the analysis of gene expression data due to its small sample size and high dimensionality. Variable selection not only enhances generalization by reducing overfitting, but also enhances interpretability by simplifying the model, i.e., identifying important biomarkers associated with the disease and helping to find the best solution for patients in the treatment process. For a single dataset, there exist many variable selection methods, such as Least Absolute Shrinkage and Selection Operator (LASSO)2, L1/2 regularization3,4,5, Minimax Concave Penalty (MCP)6, Smoothly Clipped Absolute Deviation (SCAD)7,8,9, Group LASSO10, elastic net11, Hard Ridge12, SCAD-L213, Complex Harmonic Regularization (CHR) penalty14 and so on. These methods are effective in discovering important biomarkers in a single dataset. However, it is well known that the analysis of gene expression data is still a challenging task due to high noise and low reproducibility of important biomarkers. There are two main reasons for this challenging task. One is that the decisive biomarkers that regulate the phenotypes are usually very sparse compared to the total number of biomarkers in the entire genome, and their effects are usually weak, therefore, the results of individual studies are not remarkable and difficult to reproduce. The other is that the different experimental datasets may come from inconsistent experimental conditions, sample preparation methods, measurement sensitivities or precision, and also from different study groups, biological sample selections. Therefore, the important genes in some studies may be not remarkable in other studies, which we call the data have the heterogeneity. The data heterogeneity reveals the complexity of gene expression data and significantly obstructs gene expression technology in clinical applications.

Since many genomic databases are publicly available, meta-analysis is an effective approach to address the heterogeneity among different datasets and make full use of different datasets. Meta-analysis is a significant technique for clinical diagnosis, which plays an important role in summarizing and synthesizing scientific evidence from multiple studies. Classic meta-analysis methods, which aggregate the summary statistics from individual datasets to obtain total scores and then evaluate them based on statistical significance of all studies, including p values15, ranks16,17, effect sizes18,19,20,21. Li and Tseng22 apply Fisher’s method combining p values by summation of log-transformed p values, and the method increases the biological interpretation of meta-analysis results. Similar strategies can be applied to combine effect sizes of Random Effects Model (REM) or Fixed Effects Model (FEM) from individual studies. A comprehensive review of these methods is given in the researches23,24,25. These methods perform well in identifying differentially expressed genes, but they ignore the correlations between the covariates (genes). There are some approaches that attempt to model the preprocessed microarray datasets using latent variable-based models26,27,28,29,30. In general, latent variables are not observable in the data, but can be inferred from other observed variables. Huo et al.31 use latent variable to quantify homogeneous and heterogeneous differentially expressed signals across studies to detect genes that are differentially expressed in only a subset of the combined studies. Rashid et al.32 utilize a penalized Generalized Linear Mixed Model based on latent variable to select gene signatures and address between-study heterogeneity. These methods provide the potential to pool information across genes, making it possible to more clearly infer which genes are differentially expressed. Compared with the previous classical meta-analysis methods, these methods are more complex, which limit their application in practice. Recently, Zhang et al.33 set different constant terms for multiple studies in the logistic regression model to measure the heterogeneity of the samples. This method assumes that the same variables in multiple studies should make the same contribution to their corresponding responses. In other words, this method conducts variable selection in an ‘all-in-or-all-out’ fashion. In this paper, we consider that some important genes in some studies are likely to be ineffective in other studies, and it is important to allow such flexibility.

Some researchers propose the bi-level selection methods which consider the coefficients of each variable (gene) from all datasets as a group, and simultaneously shrink these groups and the variables within these groups by the penalty function to study the correlation between variables and identify important genes. Existing bi-level selection methods include composite MCP34, group Bridge35 and group exponential LASSO36, meta-SVM37 etc. These methods of the aforementioned references generally treat the coefficients of one gene from different datasets as a group, and conduct two levels selection. The first is to determine whether a particular gene is related to the response variable in all datasets, and the second is to determine which dataset contains the identified gene related to the response variable. These methods consider both the heterogeneity and the correlation between the datasets. However, for M independent datasets \({\{\left({{\boldsymbol{X}}}_{m},{{\boldsymbol{y}}}_{m}\right)\}}_{m=1}^{M}\), each of which contains nm samples and p variables, these methods consider to solve the problem which has the \(\mathop{\sum }\limits_{m=1}^{M}{n}_{m}\times Mp\) dimensional measurement matrix \(\widetilde{{\boldsymbol{X}}}=diag({{\boldsymbol{X}}}_{1},{{\boldsymbol{X}}}_{2},\cdots \ ,{{\boldsymbol{X}}}_{M})\), the \(\mathop{\sum }\limits_{m=1}^{M}{n}_{m}\) dimensional response \(\widetilde{{\boldsymbol{y}}}={\left({{\boldsymbol{y}}}_{1}^{T},{{\boldsymbol{y}}}_{2}^{T},\cdots ,{{\boldsymbol{y}}}_{M}^{T}\right)}^{T}\) and the Mp dimensional unknown coefficients \({\boldsymbol{\beta }}={\left({{\boldsymbol{\beta }}}_{1}^{T},{{\boldsymbol{\beta }}}_{2}^{T},\cdots ,{{\boldsymbol{\beta }}}_{M}^{T}\right)}^{T}\), where the superscript T represents the transpose of the vector. Since the gene expression data has the characteristics of small sample size and high-dimensional, these methods greatly increase the variable dimension, so it may increase the difficulty of solving the problem.

Zhou and Zhu38 propose a new group variable selection method “hierarchical LASSO” that can be used for gene-set selection. The hierarchical LASSO not only removes unimportant groups effectively, but also maintains the flexibility of selecting variables within the group. They also showed that the new method offers the potential for achieving the theoretical “oracle” property. Li et al.39 propose meta-LASSO for variable selection with high-dimensional meta-analyzed data. The meta-LASSO not only improves the ability to identify important genes with the strength of multiple datasets, but also maintains the flexibility of selection between datasets to consider the data heterogeneity.

For many practical applications, LASSO often cannot find the most sparse solutions (this is extremely important for model selection), and it is inefficient when the errors in data have heavy tail distribution2. Zhao and Yu40 give the Strong Irrepresentable Condition for the model selection consistency of LASSO, and show that to induce sparsity, LASSO shrinks the estimates for the nonzero coefficients too heavily. When Strong Irrepresentable Condition fails, the irrelevant covariates are correlated with the relevant covariates enough to be picked up by LASSO to compensate the over-shrinkage of the nonzero parameters. Therefore, to get universal consistency, some nonconvex regularization methods have been proposed in recent years, such as L1/2 penalty, Minimax Concave Penalty (MCP) and Smoothly Clipped Absolute Deviation (SCAD) penalty etc. These methods achieve both selection consistency and nearly unbiasedness, which make them widely applied in signal/image processing, statistics and machine learning, such as biological feature selection14,41,42,43,44, compressed sensing and low rank matrix completion8,45,46,47,48, sparse signals separation and image inpainting49,50, and dictionary learning51 etc.

In this paper, we propose the meta-analysis based on three nonconvex regularization methods (L1/2 regularization, MCP regularization and SCAD regularization), dubbed as meta-Half, meta-MCP and meta-SCAD respectively. Our methods combine the advantages of meta-analysis and the nonconvex regularization methods. We propose the efficient algorithms which apply the nonconvex iterative thresholding algorithms based on approximate message passing (Half-AMP, MCP-AMP and SCAD-AMP)52,53 to solve our models. Furthermore, we apply our methods to the simulation data and three publicly available lung cancer gene expression datasets, and compare the performance of our methods with other four state-of-the-art methods, which are meta-LASSO, composite MCP, group Bridge and group exponential LASSO. The experiments results show that our methods have favorable performance.

Methodology

In this section, we study the meta-analysis based on the three nonconvex regularization methods (L1/2 regularization, MCP regularization and SCAD regularization).

Consider M independent datasets \(D={\{\left({{\boldsymbol{X}}}_{m},{{\boldsymbol{y}}}_{m}\right)\}}_{m=1}^{M}\), each of which contains nm samples. Denote \({{\boldsymbol{X}}}_{m}={({{\boldsymbol{x}}}_{m1},{{\boldsymbol{x}}}_{m2},\cdots ,{{\boldsymbol{x}}}_{m,{n}_{m}})}^{T}\) and \({{\boldsymbol{y}}}_{m}={({y}_{m1},{y}_{m2},\cdots ,{y}_{m,{n}_{m}})}^{T}\), where the superscript T represents the transpose of the vector, \({{\boldsymbol{x}}}_{mi}={({x}_{mi,1},{x}_{mi,2},\cdots ,{x}_{mi,p})}^{T}\) (i = 1, 2, ⋯, nm) is ith sample in the mth dataset which contains p variables (genes), and ymi is the response variable, in this paper, we consider the response variable is a binary phenotype (for example, if the ith sample of the mth dataset is a disease patient, ymi is 1, and 0 otherwise). The p genes are assumed common in all datasets. We assume the conditional probability that ymi takes value 1 given the gene expression vector xmi follows the logistic regression model

where βm0 is an intercept and \({{\boldsymbol{\beta }}}_{m}={\left({\beta }_{m1},\cdots ,{\beta }_{mp}\right)}^{T}\) is the unknown coefficients for the mth data. Due to heterogeneity between datasets, we allow βm0 and βm in (1) to vary with m. We hope to find the true nonzero components of βm for each dataset.

Compared with the variable selection of single dataset model, the variable selection of the M datasets models are distinguishing and peculiar. On the one hand, each variable has M coefficients, which belong to the same explanatory variable. Therefore, there is some correlation or similarity, which makes it impossible to make coefficient estimation and variable selection separately, otherwise this correlation will be ignored. On the other hand, the significance of variables is not identical, so we cannot simply synthesize estimation. The penalization methods with meta-analysis make full use of this particularity to study data differences. These methods conduct variable selection by maximizing,

where ℓm(βm0, βm) is the log-likelihood for the mth dataset and has the following form

P is a penalty function and λ is the regularization parameter that controls the complexity of the machine.

In this paper, we focus on the three nonconvex regularization methods (L1/2 regularization, MCP regularization and SCAD regularization), and through the hierarchical decomposition of coefficients that maintain the flexibility of variable selection as well incorporate the relationship between different datasets. We consider the following hierarchical reparameterization:

The parameter hj is the effect of the jth gene, and the different m for ξmj reflects the different effects of the jth gene among M datasets. If hj = 0, then \({{\boldsymbol{\beta }}}_{j}={\left({\beta }_{1j},{\beta }_{2j},\cdots ,{\beta }_{Mj}\right)}^{T}={\bf{0}}\), this indicates that the jth gene is not significant in all M datasets. If hj ≠ 0, then whether the βmj is equal to 0 depends on whether ξmj is equal to 0. Since the M datasets may have heterogeneity (the M datasets may come from inconsistent experimental conditions, sample preparation methods, measurement sensitivities or precision, and also from different study groups, biological sample selections.), then one gene is important in some datasets may be not remarkable in other datasets. Through ξmj contral βmj to keep the selection flexibility among M datasets. If the M datasets have no heterogeneity, then hj = βmj for m = 1, ⋯, M defined in (2) and ξmj = 1 for all j and m. With reparameterization (3), we propose a meta-analysis method based on nonconvex regularization. Our method selects important genes by solving

where ℓm(βm0, h, ξm) is the likelihood function and has the following form

\({\boldsymbol{h}}={\left({h}_{1},{h}_{2},\cdots ,{h}_{p}\right)}^{T}\), \({{\boldsymbol{\xi }}}_{m}={({\xi }_{m1},{\xi }_{m1},\cdots ,{\xi }_{mp})}^{T}\), \({{\boldsymbol{\beta }}}_{0}={({\beta }_{10},{\beta }_{20},\cdots ,{\beta }_{M0})}^{T}\), \({\boldsymbol{\xi }}={\left({{\boldsymbol{\xi }}}_{1}^{T},{{\boldsymbol{\xi }}}_{2}^{T},\cdots ,{{\boldsymbol{\xi }}}_{M}^{T}\right)}^{T}\), and h ⋅ ξm means the element-wise product. P( ⋅ ) is a nonconvex penalty function. In this paper, considering the three nonconvex penalty function, the L1/2 penalty, the MCP penalty and the SCAD penalty. The L1/2 penalty function is \({P}_{{L}_{1/2}}(x;\lambda )=\lambda \parallel x{\parallel }_{1/2}^{1/2}=\mathop{\sum }\limits_{i=1}^{p}| {x}_{i}{| }^{1/2}\). MCP penalty function has the following form

where \({\left(1-\frac{s}{\gamma \lambda }\right)}_{+}=\max \left\{1-\frac{s}{\gamma \lambda },0\right\}\). The SCAD penalty function has the following form

we call these three nonconvex penalties for the methods (4) as “meta-Half”, “meta-MCP” and “meta-SCAD”, respectively.

Algorithm

In this section, we give the efficient algorithms (Algorithm 1) to solve our models. Note that we can assume that the mean of the predictor variable is zero (through the location transformation). (4) can be decomposed into two nonconvex problems, each of which views h or ξ as fixed. We propose to iteratively solve β0, h, and ξ in (4). First, we fix β0 and ξ in (4) to maximize h. We next fix β0 and h to maximize ξ. Finally, we maximize over β0 by fixing h and ξ. Iterate these steps until the algorithm converges. Since at each step, the value of the objective function (4) decreases, the solution is guaranteed to converge. Specifically, the algorithm is described as follows

Step 3 and step 4 are general nonconvex regularization problem. We52,53 propose the nonconvex iterative thresholding algorithms based on approximate message passing (Half-AMP, MCP-AMP and SCAD-AMP) to solve the nonconvex regularization problem, and verified the effectiveness of the algorithms through theoretical analysis and experiment. In this paper, for the two problems in step 3 and step 4, we apply the Half-AMP algorithm, the MCP-AMP algorithm and the SCAD-AMP algorithm to solve the meta-Half, meta-MCP and meta-SCAD respectively.

In order to solve the above two problems in step 3 and step 4, we first consider the solution of the traditional logistic regression model. Here we omit the intercept term (in fact, just rewrite the input variable as \({\widetilde{{\boldsymbol{x}}}}_{i}={\left(1,{{\boldsymbol{x}}}_{i}^{T}\right)}^{T}\)), the logistic regression can be expressed as the following optimization problem

Differentiating ℓ(β) with respect to β, we can get

where \(\mu \left({{\boldsymbol{x}}}_{i};{\boldsymbol{\beta }}\right)=\frac{\exp \left({{\boldsymbol{x}}}_{i}^{{\rm{T}}}{\boldsymbol{\beta }}\right)}{1+\exp \left({{\boldsymbol{x}}}_{i}^{{\rm{T}}}{\boldsymbol{\beta }}\right)}.\) To find the optimal solution \(\widehat{{\boldsymbol{\beta }}}\) of equation (6), we let \(\frac{\partial \ell ({\boldsymbol{\beta }})}{\partial {\boldsymbol{\beta }}}=0\), and use the Newton-Raphson iteration algorithm which requires computing the second derivative, the Hessian matrix has the following form

Hence, given the current estimated value βold of β, the new estimated value βnew is updated as following

where the value of the derivative (and second derivative) is calculated at the point βold. The equation (9) can be expressed by matrix form. Let X be a N × P matrix, where the i-th row is xi; W is a diagonal matrix, and the elements on the diagonal

Let \({\boldsymbol{y}}={\left({y}_{1},{y}_{2},\cdots ,{y}_{N}\right)}^{{\rm{T}}}\), \({\boldsymbol{\mu }}={\left(\mu \left({{\boldsymbol{x}}}_{1};{\boldsymbol{\beta }}\right),\mu \left({{\boldsymbol{x}}}_{2};{\boldsymbol{\beta }}\right),\cdots ,\mu \left({{\boldsymbol{x}}}_{N};{\boldsymbol{\beta }}\right)\right)}^{{\rm{T}}},\) then the formulas (7) and (8) can be expressed as

Therefore, Newton-Raphson iteration (9) can be expressed as

where

It can be seen that each Newton-Raphson iteration actually solves the weighted least squares problem as follows

Based on the solution process of the traditional logistic regression model, a similar iterative algorithm can be used to solve the logistic regression with nonconvex penalties problem, and only a slight deformation of the formula (14) is needed to obtain the iterative algorithm.

where P( ⋅ ; ⋅ ) is the nonconvex penalty function. It is easy to see that the minimization problem (15) is equivalent to the maximization problem (2). The minimization problem (15) can be solved by the nonconvex iterative thresholding algorithms based on approximate message passing52,53 (which are based on linear regression y = Xβ, y ∈ RN, X ∈ RN×p). The algorithms are according to the following iteration:

where \(\delta =\frac{p}{N}\) represents a measure of indeterminacy of the measurement system, in this paper, considering the case δ is fixed for N → ∞. For a vector u = (u1, u2, . . . , uN), \(\langle u\rangle ={\sum }_{i=1}^{N}{u}_{i}\)/N, \(\eta {\prime} (x)=\frac{\partial }{\partial x}\eta (x)\). η is the thresholding function, in this paper, η represents the Half thresholding function, the MCP thresholding function and the SCAD thresholding function, respectively. The Half thresholding function is

where

The MCP thresholding function is

where

where sign(u) is sign function,

The SCAD thresholding function is

Theoretical Properties

In this section, we study the theoretical properties of the meta-Half method. The meta-Half has the following uniform form

there are two tuning parameters λh and λξ in (19), we first show that the two tuning parameters can be simplified into one. Specifically, let λ = λhλξ, we can show that (19) is equivalent to

Lemma 1.

If \(\left({\widetilde{{\boldsymbol{\beta }}}}_{0},\widetilde{{\boldsymbol{h}}},\widetilde{{\boldsymbol{\xi }}}\right)\)is a local maximizer of (19). Then there exists a local maximizer \(({\hat{{\boldsymbol{\beta }}}}_{0},\hat{{\boldsymbol{h}}},\hat{{\boldsymbol{\xi }}})\) of (20) such that \({\widetilde{h}}_{j}{\widetilde{\xi }}_{mj}={ {\hat{h}} }_{j}{\widehat{\xi }}_{mj}\) and \({\widetilde{{\boldsymbol{\beta }}}}_{0}={\widehat{{\boldsymbol{\beta }}}}_{0}\). Vice versa.

The proof is in the Supplementary. This lemma indicates that although (19) and (20) may provide different hj and ξmj, the final fitted models from them are the same. Therefore, we only need to tune one parameter λ = λhλξ other than tune λh and λξ separately in practice.

We then show that (20) can also be written in an equivalent form using the original regression coefficients βmj.

Lemma 2.

Suppose \(\left(\widehat{{\boldsymbol{h}}},\widehat{{\boldsymbol{\xi }}}\right)\) is a local maximizer of (20), for j = 1, 2, ⋯, p, let \({\widehat{\beta }}_{mj}={ {\hat{h}} }_{j}{\widehat{\xi }}_{mj}\), \({\widehat{{\boldsymbol{\beta }}}}_{j}={({\widehat{\beta }}_{1j},{\widehat{\beta }}_{2j},\cdots ,{\widehat{\beta }}_{Mj})}^{T}\) and \({\widehat{{\boldsymbol{\xi }}}}_{j}={({\widehat{\xi }}_{1j},{\widehat{\xi }}_{2j},\cdots ,{\widehat{\xi }}_{Mj})}^{T}\),

(a) If \({ {\hat{h}} }_{j}=0\), then \({\widehat{{\boldsymbol{\beta }}}}_{j}={\bf{0}}\);

(b) If \({ {\hat{h}} }_{j}\ne 0\), then \({\widehat{{\boldsymbol{\beta }}}}_{j}\ne {\bf{0}}\) and \({ {\hat{h}} }_{j}=\lambda \parallel {\widehat{{\boldsymbol{\beta }}}}_{j}{\parallel }_{1/2}^{1/2}\), \({\widehat{{\boldsymbol{\xi }}}}_{j}=\frac{{\widehat{{\boldsymbol{\beta }}}}_{j}}{\lambda \parallel {\widehat{{\boldsymbol{\beta }}}}_{j}{\parallel }_{1/2}^{1/2}}\).

The proof is in the Supplementary.

Theorem 1.

If \(({\hat{{\boldsymbol{\beta }}}}_{0},\hat{{\boldsymbol{h}}},\hat{{\boldsymbol{\xi }}})\) is a local maximizer of (20), then \(\widehat{{\boldsymbol{\beta }}}\) with \({\widehat{\beta }}_{mj}={ {\hat{h}} }_{j}{\widehat{\xi }}_{mj}\), is a local maximizer of

where \({\boldsymbol{\beta }}={({\beta }_{10},{\beta }_{11},\cdots ,{\beta }_{Mp})}^{T}\). On the other hand, if \(\widehat{{\boldsymbol{\beta }}}\) is a solution of (21), then \(({\hat{{\boldsymbol{\beta }}}}_{0},\hat{{\boldsymbol{h}}},\hat{{\boldsymbol{\xi }}})\) is a solution of (20), where \({\widehat{{\boldsymbol{\beta }}}}_{0}={\left({\widehat{\beta }}_{10},{\widehat{\beta }}_{20},\cdots ,{\widehat{\beta }}_{M0}\right)}^{T}\), \(\parallel {\widehat{{\boldsymbol{\beta }}}}_{j}{\parallel }_{1/2}^{1/2}=\mathop{\sum }\limits_{m=1}^{M}| {\widehat{\beta }}_{mj}{| }^{\frac{1}{2}}\),

where \(\widehat{{\boldsymbol{h}}}=({ {\hat{h}} }_{1},{ {\hat{h}} }_{2},\cdots \ ,{ {\hat{h}} }_{p})\), \(\widehat{{\boldsymbol{\xi }}}={({\widehat{\xi }}_{11},{\widehat{\xi }}_{12},\cdots ,{\widehat{\xi }}_{Mp})}^{T}\), \({\widehat{{\boldsymbol{\beta }}}}_{j}={({\widehat{\beta }}_{1j},{\widehat{\beta }}_{2j},\cdots ,{\widehat{\beta }}_{Mj})}^{T}\) and \({\widehat{{\boldsymbol{\xi }}}}_{j}={({\widehat{\xi }}_{1j},{\widehat{\xi }}_{2j},\cdots ,{\widehat{\xi }}_{Mj})}^{T}\).

The proof is in the Supplementary. If we regard one gene’s effects among all datasets as a “group”, then (21) imposes an L1 penalty on each group and a square root penalty on individual elements within a group. The following theorem shows the theoretical properties of the meta-Half.

Theorem 2.

The meta-Half method possesses sparsity, unbiasedness and oracle properties.

The proof is in the Supplementary.

Experiments

In this section, we analyze the performance of our methods (meta-Half, meta-MCP and meta-SCAD) by simulation and real-data analysis. We compare these three methods with other four methods, which are meta-LASSO, composite MCP, group Bridge and group exponential LASSO. The codes of our methods are available at GitHub (https://github.com/zhhui019/meta-nonconvex). The meta-LASSO is implemented by Li et al.39. The composite MCP, the group Bridge and the group exponential LASSO are implemented by Patrick Breheny and Yaohui Zeng’s R package “grpreg”.

Simulations

Simulation studies are performed to compare the performance of the proposed meta-Half, meta-MCP and meta SCAD with the meta-LASSO, composite MCP, group Bridge and group exponential LASSO.

Generate simulated data

In this simulation, we use the normal distribution to generate the gene expression xmi (m = 1, 2, ⋯, M; i = 1, 2, ⋯, nm) with M = 10 datasets, each dataset contains nm = 50 samples, and each sample contains p = 1, 000 genes. The response ymi is generated from a logistic model

where \({{\boldsymbol{\beta }}}_{m}^{\ast }=({\beta }_{m1}^{\ast },{\beta }_{m2}^{\ast },\cdots \ ,{\beta }_{mp}^{\ast })\) and we suppose that the intercept term \({\beta }_{m0}^{\ast }=0.\) We let \({\beta }_{mj}^{\ast }={\alpha }_{mj}{\theta }_{mj}\) simulate possible data heterogeneity, for m = 1, 2 ⋯, M; j = 1, 2 ⋯, 10, αmj are generated from N(3, 0. 52) and θmj are generated from Bernoulli(π0), for m = 1, 2 ⋯, M; j = 11, 12 ⋯, 1000, let \({\beta }_{mj}^{\ast }=0\). This means that the first 10 genes of each dataset are important to the response with probability π0. The value αmj demonstrates whether the jth gene is important in the mth dataset, and the value θmj demonstrates different levels of heterogeneity among different datasets, in this simulation, considering π0 = 0.9, 0.5, 0.2 to represent the low, medium and high heterogeneity. We run 30 replicates and report the average measurement.

For the all methods, the tuning parameters are selected by minimizing the BIC:

where \({\widehat{{\boldsymbol{\beta }}}}_{m,\lambda }\) is the estimated coefficients in the mth dataset, λ is the tuning parameter, Sm is the number of non-zero elements of \({\widehat{{\boldsymbol{\beta }}}}_{m,\lambda }\), \({\ell }_{m}({\widehat{{\boldsymbol{\beta }}}}_{m,\lambda })\) is the log-likelihood for the mth dataset and has the form (5).

Analysis of simulation

The variable selection performance of the seven methods is evaluated using the selection sensitivity, specificity and accuracy of coefficient β. The sensitivity is the proportion of non-zero \({\beta }_{mj}^{\ast }\)’s that are correctly estimated as non-zero, the specificity is the proportion of zero \({\beta }_{mj}^{\ast }\)’s that are correctly estimated as zero and the accuracy is the proportion of \({\beta }_{mj}^{\ast }\)’s that are correctly estimated.

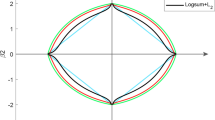

The simulation results are summarized in Table 1 (The variable selection performance of the seven methods are evaluated using the selection sensitivity, specificity and accuracy of coefficient β). Table 1 shows that the specificity and accuracy of the coefficients β of all seven methods are similar. The sensitivity trend of coefficient β for all seven methods with the varying levels of heterogeneity is shown in Fig. 1. Figure 1 shows that the sensitivity (the proportion of non-zero \({\beta }_{mj}^{\ast }\)’s that are correctly estimated as non-zero) of the composite MCP, the group Bridge and the group exponential LASSO dramatically decreases as π0 increases, while the sensitivity of meta-Half, meta-MCP, meta-SCAD and meta-LASSO remains above 0.9 for π0 = 0.2, 0.5, 0.9. When π = 0.2, the sensitivity of meta-Half, meta-MCP and meta-SCAD are 0.9693, 0.9651 and 0.9738, respectively, which are significantly higher than other methods. This result shows that our proposed meta-Half, meta-MCP and meta-SCAD have the superior performance when data heterogeneity is strong (π0 is small). With the weakening of data heterogeneity(π = 0.5, 0.9), the performance of the four meta methods (meta-Half, meta-MCP, meta-SCAD and meta-LASSO) tends to be comparable. The specificity and accuracy of the coefficients for all seven methods are similar.

Real-Data analysis

In this section, we apply our methods (meta-Half, meta-MCP and meta-SCAD) to three publicly available lung cancer gene expression datasets, and compare our three methods with other four methods including meta-LASSO, composite MCP, group Bridge and group exponential LASSO.

Lung cancer datasets

The three publicly available lung cancer microarray datasets come from disparate platforms and can be download from GEO (https://www.ncbi.nlm.nih.gov/gds/). The three datasets are described as follows:

GSE10072 dataset. The dataset is gene expression signature of cigarette smoking, it contains 107 final expression samples from 58 tumors and 49 non-tumor tissues from 20 never smokers, 26 former smokers, and 28 current smokers, each sample has 22283 genes. The original gene expression data is provided by Landi et al.54.

GSE19188 dataset. The dataset is expression data for early stage non-small-cell lung cancer (NSCLC), it contains 156 samples from 91 tumor tissues and 65 adjacent normal lung tissue samples, each sample has 54675 genes. The more information can be found in Hou et al.55.

GSE19804 dataset. The dataset is non-smoking female lung cancer in Taiwan, it contains 120 samples from 60 tumors and 60 normal tumor tissues, each sample has 54675 genes. The more information can be found in Lu et al.56.

Each dataset is divided into two parts, about 70 percent of the datasets as training samples and the other 30 percent as testing samples. Table 2 lists the details of the three datasets.

The original Affymetrix data was first normalized and log-transformed by a robust multi-array average (RMA) method57. After that, downloading and installing the appropriate custom chip definition files (CDFs) packages according to the type of microarray platform. The CDF package is necessary for probe annotation for Affymetrix data. The probes of the normalized data can be successfully mapped to Entrez Gene IDs by annotation packages in Bioconductor58. If multiple probes match a single Entrez ID, we calculated the median of values of those probes as the expression value for this gene.

We extract common genes from the three gene expression datasets as the merged set of genes. There are 13515 common genes in three datasets and our analysis is based on those 13515 genes. We use a random partition in three lung cancer datasets, and apply aforementioned seven methods to select important genes, with the optimal tuning parameters chosen by the BIC as discussed above. We repeat this procedure 30 times and report the average measurement and standard error.

Evaluating the classification performance

Table 3 demonstrates the prediction performance of the seven methods in three lung cancer datasets. The sensitivity, specificity and accuracy of training and testing predictions for all seven methods are shown in Fig. 2.

As shown in Table 3 and Fig. 2, for the training dataset and testing dataset, the sensitivity and accuracy of meta-Half, meta-MCP, meta-SCAD are consistently higher than the other four methods, and the specificity of all methods are similar. This result shows that our three methods are more effectively distinguish whether an individual is a disease patient compared to the other four methods. Therefore, our three methods have superior performance than the other four methods in the prediction and diagnosis of diseases.

Analysis of the selected genes

Table 4 gives the names of genes selected in each dataset. We focus on the gene WIF1 which is bolded in the Table 4. WIF1, a secreted Wnt antagonist, is a downstream gene of the Wnt/β-catenin pathway, which exerts inhibition through direct binding to Wnt proteins59. WIF1 was found to be silenced by methylation in various human carcinomas including lung60, oral61, nasopharyngeal62, esophageal63, breast64 and colon cancer65 etc.

As shown in Table 4, our three methods (meta-Half, meta-MCP and meta-SCAD) all select gene WIF1 on both datasets GSE10072 and GSE19188, but not select gene WIF1 on dataset GSE19804. As we known that GSE10072 dataset is gene expression signature of cigarette smoking, GSE19188 dataset is expression data for early stage non-small-cell lung cancer (NSCLC) and GSE19804 dataset is non-smoking female lung cancer in Taiwan. Huang et al. showed that WIF1 is significantly associated with the smoking behavior in NSCLC patients66. It shows that our three methods can more realistically identify the important biomarkers from different datasets which have heterogeneity. The meta-LASSO selects gene WIF1 in all three lung cancer datasets, and the genes selected by meta-LASSO in the three lung cancer datasets are the same. The other three methods (composite MCP, group Bridge and group exponential LASSO) cannot select gene WIF1 in all three lung cancer datasets. Therefore, our three methods are superior to the other four methods when applied in the heterogeneity datasets.

The number of genes selected by meta-Half, meta-MCP, meta-SCAD and meta-LASSO are 11, 13, 9 and 13 respectively. Figure 3 shows the overlap of commonly selected genes across the four different methods (meta-Half, meta-MCP, meta-SCAD, meta-LASSO) in three lung cancer datasets. The other three methods (composite MCP, group Bridge and group exponential LASSO) select fewer genes, so we don’t show the genes they selected in Fig. 3. As shown in Fig. 3(a), for the datasets GSE10072 and GSE19188, seven common genes are selected by meta-Half, meta-MCP and meta-SCAD, which are CXCL13, COL11A1, SPP1, MMP12, AGER, WIF1 and FCN3. Two common genes are selected by meta-Half, meta-MCP, meta-SCAD and meta-LASSO, which are SPP1 and WIF1. Figure 3(b) shows that for dataset GSE19804, four common genes selected by meta-Half, meta-MCP and meta-SCAD are CXCL13, SPP1, MMP12 and AGER. One common genes are selected by meta-Half, meta-MCP, meta-SCAD and meta-LASSO, which is SPP1. More unique non-overlapping sets of genes are selected by our three methods and meta-LASSO. In addition, some of the aforementioned genes have been reported in the literature. COL11A1 is collagen type XI alpha 1 chain. The over-expression of COL11A1 reportedly correlates with lymph node metastasis and poor prognosis in non-small cell lung cancer and ovarian cancer67. Zhang et al. suggest that SPP1 and AGER are risk factors for lung adenocarcinoma, and these two genes may be utilized in the prognostic evaluation of patients with lung adenocarcinoma68. The advanced glycosylation end-product specific receptor (AGER) belongs to the immunoglobulin superfamily, whose abnormal expression has been detected in lung cancer69. MMP12 is matrix metallopeptidase 12 and may play a role in aneurysm formation and mutations in this gene are associated with lung function and chronic obstructive pulmonary disease (COPD)70. WIF1 was found to be silenced by methylation in lung60. Lea et al. shows that the Ficolin-3, encoded by the FCN3 gene and expressed in the lung and liver, is a recognition molecule in the lectin pathway of the complement system71. The aforementioned genes CXCL13, MMP12, AGER and FCN3 are only selected by our three methods, and the gene COL11A1 is selected by our three methods and group exponential LASSO.

To make it easier to demonstrate the interplay between the selected genes from the different methods, we construct a network of interactions among the genes using the cBioPortal72,73. Figures 4, 5 and 6 show the interactive network of the genes selected by our three methods in three lung cancer datasets. Most of the genes selected by our three methods are linked to the frequently altered neighbor genes from the TCGA lung adenocarcinoma dataset. The expression of SPP1 is controlled by TP53. TP53 is tumor protein p53, this gene encodes a tumor suppressor protein containing transcriptional activation, DNA binding, and oligomerization domains. Mutations in this gene are associated with a variety of human cancers74. MMP12 and TOP2A are targeted by certain cancer drugs, and are only selected by our three methods.

Network view of the genes selected from meta-Half in lung cancer datasets. The genes corresponding to the selected variables are highlighted by a thicker black outline. The rest of the nodes correspond to the genes that are frequently altered and are known to interact with the highlighted genes (based on publicly available interaction data). The nodes are gradient color-coded according to the alteration frequency based on microarray data derived from the TCGA lung cancer dataset via cBioPortal. (a) GSE10072 and GSE19188. (b) GSE19804.

In this part, we analyze the genes selected by the four methods (meta-Half, meta-MCP, meta-SCAD and meta-LASSO) in three lung cancer datasets. According to the network of interactions between genes, among the genes selected by our three methods, we find that some genes are connected to other frequently altered genes in publicly available datasets, and some genes are targeted by certain cancer drugs. Some functions may also need to be verified in the future. Results demonstrate that our three methods have good performance in the high-dimensionality gene expression data with heterogeneity.

Conclusion

With the rapid development of biotechnology and its wide applications, a large number of publicly available gene expression datasets have been produced. However, due to the gene expression datasets have the characteristics of small sample size, high dimensionality and high noise, the application of biostatistics and machine learning methods to analyze gene expression data is a challenging task, such as the low reproducibility of important biomarkers in different studies. The low reproducibility of important biomarkers is mainly caused by the heterogeneity of the different datasets. These problems reveal the complexity of gene expression data and significantly obstruct biotechnology in clinical applications. Meta-analysis is an effective approach to deal with these problems. It plays an important role in summarizing and synthesizing scientific evidence from multiple studies, and provides a more comprehensive understanding of the biological systems, but the current methods have some limitations. The nonconvex regularization method is an effective approach for variable selection developed in recent years. In this paper, we combine the advantages of meta-analysis and the nonconvex regularization method, and propose three novel methods, dubbed as meta-Half, meta-MCP and meta-SCAD, respectively. Through the hierarchical decomposition of coefficients, our methods not only consider the data heterogeneity to maintain the flexibility in selecting variables on different datasets, but also consider the correlation between multiple datasets to improve the ability of identifying important biomarkers. We give the efficient algorithms which apply the nonconvex iterative thresholding algorithms based on approximate message passing (Half-AMP, MCP-AMP and SCAD-AMP) to solve our models and study the theoretical property of meta-Half. The theoretical property analysis of MCP-AMP and SCAD-AMP are the future work. We prove meta-Half possesses sparsity, unbiasedness and oracle properties. Furthermore, we apply our methods to the simulation data and three publicly available lung cancer gene expression datasets, and compare the performance of our methods with other four methods, which are meta-LASSO, composite MCP, group Bridge and group exponential LASSO. Simulation studies demonstrate our methods have the superior performance when data heterogeneity is strong. In the three publicly available lung cancer gene expression datasets, the analysis results show that our three methods have good performance in the gene expression data of small sample size and high dimensionality from different sources (heterogeneity), and the selected important biomarkers have clinical significance. Our methods can also be extended to other areas where datasets are heterogeneous.

References

Barrett, T. et al. Ncbi geo: archive for functional genomics data sets—update. Nucleic acids research 41, D991–D995 (2012).

Tibshirani, R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological) 58, 267–288 (1996).

Fu, W. Penalized regressions: The bridge versus the lasso. Journal of Computational and Graphical Statistics 7, 397–416 (1998).

Xu, Z. B., Zhang, H., Wang, Y., Chang, X. Y. & Liang, Y. l 1/2 regularization. Science China Information Sciences 53, 1159–1169 (2010).

Liang, Y. et al. Sparse logistic regression with a l 1/2 penalty for gene selection in cancer classification. BMC bioinformatics 14, 198 (2013).

Zhang, C. H. Nearly unbiased variable selection under minimax concave penalty. The Annals of statistics 38, 894–942 (2010).

Fan, J. Q. & Li, R. Z. Statistical challenges with high dimensionality: Feature selection in knowledge discovery,proceeding of the international congress of mathematicians. European Mathematical Society 595–622 (2006).

Zhang, H., Liang, Y., Xu, Z. & Chang, X. Compressive sensing with noise based on scad penalty. Acta Mathematica Sinica (in Chinese) 56, 767–776 (2013).

Zhang, H., Zhang, H. & Gou, M. Convergence analysis of compressive sensing based on scad iterative thresholding algorithm. Chinese Journal of engineering mathematics 33, 243–258 (2016).

Yuan, M. & Lin, Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 68, 49–67 (2006).

Zou, H. & Hastie, T. Regularization and variable selection via the elastic net. Journal of the royal statistical society: series B (statistical methodology) 67, 301–320 (2005).

She, Y. et al. Thresholding-based iterative selection procedures for model selection and shrinkage. Electronic Journal of statistics 3, 384–415 (2009).

Zeng, L. & Xie, J. Group variable selection via scad-l 2. Statistics 48, 49–66 (2014).

Liu, X.-y. et al. Novel regularization method for biomarker selection and cancer classification. IEEE/ACM transactions on computational biology and bioinformatics (2019).

Rhodes, D. R., Barrette, T. R., Rubin, M. A., Ghosh, D. & Chinnaiyan, A. M. Meta-analysis of microarrays: interstudy validation of gene expression profiles reveals pathway dysregulation in prostate cancer. Cancer research 62, 4427–4433 (2002).

DeConde, R. P. et al. Combining results of microarray experiments: a rank aggregation approach. Statistical applications in genetics and molecular biology5 (2006).

Zintzaras, E. & Ioannidis, J. P. Meta-analysis for ranked discovery datasets: theoretical framework and empirical demonstration for microarrays. Computational biology and chemistry 32, 39–47 (2008).

Choi, J. K., Yu, U., Kim, S. & Yoo, O. J. Combining multiple microarray studies and modeling interstudy variation. Bioinformatics 19, 84–90 (2003).

Grützmann, R. et al. Meta-analysis of microarray data on pancreatic cancer defines a set of commonly dysregulated genes. Oncogene 24, 5079 (2005).

Han, B. & Eskin, E. Interpreting meta-analyses of genome-wide association studies. PLoS genetics 8, e1002555 (2012).

Bhattacharjee, S. et al. A subset-based approach improves power and interpretation for the combined analysis of genetic association studies of heterogeneous traits. The American Journal of Human Genetics 90, 821–835 (2012).

Li, J. et al. An adaptively weighted statistic for detecting differential gene expression when combining multiple transcriptomic studies. The Annals of Applied Statistics 5, 994–1019 (2011).

Ramasamy, A., Mondry, A., Holmes, C. C. & Altman, D. G. Key issues in conducting a meta-analysis of gene expression microarray datasets. PLoS medicine 5 (2008).

Hong, F. & Breitling, R. A comparison of meta-analysis methods for detecting differentially expressed genes in microarray experiments. Bioinformatics 24, 374–382 (2008).

Tseng, G. C., Ghosh, D. & Feingold, E. Comprehensive literature review and statistical considerations for microarray meta-analysis. Nucleic acids research 40, 3785–3799 (2012).

Shen, R., Ghosh, D. & Chinnaiyan, A. M. Prognostic meta-signature of breast cancer developed by two-stage mixture modeling of microarray data. BMC genomics 5, 94 (2004).

Conlon, E. M., Song, J. J. & Liu, J. S. Bayesian models for pooling microarray studies with multiple sources of replications. BMC bioinformatics 7, 247 (2006).

Choi, H., Shen, R., Chinnaiyan, A. M. & Ghosh, D. A latent variable approach for meta-analysis of gene expression data from multiple microarray experiments. BMC bioinformatics 8, 364 (2007).

Scharpf, R. B., Tjelmeland, H., Parmigiani, G. & Nobel, A. B. A bayesian model for cross-study differential gene expression. Journal of the American Statistical Association 104, 1295–1310 (2009).

Fan, X. et al. Bayesian meta-analysis for identifying periodically expressed genes in fission yeast cell cycle. The Annals of applied statistics 4, 988–1013 (2010).

Huo, Z., Song, C. & Tseng, G. Bayesian latent hierarchical model for transcriptomic meta-analysis to detect biomarkers with clustered meta-patterns of differential expression signals. The annals of applied statistics 13, 340 (2019).

Rashid, N. U., Li, Q., Yeh, J. J. & Ibrahim, J. G. Modeling between-study heterogeneity for improved replicability in gene signature selection and clinical prediction. Journal of the American Statistical Association 1–14 (2019).

Zhang, K., Geng, W. & Zhang, S. Network-based logistic regression integration method for biomarker identification. BMC systems biology 12, 135 (2018).

Breheny, P. & Huang, J. Penalized methods for bi-level variable selection. Statistics and its interface 2, 369 (2009).

Huang, J., Ma, S., Xie, H. & Zhang, C.-H. A group bridge approach for variable selection. Biometrika 96, 339–355 (2009).

Breheny, P. The group exponential lasso for bi-level variable selection. Biometrics 71, 731–740 (2015).

Kim, S., Jhong, J.-H., Lee, J. & Koo, J.-Y. Meta-analytic support vector machine for integrating multiple omics data. BioData mining 10, 2 (2017).

Zhou, N. & Zhu, J. Group variable selection via a hierarchical lasso and its oracle property. arXiv preprint arXiv:1006.2871 (2010).

Li, Q., Wang, S., Huang, C.-C., Yu, M. & Shao, J. Meta-analysis based variable selection for gene expression data. Biometrics 70, 872–880 (2014).

Zhao, P. & Yu, B. On model selection consistency of lasso. Journal of Machine learning research 7, 2541–2563 (2006).

Chai, H., Li, Z.-n., Meng, D.-y., Xia, L.-y. & Liang, Y. A new semi-supervised learning model combined with cox and sp-aft models in cancer survival analysis. Scientific Reports7, 13053.

Fan, J. et al. Local partial-likelihood estimation for lifetime data. The Annals of Statistics 34, 290–325 (2006).

Breheny, P. & Huang, J. Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. The annals of applied statistics 5, 232 (2011).

Fan, J. & Li, R. Variable selection for cox’s proportional hazards model and frailty model. Annals of Statistics 30, 74–99 (2002).

Jin, Z.-F., Wan, Z., Jiao, Y. & Lu, X. An alternating direction method with continuation for nonconvex low rank minimization. Journal of Scientific Computing 66, 849–869 (2016).

Wen, F., Pei, L., Yang, Y., Yu, W. & Liu, P. Efficient and robust recovery of sparse signal and image using generalized nonconvex regularization. IEEE Transactions on Computational Imaging 3, 566–579 (2017).

Cui, Z.-X. & Fan, Q. A nonconvex nonsmooth regularization method for compressed sensing and low rank matrix completion. Digital signal processing 62, 101–111 (2017).

Huang, X. & Yan, M. Nonconvex penalties with analytical solutions for one-bit compressive sensing. Signal Processing 144, 341–351 (2018).

Wen, F. et al. Nonconvex regularization-based sparse recovery and demixing with application to color image inpainting. IEEE Access5, 11513–11527.

You, J., Jiao, Y., Lu, X. & Zeng, T. A nonconvex model with minimax concave penalty for image restoration. Journal of Scientific Computing 78, 1063–1086 (2019).

Li, Z. et al. Manifold optimization-based analysis dictionary learning with an l 1/2-norm regularizer. Neural Networks 98, 212–222 (2018).

Zhang, H. & Zhang, H. Approximate message passing algorithm for l 1/2 regularization. Science China Information Sciences (in Chinese) 47, 58–72 (2017).

Zhang, H., Zhang, H., Liang, Y., Yang, Z.-Y. & Ren, Y. Approximate message passing algorithm for nonconvex regularization. IEEE Access 7, 9080–9090 (2019).

Landi, M. T. et al. Gene expression signature of cigarette smoking and its role in lung adenocarcinoma development and survival. PloS one 3, e1651 (2008).

Hou, J. et al. Gene expression-based classification of non-small cell lung carcinomas and survival prediction. PloS one 5, e10312 (2010).

Lu, T.-P. et al. Identification of a novel biomarker, sema5a, for non–small cell lung carcinoma in nonsmoking women. Cancer Epidemiology and Prevention Biomarkers 19, 2590–2597 (2010).

Irizarry, R. A. et al. Exploration, normalization, and summaries of high density oligonucleotide array probe level data. Biostatistics 4, 249–264 (2003).

Gentleman, R. C. et al. Bioconductor: open software development for computational biology and bioinformatics. Genome biology 5, R80 (2004).

Reguart, N. et al. Cloning and characterization of the promoter of human wnt inhibitory factor-1. Biochemical and biophysical research communications 323, 229–234 (2004).

Wissmann, C. et al. Wif1, a component of the wnt pathway, is down-regulated in prostate, breast, lung, and bladder cancer. The Journal of Pathology: A Journal of the Pathological Society of Great Britain and Ireland 201, 204–212 (2003).

Pannone, G. et al. Wnt pathway in oral cancer: epigenetic inactivation of wnt-inhibitors. Oncology reports 24, 1035–1041 (2010).

Lin, Y.-C. et al. Wnt signaling activation and wif-1 silencing in nasopharyngeal cancer cell lines. Biochemical and biophysical research communications 341, 635–640 (2006).

Clément, G. et al. Epigenetic alteration of the wnt inhibitory factor-1 promoter occurs early in the carcinogenesis of barrett’s esophagus. Cancer science 99, 46–53 (2008).

Ai, L. et al. Inactivation of wnt inhibitory factor-1 (wif1) expression by epigenetic silencing is a common event in breast cancer. Carcinogenesis 27, 1341–1348 (2006).

Park, S. Y. et al. Promoter cpg island hypermethylation during breast cancer progression. Virchows Archiv 458, 73–84 (2011).

Huang, T. et al. Meta-analyses of gene methylation and smoking behavior in non-small cell lung cancer patients. Scientific reports 5, 8897 (2015).

Chong, I.-W. et al. Great potential of a panel of multiple hmth1, spd, itga11 and col11a1 markers for diagnosis of patients with non-small cell lung cancer. Oncology reports 16, 981–988 (2006).

Zhang, W. et al. Spp1 and ager as potential prognostic biomarkers for lung adenocarcinoma. Oncology letters 15, 7028–7036 (2018).

Pan, Z. et al. Long non-coding rna ager-1 functionally upregulates the innate immunity gene ager and approximates its anti-tumor effect in lung cancer. Molecular carcinogenesis 57, 305–318 (2018).

Hunninghake, G. M. et al. Mmp12, lung function, and copd in high-risk populations. New England Journal of Medicine 361, 2599–2608 (2009).

Munthe-Fog, L. et al. Immunodeficiency associated with fcn3 mutation and ficolin-3 deficiency. New England Journal of Medicine 360, 2637–2644 (2009).

Gao, J. et al. Integrative analysis of complex cancer genomics and clinical profiles using the cbioportal. Sci. Signal. 6, pl1–pl1 (2013).

Cerami, E. et al. The cbio cancer genomics portal: an open platform for exploring multidimensional cancer genomics data (2012).

Oros Klein, K. et al. Gene coexpression analyses differentiate networks associated with diverse cancers harboring tp53 missense or null mutations. Frontiers in genetics 7, 137 (2016).

Acknowledgements

This work is supported by the Macau Science and Technology Development Funds Grant No. 0055/2018/A2 from the Macau Special Administrative Region of the People’s Republic of China.

Author information

Authors and Affiliations

Contributions

Hui Zhang and Yong Liang propose the novel methods (meta-Half, meta-MCP, meta-SCAD) and give the efficient algorithms for the novel methods. Hui Zhang and Hai Zhang proved the theoretical property for the proposed methods. Hui Zhang, Shou-Jiang Li and Zi-Yi Yang conceived and conducted the experiment. Yan-Qiong Ren and Liang-Yong Xia provided the real data and analysis the information of biology. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, H., Li, SJ., Zhang, H. et al. Meta-Analysis Based on Nonconvex Regularization. Sci Rep 10, 5755 (2020). https://doi.org/10.1038/s41598-020-62473-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-62473-2

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.