Abstract

Growing metropolitan areas bring rapid urbanization and air pollution problems. As diseases and mortality rates increase because of the air pollution problem, it becomes a necessity to estimate the air pollution density and inform the public to protect the health. Air pollution problem displays contextual characteristics such as meteorological conditions, industrial and technological developments, traffic problem etc. that change from country to country and also from city to city. In this study, we determined PM\({}_{10}\) as the target pollutant and designed a new deep learning based air quality forecasting model, namely DFS (Deep Flexible Sequential). Our study uses real world hourly data from Istanbul, Turkey between 2014 and 2018 to forecast the air pollution 4, 12, and 24 hours before. DFS model is a hybrid & flexible deep model including Long Short Term Memory (LSTM) and Convolutional Neural Network (CNN). The proposed model also is capable of generalization with standard and flexible Dropout layers. Through flexible Dropout layer, the model also obtains flexibility to adapt changing window sizes in sequential modelling. Moreover, this model can be applied to other air pollution time series data problems with small modifications on parameters by taking into account the nature of the data set.

Similar content being viewed by others

Introduction

Air pollution plays an important role in living conditions in most large cities of the world. Accurate estimation of air pollution is a preliminary step in the presence of air pollution control technologies and helps to ensure economic and social development in developing countries.

There are standard approaches in order to identify specific pollutant mixtures that may include hundreds of gas compounds and particulates of complex physic-chemical compounds. These mixtures which are combinations of different pollutants in varying percentages, depend on social, economic, and technological activities at a given area. So, in air pollution studies, air pollutant indicators are used for risk assessment and epidemiological analysis. Most common indicators are particulate matter under \(10\ \mu m\) (PM\({}_{10}\)), particulate matter under \(2.5\) \(\mu m\) (PM\({}_{2.5}\)), nitrogen oxides (NO, NO\({}_{2}\), NO\({}_{X}\)), ozone (O\({}_{3}\)), sulphur oxides (SO\({}_{2}\)), and carbon oxides (CO).

Air pollution has serious effects on urban residents, especially vulnerable ones such as children and people with heart or respiratory failure. Besides, growing mortality and morbidity rates are associated with the high density of pollutants in the air (e.g. PM and SO\({}_{2}\))1,2,3.

Particulate matters are among air pollutants with serious effects on human health. Both heavy metals and carcinogenic chemicals such as mercury, lead, and cadmium lead serious health problems. Gasoline and diesel powered vehicles emit particulates such as benzo(a)pyre- ne and cause cancer when inhaled for a long time4. Prolonged exposure to high concentrations of PM\({}_{10}\) may also lead to early deaths, impaired cardiovascular system, internal diseases and respiratory infections. Considering the threats posed by human health, in this study we focus on estimating PM\({}_{10}\) density.

Estimation of alterations at air pollution concentration is required to secure life quality at city centers. In this respect, air quality estimation models have been developed in order to forecast air pollution before air quality declines significantly at the regional or local level. While doing this, the characteristics of atmospheric pollution and their negative effects on life quality are taken into account5,6.

In previous studies, meteorological data are widely used to forecast/predict air quality. Meteorological conditions play a pivotal role in determining air pollutant concentrations4,7,8,9,10,11. For instance, subnormal temperatures and solar radiation slow down photo-chemical reactions and lead to low levels of secondary air pollutants such as O\({}_{3}\) 10. Increasing wind velocity may either increase or decrease air pollutant concentration12. High wind velocity can lead to dust-storms by levitating particulate matter from the surface13. A high level of humidity generally increases concentration of PM, CO, and SO\({}_{2}\) in the air. Meanwhile it may decrease the concentration of some pollutants such as NO\({}_{2}\) and O\({}_{3}\)12. This is because high humidity is an indicator of rain14.

Besides meteorological data, pollution data can also be used for air quality forecasting. Nevertheless use of pollution data is rare than meteorological data due to three obstacles. First, the establishment and administration of an air quality monitoring station (AQMS) is more costly and difficult than that of meteorological station. Second, AQMSs are founded at very rare and specific locations. Finally, data collection from AQMSs is difficult.

This study aims to forecast PM\({}_{10}\) density four, twelve and twenty-four hours before it occurs and offers a novel deep learning based forecasting approach, entitled Deep Flexible Sequential (DFS) model. The novelty of our model lies in the combination of an Convolutional Neural Network (CNN) and Long Short Term Memory (LSTM) which yields a flexible dropout layer. The model we propose here, is a hybrid-sequential model that incorporates a Convolutional 1D (Conv) layer and a LSTM layer and combines their advantages. Thanks to Conv, feature extraction is effectively performed and with the help of LSTM, long-short term time dependencies are taken into account sequentially. Outside the Conv and LSTM layers, dropout layers are included in the model in order to prevent overfitting.

For the purpose of observing our DFS model performance in air pollution forecasting, we gathered both hourly meteorological and pollution data between August 2014 and February 2018 from four stations located in a very central location in Istanbul, Turkey. Additionally, we also collected traffic data (https://uym.ibb.gov.tr/) that we think it has a great impact on air pollution in urban areas. Proposed DFS architecture uses past meteorology, pollution, traffic and PM\({}_{10}\) data and implements deep learning based hybrid-sequential modeling for future PM\({}_{10}\) forecasting.

We compared the success of DFS model with deep learning-based Gated Recurrent Unit (GRU), LSTM, bidirectional LSTM (bi-LSTM) and Conv-LSTM models through the MAE and RMSE metrics for three different window sizes (g = 4, 12, 24) in four different measurement stations. The experiments demonstrate that proposed DFS model architecture is more suitable than the state of the art deep learning methods.

The contributions of this study are as follows:

We developed a new flexible and hybrid deep learning model called DFS for future PM\({}_{10}\) forecasting. Our model has generalization ability on different regions and includes CNN, LSTM and Dropout layers together. Compared to pure deep learning models, the hybrid architecture combining the benefits of these layers clearly comes to fore.

DFS air pollution forecasting model uses multivariate time-series data related to air pollution and performs flexible-temporal modeling regardless of window size. DFS can be an inspiration to not only other air pollution forecasting studies but also different data mining problems that perform sequential modeling on time series data with the flexibility it provides.

The fact that obtaining meteorological data is relatively easy compared to pollution data makes the estimation models using meteorological data more easily applicable. However, pollution data, including other pollutants at the point of measurement outside the target pollutant, may contribute more to the estimation. Our model has produced satisfactory results on two different data sets and by adding traffic data to these data sets, inter-data interaction from different sources is provided.

The rest of the paper is organized as follows. Previous air quality-pollution forecasting studies in literature are described in Related Works; proposed DFS model and the deep learning methods in the background of the model are described in Methodology. Subsequently, Model Implementation and Experimental Results section includes data analysis, data preprocessing, step-by-step formation of the DFS model and the experimental results. Lastly, Conclusion and Future Work concludes the paper.

Related Works

The approaches to estimate PM\({}_{10}\) density in the air can be categorized into two major groups based on the techniques they applied: deterministic models and statistical models. Deterministic models are methods that quantify the deterministic relationship between emission sources, meteorological processes, physco-chemical changes and pollutant concentrations, including the consequences of past and future scenarios and the determination of the effectiveness of alleviation strategies. On the other hand, statistical models include linear and nonlinear supervised learning methods and are easily distinguished from deterministic methods by their randomness property.

Machine learning approaches from statistical models proved their superiority to deterministic models in many air pollution estimation studies.

Studies with target pollutants other than PM

Singh et al. predicted SO\({}_{2}\) and NO\({}_{2}\) by using meteorological parameters15. In their work, they compared linear (Partial Least Square Regression (PLSR)) and non-linear models (Multivariate Polynomial Regression (MPR), Artificial Neural Networks (ANN)) and found most accurate results with ANN model.

Among different ANN approaches (Multilayer Perceptron Network (MLPR), Radial-basis function network (RBFN), Generalized Regression Neural Network (GRNN)), GRNN outperformed others. Ana Russo and colleagues highlighted the importance of size reduction16 and predicted NO\({}_{2}\), NO and CO densities with meteorological parameters such as temperature, relative humidity, precipitation accumulation, atmospheric boundary layer height, pressure, and brightness. Dhirendra Mishra17 compared Multiple Linear Regression (MLR) and Principle Component Analysis (PCA) aided ANN model while forecasting hourly NO\({}_{2}\) concentration in Tac Mahal, India. Because the latter model displayed a better performance it has been stated that the model can be used for air pollution forecasts in Tac Mahal, Agra. In another study Multilayer Perceptron (MLP) is used to forecast the concentrations of NO\({}_{2}\), O\({}_{3}\), and SO\({}_{2}\) in Delhi, the second biggest city in India18.

Sheikh Saeed Ahmad and his colleagues emphasized feature engineering and predicted the NO\({}_{2}\) density at Rawalpindi and Islamabad regions between November 2009 and March 2011 via temperature, relative humidity, precipitation accumulation, the location on earth, the week of measurement, and the location number19. The location number relied on the sequential binary number system. The number became ‘1’ if bidirectional transport way, main road, side road, public hospital, modern residence, trading area, resting area, bus station, school, lake, or, forest exists nearby the area. If there was not any of these, then it was coded as 0. The best ANN network structure was decided by evolutionary algorithm and the results were improved by back-propagation method.

Studies where PM is the target pollutant

Haiming et al. used PM\({}_{10}\), SO\({}_{2}\), NO\({}_{2}\), temperature, pressure, wind direction, wind velocity as parameters while predicting PM\({}_{2.5}\) concentration in20. It was understood that RBF with Gauss transfer function generated more accurate result than ANN with back-propagation method. Similarly21, used ANN in order to forecast PM\({}_{10}\) density in Barcelona and Montseny. Nieto followed a similar method22 in Ovieodo utilizing monthly data. In 2011, Mingjian and colleagues employed PM\({}_{2.5}\), PM\({}_{5}\) and PM\({}_{10}\) density data collected from laser dust monitors located along the Zhongshan Avenue, which is one of the most busy streets in the city of Chongqing in China23. While predicting PM\({}_{2.5}\) density24, utilized both the Aerosol Optical Depth (AOD) provided by satellite images and the traffic density. On the other hand, MODerate resolution Imaging Spectro-radiometer (MODIS) used the average of satellite-based night lights in addition to AOD satellite images while estimating PM\({}_{10}\)25. In their recent study, Kurt and Oktay built a geographic model while forecasting SO\({}_{2}\), CO and PM\({}_{10}\) levels at Beşiktaş region in Istanbul by using daily air pollutant data, meteorological data, and geographic data26.

In air pollution estimation studies via machine learning, it is clearly seen that methods based on artificial neural networks stand out regardless of whether the target pollutant is PM or not. Considering the success of deep learning techniques in many other application domains27,28,29, it is inevitable that the studies for air pollution prediction have recently focused on deep learning methods.

First studies conducted with deep learning in this area have tended to use pure sequential models (RNN, LSTM, GRU) with proven success in time series. A cyclic ANN model, Recurrent Neural Network (RNN), was run for estimating the density of PM\({}_{10}\) and PM\({}_{2.5}\) in the work of Kim and his colleagues30. RNN performance was compared with Feed Forward Artificial Neural Network (FFANN) and MLR on the data from subway stations in Seoul, the capital city of Korea. The findings of this study demonstrated that compounds with Nitrogen element are more effective at predicting PM\({}_{10}\) and PM\({}_{2.5}\) than the compounds with Carbon element. Comparing RNN, RNN based-LSTM and RNN based-GRU performances, GRU was found to be slightly higher than LSTM for PM\({}_{10}\) level prediction31. The extended version of LSTM is presented as framework in32 by using hourly PM data.

Convolutional neural networks, which stand out with its success in image processing, are used in many research areas for feature extraction. In air pollution estimation problems Conv takes place in hybrid network architectures with sequential models in general. Study of air pollution prediction through ozone in33 and PM\({}_{2.5}\) forecasting studies in34,35 are some of these hybrid models.

Air pollution is present in every scale from personal to global. The outcomes of ambient air pollution may be divided into two as local outcomes and global outcomes. While local outcomes have an impact on human health, vegetation, raw material and cultural goods, global outcomes may cause greenhouse effect, climate change and tropospheric/stratospheric ozone effect.

In this study, air pollution in Istanbul,Turkey is predicted accurately four, twelve and twenty-four hours before air pollution occurs using deep flexible sequential model, namely DFS. This is a hybrid deep learning model including LSTM, Conv and Dropout layers. The novelty in this model is the use of flexible dropout layer, which distinguishes our DFS forecasting model from other air pollution forecasting studies using hybrid deep learning methods. On the other hand, crucial difference between this work and the previous sequential modelling works is that we emphasize how flexible deep model should be designed on time series data due to changing window sizes. The proposed DFS model has a different architecture than the models proposed so far and can be used in other air pollution forecasting studies in the future.

Methodology

The densities of pollutants are influenced by meteorological parameters, which display specific characteristics on a hourly, daily, yearly basis. That is, not surprisingly, the highest air pollutant densities in Istanbul are measured not only in summer months due to high temperatures and evaporation but also in winter months due to high level of gasoline consumption. Therefore, we can say it is contrary to the nature of the problem to use fully connected artificial neural networks by treating the air pollution estimation problem independent from the time series feature. For such problems, sequential deep learning methods are already available in the literature.

Studies using traditional prediction methods view time as a feature and do not use previous target values at prediction model. However, pollutant density at time ‘t’ is influenced by the value at time ‘t-g’ as well. Traditional artificial neural networks do not forecast with sequential information so their connections with previous events are limited. At this point, RNNs come to fore. RNNs differ from traditional networks because they provide a continuity of information flow thanks to their cyclic nature.

This study uses RNN based GRU, LSTM, bi-LSTM, and hybrid model Conv-LSTM for performance evaluation. In the following sections; the basis of sequential modeling in deep learning, RNN, and our proposed DFS model architecture with its background methods are presented.

RNN

RNNs have proven useful at time series36, natural language processing37, and bioinformatics38,39. In short, these ANNs yield satisfactory results at applications that use serial and connected data sets. While feeding the network, RNNs take and process input series at each stage. They hold these series at a hidden unit and use this information in order to update state vector that keeps information about all previous elements of the series.

Figure 1 shows both the architecture of the RNN and its unfold version. The symbols shown in the figure are as follows: x\({}_{t}\) is input sequence, o\({}_{t}\) is output vector, s\({}_{t}\) is hidden state vector and W, U, V weight matrices. RNN maps an input sequence (x\({}_{t}\)) into an output sequence (o\({}_{t}\)) according to the recursive formulas of RNN in Eqs. 1 and 2.

RNN Architecture40.

When looked at unfold version of the architecture (time is not cyclic) in Fig. 1, it is not wrong to state that RNN is a very deep version of FFANN where same weights are shared. However, the drawback of RNN is salient during network training, where multiplicative decreases/increases in back-propagated gradients lead to Vanishing Gradient41 or Exploding Gradient42 problem. When gradient problems occur, the training process takes too long and the accuracy is decreasing. Another problem regarding RNN is that although its primary objective is to learn long-term dependencies, it is not very good at storing network information especially when retrospective dependencies abound. In order to fix these, RNN-based LSTM model is suggested.

DFS model for air pollution forecasting

This study proposes the Deep Flexible Sequential (DFS) model in Fig. 2 for air quality forecasting problem. The model includes LSTM and Convolutional layers and becomes prominent with its flexible Dropout layer. Before giving the details of the DFS Model architecture we propose, LSTM and CNN are described in the following subsections which form the basis of the proposed model.

LSTM

LSTM43 is a special version of RNN and is essentially separated from RNN by the fact that each neuron in its structure is actually a memory cell. As shown in Fig. 3, the working principle of LSTM relies on cells and intercellular data transfer. Information obtained from previous memory cells is used when processing in the current cell. In this wise, data is transferred from one cell to another and temporal dependencies are stored.

LSTM can handle even the longest sequence data without being affected by gradient problems and proves useful at learning long term dependency. Compared to similar methods, it performs better than GRU44 and RNN especially while modeling long distance relations and differs from other types of learning models with its three-gate structure (forget gate, input gate, output gate).

Long-Short Term Memory Architecture is presented in Fig. 3, where \(x(t)\) is input of current cell, \(C(t)\) is the cell memory, \(h(t)\) is output of current cell block to be used in the next cell as a hidden state. \(C(t-1)\) and \(h(t-1)\) comes from previous cell and ensures sequential dependency. "\(\sigma \)” is \(Sigmoid\) and "\(tanh\)” \(HyperbolicTangent\) functions. While implementing element-wise weighted sum operation in LSTM, "\(\times \)” shows element-wise multiplication and "+” indicates element-wise sum.

Forget Gate: At the forget gate, the decision is about how many percent of the information from previous cell is preserved in the new cell. The output from previous cell \(h(t-1)\) is combined with the input of current cell \(x(t)\) and this combination is introduced into the \(Sigmoid\) function in Eq. 3. Afterwards, according to the multiplication of the output of \(Sigmoid\) activation function and \(C(t-1)\), it is decided to which extent the existing information is forgotten (Eq. 4). The output by \(Sigmoid\) is between 0 and 1, where 0 denotes complete forgetting, whereas 1 does complete remembering.

$$\quad S(t)=\frac{1}{1+{e}^{-t}}$$(3)$${f}_{t}=\sigma ({W}_{f}\cdot [{h}_{t-1},{x}_{t}]+{b}_{f})$$(4)Input Gate: This layer is composed of \(Sigmoid\) layer and \(tanh\) layer. The former decides which values will be updated (Eq. 5), whereas the later generates possible values of \({\widetilde{C}}_{t}\) vector (Eq. 6). The outputs of these two layers are multiplied by element-wise multiplication and the result is added to the function \(C(t)\) as in Eq. 7.

$$\quad {i}_{t}=\sigma ({W}_{i}\cdot [{h}_{t-1},{x}_{t}]+{b}_{i})$$(5)$${\widetilde{C}}_{t}=tanh({W}_{C}\cdot [{h}_{t-1},{x}_{t}]+{b}_{C})$$(6)$${C}_{t}={f}_{t}\ \ast \ {C}_{t-1}+{i}_{t}\ \ast \ {\widetilde{C}}_{t}$$(7)Output Gate: This layer decides cell state output at time ‘t’. Then, the output of h(t-1) and the input of X(t) are combined and the result is put into \(Sigmoid\) function (Eq. 8). The output of this function determines how much information will be retrieved from cell state. \(C(t)\) results of Forget gate and Input gate are activated by \(tanh\) function, and afterwards these results are multiplied by \(Sigmoid\) output in order to yield the cell output (Eq. 9).

$${o}_{t}=\sigma ({W}_{o}\cdot [{h}_{t-1},{x}_{t}]+{b}_{o})$$(8)$${h}_{t}={o}_{t}\ \ast \ tanh({C}_{t})$$(9)

CNN

CNN is more prominent in image processing45 and computer vision46 than that in most of the deep learning studies, CNN and image are mentioned together. Yann Lecun’s LeNet-5, AlexNet, GoogLeNet and VGG are the keystones of studies using convolutional networks in image processing. These are followed by modern network architectures such as Inception, ResNet and ResNeXt.

Although CNN is particularly well known for its success in visual imagery analysis, it can also be effectively applied to time series analysis problems. What makes CNN different from other networks is basically weight sharing and sparse connectivity. Through the shared weights, training is relatively easy on CNN compared to FFANN.

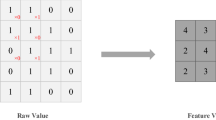

The weight sharing and local perception features make CNN attractive for time series models as it reduces the number of parameters and improves the learning ability of the model. Hierarchical CNN structures for feature extraction consist of two successive layers; first convolutional layer and then subsampling or pooling layer. In the DFS architecture, 1-dimensional CNN (CNN-1D) is used for property extraction, and CNN-1D here contains the maximum pooling layer after the convolution layer. The convolutional layer, implements sliding-window on input data and by this way, it creates feature maps that represent the temporal sequence property of time series data. The weight of the convolution filter is shared in the convolutional layer and connected to the input. The maximum pooling layer reduces the size of output dimension over the feature maps in the convolutional layer. Therefore, it may improve the learning and generalization ability of the model by ignoring temporal shifts and distortions in data.

Model Implementation and Experimental Results

In this study, air quality intensity is predicted before air pollution occurs by using hourly data at \((t-4)\), \((t-12)\), and \((t-24)\). Real world data used in here, belong to Aksaray, Alibeyköy, Beşiktaş and Esenler which are located in a very central location in European side of Istanbul covering the time period between August 2014 and February 2018.

Meteorological conditions play a critical role while measuring air pollutant concentration. Therefore, temperature in \({}^{\circ }\)C (maximum temperature, minimum temperature), wind speed, wind direction, maximum wind speed, maximum wind direction, and humidity meteorological parameters were collected on hourly basis from the Turkish State Meteorological Service (TSMS). For reliable temperature and wind values, a number of serial measurements is carried out within the same hour. Meteorological parameters with max-prefix denote the highest value of a given parameter within an hour.

As for air quality prediction, it is found that other pollutants can be used for measuring the density of target pollutants47. The pollution density data of CO, NO, NO\({}_{2}\), NO\({}_{X}\), O\({}_{3}\) and SO\({}_{2}\) were collected from the closest AQMSs to meteorological stations.

Meteorological and pollutant data, which are frequently used at air pollution prediction/forecasting, yield satisfactory results in many studies. Nevertheless, works in48 and49 suggest to add traffic data into data sets in further studies. Both of these studies solve air pollution problem by times series prediction and specifically uses LSTM. Given that Istanbul is a crowded mega city and suffers from traffic, we believed that taking traffic data into account would increase the performance of our model. For this reason, traffic data from Istanbul Metropolitan Municipality are also included while predicting PM\({}_{10}\). Traffic data is the percentage of traffic density measured with five-minute intervals (traffic index). In order to convert these five-minute data to hourly basis, we calculated their arithmetic mean. Since the singularity of these data may produce misleading forecasts, traffic data were used together with meteorological or pollution data. Air pollutant density values should be positive (http://havaizleme.gov.tr/). The hours at which the pollutant density is zero or negative is equivalent to no measurement at that time. Zero, negative values, or the lack of measurement at a given hour would create the sparsity problem. Since our missing data is negligible, we preferred to remove these samples from the data set instead of applying one of the data filling methods. After removal of the samples with missing data, we proceeded with the data at hand of which minimum, maximum, mean and standard deviation of PM\({}_{10}\) values and number of samples in each data set are shown at Table 1.

In meteorological data set, wind direction and maximum wind direction parameters are represented by a value between 0 and 360. These features differ from others as they are categorical variables. Expression of these categorical features by 4, 8 and 16 labeling was applied and tested. For instance, in labeling 4; 0–90, 90–180, 180–270, and 270–360 intervals are represented by 1, 2, 3, and 4 respectively. After feature representations were changed, ’One Hot Encoding’ was applied and the effects of these representations on prediction models were compared. It is understood that 4-label representation gave better results than other labels or unmodified representation. So, 4-label representation was used in our models.

In order to evaluate the performance of the model, state of the art techniques RNN based LSTM, GRU, bi-LSTM and hybrid method Conv-LSTM used in this study implemented in Keras (supports Tensorflow backend) framework. In each region, data sets were divided into three as; training set 60%, validation set 15% and test set 25%. Thus, the three-year data was used for the training of the model, while the data of the last year was reserved for the test.

We built our model on LSTM in beginning, since the air pollution problem is based on time series data and developed this model step by step until the final DFS model was obtained. In recent studies at the field of air pollution forecasting, it is advocated that models with 1-2 LSTM layers outperform those with 3-4 layers31. Similarly, Chaudhary et al. showed that the model with single layer and 50 memory units yielded the best results50. So we thought that to use an initial model with single layer of average depth and LSTM with 96 memory units would be more appropriate. Since LSTM models give more accurate results on data at the interval of 0-1, we converted the data to 0-1 range by using MinMaxScaler51. This transformation also made computational time shorter.

During hyper-parameter tuning for the model of single layer LSTM with 72 memory units model, we used the meteorological data that performed best in our previous study52 and forecasted PM\({}_{10}\) density at time t by using data we have at (t-4), (t- 12), and (t-24). We evaluated model performance that depends on parameter changes according to MAE (10) and RMSE (11). We also repeated the parameter optimization process mentioned for 48, 96, 120 and 144 LSTM unit values in stations Aksaray, Alibeyköy, Beşiktaş and Esenler.

We observed that LSTM Model with 96 units stands out in all regions. So, in order to demonstrate the effect of the parameters on the model, we present the results of the initial LSTM Model with 96 memory unit for Beşiktaş, which is relatively more lively area and prone to air pollution at Table 2.

As shown at Table 2, to use Adam53 optimizer rather than RMSProp optimizer yielded minimum error with less epoch values. After the selection of Adam optimizer, it is decided that the epoch value should be between 80 and 120. Accordingly, 100 was assigned as the epoch value since the Training loss - epoch graphic was saddle at that time.

When model performances were evaluated according to RMSE and MAE metrics, the former generated relatively greater results. It is so, because our target variable, PM\({}_{10}\), takes values in a wide range (Table 1) and large values effect RMSE in proportion to their square. This is also valid for loss function. Unlike mse, mae does not take into account the square of the difference between the actual value and predicted value. On the other hand, loss function mse and performance metric RMSE yield results based on the total of these squares.

Batch size values are listed at Table 2 in order of progress. After output of models with 48 and 96 batch sizes are generated, 24 and 72 batch sizes were used respectively for hyper parameter optimization. Since we do time series forecasting on hourly basis data, we chose specifically 24 and multiples of 24 as batch sizes. The model with 72 batch size came to fore.

After the adjustment of the parameters as, optimizer = Adam, loss function = mae, batch size = 72, and epoch number = 100; we attained deep and shallow models by changing the memory unit values of LSTM. Alternative models to 72 memory unit model are those with 48, 96, 120, 144 memory units respectively. When we compared MAE values regarding test results based on these models (For instance, for g = 4 with different memory units the results are as follows; MAE\({}_{48}\) = 7.69 MAE\({}_{72}\) = 7.60, MAE\({}_{96}\) = 7.56, MAE\({}_{120}\) = 7.67, MAE\({}_{144}\) = 7.75) the model with 96 memory units outperformed others.

By means of tuned LSTM model, we compared meteorological and pollutant data sets that included almost equal number of features and samples in order to see the effect of traffic data on Beşiktaş region. The results are illustrated as graphics at Fig. 4 for meteorological data and at Fig. 5 for air pollution data. Obviously, to include traffic data significantly minimizes errors.

We have trained our proposed Deep Flexible Sequential Model regarding two distinct data sets (meteorological and air pollution) each of which was added the traffic data. In DFS model, past sequential data is used as much as the window size. As shown in Eq. 12, sequence modeling was carried out in order to forecast the PM\({}_{10}\) target value. The g value at the equation denotes window size we set as four, twelve and twenty four.

Numerous experiments with different hyper parameters have been conducted in order to construct the best deep neural network architecture. The best model after hyper parameter tuning is shown at Fig. 2 and DFS Model parameters are explained layer by layer below.

Conv 1D Layer: Hyper parameters that we tuned in this layer are kernel size, number of filters and activation function. Also known as filter length, the kernel size set as 6 in this layer gives the size of the sliding window that convolves through the data. Filter defines how many sliding windows work on the data and also indicates how many features will be captured. Number of filters here is 24. Lastly, activation function is \(tanh\).

Max Pooling Layer: Max pooling was applied with ’pool size = 4’.

Dropout Layer: In the dropout layer, the drop rate between zero and one is determined for the input (dropout rate = 0.2).

LSTM Layer: This layer includes 24 LSTM memory units.

Flexible Dropout Layer: This layer is based on the principle of defining Dropout Rate in time series problems as an equation that depends on window size. Thanks to this layer, flexible dropout rates can be assigned within a specific interval for each different window sizes in LSTM. This rate assignment is carried out by a multiplier value, which depends on a threshold value and window size (\(g\)).

$$dropoutrate=0.19+0.0025\ \ast \ g$$(13)In our study, window size (\(g\)) takes the values of 4, 12, and 24 and flexible dropout rate varies between 0.2 and 0.25 depending on the formula at Eq. 13.

Dense Layer: Default parameters are used without any modification.

When we first designed our model, we used 0.2 dropout rate for both of two dropout layers and applied hyper parameter tuning for other layers. We made additional tests with lower and higher dropout rates in order to see whether the results would be better. Lower rates yielded no better results on any window size at the second layer. However, we observed less error values when higher dropout rates until around 0.25 are used especially for window sizes of 12 and 24. As for window size 4, increasing the rate from 0.2 to 0.25 decreased errors but beyond this rate the error values inflated. At this point, we concluded to design flexible dropout layer that depends on window size.

We applied different versions of flexible design to both dropout layers. The flexible design of the first dropout or both dropout layers increased errors. Therefore, we argue that the flexible dropout layer should be used after the LSTM rather than using before. By doing so, the error values of our model decrease. While using past sequential data, the bigger the window size the more features network can benefit from. As window size gets bigger, weight matrix grows and becomes complicate. We achieved to control this complexity by using Flexible Dropout Layer after LSTM layer.

Our model, LSTM, GRU, bi-LSTM and Conv-LSTM were applied to data sets, the model performances were compared, and MAE and RMSE error values are shown at Tables 3, 4, 5 and 6, respectively. One of the models compared here, bi-LSTM, has become very popular recently for its exemplary performance at natural language processing and machine translation. GRU occupies an important position in recommendation systems. When MAE and RMSE results are examined, it is found that our model led to a remarkable increase at the performance specifically for big window sizes. As for the other models, they can be ranked as LSTM, conv-LSTM, bi-LSTM, and GRU in terms of their performances. When the meteorological and pollution data sets are compared, it is seen that both data sets are sufficient for the proposed model since they revealed similar error values.

Conclusion and Future Work

In this study, Deep Flexible Sequential Model is suggested since it yielded accurate predictions 4, 12 and 24 hours before the air pollution occurs. We are proposing a flexible deep learning model composed of CNN, LSTM, and Dropout layer. The contributions of these three components are as follows. First, CNN can reveal effectively the characteristics of the data. Second, LSTM shows a good performance while unfolding long time dependencies from time series data. Third, Dropout layer brings a balance during sequential modeling.

In this study, the performance of our model was compared with those of GRU, LSTM and bi-LSTM that prioritize sequential data at PM\({}_{10}\) pollutant prediction. According to two performance metrics, MAE and RMSE, it is demonstrated that DFS Model displayed a superior performance. Under same parameters, DFS Model performed also better than Conv-LSTM model without flexible dropout layer structure (especially more salient at bigger window sizes) while predicting air pollution.

DFS model, which yielded remarkable results with four-year-long hourly data and eight features, is elaborately explained so that it can be used for air pollution forecasting at different regions. We believe that this model can also be used at different applications. We have two goals for future work: our specific goal is to collect data from other measurement stations in Istanbul and make a model fusing whole data, whereas our broad goal is to monitor the performance of DFS Model on different time series data sets beyond the air pollution problem.

References

Van Donkelaar, A. et al. Global estimates of ambient fine particulate matter concentrations from satellite-based aerosol optical depth: development and application. Environmental health perspectives 118, 847 (2010).

Martin, R. V. Satellite remote sensing of surface air quality. Atmospheric environment 42, 7823–7843 (2008).

Hoff, R. M. & Christopher, S. A. Remote sensing of particulate pollution from space: have we reached the promised land? Journal of the Air & Waste Management Association 59, 645–675 (2009).

Kalkstein, L. S. & Corrigan, P. A synoptic climatological approach for geographical analysis: assessment of sulfur dioxide concentrations. Annals of the Association of American Geographers 76, 381–395 (1986).

Lal, B. & Tripathy, S. S. Prediction of dust concentration in open cast coal mine using artificial neural network. Atmospheric Pollution Research 3, 211–218 (2012).

Raischel, F., Russo, A., Haase, M., Kleinhans, D. & Lind, P. G. Searching for optimal variables in real multivariate stochastic data. Physics Letters A 376, 2081–2089 (2012).

Wehner, B., Birmili, W., Gnauk, T. & Wiedensohler, A. Particle number size distributions in a street canyon and their transformation into the urban-air background: measurements and a simple model study. Atmospheric Environment 36, 2215–2223 (2002).

Jacob, D. J. & Winner, D. A. Effect of climate change on air quality. Atmospheric environment 43, 51–63 (2009).

Fiore, A. M. et al. Global air quality and climate. Chemical Society Reviews 41, 6663–6683 (2012).

Rasmussen, D., Hu, J., Mahmud, A. & Kleeman, M. J. The ozone-climate penalty: past, present and future. Environmental science & technology 47, 14258–14266 (2013).

Seinfeld, J. H. & Pandis, S. N. Atmospheric chemistry and physics: from air pollution to climate change (John Wiley & Sons, 2012).

Elminir, H. K. Dependence of urban air pollutants on meteorology. Science of the Total Environment 350, 225–237 (2005).

Hamidi, M., Kavianpour, M. R. & Shao, Y. Synoptic analysis of dust storms in the middle east. Asia-Pacific Journal of Atmospheric Sciences 49, 279–286 (2013).

Seinfeld, J. H. & Pandis, S. N. Atmospheric chemistry and physics: from air pollution to climate change (John Wiley & Sons, 2016).

Singh, K. P., Gupta, S., Kumar, A. & Shukla, S. P. Linear and nonlinear modeling approaches for urban air quality prediction. Science of the Total Environment 426, 244–255 (2012).

Russo, A., Raischel, F. & Lind, P. G. Air quality prediction using optimal neural networks with stochastic variables. Atmospheric Environment 79, 822–830 (2013).

Taneja, S., Sharma, N., Oberoi, K. & Navoria, Y. Predicting trends in air pollution in delhi using data mining. In Information Processing (IICIP), 2016 1st India International Conference on, 1–6 (IEEE, 2016).

Mishra, D. & Goyal, P. Development of artificial intelligence based no2 forecasting models at taj mahal, agra. Atmospheric Pollution Research 6, 99–106 (2015).

SheikhSaeedAhmad, R. U. M. N. Air Pollution Monitoring and Prediction. Intech Open (2015).

Haiming, Z. & Xiaoxiao, S. Study on prediction of atmospheric pm2. 5 based on rbf neural network. In Digital Manufacturing and Automation (ICDMA), 2013 Fourth International Conference on, 1287–1289 (IEEE, 2013).

Vong, C.-M., Ip, W.-F., Wong, P.-K. & Chiu, C.-C. Predicting minority class for suspended particulate matters level by extreme learning machine. Neurocomputing 128, 136–144 (2014).

Nieto, P. G., Lasheras, F. S., García-Gonzalo, E. & de Cos Juez, F. Pm 10 concentration forecasting in the metropolitan area of oviedo (northern spain) using models based on svm, mlp, varma and arima: a case study. Science of the Total Environment 621, 753–761 (2018).

Mingjian, F., Guocheng, Z., Xuxu, Z. & Zhongyi, Y. Study on air fine particles pollution prediction of main traffic route using artificial neural network. In Computer Distributed Control and Intelligent Environmental Monitoring (CDCIEM), 2011 International Conference on, 1346–1349 (IEEE, 2011).

Tang, M., Wu, X. & Agrawal, P. Pongpaichet, S. andJain, R. Integration of diverse data sources for spatial pm2. 5 data interpolation. IEEE Transactions on Multimedia 19, 408–417 (2017).

Campalani, P., Nguyen, T. N. T., Mantovani, S. & Mazzini, G. On the automatic prediction of pm 10 with in-situ measurements, satellite aot retrievals and ancillary data. In Signal Processing and Information Technology (ISSPIT), 2011 IEEE International Symposium on, 093–098 (IEEE, 2011).

Kurt, A. & Oktay, A. B. Forecasting air pollutant indicator levels with geographic models 3 days in advance using neural networks. Expert Systems with Applications 37, 7986–7992 (2010).

Xiao, C. et al. A spatiotemporal deep learning model for sea surface temperature field prediction using time-series satellite data. Environmental Modelling & Software 120, 104502 (2019).

Ni, L. et al. Forecasting of forex time series data based on deep learning. Procedia computer science 147, 647–652 (2019).

Shen, Z., Zhang, Y., Lu, J., Xu, J. & Xiao, G. A novel time series forecasting model with deep learning. Neurocomputing (2019).

Kim, M., Kim, Y., Sung, S. & Yoo, C. Data-driven prediction model of indoor air quality by the preprocessed recurrent neural networks. In ICCAS-SICE, 2009, 1688–1692 (IEEE, 2009).

Athira, V., Geetha, P., Vinayakumar, R. & Soman, K. Deepairnet: Applying recurrent networks for air quality prediction. Procedia Computer Science 132, 1394–1403 (2018).

Li, X. et al. Long short-term memory neural network for air pollutant concentration predictions: Method development and evaluation. Environmental Pollution 231, 997–1004 (2017).

Pak, U., Kim, C., Ryu, U., Sok, K. & Pak, S. A hybrid model based on convolutional neural networks and long short-term memory for ozone concentration prediction. Air Quality, Atmosphere & Health 11, 883–895 (2018).

Huang, C.-J. & Kuo, P.-H. A deep cnn-lstm model for particulate matter (pm2. 5) forecasting in smart cities. Sensors 18, 2220 (2018).

Du, S., Li, T., Yang, Y. & Horng, S.-J. Deep air quality forecasting using hybrid deep learning framework. arXiv preprint arXiv:1812.04783 (2018).

Mhammedi, Z., Hellicar, A., Rahman, A., Kasfi, K. & Smethurst, P. Recurrent neural networks for one day ahead prediction of stream flow. In Proceedings of the Workshop on Time Series Analytics and Applications, TSAA ’16, 25–31 (ACM, New York, NY, USA, 2016), https://doi.org/10.1145/3014340.3014345.

Wen, Y., Xu, A., Liu, W. & Chen, L. A wide residual network for sentiment classification. In Proceedings of the 2018 2Nd International Conference on Deep Learning Technologies, ICDLT ’18, 7–11 (ACM, New York, NY, USA, 2018).

Gogoi, P. & Sarma, K.K. Recurrent neural network based channel estimation technique for stbc coded mimo system over rayleigh fading channel. In Proceedings of the CUBE International Information Technology Conference, CUBE ’12, 294–298 (ACM, New York, NY, USA, 2012), https://doi.org/10.1145/2381716.2381771.

Gao, P., Yu, L., Wu, Y. & Li, J. Low latency rnn inference with cellular batching. In Proceedings of the Thirteenth EuroSys Conference, EuroSys ’18, 31:1–31:15 (ACM, New York, NY, USA, 2018) https://doi.org/10.1145/3190508.3190541

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. nature 521, 436 (2015).

Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems 6, 107–116 (1998).

Pascanu, R., Mikolov, T. & Bengio, Y. Understanding the exploding gradient problem. CoRR, abs/1211.5063 (2012).

Gers, F. A., Schraudolph, N. N. & Schmidhuber, J. Learning precise timing with lstm recurrent networks. J. Mach. Learn. Res. 3, 115–143, https://doi.org/10.1162/153244303768966139 (2003).

Bansal, T., Belanger, D. & McCallum, A. Ask the gru: Multi-task learning for deep text recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems, RecSys ’16, 107–114 (ACM, New York, NY, USA, 2016).

Chua, L. O. & Roska, T. The cnn paradigm. IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications 40, 147–156 (1993).

SharifRazavian, A., Azizpour, H., Sullivan, J. & Carlsson, S. Cnn features off-the-shelf: an astounding baseline for recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 806–813 (2014).

Asgari, M., Farnaghi, M. & Ghaemi, Z. Predictive mapping of urban air pollution using apache spark on a hadoop cluster. In Proceedings of the 2017 International Conference on Cloud and Big Data Computing, ICCBDC 2017, 89–93 (ACM, New York, NY, USA, 2017), https://doi.org/10.1145/3141128.3141131

Pardo, E. & Malpica, N. Air quality forecasting in madrid using long short-term memory networks. In International Work-Conference on the Interplay Between Natural and Artificial Computation, 232–239 (Springer, 2017).

Tsai, Y.-T., Zeng, Y.-R. & Chang, Y.-S. Air pollution forecasting using rnn with lstm. In 2018 IEEE 16th Intl Conf on Dependable, Autonomic and Secure Computing, 16th Intl Conf on Pervasive Intelligence and Computing, 4th Intl Conf on Big Data Intelligence and Computing and Cyber Science and Technology Congress (DASC/PiCom/DataCom/CyberSciTech), 1074–1079 (IEEE, 2018).

Chaudhary, V., Deshbhratar, A., Kumar, V. & Paul, D. Time series based lstm model to predict air pollutant’s concentration for prominent cities in india (2018).

Kramer, O. Scikit-learn. In Machine Learning for Evolution Strategies, 45–53 (Springer, 2016).

Kaya, K. & Öğüdücü, Ş. G. A binary classification model for pm 10 levels. In 2018 3rd International Conference on Computer Science and Engineering (UBMK), 361–366 (IEEE, 2018).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Acknowledgements

We’re thankful to the Turkish State Meteorological Service and Istanbul Metropolitan Municipality for providing the meteorological and traffic data used in this study. The authors are supported by the Scientific Research Project Unit of Istanbul Technical University, Project Number: MOA-2019-42321.

Author information

Authors and Affiliations

Contributions

S.G.O. provided the data and took part mainly in Introduction and Literature review section. K.K. completed Model Implementation and Experimental Results. All figures and tables are prepared by K.K. and she also took part in forming of Introduction and Literature review section. The authors collaborated in Methodology part and they jointly decided on the proposed model after long discussions.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kaya, K., Gündüz Öğüdücü, Ş. Deep Flexible Sequential (DFS) Model for Air Pollution Forecasting. Sci Rep 10, 3346 (2020). https://doi.org/10.1038/s41598-020-60102-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-60102-6

This article is cited by

-

Hybridization of rough set–wrapper method with regularized combinational LSTM for seasonal air quality index prediction

Neural Computing and Applications (2024)

-

Analysis of deep learning approaches for air pollution prediction

Multimedia Tools and Applications (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.