Abstract

Thyroid nodules are a common clinical problem. Ultrasonography (US) is the main tool used to sensitively diagnose thyroid cancer. Although US is non-invasive and can accurately differentiate benign and malignant thyroid nodules, it is subjective and its results inevitably lack reproducibility. Therefore, to provide objective and reliable information for US assessment, we developed a CADx system that utilizes convolutional neural networks and the machine learning technique. The diagnostic performances of 6 radiologists and 3 representative results obtained from the proposed CADx system were compared and analyzed.

Similar content being viewed by others

Introduction

Advances in high-resolution ultrasonography (US) along with increased access to health check-up services and increased medical surveillance have led to a massive escalation in the number of detected thyroid nodules, especially small thyroid nodules, and thyroid nodules have been detected in up to 68% of adults1. US is recognized as the best diagnostic tool for thyroid nodules due to its sensitivity and accuracy. However, US is an operator-dependent and subjective imaging modality2. While interobserver variability (IOV) is very low among experienced physicians3, poor agreement was documented when US findings of thyroid nodules were interpreted by less experienced physicians4.

In order to support the decision-making process of physicians by adding objective opinions, computer-aided diagnosis (CADx) has been introduced and developed over the years5,6,7. CADx provides physicians with second opinions from computational and statistical perspectives so that physicians can refer to the information obtained through CADx and use it as supplementary data to reach their final decision. In conventional CADx systems, feature extraction and classification are common processes. Feature extraction involves extracting information and generating features from original data. Classical techniques for feature extraction are based on mathematical and statistical approaches, and handcrafted features including textural and morphological properties are extracted. Textural features include information such as contrast, coarseness, roughness, and intensity and morphological features include information such as perimeter, circularity, elongation, and compactness8,9,10. Classification integrates the extracted features and then estimates the class of data. Many classifiers are variations of Support Vector Machine (SVM), decision tree, K-nearest neighbor, etc11. Both feature extraction techniques and classification methods have been widely used for thyroid US images5,12,13,14,15,16,17,18,19,20,21.

However, extracting meaningful features often results in loss of good characteristics due to a heavy dependence on problems. Therefore, series of trial and error are required to get optimal results and this in turn can increase operational costs. Deep learning has attracted attention to recent image classification problems by showing outstanding results in the ImageNet Large Scale Visual Recognition Competition (ILSVRC). Early in the 2010s, feature extraction based on deep learning was introduced as big data began to be utilized in the medical field22,23,24.

The deep learning method not only generates non-handcrafted features from original data but also acts as a classifier. Recently, many studies have applied deep learning to medical image analysis. Convolutional Neural Networks (CNNs), a popular deep learning structure, are widely used for this analysis25. Typically, good learning processes require big data which are not often available, especially in the medical imaging field. For this reason, we use CNN models trained by huge amounts of data with various classes in a process called transfer learning26,27.

Previous studies have applied deep learning methods to the classification of thyroid nodules on US6,28,29. Other studies have also focused on CNN-based features and have applied them to existing classifiers30,31. In this study, we employed various trained CNNs to combine features and to use them to diagnose thyroid nodules on US through classifiers, and compared the diagnostic performance of CNNs with that of radiologists with various levels of experience.

Results

We performed 2 machine learning algorithms which were trained with the combined features from 6 pre-trained CNNs to classify thyroid nodules on US images. Representative outcomes were then selected and compared with the diagnostic performances of the 6 radiologists. We first examined the performances of the fine-tuned CNNs. Afterwards, the proposed combinations for CNN-based feature extraction and classifier results were presented and analyzed. Here, accuracy (Acc), specificity (Spe), and sensitivity (Sen) were the three performance evaluation criteria and calculated as follows.

where TP (true positive) is the number of nodules correctly predicted as malignant, TN (true negative) the number of nodules correctly predicted as benign, FP (false positive) the number of nodules inaccurately predicted as malignant, and FN (false negative) the number of nodules inaccurately predicted as benign. Acc, Spe, Sen and AUC were expressed as values multiplied by 100 in the tables. The diagnostic results with 150 test images interpreted by six radiologists who had different levels of experience are presented for comparison (see Table 1).

Conventional approaches

The conventional CNN results obtained without separating feature extraction and classification processes are presented in Table 2. Furthermore, in Table 3, we presented the performances observed when the features extracted from a single CNN and one of the SVM/RF classifiers were used (details of CNNs and classifiers can be found in Supplementary Information). These results were compared with the results obtained with the proposed method.

As depicted in Table 3, AlexNet, OverFeat, and VGG showed that features extracted from fine-tuned CNNs and SVM or RF classification using these features produced similar or better results than the ones in Table 2. Conversely, VGG-verydeep, ResNet, Inception showed that a SVM/RF classifier associated with features extracted from pre-trained CNNs led to similar or worse results than fine-tuned CNN in Table 2. Taken together, feature extraction techniques based on CNNs combined with SVM/RF classifiers may have worse results than fine-tuned CNNs (Table 2) with deeper layers. Otherwise, there is a possibility that the training dataset was not large enough to tune a huge amount of parameters. Thus, fine-tuning with a small dataset may harm good parameters which can generate useful and objective features. When classifiers were compared, RF often performed better than SVM.

Feature concatenation

Based on the idea that different structures in CNN will provide different features, we selected effective features for each CNN based on the results shown in Table 3 and concatenated them. We chose CNN features extracted from AlexNet32-fc2 with fine-tuning ([A]), OverFeat33-fc2 with fine-tuning ([O]), VGG34-fc1 with fine-tuning ([V]), VGG-verydeep35-fc2 without fine-tuning ([Vv]), ResNet36-avg without fine-tuning ([R]), and Inception37-avg without fine-tuning ([I]). Table 4 summarizes the results of the selected features. Even though AlexNet, OverFeat, VGG, VGG-verydeep allow self-feature-concatenations since features can be extracted from two different layers in a single net, we decided not to use them due to there being almost no effect with self-concatenation. We expected feature concatenation to improve results compared to when it was not performed (Table 3), so we added a new performance criterion \(\tilde{J}\) which is calculated as follows

The quantity \(\tilde{J}\) indicates whether or not the criteria of feature concatenation led to better results than individual criteria. An asterisk (*) indicated performance values of feature concatenations that had a \(\tilde{J}\) smaller than 1. Table 5 shows the feature concatenations of two or three CNN features and Table 6 shows the feature concatenations of four or more CNN features, respectively. One can tell from Table 5 that most of the feature concatenations provide improved results compared with individual results, with the word ‘individual’ henceforth indicating results obtained using features from a single CNN in Table 3. With SVM, all results except for [VvI] showed improved accuracy than individual results. Notable results were found when we applied feature concatenations of four or more CNN features. In Table 6, all accuracies and sensitivities improved compared to individual cases. For instance, minimum accuracy and sensitivity was 90.0% and 91.0%, and maximum accuracy and sensitivity was 94.0% and 99.0%, respectively. This shows that accuracy and sensitivity are guaranteed to improve when feature concatenations of more various CNN features are applied.

Classification ensemble

In this subsection, the same features which were named as [A],[O],[V],[Vv],[R], and [I] in the previous section were used again. We first executed a classification ensemble of SVM and RF results with single CNN-based features and these results are written in italic, [A],[O],[V],[Vv],[R], and [I]. To compare these with individual results (the results found using features from a single CNN) in Table 3, we defined \(\hat{J}\) as follows

The value \(\hat{J}\) is an indicator of the performance of the classification ensemble. An asterisk (*) was used to mark performance values of classification ensembles that had a \(\hat{J}\) smaller than 1. As shown in Table 7, several hierarchical steps of the classification ensemble affected overall accuracies while the classification ensemble of SVM and RF for a single CNN did not improve accuracies significantly.

Combination of feature concatenation and classifier ensemble

A combination of the two previously proposed approaches was also performed. For the feature concatenation, we used the results of Table 6 and then we applied the classification ensemble of SVM and RF results. As seen in Tables 7 and 8, feature concatenation plays a key role while the classifier ensemble merely affects the results.

Diagnostic performances of radiologists and CNNs

The diagnostic performances of the 6 radiologists and 3 CNN-combinations for the diagnosis of thyroid malignancy are shown in Table 1. We chose three types of CNN-based feature concatenations and classifier ensembles from Tables 6 and 7 which were shaded. CNN 1 stands for the results obtained from trained SVM using features from [A], [V], [Vv] and [R]. CNN 2 represents RF classifier results trained with [O], [V], [Vv] and [R]-based features. CNN 3 corresponds to the results from the ensemble outcome of SVM and RF which were both trained with concatenated features from [O], [V] and [I]. Experienced radiologists showed higher accuracies than less experienced radiologists (Table 1). Compared to the diagnostic performances of the two experienced radiologists, differences in accuracies were not statistically significant (P = 0.309). Faculty 1 showed significantly higher sensitivity than faculty 2 (P < 0.001). In contrast, faculty 2 showed significantly higher specificity than faculty 1 (P = 0.006). Accuracies of faculty 1, faculty 2, CNN 1, CNN 2, and CNN 3 were 78%, 82.7%, 94%, 94%, and 94%. Accuracies of the 3 CNNs were significantly higher than those of the 2 faculties (Table 1). Specificities of the 3 CNNs were significantly higher than that of faculty 1 (90% of CNN 1, 94% of CNN 2, 86% of CNN 3 vs 52% of faculty 1, P < 0.001) (Table 9). Sensitivities of the 3 CNNs were significantly higher than that of faculty 2 (96% of CNN 1, 94% of CNN 2, 98% of CNN 3 vs 76% of faculty 2, P < 0.001) (Table 9).

Interobserver variability and agreement of US assessments for predicting thyroid malignancy among 6 radiologists and between 2 radiologists with similar experience levels

Interobserver agreement to diagnose thyroid malignancy among the 6 radiologists was 0.465, which meant a moderate degree of agreement Table 10). Interobserver agreements for the differentiation of thyroid nodules was 0.387 (fair agreement) for the two faculties, 0.663 (substantial agreement) for the two fellows, and 0.418 (moderate agreement) for the two residents.

Discussion

We have proposed a CADx system which can provide reliable supplementary and objective information to help radiologists in the decision-making process. More precisely, we focused on constructing an efficient and accurate CADx system for thyroid US image classification using deep learning and this was achieved by concatenating features extracted from various pre-trained CNNs and training classifiers based on those features. Six pre-trained CNNs, AlexNet, OverFeat, VGG, VGG-verydeep, ResNet, and Inception, were utilized in feature extraction and two classifiers, SVM and RF, were used. In the overall process, 594 training and 150 test images were used. Table 2 shows that the results of pre-trained CNNs with fine-tuning were not much better than those of the radiologists (Table 1). A past study38 also found similar results. The pre-trained CNN, VGG-F, was utilized to classify the US images of thyroid nodules. The study only focused on using a single CNN to determine the label of each test image.

Our approach suggested using pre-trained CNNs only for feature extraction and training them with SVM or RF classifiers. More importantly, we proposed combining features from various CNNs (feature concatenation) and combining the results from different classifiers (classifier ensemble). The different structures of various CNNs allow the creation of different features, which motivates our approach. Several factors have to be considered before CNN-based feature extraction is used in CADx: CNN selection, performance of fine-tuning, extracted layer selection, and classifier selection. We conducted all possible combinations considering these factors and the results are reported in this paper. The pre-trained CNNs, AlexNet, OverFeat, VGG, and VGG-verydeep have two feature extractable layers in which self-feature-concatenation was possible. But, it turned out that self-feature-concatenation was not very effective. In AlexNet, OverFeat, and VGG, feature extraction with fine-tuning led to results with higher accuracy. On the contrary, for VGG-verydeep, ResNet, and Inception, the results of feature extraction without fine-tuned CNNs were generally better than those obtained with fine-tuned CNNs. Since these latter three CNNs have deeper layers, we supposed that the extracted features from the original pre-trained CNNs were sufficiently objective and fine-tuning may have degraded meaningful features instead.

When a single CNN was used to extract features, the results (Table 3) were almost the same with fine-tuned pre-trained CNNs in Table 2. Moreover, a classifier ensemble using features from a single CNN (from the first to the sixth row in the first column of Table 7) had results that were not that different from those obtained without a classifier ensemble, as can be seen in Table 3. Based on the results in Tables 5–7, we conclude that feature concatenation with more CNNs produces better results while a classifier ensemble does not.

When the diagnostic performances of the 6 radiologists and 3 CNN-combinations were analyzed, accuracies of the 3 CNN-combinations (all 94%) were significantly higher than those (78% and 82.7%) of the 2 experienced radiologists. Specificities were significantly higher with the 3 CNN-combinations (86%~94%) than that (52%) of faculty 1. The 3 CNN-combinations (94%~98%) also had significantly higher sensitivities than that (76%) of faculty 2. Furthermore, the interobserver agreement for the final assessment among the 6 radiologists was fair (κ = 0.387) for the 2 faculties, substantial (κ = 0.663) for the 2 fellows, moderate (κ = 0.418) for the 2 residents, and moderate (κ = 0.465) for all 6 radiologists (2 faculties, 2 fellows, and 2 residents). Therefore, a CADx system using CNN-combinations may help radiologists make decisions by overcoming interobserver variability when assessing thyroid nodules on US.

In our opinion, feature concatenation with many CNNs shows promising performance and we expect this approach to be a potential supplementary tool for radiologists. In the future, we plan to examine the proposed method with more data and with medical images from other devices such as MR and CT. Another aiming challenge is developing an efficient localization scheme using concatenating methodology. Our research has excluded the localization task since all US images in this study had a square region-of-interest (ROI) that was depicted by experienced radiologists (with all US images being either cytologically proven or sugically confirmed). There has been research on a CNN-based framework conducting both detection and classification. For example, a multi-task cascade CNN framework was proposed39 to detect and recognize nodules and the framework was able to fuse different scales of features in a single module. This spatial pyramid module seems promising as a detection and classification scheme can be established with features from multiple CNNs.

Methods

Patients

Institutional review board (IRB) approval was obtained for this retrospective study and the requirement for informed consent for review of patient images and medical records was waived. The patients in the current cohort38 had been included in a previous study that used a computerized algorithm to predict thyroid malignancy with a deep CNN to differentiate malignant and benign thyroid nodules on US. Unlike previous studies, we separated the feature extraction and classification processes to enhance the efficiency and accuracy of the previously studied deep CNN algorithms. Multiple deep CNNs were only used for feature extraction and conventional machine learning algorithms were applied for classification.

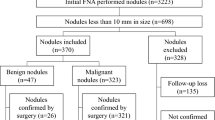

From May 2012 to February 2015, 1576 consecutive patients who underwent US and subsequent thyroidectomy were recruited. Of those, 592 small nodules from 522 patients were excluded because they were microcalcifications. Finally, we included 589 small nodules equal to or larger than 1 cm and less than 2 cm on US from 519 patients (426 women and 93 men, 47.5 years ± 12.7). The mean size of the 589 nodules was 12.9 mm ± 2.5 (range, 10–19 mm). All of the nodules were confirmed by histopathological examination after surgical excision. Of the 396 malignant nodules, 376 (94.9%) were conventional papillary thyroid carcinoma (PTC), 14 (3.5%) were the follicular variant of PTC, 4 (1%) were the diffuse sclerosing variant of PTC, 1 (0.3%) was the Warthin-like tumor variant of PTC, and 1 (0.3%) was a minimally invasive follicular carcinoma. For the 193 benign nodules, 154 (80%) were adenomatous hyperplasia, 25 (13%) were lymphocytic thyroiditis, 8 (4%) were follicular adenoma, 2 (1%) were Hurthle cell adenoma, 2 (1%) were hyaline trabecular tumors, 1 (0.5%) was a hyperplastic nodule, and 1 (0.5%) was a calcific nodule without tumor cells. We designated 439 (142 benign and 297 malignant) US images as the training dataset and 150 (50 benign and 100 malignant) US images as the test dataset. To balance the training set, data augmentation was applied for the benign training data by left-right flipping and up-down flipping so that 155 additional benign images were added to the training dataset. As a result, a total of 594 US images were used as the training data and 150 US images were used as the test data. All US images were labeled as benign or malignant and cropped by a ROI.

Image acquisition

One of 12 physicians dedicated to thyroid imaging performed US with a 5-to 12-MHz linear transducer (iU22; Philips Healthcare, Bothell, WA) or a 6–13-MHz linear transducer (EUB-7500; Hitachi Medical, Tokyo, Japan). A representative US image was obtained for each tumor considering US findings by K.J.Y who had 16 years of experience in analyzing thyroid US images. The images were stored as JPEG images in the picture archiving and communication system. Square regions of interest (ROIs) were drawn using the Paint program of Windows 7.

Image analyses

A total of 150 US images (50 benign and 100 malignant) were reviewed by two faculties (K.H.J. and M.H.J.) with 8 and 16 years of experience in thyroid imaging, two second-year fellows (B.J.H. and H.S.), and two second-year residents (S.J.W. and Y.J.), retrospectively. Each physician categorized the nodules as ‘probably benign‘ or ‘suspicious malignant‘ based on the criteria from Kim et al.40. which classified a nodule as ‘suspicious malignant‘ when any of the suspicious US features (markedly hypoechogenicity, microlobulated or irregular margins, microcalcifications, and taller-than-wide shape) were present. In Figs. 1 and 2, two clinical cases were introduced.

An ultrasonography (US) image of a 50-year-old woman with an incidentally detected thyroid nodule discovered on screening examination that shows a 1.2-cm sized hypoechoic solid nodule with eggshell calcifications (arrows). All 6 radiologists interpreted the nodule as a benign. In contrast, 3 CNN-combinations interpreted it as cancer. The nodule was diagnosed as papillary thyroid cancer by surgery.

An ultrasonography (US) image of a left thyroid nodule in a 77-year-old woman who was confirmed with cancer in the right thyroid gland. A 1-cm sized isoechoic nodule with internal echogenic spots was seen (arrows). Four radiologists (1 faculty, 1 fellow, and two residents) interpreted the nodule as cancer. In contrast, 3 CNN-combinations interpreted it as benign. The nodule was diagnosed as adenomatous hyperplasia.

Feature extraction using pre-trained CNN

In CNN, high-level features were generated as images passed through multiple layers. Here, two different approaches were used when the features were extracted. One was to collect the generalized (or objective) features from pre-trained CNNs directly (Fig. 3(a)). The other was to train pre-trained CNNs with modifications of the last layer to fit the given data (Fig. 3(b)). In this process, pre-trained parameters were considered as initial information and these parameters were fine-tuned by the training dataset so that they would carry information about the given training data. The overall procedure was transfer learning and fitted features were extracted from fine-tuned CNNs. The pre-trained CNNs, AlexNet32, OverFeat-accurate33, VGG-F34, VGG-1935, ResNet-5036, and Inception-v337, were used to extract features.

Feature concatenation

Features extracted from deeper layers are compressive, so discriminative information may have been missed. Also, different CNNs might have differentiated information. While some CNNs (AlexNet, VGG, VGG-verydeep) had several possible feature extractable layers, the others had only one feature layer to extract features. To catch the sensible information, we examined features extracted from different layers in a particular CNN and those from different CNNs in various combinations. For instance, Fig. 4 describes the feature concatenation of features extracted from three different CNNs.

Classification ensemble

Once a feature set was ready, a classifier was trained to establish the results. We employed two classifiers, SVM and RF, to produce results with different criteria. The two classifiers may agree but sometimes they give conflicting results. To observe an objective result, we applied the classification ensemble by averaging the results from the classifiers as follows.

For a given input image x, let \({{\rm{f}}}_{{\rm{r}}}({\bf{x}}),\,r=1,\cdots ,R\)be trained with classifiers and let \({{\bf{p}}}_{{\rm{r}}}=\{{p}_{(r,0)},\,{p}_{(r,1)}\}\) be the output of \({{\rm{f}}}_{{\rm{r}}}({\bf{x}})\), where \({p}_{(r,0)}\) and \({p}_{(r,1)}\) are the probabilities that the feature in question corresponds respectively to benign and malignant. Then, the outputs from each classifier were averaged to generate new probability results \({\hat{p}}_{0}\,\)and \({\hat{p}}_{1}\) as follows

and this is the ‘classification ensemble’. In Fig. 5(a,b) delineate the abovementioned process.

This classification ensemble can be extended to cases using multiple feature sets as well. Let \([{{\rm{N}}}_{{\rm{j}}}],\,{\rm{j}}=1,\cdots ,{\rm{M}}\) be the feature set extracted from CNNj (or the feature set obtained from j-th feature concatenation), then \({{\rm{f}}}_{{\rm{r}}}^{j}({\bf{x}})\) is the trained classifier using features in \([{{\rm{N}}}_{{\rm{j}}}]\) and \({{\bf{p}}}_{{\rm{r}}}^{j}=\{{p}_{(r,0)}^{j},\,{p}_{(r,1)}^{j}\}\)is the corresponding probability result. Then, a more objective result can be obtained by averaging the ensemble results through the procedure below

The above approach does not require any additional training processes because the ensemble method only uses results already obtained. Figure 5 illustrates classification ensembles with SVM and RF using two feature sets.

Data and statistical analysis

To evaluate the performances of radiologists and CNNs for predicting thyroid malignancy, sensitivity, specificity, and accuracy with 95% confidence intervals were estimated and compared with the logistic regression using the generalized estimating equation. We calculated the interobserver variability. Fleiss’s kappa statistics were used for interobserver variability among the 6 radiologists and Cohen’s kappa statistics were used for interobserver variability between the two radiologists with similar levels of experience. To obtain 95% confidence intervals of kappa statistics, the bootstrap method was used with resampling done 1000 times. We interpreted kappa statistics as follows: 0.01–0.20 (slight agreement), 0.21–0.40 (fair agreement), 0.41–0.60 (moderate agreement), 0.61–0.80 (substantial agreement) and 0.81–0.99 (almost perfect agreement41).

P values less than 0.05 were considered statistically significant. Data analysis was performed using R version 3.5.1 (R Foundation for Statistical Computing, Vienna, Austria).

References

Guth, S., Theune, U., Aberle, J., Galach, A. & Bamberger, C. J. E. J. O. C. I. Very high prevalence of thyroid nodules detected by high frequency (13 MHz) ultrasound examination. 39, 699–706 (2009).

Seo, J. Y. et al. Can ultrasound be as a surrogate marker for diagnosing a papillary thyroid cancer Comparison with BRAF mutation analysis. 55, 871–878 (2014).

Choi, S. H., Kim, E.-K., Kwak, J. Y., Kim, M. J. & Son, E. J. J. T. Interobserver and Intraobserver Variations in Ultrasound Assessment of Thyroid Nodules. Thyroid 20, https://doi.org/10.1089/thy.2008.0354 (2010).

Kim, S. H. et al. Observer Variability and the Performance between Faculties and Residents: US Criteria for Benign and Malignant Thyroid Nodules. Korean Journal of Radiology 11, 149–155, https://doi.org/10.3348/kjr.2010.11.2.149 (2010).

Lim, K. J. et al. Computer-aided diagnosis for the differentiation of malignant from benign thyroid nodules on ultrasonography. 15, 853–858 (2008).

Gao, L. et al. Computer-aided system for diagnosing thyroid nodules on ultrasound: A comparison with radiologist-based clinical assessments. 40, 778–783 (2018).

Doi, K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. J Computerized medical imaging graphics 31, 198–211 (2007).

Haralick, R. M., Shanmugam, K. J. I. T. O. S., man, & cybernetics. Textural features for image classification. 610–621 (1973).

Haralick, R. M. & Shapiro, L. G. J. P. R. Glossary of computer vision terms. 24, 69–93 (1991).

Glasbey, C. A. & Horgan, G. W. Image analysis for the biological sciences. Vol. 1 (Wiley Chichester, 1995).

Kotsiantis, S. B., Zaharakis, I. & Pintelas, P. J. E. A. I. A. I. C. E. Supervised machine learning: A review of classification techniques. 160, 3–24 (2007).

Tsantis, S. et al. Development of a support vector machine-based image analysis system for assessing the thyroid nodule malignancy risk on ultrasound. 31, 1451–1459 (2005).

Chang, C.-Y., Tsai, M.-F. & Chen, S.-J. In Neural Networks, 2008. IJCNN 2008.(IEEE World Congress on Computational Intelligence). IEEE International Joint Conference on. 3093–3098 (IEEE).

Tsantis, S., Dimitropoulos, N., Cavouras, D., Nikiforidis, G. J. C. M. I. & Graphics. Morphological and wavelet features towards sonographic thyroid nodules evaluation. 33, 91–99 (2009).

Chang, C.-Y., Chen, S.-J. & Tsai, M.-F. J. P. R. Application of support-vector-machine-based method for feature selection and classification of thyroid nodules in ultrasound images. 43, 3494–3506 (2010).

Ma, J., Luo, S., Dighe, M., Lim, D.-J. & Kim, Y. In Ultrasonics Symposium (IUS), 2010 IEEE. 1372–1375 (IEEE).

Liu, Y. I., Kamaya, A., Desser, T. S. & Rubin, D. L. J. A. J. O. R. A bayesian network for differentiating benign from malignant thyroid nodules using sonographic and demographic features. 196, W598–W605 (2011).

Luo, S., Kim, E.-H., Dighe, M. & Kim, Y. J. U. Thyroid nodule classification using ultrasound elastography via linear discriminant analysis. 51, 425–431 (2011).

Zhu, L.-C. et al. A model to discriminate malignant from benign thyroid nodules using artificial neural network. 8, e82211 (2013).

Song, G., Xue, F. & Zhang, C. J. J. O. U. I. M. A model using texture features to differentiate the nature of thyroid nodules on sonography. 34, 1753–1760 (2015).

Chang, Y. et al. Computer-aided diagnosis for classifying benign versus malignant thyroid nodules based on ultrasound images: A comparison with radiologist-based assessments. 43, 554–567 (2016).

Donahue, J. et al. In International conference on machine learning. 647–655.

Zeiler, M. D. & Fergus, R. In European conference on computer vision. 818–833 (Springer).

Sharif Razavian, A., Azizpour, H., Sullivan, J. & Carlsson, S. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops. 806–813.

Litjens, G. et al. A survey on deep learning in medical image analysis. 42, 60–88 (2017).

Pan, S. J. & Yang, Q. J. I. T. O. K. & engineering, d. A survey on transfer learning. 22, 1345–1359 (2010).

Oquab, M., Bottou, L., Laptev, I. & Sivic, J. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1717–1724.

Ma, J., Wu, F., Zhu, J., Xu, D. & Kong, D. J. U. A pre-trained convolutional neural network based method for thyroid nodule diagnosis. 73, 221–230 (2017).

Zhu, Y., Fu, Z. & Fei, J. In Computer and Communications (ICCC), 2017 3rd IEEE International Conference on. 1819–1823 (IEEE).

Liu, T., Xie, S., Yu, J., Niu, L. & Sun, W. In Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on. 919–923 (IEEE).

Chi, J. et al. Thyroid nodule classification in ultrasound images by fine-tuning deep convolutional neural network. J Journal of digital imaging 30, 477–486 (2017).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. In Advances in neural information processing systems. 1097–1105.

Sermanet, P. et al. Overfeat: Integrated recognition, localization and detection using convolutional networks. (2013).

Chatfield, K., Simonyan, K., Vedaldi, A. & Zisserman, A. J. A. P. A. Return of the devil in the details: Delving deep into convolutional nets (2014).

Simonyan, K. & Zisserman, A. J. A. P. A. Very deep convolutional networks for large-scale image recognition. (2014).

He, K., Zhang, X., Ren, S. & Sun, J. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. In Proceedings of the IEEE conference on computer vision and pattern recognition. 2818–2826.

Ko, S. Y. et al. A deep convolutional neural network for the diagnosis of thyroid nodules on ultrasound. Head & Neck, to be appeared, https://doi.org/10.1002/hed.25415 (2019).

Song, W. F. et al. Multitask Cascade Convolution Neural Networks for Automatic Thyroid Nodule Detection and Recognition. Ieee J Biomed Health 23, 1215–1224 (2019).

Kim, E. K. et al. New sonographic criteria for recommending fine-needle aspiration biopsy of nonpalpable solid nodules of the thyroid. AJR Am J Roentgenol 178, 687–691, https://doi.org/10.2214/ajr.178.3.1780687 (2002).

Landis, J. R. & Koch, G. G. The measurement of observer agreement for categorical data. Biometrics 33, 159–174 (1977).

Acknowledgements

This work was supported by the National Research Foundation of Korea grant NRF-2015R1A5A1009350. This study was also supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) by the Ministry of Education (2016R1D1A1B03930375). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Authors and Affiliations

Contributions

J.Y.K. – data collection, image segmentation, manuscript writing, conceived, coordinated, and directed all study activities, E.L, H.H – image post-processing, curation of the radiomics-based image features, implementation of CNN feature extractions and classifications, manuscript writing, H.J.K., H.J.M., J.H.B., S.H., J.S., J.Y. - acquisition of imaging data and manuscript review, K.H – statistical analysis. All authors read and approved the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, E., Ha, H., Kim, H.J. et al. Differentiation of thyroid nodules on US using features learned and extracted from various convolutional neural networks. Sci Rep 9, 19854 (2019). https://doi.org/10.1038/s41598-019-56395-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-56395-x

This article is cited by

-

Deep learning-based CAD system design for thyroid tumor characterization using ultrasound images

Multimedia Tools and Applications (2023)

-

A comparison of artificial intelligence versus radiologists in the diagnosis of thyroid nodules using ultrasonography: a systematic review and meta-analysis

European Archives of Oto-Rhino-Laryngology (2022)

-

Application of Artificial Intelligence Computer-Assisted Diagnosis Originally Developed for Thyroid Nodules to Breast Lesions on Ultrasound

Journal of Digital Imaging (2022)

-

Diagnosing thyroid nodules with atypia of undetermined significance/follicular lesion of undetermined significance cytology with the deep convolutional neural network

Scientific Reports (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.