Abstract

The specific connectivity of a neuronal network is reflected in the dynamics of the signals recorded on its nodes. The analysis of how the activity in one node predicts the behaviour of another gives the directionality in their relationship. However, each node is composed of many different elements which define the properties of the links. For instance, excitatory and inhibitory neuronal subtypes determine the functionality of the connection. Classic indexes such as the Granger causality (GC) quantifies these interactions, but they do not infer into the mechanism behind them. Here, we introduce an extension of the well-known GC that analyses the correlation associated to the specific influence that a transmitter node has over the receiver. This way, the G-causal link has a positive or negative effect if the predicted activity follows directly or inversely, respectively, the dynamics of the sender. The method is validated in a neuronal population model, testing the paradigm that excitatory and inhibitory neurons have a differential effect in the connectivity. Our approach correctly infers the positive or negative coupling produced by different types of neurons. Our results suggest that the proposed approach provides additional information on the characterization of G-causal connections, which is potentially relevant when it comes to understanding interactions in the brain circuits.

Similar content being viewed by others

Introduction

The concept of connectivity in neuroscience is closely related to two main subjects: anatomical and functional networks. The first one refers to the relatively static physical links between neurons, the so called wiring diagram of the brain network. It is mainly composed by neuronal axons organized in fibre tracts and, while it changes in long-term (ontogenetic) time scales (during development, maturation and aging of organisms), it is considered stable in most studies in healthy adults investigating fast brain dynamics (from milliseconds to hours to days or even months). Structural networks can be quantified by histological techniques and microscopy, then providing directionality in the connections or a directed graph, or estimated by non-invasive imaging techniques such as diffusion tensor imaging, producing undirected graphs1,2. This is the realm of functional connectivity, which studies the statistical interdependencies between the activities of different areas and is commonly measured with indexes like correlation or coherence. As such, functional connectivity produces un-directed functional graphs. There is yet another definition for connectivity, one concerned with functional interactions but in a directed manner, named, effective connectivity. This connectivity considers the effect of one node over another, establishing causality in the interaction. In recordings of time series, this connectivity is commonly estimated under the assumption that the sender (cause) must precede the receiver (effect) in time3. Techniques such as the Local Field Potential (LFP) and the electroencephalography (EEG), measure the postsynaptic potential of hundreds of neurons, reflecting the average of their electrical activity. The synchronized spiking of cell assemblies gives rise to oscillations in extracellular recordings4,5,6 (for example, theta and gamma rhythms in the hippocampus7,8, with distinct proposed roles). Understanding the rhythms’ properties, couplings, and the mechanisms that generate them can have direct clinical benefits, such as in the diagnosis and treatment of patients with epilepsy and other neurological disorders9,10.

Studies of the causal relationships between time-varying signals were initiated by Wiener11, who hypothesized that if the forecasting of a signal can be improved by incorporating information from the past values of another signal, then there is a causal influence of the latter on the former. This idea was later implemented by Granger through the use of autoregressive (AR) models, a methodology named after him as the Granger causality12,13 (GC). This method has become widely applied in many fields since then. In neuroscience, it has boosted the characterization of brain circuits, revealing remarkable findings as the different pathways for alpha and gamma activities in the visual cortex14, the leading role of the gamma waves in the right over the left hippocampus15 or the bidirectional communication between theta rhythms in the medial septum and the hippocampus16,17.

Despite its extensive use, there are some limitations and misunderstandings of GC18 which, although partially solved in modern approaches19,20,21, have generated uncertainty about its efficacy. Specifically, two main considerations have been recently pointed out as a limit to the application and interpretation of the GC18. First, the GC may be severely biased and of high variance when estimating separated and independent full and partial AR models. Even if the full model is a finite model order regression, the reduced model may be an infinite order process or have a moving average component, being a vector-autoregressive moving average (VARMA) process. These processes are poorly approximated by AR models and result in biased results of connectivity. Nevertheless, this problem has been previously acknowledge22,23 and solved by methods that do not require reduced models21,23,24. Other approaches like state-space (SS) processes25,26 characterize an observed time series by an unobserved state driven by a stochastic process, and are equivalent to VARMA processes27,28, solving the problem of the moving average component25,26. Second, the GC is influenced by the dynamics of the sender and the channel, but not by the receiver18. This limitation has been argued to be a misunderstanding of the concepts of causality and functional connectivity. Methods like GC stablish a statistical relation based on observed responses and are measurements of directed functional connectivity. On the other hand, the identification of the mechanisms that produce an effect in a neural system are referred as causality or effective connectivity and they cannot be achieved solely by indexes like GC and require structural information, included in other methodologies as the dynamic causal modelling (DCM)3,19,29,30. While it is true that it should be used carefully, the GC is a reliable methodology to infer brain connectivity and new approaches are continuously being developed to overcome its limitations19,20,21.

Several other methodologies have been developed to measure the directed functional connectivity in brain networks, considering different features of the time series31,32. For instance, Baccalá and Sameshima defined the direct coherence33 (DC) and the partial directed coherence34 (PDC), two indices based on AR models which allowed a spectral characterization of the connectivity, where the former measures the power that spreads from one structure to another (either directly or indirectly) and the latter represents only the direct pairwise interaction. Note that the distinction between direct (partialized) and indirect is made only with the observed variables, that is, with the signals included in the model. As most neuroscience data are nonstationary and the statistical connectivity may vary along the time, Dhamala and collaborators proposed a spectral time varying GC based on nonparametric statistics35. Instead of solving an AR model, they derived the frequency components directly through Fourier and wavelet transform of the data, bypassing the parametric data modelling. Other methodologies to estimate the directionality without AR models are based on the information theory, as the transfer entropy (TE)36 or the phase transfer entropy (PhTE)37. The main advantage is the lack of linear modelling allowing them to detect nonlinearities in the connectivity. On the contrary, they do not have a spectral representation and require long time series to compute the indices.

Brain computations are realized in a densely but sparsely connected network of excitatory and inhibitory neurons. Integration of synaptic inputs and firing of excitatory neurons are often equated with information encoding and transmission, and respectively, with the inhibitory activity setting the pace of communication by coordinating the nodes and establishing windows of opportunity for the transmission38,39,40,41. Current connectivity indexes quantify the degree of interaction and directionality between brain signals, but they cannot elucidate their functional role without precise knowledge of the underlying wiring diagrams. Here, we propose going one step further by characterizing whether the activity of the receiver follows directly or inversely the dynamics of the sender. Therefore, we define the relationship as positive or negative GC respectively, by using selected elements of AR models. We validate our method in virtual networks, where brain signals are simulated as the extracellular currents, similar to the experimentally measured LFP. Under the theoretical assumption that excitatory and inhibitory activities reflect direct and inverse relationships between populations, we test the efficiency of our approach in different scenarios, combining solely excitatory and inhibitory projections with mixing activity of both sources.

In the first section of this paper, we review the concept of GC and present the theory behind our approach. We then test and validate our method with time series generated numerically from neuronal models of anatomical motifs in which the structural connectivity is known. Finally, we discuss its advantages and limitations and suggest future lines of research in this framework.

Methods

Granger causality

Given two time series, X(t) and Y(t), it is possible to construct an AR model for each signal in which each time point is the linear combination of their p past samples plus a residual:

The coefficients in the A matrix represent the weights of the contributions of past values X and Y in the prediction, and ε and μ are stationary zero mean white noise processes which represent the prediction error. The model order p can be estimated following different criteria, with the Akaike Information Criterion42 (AIC) and the Bayesian Information Criterion43 (BIC) being two of the most common choices:

where T is the sample size, n is the number of variables and ∑ is the covariance noise matrix defined as:

If we add to the Eq. 1 the information that one of the variables has on the prediction of the other, we obtain a bivariate AR model:

If the variance of the error of the bivariate model var(μ2) is smaller than that of the univariate one var(μ1), then the past of Y(t) improves the prediction of X(t); in other words, Y(t) Granger-causes (G-causes) X(t). An advantage of the GC is that it can be extended for multivariable conditions and estimates direct and indirect links between the included nodes. For example, let us suppose that a third variable Z(t) exists and the connectivity of the network is Z → Y → X. Then, using the bivariate model in Eq. 4 with the variables X(t) and Z(t), an interaction from Z(t) to X(t) would be detected, even if it is mediated by Y(t). This issue is solved by including the three variables in a new model:

Comparing the residuals in both the bivariate and multivariate conditions (ε2 or ε3), the variance of ε3 would be the same as that of ε2, as the past values of Z(t) do not improve the forecasting because all the indirect contributions over X(t) are already included in ε2, only using the information in Y(t).

While this procedure represents the main theory behind the detection of GC, the combination of reduced and full regressions (i.e. Equations 4 and 5 respectively) could introduce a bias in the estimation of the GC18,22,23. This issue lies in the definition of the model order of the AR model. While the full AR model may have a finite model order, the same model order is considered when it is decomposed into the reduced regression. However, these processes are generally of infinite order, have a moving average component (VARMA processes) and are poorly modeled using the AR models. One way to overcome this limitation is by the characterization of the model as a SS process instead of an AR process. This way, the observed multiple time series depend upon a possibly unobserved state by an observation matrix and a state transition matrix25,26,27,28 which may be a representation of a VARMA processes with a equivalence between the coefficient A matrix and the state transition matrix27,28.

Note that the estimation of a full AR model is usually limited to the number of nodes with available information in the network. In neuroscience, the variables of the AR model represent different brain units or regions, where the time-series are the recordings (e.g. EEG, fMRI, MEG) of these areas. However, the measurement of all the elements of the circuit is generally unfeasible and the inferred AR model is constrained by the data. These unknown or unrecorded variables introduce a confounding effect in the AR that may drastically affect the estimation of the functional connectivity41,44.

Several algorithms and methods can be used to compute GC, including different statistical analyses to check both the AR model and the result of the analysis23,45,46. In this paper, we used the Matlab toolbox recently proposed by Barnett and Seth23, which overcomes some limitations of previous toolboxes47, including the estimation of GC with a single full regression.

Determining positive and negative Granger causality

The second step consists in identifying whether there is a positive or negative interaction between the sender and the receiver. Let us consider the paradigm where X(t) and Y(t) are the LFP recordings of two different populations. If we assume that there is an excitatory link from V(t) to X(t), an increase of the spiking activity in population Y(t), reflected by an increase of the amplitude of Y(t), would lead to a variation in the future values of X(t). In return, in the case of an inhibitory connection, assuming the same Y(t), we can expect an increase of negative currents in the population X(t) or, in other terms, a negative correlation between Y(t) and X(t). As these changes explain only a fraction of the total variance of both LFPs, the type of interaction cannot be identified straightforwardly. The idea behind our approach is that this information can be extracted from the coefficients Aij of the AR model. Thus, we selected those A components that assess the influence of Y(t) to X(t) (A12k,k = 1,p). If the average of all Aij coefficients is positive, we assume the input from Y(t) to be excitatory. Otherwise, if it is negative, then the input from Y(t) is regarded as inhibitory.

Estimating these coefficients is usually not simple. Even if a single full regression with the correct model order is considered in the analysis, the recordings are not always clean enough to extract the interactions intra- and inter-populations, and sometimes the time series are too short compared to the optimal model order. Moreover, the algorithms used to solve the equations of the AR models tend to assign a value to every Aij coefficient – even to those not improving the forecasting. Thus, we propose to estimate which components of the A matrix do not significantly contribute to the AR model by performing multiple versions of the full AR model in Eq. 5. In each version we set to zero specific coefficients of the matrix A and test as many models as possible combinations of A can be realized with the coefficients forced to zero. Note that we are not building a reduced model but estimating which components of the full regression model are not contributing to the prediction. Once those parameters that are not useful have been identified, a single full AR model is used to infer the functional connectivity. The optimum zero constraints are those belonging to the AR model that minimizes a modified version of the information criteria. The previously defined AIC and BIC terms are redefined by28:

where σ2 is the sum of the estimated residuals (i.e. ε2, μ2…) divided by the sample size T and m is the number of estimated parameters. For the original model, the number of parameters for each equation is the model order p multiplied by the number of variables in the model.

As it was said before, in each version of the AR model certain A coefficients are set to zero. For example, in a one model only the coefficient Aijp is set to zero, in a second model only the coefficient Aijp−1 is set to zero and in a third one, both Aijp and Aijp−1 are set to zero. Therefore, the total number of matrices to test with possible zero constraints is \({2}^{({K}^{2}p)}\), where K is the number of variables in the model. As this value is usually very large, the computational cost for every case can be impracticable. Several strategies have been proposed to overcome this limitation, and we suggest to apply a combination of bottom-up and top-down strategies in order to reduce the number of matrices to test, as it has been previously described in28.

Bottom-up strategy

In general, the order of the selected model should be long enough to capture the slowest relationship between the variables we consider. However, not all lags provide useful information. For example, if we select a model order that is higher than that estimated by the information criteria, we are taking more coefficients than necessary to compute the AR model. Although an increase in the model order yields a better fitting of the data set, since more coefficients can be adjusted to reduce the residue, this improvement is not significant for orders higher than those estimated by the information criteria. In these cases, the algorithms tend to fit the noise instead of the real interactions.

Since the number of coefficients that are necessary to model every link in a system can be different for each interaction, some type of regularization, which does not include more coefficients than necessary, would be desired. The idea behind this method is to compute the minimum order for the contribution of one time series over another. Instead of using the whole model, the equation of each variable is analysed separately. That is, in order to determine the constraints of Eq. 5, we consider the model:

where pxx is the optimal model, which minimizes the selected criterion. To find the value of pxx, where pxx = p, p − 1, …, 0, the information criterion in Eq. 6 is computed for the model in Eq. 7. In each iteration, the model order is decreased by 1 and if the information criterion is lower than the one estimated in the previous iteration (i.e. the new iteration minimizes it), the model order is reduced keeping the same variance for the residue. This step is repeated until a new iteration does not improve the information criterion (or pxx = 0). In the next step, pxx is fixed to its optimum value and a new variable is added into the equation, fitting a new model:

After these steps, a new model order is computed for the participation of one variable to each equation separately, and the coefficients beyond these values are set to zero.

Top-down strategy

Again, we use each variable separately for this method. After setting the excess of coefficients to zero with the previous strategy, the contribution of each remaining value is tested. Initially, we compute the new information criterion value. Then, starting from the furthest nonzero lag, each coefficient is set to zero and the model is estimated again. If the new information criterion is lower, we update the model keeping that zero constraint. As an example, let us consider a system with variables X(t), Y(t) and Z(t). The equation of one of the variables would be:

The first coefficient to test is \({A}_{11{p}_{xx}}\), then \({A}_{11{p}_{xx}-1}\) and so on. If when setting one coefficient to zero the information criterion minimizes, that constraint is kept in further iterations. Otherwise, it remains unchanged and the next iteration is computed.

These strategies allow us to test every single value at once, considerably reducing the computational cost. The total number of required operations is a multiple of K2p, and increases linearly with the number of variables and the model order, avoiding the exponential growth when all matrices are tested. Alternative methods can also be applied to determine the constraints of the A coefficients, each with specific advantages and disadvantages (see28 for details).

Estimating positive and negative Granger causality ratio

Once the linear constraints have been established and the corresponding A coefficients eliminated, we estimate the sign of the GC (sGC) as the following ratio:

where the denominator:

is defined to normalize the index so that sGCij ∈[−1,1].

In our specific paradigm, a sGCij∼ −1 (sGCij ∼1) indicates a dominant inhibitory (excitatory) connection in the link, whereas sGCij = 0 on a significant link (as assessed by the traditional GC) suggests a balance between excitation and inhibition.

Statistical significance

To assess the statistical significance of the defined index, the whole time-series were divided into time windows of the same length, being the main condition that the variables within these windows are covariance stationary48 (i.e., they have constant mean and variance). If the data is acquired in an event-related task, i.e., the response to a stimulus is recorded, the fast changes of the signal may exhibit nonstationary epochs in each repetition. An adaptive multivariate AR model can be employed in those cases49, where a single model is achieved based on the entire data set. Furthermore, the interaction between brain regions is time-varying. This means that a single model cannot reproduce the behaviour of the network. New algorithms involving the estimation of time-variant AR models have been developed to overcome that limitation and contribute to the study of the dynamics in brain connectivity50. The sGC was assessed as the average of the sGC over all windows. The window length was fixed to 5 seconds in this work.

To estimate the statistical significance associated to the sGC, we used a surrogate data analysis (N = 2000 in this work) by block-resampling, where each signal was cut at a random time point and the blocks were permuted. The size of the blocks was the same as that of the segments used for the estimation of the sGC, so that the difference between the surrogates and the original data, if any, could not be attributed to the different number of samples in each case. The block shuffling procedure has the advantage, unlike the phase reshuffling one, to preserve the possible relationship among the phases within each time series, which is known to be a confound when using surrogate data to estimate the existence of correlation between two data sets51. Moreover, this methodology outperforms other techniques based on linear Fourier approaches, as it preserves the irregular fluctuations of the signal (i.e. short transients of non-stationarity)52. In this way, the temporal interactions were broken, minimizing the distortion of the original dynamics. Then, the sGC for each surrogate data was computed following these two criteria. First, no prior GC analysis or strategies to reduce the number of A coefficients were applied. These methodologies would include supplementary statistical tests of connectivity, instead of limiting the analysis to the significance of the sGC value. Secondly, the denominator in Eq. 10 was the same as in the original time-series. Thus, the surrogate sGC value was normalized respecting the original signal, sharing the same scale. The significance was then assessed, as usual, by comparing the value obtained from the original data with the distribution of the values obtained from the surrogates. For this, the normality of the distribution was firstly confirmed using the Kolmogorov-Smirnov test, estimating its associated mean and standard deviation. Finally, the p-value was obtained by evaluating the surrogated cumulative distribution at the sGC original value.

Note that an optimal strategy would be to generate a surrogate data set with the same GC as the original time series but with balanced excitatory and inhibitory interactions. As this situation is unpracticable without knowing the specific anatomical structure of the recordings, what we tested was the error induced in the estimation of the A coefficients with signals of common properties, with the same amplitude and frequency as the original signals but without a G-causal link.

Neural motifs

To test the validity of our approach, we simulated several neuronal motifs with different structural connections (see Fig. 1). The motifs were composed of three to four nodes connected by chemical synapses, which were either excitatory or inhibitory. Each node was a neuronal population composed of 400 excitatory and 100 inhibitory neurons described by the Izhikevich model53, which receives 50 synapses (sparse connectivity 10%) from randomly selected (excitatory or inhibitory) neighbours in the same population. These parameters were based on previous studies that have simulated spiking neuronal population models41,53,54. In addition, in all the simulations each neuron in the network received an independent Poisson input of excitatory synapses. For the coupling between the different nodes, in order to produce each different motif, we assumed that each postsynaptic neuron in a receiver population received 20 synapses from randomly selected presynaptic neurons in the sender population.

(a) Scheme of the structure of all implemented motifs. Broken arrows represent the different options for interconnecting the populations that change for each motif. Links can be excitatory, inhibitory or non-existent. (b) Representative example of one motif, including three links with excitatory projections (blue arrows) and one with an inhibitory projection (red arrow). (c) Examples of the temporal evolution of the mean membrane potential for the three populations in B and their power.

The analysed time series corresponded to the mean membrane potentials of each population, which were calculated as the average value of the membrane potential v of all neurons within the population. The averaged value of v can be thought of as a crude approximation of a LFP recording.

The membrane potential v and the recovery variable u of each neuron are described by53:

The summation ∑Ix is over all the synaptic currents. The model establishes that when v ≥ 30 mV, its value is reset to c, and u is reset to u + d. The parameters a,b,c and d determine the firing pattern of the neuron. We employed (a,b) = (0.02, 0.2) and (c, d) = (−65, 8) + (15, −6)σ2 for excitatory neurons and (a,b) = (0.02, 0.25) + (0.08, −0.05)σ and (c, d) = (−65, 2) for inhibitory neurons, where σ is a random variable uniformly distributed on the interval [0,1] that determines the proportion of different spiking neurons (between regular spiking to bursting modes).

In particular, to test the robustness of sGC against neuronal variability the parameters c and d in Eq. 12 were re redefined as:

Both σ1 and σ2 were random variables uniformly distributed in the interval [0,1]. We simultaneously varied x and y, keeping the relation y = 2x/5 in order to change the proportion of different types of excitatory neurons. Therefore, the maximum values of c and d varied along the line \(d=-\,\frac{6c}{15}-18\). For example, when cmax = −55 (which occurs for x = 0) most neurons were intrinsically bursting neurons, but there were also regular spiking and chattering neurons.

The synaptic current Ix, which can be excitatory, mediated by AMPA (A), or and inhibitory, mediated by GABAA (G) is described by the following equations:

where x = A,G and EA 0 mV and EG = −65 mV are the reversal potentials. Unless otherwise stated, all excitatory (inhibitory) weights were set to gA = 0.5 nS (gG = 2 nS). The dynamics of the fraction of bound synaptic receptors rx is given by:

The summation over k stands for pre-synaptic neurons. D was taken, without loss of generality, equal to 0.05. The time decays were taken as τA = 5.26 ms and τG = 5.6 ms. Each neuron was subject to an independent Poisson input, representing n pre-synaptic neurons, with a spiking rate R/n. Unless otherwise stated R = 600 Hz. The Poissonian synapses were assumed as excitatory (AMPA) connections. For each set of parameters, we ran the simulation for 24 seconds with a sample rate of Fs = 20 kHz. For the GC analysis, we downsampled the data at 250 Hz.We discarded the first 4 seconds to eliminate transient states, until the signal had a covariance stationary, i.e. with constant mean and variance.

In order to study the effects of plasticity on the sGC measures, we incorporated a hybrid spike-timing dependent plasticity (STDP) rule54,55 in the simulations. To this aim, the excitatory conductance of each inter-population synapse was updated as follows:

where τ− = τ+ = 5 ms, A− = 1.0 and A+ = 0.5 nS. For each set of parameters with plasticity, we ran the simulation over 48 seconds.

The effect of the measurement noise was modelled by varying the signal to noise ratio (SNR). For every signal, the SNR was computed as SNR = 20log10 Ax/An, where Ax is the mean amplitude of the extracellular membrane potential of one population (renamed as X(t)) and An the mean amplitude of the noise. Noisy temporal series (N(t)) were constructed matching amplitude spectrum and signal distribution with X(t)56. The amplitude of the noisy time-series was then scaled as a function of the desired SNR and added to the original signal: Xn(t) = X(t) + kN(t) where k = 10SNR/20.

Electrophysiological recordings

To test the proposed methodology in real brain signals, we used a dataset of hippocampal electrophysiological recordings in rats40. Briefly, a total of N = 5 animals were implanted with a multichannel electrode along the dorsal hippocampus. For each subject, we selected three LFP signals, recorded at the stratum radiatum and stratum lacunosum-moleculare in CA1 and one in the dentate gyrus (DG), with the animal freely moving in an open field for 10 minutes. These layers have specific patterns of activity largely contributed by inputs from different afferent pathways to the CA1 region, including the Schaffer collaterals from CA3 to the str. radiatum, the layer III of the entorhinal cortex (EC) to the str. lacunosum-moleculare57,58 and the layer II of the EC to the DG through the performant pathway57. They were firstly identified using their electrophysiological activity, with the presence of sharp-waves in str. radiatum and a maximal theta activity in str. lacunosum-moleculare and the inversion of the theta rhythm in the DG. The location of the electrodes was further verified post-mortem with the histological analysis. The data was acquired at 5 KHz, high-pass filtered at 0.5 Hz to remove the continuous component and Notch-filtered at 50 and 100 Hz to remove the net noise and its first harmonic. For the analysis, the data was downsampled at 250 Hz after low-pass filtering at 250 Hz to avoid aliasing.

Results

Identifying excitatory/inhibitory connections

The first step to validate our approach entailed testing the main assumption underlying sGC, namely, the relationship between the A coefficients of the AR model and the type of projection (excitatory or inhibitory). To do that, we tested 30 motifs composed of three populations (Fig. 1a). Maintaining a constant population structure, each motif presented a different connectivity pattern, where for each link we selected one of the three options: only excitatory, only inhibitory or no direct link. Therefore, we expected a positive sGC for those links with excitatory projections and a negative sGC for those with inhibitory projections. The connectivity of all motifs was set to encompass many different possibilities (Fig. 1b), from the simplest case with only one link, to the most complex case, with excitatory and inhibitory connectivity among all populations.

When we applied the sGC, the connectivity was measured by GC analysis (Fig. 2a; 100% of hits; GC of excitatory links: 0.0864 ± 0.0176; GC of inhibitory links: 0.0244 ± 0.0076; mean ± sd), computing the synaptic ratio only in those networks with a significant G-causality. In summary, 88 links were analysed (48 excitatory, 40 inhibitory). The results show that sGC differentiates both conditions in all cases (excitatory sGC: 0.926 ± 0.026; inhibitory sGC: −0.746 ± 0.145; mean ± sd; p < 0.05), which confirms its ability to identify the type of synapses (excitatory or inhibitory) at every connection. Moreover, different window lengths (see Methods) were used to test the robustness of the method, including 2.5, 5 and 10 seconds (results not shown). In all cases, 100% of the links were correctly classified as excitatory or inhibitory.

(a) Average of GC and sGC across links, revealing differences in both conditions. Links from an excitatory node exhibit a higher GC and sGC value than those from an inhibitory one. (b) Representative GC matrix for the motif showed in Fig. 1B. All significant values correspond to the implemented structure. (c) sGC ratios for each bind in Fig. 1B, where positive and negative values match excitatory and inhibitory connectivity, respectively.

Imposing the zero constraints and fixing the model order at p = 15 (60 ms), a total of A = K2p = 135 coefficients were computed for each motif. The number of elements eliminated by the Bottom-Up strategy was 7.83 ± 2.32 (mean ± sd), while the Top-Down imposed a total of 69.30 ± 5.01 (mean ± sd) constraints. Combining both strategies, the percentage of information deleted of the whole A matrix was 13.35% ± 1.72% (mean ± sd), measured as \(100(1-({\bar{A}}_{cons}/\bar{A})\), where \({\bar{A}}_{cons}\) and \(\bar{A}\) were the mean of the modulus of all A coefficient with and without constraints, respectively. The sGC was also computed using the original A matrix, revealing significant lower values as compared to the restricted version (p < 0.0001, paired t-test, results not shown), although finding the correct mechanism (excitation or inhibition) in all cases.

Determining the excitatory/inhibitory ratio

We also studied a second type of motif with four nodes. The results of the sGC again inferred the correct G-causality matrix (Fig. 3), also identifying the sign of the links in those cases where all the projections were only excitatory or inhibitory. The input from population 2 to population 4 had both types of synapses and, although this link was found, the sCG showed a high positive ratio, suggesting a prevalence of excitation over inhibition.

(a) Scheme of the analyzed motif. Red and blue arrows indicate inhibitory and excitatory projections, respectively. (b) Resulting matrix of GC. Nonzero values represent links with significant connectivity, which match with the physical network. (c) Matrix with the sGC ratio. Only values with significant sGC are represented (p < 0.05). Values near 1 represent predominant excitation; those close to -1 represent higher inhibition. Pairs with a single type of projection (excitatory or inhibitory) are correctly identified, although the projections from population 2 to 4 are considered excitatory, suggesting that excitation is monopolizing the functional connectivity.

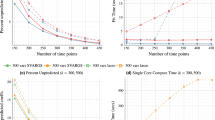

We then created a modified version of this model by increasing the inhibition in the 2 → 4 connection to characterize the ratio of synapses when both types of projection were present in the same connection. As the main activity in the original motif was excitatory, the weight of the inhibition was progressively increased while keeping the rest of the parameters constant. The results showed a reduction of the GC which matched a negative trend in sGC when the inhibition overcame the excitation (Fig. 4). The functional connectivity was restored when the negative sGC dominated the system, increasing systematically with higher values of inhibitory conductance. The correlation between the sGC and the inhibitory conductance followed a sigmoidal function, reaching values near the maximum and minimum of the sGC when the inhibition was lower than 2 nS and higher than 4.5 nS, respectively. The intermediate window can be approximated to a linear regression of both factors (ρ2 = 0.92; p < 0.0001; slope b = −0.75). In our case, the balance was found for an inhibition weight gG ∼3.25 nS and gA = 0.5 nS. Thus, as the inhibition increased, the sGC rate became more negative. The projections were identified as predominantly inhibitory for gG > 5 nS (sGC = −0.91).

(a) GC is highly related to the combination between types of projection. For a fixed value of excitation, a moderate increase of the inhibitory conductance (between 3 and 4 nS) suppresses the functional connectivity, while for higher values (from 5 to 8 nS) it raises again, likely reflecting a competition between excitation and inhibition in the link. (b) The sGC ratio reveals the balance between excitation and inhibition, with a working zone of linear correlation (from 2 nS to 4.5 nS). The progressive increase of the inhibition counterbalances the excitation and minimizes the GC, until it dominates the link (from 5 to 8 nS) and enhances the functional connectivity. Note how for common values of GC (black arrows), the sGC perfectly differentiates both conditions.

In order to study the robustness of our method against the parameters of the model, we compared the connectivity patterns obtained for the four nodes motif in five extra cases. First, we performed simulations in the presence of plasticity rules for the excitatory synapses inter-populations (see Methods for more details). In Fig. 5a we compared how sGC changed with the inhibitory conductance from node 2 to node 4 when STDP rules were applied. The results show a low dependence of the sGC against plasticity, without significant differences in most of the cases compared to the original motif. Only when node 2 was highly inhibiting node 4, the backward link (4 → 2) was not properly identified. Secondly, the robustness of the method against measurement noise was tested by changing the SNR (Fig. 5b), from the perfect situation (without added noise) to SNR = −1 (see Methods). Both the GC and the sGC exhibited proportional decays with increasing noise level, remaining the sign of the sGC stable for all SNR values. Even in conditions of high noise, if the temporal GC was able to detect the functional link, the sGC was also able to reflect the excitation and inhibition projections. Third, for fixed synaptic conductance, we changed the amount of internal variability of the neuronal cells in each population, by modifying the proportion of neurons with different firing patterns (Fig. 5c). We observed that sGC reflected the expected functional connectivity of the network despite the change in the ratio between regular spiking and bursting neurons. Fourth, we changed the amount of external noise received by each neuron, which altered the amount of averaged activity in the network. In Fig. 5d we plot sGC as a function of the Poisson rate. The G-causal network estimated by the method is invariant to different noise inputs in three links, with a sGC close to 1 or -1 from node 2 to node 4, for those inhibitory conductances that allowed for a clear distinction between excitatory or inhibitory sGC in the original model (Fig. 4). Five, the effect of the sampling rate was tested by replicating the analysis and changing the sampling frequency of the data (Fig. 5e,f). The sGC ratio decayed progressively as a function of the sampling rate, matching with the results of the GC. Even if the information included in the AR was similar (the model order in ms was approximately constant, Fig. 5f), a higher sampling frequency implies a larger number of parameters to estimate, therefore increasing the errors in the measurements. These results validated the use of sGC in all those conditions where the GC can be applied.

(a) Evolution of sGC ratio in each link of the motif, with and without STDP plasticity rules (yellow and blue lines, respectively), as a function of the inhibition weight from population 2 to 4. Changes in the value of this projection only affect the sGC results in that link, while the other links remain the same. An increase in the inhibition weight, involving a change in the excitatory/inhibitory ratio, is captured by the method, showing opposite ratios for inhibition weights of 2 nS and 8 nS (where the excitation is kept constant). (b) Variation of GC and sGC in function of the SNR. Both measurements present similar decays when the external noise is increased, remaining the sign of the sGC (excitation/inhibition) stable even for low SNR. GC values were normalized by setting the maximum value to 1, and GC corresponding to link 2 → 4 has been inverted for comparison purposes with sGC. (c) sGC in function of the internal variability of the population, for a case with low (green) and high (purple) inhibitory conductance from 2 to 4. In all cases, the sGC remains stable. (d) Variation of sGC for different noise inputs. The method finds similar results in almost every link when the Poissonian rate is increased, except for the connectivity from 4 to 2, which loses accuracy when the opposite projections are predominantly inhibitory. (e) Comparison of GC and sGC for different sampling rates. The GC decays for higher values of sampling frequency, as well as the sGC. Nevertheless, the sGC finds the correct coupling in all cases. (f) Model orders estimated using the AIC for the simulations in e, with their values in samples (left) and in milliseconds (right).

Positive and negative interactions are present in the hippocampus

We tested whether the proposed methodology offers new information about the functional connectivity in the well-known hippocampal circuit. We selected two LFP signals from the rat CA1 region, recorded at the str. radiatum (RAD) and lacunosum-moleculare (LM) and a third LFP recorded at the DG (Fig. 6a,b). The results of GC showed a significant functional connectivity in all directions, being higher in the direction from LM to RAD (Fig. 6c). In contrast, the sGC revealed distinct couplings, positive in both links to RAD, negative and positive from RAD to LM and from DG to LM, respectively, and negative from LM to DG, while there is no clear sign from RAD to DG. These results were highly consistent across subjects and resistant to variations in the sliding time window length (between 5 and 20 s) and model order between 12 and 24 (48 and 96 ms). Interestingly, the largest values of sGC (negative from RAD to LM and positive from DG to LM) were not associated to the highest GC. We also found that the link with the strongest connectivity measured by GC was associated with a modest and positive sGC. We repeated the analysis of sGC without following the proposed strategies, that is, using the original A matrix without imposing zero constraints, finding similar results. However, the absolute value of the sGC was lower compared to the analysis with restricted coefficients (p < 0.05, paired t-test, results not shown). These results confirmed that our methodology outperforms classic algorithms estimating AR models which do not impose constraints to the computed coefficients.

(a) Histological recording of the hippocampus showing the location of the stratums recorded. (b) Example of time-series and power spectrum of the LFPs recorded at stratum radiatum (RAD LFP), stratum lacunosum-moleculare (LM LFP) and at the dentate gyrus (DG LFP). (c) Results of GC (left) and sGC (right) between hippocampal LFPs. The strongest connectivity is found from LM to RAD, which correspond to a positive sGC with the same value than from DG to RAD. while the links to LM present the highest values of sGC (negative and positive from RAD and from DG, respectively).

Discussion

While the GC is often interpreted as a tool to detect the information flow in terms of bit rate30,59, it remains unclear the functional meaning of that information. Here, we presented a new GC-based method aimed at inferring correlations on Granger-causal interactions of brain signals. In this way, we are able to better characterize the connectivity of the network by determining whether a GC is positive or negative, and relating this result with two different functional mechanisms. We successfully tested it in a specific paradigm, where excitation and inhibition are supposed to interact inversely in a G-causal link, generating different coefficients in the AR model. These two scenarios (i.e. excitation and inhibition) have quite different roles in brain networks38,39, but their estimation is not possible when GC is applied. Our approach demonstrates that the GC of a particular link may be positive or negative, and its sign should be associated to different functional meanings. It is important to note that the specific meaning will depend on the signals being used. This is, positive or negative GC coefficients will likely reflect different underlying neurobiological mechanism when obtained in the analysis of blood oxygenation level dependent (BOLD) signals from fMRI experiments, or when obtained from intracranial electrophysiological recordings. But in all cases, however, positive and negative coefficients will highlight fundamentally different statistical interdependencies in the signals.

The effect of excitatory and inhibitory neurons in functional connectivity has been studied using multi-unit spike activity from large-scale neuronal networks60. Furthermore, previous works have demonstrated the utility of AR models to observe positive and negative couplings in fMRI61. This methodology generally estimates an order-1 model to compute the GC, identifying “excitatory” or “inhibitory” pathways based on the sign of the only coefficient extracted. However, several limitations, e.g., the invariable model order62, have prevented their application to datasets with quite diverse time scales. Our approach overcomes these issues, as it considers all the significant coefficients without prior restrictions of model order, proposing a generic methodology that can be extrapolated to every GC study. Moreover, we have proposed two strategies to mitigate the effect of artefactual parameters included in the AR models, by imposing zero constraints to those elements that are not significantly contributing to the prediction. While these steps are not essential to determine the sGC value and other approaches can be used instead28, the results were always improved when observed (i.e. the absolute value of sGC was higher compared to the non-restricted model).

Our model revealed that excitation and inhibition contribute differently to the measured connectivity. In a balanced situation, the G-causal influence of an excitatory link showed the highest values (Fig. 1b). Furthermore, when both excitation and inhibition are present in a link, they compete to control the target population. Our analysis suggests that, for the neuronal dynamics and composition of neural networks used in our models, excitatory activity tends to mask the inhibitory one, as the sGC ratio did not change significantly as compared to the case when only excitatory projections were present (Fig. 3c). These results demonstrate a correlation between the degree of GC and the type of synapse. However, an increase in the weight of the inhibitory projections progressively reduces the sGC ratio, until breaking the balance and changing the sign of the sGC (Fig. 4). This might provide valuable information on the dynamics of brain connections in which the influence of one region over its efferent targets varies dynamically with, or as a consequence of, the cognitive needs.

The analysis of real LFP signals using sGC unveiled different couplings in the directed functional connectivity of the hippocampus. We computed a multivariate AR model using three signals recorded at specific layers of the CA1 region and the dentate gyrus. However, some limitations of the signals need to be mentioned before speculating about the neurobiological meaning of the obtained sGC results. First, it must be considered that LFP signals are affected by volume conduction that may create spurious correlations between recordings63. Second, the multivariate AR model allows the discrimination of direct and indirect links between the signals included in the model as long as all the relevant links are represented. Therefore, this limitations needs to be considered when applying these methods to a particular dataset41. Moreover, the GC is also sensitive to the signal-to-noise ratio of both time-series64, thus, the high theta power in LM may be affecting the prediction on RAD and DG. With these limitations in mind, we interpret the sGC results as follows: the activity reaching the distal part of the dendrites of CA1 pyramidal neurons from the EC (LM) positively interacts with the inputs arriving downstream in the dendrite from CA3 (RAD). This positive interaction may translate into facilitated integration of afferent information from both pathways. Contrary, the negative interaction found from RAD to LM, may reflect competition between the two inputs and the segregation of the transmission channels. The mechanism underling this negative interaction could be the activation of oriens lacunosum-moleculare (OLM) interneurons, known to receive inputs from the Schaffer collateral and project to the LM inhibiting the EC input65. Therefore, the balance between integration and segregation could be dynamically controlled by the inhibitory tone. The positive sGC from the DG to RAD may represent the information flow through CA3, following the trisynaptic pathway in the hippocampus. Regarding the interaction between LM and DG, it can be explained by the connectivity in the EC since layers II and III in this neocortical structure project to the DG and LM, respectively. The negative sGC from LM to DG may accounting for the inversion of the hippocampal theta rhythm between CA1 and the DG7. Finally, there is not a clear sign in the sGC from RAD to DG which also exhibits the lowest value of connectivity, in consonance with the lack of a direct anatomical pathways between these structures.

Note that GC and sGC are complementary indices and can be simultaneously computed. The former estimates the amount of information transmitted (information flow), while the latter represents, in our framework, a closer look to the underlying causal mechanism3,30. Although the concept of causality is embedded in GC, it implies statistical dependences rather than true physical causal interactions among the variables30. In this line, GC and other methods based in AR models have been classified as directed functional connectivity indexes, while the term effective connectivity is used when determining the circuitry that causes an effect in neural systems. In this line, there are other approaches, like DCM29, which find the optimal mechanism behind the functional links. We have compared the GC with the sGC for simplicity, as the formulation of the sGC derives from the same AR models and shares the same limitations. However, it can be computed jointly with other statistical connectivity indexes like PDC, TE or PhTE. For instance, the pairwise spectral connectivity could be firstly estimated by other metrics, like PDC, and then positive or negative relationship at a specific frequency by filtering the signal and computing the sGC.

In this work, we have stablished the basis of the sGC in the temporal domain and under the assumption of stationarity. It will be interesting to test the performance of sGC in other conditions. For instance, it can be easily extended to a time-varying version with a windowing methodology32,49. In this case, the signal could divided into small epochs considered as stationary, and then the AR model would be computed for each time window49. Future studies include the characterization of positive and negative relationships as a function of the frequency, thus combining the frequency and time varying approaches. An important step will be to separate the elements of the AR model used to compute the GC and the sGC. In the former, it is the variance of the residuals what determines the connectivity, while only some coefficients of the AR model are required to compute the sGC. Following the Fourier transform, the AR model can be expressed by a transfer function (H(w)) and an error66. The information of the A matrix that determines the sGC is, therefore, in the transfer function. The analysis of both, the real and imaginary part of the complex values of these elements, shall determine the spectral sGC.

We have tested this proposal in a theoretical paradigm to demonstrate its efficacy, but several aspects need to be considered to prevent misinterpretations. First, in order to be interpretable, the polarity of the used signals needs to be known. The polarity of the simulated currents in our model is known, yet it is more difficult to establish in most of the LFP and EEG recordings63,67. Therefore, the sign of the GC in these datasets might not be directly interpreted as a readout of excitation and inhibition. Secondly, some conditions such as signal non-stationarity and non-linearity were not considered in this work but are present in biological signals. Recent implementations of GC overcome these issues49,68,69, opening the possibility to implement sGC concepts on these indices. Thirdly, electrophysiological recordings are generated by the contribution of many sources spatiotemporally overlapped63,70,71. Thus, prior knowledge of the system and signals is required. Overall, a positive GC might be interpreted as a directed coupling in which the involved populations, sender and receiver, are linked in a comparable functional state. A negative GC would, complementary, reflect a link between populations in distinct functional states.

References

Sporns, O. Brain connectivity. Scholarpedia 2, 4695 (2007).

Sporns, O. Structure and function of complex brain networks. Dialogues Clin Neurosci 15, 247–262 (2013).

Friston, K. J. Functional and Effective Connectivity: A Review. Brain connectivity 1, 13–36 (2011).

Buzsaki, G. Rhythms of the Brain. (Oxford University Press, 2006).

Canolty, R. T. & Knight, R. T. The functional role of cross-frequency coupling. Trends Cogn Sci 14, 506–515 (2010).

Herrmann, C. S., Strüber, D., Helfrich, R. F. & Engel, A. K. EEG oscillations: From correlation to causality. Int J Psychophysiol 103, 12–21 (2016).

Buzsáki, G. Theta oscillations in the hippocampus. Neuron 33, 325–340 (2002).

Buzsáki, G. & Wang, X.-J. Mechanisms of Gamma Oscillations. Annu Rev Neurosci 35, 203–225 (2012).

Faust, O., Acharya, U. R., Adeli, H. & Adeli, A. Wavelet-based EEG processing for computer-aided seizure detection and epilepsy diagnosis. Seizure 26, 56–64 (2015).

Uhlhaas, P. J. & Singer, W. Abnormal neural oscillations and synchrony in schizophrenia. Nat. Rev. Neurosci. 11, 100–113 (2010).

Wiener, N. The theory of prediction. In Modern Mathematics For The Engineer (McGraw–Hill, 1956).

Bressler, S. L. & Seth, A. K. Wiener-Granger causality: a well established methodology. Neuroimage 58, 323–329 (2011).

Granger, C. W. J. Investigating Causal Relations by Econometric Models and Cross-spectral Methods. Econometrica 37, 424–438 (1969).

van Kerkoerle, T. et al. Alpha and gamma oscillations characterize feedback and feedforward processing in monkey visual cortex. Proc. Natl. Acad. Sci. USA 111, 14332–14341 (2014).

Benito, N., Martín-Vázquez, G., Makarova, J., Makarov, V. A. & Herreras, O. The right hippocampus leads the bilateral integration of gamma-parsed lateralized information. Elife 5, (2016).

Zhang, L. et al. Hippocampal theta-driving cells revealed by Granger causality. Hippocampus 22, 1781–1793 (2012).

Kang, D., Ding, M., Topchiy, I. & Kocsis, B. Reciprocal Interactions between Medial Septum and Hippocampus in Theta Generation: Granger Causality Decomposition of Mixed Spike-Field Recordings. Front Neuroanat 11, 120 (2017).

Stokes, P. A. & Purdon, P. L. A study of problems encountered in Granger causality analysis from a neuroscience perspective. Proc. Natl. Acad. Sci. USA 114, E7063–E7072 (2017).

Barnett, L., Barrett, A. B. & Seth, A. K. Solved problems for Granger causality in neuroscience: A response to Stokes and Purdon. Neuroimage 178, 744–748 (2018).

Faes, L., Stramaglia, S. & Marinazzo, D. On the interpretability and computational reliability of frequency-domain Granger causality. F1000Res 6, 1710 (2017).

Dhamala, M., Liang, H., Bressler, S. L. & Ding, M. Granger-Geweke causality: Estimation and interpretation. Neuroimage 175, 460–463 (2018).

Chen, Y., Bressler, S. L. & Ding, M. Frequency decomposition of conditional Granger causality and application to multivariate neural field potential data. J. Neurosci. Methods 150, 228–237 (2006).

Barnett, L. & Seth, A. K. The MVGC multivariate Granger causality toolbox: a new approach to Granger-causal inference. J. Neurosci. Methods 223, 50–68 (2014).

Dhamala, M., Rangarajan, G. & Ding, M. Estimating Granger causality from fourier and wavelet transforms of time series data. Phys. Rev. Lett. 100, 018701 (2008).

Solo, V. State-Space Analysis of Granger-Geweke Causality Measures with Application to fMRI. Neural Comput 28, 914–949 (2016).

Barnett, L. & Seth, A. K. Granger causality for state-space models. Phys Rev E Stat Nonlin Soft Matter Phys 91, 040101 (2015).

Aoki, M. State Space Modeling of Time Series. (Springer-Verlag, 1990).

Lütkepohl, H. New Introduction to Multiple Time Series Analysis. (Springer Science & Business Media, 2005).

Friston, K. J., Harrison, L. & Penny, W. Dynamic causal modelling. Neuroimage 19, 1273–1302 (2003).

Seth, A. K., Barrett, A. B. & Barnett, L. Granger causality analysis in neuroscience and neuroimaging. J. Neurosci. 35, 3293–3297 (2015).

Pereda, E., Quiroga, R. Q. & Bhattacharya, J. Nonlinear multivariate analysis of neurophysiological signals. Progress in Neurobiology 77, 1–37 (2005).

Cekic, S., Grandjean, D. & Renaud, O. Time, frequency, and time-varying Granger-causality measures in neuroscience. Stat Med 37, 1910–1931 (2018).

Sameshima, K. & Baccalá, L. A. Directed Coherence: A Tool for Exploring Functional Interactions Among Brain Structures. In https://doi.org/10.1201/9781420048254.ch9 (1998).

Baccalá, L. A. & Sameshima, K. Partial directed coherence: a new concept in neural structure determination. Biological Cybernetics 84, 463–474 (2001).

Dhamala, M., Rangarajan, G. & Ding, M. Analyzing information flow in brain networks with nonparametric Granger causality. NeuroImage 41, 354–362 (2008).

Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 85, 461–464 (2000).

Lobier, M., Siebenhühner, F., Palva, S. & Palva, J. M. Phase transfer entropy: A novel phase-based measure for directed connectivity in networks coupled by oscillatory interactions. NeuroImage 85, 853–872 (2014).

Fries, P. A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends in Cognitive Sciences 9, 474–480 (2005).

Hahn, G., Ponce-Alvarez, A., Deco, G., Aertsen, A. & Kumar, A. Portraits of communication in neuronal networks. Nat. Rev. Neurosci. 20, 117–127 (2019).

Lopez-Madrona, V. J. et al. Gamma oscillations coordinate different theta rhythms in the hippocampus. bioRxiv 418434 https://doi.org/10.1101/418434 (2018).

López-Madrona, V. J., Matias, F. S., Pereda, E., Canals, S. & Mirasso, C. R. On the role of the entorhinal cortex in the effective connectivity of the hippocampal formation. Chaos 27, 047401 (2017).

Akaike, H. A new look at the statistical model identification. IEEE Transactions on Automatic Control 19, 716–723 (1974).

Schwarz, G. Estimating the Dimension of a Model. Ann. Statist. 6, 461–464 (1978).

Seth, A. K. & Edelman, G. M. Distinguishing causal interactions in neural populations. Neural Comput 19, 910–933 (2007).

Cui, J., Xu, L., Bressler, S. L., Ding, M. & Liang, H. BSMART: A Matlab/C toolbox for analysis of multichannel neural time series. Neural Netw 21, 1094–1104 (2008).

Niso, G. et al. HERMES: towards an integrated toolbox to characterize functional and effective brain connectivity. Neuroinformatics 11, 405–434 (2013).

Seth, A. K. A MATLAB toolbox for Granger causal connectivity analysis. Journal of Neuroscience Methods 186, 262–273 (2010).

Granger, C. W. J. & Newbold, P. Spurious regressions in econometrics. Journal of Econometrics 2, 111–120 (1974).

Ding, M., Bressler, S. L., Yang, W. & Liang, H. Short-window spectral analysis of cortical event-related potentials by adaptive multivariate autoregressive modeling: data preprocessing, model validation, and variability assessment. Biol Cybern 83, 35–45 (2000).

Milde, T. et al. A new Kalman filter approach for the estimation of high-dimensional time-variant multivariate AR models and its application in analysis of laser-evoked brain potentials. Neuroimage 50, 960–969 (2010).

Schelter, B., Winterhalder, M., Timmer, J. & Peifer, M. Testing for phase synchronization. Physics Letters A 366, 382–390 (2007).

Nakamura, T., Small, M. & Hirata, Y. Testing for nonlinearity in irregular fluctuations with long-term trends. Phys Rev E Stat Nonlin Soft Matter Phys 74, 026205 (2006).

Izhikevich, E. M. Simple model of spiking neurons. IEEE Trans Neural Netw 14, 1569–1572 (2003).

Matias, F. S., Carelli, P. V., Mirasso, C. R. & Copelli, M. Self-Organized Near-Zero-Lag Synchronization Induced by Spike-Timing Dependent Plasticity in Cortical Populations. PLoS ONE 10, e0140504 (2015).

Billings, G. & van Rossum, M. C. W. Memory retention and spike-timing-dependent plasticity. J. Neurophysiol. 101, 2775–2788 (2009).

Schreiber, T. & Schmitz, A. Improved Surrogate Data for Nonlinearity Tests. Phys. Rev. Lett. 77, 635–638 (1996).

Andersen, P. et al. The Hippocampus Book. (Oxford University Press, USA, 2007).

Colgin, L. L. Rhythms of the hippocampal network. Nat. Rev. Neurosci. 17, 239–249 (2016).

Barnett, L., Barrett, A. B. & Seth, A. K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 103, 238701 (2009).

Pastore, V. P., Massobrio, P., Godjoski, A. & Martinoia, S. Identification of excitatory-inhibitory links and network topology in large-scale neuronal assemblies from multi-electrode recordings. PLoS Comput. Biol. 14, e1006381 (2018).

Hamilton, J. P., Chen, G., Thomason, M. E., Schwartz, M. E. & Gotlib, I. H. Investigating neural primacy in Major Depressive Disorder: multivariate Granger causality analysis of resting-state fMRI time-series data. Mol. Psychiatry 16, 763–772 (2011).

Zhang, J., Li, C. & Jiang, T. New Insights into Signed Path Coefficient Granger Causality Analysis. Front Neuroinform 10, 47 (2016).

Herreras, O. Local Field Potentials: Myths and Misunderstandings. Front Neural Circuits 10, 101 (2016).

Nolte, G., Ziehe, A., Krämer, N., Popescu, F. & Müller, K.-R. Comparison of Granger Causality and Phase Slope Index. In Causality: Objectives and Assessment 267–276 (2010).

Klausberger, T. & Somogyi, P. Neuronal diversity and temporal dynamics: the unity of hippocampal circuit operations. Science 321, 53–57 (2008).

Geweke, J. Measurement of Linear Dependence and Feedback between Multiple Time Series. Journal of the American Statistical Association 77, 304–313 (1982).

Martín-Vázquez, G., Makarova, J., Makarov, V. A. & Herreras, O. Determining the true polarity and amplitude of synaptic currents underlying gamma oscillations of local field potentials. PLoS ONE 8, e75499 (2013).

Chen, L. Vector time-varying autoregressive (TVAR) models and their application to downburst wind speeds. (2005).

Farokhzadi, M., Hossein-Zadeh, G.-A. & Soltanian-Zadeh, H. Nonlinear effective connectivity measure based on adaptive Neuro Fuzzy Inference System and Granger Causality. Neuroimage 181, 382–394 (2018).

Herreras, O., Makarova, J. & Makarov, V. A. New uses of LFPs: Pathway-specific threads obtained through spatial discrimination. Neuroscience 310, 486–503 (2015).

Makarov, V. A., Makarova, J. & Herreras, O. Disentanglement of local field potential sources by independent component analysis. J Comput Neurosci 29, 445–457 (2010).

Acknowledgements

We thank Elena Pérez-Montoyo for her assistance with the electrophysiological datasets and Dr. Daniele Marinazzo and Dr. Simona Obreja for providing useful comments on the manuscript. This work was supported by the Spanish Ministry of Economy and Competitiveness (Project Nos. TEC2016–80063-C3-3-R, TEC2016-80063-C3-2-R, and BFU2015-64380-C2-1-R), the European Union through the Horizon 2020 programme (Grant Agreement No. 668863, SyBil-AA), the Spanish State Research Agency (SEV- 2013-0317), the Brazilian Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (Grant Nos. 88881.120309/2016-01 and 88881.068077/2014-01), the Brazilian Conselho Nacional de Desenvolvimento Cientìfico e Tecnològico (Grant No. 432429/2016-6) and the US National Science Foundation (grant NSF IIS-1515022). V.J.L. was supported by a predoctoral fellowship La Caixa-Severo Ochoa from Obra Social La Caixa. SC and CM acknowledge the Spanish State Research Agency, through the Severo Ochoa and María de Maeztu Program for Centers and Units of Excellence in R&D (SEV-2017-0723 and MDM-2017-0711, respectively).

Author information

Authors and Affiliations

Contributions

V.L.M., C.M., E.P. and S.C. conceived the project. V.L.M. and F.M. carried out the research. E.P. checked the mathematical methods and did the statistical analysis. All the authors discussed the results. V.L.M. wrote the manuscript and all the authors reviewed it.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

López-Madrona, V.J., Matias, F.S., Mirasso, C.R. et al. Inferring correlations associated to causal interactions in brain signals using autoregressive models. Sci Rep 9, 17041 (2019). https://doi.org/10.1038/s41598-019-53453-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-53453-2

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.