Abstract

We propose a realistic hybrid classical-quantum linear solver for systems of linear equations of a specific type, and demonstrate its feasibility with Qiskit on IBM Q systems. This algorithm makes use of a quantum random walk that runs in \({\mathscr{O}}(N\log (N))\) time on a quantum circuit made of \({\mathscr{O}}(\log (N))\) qubits. The input and output are classical data and so can be easily accessed. The algorithm is robust against noise and ready for implementation in applications such as machine learning.


Introduction

Algorithms that run on quantum computers hold promise to perform important computational tasks more efficiently than can be achieved on classical computers, most notably Grover’s search algorithm and Shor’s integer factorization1. One computational task indispensable for many problems in science, engineering, mathematics, finance, and machine learning is solving systems of linear equations \({\bf{A}}\overrightarrow{x}=\overrightarrow{b}\). Classical direct and iterative algorithms take \({\mathscr{O}}({N}^{3})\) and \({\mathscr{O}}({N}^{2})\) time, respectively2,3. Interestingly, the Harrow-Hassidim-Lloyd (HHL) quantum algorithm4,5,6,7,8,9,10,11,12,13, which is based on the quantum circuit model14, takes only \({\mathscr{O}}(\log (N))\) time to solve a sparse \(N\times N\) system of linear equations (for fixed sparsity, condition number, and precision), while for dense systems it requires \({\mathscr{O}}(\sqrt{N}\,\log (N))\)11. Linear solvers and experimental realizations that use quantum annealing and adiabatic quantum computing machines15,16,17 have also been reported18,19,20. Most recently, methods21,22 inspired by adiabatic quantum computing have been proposed for implementation on circuit-based quantum computers. Whether these algorithms offer a substantial quantum speedup remains unknown.

In practice, the applicability of quantum algorithms to classical problems is limited by the short coherence time of noisy quantum hardware in the so-called Noisy Intermediate-Scale Quantum (NISQ) era23 and by the difficulty of loading classical input data and reading out classical output data. Other roadblocks toward practical implementation include the limited number of qubits, limited connectivity between qubits, and large error-correction overhead. At present, experiments demonstrating the HHL linear solver on circuit quantum computers are limited to \(2\times 2\) matrices24,25,26,27,28,29, while linear solvers inspired by adiabatic quantum computing are limited to \(8\times 8\) matrices21,22. For quantum annealers, state-of-the-art linear solvers can solve up to \(12\times 12\) matrices20.

In addition to the problems of a limited number of available entangled qubits and short coherence time, the HHL-type algorithms for the so-called Quantum Linear Systems Problem (QLSP) are designed to work only when the input and output are quantum states30. This condition severely restricts practical applications in the NISQ era23,30,31. It has been shown that the HHL algorithm cannot extract information about the norm of the solution vector \(\overrightarrow{x}\)4. A state-preparation algorithm for loading a classical vector \(\overrightarrow{b}\) takes \({\mathscr{O}}(N)\) time30,32,33,34, with large overhead on current hardware. In addition, quantum state tomography is required to read out the classical solution vector \(\overrightarrow{x}\), which is a demanding task35,36, except for special cases such as one-dimensional entangled qubits37. Loading the matrix A is also a challenge that may eliminate the quantum speedup1,24,25,26,27,28,29.

In this work, we propose a hybrid classical-quantum linear solver that uses a circuit-based quantum computer to perform quantum random walks. In contrast to HHL-type linear solvers, the solution vector \(\overrightarrow{x}\) and the constant vector \(\overrightarrow{b}\) in this hybrid algorithm remain classical data in classical registers; only the matrix A is encoded in quantum registers. The idea is similar to that of variational quantum eigensolvers38,39,40,41, where quantum speedup is exploited only for sampling exponentially large Hilbert spaces, while the rest of the computational task is done by a classical computer. This makes data input and output easy: the \(\overrightarrow{b}\) vector can be arbitrary, and both the components and the norm of the \(\overrightarrow{x}\) vector can be accessed directly.

We consider matrices that are useful for Markov decision problems, such as those in reinforcement learning42. We show that these matrices can be efficiently encoded by introducing a Hamming cube structure: a square matrix of size N requires only \({\mathscr{O}}(\log (N))\) qubits. The quantum random walk algorithm proposed here takes \({\mathscr{O}}(\log (N))\) time to obtain one component of the \(\overrightarrow{x}\) vector (for a fixed number of walking steps and samples). We also show that, in the quantum random walk algorithm, qubit-qubit correlations make the resulting transition matrices inherently non-factorisable, which can be an advantage for performing difficult tasks. In the same amount of time, the classical random walk algorithm can handle only factorisable matrices.

We have tested the quantum random walk algorithm on IBM Q systems using the Qiskit software development kit43,44. Numerical results show that this linear solver works on an ideal quantum computer and, most importantly, also on a noisy quantum computer with a short coherence time, provided that the quantum circuit that encodes the A matrix is not too long. The limitation due to machine errors is discussed.

Results

We consider a system of linear equations of real numbers \({\bf{A}}\overrightarrow{x}=\overrightarrow{b}\), where A is an \(N\times N\) matrix and the \(N\times 1\) vectors \(\overrightarrow{x}\) and \(\overrightarrow{b}\) are, respectively, the solution vector and a vector of constants. We consider matrices A of the form

\[{\bf{A}}={\bf{1}}-\gamma {\bf{P}},\tag{1}\]
where 1 is the identity matrix and \(0 < \gamma < 1\) is a real number. We take P to be a (stochastic) Markov-chain transition matrix, such that \({P}_{I,J}\ge 0\) and \({\sum }_{J}\,{P}_{I,J}=1\), where \({P}_{I,J}\) is the element of P in the J-th column of the I-th row. This type of linear system appears in value estimation for reinforcement learning42,45,46 and in the radiosity equation in computer graphics47. In reinforcement learning algorithms, given a fixed policy of the learning agent, the vector \(\overrightarrow{x}\) is the value function that determines the long-term cumulative reward, and efficient estimation of this function is key to successful learning42. Note that the matrix A in Eq. (1), when used as a model Hamiltonian, belongs to the class of so-called stoquastic Hamiltonians48,49, since its off-diagonal elements \(-\gamma {P}_{I,J}\) are non-positive.

To solve \({\bf{A}}\overrightarrow{x}=\overrightarrow{b}\), we expand the solution vector as a Neumann series, \(\overrightarrow{x}={{\bf{A}}}^{-1}\overrightarrow{b}={({\bf{1}}-\gamma {\bf{P}})}^{-1}\overrightarrow{b}={\sum }_{s=0}^{\infty }\,{\gamma }^{s}{{\bf{P}}}^{s}\overrightarrow{b}\). Let us define the I0 component of \(\overrightarrow{x}\) truncated up to \({\gamma }^{c}\) terms as

\[{x}_{{I}_{0}}^{(c)}={\sum }_{s=0}^{c}\,{\gamma }^{s}{\sum }_{{I}_{1},\ldots ,{I}_{s}}\,{P}_{{I}_{0},{I}_{1}}{P}_{{I}_{1},{I}_{2}}\cdots {P}_{{I}_{s-1},{I}_{s}}\,{b}_{{I}_{s}}.\tag{2}\]
This expression for \({x}_{{I}_{0}}^{(c)}\) can be evaluated by random walks on a graph of N nodes, with the probability of going from node I to node J given by the matrix element \({P}_{I,J}\), which we take to be symmetric (undirected), namely \({P}_{I,J}={P}_{J,I}\). An example of a four-node graph is shown in Fig. 1(a). By performing a series of random walks that start from node I0, take c steps according to the transition probability matrix P, and end at some node Ic, Eq. (2) can be estimated as the average of \({\sum }_{s=0}^{c}{\gamma }^{s}{b}_{{I}_{s}}\) over the sampled walks. This gives the \({x}_{{I}_{0}}^{(c)}\) value, which approaches the solution \({x}_{{I}_{0}}\) for sufficiently large c. Truncating the series introduces an error \(\varepsilon \sim {\mathscr{O}}({\gamma }^{c})\), so for a given \(\gamma \) the number of steps needed to meet a tolerance \(\varepsilon \) is \(c\sim \,\log (1/\varepsilon )/\,\log (1/\gamma )\).
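The estimator described above can be sketched in a few lines of Python. This is a minimal classical illustration, not the authors' implementation. The names `neumann_cutoff`, `truncated_series`, and `random_walk_estimate` are hypothetical helpers, the transition matrix is stored as a dense list of rows, and each step is drawn with the standard-library `random.Random.choices`. Each sampled walk contributes \({\sum }_{s=0}^{c}{\gamma }^{s}{b}_{{I}_{s}}\), and the expectation of this sum is the truncated series of Eq. (2).

```python
import math
import random


def neumann_cutoff(gamma, tol):
    """Walk length c ~ log(1/tol) / log(1/gamma), so that gamma**c <= tol."""
    return math.ceil(math.log(1.0 / tol) / math.log(1.0 / gamma))


def truncated_series(P, b, gamma, i0, c):
    """Deterministic reference value x_{I0}^{(c)} = sum_{s=0}^{c} gamma^s (P^s b)_{I0}."""
    v = list(b)
    total = v[i0]
    for s in range(1, c + 1):
        # v <- P v, so after s iterations v = P^s b
        v = [sum(P[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
        total += gamma ** s * v[i0]
    return total


def random_walk_estimate(P, b, gamma, i0, c, n_samples, seed=0):
    """Monte Carlo estimate of Eq. (2): average of sum_s gamma^s b[I_s] over walks from I0."""
    rng = random.Random(seed)
    nodes = range(len(b))
    total = 0.0
    for _ in range(n_samples):
        node, score = i0, b[i0]
        for s in range(1, c + 1):
            node = rng.choices(nodes, weights=P[node])[0]  # next node drawn from row P[node]
            score += gamma ** s * b[node]
        total += score
    return total / n_samples
```

Consider the two-node example P = [[0.5, 0.5], [0.5, 0.5]] with b = [1, 0] and γ = 0.5. The exact component is \({x}_{0}=1.5\), and `neumann_cutoff(0.5, 1e-4)` gives c = 14.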

(a) Quantum (or classical) random walk on an undirected \(N=4\) graph. The transition probability of going from node \(I\) to node \(J\) or vice versa is equal to \({P}_{I,J}\), these elements forming a \(4\times 4\) matrix. (b) The four nodes on this Hamming cube are labeled by integers \((0,1,2,3)\); they are encoded as four different states \(\mathrm{|00}\rangle \), \(\mathrm{|01}\rangle \), \(\mathrm{|10}\rangle \), \(\mathrm{|11}\rangle \), respectively.

The above procedure can be extended to general matrices A by setting \({\bf{A}}={\bf{1}}-{\bf{B}}\), where \({B}_{I,J}={P}_{I,J}{v}_{I,J}\) for real matrix elements \({v}_{I,J}\) (see Methods, Solving for general matrices). The calculation converges50,51 provided that the spectral radius satisfies \(\rho ({{\bf{B}}}^{\ast }) < 1\), where the matrix B* is defined by \({B}_{I,J}^{\ast }=\frac{{B}_{I,J}^{2}}{{P}_{I,J}}={P}_{I,J}{v}_{I,J}^{2}\). The matrices considered here are the special case in which \({v}_{I,J}=\gamma \) is a constant, and this simplification guarantees convergence of the calculation.
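For the constant-weight case \({v}_{I,J}=\gamma \), the convergence condition can be checked directly. The short worked derivation below uses only the fact that P is row-stochastic. Here \(\overrightarrow{1}\) denotes the all-ones vector, and the bound uses the standard inequality between the spectral radius and the maximum-row-sum norm:

\[\rho ({{\bf{B}}}^{\ast })\le {\Vert {{\bf{B}}}^{\ast }\Vert }_{\infty }={\max }_{I}{\sum }_{J}\,{\gamma }^{2}{P}_{I,J}={\gamma }^{2},\qquad {{\bf{B}}}^{\ast }\overrightarrow{1}={\gamma }^{2}{\bf{P}}\overrightarrow{1}={\gamma }^{2}\overrightarrow{1}.\]

Thus \({\gamma }^{2}\) is an eigenvalue of B* that attains the bound, so \(\rho ({{\bf{B}}}^{\ast })={\gamma }^{2} < 1\) for every \(0 < \gamma < 1\).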

For classical Monte Carlo methods that compute Eq. (2), each walking step takes \({\mathscr{O}}(N)\) time to calculate the cumulative distribution function used to determine the next step. Since each of the N components of \(\overrightarrow{x}\) requires such walks, these linear systems can be solved by classical Monte Carlo methods within \({\mathscr{O}}({N}^{2})\) time52,53,54,55,56. Similar Monte Carlo methods have been extended to more general matrices in the Green’s function Monte Carlo method for many-body physics57,58,59.
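As a rough illustration of where the \({\mathscr{O}}(N)\) per-step cost comes from, the sketch below samples the next node by inverse-CDF lookup. Building the running sums of one row of P is linear in N, while the lookup itself is a bisection. The helper names `build_cdf` and `next_node` are hypothetical, and only Python's standard `itertools.accumulate` and `bisect.bisect_right` are used.

```python
import bisect
import itertools
import random


def build_cdf(row):
    """O(N): running sums of one row P[I][:] of the transition matrix."""
    return list(itertools.accumulate(row))


def next_node(cdf, rng):
    """Inverse-CDF sampling: the smallest J with cdf[J] > r, found by bisection in O(log N)."""
    r = rng.random() * cdf[-1]  # scale by the row total to absorb floating-point round-off
    return min(bisect.bisect_right(cdf, r), len(cdf) - 1)
```

If the rows of P are not stored, the CDF must be rebuilt at every step. This is the \({\mathscr{O}}(N)\) cost mentioned above, which becomes \({\mathscr{O}}({N}^{2})\) over all N components for a fixed walk length and sample count.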

Encoding state spaces on Hamming cubes

In terms of material resources, it generally takes at least \({\mathscr{O}}(N)\) classical bits to store a row of a stochastic transition matrix P (or A). For the classical and quantum random walks considered here, however, introducing the Hamming cube (HC) structure60 significantly reduces the number of classical or quantum bits needed to encode the transition probability matrix P, down to \({\mathscr{O}}(\log (N))\). To do so, we first associate each graph node with a bit string. As shown in Fig. 1(b), the four nodes of the \(N=4\) graph are fully represented by two bits: node states \(\mathrm{|0}\rangle \), \(\mathrm{|1}\rangle \), \(\mathrm{|2}\rangle \), and \(\mathrm{|3}\rangle \) represent the binary string states \(\mathrm{|00}\rangle \), \(\mathrm{|01}\rangle \), \(\mathrm{|10}\rangle \), and \(\mathrm{|11}\rangle \), respectively. For an N-node graph, only \(n={\log }_{2}(N)\) bits are needed to encode the integers \(J\in \{0,1,\ldots ,N-1\}\), each represented by the n-bit binary string state \(|J\rangle =|{j}_{n-1},\ldots {j}_{1},{j}_{0}\rangle \), where \({j}_{\ell }\) is 0 or 1.

Classical random walk

Before we introduce our quantum random walk algorithm, let us first consider classical random walks.

To perform random walks on an N-node graph, we use a simple coin-flipping process with \({\mathscr{O}}(\log (N))\) time steps. The \(\ell \)-th bit flips with probability \({\sin }^{2}({\theta }_{\ell }/2)\) and does not flip with probability \({\cos }^{2}({\theta }_{\ell }/2)\), so the two probabilities sum to 1. The transition probability matrix elements are given by

\[{P}_{J^{\prime} ,J}^{classical}={\prod }_{\ell =0}^{n-1}{[{\sin }^{2}({\theta }_{\ell }/2)]}^{{i}_{\ell }}{[{\cos }^{2}({\theta }_{\ell }/2)]}^{1-{i}_{\ell }},\tag{3}\]
where the n-bit binary string state \(|I{\rangle }_{c}=|{i}_{n-1},\ldots ,{i}_{1},{i}_{0}{\rangle }_{c}\) is determined by \(|J^{\prime} {\rangle }_{c}=|I{\rangle }_{c}\oplus |J{\rangle }_{c}\), \(\oplus \) denotes the bitwise exclusive or (XOR) operation, and the subscript c denotes classical states. The number of \({\sin }^{2}({\theta }_{\ell }/2)\) factors, \({d}^{classical}={\sum }_{\ell =0}^{n-1}\,{i}_{\ell }\), is the Hamming weight of \(|I{\rangle }_{c}\) and thus equals the Hamming distance between the \(|J^{\prime} {\rangle }_{c}\) and \(|J{\rangle }_{c}\) states. This metric counts the number of single-bit flips a walker needs to go from \(|J{\rangle }_{c}\) to \(|J^{\prime} {\rangle }_{c}\) on the Hamming cube.
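The coin-flipping walk and Eq. (3) can be written down directly on integer node labels, with bit ℓ of the label J playing the role of \({j}_{\ell }\). In this minimal sketch (hypothetical helper names, standard library only), one walking step costs \(n={\log }_{2}(N)\) biased coin tosses, and the empirical step distribution can be compared with the closed form.

```python
import math
import random


def p_classical(Jp, J, thetas):
    """Eq. (3): each set bit of I = J' xor J gives sin^2(theta_l/2), each unset bit cos^2(theta_l/2)."""
    I = Jp ^ J
    return math.prod(math.sin(t / 2) ** 2 if (I >> l) & 1 else math.cos(t / 2) ** 2
                     for l, t in enumerate(thetas))


def hamming_distance(Jp, J):
    """d^classical: the Hamming weight of I = J' xor J."""
    return bin(Jp ^ J).count("1")


def classical_step(J, thetas, rng):
    """One walking step: bit l of J flips with probability sin^2(theta_l/2), independently."""
    for l, t in enumerate(thetas):
        if rng.random() < math.sin(t / 2) ** 2:
            J ^= 1 << l
    return J
```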

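For the four-node graph shown in Fig. 1, and with the shorthand \({C}_{\ell }\equiv {\cos }^{2}({\theta }_{\ell }/2)\) and \({S}_{\ell }\equiv {\sin }^{2}({\theta }_{\ell }/2)\), the transition probability matrix P for classical random walks reads

\[{{\bf{P}}}^{classical}=\left(\begin{array}{cc}{C}_{1} & {S}_{1}\\ {S}_{1} & {C}_{1}\end{array}\right)\otimes \left(\begin{array}{cc}{C}_{0} & {S}_{0}\\ {S}_{0} & {C}_{0}\end{array}\right)=\left(\begin{array}{cccc}{C}_{0}{C}_{1} & {S}_{0}{C}_{1} & {C}_{0}{S}_{1} & {S}_{0}{S}_{1}\\ & {C}_{0}{C}_{1} & {S}_{0}{S}_{1} & {C}_{0}{S}_{1}\\ & & {C}_{0}{C}_{1} & {S}_{0}{C}_{1}\\ & & & {C}_{0}{C}_{1}\end{array}\right),\tag{4}\]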
where \(\otimes \) denotes the Kronecker product; the lower triangular part of the matrix is omitted due to symmetry. This simple case demonstrates a general feature of the classical transition probability matrix \({{\bf{P}}}^{classical}\): the probability of flipping both bits is simply the product of the probabilities of flipping bit 0 and bit 1, in arbitrary order. For instance, \({P}_{0,3}^{classical}={P}_{|00\rangle ,|11\rangle }^{classical}={\sin }^{2}({\theta }_{0}/2)\,{\sin }^{2}({\theta }_{1}/2)\), which is the product of the single-bit flip probabilities \({\sin }^{2}({\theta }_{0}/2)\) and \({\sin }^{2}({\theta }_{1}/2)\); the same holds for the other \({P}_{I,J}^{classical}\)’s. The fact that \({{\bf{P}}}^{classical}\) can be factorized into a Kronecker product of the matrices of the individual bits indicates that each bit flips independently, as in a Markovian process.
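The Kronecker structure of Eq. (4) extends to any n. The sketch below builds \({{\bf{P}}}^{classical}\) as \({{\bf{P}}}^{(n-1)}\otimes \cdots \otimes {{\bf{P}}}^{(0)}\), with bit 0 as the rightmost (least significant) factor, so that its entries agree with Eq. (3). It uses hypothetical helpers (`kron`, `bit_matrix`, `p_classical_kron`), stores matrices as dense nested lists, and needs no external libraries.

```python
import math


def kron(A, B):
    """Kronecker product of two dense matrices given as nested lists."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def bit_matrix(theta):
    """Single-bit transition matrix [[cos^2, sin^2], [sin^2, cos^2]] of theta/2."""
    c, s = math.cos(theta / 2) ** 2, math.sin(theta / 2) ** 2
    return [[c, s], [s, c]]


def p_classical_kron(thetas):
    """P^classical = P^(n-1) (x) ... (x) P^(0); bit 0 ends up as the least significant index."""
    P = [[1.0]]
    for t in reversed(thetas):  # highest bit first, so bit 0 is the rightmost factor
        P = kron(P, bit_matrix(t))
    return P
```

For N = 4, the entry \({P}_{0,3}\) of the result is the product of the two single-bit flip probabilities, as stated in the text.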

Quantum random walk

We can simulate quantum walks61,62,63,64,65,66,67 on an N-node graph to obtain the solution vector \(\overrightarrow{x}\) from Eq. (2). To do so, we use a discrete-time coined quantum walk circuit68,69. The circuit for the four-node graph in Fig. 1 is shown in Fig. 2. The first two qubits \({j}_{0}\) and \({j}_{1}\) are state registers that are initialized to encode a node of the four-node graph, while the third qubit \({j}_{2}\) is the coin register.

Discrete-time coined quantum walk circuit for the \(4\times 4\) transition matrix given in Eq. (10). Qubits \({j}_{0}\) and \({j}_{1}\) are the state register qubits representing the four-node graph in Fig. 1 and are set to 0 before initialization, while qubit \({j}_{2}\) is the coin register qubit. The measured registers \({c}_{0}\) and \({c}_{1}\) are fed back to initialize the next iteration. This classical step is repeated c times to obtain the Neumann expansion up to order c.

To derive the quantum transition probability matrix on a graph of N nodes, we consider the state space of the \((n+1)\)-qubit circuit as spanned by \({\{|{i}_{n}\rangle }_{\diamond }\otimes |{i}_{n-1},\ldots ,{i}_{1},{i}_{0}{\rangle }_{q}\}\) with \(n={\log }_{2}(N)\): the \((n+1)\)-th qubit registers the coin state \(|{i}_{n}{\rangle }_{\diamond }\), and the other n qubits encode the N-node graph. We take the convention that the rightmost bit is i0. Given an n-bit string \(({j}_{n-1},\ldots ,{j}_{1},{j}_{0})\), the initialized quantum state reads

\[|{\psi }_{0,J}\rangle ={|0\rangle }_{\diamond }\otimes {|{j}_{n-1},\ldots ,{j}_{1},{j}_{0}\rangle }_{q}.\tag{5}\]
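Next we let the \(|{\psi }_{\mathrm{0,}J}\rangle \) state evolve under the random walk: in each walking step, we toss the coin by rotating the coin qubit and then, conditioned on the coin, flip a graph qubit by applying a CNOT gate. This process is repeated on all n qubits of the \(|{j}_{n-1},{j}_{n-2},\ldots ,{j}_{2},{j}_{1},{j}_{0}{\rangle }_{q}\) state, starting with the 0-th qubit. The corresponding evolution operator reads

\[{\mathscr{U}}={{\prod }^{\prime }}_{k=0}^{n-1}\,\left[\left({|0\rangle }_{\diamond }\langle 0|\otimes {{\bf{1}}}_{q}+{|1\rangle }_{\diamond }\langle 1|\otimes {X}_{k}\right)\left({U}_{3}({{\bf{u}}}_{k})\otimes {{\bf{1}}}_{q}\right)\right],\tag{6}\]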
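where the prime (′) on the Π denotes that the \(k=0\) operator acts first (rightmost), followed by the \(k=1\) operator, and so on; the \({{\bf{1}}}_{q}\) operator is the identity map on the n-qubit state \(|J{\rangle }_{q}\), \({X}_{k}\) is a Pauli X gate (the Pauli matrix \({\sigma }_{x}\)) that acts on the \(k\)-th qubit, and \({U}_{3}({\bf{u}})\) is the single-qubit rotation operator

\[{U}_{3}({\bf{u}})=\left(\begin{array}{cc}\cos (\theta /2) & -{e}^{i\lambda }\sin (\theta /2)\\ {e}^{i\varphi }\sin (\theta /2) & {e}^{i(\varphi +\lambda )}\cos (\theta /2)\end{array}\right),\qquad {\bf{u}}=(\theta ,\varphi ,\lambda ),\tag{7}\]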
that acts on the coin qubit state, with \({{\bf{u}}}_{k}=({\theta }_{k},{\varphi }_{k},{\lambda }_{k})\) for the k-th walking step. Note that the first factor in parentheses in Eq. (6) is a CNOT gate with the coin qubit as control. Importantly, a single quantum coin decides the Pauli X gate operation for all n qubits, so the order of the qubit operations affects the transition probability matrix P.

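The first step is to apply \({\mathscr{U}}\) to \(|{\psi }_{\mathrm{0,}J}\rangle \), which gives

\[{\mathscr{U}}|{\psi }_{0,J}\rangle ={\sum }_{I}\,\left[{\prod }_{\ell =0}^{n-1}\langle {i}_{\ell }|{U}_{3}({{\bf{u}}}_{\ell })|{i}_{\ell -1}\rangle \right]\,{|{i}_{n-1}\rangle }_{\diamond }\otimes {|I\oplus J\rangle }_{q},\tag{8}\]

where the sum runs over all n-bit strings \(I=({i}_{n-1},\ldots ,{i}_{0})\),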
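with \({i}_{-1}=0\). By tracing out the coin degree of freedom, we obtain the reduced density matrix for the graph and hence the probability matrix \({P}_{J^{\prime} ,J}=\langle J^{\prime} |{{\rm{Tr}}}_{\diamond }[{\mathscr{U}}|{\psi }_{0J}\rangle \langle {\psi }_{0J}|{{\mathscr{U}}}^{\dagger }]|J^{\prime} \rangle \). The resulting quantum transition probability matrix elements then read

\[{P}_{J^{\prime} ,J}^{quantum}={\prod }_{\ell =0}^{n-1}{|\langle {i}_{\ell }|{U}_{3}({{\bf{u}}}_{\ell })|{i}_{\ell -1}\rangle |}^{2}={\prod }_{\ell =0}^{n-1}{[{\sin }^{2}({\theta }_{\ell }/2)]}^{{i}_{\ell }\oplus {i}_{\ell -1}}{[{\cos }^{2}({\theta }_{\ell }/2)]}^{1-({i}_{\ell }\oplus {i}_{\ell -1})},\tag{9}\]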
where \(|I{\rangle }_{q}=|{i}_{n-1},\ldots ,{i}_{1},{i}_{0}{\rangle }_{q}\) is determined by \(|J^{\prime} {\rangle }_{q}=|I{\rangle }_{q}\oplus |J{\rangle }_{q}\). For one \({\mathscr{U}}\) quantum evolution, the complex phase factors \({e}^{i{\varphi }_{\ell }}\) and \({e}^{i{\lambda }_{\ell }}\) play no role. We will see later that these phases come into play in the case of multiple evolutions \({{\mathscr{U}}}^{q}\).
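Eq. (9) can be cross-checked numerically with a small state-vector simulation of the circuit in Eq. (6). The sketch below is a plain-Python stand-in for running the circuit in Qiskit and is not the authors' code. Here `u3` follows the U3 matrix convention of Eq. (7), the amplitudes are kept in a dictionary keyed by (coin, node), and the coin is traced out at the end. The helper names are hypothetical. For a single evolution, the simulation reproduces Eq. (9), and changing the phases \({\varphi }_{\ell }\) and \({\lambda }_{\ell }\) leaves the result unchanged.

```python
import cmath
import math


def u3(theta, phi, lam):
    """U3 as in Eq. (7), stored as [[<0|U|0>, <0|U|1>], [<1|U|0>, <1|U|1>]]."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -cmath.exp(1j * lam) * s],
            [cmath.exp(1j * phi) * s, cmath.exp(1j * (phi + lam)) * c]]


def simulate_walk(params, q=1):
    """P[J'][J] after q evolutions of Eq. (6); params[k] = (theta_k, phi_k, lambda_k)."""
    n = len(params)
    gates = [u3(*p) for p in params]
    N = 2 ** n
    P = [[0.0] * N for _ in range(N)]
    for J in range(N):
        amp = {(0, J): 1.0 + 0j}  # coin in |0>, graph register in |J>
        for _ in range(q):
            for k in range(n):  # the k = 0 step acts first
                new = {}
                for (coin, node), a in amp.items():
                    for out in (0, 1):
                        # rotate the coin with U3, then CNOT: flip graph qubit k if the coin is |1>
                        key = (out, node ^ (out << k))
                        new[key] = new.get(key, 0j) + gates[k][out][coin] * a
                amp = new
        for (coin, node), a in amp.items():
            P[node][J] += abs(a) ** 2  # partial trace over the coin
    return P


def eq9(thetas):
    """Closed form of Eq. (9): sin^2 where i_l xor i_{l-1} = 1, cos^2 otherwise, i_{-1} = 0."""
    N = 2 ** len(thetas)
    P = [[1.0] * N for _ in range(N)]
    for Jp in range(N):
        for J in range(N):
            I, prev = Jp ^ J, 0
            for l, t in enumerate(thetas):
                bit = (I >> l) & 1
                P[Jp][J] *= math.sin(t / 2) ** 2 if bit ^ prev else math.cos(t / 2) ** 2
                prev = bit
    return P
```

Because each coin path I ends in a distinct (coin, node) pair when q = 1, no two paths interfere. This is why the phases drop out of Eq. (9).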

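To understand the transition probability matrix produced by the quantum walk circuit (Fig. 2), let us again consider the four-node graph in Fig. 1. Here, with \({C}_{\ell }\equiv {\cos }^{2}({\theta }_{\ell }/2)\) and \({S}_{\ell }\equiv {\sin }^{2}({\theta }_{\ell }/2)\), and with the lower triangular part omitted due to symmetry, we have

\[{{\bf{P}}}^{quantum}=\left(\begin{array}{cccc}{C}_{0}{C}_{1} & {S}_{0}{S}_{1} & {C}_{0}{S}_{1} & {S}_{0}{C}_{1}\\ & {C}_{0}{C}_{1} & {S}_{0}{C}_{1} & {C}_{0}{S}_{1}\\ & & {C}_{0}{C}_{1} & {S}_{0}{S}_{1}\\ & & & {C}_{0}{C}_{1}\end{array}\right).\tag{10}\]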
Unlike the above classical random walk, this matrix cannot be factorized into a Kronecker product of the matrices of each individual qubit. The probability of one qubit flipping depends on the other, indicating that the two qubits are correlated, or in quantum information theory entangled.

In comparison with Eq. (3) for the classical random walk, Eq. (9) shows that a classical computer needs an additional \({\mathscr{O}}(\log (N))\) XOR operations to obtain the same quantum transition probability matrix. In the case of \(N=4\), the classical and quantum transition probability matrices given by Eqs (4) and (10) are related by the permutation \((\begin{array}{cccc}0 & 1 & 2 & 3\\ 0 & 3 & 2 & 1\end{array})\). The quantum version of the Hamming distance between \(|J{\rangle }_{q}\) and \(|J^{\prime} {\rangle }_{q}\) is given by \({d}^{quantum}={\sum }_{\ell =0}^{n-1}\,{i}_{\ell }\oplus {i}_{\ell -1}\), which clearly shows the temporal correlation between the \(\ell \)-th and \((\ell -1)\)-th qubits. We attribute this correlation to two factors. First, a single quantum coin decides the Pauli X gate for all n qubits, which connects the qubits. Second, quantum walk dynamics are non-Markovian70,71: the quantum circuit memorizes the qubit state \(|{i}_{\ell -1}\rangle \) while walking in the direction of the qubit state \(|{i}_{\ell }\rangle \) on the Hamming cube.
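For N = 4, a few lines suffice to check the permutation relating Eqs (4) and (10) and the non-factorisability of the quantum matrix. This is an illustrative sketch with hypothetical helper names. The function `p_quantum` encodes the exponents \({i}_{\ell }\oplus {i}_{\ell -1}\) of Eq. (9) as the low n bits of I ⊕ (I << 1), and this shift-and-XOR is the extra work relative to Eq. (3).

```python
import math


def p_classical(Jp, J, thetas):
    """Eq. (3): sin^2(theta_l/2) for each set bit of I = J' xor J, cos^2(theta_l/2) otherwise."""
    I = Jp ^ J
    return math.prod(math.sin(t / 2) ** 2 if (I >> l) & 1 else math.cos(t / 2) ** 2
                     for l, t in enumerate(thetas))


def p_quantum(Jp, J, thetas):
    """Eq. (9): the sin^2 exponents i_l xor i_{l-1} are the low n bits of I xor (I << 1)."""
    I = Jp ^ J
    D = (I ^ (I << 1)) & ((1 << len(thetas)) - 1)
    return math.prod(math.sin(t / 2) ** 2 if (D >> l) & 1 else math.cos(t / 2) ** 2
                     for l, t in enumerate(thetas))


def d_quantum(Jp, J, n):
    """Quantum Hamming distance sum_l i_l xor i_{l-1}, with i_{-1} = 0."""
    I = Jp ^ J
    return bin((I ^ (I << 1)) & ((1 << n) - 1)).count("1")
```

With `sigma = [0, 3, 2, 1]`, `p_quantum(a, b, thetas)` equals `p_classical(sigma[a], sigma[b], thetas)` for all a and b. The row \(f(I)={P}_{I,0}^{quantum}\) generally violates the factorisation test \(f(0)f(3)=f(1)f(2)\).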

Note that the circuit given in Eq. (6) is just one possible design, leading to a particular correlation between qubits. In general, there are numerous ways to rearrange the walking steps to obtain different kinds of correlation, and the circuit can be designed for specific purposes. A simple example is to perform the walking steps in Eq. (6) in reverse order, applying the \(k=n-1\) operator first, followed by the \(k=n-2\) operator, and so on. This leads to a different metric \({d}^{quantum}={\sum }_{\ell =0}^{n-1}\,{i}_{\ell +1}\oplus {i}_{\ell }\) with \({i}_{n}=0\). It turns out that this \({d}^{quantum}\) corresponds to the Hamming distance in the Gray code representation.

The Gray code encodes the integer sequence \(0\to 1\to \cdots \to N-1\) such that adjacent integers differ by a single bit flip. In the case of the four-node graph in Fig. 1, the integers \((0,1,2,3)\) in the Gray code representation correspond to the \(\mathrm{|00}\rangle \), \(\mathrm{|01}\rangle \), \(\mathrm{|11}\rangle \), \(\mathrm{|10}\rangle \) states, respectively. This Gray code representation is obtained from the natural binary code representation by the permutation \((\begin{array}{cccc}0 & 1 & 2 & 3\\ 0 & 1 & 3 & 2\end{array})\). There also exists a permutation that transforms \({{\bf{P}}}^{classical}\) into \({{\bf{P}}}^{quantum}\) in the Gray code basis; the proof of this correspondence for arbitrary N is given in Methods (Gray code basis). Both the transform and the inverse transform between the natural binary code and Gray code representations take \({\mathscr{O}}(\log (N))\) operations on a classical computer72. This again shows that the quantum random walk algorithm gains an \({\mathscr{O}}(\log (N))\) improvement over the classical one.
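The following sketch checks several of these claims on small cases: the Gray code transform, its inverse, the XOR homomorphism of Lemma 2, and the permutation of Theorem 1. The helper names are hypothetical. Here `to_gray` computes B ⊕ (B >> 1), and `from_gray` undoes it with one shift-and-XOR per bit, that is, \({\mathscr{O}}(\log (N))\) operations. The function `p_quantum_reversed` is our transcription of the reverse-order walk, whose \({\sin }^{2}\) exponents are the bits of the Gray code of I = J′ ⊕ J.

```python
import math


def to_gray(B):
    """g(B)_i = B_{i+1} xor B_i with B_n = 0, i.e. B xor (B >> 1)."""
    return B ^ (B >> 1)


def from_gray(G):
    """Inverse transform: B_i = G_i xor G_{i+1} xor ... , one shift-and-xor per bit."""
    B = 0
    while G:
        B ^= G
        G >>= 1
    return B


def p_classical(Jp, J, thetas):
    """Eq. (3) for the classical walk."""
    I = Jp ^ J
    return math.prod(math.sin(t / 2) ** 2 if (I >> l) & 1 else math.cos(t / 2) ** 2
                     for l, t in enumerate(thetas))


def p_quantum_reversed(Jp, J, thetas):
    """Reverse-order walk: the sin^2 exponent of qubit l is i_{l+1} xor i_l, with i_n = 0."""
    D = to_gray(Jp ^ J)
    return math.prod(math.sin(t / 2) ** 2 if (D >> l) & 1 else math.cos(t / 2) ** 2
                     for l, t in enumerate(thetas))
```

Because `to_gray` is XOR-linear, relabelling both row and column indices of \({{\bf{P}}}^{classical}\) by `to_gray` reproduces the reverse-order quantum matrix, which is the content of Theorem 1.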

As the Hamming distance changes by \(\delta d=1\) at each walking step in the Gray code representation, a quantum walker along a geodesic of a Hamming cube automatically walks with the least action, that is, with the minimum change of the Hamming distance. This geodesic is a Hamiltonian path on the hypercube73.

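It is possible to increase the level of correlation in the probability matrix by performing multiple quantum evolutions, \({{\mathscr{U}}}^{q}\), where q is the number of quantum walk evolutions. The probability matrix produced by two quantum walk evolutions, \({{\mathscr{U}}}^{2}\), is given by (see Methods, Derivation of Eq. (11))

\[{P}_{J^{\prime} ,J}^{quantum}={\sum }_{k=0}^{1}\,{\left|{\sum }_{I}\,{\delta }_{{k}_{n-1},k}\,f(I,K)\right|}^{2},\qquad K=I\oplus J\oplus J^{\prime} ,\tag{11}\]

where, for \(I=({i}_{n-1},\ldots ,{i}_{0})\) and \(K=({k}_{n-1},\ldots ,{k}_{0})\),

\[f(I,K)={\prod }_{\ell =0}^{n-1}\langle {i}_{\ell }|{U}_{3}({{\bf{u}}}_{\ell })|{i}_{\ell -1}\rangle \langle {k}_{\ell }|{U}_{3}({{\bf{u}}}_{\ell })|{k}_{\ell -1}\rangle ,\qquad {i}_{-1}=0,\quad {k}_{-1}={i}_{n-1},\tag{12}\]

and

\[\langle \mu |{U}_{3}({{\bf{u}}}_{\ell })|\nu \rangle ={e}^{i(\mu {\varphi }_{\ell }+\nu {\lambda }_{\ell })}\,{(-1)}^{(1-\mu )\nu }\,{[\cos ({\theta }_{\ell }/2)]}^{1-(\mu \oplus \nu )}{[\sin ({\theta }_{\ell }/2)]}^{\mu \oplus \nu },\qquad \mu ,\nu \in \{0,1\}.\]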
The fact that the summation over I in Eq. (11) runs over \({\mathscr{O}}{\mathrm{(2}}^{n})\) state configurations before the square is taken points to the complicated mixing of negative signs and complex phases \({\varphi }_{\ell }\)’s and \({\lambda }_{\ell }\)’s. The sign problem makes it difficult for pure classical Monte Carlo methods to simulate this transition.

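In general, the dependence of the two-evolution quantum probability matrix on the \({\theta }_{\ell }\)’s, \({\varphi }_{\ell }\)’s, and \({\lambda }_{\ell }\)’s is not trivial. Its explicit expression for the \(N=4\) graph is given in Methods (Two-evolution quantum walk on N = 4 graph). The phases \({\varphi }_{\ell }\)’s and \({\lambda }_{\ell }\)’s come into play only for graph sizes \(N\ge 8\). By contrast, the two-evolution probability matrix for the classical random walk is given by

\[{({{\bf{P}}}^{classical})}^{2}={({{\bf{P}}}^{(n-1)})}^{2}\otimes \cdots \otimes {({{\bf{P}}}^{(0)})}^{2},\qquad {{\bf{P}}}^{(\ell )}=\left(\begin{array}{cc}{\cos }^{2}({\theta }_{\ell }/2) & {\sin }^{2}({\theta }_{\ell }/2)\\ {\sin }^{2}({\theta }_{\ell }/2) & {\cos }^{2}({\theta }_{\ell }/2)\end{array}\right),\]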
which is still factorisable.

Numerical results

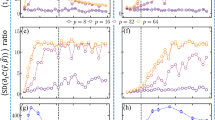

Figure 3 shows the performance of our hybrid quantum random walk algorithm on linear systems of dimension \(N=256\) and \(N=1024\); the relative errors decrease with increasing sampling number. The relative error is defined as \(\varepsilon =|{x}_{I}^{exact}-{x}_{I}|/|{x}_{I}^{exact}|\) for the I-th component of the solution vector \(\overrightarrow{x}\), where \({\overrightarrow{x}}^{exact}\) is the exact result obtained with the NumPy package. For this demonstration, we use randomly generated vectors \(\overrightarrow{b}\) and matrices A with uniformly distributed parameters, \({b}_{I}\in [\,-\,1,1]\) and \({\theta }_{\ell }\in [0,\pi ]\). We choose \(\gamma \) and c such that the error introduced by truncating the Neumann expansion is within \({\mathscr{O}}({10}^{-4})\). See Table 1 for the relevant parameters of the two matrices. The program is written and compiled with Qiskit version 0.7.2. The simulation results (upper figure) are obtained using the QASM simulator43, while the quantum machine results (lower figure) are obtained using the IBM Q 20 Tokyo or Poughkeepsie device74,75.

Relative errors \(\varepsilon =|{x}_{I}^{exact}-{x}_{I}|/|{x}_{I}^{exact}|\) as a function of the sampling number ns for \(N=256\) and \(N=1024\) matrices. The relevant parameters and estimated errors for these two matrices can be found in Table 1. Black solid lines represent the \(\mathrm{1/}\sqrt{{n}_{s}}\) error reduction expected for Monte Carlo calculations. (Upper figure) Red dashed line and green dash-dotted line are the results computed by the QASM simulator. (Lower figure) Blue dash-dotted line and red dotted line are data for the same matrices computed by the IBM Q 20 Tokyo machine or Poughkeepsie machine. Cyan and magenta horizontal dashed lines depict the estimated errors.

The curves obtained by the QASM simulator are averaged over ten runs. Their relative errors decrease as \(1/\sqrt{{n}_{s}}\), where ns is the number of random walk samplings. This \(1/\sqrt{{n}_{s}}\) reduction is typical of Monte Carlo simulations, because the hybrid quantum walk algorithm has essentially the same structure as classical Monte Carlo methods; we therefore gain no speedup in the number of samplings. Nevertheless, this result confirms that the proposed algorithm works on an ideal quantum computer.

For real IBM Q quantum devices, the accuracy stops improving after a certain number of samplings (see the plateau (blue dash-dotted curve) and oscillation (red dotted curve) in Fig. 3). This hardware limitation can be estimated using the error formula \({\varepsilon }_{0}\sim \kappa \times {E}_{r}\), where \(\kappa \) is the condition number of the matrix A and Er is the readout error of the real machine. The condition number \(\kappa \) bounds the ratio of the relative error in the solution vector \(\overrightarrow{x}\) to the relative error in the A matrix3. A perturbation of the matrix, \({\bf{A}}+\delta {\bf{A}}\), causes an error in the solution vector, \(\overrightarrow{x}+\delta \overrightarrow{x}\), such that \(\parallel \delta \overrightarrow{x}\parallel /\parallel \overrightarrow{x}\parallel \lesssim \kappa \times \parallel \delta {\bf{A}}\parallel /\parallel {\bf{A}}\parallel \). Taking Er as an estimate of the relative perturbation of A, we obtain the error of the solution vector as \({\varepsilon }_{0}\sim \kappa \times {E}_{r}\). The condition numbers given in Table 1 are computed by using Eq. (9) to construct the A matrices. For the average readout error of the IBM Q 20 Tokyo device, we use \({E}_{r}=6.76\times {10}^{-2}\)74. The estimated errors \({\varepsilon }_{0}\) are given in Table 1. The observed relative errors fall below the respective estimated errors \({\varepsilon }_{0}\), indicating that the precision limit is set by the readout error of current NISQ hardware. Note that the machines were calibrated several times during data collection, so the hardware error varies and the Er value is only an estimate.
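Once κ is known, the error estimate \({\varepsilon }_{0}\sim \kappa \times {E}_{r}\) is simple arithmetic. The sketch below assumes the factorisable \({{\bf{P}}}^{classical}\) of Eq. (3), whose spectrum follows from the Kronecker structure: each single-bit factor has eigenvalues 1 and \(\cos {\theta }_{\ell }\). Because A = 1 − γP is symmetric, κ is the ratio of its largest to smallest eigenvalue magnitude. Using the same spectrum for the correlated matrices of Eq. (9) is an assumption on our part. It holds when the two matrices are related by a simultaneous row and column permutation, as in the N = 4 example of Eqs (4) and (10). The default readout error is the Tokyo value \({E}_{r}=6.76\times {10}^{-2}\) quoted above, and the function names are hypothetical.

```python
import itertools
import math


def spectrum_classical_P(thetas):
    """Eigenvalues of P^classical: products of cos(theta_l) over every subset of bits."""
    eigs = []
    for mask in itertools.product((False, True), repeat=len(thetas)):
        lam = 1.0
        for use, t in zip(mask, thetas):
            if use:
                lam *= math.cos(t)  # eigenvalue c - s = cos(theta) of [[c, s], [s, c]]
        eigs.append(lam)
    return eigs


def condition_number(thetas, gamma):
    """2-norm condition number of the symmetric matrix A = 1 - gamma * P."""
    mus = [1.0 - gamma * lam for lam in spectrum_classical_P(thetas)]
    return max(abs(m) for m in mus) / min(abs(m) for m in mus)


def readout_error_bound(kappa, readout_error=6.76e-2):
    """epsilon_0 ~ kappa * E_r."""
    return kappa * readout_error
```

Since the eigenvalues of a stochastic P lie in [−1, 1], κ never exceeds (1 + γ)/(1 − γ). This bound grows quickly as γ approaches 1.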

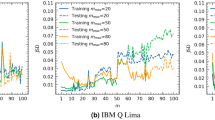

Figure 4 shows the results for linear systems of dimension \(N=64\) and \(N=128\), obtained with the QASM simulator performing two quantum walk evolutions with uniformly distributed \(({\theta }_{\ell },{\varphi }_{\ell },{\lambda }_{\ell })\in [0,\pi ]\). The relevant parameters for these two matrices are given in Table 1. The results again show that the algorithm works well, even in the presence of the complex phases \({\varphi }_{\ell }\)’s and \({\lambda }_{\ell }\)’s. Note that here we take (\({\theta }_{\ell }\), \({\varphi }_{\ell }\), \({\lambda }_{\ell }\)) as random variables to demonstrate the efficiency of our algorithm; in real applications, these variables must be provided by other algorithms to generate a proper P matrix.

Relative errors \(\varepsilon =|{x}_{I}^{exact}-{x}_{I}|/|{x}_{I}^{exact}|\) as a function of the sampling number ns for \(N=64\) and \(N=128\) matrices, obtained by performing two quantum walk evolutions, \({{\mathscr{U}}}^{2}\). Black solid lines represent the \(1/\sqrt{{n}_{s}}\) error reduction expected for Monte Carlo calculations. Red dashed line and blue dotted line are the results computed by the QASM simulator.

The communication latency between the classical and quantum computers is the most time-consuming part, involving \({\mathscr{O}}(c{n}_{s})\) communications. Fortunately, this number does not scale with N. For users with direct access to the quantum processors, the communication bottleneck should be less severe.

Discussion

A comparison of computational resources is given in Table 2. The hybrid quantum walk algorithm needs \(1+\,\log (N)\) qubits, \(q\,\log (N)\) CNOT gates, and \(q\,\log (N)\) U3 gates, where q is the number of evolutions. The initialization takes log(N) X gates, but since these can be executed simultaneously, the initialization occupies only one time slot. In total, \(1+2q\,\log (N)\) time slots are required per circuit execution, that is, per walking step of a walk that contributes to one component of the solution vector \(\overrightarrow{x}\). This can be an advantage when one is interested in only partial information about \(\overrightarrow{x}\). The same number of time slots can be derived for the classical random walk algorithm. However, we stress that the two algorithms deal with different transition probability matrices: factorisable matrices for the classical random walk, and more complex, correlated matrices for the quantum random walk. The qubit-qubit correlation built into the correlated matrix can potentially be harnessed to perform complex tasks.
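The resource counts above follow from simple arithmetic in \(n={\log }_{2}(N)\) and q. This small helper is hypothetical (the key names are ours) and simply restates the formulas in the text for one circuit execution.

```python
import math


def walk_resources(N, q=1):
    """Resource counts quoted in the text for one execution of the q-evolution circuit."""
    n = int(math.log2(N))
    if 2 ** n != N:
        raise ValueError("N must be a power of two")
    return {
        "qubits": 1 + n,              # n graph qubits plus one coin qubit
        "cnot": q * n,                # one CNOT per walking step per evolution
        "u3": q * n,                  # one coin rotation per walking step per evolution
        "x_init": n,                  # up to n X gates for initialization, in one time slot
        "time_slots": 1 + 2 * q * n,  # initialization slot + one (U3, CNOT) pair per step
    }
```

For example, the N = 1024 system with a single evolution needs 11 qubits and 21 time slots per circuit execution.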

Other advantages of the algorithms we propose are:

- (i) By restricting the matrices A to those that can be encoded in Hamming cubes, we can sample both classical and quantum random walk spaces that scale exponentially with the number of bits/qubits, and hence reduce the space complexity.
- (ii) Classical Monte Carlo methods have a time complexity of \({\mathscr{O}}(N)\) per walking step for general P matrices. For the matrices considered here, our algorithms need \({\mathscr{O}}(\log (N))\).
- (iii) Input and output are easier to access than in HHL-type algorithms.
- (iv) Random processes in a quantum computer are fundamental, and so are not plagued by the problems associated with pseudo-random number generators76, such as finite periods and unwanted correlations.
- (v) Our quantum algorithm can run on noisy quantum computers whose coherence time is short.

We propose a hybrid quantum algorithm suitable for NISQ quantum computers to solve systems of linear equations. The solution vector \(\overrightarrow{x}\) and the constant vector \(\overrightarrow{b}\) considered here are classical data, so the input and readout can be executed easily. Numerical simulations and runs on IBM Q systems support the feasibility of this algorithm. We demonstrate that, by performing two quantum walk evolutions, the resulting probability matrix becomes more correlated in the parameter space. As long as a quantum circuit in this framework produces a highly correlated probability matrix with a relatively short circuit depth, a quantum advantage over classical circuits can be gained.

Methods

Gray code basis

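The natural binary code \(B=({B}_{n-1},{B}_{n-2},\ldots ,{B}_{1},{B}_{0})\) is transformed to the Gray code basis72 according to

\[{G}_{i}={B}_{i+1}\oplus {B}_{i},\tag{A.1}\]

\(\forall i\in \{0,\ldots ,n-1\}\) with \({B}_{n}=0\). The probability matrix in the Gray code basis is given by

\[{P}_{J^{\prime} ,J}^{quantum}={\prod }_{\ell =0}^{n-1}{[{\sin }^{2}({\theta }_{\ell }/2)]}^{{i}_{\ell +1}\oplus {i}_{\ell }}{[{\cos }^{2}({\theta }_{\ell }/2)]}^{1-({i}_{\ell +1}\oplus {i}_{\ell })},\tag{A.2}\]

with \(I=J^{\prime} \oplus J\) and \({i}_{n}=0\).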

Lemma 1

Let SN be the set of all possible n-bit strings \(\{({S}_{n-1},{S}_{n-2},\ldots ,{S}_{1},{S}_{0})|{S}_{i}\in \{0,1\}\,\forall \,i\in \{0,1,\ldots ,n-1\}\}\) with \(n={\log }_{2}\,N\), and let \(\pi \) be a permutation of the set SN. If there exists a function \(f:{S}_{N}\mapsto {\mathbb{R}}\) such that for \(A\in {{\mathbb{R}}}^{N\times N}\),

\[{A}_{I\oplus J,J}=f(I)\tag{A.3}\]

\(\forall \,I,J\in {S}_{N}\), and if \(\pi \) is bitwise XOR homomorphic, that is, \(\pi (I\oplus J)=\pi (I)\oplus \pi (J)\) for all \(I,J\in {S}_{N}\), then we have \({A}_{\pi (I\oplus J),\pi (J)}=f(\pi (I))\).

Proof 1 Since \(\pi \) is bitwise XOR homomorphic, Eq. (A.3) leads to

\[{A}_{\pi (I\oplus J),\pi (J)}={A}_{\pi (I)\oplus \pi (J),\pi (J)}=f(\pi (I))\]

\(\forall \,I,J\in {S}_{N}\).

Lemma 2

Let \(B\in {S}_{N}\) be represented by \(({B}_{n-1},\ldots ,{B}_{0})\). Let \(g:{S}_{N}\mapsto {S}_{N}\) be a function that transforms from natural bit string to Gray code according to \(g{(B)}_{i}={B}_{i+1}\oplus {B}_{i}\), \(\forall \,i\in \{0,1,\ldots ,n-1\}\) with \({B}_{n}=0\). Then g is a bitwise XOR homomorphism.

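Proof 2 Let \(I,J\in {S}_{N}\) be represented by bit strings \(({I}_{n-1},\ldots ,{I}_{0})\) and \(({J}_{n-1},\ldots ,{J}_{0})\), respectively. Using

\[g{(I\oplus J)}_{i}={(I\oplus J)}_{i+1}\oplus {(I\oplus J)}_{i}={I}_{i+1}\oplus {J}_{i+1}\oplus {I}_{i}\oplus {J}_{i}\]

with \({I}_{n}={J}_{n}=0\), together with the commutativity and associativity of \(\oplus \), we get

\[g{(I\oplus J)}_{i}=({I}_{i+1}\oplus {I}_{i})\oplus ({J}_{i+1}\oplus {J}_{i})=g{(I)}_{i}\oplus g{(J)}_{i}\]

\(\forall \,i\in \{0,\ldots ,n-1\}\).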

The following theorem follows directly from Lemma 1 and Lemma 2.

Theorem 1

There exists a permutation that maps the probability matrix produced by the classical random walk to the probability matrix given in Eq. (A.2), which is produced by the quantum random walk circuit with its walking steps applied in reverse order, that is, in the Gray code basis.

Derivation of Eq. (11)

We use the evolution operator given in Eq. (6),

to compute the two-evolution operator

Next we project the \({{\mathscr{U}}}^{2}\) operator on the \(|{\psi }_{0J}\rangle \) state,

where \(f(I,K)\) is given in Eq. (12) and

We then project \({{\mathscr{U}}}^{2}|{\psi }_{0J}\rangle \) on the final state \(|k{\rangle }_{\diamond }|J^{\prime} {\rangle }_{q}\)

which leads to the probability matrix elements as

Two-evolution quantum walk on N = 4 graph

The probability matrix elements \({P}_{J^{\prime} ,J}^{quantum}\) for two quantum evolutions \({{\mathscr{U}}}^{2}\) on the four-node graph read

Surprisingly, in this case the matrix elements do not depend on the phases \(({\varphi }_{0},{\varphi }_{1})\) and \(({\lambda }_{0},{\lambda }_{1})\). However, the matrix elements do depend on complex phases when \(N\ge 8\), as can be checked numerically. Note that \(({P}_{01},{P}_{02},{P}_{23},{P}_{13})\) depend on \({\theta }_{1}\) only: destructive interference between configurations completely eliminates the \({\theta }_{0}\) dependence, which is difficult to achieve with simple classical random walks.

Solving for general matrices

Here we discuss the applicability of our quantum random walk algorithm to general matrices50,51,77,78. Given an arbitrary matrix A, we define \({\bf{B}}={\bf{1}}-{\bf{A}}\) and factor its elements as \({B}_{I,J}={P}_{I,J}{v}_{I,J}\), where \({P}_{I,J}\) are transition probabilities and \({v}_{I,J}\) are weight factors. Because \(\overrightarrow{x}={({\bf{1}}-{\bf{B}})}^{-1}\overrightarrow{b}=\sum _{k=0}^{\infty }{{\bf{B}}}^{k}\overrightarrow{b}\) whenever this series converges, the linear system \({\bf{A}}\overrightarrow{x}=\overrightarrow{b}\) can be solved by performing random walks according to the transition probabilities \({P}_{I,J}\) and multiplying by the factor \({v}_{I,J}\) at each step, provided that the solver converges. For classical random walk algorithms, it has been shown50 that convergence depends on the spectral radius \(\rho ({{\bf{B}}}^{\ast })\) of the matrix \({{\bf{B}}}^{\ast }\), where \({B}_{I,J}^{\ast }={B}_{I,J}^{2}/{P}_{I,J}={P}_{I,J}{v}_{I,J}^{2}\); specifically, the necessary and sufficient condition for convergence is \(\rho ({{\bf{B}}}^{\ast }) < 1\). We expect a similar condition to hold for quantum random walk algorithms. However, the hybrid solver presented in this work should be regarded as a special-purpose solver, in which the quantum circuit is designed for a specific matrix problem. The quantum circuits demonstrated in this work show that there are transition probability matrices that are easy to sample using quantum circuits but difficult to sample using classical circuits. How to tailor a circuit design, together with its parameters, to a particular application is beyond the scope of this work.
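The classical random-walk scheme described in this paragraph (the Ulam-von Neumann method) can be sketched in a few lines of Python/NumPy. This is an illustration of the classical scheme only, not of the quantum circuit, and it rests on our own assumptions: the transition probabilities are taken as \({P}_{I,J}\propto |{B}_{I,J}|\) (one common choice, not prescribed by this work), the series is truncated after a fixed number of steps, and the function name `ulam_von_neumann_solve` and its parameters are hypothetical. The sketch also evaluates \(\rho ({{\bf{B}}}^{\ast })\) for the chosen P.

```python
import numpy as np


def ulam_von_neumann_solve(A, b, n_walks=4000, n_steps=40, seed=0):
    """Estimate x in A x = b from random walks on B = 1 - A (illustrative sketch)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    N = b.size
    B = np.eye(N) - A
    row_abs = np.abs(B).sum(axis=1, keepdims=True)
    # Assumed choice: P_IJ proportional to |B_IJ| (rows of B that are all zero get P = 0).
    P = np.divide(np.abs(B), row_abs, out=np.zeros_like(B), where=row_abs > 0)
    # Weights v_IJ = B_IJ / P_IJ, so that B_IJ = P_IJ * v_IJ wherever B_IJ != 0.
    v = np.divide(B, P, out=np.zeros_like(B), where=P > 0)
    # Convergence criterion quoted in the text: spectral radius of B*_IJ = P_IJ * v_IJ**2.
    rho_star = np.max(np.abs(np.linalg.eigvals(P * v**2)))

    cum = np.cumsum(P, axis=1)  # per-row cumulative probabilities for sampling
    rng = np.random.default_rng(seed)
    x = np.empty(N)
    for i in range(N):
        state = np.full(n_walks, i)      # all walkers start at node i
        weight = np.ones(n_walks)        # product of v factors along each walk
        estimate = np.full(n_walks, b[i])  # k = 0 term of sum_k (B^k b)_i
        for _ in range(n_steps):         # truncate the Neumann series
            u = rng.random(n_walks)
            # Sample next node J with probability P[state, J]; clip guards rounding of cum.
            nxt = np.minimum((cum[state] <= u[:, None]).sum(axis=1), N - 1)
            weight = weight * v[state, nxt]  # zero once a walker hits an all-zero row of B
            state = nxt
            estimate = estimate + weight * b[state]  # E[W_k b_{s_k}] = (B^k b)_i
        x[i] = estimate.mean()
    return x, rho_star


A = np.array([[1.0, -0.2, 0.1], [0.1, 1.0, -0.3], [-0.2, 0.1, 1.0]])
b = np.array([1.0, 2.0, 3.0])
x_mc, rho_star = ulam_von_neumann_solve(A, b)
print(rho_star < 1, x_mc, np.linalg.solve(A, b))
```

For this diagonally dominant example, \(\rho ({{\bf{B}}}^{\ast })\) is well below 1 and the random-walk estimate should agree with `numpy.linalg.solve` to within Monte Carlo error, which shrinks roughly as \(1/\sqrt{{n}_{walks}}\).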

References

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information: 10th Anniversary Edition. 10th edn. (Cambridge University Press, New York, NY, USA, 2011).

Golub, G. H. & Van Loan, C. F. Matrix Computations. 3rd edn. (Johns Hopkins University Press, Baltimore, MD, USA, 1996).

Saad, Y. Iterative Methods for Sparse Linear Systems. 2nd edn. (Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 2003).

Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 103, 150502, https://doi.org/10.1103/PhysRevLett.103.150502 (2009).

Clader, B. D., Jacobs, B. C. & Sprouse, C. R. Preconditioned quantum linear system algorithm. Phys. Rev. Lett. 110, 250504, https://doi.org/10.1103/PhysRevLett.110.250504 (2013).

Montanaro, A. & Pallister, S. Quantum algorithms and the finite element method. Phys. Rev. A 93, 032324, https://doi.org/10.1103/PhysRevA.93.032324 (2016).

Childs, A., Kothari, R. & Somma, R. Quantum algorithm for systems of linear equations with exponentially improved dependence on precision. SIAM Journal on Computing 46, 1920–1950, https://doi.org/10.1137/16M1087072 (2017).

Costa, P. C. S., Jordan, S. & Ostrander, A. Quantum algorithm for simulating the wave equation. Phys. Rev. A 99, 012323, https://doi.org/10.1103/PhysRevA.99.012323 (2019).

Berry, D. W., Childs, A. M., Ostrander, A. & Wang, G. Quantum algorithm for linear differential equations with exponentially improved dependence on precision. Communications in Mathematical Physics 356, 1057–1081, https://doi.org/10.1007/s00220-017-3002-y (2017).

Dervovic, D. et al. Quantum linear systems algorithms: a primer. arXiv e-prints arXiv:1802.08227 (2018).

Wossnig, L., Zhao, Z. & Prakash, A. Quantum linear system algorithm for dense matrices. Phys. Rev. Lett. 120, 050502, https://doi.org/10.1103/PhysRevLett.120.050502 (2018).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195–202, https://doi.org/10.1038/nature23474, 1611.09347 (2017).

Ciliberto, C. et al. Quantum machine learning: a classical perspective. Proceedings of the Royal Society of London Series A 474, 20170551, https://doi.org/10.1098/rspa.2017.0551, 1707.08561 (2018).

Deutsch, D. Quantum theory, the Church–Turing principle and the universal quantum computer. Proceedings of the Royal Society of London. A. Mathematical and Physical Sciences 400, 97–117 (1985).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363, https://doi.org/10.1103/PhysRevE.58.5355 (1998).

Farhi, E., Goldstone, J., Gutmann, S. & Sipser, M. Quantum Computation by Adiabatic Evolution. eprint arXiv:quant-ph/0001106 (2000).

Aharonov, D. et al. Adiabatic quantum computation is equivalent to standard quantum computation. SIAM Review 50, 755–787, https://doi.org/10.1137/080734479 (2008).

O’Malley, D. & Vesselinov, V. V. ToQ.jl: A high-level programming language for D-Wave machines based on Julia. In 2016 IEEE High Performance Extreme Computing Conference (HPEC), 1–7, https://doi.org/10.1109/HPEC.2016.7761616 (2016).

Borle, A. & Lomonaco, S. J. Analyzing the Quantum Annealing Approach for Solving Linear Least Squares Problems. arXiv e-prints arXiv:1809.07649 (2018).

Chang, C. C., Gambhir, A., Humble, T. S. & Sota, S. Quantum annealing for systems of polynomial equations. Scientific Reports 9, https://doi.org/10.1038/s41598-019-46729-0 (2019).

Wen, J. et al. Experimental realization of quantum algorithms for a linear system inspired by adiabatic quantum computing. Phys. Rev. A 99, 012320, https://doi.org/10.1103/PhysRevA.99.012320 (2019).

Subaşı, Y., Somma, R. D. & Orsucci, D. Quantum algorithms for systems of linear equations inspired by adiabatic quantum computing. Phys. Rev. Lett. 122, 060504, https://doi.org/10.1103/PhysRevLett.122.060504 (2019).

Preskill, J. Quantum Computing in the NISQ era and beyond. Quantum 2, 79, https://doi.org/10.22331/q-2018-08-06-79 (2018).

Cao, Y., Daskin, A., Frankel, S. & Kais, S. Quantum circuit design for solving linear systems of equations. Molecular Physics 110, 1675–1680, https://doi.org/10.1080/00268976.2012.668289 (2012).

Cai, X.-D. et al. Experimental quantum computing to solve systems of linear equations. Phys. Rev. Lett. 110, 230501, https://doi.org/10.1103/PhysRevLett.110.230501 (2013).

Barz, S. et al. A two-qubit photonic quantum processor and its application to solving systems of linear equations. Scientific Reports 4, 6115, https://doi.org/10.1038/srep06115, 1302.1210 (2014).

Pan, J. et al. Experimental realization of quantum algorithm for solving linear systems of equations. Phys. Rev. A 89, 022313, https://doi.org/10.1103/PhysRevA.89.022313 (2014).

Zheng, Y. et al. Solving systems of linear equations with a superconducting quantum processor. Phys. Rev. Lett. 118, 210504, https://doi.org/10.1103/PhysRevLett.118.210504 (2017).

Lee, Y., Joo, J. & Lee, S. Hybrid quantum linear equation algorithm and its experimental test on IBM Quantum Experience. Scientific Reports 9, 4778 (2019).

Aaronson, S. Read the fine print. Nature Physics 11, 291–293, https://doi.org/10.1038/nphys3272 (2015).

Childs, A. M. Quantum algorithms: Equation solving by simulation. Nature Physics 5, 861, https://doi.org/10.1038/nphys1473 (2009).

Möttönen, M., Vartiainen, J. J., Bergholm, V. & Salomaa, M. M. Quantum circuits for general multiqubit gates. Phys. Rev. Lett. 93, 130502, https://doi.org/10.1103/PhysRevLett.93.130502 (2004).

Plesch, M. & Brukner, Č. Quantum-state preparation with universal gate decompositions. Phys. Rev. A 83, 032302, https://doi.org/10.1103/PhysRevA.83.032302 (2011).

Coles, P. J. et al. Quantum Algorithm Implementations for Beginners. arXiv e-prints arXiv:1804.03719 (2018).

James, D. F. V., Kwiat, P. G., Munro, W. J. & White, A. G. Measurement of qubits. Phys. Rev. A 64, 052312, https://doi.org/10.1103/PhysRevA.64.052312 (2001).

Suess, D., Rudnicki, Ł., Maciel, T. O. & Gross, D. Error regions in quantum state tomography: computational complexity caused by geometry of quantum states. New Journal of Physics 19, 093013, https://doi.org/10.1088/1367-2630/aa7ce9 (2017).

Cramer, M. et al. Efficient quantum state tomography. Nature Communications 1, 149, https://doi.org/10.1038/ncomms1147, 1101.4366 (2010).

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nature Communications 5, 4213, https://doi.org/10.1038/ncomms5213, 1304.3061 (2014).

Wecker, D., Hastings, M. B. & Troyer, M. Progress towards practical quantum variational algorithms. Phys. Rev. A 92, 042303, https://doi.org/10.1103/PhysRevA.92.042303 (2015).

McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. New Journal of Physics 18, 023023, https://doi.org/10.1088/1367-2630/18/2/023023, 1509.04279 (2016).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242–246, https://doi.org/10.1038/nature23879, 1704.05018 (2017).

Sutton, R. S. & Barto, A. G. Introduction to Reinforcement Learning. 1st edn. (MIT Press, Cambridge, MA, USA, 1998).

Aleksandrowicz, G. et al. Qiskit: An open-source framework for quantum computing, https://doi.org/10.5281/zenodo.2562110 (2019).

IBM Q Experience, https://quantumexperience.ng.bluemix.net, Accessed: 12/01/2018 (2016).

Barto, A. & Duff, M. Monte Carlo matrix inversion and reinforcement learning. In Proceedings of the 6th International Conference on Neural Information Processing Systems, NIPS’93, 687–694 (Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 1993).

Paparo, G. D., Dunjko, V., Makmal, A., Martin-Delgado, M. A. & Briegel, H. J. Quantum speedup for active learning agents. Phys. Rev. X 4, 031002, https://doi.org/10.1103/PhysRevX.4.031002 (2014).

Goral, C. M., Torrance, K. E., Greenberg, D. P. & Battaile, B. Modeling the interaction of light between diffuse surfaces. SIGGRAPH Comput. Graph. 18, 213–222, https://doi.org/10.1145/964965.808601 (1984).

Bravyi, S., DiVincenzo, D. P., Oliveira, R. & Terhal, B. M. The complexity of stoquastic local Hamiltonian problems. Quantum Info. Comput. 8, 361–385 (2008).

Bravyi, S. Monte Carlo simulation of stoquastic Hamiltonians. Quantum Info. Comput. 15, 1122–1140 (2015).

Ji, H., Mascagni, M. & Li, Y. Convergence analysis of Markov chain Monte Carlo linear solvers using Ulam-von Neumann algorithm. SIAM Journal on Numerical Analysis 51, 2107–2122 (2013).

Dimov, I. T. & McKee, S. Monte Carlo Methods for Applied Scientists (World Scientific Press, 2004).

Metropolis, N. & Ulam, S. The Monte Carlo method. Journal of the American Statistical Association 44, 335–341, https://doi.org/10.1080/01621459.1949.10483310, PMID: 18139350 (1949).

Forsythe, G. E. & Leibler, R. A. Matrix inversion by a Monte Carlo method. Mathematics of Computation 4, 127–129 (1950).

Wasow, W. R. A note on the inversion of matrices by random walks. Mathematical Tables and Other Aids to Computation 6, 78–81 (1952).

Lu, F. & Schuurmans, D. Monte Carlo matrix inversion policy evaluation. In Proceedings of the Nineteenth Conference on Uncertainty in Artificial Intelligence, UAI’03, 386–393 (Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 2003).

Branford, S. et al. Monte Carlo methods for matrix computations on the grid. Future Generation Computer Systems 24, 605–612, https://doi.org/10.1016/j.future.2007.07.006 (2008).

Ceperley, D. M. & Alder, B. J. Ground state of the electron gas by a stochastic method. Phys. Rev. Lett. 45, 566–569, https://doi.org/10.1103/PhysRevLett.45.566 (1980).

Negele, J. W. & Orland, H. Quantum many-particle physics (Addison-Wesley, 1988).

Landau, D. & Binder, K. A Guide to Monte Carlo Simulations in Statistical Physics. (Cambridge University Press, New York, NY, USA, 2005).

Hamming, R. W. Error detecting and error correcting codes. The Bell System Technical Journal 29, 147–160, https://doi.org/10.1002/j.1538-7305.1950.tb00463.x (1950).

Aharonov, Y., Davidovich, L. & Zagury, N. Quantum random walks. Phys. Rev. A 48, 1687–1690, https://doi.org/10.1103/PhysRevA.48.1687 (1993).

Childs, A. M., Farhi, E. & Gutmann, S. An example of the difference between quantum and classical random walks. eprint arXiv:quant-ph/0103020 (2001).

Aharonov, D., Ambainis, A., Kempe, J. & Vazirani, U. Quantum walks on graphs. In Proceedings of the Thirty-third Annual ACM Symposium on Theory of Computing, STOC ’01, 50–59, https://doi.org/10.1145/380752.380758 (ACM, New York, NY, USA, 2001).

Moore, C. & Russell, A. Quantum walks on the hypercube. In Rolim, J. D. P. & Vadhan, S. (eds) Randomization and Approximation Techniques in Computer Science, 164–178 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2002).

Szegedy, M. Quantum speed-up of Markov chain based algorithms. In Proceedings of the 45th Annual IEEE Symposium on Foundations of Computer Science, FOCS ’04, 32–41, https://doi.org/10.1109/FOCS.2004.53 (IEEE Computer Society, Washington, DC, USA, 2004).

Kendon, V. M. A random walk approach to quantum algorithms. Philosophical Transactions of the Royal Society of London Series A 364, 3407–3422, https://doi.org/10.1098/rsta.2006.1901, quant-ph/0609035 (2006).

Childs, A. Lecture notes on quantum algorithms (2017).

Košík, J. & Bužek, V. Scattering model for quantum random walks on a hypercube. Phys. Rev. A 71, 012306, https://doi.org/10.1103/PhysRevA.71.012306 (2005).

Shikano, Y. & Katsura, H. Localization and fractality in inhomogeneous quantum walks with self-duality. Phys. Rev. E 82, 031122, https://doi.org/10.1103/PhysRevE.82.031122 (2010).

Breuer, H.-P., Laine, E.-M., Piilo, J. & Vacchini, B. Colloquium: Non-Markovian dynamics in open quantum systems. Rev. Mod. Phys. 88, 021002, https://doi.org/10.1103/RevModPhys.88.021002 (2016).

de Vega, I. & Alonso, D. Dynamics of non-Markovian open quantum systems. Rev. Mod. Phys. 89, 015001, https://doi.org/10.1103/RevModPhys.89.015001 (2017).

Knuth, D. E. The Art of Computer Programming, Volume 4, Fascicle 2: Generating All Tuples and Permutations (Art of Computer Programming) (Addison-Wesley Professional, 2005).

Gilbert, E. N. Gray codes and paths on the n-cube. The Bell System Technical Journal 37, 815–826, https://doi.org/10.1002/j.1538-7305.1958.tb03887.x (1958).

IBM Q devices and simulators, https://www.research.ibm.com/ibm-q/technology/devices/, Accessed: 2019-02-20 (2019).

Cramming More Power Into a Quantum Device, https://www.ibm.com/blogs/research/2019/03/power-quantum-device/, Accessed: 2019-03-21 (2019).

Srinivasan, A., Mascagni, M. & Ceperley, D. Testing parallel random number generators. Parallel Computing 29, 69–94, https://doi.org/10.1016/S0167-8191(02)00163-1 (2003).

Dimov, I., Dimov, T. & Gurov, T. A new iterative Monte Carlo approach for inverse matrix problem. Journal of Computational and Applied Mathematics 92, 15–35, https://doi.org/10.1016/S0377-0427(98)00043-0 (1998).

Halton, J. H. Sequential monte carlo techniques for the solution of linear systems. J. Sci. Comput. 9, 213–257, https://doi.org/10.1007/BF01578388 (1994).

Acknowledgements

We thank Chia-Cheng Chang, Yu-Cheng Su, Rudy Raymond, and Tomah Sogabe for discussions. Access to IBM Q systems is provided by IBM Q Hub at National Taiwan University. This work is supported in part by Ministry of Science and Technology, Taiwan, under grant No. MOST 107-2627-E-002 -001 -MY3, MOST 106-2221-E-002 -164 -MY3, and MOST 108-2628-E-002 -010 -MY3.

Author information

Contributions

C.-C.C. performed the calculations and simulations. S.-Y.S. provided theoretical support and revised the manuscript. M.-F.W. provided technical support. Y.-R.W. accessed IBM Q systems. All authors discussed and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, CC., Shiau, SY., Wu, MF. et al. Hybrid classical-quantum linear solver using Noisy Intermediate-Scale Quantum machines. Sci Rep 9, 16251 (2019). https://doi.org/10.1038/s41598-019-52275-6

DOI: https://doi.org/10.1038/s41598-019-52275-6
