Abstract

The uncertainty principle, which constrains how precisely the outcomes of incompatible measurements can be predicted, reveals a feature of the physical world that has no counterpart in classical physics. In recent years, numerous theoretical and experimental advances on new forms of the uncertainty principle have been made. Among these efforts, one attractive goal is to find tighter bounds for uncertainty relations. Here, using an all-optical setup, we experimentally investigate a recently proposed form of the uncertainty principle: the fine-grained uncertainty relation assisted by a quantum memory. The experimental results for a two-qubit state with maximally mixed marginals demonstrate that the fine-graining method yields a tighter bound on the uncertainty relation. Our results may contribute to a better understanding and use of the uncertainty principle.


Introduction

Since Heisenberg1 proposed the original idea of the uncertainty principle in 1927, the principle, one of the most remarkable features distinguishing quantum mechanics from the classical physical world, has been studied for over ninety years, and uncertainty relations have been formulated in various forms, for example in terms of standard deviations and entropies2,3,4. In recent years, entropic uncertainty relations have attracted great interest, motivated by their important applications in quantum cryptography5,6,7,8, entanglement witnesses9,10,11,12 and quantum metrology13.

Soon after Deutsch14 gave the first entropic uncertainty relation for pairs of non-degenerate observables, the now-standard version of the relation was conjectured and proved15,16, and it can be extended to a pair of POVM measurements17:

\(H(R)+H(S)\ge {\mathrm{log}}_{2}\frac{1}{c},\qquad (1)\)

where H(X) denotes the Shannon entropy of the outcome probability distribution when X is measured, and \(c={\max }_{i,j}|\langle {a}_{i}|{b}_{j}\rangle {|}^{2}\), with \(|{a}_{i}\rangle \) (\(|{b}_{j}\rangle \)) the eigenvectors of the observable R (S), quantifies the complementarity of the two observables. In the presence of a quantum memory, however, the lower bound of inequality (1) is modified6, as has been confirmed experimentally10,11. The entropic uncertainty relation with quantum memory reads

\(S(R|B)+S(S|B)\ge {\mathrm{log}}_{2}\frac{1}{c}+S(A|B),\qquad (2)\)

where S(R|B) is the conditional von Neumann entropy of the post-measurement state \(\sum _{i}\,(|{\psi }_{i}\rangle \langle {\psi }_{i}|\otimes I){\rho }_{AB}(|{\psi }_{i}\rangle \langle {\psi }_{i}|\otimes I)\), with |ψi〉 the eigenstates of the observable R; it quantifies the uncertainty about the outcome of R given the information stored in the quantum memory B (and similarly for S(S|B)). The conditional von Neumann entropy S(A|B) quantifies the correlation between particle A and the quantum memory B. Using Fano's inequality18, the entropic uncertainty relation can be written as11

\(H({p}_{d}^{R})+H({p}_{d}^{S})\ge {\mathrm{log}}_{2}\frac{1}{c}+S(A|B),\qquad (3)\)

where \(H({p}_{d}^{R(S)})=-\,{p}_{d}^{R(S)}\,{\mathrm{log}}_{2}{p}_{d}^{R(S)}-(1-{p}_{d}^{R(S)}){\mathrm{log}}_{2}(1-{p}_{d}^{R(S)})\) is the binary entropy and \({p}_{d}^{R}({p}_{d}^{S})\) is the probability that Alice and Bob, both measuring the observable R (S), obtain different outcomes.
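For qubits, every quantity in inequality (3) has a closed form, which makes the relation easy to check numerically. The sketch below rests on three assumptions that are ours rather than the source's: (i) qubit observables are written as unit Bloch vectors, \(R=\hat{r}\cdot \overrightarrow{\sigma }\), \(S=\hat{s}\cdot \overrightarrow{\sigma }\), so that \(c=(1+|\hat{r}\cdot \hat{s}|)/2\); (ii) the state has maximally mixed marginals, \(\rho =\frac{1}{4}({I}_{4\times 4}+{\sum }_{j}{c}_{j}{\sigma }_{j}\otimes {\sigma }_{j})\) (the family used in the experiment), whose eigenvalues are its Bell-state weights and for which \(S(A|B)=S({\rho }_{AB})-1\); (iii) both parties measure the same Pauli observable \({\sigma }_{j}\), so that \({p}_{d}=(1-{c}_{j})/2\). Function names and the Werner-type example are illustrative only.

```python
import math

def complementarity_c(r, s):
    """c = max_{i,j} |<a_i|b_j>|^2 for qubit observables R = r.sigma, S = s.sigma.

    r and s are unit Bloch vectors. The eigenvectors of R and S are the Bloch
    states +-r and +-s, and two Bloch states u, v overlap as (1 + u.v)/2.
    """
    dot = sum(ri * si for ri, si in zip(r, s))
    return (1 + abs(dot)) / 2

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2(1 - p), with H(0) = H(1) = 0."""
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def conditional_entropy(corr):
    """S(A|B) for rho = (I + sum_j c_j sigma_j x sigma_j)/4, corr = (c1, c2, c3).

    The eigenvalues are the weights of Phi+, Phi-, Psi+, Psi-; S(rho_B) = 1
    because the marginal rho_B = I/2 is maximally mixed.
    """
    c1, c2, c3 = corr
    weights = [(1 + c1 - c2 + c3) / 4, (1 - c1 + c2 + c3) / 4,
               (1 + c1 + c2 - c3) / 4, (1 - c1 - c2 - c3) / 4]
    s_ab = -sum(w * math.log2(w) for w in weights if w > 0)
    return s_ab - 1

def fano_form_sides(corr, j_r, j_s):
    """Left- and right-hand sides of inequality (3) when both parties measure
    sigma_{j_r} (as R) and sigma_{j_s} (as S); Pauli indices 0, 1, 2 = x, y, z."""
    axes = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
    # Both parties measure sigma_j: the outcomes differ with probability (1 - c_j)/2
    lhs = binary_entropy((1 - corr[j_r]) / 2) + binary_entropy((1 - corr[j_s]) / 2)
    rhs = math.log2(1 / complementarity_c(axes[j_r], axes[j_s])) + conditional_entropy(corr)
    return lhs, rhs

# Maximally entangled Phi+: c = (1, -1, 1); sigma_Z as R, sigma_X as S
print(fano_form_sides((1, -1, 1), 2, 0))   # (0.0, 0.0)
# Hypothetical Werner-type state with c = (-0.8, -0.8, -0.8)
lhs, rhs = fano_form_sides((-0.8, -0.8, -0.8), 2, 0)
print(lhs >= rhs)                           # True
```

For the maximally entangled state both sides vanish, so the memory removes the uncertainty entirely; for the noisier example the left-hand side stays above the memory-assisted bound.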

Although entropic functions are appropriate in many cases, they describe quantum uncertainty only coarsely. Each entropy is a function of the probability distribution of measurement outcomes as a whole, and it cannot distinguish the uncertainty inherent in obtaining particular combinations of outcomes for different measurements. For these reasons, Oppenheim and Wehner proposed the so-called fine-grained uncertainty relation19. For a set of measurements T = {t} and a probability distribution D = {p(t)} over the measurements in T, one obtains a set of inequalities, one for each combination of possible outcomes:

\(P(\sigma ;\,{\bf{x}}):=\sum _{t=1}^{n}\,p(t)p{({x}^{(t)}|t)}_{\sigma }\le {\zeta }_{{\bf{x}}},\qquad (4)\)

where \(P(\sigma ;\,{\bf{x}})\) is the probability of one possible combination of measurement outcomes, written as a string \({\bf{x}}=({x}^{(1)},{x}^{(2)},\ldots ,{x}^{(n)})\in {{\bf{B}}}^{\times n}\) with \(n=|T|\) the number of measurements, \(p{({x}^{(t)}|t)}_{\sigma }\) is the probability of obtaining outcome \({x}^{(t)}\) when the measurement labelled t is performed on the state \(\sigma \), and \({\zeta }_{{\bf{x}}}=\mathop{{\rm{\max }}\,}\limits_{\sigma }\sum _{t=1}^{n}\,p(t)p{({x}^{(t)}|t)}_{\sigma }\), with the maximization taken over all states allowed on the particular system. Hence the measurement outcomes are uncertain whenever \({\zeta }_{{\bf{x}}}<1\). Oppenheim and Wehner19 then applied the fine-grained uncertainty relation to bipartite systems and established an interesting connection with nonlocality. They considered a nonlocal retrieval game played by Alice and Bob, who share a bipartite state, each receive a binary question chosen uniformly at random by a referee, Charlie, and each give a binary answer after measuring their own qubit. The winning condition can be seen as a particular choice of measurement outcomes, and the game has a maximum winning probability obtained by maximizing over the measurement settings chosen by Alice and Bob. Games and uncertainty relations are closely connected: in particular, every game gives rise to an uncertainty relation, and vice versa.
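For a single qubit the maximization defining \({\zeta }_{{\bf{x}}}\) can be done in closed form, which gives a quick feel for inequality (4). Assuming projective measurements along unit Bloch vectors \({\hat{n}}_{t}\) and a state with Bloch vector \(\overrightarrow{r}\), one has \(p(x|t)=(1+{(-1)}^{x}\overrightarrow{r}\cdot {\hat{n}}_{t})/2\), so \(P(\sigma ;{\bf{x}})=\frac{1}{2}+\frac{1}{2}\overrightarrow{r}\cdot \overrightarrow{v}\) with \(\overrightarrow{v}={\sum }_{t}p(t){(-1)}^{{x}^{(t)}}{\hat{n}}_{t}\), maximized by the pure state \(\overrightarrow{r}=\overrightarrow{v}/|\overrightarrow{v}|\). The sketch below (function name hypothetical, probabilities assumed to sum to one) evaluates this for \({\sigma }_{Z}\) and \({\sigma }_{X}\) chosen with equal probability.

```python
import math

def zeta(directions, probs, outcomes):
    """Maximum of P(sigma; x) over all qubit states for measurements along `directions`.

    For a Bloch vector r, p(x|t) = (1 + (-1)^x r.n_t)/2, so
    P(sigma; x) = 1/2 + r.v/2 with v = sum_t p(t) (-1)^{x_t} n_t,
    which is maximised by the pure state r = v/|v|.
    """
    v = [0.0, 0.0, 0.0]
    for n, p, x in zip(directions, probs, outcomes):
        sign = -1 if x else 1
        for k in range(3):
            v[k] += p * sign * n[k]
    return 0.5 + 0.5 * math.sqrt(sum(vk * vk for vk in v))

# sigma_Z and sigma_X, each measured with probability 1/2
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(zeta([(0, 0, 1), (1, 0, 0)], [0.5, 0.5], x), 4))  # 0.8536 each
```

Every outcome string gives \({\zeta }_{{\bf{x}}}=\frac{1}{2}+\frac{1}{2\sqrt{2}}\approx 0.854<1\), so no state yields certain outcomes for both measurements, whereas a single measurement gives \({\zeta }_{{\bf{x}}}=1\).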

The fine-grained uncertainty relation has been applied to many physical problems of interest: to nonlocality, discriminating among classical, quantum and superquantum correlations19,20,21; to steerability, in theory and experiment, with the ability to detect steering in all steerable two-qubit Werner states using only two measurement settings22,23,24; to the second law of thermodynamics, showing that a violation of the uncertainty relation implies a violation of the thermodynamical law25,26; and to uncertainty relations in a relativistic regime, suggesting that the uncertainty bound depends on the acceleration parameter and the choice of Unruh modes27. Further studies can be found in refs.28,29.

Pramanik et al. applied the fine-graining strategy to the scenario with a quantum memory and derived a new form of the uncertainty relation, in which the lower bound of the entropic uncertainty for the measurement of two observables is determined by fine-graining the possible measurement outcomes30. They considered a quantum game in which Alice prepares a two-qubit state ρAB and sends one of the qubits to Bob as the quantum memory. Alice then performs one of two measurements, corresponding to the observables R and S, on her qubit and informs Bob of her choice. Bob's task is to minimize the uncertainty of the measurement outcomes, \(H({p}_{d}^{R})+H({p}_{d}^{S})\). Their fine-grained uncertainty relation reads

\(H({p}_{d}^{R})+H({p}_{d}^{S})\ge H({p}_{d}^{{\sigma }_{Z}})+H({p}_{inf}^{S}).\qquad (5)\)

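The first term on the right-hand side of inequality (5) is the entropy obtained when the measurement R = σZ is chosen, and \({p}_{inf}^{S}\) is the infimum of \({P}_{d}^{S}\) over S ≠ σZ, given by

\({p}_{inf}^{S}=\mathop{\inf }\limits_{S\ne {\sigma }_{Z}}{P}_{d}^{S},\qquad {P}_{d}^{S}=\sum _{a,b}V(a,b)\,{\rm{Tr}}[({A}_{S}^{a}\otimes {B}_{S}^{b}){\rho }_{AB}],\qquad (6)\)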

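where \({A}_{S}^{a}({B}_{S}^{b})\) is the projector of observable S with outcome a (b), and the condition function reads

\(V(a,b)=\left\{\begin{array}{ll}1, & a\oplus b=1,\\ 0, & {\rm{otherwise}},\end{array}\right.\qquad (7)\)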

which is the essence of the fine-grained strategy. The uncertainty relation (5) is independent of the choice of measurement settings because it optimizes the reduction of uncertainty quantified by the conditional Shannon entropy over all possible observables.
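For states with maximally mixed marginals, \(\rho =\frac{1}{4}({I}_{4\times 4}+{\sum }_{j}{c}_{j}{\sigma }_{j}\otimes {\sigma }_{j})\), the correlation \(\langle (\hat{n}\cdot \overrightarrow{\sigma })\otimes (\hat{n}\cdot \overrightarrow{\sigma })\rangle ={\sum }_{j}{c}_{j}{n}_{j}^{2}\), so the probability that both parties measuring \(S=\hat{n}\cdot \overrightarrow{\sigma }\) satisfy a ⊕ b = 1 reduces to \({P}_{d}^{S}=(1-{\sum }_{j}{c}_{j}{n}_{j}^{2})/2\). The sketch below evaluates the right-hand side of (5) by a brute-force grid over (θ, φ) rather than an analytic minimization; the grid size and function names are illustrative assumptions. Because \({P}_{d}^{S}\) is continuous in \(\hat{n}\), excluding the single direction σZ does not change the infimum, so the grid covers the whole sphere.

```python
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2(1 - p), with H(0) = H(1) = 0."""
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def p_d(corr, theta, phi):
    """P_d^S when both qubits of rho = (I + sum_j c_j sigma_j x sigma_j)/4 are measured
    along n = (sin t cos f, sin t sin f, cos t): the outcomes differ with
    probability (1 - E)/2, where E = sum_j c_j n_j^2."""
    n = (math.sin(theta) * math.cos(phi), math.sin(theta) * math.sin(phi), math.cos(theta))
    e = sum(c * nk * nk for c, nk in zip(corr, n))
    return (1 - e) / 2

def fine_grained_bound(corr, steps=200):
    """Right-hand side of (5), H(p_d^{sigma_Z}) + H(p_inf^S); the infimum of P_d^S is
    approximated on a grid over theta in [0, pi] and phi in [0, 2 pi)."""
    p_z = p_d(corr, 0.0, 0.0)
    p_inf = min(p_d(corr, math.pi * i / steps, 2 * math.pi * k / steps)
                for i in range(steps + 1) for k in range(steps))
    return binary_entropy(p_z) + binary_entropy(p_inf), p_inf

# Target-state parameters c1 = 0.6, c2 = -0.16, c3 = -0.24
bound, p_inf = fine_grained_bound((0.6, -0.16, -0.24))
print(round(p_inf, 3), round(bound, 3))  # 0.2 1.68
```

For the ideal target state the infimum sits at θ = π/2, φ = 0 (equivalently φ = 2π), the same location reported in the Results, and the sketch gives a bound of about 1.68.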

Experimental Part

In this paper, we report an all-optical experiment investigating the fine-grained uncertainty relation in the presence of a quantum memory for a two-qubit state with maximally mixed marginals, with one qubit as the system under consideration and the other as the memory. Our results show that fine-graining gives a tighter lower bound than the previous coarse-grained entropic relations; that is, there is a gap between the attainable minimal uncertainty after fine-graining and the right-hand side of inequality (3). Numerical simulations for different states lead to similar conclusions.

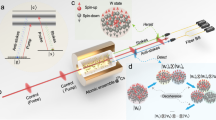

The experimental setup is shown in Fig. 1. It consists of two parts, state preparation and detection. We start with state preparation. For experimental simplicity, we consider only the two-qubit case and prepare a state with maximally mixed marginals, \(\rho =\frac{1}{4}\times ({I}_{4\times 4}+{\sum }_{j=1}^{3}{c}_{j}{\sigma }_{j}\otimes {\sigma }_{j})\), where the cj are real constants constrained so that ρ is a physical state. In the experiment, an EPR source generates a pair of polarization-entangled photons A and B in the maximally entangled state \(\frac{1}{\sqrt{2}}(|HH\rangle +|VV\rangle )\). Photon A is sent directly to the detection part through a fiber, while photon B passes through a tunable beam splitter (TBS)31. To prepare the target state with parameters c1 = 0.6, c2 = −0.16, c3 = −0.24, we set the two HWPs in the loop of the TBS to θ1 = θ2 ≈ 26°, so that about 62.1% of the entangled state \(\frac{1}{\sqrt{2}}(|HH\rangle +|VV\rangle )\) is transformed into \(\frac{1}{\sqrt{2}}(|HV\rangle +|VH\rangle )\). This component is then partly decohered into \(0.177(|HV\rangle +|VH\rangle )(\langle HV|+\langle VH|)+0.323(|HV\rangle \langle HV|+|VH\rangle \langle VH|)\) by a 160λ (λ = 780 nm) quartz plate (QP) inserted in the long arm, before being incoherently superposed with the photon in the short arm.
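The preparation numbers above can be cross-checked in the Bell basis. The sketch assumes that the converted fraction equals \({\sin }^{2}2\theta \) for θ = 26° (consistent with the amplitude ratio M:L = 1:tan 2θ given in the Fig. 1 caption) and uses the identities \((|HV\rangle +|VH\rangle )(\langle HV|+\langle VH|)=2|{\Psi }^{+}\rangle \langle {\Psi }^{+}|\) and \(|HV\rangle \langle HV|+|VH\rangle \langle VH|=|{\Psi }^{+}\rangle \langle {\Psi }^{+}|+|{\Psi }^{-}\rangle \langle {\Psi }^{-}|\); variable names are illustrative. It recovers the correlation coefficients cj from the Bell-state weights.

```python
import math

theta = math.radians(26)
f = math.sin(2 * theta) ** 2        # fraction converted from Phi+ to Psi+, about 0.621

# Decohered part: 0.177 (|HV>+|VH>)(<HV|+<VH|) + 0.323 (|HV><HV| + |VH><VH|)
coh, pop = 0.177, 0.323
weights = {
    "phi+": 1 - f,                  # unconverted maximally entangled component
    "phi-": 0.0,
    "psi+": f * (2 * coh + pop),    # (|HV>+|VH>)(...) = 2|Psi+><Psi+|
    "psi-": f * pop,                # |HV><HV| + |VH><VH| = |Psi+><Psi+| + |Psi-><Psi-|
}

# <sigma_j x sigma_j> for each Bell state, in the order (x, y, z)
signs = {"phi+": (1, -1, 1), "phi-": (-1, 1, 1), "psi+": (1, 1, -1), "psi-": (-1, -1, -1)}
c = [sum(weights[k] * signs[k][j] for k in weights) for j in range(3)]
print(round(f, 3), [round(cj, 2) for cj in c])  # 0.621 [0.6, -0.16, -0.24]
```

The recovered coefficients agree with the target values c1 = 0.6, c2 = −0.16, c3 = −0.24 to within the rounding of the stated weights.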
After state preparation, photons A and B pass through 8-nm and 3-nm interference filters32, respectively, and the projective measurements \({A}_{S}^{a}=\frac{I+{(-1)}^{a}\hat{n}\cdot \overrightarrow{\sigma }}{2}\) and \({B}_{S}^{b}=\frac{I+{(-1)}^{b}\hat{n}\cdot \overrightarrow{\sigma }}{2}\) of the observable S are performed on photons A and B, respectively. Here \(S=\hat{n}\cdot \overrightarrow{\sigma }\) with \(\hat{n}=(\sin \theta \cos \varphi ,\sin \theta \sin \varphi ,\cos \theta )\), \(\overrightarrow{\sigma }=({\sigma }_{x},{\sigma }_{y},{\sigma }_{z})\) are the Pauli matrices, and a (b) takes the value 0 or 1. From these measurements we obtain the probability \({P}_{d}^{S}\) of the winning condition a ⊕ b = 1, and hence the uncertainty \(H({P}_{d}^{S})\). By changing the angles of the QWPs and HWPs in the detection part, the projection direction \(\hat{n}\) can be scanned over the whole Bloch sphere. We also perform state tomography to evaluate Berta's uncertainty bound; these measurement results allow us to verify the gap between the fine-grained bound and Berta's bound.
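Each value of \(H({P}_{d}^{S})\) is obtained from the four coincidence counts recorded for one direction \(\hat{n}\). A minimal sketch of that estimate, assuming the counts are labelled \({N}_{ab}\) by the outcomes a, b of \({A}_{S}^{a}\) and \({B}_{S}^{b}\); the function name and the count values are hypothetical and are not measured data.

```python
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2(1 - p), with H(0) = H(1) = 0."""
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def p_d_from_counts(n00, n01, n10, n11):
    """Estimate of P_d^S: fraction of coincidences with a XOR b = 1.

    n_ab is the number of coincidences recorded with projector A_S^a on photon A
    and B_S^b on photon B for one setting of the direction n.
    """
    return (n01 + n10) / (n00 + n01 + n10 + n11)

# Hypothetical counts for a single direction n
p = p_d_from_counts(30000, 7500, 7500, 30000)
print(p, round(binary_entropy(p), 4))  # 0.2 0.7219
```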

Figure 1. Experimental setup. There are two parts, state preparation and detection. State preparation: in the source, an ultrafast pulse from a mode-locked Ti:sapphire laser (140 fs duration, 76 MHz repetition rate, 780 nm central wavelength) passes through a frequency doubler to generate a 390 nm pulse. The ultraviolet pulse then pumps a sandwich-like BBO crystal to generate a pair of polarization-entangled photons, A and B, via spontaneous parametric down-conversion (SPDC). Photon A is sent directly to the detection part, while photon B passes through an unbalanced Mach-Zehnder interferometer (UMZ) in which arm M introduces decoherence via a quartz plate (QP). The path difference between the short and long arms of the UMZ is about 0.15 m, corresponding to a time difference of about 0.5 ns, which is longer than the coherence time of the photons and shorter than the coincidence window. The relative amplitude of the two arms L and M of the UMZ is adjusted by a specially designed tunable beam splitter (TBS) (black dotted rectangle), which contains a polarizing beam splitter (PBS), three mirrors and three half-wave plates (HWPs). Consider, for example, a photon in the state α|H〉 + β|V〉. When it arrives at the PBS in the TBS loop, it is split into a transmitted path 1 and a reflected path 2, giving α|H〉1 + β|V〉2. After the two HWPs, both set at θ1 = θ2 = θ, the state becomes \(\alpha (\cos 2\theta |H{\rangle }_{1}+\sin 2\theta |V{\rangle }_{1})+\beta (\sin 2\theta |H{\rangle }_{2}-\cos 2\theta |V{\rangle }_{2})\), and the two paths recombine on the PBS. The states at the two output ports M and L of the TBS are therefore \(\cos 2\theta (\alpha |H{\rangle }_{1}-\beta |V{\rangle }_{2})\) and \(\sin 2\theta (\alpha |V{\rangle }_{1}+\beta |H{\rangle }_{2})\), respectively. Finally, after tilting the QWP at the M port (not shown in Fig. 1) and inserting a 45° HWP at the L port, the output amplitude ratio between the M and L ports of the TBS is M:L = 1:tan 2θ.
Detection: photons A and B pass through interference filters (IFs) with 8-nm and 3-nm bandwidths, respectively, and the projective measurements are selected by the angles of quarter-wave plates (QWPs) and HWPs. Coincidences are then recorded by the coincidence detection unit with a 6-ns coincidence window.
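The HWP transformation and the amplitude ratio quoted in the Fig. 1 caption can be checked with 2×2 Jones matrices. A sketch, assuming ideal wave plates and the standard half-wave-plate Jones matrix \(\left(\begin{array}{cc}\cos 2\theta  & \sin 2\theta \\ \sin 2\theta  & -\cos 2\theta \end{array}\right)\) in the H/V basis (global phase dropped); helper names are hypothetical. It also converts the amplitude ratio into the intensity fraction \({\sin }^{2}2\theta \) for θ = 26°.

```python
import math

def hwp(theta):
    """Jones matrix of a half-wave plate with fast axis at angle theta (H/V basis,
    global phase dropped): H -> cos2t H + sin2t V, V -> sin2t H - cos2t V."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return [[c, s], [s, -c]]

def apply(m, v):
    """Multiply a 2x2 Jones matrix by a Jones vector (amplitudes of H and V)."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

theta = math.radians(26)
h_out = apply(hwp(theta), [1.0, 0.0])   # image of |H>
v_out = apply(hwp(theta), [0.0, 1.0])   # image of |V>
ratio = math.tan(2 * theta)             # L/M amplitude ratio from M:L = 1:tan 2θ
fraction_l = ratio**2 / (1 + ratio**2)  # intensity fraction in port L, equals sin^2(2θ)
print(round(ratio, 3), round(fraction_l, 3))  # 1.28 0.621
```

The intensity fraction of about 0.621 matches the 62.1% quoted in the description of the state preparation.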

Results

Figure 2 shows the density matrix of the prepared two-qubit state ρ together with that of the target state \({\rho }_{0}\). The density matrix of the prepared state is reconstructed by quantum state tomography33, and its fidelity with the target state is high, \(F=Tr[\sqrt{\sqrt{\rho }{\rho }_{0}\sqrt{\rho }}]\approx 0.9967\pm 0.0013\).

The experimental results and theoretical predictions for Berta's uncertainty bound6 and the fine-grained uncertainty relation are shown in Fig. 3. The purple and green surfaces indicate the theoretical predictions of the fine-grained uncertainty and Berta's uncertainty bound, respectively. The blue spheres represent the experimental results for the fine-grained entropic uncertainty \(H({p}_{d}^{{\sigma }_{Z}})+H({p}_{d}^{S})\), and the red spheres represent Berta's uncertainty bound \({\mathrm{log}}_{2}\frac{1}{c}+S(A|B)\), calculated from the reconstructed density matrix. To examine the difference between Berta's bound and the fine-grained uncertainty in detail, we also consider two special cases, ϕ = 1.57 and ϕ = 6.28. In this case the minimum value of \(H({p}_{d}^{S})\) occurs at θ = 1.57, ϕ = 6.28, yielding the lower bound \(H({p}_{d}^{{\sigma }_{Z}})+H({p}_{inf}^{S})\approx 1.69\); our experimental result, 1.70 ± 0.003, lies clearly above the corresponding value of Berta's bound, 1.534 ± 0.019, calculated from the experimentally reconstructed density matrix. To verify the theoretical prediction experimentally, however, we must account for finite-sample effects when evaluating the bounds, which lead to the error bars in Fig. 3. The error bars are estimated as the standard deviations of values calculated via the Monte Carlo method34. To obtain the error bar of a result evaluated from a set of measured coincidence counts {Ni}, we repeatedly generate sets of random numbers on a computer, drawn from Poisson distributions whose means are the corresponding measured coincidence counts (so that their widths are {\(\sqrt{{N}_{i}}\)}). From each set of random numbers we evaluate the desired result once.
The error bars are then obtained as the standard deviations of the results after repeating this process many times. The coincidence counting rate without polarization projection in our experiment is about 5000 per second, and the data collection time for each measurement is 15 s. The number of Monte Carlo repetitions is 100. We obtain error bars of about ±0.003 for the fine-grained entropic uncertainty and ±0.019 for Berta's uncertainty bound. These error bars are much smaller than the smallest gap, 0.17, between the two bounds, which shows that finite-sample effects do not undermine the experimental results. Note that, for every R and S, Berta's bound also depends on the density matrix of the state through S(A|B), which involves more measured parameters than the fine-grained entropic uncertainty; this is why its error bars are much larger than those of the fine-grained bound. Figure 3 shows that our experimental results agree very well with the theoretical predictions, which confirms the fine-grained uncertainty relation (5) and shows that Berta's uncertainty bound is always lower than the fine-grained entropic uncertainty. In most cases Berta's lower bound cannot be attained, except in special cases such as Alice and Bob sharing a maximally entangled state. Numerical simulations varying both the S and R measurements, or using different states determined by the parameters c1, c2, c3, lead to the same conclusion; the theoretical results are shown in the Supplementary Information. Further simulation results can be obtained from the Mathematica program provided in ref.35.
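The Monte Carlo error estimate described above can be sketched with NumPy's random `Generator` and its `poisson` method. This is not the authors' analysis code: it assumes a single measurement direction with four coincidence counts, propagates errors only into the fine-grained term \(H({P}_{d}^{S})\), and uses hypothetical counts chosen to match the stated rate (about 5000 coincidences per second for 15 s) and \({P}_{d}^{S}=0.2\).

```python
import numpy as np

def binary_entropy(p):
    """Vectorised binary entropy; p is clipped away from 0 and 1 to avoid log2(0)."""
    p = np.clip(p, 1e-15, 1 - 1e-15)
    return -p * np.log2(p) - (1 - p) * np.log2(1 - p)

def monte_carlo_error(counts, repeats=100, seed=1):
    """Standard deviation of H(P_d^S) over Poisson-resampled coincidence counts.

    counts = (N00, N01, N10, N11). Each resampled count is drawn from a Poisson
    distribution whose mean is the measured count, so its width is sqrt(N).
    """
    rng = np.random.default_rng(seed)
    lam = np.asarray(counts, dtype=float)
    samples = rng.poisson(lam=lam, size=(repeats, lam.size))  # one row per repetition
    p_d = (samples[:, 1] + samples[:, 2]) / samples.sum(axis=1)
    return float(binary_entropy(p_d).std(ddof=1))

# Hypothetical counts: 75000 coincidences in total, P_d = 0.2
err = monte_carlo_error((30000, 7500, 7500, 30000))
print(f"H(P_d) error bar: +/-{err:.4f}")
```

With these hypothetical counts the spread comes out at roughly 0.003, in line with a delta-method estimate \(|dH/dp|\sqrt{p(1-p)/N}\approx 2\sqrt{0.16/75000}\approx 0.0029\).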

Figure 3. Experimental results and theoretical predictions. (a) Theoretical predictions and experimental results for Berta's uncertainty bound and the fine-grained uncertainty relation. The purple and green surfaces show the theoretical predictions of the fine-grained uncertainty \(H({p}_{d}^{{\sigma }_{Z}})+H({p}_{d}^{S})\) and Berta's uncertainty bound \({\mathrm{log}}_{2}\frac{1}{c}+S(A|B)\), respectively, for different θ and ϕ (in radians). The blue and red spheres represent the corresponding experimental results. (b) and (c) show the special cases ϕ = 1.57 and ϕ = 6.28. The purple and green solid lines represent the theoretical predictions for the ideal state, and the blue and red solid circles the corresponding experimental results. In this experiment, the minimum value of \(H({p}_{d}^{S})\) occurs at θ = 1.57, ϕ = 6.28, yielding the lower bound of inequality (5), \(H({p}_{d}^{{\sigma }_{Z}})+H({p}_{inf}^{S})\approx 1.69\); our experimental result is 1.70. The experimental results agree very well with the theoretical predictions.

Many previous studies have shown that uncertainty relations can be applied in quantum key distribution protocols6,7,30,36,37. Using the result of ref.38, namely that the amount of key K that Alice and Bob can extract per copy of the state ρABE shared by Alice (A), Bob (B) and an eavesdropper Eve (E) is lower-bounded by S(R|E) − S(R|B), Berta et al. derived a new lower bound on the key extraction rate6, \(K\ge {\mathrm{log}}_{2}\frac{1}{c}-S(R|B)-S(S|B)\). It is worth noting that the minimum of the last two terms of this bound, S(R|B) + S(S|B), can be expressed as \({{\rm{\min }}}_{R,S}[H({p}_{d}^{R})+H({p}_{d}^{S})]\) when Alice and Bob choose the same measurement on their respective sides, which enabled Pramanik et al. to derive a tighter lower bound on the key extraction rate30, \(K\ge {\mathrm{log}}_{2}\frac{1}{c}-[H({p}_{d}^{{\sigma }_{Z}})+H({p}_{inf}^{S})]\). This bound is an optimal lower limit on key extraction, valid not only for any shared correlation but also for all measurement settings chosen by Alice and Bob, and it could be tested in an experiment implementing a prototypical quantum key distribution protocol37,39.
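Once \({p}_{d}^{{\sigma }_{Z}}\) and \({p}_{inf}^{S}\) are known, the fine-grained key-rate bound above is simple arithmetic. A sketch, assuming c = 1/2 (σZ paired with an observable in the x-y plane); the inputs are illustrative: perfectly correlated outcomes (as for a maximally entangled state), and the ideal target-state values \({p}_{d}^{{\sigma }_{Z}}=(1-{c}_{3})/2=0.62\) and \({p}_{inf}^{S}=(1-{c}_{1})/2=0.2\) implied by c3 = −0.24 and c1 = 0.6. The function name is hypothetical.

```python
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2(1 - p), with H(0) = H(1) = 0."""
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def fine_grained_key_bound(c, p_d_z, p_inf_s):
    """Lower bound on the key rate: log2(1/c) - [H(p_d^{sigma_Z}) + H(p_inf^S)]."""
    return math.log2(1 / c) - (binary_entropy(p_d_z) + binary_entropy(p_inf_s))

print(fine_grained_key_bound(0.5, 0.0, 0.0))              # 1.0
print(round(fine_grained_key_bound(0.5, 0.62, 0.2), 2))   # -0.68
```

A negative value only means that this lower bound certifies no secret key for that state; it says nothing more about the achievable rate.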

Conclusion

In this paper, we have experimentally investigated the fine-grained entropic uncertainty relation using a two-qubit state with maximally mixed marginals, and we have numerically simulated different states by varying the state parameters c1, c2, c3. The results show that fine-graining indeed gives a tighter lower bound for the uncertainty relation. This bound could also be applied in quantum key distribution protocols, yielding an optimized lower bound on the key extraction rate that Alice and Bob can obtain when they perform the same measurements on their respective sides. Our results may also contribute to a deeper understanding of the uncertainty principle.

References

Heisenberg, W. Über den anschaulichen Inhalt der quantentheoretischen Kinematik und Mechanik. Z. Phys. 43, 172 (1927).

Busch, P., Heinonen, T. & Lahti, P. Heisenberg’s Uncertainty Principle. Phys. Rep. 452, 155 (2007).

Wehner, S. & Winter, A. Entropic uncertainty relations-a survey. New J. Phys. 12, 025009 (2010).

Coles, P. J., Berta, M., Tomamichel, M. & Wehner, S. Entropic uncertainty relations and their applications. Rev. Mod. Phys. 89, 015002 (2017).

Koashi, M. Unconditional security of quantum key distribution and the uncertainty principle. J. Phys. Conf. Ser. 36, 98 (2006).

Berta, M., Christandl, M., Colbeck, R., Renes, J. M. & Renner, R. The uncertainty principle in the presence of quantum memory. Nat. Phys. 6, 659 (2010).

Tomamichel, M. & Renner, R. Uncertainty Relation for Smooth Entropies. Phys. Rev. Lett. 106, 110506 (2011).

Liu, S., Mu, L. Z. & Fan, H. Entropic uncertainty relations for multiple measurements. Phys. Rev. A 91, 042133 (2015).

Horodecki, R., Horodecki, P., Horodecki, M. & Horodecki, K. Quantum entanglement. Rev. Mod. Phys. 81, 865 (2009).

Prevedel, R., Hamel, D. R., Colbeck, R., Fisher, K. & Resch, K. J. Experimental investigation of the uncertainty principle in the presence of quantum memory and its application to witnessing entanglement. Nat. Phys. 7, 757 (2011).

Li, C. F., Xu, J. S., Xu, X. Y., Li, K. & Guo, G. C. Experimental investigation of the entanglement-assisted entropic uncertainty principle. Nat. Phys. 7, 752 (2011).

Berta, M., Coles, P. J. & Wehner, S. Entanglement-assisted guessing of complementary measurement outcomes. Phys. Rev. A 90, 062127 (2014).

Hall, M. J. W. & Wiseman, H. M. Heisenberg-style bounds for arbitrary estimates of shift parameters including prior information. New J. Phys. 14, 033040 (2012).

Deutsch, D. Uncertainty in Quantum Measurements. Phys. Rev. Lett. 50, 631 (1983).

Kraus, K. Complementary observables and uncertainty relations. Phys. Rev. D 35, 3070 (1987).

Maassen, H. & Uffink, J. B. M. Generalized entropic uncertainty relations. Phys. Rev. Lett. 60, 1103 (1988).

Coles, P. J., Yu, L., Gheorghiu, V. & Griffiths, R. B. Information-theoretic treatment of tripartite systems and quantum channels. Phys. Rev. A 83, 062338 (2011).

Fano, R. Transmission of Information: A Statistical Theory of Communications (MIT, Cambridge, MA, 1961).

Oppenheim, J. & Wehner, S. The Uncertainty Principle Determines the Nonlocality of Quantum Mechanics. Science 330, 1072 (2010).

Pramanik, T. & Majumdar, A. S. Fine-grained uncertainty relation and nonlocality of tripartite systems. Phys. Rev. A 85, 024103 (2012).

Dey, A., Pramanik, T. & Majumdar, A. S. Fine-grained uncertainty relation and biased nonlocal games in bipartite and tripartite systems. Phys. Rev. A 87, 012120 (2013).

Pramanik, T., Kaplan, M. & Majumdar, A. S. Fine-grained Einstein-Podolsky-Rosen–steering inequalities. Phys. Rev. A 90, 050305(R) (2014).

Chowdhury, P., Pramanik, T. & Majumdar, A. S. Stronger steerability criterion for more uncertain continuous-variable systems. Phys. Rev. A 92, 042317 (2015).

Orieux, A. et al. Experimental detection of steerability in Bell local states with two measurement settings. J. Opt. 20, 044006 (2018).

Hanggi, E. & Wehner, S. A violation of the uncertainty principle implies a violation of the second law of thermodynamics. Nat. Commun. 4, 1670 (2013).

Ren, L. H. & Fan, H. General fine-grained uncertainty relation and the second law of thermodynamics. Phys. Rev. A 90, 052110 (2014).

Feng, J., Zhang, Y. Z., Gould, M. D. & Fan, H. Fine-grained uncertainty relations under relativistic motion. EPL 122, 60001 (2018).

Friedland, S., Gheorghiu, V. & Gour, G. Universal Uncertainty Relations. Phys. Rev. Lett. 111, 230401 (2013).

Rastegin, A. E. Fine-grained uncertainty relations for several quantum measurements. Quantum Inf. Process. 14, 783 (2015).

Pramanik, T., Chowdhury, P. & Majumdar, A. S. Fine-Grained Lower Limit of Entropic Uncertainty in the Presence of Quantum Memory. Phys. Rev. Lett. 110, 020402 (2013).

Liu, B. H. et al. Time-invariant entanglement and sudden death of nonlocality. Phys. Rev. A 94, 062107 (2016).

Zhang, C. et al. Experimental Greenberger-Horne-Zeilinger-Type Six-Photon Quantum Nonlocality. Phys. Rev. Lett. 115, 260402 (2015).

James, D. F. V., Kwiat, P. G., Munro, W. J. & White, A. G. Measurement of qubits. Phys. Rev. A 64, 052312 (2001).

Altepeter, J. B., Jeffrey, E. R. & Kwiat, P. G. Entangled photon polarimetry. Adv. At. Mol. Opt. Phys. 52, 105 (2005).

Lv, W. M. et al. Theory Simulation Of the Fine-Grained Uncertainty Relation, https://doi.org/10.6084/m9.figshare.6838274 (2018).

Scarani, V. et al. The security of practical quantum key distribution. Rev. Mod. Phys. 81, 1301 (2009).

Tomamichel, M., Lim, C. C. W., Gisin, N. & Renner, R. Tight finite-key analysis for quantum cryptography. Nat. Commun. 3, 634 (2012).

Devetak, I. & Winter, A. Distillation of secret key and entanglement from quantum states. Proc. R. Soc. A 461, 207 (2005).

Winick, A., Lütkenhaus, N. & Coles, P. J. Reliable numerical key rates for quantum key distribution. Quantum 2, 77 (2018).

Acknowledgements

This work was funded by the National Key Research and Development Program of China (2017YFA0304100), National Natural Science Foundation of China (61327901, 61490711, 11774335, 61225025, 11474268, 11374288, 11304305, 11404319, 11804330), Key Research Program of Frontier Sciences, CAS (QYZDY-SSW-SLH003), the National Program for Support of Top-notch Young Professionals (Grant BB2470000005), the Fundamental Research Funds for the Central Universities (WK2470000018, WK2470000026).

Author information


Contributions

Y.F. Huang, C.F. Li, B.H. Liu and G.C. Guo conceived the experiment; W.M. Lv, C. Zhang, X.M. Hu, H. Cao, J. Wang and Z.B. Hou conducted the experiment; W.M. Lv, C. Zhang and Y.F. Huang analysed the results. All authors reviewed the manuscript.


Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information


Supplementary information: Experimental test of fine-grained entropic uncertainty relation in the presence of quantum memory

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lv, WM., Zhang, C., Hu, XM. et al. Experimental test of fine-grained entropic uncertainty relation in the presence of quantum memory. Sci Rep 9, 8748 (2019). https://doi.org/10.1038/s41598-019-45205-z


DOI: https://doi.org/10.1038/s41598-019-45205-z
