Abstract

The validation of a theory is commonly based on appealing to clearly distinguishable and describable features in properly reduced experimental data, while the use of ab-initio simulation for interpreting experimental data typically requires complete knowledge about initial conditions and parameters. We here apply the methodology of using machine learning for overcoming these natural limitations. We outline some basic universal ideas and show how we can use them to resolve long-standing theoretical and experimental difficulties in the problem of high-intensity laser-plasma interactions. In particular we show how an artificial neural network can “read” features imprinted in laser-plasma harmonic spectra that are currently analysed with spectral interferometry.

Introduction

Over the last few years the use of machine learning has opened up new vistas in many areas of physics, including plasma physics1, condensed-matter physics2,3, quantum physics4,5,6,7, thermodynamics8, quantum chemistry9, particle physics10 and many others. Recent examples include applications for magnetic confinement fusion11,12,13, inertial confinement fusion14,15,16, discovery of phase transitions17,18,19, closure of turbulence models20, representation of quantum states21,22, galaxy classification23 and orbital stability24. One of the origins of this progress is the possibility of processing large sets of data and drawing conclusions based on features that admit no straightforward description and assessment in human language. In this way, some natural human limitations can be overcome, making machine learning a useful tool that works in fruitful synergy with traditional approaches in theoretical and experimental physics.

One area where machine learning is being employed successfully is model calibration25,26. The problem concerns finding appropriate parameters of a model based on incomplete and potentially inaccurate knowledge about the behaviour of the modelled system in a set of particular cases. Statistical methods, such as maximum likelihood estimation, Bayes estimation, Kalman filtering and others26,27,28,29,30, are successfully applied in many areas, including financial market analysis31,32, hydrology33,34, urban studies35 and climatology36,37. However, the use of machine learning appears to be a promising alternative that can provide new opportunities38,39,40,41.

In this paper we consider the opportunities of using machine learning for solving long-standing problems in laser-plasma physics. We discuss the possibility of using autonomous recognition of difficult-to-qualify features in the data of real or numerical experiments for validating and advancing phenomenological models as well as for reconstructing experimental conditions. Using a phenomenological model for laser-plasma high-harmonic generation, we train an artificial neural network (NN) to reconstruct various parameters based on the recognition of unspecified features in the harmonic spectra. The NN then “identifies” the learned features in the spectra obtained with ab-initio simulations, which we use to mimic real experiments. In this way we can reconstruct the parameters of experiments or determine the most appropriate values for free parameters of incomplete theories. This can also be used to determine the validity regions of different models. It is important that this approach can be applied in case of inaccurate or intrinsically incomplete knowledge about the experimental conditions, i.e. in cases when performing a particular ab-initio simulation is not possible. In such a way this approach can provide new routes for experiments and new insights for theory and model development.

For the sake of completeness, we start from the discussion of basic ideas, using the Galton Board42 as an illustrative example. We then provide a proof-of-principle demonstration of the use of this methodology for the outlined problem in the field of laser-plasma interactions.

Methods

Typically, the validation of a theory is reduced to the experimental observation of some clearly describable feature, such as an observable physical value, its dependency on some parameters, a peak in some distribution, etc. Features of this kind are conventionally used to claim agreement between experimental and theoretical results.

One natural limitation of this conventional approach is that, in presenting results, we appeal to consistency in terms of such clearly describable features, and consequently do so mostly for features in one-, two- or three-dimensional sets of data. An essential part of many studies is finding ways of reducing and transforming raw data sets into forms that expose such describable, indicative features. Of course, numerous techniques and approaches have been developed, such as statistical and spectral analysis as well as various algebraic transformations. However, this toolbox is inevitably limited, and in many cases the solution of the outlined problem requires insightful analysis and development of the theory and experiment, sometimes with a manual search for such a feature in large sets of data.

Another common consequence of developing a sophisticated comparison methodology is losing lucidity in the relation between the compared features and the origins of the theory. In some cases, it is difficult to say whether the observed feature unambiguously indicates the correctness of the theory or whether it is peculiar to a family of theories that are thus not disqualified by the experiment. For example, the selected feature can be a generic consequence of some conservation laws rather than of the main principle or assumption that has to be validated. In other words, it can be difficult to quantify in what sense and to what degree the theory is validated, and which alternative theories are disqualified by the experiment.

One more limitation of such conventional methodologies is that theories can be benchmarked only against the data of experiments with sufficiently complete knowledge about the initial conditions and all important parameters. In some cases the information is intrinsically incomplete, which hampers the use of theories and ab-initio simulations.

In this paper we discuss and apply a methodology that overcomes the outlined limitations with the help of machine learning applied to the recognition of hard-to-describe features in the outputs of ab-initio simulations or in experimental data, even in the case of essentially incomplete knowledge about the experimental conditions. We provide a proof-of-principle demonstration of several essential capabilities of this approach:

1. Comparison, validation or disqualification of theories in a lucid and quantitative way (as a function of position in parameter space);
2. Completing theories through indirect measurement of the dependencies of free parameters, even in the parameter regions where they are not well-defined in terms of the first principles;
3. Indirect measurement for determination of unknown experimental parameters;
4. Identifying regions in a parameter space where certain ranges of experimental data carry unambiguous information about experimental conditions.

Note that these approaches are not intended to replace traditional methodologies but to supplement them with methods of gaining knowledge that can either be exploited heuristically or used to conceive hypotheses and ideas for further theoretical and experimental studies.

Although many of these ideas may look trivial and well known, we start from a general discussion of the outlined approaches. To support the discussion we use the well-known Galton Board42 experiment as a simple example of an experiment and a theory. After that we demonstrate how the developed methods can be used for resolving long-standing questions in the physics of high-intensity laser-plasma interactions.

Validation of a theory

Suppose we need to validate a theory \({\mathbb{A}}\) using an experiment \({\mathbb{E}}\). To formulate the problem in a more exact way we assume that we intend to compare theory \({\mathbb{A}}\) with some alternative theory \({\mathbb{B}}\) (or a family of alternative theories) which we intend to disqualify using experimental data. In our notation, both theories \({\mathbb{A}}\) and \({\mathbb{B}}\), as well as the experiment \({\mathbb{E}}\), are denoted as some possibly non-linear and non-deterministic operators that act on a vector of initial conditions c and give a vector of measurable quantities r. These vectors can represent a set of data of arbitrary composition and dimensionality. Suppose we carried out a sequence of experiments; then we can write:

\({{\bf{r}}}_{i}^{E}={\mathbb{E}}{{\bf{c}}}_{i},\quad {{\bf{r}}}_{i}^{A}={\mathbb{A}}{{\bf{c}}}_{i},\quad {{\bf{r}}}_{i}^{B}={\mathbb{B}}{{\bf{c}}}_{i},\)

where index i enumerates the experiments. We admit that due to experimental imperfections the values \({{\bf{r}}}_{i}^{E}\) are subject to some unknown systematic or non-systematic distortions that hamper direct comparison of \({{\bf{r}}}_{i}^{E}\) with \({{\bf{r}}}_{i}^{A}\) and \({{\bf{r}}}_{i}^{B}\). However, we assume that the measurable data r contains some features that can appear in the results of either \({\mathbb{A}}\) or \({\mathbb{B}}\). These features can depend on the conditions c in a complex way, which also should be reproduced by the appropriate theory (at least to some extent). Note that in general both theories can be applicable in certain regions of the parameter space of vectors c, and this is something that we intend to determine.

To solve the problem we develop a unification theory \({\mathbb{U}}\) that depends on at least one parameter p and provides a smooth transition between the theories, for example, \({\mathbb{U}}(p=0)={\mathbb{B}}\) and \({\mathbb{U}}(p=1)={\mathbb{A}}\). In the most primitive case, this can be just a linear combination, i.e. \({\mathbb{U}}(p)=p{\mathbb{A}}+(1-p){\mathbb{B}}\). However, it is better to form the smooth transition not between the final results of the theories, but between the essential principles or assumptions from which the theories are developed. This is because, even if both theories fulfill basic conservation laws, their linear combination might not (for \(0 < p < 1\)). To avoid this we can extend the dimensionality of the parameter p, so that in this space there exists some route between the points corresponding to \({\mathbb{A}}\) and \({\mathbb{B}}\) along which all the conservation laws are fulfilled at each point. Simple examples given further on show why this is important.
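The remark about conservation laws can be illustrated with a minimal sketch. It assumes, purely for illustration, that the conserved quantity is quadratic in the output (an "energy" equal to the sum of squares) and that the two theories return the hypothetical two-component results `result_a` and `result_b`; none of these names come from the paper.

```python
def energy(signal):
    """Quadratic invariant used here as a stand-in for a conservation law."""
    return sum(v * v for v in signal)


def linear_mix(p, result_a, result_b):
    """Naive unification U(p) = p*A + (1 - p)*B applied to the final results."""
    return [p * a + (1.0 - p) * b for a, b in zip(result_a, result_b)]


# Two hypothetical model outputs, each carrying unit energy.
result_a = [1.0, 0.0]
result_b = [0.0, 1.0]

for p in (0.0, 0.5, 1.0):
    mixed = linear_mix(p, result_a, result_b)
    print(f"p = {p}: energy = {energy(mixed)}")
```

Both end points conserve the assumed invariant, while the intermediate mixture does not, which is the motivation for interpolating between the underlying assumptions rather than between the final results.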

We can now apply the unified theory for various possible initial conditions and generate a sufficiently large set of pairs \({{\bf{c}}}_{k}\) and \({{\bf{r}}}_{k}^{U}={\mathbb{U}}{{\bf{c}}}_{k}\) for various random values of p and conditions c (k is the index running over the set). Next, we train a feed-forward, fully connected NN \({\mathbb{N}}\) to learn how to reconstruct p and c from \({{\bf{r}}}_{k}^{U}\), i.e.

\({\mathbb{N}}{{\bf{r}}}_{k}^{U}\to ({p}_{k},{{\bf{c}}}_{k}).\)

According to ref. 43, any continuous real-valued function can be approximated with such a NN to any given accuracy. Thus, we choose this type of NN as an approximation of the unknown function that maps p and c to r. We can then apply the trained NN to the experimental data \({{\bf{r}}}_{i}^{E}\). If the values of \({{\bf{c}}}^{E}\) are systematically close to the reconstructed values \({{\bf{c}}}^{N}\) in the whole or in some region of parameter space, we can interpret this as the NN “recognizing” some indicative features in this region of parameter space. In this region the reconstructed value p can indicate the validity of one of the theories: a systematic tendency of p to 1 (0) indicates the validity of theory \({\mathbb{A}}\) (\({\mathbb{B}}\)). This procedure can also show the transition between the applicability regions of the theories explicitly, by plotting p as a function of the parameters c.

It would be reasonable to ask: in what sense is the validity of a theory demonstrated by this procedure? Of course, there exists a large variety of relations between the output value r and the parameter p, and certainly not all of them are necessarily sensible in terms of physics. In other words, the NN can establish a successful correlation between p and some feature of little importance or complete irrelevance to the physics of the process. To avoid this, we train the NN to reproduce not only p, but a sufficiently large set of physically essential values c. This favours establishing correlations with features that significantly depend on the initial conditions and, in this sense, have some physical meaning. Although a more rigorous analysis would be of interest, in this paper we focus on showing proof-of-principle examples that convincingly demonstrate the rationality of this concept. However, we would like to emphasize that one should not overrate the validity of the inference obtained by using it outside the context of (1) the range of validity in the space of c, (2) the view of the output \({{\bf{r}}}^{U}\), where we search for the indicative features, and (3) the set c used as the reference for the physical sense. We will discuss the rationality of this meaning in the last section.

Another potential difficulty is related to the possibility that the measured output simultaneously contains two unique types of features, each described by one of the theories. In this case, the NN can potentially select the theory that describes the more apparent features, i.e. the more descriptive theory. One way to deal with such a difficulty is to consider a narrower or more restricted output, so that only one type of feature is included.

We can outline one important peculiarity of the method: the procedure does not require complete knowledge about the experimental parameters \({{\bf{c}}}_{i}\). We can use only the known components of vector c to see whether the NN “recognizes” the features of importance or not.

Before we move to an illustrative example, we would like to outline a limitation that is crucial for the applicability of this procedure. Since we assign the NN to reconstruct the inputs p and c based on the measurable output \({{\bf{r}}}^{U}\), the procedure assumes that this inverse problem admits a solution. If the inverse problem is ill-posed, the NN will not converge to a reasonable reconstruction. Thus, this will not lead us to a wrong conclusion, but might hamper the applicability of the method. In practice, this might be a matter of using a sufficiently informative output that uniquely identifies the input. However, the inverse problem can turn out to be ill-posed for two other reasons, which are worth mentioning. First, the physics of the studied process can have some symmetry that makes two or more sets of values for the input parameters indistinguishable in reality. Second, the physics can be self-similar, which means that the physics remains the same if two or more input parameters are changed simultaneously, in a specific way that keeps a certain single parameter constant. One can say that the process depends on this similarity parameter rather than individually on the parameters determining it. These parameters can then be replaced with the similarity parameter to make the inverse problem well-posed. This shows that some understanding of the problem properties is necessary for making use of the described procedure. A failure of the NN to establish a reasonable solution for the inverse problem could, in fact, point to the presence of such symmetry or self-similarity. In the further discussion we will show how both problems can be handled in practice.

As a proof-of-principle example we consider the Galton Board (GB), which is also known as a bean machine. We highlight that the Galton Board is chosen as a clear illustrative example, while one can certainly apply standard statistical methods for this problem. The reasons and some advantages of using machine learning will be discussed later in a separate section.

We use index j to denote the final position of a bead that bypasses n horizontal rows of pegs. Bouncing from each peg leads to equal probability of bypassing it from each of the two sides. The probability of arriving at the j-th position is then given by

where the approximation is the de Moivre-Laplace theorem applied under the assumption of \(n\gg 1\). We will use this Gaussian distribution of limited applicability as theory \({\mathbb{A}}\), which we intend to validate using experiments. As the experiment for this problem we will use a numerical implementation of the Monte-Carlo method with a limited number of beads. The theory \({\mathbb{A}}\) will be validated relative to an alternative theory \({\mathbb{B}}\) that suggests that the distribution is super-Gaussian:

We use this form as a particular example because it is difficult to describe and appeal to the difference between the Gaussian and super-Gaussian distributions without elaborating and applying additional data processing. We here deliberately do not apply any transformation, to show the capability of the approach. Note also that theory \({\mathbb{B}}\) is formulated incompletely. The missing factor in front of the exponent can be determined using the normalization conditions. We here intentionally do not compute this factor, to demonstrate how one can deal with incomplete theories.

We now define a unified theory \({\mathbb{U}}\):

We see that theory \({\mathbb{A}}\) corresponds to \({p}_{1}\ne 0\), \({p}_{2}=0\) while theory \({\mathbb{B}}\) is characterized by \({p}_{1}=0\), \({p}_{2}\ne 0\). (More precisely, theory \({\mathbb{A}}\) corresponds to \({\bf{p}}=(0.5,2/n,0)\), but this is not important for now.) Note that there exists a value of \({p}_{0}\) that provides probability normalization. In other words, within the family of theories \({\mathbb{U}}\) there exist theories of type \({\mathbb{B}}\) that fulfill the probability normalization. As we will see, we can disqualify \({\mathbb{B}}\) even without knowing this value of \({p}_{0}\).

We now randomly generate values of \({p}_{0}\), \({p}_{1}\) and \({p}_{2}\) from 0 to 1, generate the result \({r}_{j}\) according to the unified theory \({\mathbb{U}}\) and train the NN to reconstruct the generated vector \({\bf{p}}=({p}_{0},{p}_{1},{p}_{2})\). In our implementation we used \(n=16\) and the result was sampled through 16 values \({r}_{j}\). For the proof-of-principle demonstration we used a rather small feed-forward, fully connected NN that contained four layers with the neuron numbers 16, 16, 16 and 3, respectively. The three output neurons were associated with the components of the vector p. We used the logistic sigmoid activation, a squared-error measure and stochastic gradient descent, which resulted in an accuracy of p determination of the order of \(10^{-3}\).
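A minimal NumPy sketch of this training step is given below. Several things in it are assumptions rather than the authors' implementation: the function `unified_theory` is a hypothetical Gaussian plus super-Gaussian stand-in for the unified theory, the layer sizes are read as 16 inputs, two hidden layers of 16 neurons and 3 outputs, and the learning rate, batch size and number of epochs are arbitrary. The sketch only shows the mechanics (random p, synthetic output, sigmoid network, squared error, stochastic gradient descent) and makes no claim about reaching the accuracy quoted above.

```python
import numpy as np

rng = np.random.default_rng(0)
N_BINS = 16
x = np.arange(N_BINS) - (N_BINS - 1) / 2.0  # bin offsets from the centre


def unified_theory(p):
    """ASSUMED stand-in for the unified theory; p has shape (batch, 3)."""
    p0, p1, p2 = p[:, 0:1], p[:, 1:2], p[:, 2:3]
    return p0 * np.exp(-p1 * x**2 - p2 * x**4)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Assumed reading of "16, 16, 16 and 3": 16 inputs, two hidden layers, 3 outputs.
sizes = [16, 16, 16, 3]
weights = [rng.normal(0.0, 1.0 / np.sqrt(m), (m, k))
           for m, k in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(k) for k in sizes[1:]]


def forward(r):
    """Return the activations of every layer, the input included."""
    acts = [r]
    for w, b in zip(weights, biases):
        acts.append(sigmoid(acts[-1] @ w + b))
    return acts


def mse(r, p):
    return float(np.mean((forward(r)[-1] - p) ** 2))


def sgd_step(r, p, lr):
    """One minibatch step of gradient descent on the squared error."""
    acts = forward(r)
    delta = (acts[-1] - p) * acts[-1] * (1.0 - acts[-1])
    for i in reversed(range(len(weights))):
        grad_w = acts[i].T @ delta / len(r)
        grad_b = delta.mean(axis=0)
        # Back-propagate with the old weights before updating this layer
        # (the value computed for i == 0 is simply never used).
        delta = (delta @ weights[i].T) * acts[i] * (1.0 - acts[i])
        weights[i] -= lr * grad_w
        biases[i] -= lr * grad_b


p_train = rng.random((4000, 3))          # random p0, p1, p2 in [0, 1)
r_train = unified_theory(p_train)
p_val = rng.random((500, 3))
r_val = unified_theory(p_val)

loss_before = mse(r_val, p_val)
for epoch in range(50):
    order = rng.permutation(len(p_train))
    for start in range(0, len(order), 20):
        batch = order[start:start + 20]
        sgd_step(r_train[batch], p_train[batch], lr=0.5)
loss_after = mse(r_val, p_val)
print(f"validation MSE: {loss_before:.4f} -> {loss_after:.4f}")
```

After training, `forward(r)[-1]` applied to a measured distribution `r` of shape `(1, 16)` returns the reconstructed vector p.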

Next we perform a number of numerical experiments using a random number generator to simulate a number of beads that pass through \(n=16\) layers of pegs. The number of beads that reached each position is normalized by the total number of beads to get the experimental values \({r}_{j}^{E}\). These distributions are then used as input for the NN, which reconstructs p according to the learned unified theory.
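The numerical experiment can be sketched with the Python standard library alone. The sketch below assumes that a bead's final position j is the number of right-hand bounces, so that \(n\) rows give \(n+1\) possible positions (how the paper maps these onto its 16 sampled values is not specified here). It compares the simulated frequencies with the exact binomial probabilities and with the standard de Moivre-Laplace Gaussian approximation; the function names are illustrative.

```python
import math
import random


def simulate_galton(n_rows, n_beads, rng):
    """Return normalised bead counts for the final positions j = 0..n_rows."""
    counts = [0] * (n_rows + 1)
    for _ in range(n_beads):
        # Each bounce goes right with probability 1/2; j is the number of
        # right bounces, i.e. the number of set bits in a random n_rows-bit word.
        j = bin(rng.getrandbits(n_rows)).count("1")
        counts[j] += 1
    return [c / n_beads for c in counts]


def binomial_pmf(n_rows):
    """Exact probabilities of the final positions."""
    return [math.comb(n_rows, j) / 2**n_rows for j in range(n_rows + 1)]


def gaussian_limit(n_rows):
    """de Moivre-Laplace approximation of the binomial, valid for n_rows >> 1."""
    norm = math.sqrt(2.0 / (math.pi * n_rows))
    return [norm * math.exp(-2.0 * (j - n_rows / 2.0) ** 2 / n_rows)
            for j in range(n_rows + 1)]


rng = random.Random(1)
n = 16
exact = binomial_pmf(n)
approx = gaussian_limit(n)
for beads in (10**3, 10**5):
    freq = simulate_galton(n, beads, rng)
    err = max(abs(f - e) for f, e in zip(freq, exact))
    print(f"{beads:>6} beads: max |r_j^E - P_j| = {err:.4f}")
gauss_err = max(abs(a - e) for a, e in zip(approx, exact))
print(f"max |Gaussian - binomial| = {gauss_err:.4f}")
```

The statistical scatter shrinks as the number of beads grows, while the residual difference between the Gaussian and the exact binomial is a property of the finite number of rows.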

In Fig. 1 (left column) we plot the distribution of reconstructed values on the plane of \({p}_{0}\) and \({p}_{1}\) for the number of beads equal to \(10^{3}\) (upper panels) and \(10^{5}\) (lower panels). As we see, in both cases the reconstructed values are localized mostly in the vicinity of the point related to the theory \({\mathbb{A}}\), i.e. (\({p}_{2}=0\) and \({p}_{1}=0.5\)). However, this is more obvious in the case of the larger number of beads. This is not surprising because the NN was trained to reproduce values based on the exact distribution without any stochastic deviations. To establish some tolerance of the NN to noise we train it using artificial noise that we apply to the theoretical values before sending them to the input of the NN. For this purpose we multiply each value \({r}_{j}^{U}\) by a factor \((1-0.005+0.01r)\), where r is a random value from 0 to 1. As we can see from the comparison of the panels in Fig. 1, this results in a better localization of the distribution around the expected point (precise analysis shows that this improvement appears for both \({p}_{2}\) and \({p}_{0}\)). This result demonstrates that this approach can be used to retrieve information more efficiently from noisy experimental results.
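The noise factor described above is simple enough to sketch directly. The function name, the `amplitude` argument and the sample values in `clean` are illustrative assumptions; only the multiplicative factor \((1-0.005+0.01r)\) is taken from the text.

```python
import random


def add_training_noise(r_theory, rng, amplitude=0.005):
    """Multiply every r_j by (1 - amplitude + 2*amplitude*u), u uniform in [0, 1)."""
    return [v * (1.0 - amplitude + 2.0 * amplitude * rng.random())
            for v in r_theory]


rng = random.Random(0)
clean = [0.02, 0.12, 0.36, 0.36, 0.12, 0.02]  # hypothetical theoretical values
noisy = add_training_noise(clean, rng)
ratios = [n / c for n, c in zip(noisy, clean)]
print(min(ratios), max(ratios))
```

Drawing a fresh factor for every value and every training sample means the network never sees exactly the same input twice, which is one plausible way to realise the noise tolerance discussed here.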

Figure 1. Validation of the theory for the Galton Board in the limit of a large number of layers of pegs (Eq. (5)). The plots show the distribution of reconstructed values of the parameters of the unified theory (Eq. (7)) by the NN that receives the distributions obtained with the Monte-Carlo method using \(10^{3}\) (upper panels) and \(10^{5}\) (lower panels) beads. The tendency of \({p}_{2}\) to 0 indicates irrelevance of the super-Gaussian component and invalidity of such an alternative theory. The right column shows the results of using a NN that is trained with additional noise, which leads to higher tolerance to the experimental noise caused by a smaller number of beads.

We can note that the distributions of reconstructed values are centered at a point that is close to, but notably different from the point predicted by the theory. This is an indication of the fact that the theory is valid in the limit \(n\gg 1\), while we here use a large, but finite value, \(n=16\). In other words, we see the closest fitting of experimental data in the framework of theories described by the unified theory \({\mathbb{U}}\). This observation leads to the next opportunity that we identify and describe in the next subsection.

Completing a theory

Suppose we have an incomplete theory \({\mathbb{A}}(\alpha )\) that contains some free parameter α (or several parameters). Alternatively we can have a hypothesis that some theoretical approach can be applied under the appropriate choice of a parameter that we were not able to determine. We can use the methodology described in the previous subsection to determine both the possibility and the appropriate values in such cases.

We generate a sequence of theoretical results for various values of parameters c and values of α in the appropriate range:

and train a NN to reconstruct the values of c and α. We can then vary the experimental conditions c and send each result to the input of the trained NN. The agreement of the reconstructed values with the known experimental parameters c would indicate that the NN “relates” some features in the input to the ones peculiar to the theory \({\mathbb{A}}(\alpha )\). If such agreement is not observed, this procedure does not indicate whether such a theory can be applied or not. Alternatively, if the agreement for c is observed, but the values of α do not expose any systematic tendency, we can conclude that there is no appropriate choice for the free parameter α.

However, if, in some region of parameters, the reconstructed values of c are systematically close to the known values in the experiment, this procedure can show the systematic dependency of α on the parameters c.

One can approximate the obtained dependencies and use the approximations as a heuristic way to complete the theory. However, this can also provide a hint for further development of a theory in a deductive way. For example, the possibility of applying certain assumptions or a phenomenological model can become clear and be validated rigorously.

Finally, we would like to highlight that this procedure can be applied to determine the experimental conditions that are not known or even not measurable. This procedure can thus be treated as indirect measurement.

As an illustrative example, we again use the Galton Board experiment. We assume that we determined only the fact of having a Gaussian distribution, but did not determine the coefficient that defines the spread. We can use Eq. (7) with \({p}_{2}=0\). Then the unknown free parameter is \({p}_{1}\) and our purpose is to determine its dependency on the number of rows n.

We perform numerical experiments with different numbers of rows from \(n=2\) to \(n=32\). In all experiments we had \(10^{5}\) beads. Using the same NN trained with noise as in the previous subsection, we reconstruct the value of \({p}_{1}\) as a function of n. The result shown in Fig. 2 clearly demonstrates that we can systematically determine the value of \({p}_{1}\). As expected, the result is close to the analytical dependency determined by Eq. (5) and shown with the dashed blue curve.

Figure 2. Indirect measurement applied as a way to complete the theory (Eq. (7)) for the Galton Board. The distribution of reconstructed values of \({p}_{1}\) (which defines the width of the Gaussian distribution) is shown as a function of the number of rows n. In the limit of large n the distribution shows a systematic tendency towards the known analytical answer (Eq. (5)). The deviation in the region of small n provides an extension of the theory in the framework of Eq. (7).

Note that for small values of n the determined values show a systematic deviation from the analytical trend. This is an indication that the analytical trend is not valid for small n. This procedure provides the possibility to not only see this but also measure and determine the closest choice of \({p}_{1}\) in the framework of the theory (Eq. (7)). In such a way, based on experimental data, we can perform an indirect measurement of any parameter and its dependency even beyond the range where the parameter has its intended physical meaning.

Indirect measurement

We would like to note that the procedure described in the previous subsection can also be applied for indirect measurement of a real physical variable that defines physical conditions modeled by the theory. This means that we can use this procedure to perform indirect measurements of unknown parameters in the experiments and, in such a way, complete the information about experimental conditions in case they are not known to us. Moreover, instead of a theory we can use ab-initio simulations, which makes the method applicable to many complex situations. To do so one needs to parametrize the conditions of the process using knowledge about real conditions and perform a sufficiently large set of ab-initio simulations to train a NN for the described procedure.

Why use a NN?

One can ask a very reasonable question: what is the benefit of using a NN instead of collecting all possible outcomes of a model and then determining which one is the closest to the experimental measurement? To clarify the benefit, we note that this alternative procedure would inevitably require setting some metric for calculating the closeness between the known output and the measured result. This is the main trouble. First, the appropriate metric can be different for different regions in the parameter space. Second, it can be sensitive to the experimental noise. Finally, it can be sensitive to systematic distortions in the experimental measurements. For example, a systematic shift of a certain useful pattern can hamper its identification in case of using the mean standard deviation as the metric.
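The sensitivity of a fixed metric to a systematic shift can be seen in a toy look-up procedure. Everything in this sketch is an illustrative assumption: the "model" is a Gaussian peak of unit height on a 64-point grid, the unknown parameter is its width, the library contains five widths, and closeness is measured by the mean squared deviation.

```python
import math

GRID = range(64)
CENTRE = 32.0


def peak(width, shift=0.0):
    """Gaussian pattern of a given width, optionally displaced by `shift` bins."""
    return [math.exp(-((x - CENTRE - shift) ** 2) / (2.0 * width**2))
            for x in GRID]


def mean_squared_deviation(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


def closest_width(measured, widths):
    """Library look-up: the width whose unshifted template is nearest to the data."""
    return min(widths, key=lambda w: mean_squared_deviation(measured, peak(w)))


widths = [1.0, 2.0, 3.0, 4.0, 5.0]
true_width = 2.0
unshifted_guess = closest_width(peak(true_width), widths)
shifted_guess = closest_width(peak(true_width, shift=3.0), widths)
print("undistorted data ->", unshifted_guess)
print("shifted data     ->", shifted_guess)
```

For undistorted data the look-up returns the true width, whereas for the shifted pattern a broader template overlaps the displaced peak better and wins the comparison, so the inferred width is biased even though the shape of the pattern is unchanged.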

In the discussed methods (on the basis of a NN), we only set the metric for a number of physical parameters, and the effect of the metric used is more transparent. The NN is automatically adjusted to relate the parameters to the most indicative features in the output. As we showed, one can even train a NN to have tolerance to noise and some distortions.

Application for the physics of laser-plasma interactions

In this section we show how the discussed methodology can be applied for resolving long-standing questions in the field of high-intensity laser-plasma interactions. The process of such interactions is crucial for many applied and fundamental research directions related to the use of modern high-intensity lasers44. Even compact table-top lasers can now produce pulses with relativistic intensity, which means that not only almost instantaneous ionization but also relativistic, collective dynamics can be driven in the plasma produced on the surface of a target. This opens opportunities for driving a large variety of highly non-linear interaction regimes and thus for converting laser energy into energetic particles or unique, tailored forms of radiation. This holds promise for numerous applications ranging from fundamental studies to new diagnostic tools in medicine and nuclear waste utilization45,46,47,48.

The ionization of solids leads to the formation of high-density plasma that hampers the penetration of laser radiation. However, the light pressure can be strong enough to cause relativistic, repeated shifts of electron bulks that are balanced by the attraction to the residual ions, which are less mobile due to their higher mass. Using the appropriate reference frame and some reasonable assumptions, one can reduce the problem to 1D radiation-plasma dynamics49. However, the application of first principles leads to a highly complex problem formulation, which is largely inaccessible to analytical tools. At the same time, the use of ab-initio simulations lacks generality and is also of limited use due to commonly incomplete knowledge about experimental conditions. This naturally impedes the search for useful regimes in a multi-dimensional space of parameters.

One way of overcoming this difficulty is developing phenomenological models50,51,52,53,54,55, i.e. theories that are based on introducing entities (and the rules of their behavior) that model patterns systematically observed in ab-initio simulations. The applicability of such models is typically motivated and analyzed based on theoretical estimates.

Historically the first and probably the best-known phenomenological model for the nonlinear radiation reflection from plasmas is referred to as the relativistic oscillating mirror (ROM)50. The underlying principle of this model states that the reflection happens according to the Leontovich boundary condition (equality of incoming and outgoing energy fluxes) at some oscillating apparent reflection point, which can approach but not reach the relativistic speed limit, just as real particles. When this point moves against the incident radiation with relativistic velocity, a quick transition is formed in the reflected radiation. High harmonics generated in such a way follow a universal spectral law \({I}_{k}\sim {k}^{-8/3}\), where \({I}_{k}\) is the intensity of the k-th harmonic56. Although some other trends in spectra are also observed in simulations51,54,55,57,58, the observation of this trend in some experiments59 established a conviction in the validity and applicability of the ROM model.
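The conventional way of testing such a spectral law is to fit the exponent of the power-law fall. A minimal sketch of that fit is shown below, using an ordinary least-squares line in log-log coordinates on a synthetic, noise-free spectrum; the harmonic range 2 to 12 and the function name are illustrative assumptions.

```python
import math


def fit_power_law_exponent(orders, intensities):
    """Least-squares slope of log(I_k) versus log(k)."""
    xs = [math.log(k) for k in orders]
    ys = [math.log(i) for i in intensities]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den


orders = list(range(2, 13))                        # hypothetical harmonic orders
intensities = [k ** (-8.0 / 3.0) for k in orders]  # synthetic spectrum, I_k ~ k^(-8/3)
exponent = fit_power_law_exponent(orders, intensities)
print(f"fitted exponent: {exponent:.4f}")
```

A single fitted exponent compresses the whole spectrum into one number, which is exactly the kind of clearly describable but possibly non-unique feature that the methodology of this paper aims to go beyond.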

An alternative model is referred to as the relativistic electronic spring (RES)60,61. The underlying principle of this model states that under the light pressure some varied part of the foremost electrons becomes and remains bunched, while the resulting bunch moves so that its radiation precisely cancels out the incident radiation in the plasma bulk. The resulting description agrees well with ab-initio simulations in many aspects in a wide range of conditions and provides several important predictions62,63, including the possibility of producing unprecedentedly intense and short bursts60,64,65 of radiation with controllable ellipticity66. However, the experimental validation of the RES model is difficult. Current experiments are largely limited to the observation of high-harmonic spectra, which are typically analyzed in terms of the exponent of the power-law fall, while setting aside more peculiar signatures that can indicate the validity and applicability of the RES model. More accurate comparison requires cutting-edge experimental and theoretical developments based on revealing and retrieving indicative features in the experimental data67,68,69,70. We now show how such analysis can be performed with the help of a NN on the basis of the methodology described in the previous section.

Comparing the RES and the ROM models

Here we show how we can use the measurable spectra of generated high-harmonics for comparing and determining the validity regions of the RES and ROM models. Although the procedure can be based on experimental data, here we use ab-initio simulations to obtain the spectra in the frequency range of up to harmonic order 12.8, which mimics the capabilities of typical experimental arrangements.

To perform the comparison, we need to develop a unification theory. Since the ROM model was intended and motivated for the case of sharp density drop at the plasma surface, we consider the RES equations for this case and also assume the most indicative case of P-polarized incidence:

Here \({f}_{{\rm{in}}}\) and \({f}_{{\rm{out}}}^{RES}\) are the shape of the incoming laser pulse and the shape of the outgoing reflected signal that carries the generated high harmonics. The first three equations can be solved to find the temporal evolution \({x}_{s}(t)\) of the bunch that accommodates peripheral electrons according to the RES model. We can then substitute the obtained solution \({x}_{s}(t)\) into the fourth equation to obtain the outgoing signal \({f}_{{\rm{out}}}^{RES}(x+t)\). The second equation expresses the ultra-relativistic limit for the bunch velocity components along the pulse propagation direction (\({\beta }_{x}\)) and along the electric field direction (\({\beta }_{y}\)). The shapes \({f}_{{\rm{in}}}\) and \({f}_{{\rm{out}}}^{RES}\) are given in the laboratory reference frame, while the consideration is carried out in the moving reference frame49 that provides a way to account for an arbitrary incidence angle θ. The relativistic similarity parameter S is defined as \(S=n/a\), where n is the plasma density given in the laboratory reference frame in critical units and a is the pulse field amplitude given in relativistic units; both units are computed relative to the laser wavelength \(\lambda =1\,\mu {\rm{m}}\). For more details see refs60,61.

The ROM model does not provide a complete set of differential equations for computing \({f}_{{\rm{out}}}^{ROM}\). Instead the model provides a way to compute the indicative spectral properties directly. The only two essential assumptions that lead to this result are the Leontovich boundary conditions (\({f}_{{\rm{out}}}^{ROM}({x}_{ARP}+t)=-\,{f}_{{\rm{in}}}({x}_{ARP}-t)\)) and the fact that they are applied to the point \({x}_{ARP}\) that passes through a stage of moving with a speed close to the speed of light against the incident radiation (in the moving reference frame). From Eqs (9) and (12) we see that the boundary conditions of the RES model imply inequality between the incident and outgoing radiation. To provide a smooth transition between these types of boundary conditions and use \({x}_{s}\) as \({x}_{ARP}\) (i.e. admitting the same relativistic motion) we modify Eqs (9–12) and formulate the unification theory:

Here we introduce the parameter p that provides the needed transition through its variation from 0 to 1. In the limit \(p=1\) Eqs (13–16) coincide exactly with the equations of the RES model. In the limit \(p=0\) Eqs (13 and 16) imply the Leontovich boundary conditions, while the solutions for \({x}_{s}\) include instances of approaching the relativistic limit of motion against the incident radiation (see more details below). Thus the unified theory for \(p=0\) exhibits the same spectral properties as the ROM model. However, the unified theory still provides one particular way of completing the ROM model to a set of equations that determine not only spectral properties, but also the explicit form of \({f}_{{\rm{out}}}\). We thus will refer to this theory as the completed ROM or ROMc.

The numerical solution of Eqs (13–15) is straightforward. From Eq. (13) one can express:

One can see that when R changes sign, so does \({\beta }_{y}\). According to Eq. (14), this means that \({\beta }_{x}\) passes close to 1 or −1, as mentioned above. Eq. (17) together with Eq. (14) has one relevant solution for \({\beta }_{x}\):

where g(α, p) is the implicit solution of the equation \(\alpha {x}^{p}+x-2=0\). We can solve this equation numerically and use the result to determine the evolution \({x}_{s}(t)\). Then we can use Eq. (16) to obtain \({f}_{{\rm{out}}}^{U}({x}_{s}+t)\) and calculate its spectrum.
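The numerical evaluation of g(α, p) can be illustrated with a minimal sketch based on bisection. The function name `g`, the tolerance and the bracketing interval [0, 2] are assumptions made for this illustration; the sketch also assumes \(\alpha \ge 0\) and \(0\le p\le 1\), for which the left-hand side grows monotonically with x on this interval. It is not necessarily the solver used for the results reported here.

```python
def g(alpha, p, tol=1e-12, max_iter=200):
    """Root x in [0, 2] of alpha * x**p + x - 2 = 0, found by bisection.

    Assumptions of this sketch: alpha >= 0 and 0 <= p <= 1, so the residual
    is non-decreasing in x on [0, 2] and is non-negative at x = 2.
    """
    if alpha < 0 or not (0.0 <= p <= 1.0):
        raise ValueError("sketch assumes alpha >= 0 and 0 <= p <= 1")

    def residual(x):
        return alpha * x**p + x - 2.0

    lo, hi = 0.0, 2.0
    if residual(lo) > 0.0:
        # Can only happen for p = 0 with alpha > 2 (the root is then negative).
        raise ValueError("no root in [0, 2] for these arguments")

    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            hi = mid          # root lies to the left of mid
        else:
            lo = mid          # root lies at or to the right of mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Limiting cases with closed-form roots, useful as sanity checks:
# p = 1 gives x = 2 / (1 + alpha); p = 0 gives x = 2 - alpha.
x_p1 = g(3.0, 1.0)
x_p0 = g(0.5, 0.0)
```

For \(p=1\) and \(p=0\) the equation is linear in x, so these two limits provide a direct check of the numerical root.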

Once the unification theory is developed we can use it to calculate the spectra. For our studies we considered a two-cycle laser pulse \({f}_{{\rm{in}}}(\eta =x-t)\) characterized by the vector potential in the form of ~\(\sin \,(\eta +\varphi )\,{\sin }^{4}\,(\eta /4)\), which results in:

where the phase \(\varphi \) can have an arbitrary value. For this study we set \(\varphi =\pi /2\).

We numerically solve the equations of the unified theory for the parameter space spanned by:

Each spectrum was sampled with 16 equidistant points in the interval from 0 to harmonic order 12.8, and the values were converted to appropriate logarithmic units so that they mostly lie in the interval from 0 to 1. Next we train the NN to reconstruct the values of S, θ and p from this data. We use the same topology and training method of the NN as in the previous experiments with the Galton Board. The achieved accuracy was about \(3\times {10}^{-3}\) for the square error measure applied to the parameters normalized to unity.
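The conversion of a signal into the NN input described above can be sketched as follows. The function name `spectral_features`, the exclusion of the end point 12.8 from the sampling grid, the normalization to the largest sampled value and the choice of six decades for the logarithmic mapping are assumptions of this sketch; the text only specifies 16 equidistant points up to harmonic order 12.8 and a logarithmic scale that maps the values mostly into the interval from 0 to 1.

```python
import numpy as np


def spectral_features(signal, dt, n_points=16, k_max=12.8, decades=6.0):
    """Sample the intensity spectrum at equidistant harmonic orders in [0, k_max)
    and map it logarithmically onto [0, 1].

    Time is measured in units where the fundamental angular frequency is 1,
    so the harmonic order k equals the angular frequency.
    """
    intensity = np.abs(np.fft.rfft(signal)) ** 2
    k = 2.0 * np.pi * np.fft.rfftfreq(signal.size, d=dt)
    k_samples = np.linspace(0.0, k_max, n_points, endpoint=False)
    sampled = np.interp(k_samples, k, intensity)
    relative = sampled / sampled.max()
    # Clip at 10**(-decades) so that the result stays within [0, 1].
    log_relative = np.log10(np.maximum(relative, 10.0 ** (-decades)))
    return k_samples, 1.0 + log_relative / decades


# Test signal: a short pulse with the functional form quoted for the vector
# potential, sampled over one envelope lobe (used here only as an example input).
n = 512
dt = 4.0 * np.pi / n
t = np.arange(n) * dt
test_signal = np.sin(t) * np.sin(t / 4.0) ** 4
k_samples, features = spectral_features(test_signal, dt)
```

The returned vector has a fixed length, so spectra obtained from the model and from simulations can be fed to the same network input.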

Next, we perform a series of particle-in-cell (PIC) simulations for the parameter space spanned by Eqs (20 and 21) and obtain spectra using the field distribution obtained in each simulation. For this purpose we used the 1D version of the PIC code ELMIS60,71. In all simulations we used the field amplitude \(a=200\) and the density determined in accordance with the value of S. The spectra are then sent to the input of the trained NN, which provides the reconstructed values of the parameters.
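The workflow described above (train a NN on spectra produced by a theoretical model, then apply it to spectra from independent simulations) can be sketched with a toy example. Everything in this sketch is an assumption made for illustration: `toy_theory` is a hypothetical stand-in and not the unified RES-ROM equations, `toy_simulation` merely distorts it to mimic imperfect agreement with PIC data, and the single hidden layer trained by plain gradient descent is not the topology or training method used for the reported results.

```python
import numpy as np

rng = np.random.default_rng(0)
k = np.linspace(0.0, 1.0, 16)          # 16 input features, as for the sampled spectra


def toy_theory(params):
    """Hypothetical forward model: two parameters in [0, 1] -> 16 features."""
    u, v = params[:, :1], params[:, 1:2]
    return np.exp(-(0.5 + 2.0 * u) * k) + 0.3 * v * np.cos(3.0 * k)


def toy_simulation(params):
    """Stand-in for ab-initio data: the toy theory with distortion and noise."""
    clean = toy_theory(params)
    return 0.97 * clean + 0.01 * rng.standard_normal(clean.shape)


# Training set generated with the "theory" only.
P_train = rng.random((2000, 2))
X_train = toy_theory(P_train)

# One hidden tanh layer, linear output (illustrative choice).
n_hidden = 32
W1 = rng.standard_normal((16, n_hidden)) / 4.0
b1 = np.zeros(n_hidden)
W2 = rng.standard_normal((n_hidden, 2)) / np.sqrt(n_hidden)
b2 = np.zeros(2)


def predict(X):
    H = np.tanh(X @ W1 + b1)
    return H, H @ W2 + b2


losses = []
lr = 0.02
for step in range(3000):
    H, Y = predict(X_train)
    err = Y - P_train
    losses.append(0.5 * np.mean(np.sum(err ** 2, axis=1)))
    # Backpropagation of the mean squared error.
    gY = err / len(X_train)
    gH = gY @ W2.T
    gZ = gH * (1.0 - H ** 2)
    W2 -= lr * (H.T @ gY)
    b2 -= lr * gY.sum(axis=0)
    W1 -= lr * (X_train.T @ gZ)
    b1 -= lr * gZ.sum(axis=0)

# Apply the trained network to the "simulation" data it has never seen.
P_test = rng.random((200, 2))
_, P_rec = predict(toy_simulation(P_test))
sim_error = float(np.mean((P_rec - P_test) ** 2))
```

Comparing `sim_error` with the final training loss gives a rough indication of how much the mismatch between the model and the independent data degrades the reconstruction.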

In Fig. 3 we show the distribution of the values of the parameter p as a function of S and θ. The results point to the fact that in all cases the most appropriate choice of p is close to 1, which corresponds to the RES model.

Advancing the RES model

The original RES model has no fitting parameters and still describes fairly accurately the shape of the pulse computed with ab-initio PIC simulations for a wide range of relativistic laser-plasma interaction scenarios61,72. In particular, the model indicates the possibility of producing singularly intense XUV bursts, identifies the optimal conditions for this process60,72 and determines the polarization states of the XUV bursts66. However, the original RES theory does not predict the amplitude of these bursts that appear as singular points for the theory.

These bursts originate from the singularity of the second term on the right-hand side of Eq. (12), when \({\beta }_{y}\) changes sign and \({\beta }_{x}\) becomes close to −1 according to Eq. (10). To see this one can substitute \({\beta }_{y}/(1-{\beta }_{x})\) expressed from Eq. (9) into Eq. (12):

One can formally bound the resulting field through introducing a constant α that is close to, but less than, unity:

and also relate α to the effective bounding gamma factor γb in terms of Eq. (10):

Large values \({\gamma }_{b} > 10\) result in \(0.99 < \alpha < 1\) and thus hardly affect the values of \({f}_{{\rm{out}}}^{RES}\) anywhere except in the vicinity of \({\beta }_{x}=-\,1\), where they formally bound the result. This bound, however, crucially affects the high-frequency end of the spectra measurable in experiments. Simulations show64 that the amplitude of the XUV bursts can be up to a factor of 20 higher than that of the incident radiation and that this factor grows with the laser intensity in a complex way. This means that determining \({\gamma }_{b}\) is a matter of theory beyond the self-similarity \(S=n/a\) implied by the RES model. Determining \({\gamma }_{b}\) is thus an important theoretical problem for both experimental validations and future applications of ultra-intense XUV bursts.

Serebryakov et al. (see ref.62) analyzed the possibility of relating the bounding factor to the actual gamma factor of electrons in the bunch. However, the electrons in the bunch have only similar velocities, but genuinely different gamma factors, since they all experience different acceleration over different intervals of time61.

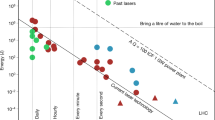

We will now use the methodology of indirect measurements to examine heuristically the appropriate values of γb as a function of laser field amplitude. We use the RES model extended with parameter α through Eq. (24). We obtain the same spectral data for various parameters in the space spanned by:

We train the same kind of NN and reach roughly the same level of accuracy of determining these parameters on the basis of spectral data.

Next, we perform a series of PIC simulations for \(\theta \in [0,3\pi /8]\), \(S\in [1,10]\) and field amplitudes \(a\in [5,500]\), which is relevant to current and near-future experiments. The obtained spectra are used as inputs for the trained NN that reconstructs the values of α. In Fig. 4 we see that the heuristic value of \({\gamma }_{b}\approx 10\) appears to be universal for the amplitude values \(a\gtrsim 10\). In the right panel we also see that this tendency becomes more prominent for large values of S.

Indirect measurement of the \({\gamma }_{b}\) parameter for the extension of the RES model according to Eq. (24). The distribution of reconstructed values of \({\gamma }_{b}\) is shown as a function of the laser field amplitude a for \(1 < S < 10\) (left panel) and for \(3 < S < 10\) (right panel).

This result can be used either directly to advance the RES model heuristically or for further theoretical analysis of the physics of the process. Note that since the parameter \({\gamma }_{b}\) has no meaning in terms of first principles, we can hardly determine it from these principles. Instead, we here determine its value in terms of its intended role as a parameter of the extended RES model.

Indirect measurements based on incomplete knowledge about experimental conditions

The previous examples primarily concerned questions of theory. As a final example we demonstrate how the discussed methodology can be put to clear use in complex experiments, when the information about the initial conditions of the studied process is not complete. This is not tied to the use of any models and can be based on ab-initio simulations. However, here we again use the RES model and ab-initio PIC simulations to mimic such experimental scenarios.

Suppose we perform an experiment on high-harmonic generation through the interaction of a high-intensity laser pulse with a solid target. We know and can vary the incidence angle θ. We also know the duration and the amplitude of the laser pulse. However, we do not know the carrier envelope phase (CEP) \(\varphi \) and we do not know precisely the density profile that results from plasma spreading after heating by the preceding laser radiation. This is a rather common experimental situation.

We will mimic the unknown initial plasma state by considering a steep density profile with unknown density in the plasma bulk. This can be related to any plasma distribution through the approach of the effective S-number proposed in ref.72. Note, however, that this serves only as a proof of principle and one can use any plasma density profile in both RES and PIC calculations61.

We will assume that the pulse has the form given by Eq. (19) with amplitude \(a=200\). The limited knowledge about the plasma density n can be interpreted as limited knowledge about \(S=n/a\), which we assume to stay in the range \(S\in [1,10]\).

In Fig. 5 we show typical spectral data obtained for various values of S, θ and \(\varphi \). One can see that the data contain sophisticated features that depend on the parameters in a complex way. Although they seem to encode unambiguously the scenario of interaction and the initial conditions, it would be very difficult to describe them using human language so that one can determine the initial conditions systematically. Developing a methodology for retrieving such information from the spectral data appears to be an intrinsically complex problem that is a matter of advanced developments69,70. Here we demonstrate that this problem can be solved with a NN.

Note that in essence the results of the RES model (red curves) agree with the results of PIC simulations. However, since the agreement is not ideal and in some cases is rather poor, the problem of reconstructing initial conditions based on the RES model is not trivial. This requires appealing to some essential features rather than to an exact memory of the states. This mimics the possible experimental limitations related to natural noise or potentially systematic distortions.

We use the same kind of NN and train it to reconstruct values of \(\varphi \), θ and S on the basis of spectral data obtained via numerical solutions of the RES model in the parameter space spanned by:

To mimic real experiments we perform PIC simulations with the parameters in the same parameter space. We then use the obtained spectral data to see whether the NN can identify correctly the phase values used in simulations. The results are shown in Fig. 6.

The demonstration of indirect measurement of the pulse carrier envelope phase \(\varphi \) based on spectral data obtained with PIC simulations. The NN used was trained with the RES model. Panel (a) shows all results and the inset to the right shows the notations and standard deviation for different groups of PIC simulations. Panel (b) shows the results for \(\theta =\pi /4\) and \(S=4.6\), which are optimal for accurate reconstruction. Panel (c) shows the result for the optimal \(\theta =\pi /8\) in case we have no precise information about \(S\in [2.2,10]\).

As one can see in Fig. 6(a), the results of the NN are mostly located around the diagonal that corresponds to accurate reconstruction of the phase \(\varphi \). However, the diagram for the standard deviation (shown in the inset to the right) indicates that the accuracy of the reconstruction varies with the parameters θ and S.

There could be two reasons for this. First, the RES model potentially has different accuracy for different parameters and this affects the accuracy of reconstruction. Second, the physics of the process can potentially provide more indicative features for certain ranges of parameters. This conclusion indicates an important capability of this methodology. The accuracy of the reconstruction of the known parameters can show where (in the parameter space) the theoretical model used (or the setup for simulations) is more adequate. Alternatively, this accuracy can indicate where the physics of the process provides more indicative features in the data used for the reconstruction. Moreover, if we can vary the range of the data used, we can determine where these features are located.
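The kind of accuracy map discussed above can be produced by grouping the reconstruction errors by simulation parameters. The following is a minimal sketch under stated assumptions: the function names and the group labels are hypothetical, the phase is treated as periodic with period \(2\pi \), and the spread is measured as the root-mean-square deviation from the known phase, which may differ in detail from the measure used for the inset of Fig. 6.

```python
import numpy as np


def wrapped_difference(phi_rec, phi_true):
    """Difference of two phases wrapped into the interval [-pi, pi)."""
    d = np.asarray(phi_rec, dtype=float) - np.asarray(phi_true, dtype=float)
    return (d + np.pi) % (2.0 * np.pi) - np.pi


def deviation_by_group(phi_true, phi_rec, groups):
    """Root-mean-square phase error for each group label.

    `groups` holds one label per sample, e.g. a string or an integer that
    identifies the (theta, S) pair used in the corresponding simulation.
    """
    d = wrapped_difference(phi_rec, phi_true)
    groups = np.asarray(groups)
    return {
        label.item(): float(np.sqrt(np.mean(d[groups == label] ** 2)))
        for label in np.unique(groups)
    }


# Made-up numbers, used only to show the call; they are not results.
phi_true = [0.0, 0.0, 1.0, 1.0]
phi_rec = [0.1, -0.1, 1.0, 1.0 + 2.0 * np.pi]
labels = ["group_a", "group_a", "group_b", "group_b"]
result = deviation_by_group(phi_true, phi_rec, labels)
```

Groups with a small deviation mark the regions of the parameter space where either the model is more adequate or the data carry more indicative features.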

One trivial outcome of this observation is the possibility to choose the parameters that are most useful for the reconstruction. In our case this is shown in Fig. 6(b).

Finally, we demonstrate that the procedure can be efficiently used in the case of limited knowledge about the experimental conditions. As we see in Fig. 6(c), the phase can be reconstructed fairly accurately even if we do not know precisely the plasma density distribution.

Discussion

The presented example demonstrates that the described approach is sensible for selecting and calibrating a model. In the considered case the NN has been supplied with the unified RES-ROM theory and has been trained to reconstruct the unification parameter p and a set of physically important input parameters (S and θ). The NN learned all the features that are useful for the reconstruction of these parameters. The physical meaningfulness of the learned (i.e. selected) features was controlled by the set of physically meaningful parameters used. The resulting (selected and calibrated) model is thus relevant in terms of describing the experimentally observable features that are sensitive to S and θ. One can then ask whether the selected and calibrated model is more generally valuable. Here we can refer to the general tendency of physical phenomena to originate from some underlying principles and laws. In other words, if we see that the foundations of some theory yield a good description of certain phenomena and their dependency on certain parameters, it would be natural to expect that the resulting theory can also describe other phenomena and their dependency on other parameters. The presented possibility of reconstructing the carrier envelope phase \(\varphi \) serves as a convincing demonstration of this expectation.

We now return to the questions raised earlier concerning the well-posed (or otherwise) nature of the inverse problem, when there is some symmetry and/or self-similarity. The considered problem of laser-plasma interaction provides an illustrative example for both issues.

As we mentioned earlier, the physics of the process in the considered ultra-relativistic regime has the property of self-similarity with the similarity parameter \(S=n/a\): if we proportionally increase/decrease the values of amplitude a and density n, the shape of the spectrum remains the same. This means that from the normalized spectrum we can only determine the value of S but not the values of a and n separately. In other words, the inverse problem would be ill-posed if a and n were used as the input parameters. This is why we used S as the input parameter in all arrangements. (Alternatively, one can use non-normalized spectra, but this would make little sense and would also increase the dimensionality and thus the computational demands.)
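The degeneracy described above can be made explicit with a few numbers. In this minimal sketch the function name and the chosen values of n and a are arbitrary illustrations, not parameters of the reported simulations.

```python
def similarity_parameter(n, a):
    """S = n / a, with n in critical units and a in relativistic units."""
    return n / a


# Three different (n, a) pairs obtained by scaling both quantities together.
pairs = [(200.0, 100.0), (400.0, 200.0), (1000.0, 500.0)]
s_values = [similarity_parameter(n, a) for n, a in pairs]

# All pairs share the same S, so a normalized spectrum cannot tell them apart.
all_equal = len(set(s_values)) == 1
```

Because every pair maps to the same S, any attempt to reconstruct n and a separately from the normalized spectrum has no unique answer, whereas reconstructing S is well defined.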

The property of symmetry appears for the specific case of normal incidence, i.e. \(\theta =0\). In this case inverting the directions of the electric and magnetic fields leads to the same plasma dynamics (with reversed velocities of particles in the y and z directions). This means that the problem settings for \(\varphi =0\) and \(\varphi =\pi \) provide exactly the same spectrum. This is why we restrict the values of the angle to \(\theta \ge \pi /8\) in Fig. 6. One can ask, however, what would happen if we do not pay attention to this symmetry and vary the angle over the whole range, making the inverse problem ill-posed for \(\theta =0\). To assess this, we tried to train the NN for the range \(\theta \in [0,3\pi /8]\) and checked whether this ruins the whole procedure or affects the results only locally for \(\theta =0\). In Fig. 7 we plot the average standard deviation for the reconstructed phase as a function of the angle θ and also the reconstructed phase for the case of \(\theta =0\), \(S=4.6\).
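The origin of this ambiguity can be checked on the incident pulse itself. The sketch below uses the functional form of the vector potential quoted earlier, \(\sin \,(\eta +\varphi )\,{\sin }^{4}\,(\eta /4)\); restricting the envelope to \(0\le \eta \le 4\pi \) and the grid resolution are assumptions of this illustration. It only shows that the pulses for \(\varphi \) and \(\varphi +\pi \) differ by an overall sign and therefore have identical intensity spectra; that the plasma response at \(\theta =0\) preserves this property is the physical statement made in the text, not something the code verifies.

```python
import numpy as np


def vector_potential(eta, phi):
    """Pulse shape sin(eta + phi) * sin(eta / 4)**4 on one envelope lobe.

    The support 0 <= eta <= 4*pi is an assumption of this sketch.
    """
    inside = (eta >= 0.0) & (eta <= 4.0 * np.pi)
    envelope = np.where(inside, np.sin(eta / 4.0) ** 4, 0.0)
    return np.sin(eta + phi) * envelope


eta = np.linspace(0.0, 4.0 * np.pi, 1024, endpoint=False)
a_0 = vector_potential(eta, 0.0)
a_pi = vector_potential(eta, np.pi)

# Intensity spectra of the two pulses.
spectrum_0 = np.abs(np.fft.rfft(a_0)) ** 2
spectrum_pi = np.abs(np.fft.rfft(a_pi)) ** 2

sign_flipped = np.allclose(a_pi, -a_0)
same_spectrum = np.allclose(spectrum_0, spectrum_pi)
```

Since the intensity spectrum discards the overall sign, no procedure based on such spectra can distinguish the two phases in this configuration.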

The demonstration of the tolerance against the presence of parameter ranges, where the inverse problem is ill-posed. The standard deviation is shown for \(\theta \in [0,3\pi /8]\), while for \(\theta =0\) the cases of \(\varphi =0\) and \(\varphi =\pi \) are indistinguishable. Other settings are the same as in Fig. 6.

As expected, the measured deviation is notably larger for \(\theta =0\). However, for other values of θ a reasonably satisfactory result is achieved. Moreover, for the previously determined optimal value of \(S=4.6\) the reconstructed values still seem to follow the right trend for all values of \(\varphi \) except in the vicinity of the ambiguous points \(\varphi =0\) and \(\varphi =\pi \). This indicates that the method is practically robust against limited knowledge about the properties that might lead to an ill-posed inverse problem. Moreover, the fact that the NN does not converge to a reasonable reconstruction accuracy in a certain range of parameters can be used as an indicator of some symmetry or self-similarity of the problem. In this way the procedure can guide further theoretical analysis of the problem.

Conclusions

In this paper we discussed and demonstrated the possibility of using machine learning for validating and advancing theories, as well as performing indirect measurements with incomplete knowledge about experimental conditions. The procedure is based on the possibility of using NN for establishing the relation between various parameters (of the process and theory) and the features that might be poorly accessible for description with human language. First, we showed how this can be used to validate, compare and advance theoretical models. Next, we showed how this can be used to perform indirect measurement of parameters of the experiment or theory based on experimental data, even if we have incomplete knowledge about experimental conditions. Finally, we outline that one can use the accuracy of the reconstruction of the known parameters for the identification of indicative features and their locations in the experimental data.

References

Spears, B. K. et al. Deep learning: A guide for practitioners in the physical sciences. Physics of Plasmas 25, 080901, https://doi.org/10.1063/1.5020791 (2018).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nature Physics 13, 431–434, https://doi.org/10.1038/nphys4035 (2017).

Deng, D.-L., Li, X. & Das Sarma, S. Machine learning topological states. Physical Review B 96, 195145, https://doi.org/10.1103/PhysRevB.96.195145 (2017).

Krenn, M., Malik, M., Fickler, R., Lapkiewicz, R. & Zeilinger, A. Automated Search for new Quantum Experiments. Physical Review Letters 116, 090405, https://doi.org/10.1103/PhysRevLett.116.090405 (2016).

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science (New York, N.Y.) 355, 602–606, https://doi.org/10.1126/science.aag2302 (2017).

Broecker, P., Carrasquilla, J., Melko, R. G. & Trebst, S. Machine learning quantum phases of matter beyond the fermion sign problem. Scientific Reports 7, 8823, https://doi.org/10.1038/s41598-017-09098-0 (2017).

Ch’ng, K., Carrasquilla, J., Melko, R. G. & Khatami, E. Machine Learning Phases of Strongly Correlated Fermions. Physical Review X 7, 031038, https://doi.org/10.1103/PhysRevX.7.031038 (2017).

Torlai, G. & Melko, R. G. Learning thermodynamics with Boltzmann machines. Physical Review B 94, 165134, https://doi.org/10.1103/PhysRevB.94.165134 (2016).

Li, L. et al. Understanding machine-learned density functionals. International Journal of Quantum Chemistry 116, 819–833, https://doi.org/10.1002/qua.25040 (2016).

Baldi, P., Sadowski, P. & Whiteson, D. Searching for exotic particles in high-energy physics with deep learning. Nature Communications 5, 4308, https://doi.org/10.1038/ncomms5308 (2014).

Cannas, B., Fanni, A., Sonato, P. & Zedda, M. A prediction tool for real-time application in the disruption protection system at JET. Nuclear Fusion 47, 1559–1569, https://doi.org/10.1088/0029-5515/47/11/018 (2007).

Vega, J. et al. Results of the JET real-time disruption predictor in the ITER-like wall campaigns. Fusion Engineering and Design 88, 1228–1231, https://doi.org/10.1016/J.FUSENGDES.2013.03.003 (2013).

Rea, C. & Granetz, R. S. Exploratory Machine Learning Studies for Disruption Prediction Using Large Databases on DIII-D. Fusion Science and Technology 74, 89–100, https://doi.org/10.1080/15361055.2017.1407206 (2018).

Humbird, K. D., Peterson, J. L. & McClarren, R. G. Deep neural network initialization with decision trees, 1707.00784 (2017).

Nora, R., Peterson, J. L., Spears, B. K., Field, J. E. & Brandon, S. Ensemble simulations of inertial confinement fusion implosions. Statistical Analysis and Data Mining: The ASA Data Science Journal 10, 230–237, https://doi.org/10.1002/sam.11344 (2017).

Peterson, J. L. et al. Zonal flow generation in inertial confinement fusion implosions. Physics of Plasmas 24, 032702, https://doi.org/10.1063/1.4977912 (2017).

Wang, L. Discovering phase transitions with unsupervised learning. Physical Review B 94, 195105, https://doi.org/10.1103/PhysRevB.94.195105 (2016).

van Nieuwenburg, E. P. L., Liu, Y.-H. & Huber, S. D. Learning phase transitions by confusion. Nature Physics 13, 435–439, https://doi.org/10.1038/nphys4037 (2017).

Hu, W., Singh, R. R. P. & Scalettar, R. T. Discovering phases, phase transitions, and crossovers through unsupervised machine learning: A critical examination. Physical Review E 95, 062122, https://doi.org/10.1103/PhysRevE.95.062122 (2017).

Tracey, B. D., Duraisamy, K. & Alonso, J. J. A Machine Learning Strategy to Assist Turbulence Model Development. In 53rd AIAA Aerospace Sciences Meeting, https://doi.org/10.2514/6.2015-1287 (American Institute of Aeronautics and Astronautics, Reston, Virginia, 2015).

Gao, X. & Duan, L.-M. Efficient representation of quantum many-body states with deep neural networks. Nature Communications 8, 662, https://doi.org/10.1038/s41467-017-00705-2 (2017).

Glasser, I., Pancotti, N., August, M., Rodriguez, I. D. & Cirac, J. I. Neural-Network Quantum States, String-Bond States, and Chiral Topological States. Physical Review X 8, 011006, https://doi.org/10.1103/PhysRevX.8.011006 (2018).

Huertas-Company, M. et al. Deep Learning Identifies High- z Galaxies in a Central Blue Nugget Phase in a Characteristic Mass Range. The Astrophysical Journal 858, 114, https://doi.org/10.3847/1538-4357/aabfed (2018).

Lam, C. & Kipping, D. A machine learns to predict the stability of circumbinary planets. Monthly Notices of the Royal Astronomical Society 476, 5692–5697, https://doi.org/10.1093/mnras/sty022 (2018).

Ljung, L. System identification: theory for the user (Prentice Hall PTR, 1999).

Kennedy, M. C. & O’Hagan, A. Bayesian calibration of computer models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 63, 425–464, https://doi.org/10.1111/1467-9868.00294 (2001).

Scharf, L. L. & Demeure, C. Statistical signal processing: detection, estimation, and time series analysis. (Addison-Wesley Pub. Co, Reading, Mass., 1991).

Bucklew, J. A. Large deviation techniques in decision, simulation, and estimation (Wiley, 1990).

Milanese, M. & Vicino, A. Optimal estimation theory for dynamic systems with set membership uncertainty: An overview. Automatica 27, 997–1009, https://doi.org/10.1016/0005-1098(91)90134-N (1991).

Van Trees, H. L. Detection, estimation, and modulation theory. Part I, Detection, estimation, and linear modulation theory (Wiley, 2001).

Cont, R. & Tankov, P. Financial modelling with jump processes (Chapman & Hall/CRC, 2004).

Alexander, C. Market models: a guide to financial data analysis (Wiley, 2001).

Brath, A., Montanari, A. & Toth, E. Analysis of the effects of different scenarios of historical data availability on the calibration of a spatially-distributed hydrological model. Journal of Hydrology 291, 232–253, https://doi.org/10.1016/j.jhydrol.2003.12.044 (2004).

Beven, K. & Binley, A. The future of distributed models: Model calibration and uncertainty prediction. Hydrological Processes 6, 279–298, https://doi.org/10.1002/hyp.3360060305 (1992).

Silva, E. A. & Clarke, K. C. Calibration of the SLEUTH urban growth model for Lisbon and Porto, Portugal. Computers, Environment and Urban Systems 26, 525–552, https://doi.org/10.1016/S0198-9715(01)00014-X (2002).

Ho, C. K., Stephenson, D. B., Collins, M., Ferro, C. A. T. & Brown, S. J. Calibration Strategies a Source of additional uncertainty in Climate Change Projections, https://doi.org/10.1175/2011BAMS3110.1.

Angulo, C. et al. Implication of crop model calibration strategies for assessing regional impacts of climate change in Europe. Agricultural and Forest Meteorology 170, 32–46, https://doi.org/10.1016/j.agrformet.2012.11.017 (2013).

Garcia, L. A. & Shigidi, A. Using neural networks for parameter estimation in ground water. Journal of Hydrology 318, 215–231, https://doi.org/10.1016/j.jhydrol.2005.05.028 (2006).

Zhang, L., Li, L., Ju, H. & Zhu, B. Inverse identification of interfacial heat transfer coefficient between the casting and metal mold using neural network. Energy Conversion and Management 51, 1898–1904, https://doi.org/10.1016/J.ENCONMAN.2010.02.020 (2010).

Aguir, H., BelHadjSalah, H. & Hambli, R. Parameter identification of an elasto-plastic behaviour using artificial neural networks–genetic algorithm method. Materials & Design 32, 48–53, https://doi.org/10.1016/J.MATDES.2010.06.039 (2011).

Zaw, K., Liu, G., Deng, B. & Tan, K. Rapid identification of elastic modulus of the interface tissue on dental implants surfaces using reduced-basis method and a neural network. Journal of Biomechanics 42, 634–641, https://doi.org/10.1016/j.jbiomech.2008.12.001 (2009).

The Galton Board, https://en.wikipedia.org/wiki/Bean_machine.

Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Networks 4, 251–257, https://doi.org/10.1016/0893-6080(91)90009-T (1991).

Mourou, G. A., Tajima, T. & Bulanov, S. V. Optics in the relativistic regime. Reviews of Modern Physics 78, 309–371, https://doi.org/10.1103/RevModPhys.78.309 (2006).

Esarey, E., Schroeder, C. B. & Leemans, W. P. Physics of laser-driven plasma-based electron accelerators. Reviews of Modern Physics 81, 1229–1285, https://doi.org/10.1103/RevModPhys.81.1229 (2009).

Teubner, U. & Gibbon, P. High-order harmonics from laser-irradiated plasma surfaces. Reviews of Modern Physics 81, 445–479, https://doi.org/10.1103/RevModPhys.81.445 (2009).

Daido, H., Nishiuchi, M. & Pirozhkov, A. S. Review of laser-driven ion sources and their applications. Reports on Progress in Physics 75, 056401, https://doi.org/10.1088/0034-4885/75/5/056401 (2012).

Macchi, A., Borghesi, M. & Passoni, M. Ion acceleration by superintense laser-plasma interaction. Reviews of Modern Physics 85, 751–793, https://doi.org/10.1103/RevModPhys.85.751 (2013).

Bourdier, A. Oblique incidence of a strong electromagnetic wave on a cold inhomogeneous electron plasma. Relativistic effects. Physics of Fluids 26, 1804, https://doi.org/10.1063/1.864355 (1983).

Gordienko, S., Pukhov, A., Shorokhov, O. & Baeva, T. Relativistic Doppler Effect: Universal Spectra and Zeptosecond Pulses. Physical Review Letters 93, 115002, https://doi.org/10.1103/PhysRevLett.93.115002 (2004).

Pirozhkov, A. S. et al. Attosecond pulse generation in the relativistic regime of the laser-foil interaction: The sliding mirror model. Physics of Plasmas 13, 013107, https://doi.org/10.1063/1.2158145 (2006).

Quéré, F. et al. Coherent Wake Emission of High-Order Harmonics from Overdense Plasmas. Physical Review Letters 96, 125004, https://doi.org/10.1103/PhysRevLett.96.125004 (2006).

Sanz, J., Debayle, A. & Mima, K. Model for ultraintense laser-plasma interaction at normal incidence. Physical Review E 85, 046411, https://doi.org/10.1103/PhysRevE.85.046411 (2012).

Debayle, A., Sanz, J., Gremillet, L. & Mima, K. Toward a self-consistent model of the interaction between an ultra-intense, normally incident laser pulse with an overdense plasma. Physics of Plasmas 20, 053107, https://doi.org/10.1063/1.4807335 (2013).

Debayle, A., Sanz, J. & Gremillet, L. Self-consistent theory of high-order harmonic generation by relativistic plasma mirror. Physical Review E 92, 053108, https://doi.org/10.1103/PhysRevE.92.053108 (2015).

Baeva, T., Gordienko, S. & Pukhov, A. Theory of high-order harmonic generation in relativistic laser interaction with overdense plasma. Physical Review E 74, 046404, https://doi.org/10.1103/PhysRevE.74.046404 (2006).

Boyd, T. J. M. & Ondarza-Rovira, R. Anomalies in Universal Intensity Scaling in Ultrarelativistic Laser-Plasma Interactions. Physical Review Letters 101, 125004, https://doi.org/10.1103/PhysRevLett.101.125004 (2008).

Boyd, T. & Ondarza-Rovira, R. Plasma effects in high harmonic spectra from ultrarelativistic laser–plasma interactions. Physics Letters A 380, 1368–1372, https://doi.org/10.1016/j.physleta.2016.02.008 (2016).

Dromey, B. et al. High harmonic generation in the relativistic limit. Nature Physics 2, 456–459, https://doi.org/10.1038/nphys338 (2006).

Gonoskov, A. A., Korzhimanov, A. V., Kim, A. V., Marklund, M. & Sergeev, A. M. Ultrarelativistic nanoplasmonics as a route towards extreme-intensity attosecond pulses. Physical Review E 84, 046403, https://doi.org/10.1103/PhysRevE.84.046403 (2011).

Gonoskov, A. Theory of relativistic radiation reflection from plasmas. Physics of Plasmas 25, 013108, https://doi.org/10.1063/1.5000785 (2018).

Serebryakov, D. A., Nerush, E. N. & Kostyukov, I. Y. Incoherent synchrotron emission of laser-driven plasma edge. Physics of Plasmas 22, 123119, https://doi.org/10.1063/1.4938206 (2015).

Svedung Wettervik, B., Gonoskov, A. & Marklund, M. Prospects and limitations of wakefield acceleration in solids. Physics of Plasmas 25, 013107, https://doi.org/10.1063/1.5003857 (2018).

Bashinov, A., Gonoskov, A., Kim, A., Mourou, G. & Sergeev, A. New horizons for extreme light physics with mega-science project XCELS. The European Physical Journal Special Topics 223, 1105–1112, https://doi.org/10.1140/epjst/e2014-02161-7 (2014).

Fuchs, J. et al. Plasma devices for focusing extreme light pulses. The European Physical Journal Special Topics 223, 1169–1173, https://doi.org/10.1140/epjst/e2014-02169-y (2014).

Blanco, M., Flores-Arias, M. T. & Gonoskov, A. Controlling the ellipticity of attosecond pulses produced by laser irradiation of overdense plasmas. arXiv:1706.04785, https://doi.org/10.1063/1.5044482 (2017).

Thaury, C. et al. Plasma mirrors for ultrahigh-intensity optics. Nature Physics 3, 424–429, https://doi.org/10.1038/nphys595 (2007).

Vincenti, H. et al. Optical properties of relativistic plasma mirrors. Nature Communications 5, 3403, https://doi.org/10.1038/ncomms4403 (2014).

Borot, A. et al. Attosecond control of collective electron motion in plasmas. Nature Physics 8, 416–421, https://doi.org/10.1038/nphys2269 (2012).

Kormin, D. et al. Spectral interferometry with waveform-dependent relativistic high-order harmonics from plasma surfaces. Nature Communications 9, 4992, https://doi.org/10.1038/s41467-018-07421-5 (2018).

Gonoskov, A. Ultra-intense laser-plasma interaction for applied and fundamental physics. Ph.D. thesis, Umeå universitet, https://doi.org/10.1017/S1367943003003160 (2013).

Blackburn, T. G., Gonoskov, A. A. & Marklund, M. Relativistically intense XUV radiation from laser-illuminated near-critical plasmas. Physical Review A 98, 023421, https://doi.org/10.1103/PhysRevA.98.023421 (2018).

Acknowledgements

A.G. would like to thank M. Marklund and T. G. Blackburn for useful discussions. Simulations were performed on resources provided by the Swedish National Infrastructure for Computing (SNIC) at the High Performance Computing Centre North (HPC2N). The research was supported by the Swedish Research Council under Grant No. 2017-05148.

Author information

Contributions

All authors contributed to discussions and preparation of the manuscript. A.P. and I.M. were responsible for the machine learning techniques, E.W. performed some of the numerical experiments, and A.G. proposed the methodology and led the project.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gonoskov, A., Wallin, E., Polovinkin, A. et al. Employing machine learning for theory validation and identification of experimental conditions in laser-plasma physics. Sci Rep 9, 7043 (2019). https://doi.org/10.1038/s41598-019-43465-3

DOI: https://doi.org/10.1038/s41598-019-43465-3
