Abstract

Machine learning (ML) techniques such as (deep) artificial neural networks (DNN) are solving very successfully a plethora of tasks and provide new predictive models for complex physical, chemical, biological and social systems. However, in most cases this comes with the disadvantage of acting as a black box, rarely providing information about what made them arrive at a particular prediction. This black box aspect of ML techniques can be problematic especially in medical diagnoses, so far hampering a clinical acceptance. The present paper studies the uniqueness of individual gait patterns in clinical biomechanics using DNNs. By attributing portions of the model predictions back to the input variables (ground reaction forces and full-body joint angles), the Layer-Wise Relevance Propagation (LRP) technique reliably demonstrates which variables at what time windows of the gait cycle are most relevant for the characterisation of gait patterns from a certain individual. By measuring the time-resolved contribution of each input variable to the prediction of ML techniques such as DNNs, our method describes the first general framework that enables to understand and interpret non-linear ML methods in (biomechanical) gait analysis and thereby supplies a powerful tool for analysis, diagnosis and treatment of human gait.

Similar content being viewed by others

Introduction

The ability to walk is crucial for human mobility and enables to predict quality of life, morbidity and mortality1,2,3,4,5,6,7,8,9,10. Its importance is underlined by the fear of losing the ability to walk, which is frequently considered to be the first and most significant concern from individuals that sustain diagnoses like stroke11,12 or Parkinson disease3,13. However, gait and balance are no longer regarded as purely motor tasks, but are considered as complex sensorimotor behaviours that are heavily affected by cognitive and affective aspects14. This may partially explain the sensitivity to subtle neuronal dysfunction, and why gait and postural control can predict the development of disease such as diabetes, dementia or Parkinson even years before they are diagnosed clinically14,15,16,17,18.

In order to prevent, diagnose, or rehabilitate a loss of independence due to (gait) impairments, gait analysis is common practice to support and standardise researchers’, clinicians’ and therapists’ decisions when assessing gait abnormalities and/or identifying changes due to orthopaedic or physiotherapeutic interventions19. But although becoming gradually established over the past decades, most biomechanical gait analyses have examined the influence of single time-discrete gait variables, like gait velocity, step length or range of motion, as risk factor or predictor for (gait) disease in isolation20,21. While conventional approaches have addressed successfully many important clinical and scientific questions related to human gait (impairments), they exhibit some inherent limitations: When single time-discrete variables (e.g. the range of motion in the knee joint) are extracted from time-continuous variables (e.g. knee joint angle-gait stride curve), a large amount of data are discarded. In many cases it remains unclear, if and to what degree single pre-selected variables are capable to represent a sufficient description of a whole body movement like human gait22,23,24,25,26. The a priori selection of single gait variables relies mostly on the experience and/or subjective opinion of the analyst, which may lead to a certain risk of investigator bias. Furthermore, single pre-selected variables might miss potentially meaningful information that are represented by – or in combination with – other (not selected) variables. In this context, it seems questionable if the multi-dimensional interactions between gait characteristics and gait disease or disease that impair gait can be entirely represented by a subjective selection of single time-discrete variables22,23,27.

In response to these shortcomings, multivariate statistical analysis25,26,28 and machine learning techniques such as artificial neural networks (ANN)29 and support vector machines (SVM)30,31,32,33 have been used to examine human locomotion based on time-continuous gait patterns27. Significant advances in motion capture equipment and data analysis techniques have enabled a plethora of studies that have advanced our understanding about human gait. Due to extensive new datasets34, the application of machine learning techniques is becoming increasingly popular in the area of clinical biomechanics27,35,36 and provided new insights into the nature of human gait control. The application of ANNs and SVMs highlighted for example that gait patterns are unique to an individual person22,37, exhibited natural changes within different time-scales38,39 and identified that emotional states40 and grades of fatigue41 can be differentiated from human gait patterns. Furthermore, gender and age-specific gait patterns could be differentiated24,42. In the context of clinical gait analysis, several approaches based on machine learning have been published in recent years in order to support clinicians in identifying and categorizing specific gait patterns into clinically relevant groups25,26,27,36. Previous studies were able to differentiate gait patterns from healthy individuals and individuals with (neurological) disorders like Parkinson’s disease43, cerebral palsy44, multiples scleroses44 or traumatic brain injuries45 and pathological gait conditions like lower-limb fractures36 or acute anterior cruciate ligament injury46.

Although machine learning techniques are solving very successfully a variety of classification tasks and provide new insights from complex physical, chemical, biological, or social systems, in most cases they go along with the disadvantage of acting as a black box, rarely providing information about what made them arrive at a particular decision47,48. This non-transparent operating and decision-making of most non-linear machine learning methods leads to the problem that their predictions are not straightforward understandable and interpretable. This black box manner can be problematic especially in applications of machine learning in medical diagnosis like gait analyses and so far strongly hamper their clinical acceptance28. The lack of understanding and interpreting the decision process of machine learning techniques is a clear drawback and recently attracted attention in the field of machine learning47,48,49,50,51,52,53,54,55,56,57,58. In this context, the so called Layer-wise Relevance Propagation (LRP) technique has been proposed as general technique for explaining classifier’s decisions by decomposition, i.e. by measuring the contribution of each input variable to the overall prediction52. LRP has been successfully applied to a number of technical and scientific tasks such as image classification59,60 and text document classification61. Also, interpreting linear and non-linear models have helped to gain interesting insights in neuroscience62,63, bioinformatics64,65,66 and physics67.

Due to benefits of machine learning methods in comparison to conventional approaches in gait analysis27,35, LRP appears highly promising to increase their transparency and therefore make them applicable and reliable for clinical diagnoses25,26,28. In the context of personalised medicine, the aim of the present study was to examine individual gait patterns by:

-

1.

Demonstrating the uniqueness of gait patterns to the individual by using (deep) artificial neural networks for predicting identities based on gait;

-

2.

Verifying that non-linear machine learning methods such as (deep) artificial neural networks use comprehensible prediction strategies and learn meaningful gait characteristics by using the Layer-Wise Relevance Propagation; and

-

3.

Analysing the unique gait signature from an individual by highlighting which variables at what time windows of the gait cycle are used by the model to identify an individual.

The presented approach investigates the suitability of understanding and interpreting the classification of gait patterns using state-of-the-art machine learning methods. This paper therefore presents a first step towards establishing a powerful tool that can be used as the basis for future application of machine learning in (biomechanical) gait analysis and thus enabling automatic classifications of (neurological) disorders and pathological gait conditions applicable in clinical diagnoses.

Results

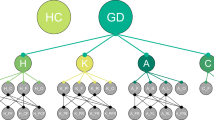

The uniqueness of human walking to the individual was examined based on time-continuous kinematic (full-body joint angles) and kinetic (ground reaction forces) gait patterns (see Methods section for a description of the data). From a biomechanical gait analysis (Fig. 1 I: Record gait data), conducted on 57 healthy subjects, lower-body joint angles (LBJA) and ground reaction forces (GRF) have been measured as input vectors x for the prediction of subjects y using deep artificial neural networks (DNN) (Fig. 1 II: Predict with DNN). LRP decomposes the prediction f(x) of a learned function f given an input sample x into into time-resolved input relevance values Ri for each time-discrete input xi, which enables to explain the prediction of DNNs as partial contributions from individual input components (Fig. 1 III: Explain prediction using LRP). LRP indicates based on which information a model predicts and thereby enables to interpret the input relevance values and their dynamics as representation for a certain class (individual). Hence, the input relevance values point out which gait characteristics were most relevant for the identification of a certain individual. In the following, input relevance values are visualised by colour coding, using a diverging and symmetric high contrast colour scheme as shown in Fig. 1 (III: Explain prediction using LRP). Here, input elements neutral to the predictor (Ri ≈ 0) will be shown in black colour, while warm and hot hues indicate input components supporting the prediction (\({R}_{i}\gg 0\)) of the analysed class and cold hues identify contradictory inputs (\({R}_{i}\ll 0\)).

Overview of data acquisition and data analysis, showing the example of subject 6. (I) The biomechanical gait analysis compromised the recording of 20 times walking barefoot a distance of 10 m at a self-selected walking speed. Two force plates and ten infrared cameras recorded the three-dimensional full-body joint angles and ground reaction forces during a double step. (II) Lower-body joint angles in the sagittal plane (flexion-extension) (LBJAX) and ground reaction forces (GRF) compromising the fore-aft shear force (fore-aft), medial-lateral shear force (med-lat) and vertical force (vert) have been used as time-normalised and concatenated input vectors x for the prediction of subjects y using deep artificial neural networks (DNN). Shaded areas for the LBJAX highlight the time where the respective (left or right) foot is in contact with the ground. (III) Decomposition of input relevance values using the Layer-Wise Relevance Propagation (LRP). Colour Spectrum for the visualisation of input relevance values of the model predictions. Throughout this manuscript, we use LRP to exclusively analyse the prediction for the true class of a sample. Thereby, black line segments are irrelevant to the model’s prediction. Red and hot colours identify input segments causing a prediction corresponding to the subject label, while blue and cold hues are features contradicting the subject label. For subject 6, the predicting model (CNN-A) achieves true positive rates (TP) of 100% for LBJAX and 95.23% for GRF.

As an example, Fig. 1 illustrates the unique gait signature from subject 6 by decomposing the input relevance values using LRP (see Fig. 2 and Supplementary Figs SF1 to SF4 for additional subject specific examples). From the gait feature relevance, we can observe that the extension of the ankle during the terminal stance phase of the right and left leg and the flexion of the knee and hip during the initial contact of the right leg is unique to subject 6. The kinetic data supports this finding, showing the highest input relevance values for the prediction of subject 6 for the vertical GRF during the terminal time window of the right and left stance phase. On these grounds, LRP enables to discover the trial-individual gait signature from a certain individual. This individual signature can serve as indicator in clinical diagnoses and starting point for therapeutic interventions. In our example (Fig. 1), the terminal stance phase is unique to subject 6. While this uniqueness might be interpreted as a reflection of a highly coordinated individual system, it could also indicate first relevant information about (forthcoming) complaints or impairments. Clinicians and researchers are therefore capable to pick up the unique peculiarity during the terminal stance phase for an individualisation of therapeutic interventions, e.g. by changing the strength of the responsible muscles for the extension of the ankle joint or reducing forces during the terminal stance phase during walking by shoes or insoles.

Left: Mean Ground Reaction Force as a line plot, colour coded via input relevance values for the actual class for subject 21, 28, 39, 42, 55 and 57 using convolutional neural network CNN-A. The highest input relevance values per body side are highlighted by a red circle. Right: Mean Lower-Body Joint Angles in the sagittal plane (flexion-extension) as line plot, colour coded via input relevance values for the actual class for subject 6, 23, 32, 37, 47 and 55 using convolutional neural network CNN-A. The highest input relevance values per body side are highlighted by a red circle. Shaded areas for the LBJAX highlight the time where the respective (left or right) foot is in contact with the ground.

As discussed earlier, linear classification models have often been used for the classification of gait patterns27,35,36. However, other domains in machine learning have shown that highly non-linear DNNs are capable to outperform linear and kernel based methods68,69,70,71,72,73. In this study, we therefore investigate the applicability of state-of-the-art non-linear machine learning models such as DNNs for gait analysis and additionally compare different linear and non-linear machine learning methods in terms of prediction accuracy, model robustness and decomposition of input relevance values using the LRP technique (see Methods for detailed description).

Prediction Accuracy and Model Robustness

The mean prediction accuracy for the subject-classification (on an out of sample set also denoted as test set) are summarised in Table 1 (and Supplementary Table ST1). The most striking result to emerge from this table is that most of the tested models were able to predict the correct class (subject) with a high accuracy above 95.4% (ground reaction forces), 99.9% (full-body joint angles) and 99.9% (lower-body joint angles).

The results in Table 1 (and Supplementary Table ST1) are quite revealing in several aspects. First, the highest prediction accuracy can be observed throughout the kinetic and kinematic variables for the linear support vector machines (Linear (SVM)). Second, the linear one-layer fully-connected neural network using Stochastic Gradient Descent (Linear (SGD)), fully-connected neural network (multi layer perceptron (MLP)) using higher number of neurons (MLP (3, 256) and MLP (3, 1024)) and deep (convolutional) neural network architectures (CNN-A and CNN-C3) result in similar and throughout high prediction accuracies, while the prediction accuracy of fully-connected networks using a lower number of neurons (MLP (3, 64)) is decreased. Third, surprisingly, even the linear neural network model architecture (Linear (SGD)) was able to predict the correct individual by quite high mean accuracies of 95.4% (ground reaction forces), 100.0% (full-body joint angles) or 100.0% (lower-body joint angles).

While the prediction accuracy of the linear neural network model (Linear (SGD)) is comparable to the fully-connected and convolutional neural network architectures, the robustness of their predictions against noise on the testing data exhibits considerable differences. As an example, Fig. 3 shows the progression of the mean prediction accuracy of the subject-classification for the stepwise increase of random noise perturbation on the test data. That is, for a given input, we compute a random order by which the components of said input are perturbed, e.g. with the addition of random gaussian noise to the current input component. After each perturbation step (and executing the n-th perturbation for all test samples simultaneously), the prediction performance of a model is re-evaluated. If a model is sensitive to random noise in the data it will react strongly to the ongoing perturbations. Figure 3 shows for each model the average test set prediction accuracy over 50 consecutive perturbation steps, averaged over 10 repetitions of the experiment. It is apparent that the prediction accuracy of the Linear (SGD) model decreases rapidly after only a few perturbation steps, indicating low robustness and reliability of the model. Variability is an inherent feature of human movements that occurs not only between but also within individuals. Therefore robust and reliable model predictions are a mandatory prerequisite for the development of automatic classification tools in clinical gait analysis.

Table 2 (and Supplementary Table ST2) summarises the robustness of the model predictions and presents the mean area over perturbation curve (AOPC)59 of the stepwise increase of random noise perturbation over multiple repetitions of the perturbation runs on the test data. High AOPC values computed with above strategy of random perturbations corresponds to high sensitivity to noise. From the results presented in Table 2 (and Supplementary Table ST2), it is apparent that the stepwise increase of noise on the test data lead to an abrupt decrease in the prediction accuracy of the linear neural network. Furthermore, closer inspection shows that fully-connected models based on a higher number of neurons are more robust against noise perturbations on the test data than models based on lower numbers of neurons. Even though the prediction problem at hand seems simple enough such that linear predictors perform excellently (i.e. the relationship between the input variables and the prediction target is linearly separable) and the performance-wise gain from non-linear and networks is minimal, the deeper architectures bring to the table considerably more robust predictors, which is especially valuable in application settings like gait analysis, where variability is an important factor to consider.

Interpreting and Understanding Model Predictions using Layer-Wise Relevance Propagation

As an example Fig. 2 shows relevance attributions for predictions based on ground reaction force (Fig. 2 (left)) and the lower-body joint angles (Fig. 2 (right)) of a certain individual based on his or her gait patterns.

The input relevance contributions point out which gait characteristics were most relevant for the identification of an individual and thereby reveal the unique gait signature of a certain subject. The comparison of input relevance values from different subjects indicates that individuals were classified by both, different gait characteristics and differing magnitudes or shapes of the same gait characteristic. For example, the highest input relevance values for the prediction of subject 21 (Fig. 2 (left)) can be observed in the medial/lateral ground reaction force at approximately 10% of the gait stride and subject 28 (Fig. 2 (left)) in the vertical ground reaction force at approximately 90% of the gait stride. While the highest input relevance values for the prediction of subject 55 (Fig. 2 (left)) and subject 57 (Fig. 2 (left)) can be both observed in the vertical ground reaction force at approximately 10% of the stance phase. It is further interesting that among all predicted LBJAX curves shown in Fig. 2 (right), subject 37 is the only one that is identified dominantly by gait characteristics during the swing phase (and not the stance phase). The model has associated the pronounced flexion of the right (left) ankle joint during the swing phase unique to subject 37.

In the vast majority of cases, the input relevance values for a certain class (individual) are comparable between the different model architectures (Fig. 4 (left)), i.e. all models pick up on similar features, which are characteristic to the individual subject in general. It is rather apparent from the results that most artificial neural networks and the linear SVM are using a number of different gait characteristics for their predictions, albeit the vast majority of the inputs seems to be irrelevant to the model’s decision (see Fig. 1; the tight interval (R ≈ 0) projected onto black), which are largely based on individual details in a subject’s movement patterns. This explanatory relevance feedback indicates that (non-linear) machine learning methods such as (deep) artificial neural networks are not arbitrarily picking up single randomly distributed input values, but rather learn certain dynamically meaningful features that can be related to functional gait characteristics and thus making them applicable in clinical gait analysis.

Left: Mean Ground Reaction Force as line plot, colour coded via input relevance for the actual class of different models using artificial neural networks and the linear SVM model from subject 57. The highest input relevance values per body side are highlighted by a red circle. Right: Input relevance as colour coded line plots for the predicted class of different models using artificial neural networks and linear models of ground reaction force of the 20 gait trials from subject 28.

When comparing input relevance values from different architectures of artificial neural networks and the SVM strikingly all model architectures (except the CNN-C3) show that not a single gait characteristic (certain variable at a certain time window of the gait cycle) is relevant for the identification of a certain individual, but rather complex combinations thereof.

Even more interesting is the observation that input relevance value attributions appear to be similar between right and the left body side variables (as an example take Fig. 4 (left): Linear (SVM), MLP (3, 1024) and CNN-A). This indicates the importance of symmetries/asymmetries between left and right body side variables for the examination of human gait. Note however that the models all learned without any information about the physiological meaning of the used variables. While the input relevance attributions are throughout comparable for all evaluated architectures – i.e. there is, given a subject, a non-empty set of features recognized as characteristic features to the individual by all models – some models have learned to ground their predictions on supplementary gait characteristics (e.g. Fig. 4 (left): CNN-C3 vs CNN-A).

We can further observe that the use of multiple gait characteristics for prediction can be associated with a model’s robustness to random perturbations of the input. The observed robustness of the evaluated models is also reflected by the reliability of the attributed input relevance values: Over the relevance values of each input component and subject (i.e. 20 relevance values each), the coefficient of variation74 was computed in order to prove their consistency over several trials and cross validation splits. The coefficient of variation represents the (root mean square) normalised band of standard deviation around the relevance signal of an input variable, where low values correspond to high reliability/stability of relevance attributions to a observed feature between samples and data splits, and thus to the model’s ability to generalise. A high coefficient of variation indicates that a model overfits on its respective training split population. As an example, Fig. 4 (right) shows the input relevance values for the actual class prediction for the ground reaction forces. Qualitatively, the highest deviations of input relevance values between trials appeared in the prediction of the linear neural network model (Linear (SGD)), which also is most sensitive to even minute noise added to the test data (Fig. 3 and Table 2). It becomes apparent from Fig. 4 (right) that the variance of input relevance is decreasing in fully-connected neural network architectures composed of increasing numbers of neurons. However, the lowest variance of the input relevance values can be observed in the relevance decomposition of the predictions from the Linear (SVM) and convolutional neural network architectures, which we attribute for the former to the complexity of the regularised training regime and complexity of the model itself for the latter.

Table 3 (Supplementary Table ST3) summarises the mean coefficient of variation for the input relevance values for the subject-classification over all subjects, expressing that decreasing variance (increasing reliability) goes along with increasing model complexity, and also model robustness when compared to Fig. 3 and Table 2. Hence, the lowest reliability is present for the Linear (SGD) model, while reliability is increasing in fully-connected model architectures composed of increasing numbers of layers and neurons and discloses the highest reliability for the convolutional neural networks (CNN-A and CNN-C3). Interestingly, the reliability of the input relevance values from linear support vector machines (Linear (SVM)) are as well on a high level and comparable to convolutional neural networks.

Discussion

The present results verified the uniqueness of characteristics for individual gait patterns based on kinematic and kinetic variables. By decomposing the prediction of machine learning methods such as (deep) artificial neural networks back to the input variables (time-continuous ground reaction forces and full-body joint angles), the LRP technique demonstrated which gait variables were most relevant for the characterisation of gait patterns from a certain individual. By measuring the contribution of each input variable to the prediction of (deep) artificial neural networks, the present paper describes a procedure that enables to understand and interpret the predictions of non-linear machine learning methods in (biomechanical) gait analysis. LRP thereby outlines the first general framework that facilitates to overcome the inherent black box problem of non-linear machine learning methods and makes them applicable in clinical gait analysis. In the context of personalised medicine, the determination of characteristics that are specific for gait patterns of a certain individual facilitates to support clinicians and researchers in the individualisation of their analyses, diagnoses and interventions.

The individual nature of human gait patterns was quantified using different linear and non-linear machine learning methods. The present results support previous studies on the individuality of human movements22,37,40,41 and provide evidence for gait characteristics that are unique to an individual and can be clearly differentiated from gait patterns of other individuals. Most of the artificial neural network architectures classified gait patterns almost error-free to the corresponding individual and achieved very high prediction accuracies that are suitable for clinical applications. However, advantages for more sophisticated model architectures (like fully-connected model using a higher numbers of neurons or deep convolutional neural networks) can be observed in higher prediction accuracies (Table 1 and Supplementary Table ST1) and even more significant in the higher robustness of the model predictions against noise perturbations on the test data (Fig. 3, Table 2 and Supplementary Table ST2). Because variability within individuals38,39 as well as variability due to differences between individuals22,37, genders42 and ages24 is an inherent feature of human motor control, prediction accuracy and model robustness are both essential for the development of reliable clinical applications using machine learning. Consequently, the present results suggest high potential of state-of-the-art non-linear methods such as DNNs compared to linear methods.

One of the issues that emerges from the evidence that gait patterns are unique to an individual, is the demand to evaluate clinical approaches for diagnoses and therapy that consider individual needs22,37. However, previous studies could not address how an individualisation of diagnoses and therapy could be obtained. By measuring the contribution of each input variable to the prediction of machine learning methods, the LRP method enables one for the first time to describe qualitatively why a certain individual could be identified based on his/her gait patterns. The LRP technique provides the possibility to comprehend what a model has learned and to interpret the input relevance values as representation for a certain class (individual). In the context of personalised gait analysis (medicine) that means, the decomposition of input relevance values and their dynamics describe what input variables are most relevant for the identification of a certain individual and thereby indicate which input variables are the most characteristic ones for the gait patterns of a certain individual (Fig. 1).

On these grounds, the input relevance values enable clinicians and researchers to determine the unique gait signature of a certain individual based on single trials and adjust their analyses, diagnoses and interventions to the specific needs of this individual (Figs 1 and 2).

In addition, explaining the model predictions provides interesting insights into the analysis of gait patterns. The input relevance values highlight that in most cases not a single gait characteristic (specific value or shape of a certain variable at a certain time of the gait cycle) is relevant for the identification of a certain individual. It is rather apparent that most artificial neural networks architectures look for the shape of different variables as well as their interaction at the same time window or at different time windows of the gait cycle. Similar results have been found on photographic image data52,60. Interestingly, the prediction of most artificial neural network architectures (except CNN-C3) trace to input relevance values that are similar between right and left body side variables at the same time. That means a certain variable at a certain time window of the gait cycle of the right and the left body side is relevant for the prediction of the models (Fig. 4 (left)), indicating importance of symmetries and asymmetries between right and left body movements for the identification of individuals and probably the examination of human gait in general.

The input relevance values support that machine learning approaches like artificial neural networks are able to consider several variables at various time points of the gait cycle for their predictions. In comparison to most conventional approaches of gait analysis that are based on single pre-selected variables, machine learning approaches seem to be promising to represent the multi-dimensional associations of human locomotion and their connections to functional and neurological disease22,23,27.

The present results demonstrate in the vast majority of cases that the input relevance values are similar between different model architectures (Fig. 4). That means all models pick up similar features for the classification of gait patterns, which are characteristic to the individual subject in general. However, the LRP technique enables to identify the strategy of a certain model to classify a class (individual gait patterns) and to compare strategies between different model architectures60. For an implementation of machine learning in clinical diagnoses and therapeutic interventions, for example in terms of an automatic classification of gait disorders or (neurological) disease36,43,44, the understanding about their decisions and decision-making seems to be inevitable. Since the lack of transparency has so far been a major drawback of preceding applications of machine learning, e.g. in medical applications (like gait analysis), further research on explaining, understanding and interpreting machine learning predictions should get attention.

Here, the decomposition of input variable relevance values using the LRP was consistent over multiple test trials and cross-validation splits. Taken together, the results demonstrate the suitability of the proposed method for the explanation of machine learning predictions in clinical (biomechanical) gait analysis. However, higher reliability of input relevance values between test trials and cross-validation splits indicate advantages for deep (convolutional) neural networks architectures. These findings are in agreement with those observed in earlier studies on text61 or image75 classification that indicated more robust and traceable class representations in deep (convolutional) neural networks.

In conclusion, the present findings underline that methods enabling to understand and interpret the predictions of machine learning, like the LRP, are highly promising for the application and implementation of machine learning in gait analysis. Due to the above discussed advantages of non-linear machine learning methods such as DNNs for the analysis of human gait25,26,27,35, the understanding and interpreting of machine learning predictions is essential in order to overcome one of their major drawbacks (the lack of transparency)25,26,28. Using the testbed of uniqueness of individual gait patterns, the present study proposed a general framework for the understanding and interpretation of non-linear machine learning methods in gait analysis thus providing a solid basis for future studies in biomechanical analysis and clinical diagnosis.

Methods

Subjects and ethics statement

Fifty-seven physically active subjects (29 female, 28 male; 23.1 ± 2.7 years; 1.74 ± 0.10 m; 67.9 ± 11.3 kg) without gait pathology and free of lower extremity injuries participated in the study. The study was carried out according to the Declaration of Helsinki at the Johannes Gutenberg-University in Mainz (Germany). All subjects were informed about the experimental protocol and provided their informed written consent to participate in the study. Subjects appearing in the figures provided informed written consent to the publication of identifying images and videos in an online open-access publication. The approval from the ethical committee of the medical association Rhineland-Palatinate in Mainz (Germany) was received.

Experimental protocol and data acquisition

The subjects performed 20 gait trials in a single assessment session, while they did not undergo any intervention. For each trial upper- and lower-body joint angles as well as ground reaction forces were measured, while the subjects walked on a 10 m path. The subjects were instructed to walk barefoot at a self-selected speed. Kinematic data were recorded using a full-body marker set consisting of 62 retro reflective markers placed on anatomical landmarks (Fig. 5). Ten Oqus 310 infrared cameras (Qualisys AB, Sweden) captured the three-dimensional marker trajectories at a sampling frequency of 250 Hz.

Full body marker set in (A) anterior (B) right lateral (C) posterior view. The markers were placed at os frontale glabella, 7th cervical vertebrae, sternum jugular notch, sacrum (mid-point between left and right posterior superior iliac spine) and bilaterally at greater wing of sphenoid bone, acromion, scapula inferior angle, humerus lateral epicondyle, humerus medial epicondyle, forearm, radius styloid process, ulna styloid process, head of 3rd metacarpal, iliac crest tubercle, femur greater trochanter, femur lateral epicondyle, femur medial epicondyle, fibula apex of lateral malleolus, tibia apex of medial malleolus, posterior surface of calcaneus, head of 1st metatarsus, head of 5th metatarsus and clusters with four markers each at the thigh and shank and clusters of three markers each at the humerus.

The three-dimensional ground reaction forces were recorded by two Kistler force plates (Type 9287CA) (Kistler, Switzerland) at a frequency of 1000 Hz. The recording was managed in time-synchronization by the Qualisys Track Manager 2.7 (Qualisys AB, Sweden). Two experienced assessors attached the markers and conducted the analysis. Every subject was analysed by the same assessor only. The laboratory environment was kept constant during the investigation.

Before the data acquisition, each subject performed 20 test trials to get accustomed to the experimental setup and to assign a starting point for a walk over the force plates. This procedure is described to minimize the impact of targeting on the force plates on the observed gait variables76,77. Additionally, the participants were instructed to watch a neutral symbol (smiley) on the opposing wall of the laboratory to direct their attention away from targeting on the force plates and ensure a natural walk with an upright body position.

Data processing

The gait analysis was conducted for one gait stride per trial. The stride was defined from right foot heel strike to left foot toe off and was determined using a vertical ground reaction force threshold of 10 N. The three-dimensional marker trajectories and ground reaction forces were filtered using a second order Butterworth bidirectional low-pass filter at a cut off frequency of 12 Hz and 50 Hz, respectively. The ground reaction force data were normalized to the body weight. The computation of the upper- and lower-body joint angles was conducted by Visual3D Standard v4.86.0 (C-Motion, USA) for elbow, shoulder, spine, hip, knee and ankle in sagittal, transversal and coronal plane.

Further data processing was executed by a self-written script within the software Matlab 2016a (MathWorks, USA). Each variable time course was normalized to 101 data points, z-transformed and scaled to a range of −1 to 178. The z-transformation was executed for kinematic variables for each trial separately and for kinetic variables for all trials. The scaling was carried out in order to prevent numerical difficulties during the calculation of the artificial neural networks78 and to ensure an equal contribution of all variables to the classification rates and thereby avoid that variables in greater numeric ranges dominate those in smaller numeric ranges78. Scaling is a common procedure for data processing in advance for the classification of gait data22,36

Data Analysis

The data analysis was conducted within the software frameworks of Matlab 2016a (MathWorks, USA) and Python 2.7 (Python Software Foundation, USA). The ability to distinguish gait patterns of one subject from gait patterns of other subjects was investigated in a multi-class classification (subject-classification) using the data from 57 subjects. The classification of gait patterns, based on time-continuous kinetic and kinematic data, was carried out by supervised machine learning models using support vector machines (SVM)30,31,32,33 and artificial neural networks (ANN)28,68,69. While fully-connected ANNs such as multi layer perceptions (MLP) and SVM represent established models for the classification of gait patterns based on joined input vectors of time-continuous kinematic and kinetic data27, convolutional (deep) artificial neural networks (DNN) have not yet been applied for the biomechanical analysis of human movements. Because DNNs showed superior prediction accuracies in domains like image70,71,72 or speech recognition73, they seem to be promising for the given classification of human gait patterns.

In the present paper, an SVM and different architectures of fully-connected and convolutional artificial neural networks were compared in terms of prediction accuracy, model robustness to noise on the test data and decomposition of input relevance values.

As a simple baseline, two linear classification models were implemented. A one-layer fully-connected neural network (Linear (SGD)) and a SVM using a linear kernel function (Linear (SVM)). Among the considered fully-connected artificial neural network architectures were all combinations L × H with \(L\in [2,3]\) describing the number of layers and \(H\in [{2}^{6},\ldots ,{2}^{10}]\) describing the number of neurons per hidden layer. For the convolutional artificial neural network architectures, the number of convolutional layers is \(C\in [1,2,3]\) with the number of hidden neurons depending on the number of channels in the data as well as the stride and shape of the learned convolutional filters. All convolutional neural network architectures are topped off with one linear layer, connecting all neurons of the highest convolutional layer to the number of classes of the prediction problem. Major architectural differences between the evaluated convolutional neural network architectures were the sizes of the input layer filters (3 × 3 and 6 × 6 as well as C × 3, C × 6 and C × C) spanning different amounts of neighbouring channels and time windows in the input samples.

All artificial neural networks using hidden layers (i.e. all architectures except the linear classifier Linear (SGD)) have ReLU-nonlinearities after each linear/convolutional layer as activation functions for the hidden neurons and a SoftMax activation function for the output layer. Both linear and the fully-connected classifiers receive as input the channel × time samples as row-concatenated channel · time dimensional vectors. The convolutional models directly operate on the channel × time shaped samples. For a detailed description of all evaluated model architectures, see the Supplement (Supplementary Tables ST4–ST9).

With the exception of the linear support vector machines predictor, all models have been trained as n-way classifiers using Stochastic Gradient Descent (SGD) Optimization69 for up to \(3\cdot {10}^{4}\) iterations of mini batches of 5 randomly selected training samples and an initial learning rate of 5e−3. The learning rate is gradually lowered to 1e−3 and then 5e−4 after every 104 training iterations. Model weights are initialized with random values drawn from normal distributions with μ = 0 and \(\sigma ={m}^{-\frac{1}{2}}\), where m is the number of inputs to each output neuron of the layer69. The linear support vector machine model has been using regularized quadratic optimization.

For SVM, the multi-class linear support vector classifier of the scikit learn Toolbox for python79 was used with a regularisation parameter C = 0.1.

Prediction accuracies were reported over a ten-fold cross validation configuration, where eight parts of the data are used for training, one part is used as a validation set and the remaining part is reserved for testing. With on average 912 samples per split being reserved for training, a (neural network) model passes the training set up to 164 (=30000 iterations · 5 samples per batch/912 training samples on average) times and each training stage tied to a given learning rate may be terminated prematurely if the model performance has converged on the validation hold out set to avoid overfitting on the training data. For subject-classification, the 20 samples per subject are uniformly distributed across all data partitions at random.

Layer-wise Relevance Propagation

One of the main reasons for the wide-spread use of linear models in the (meta) sciences is the inherent transparency of the prediction function. Given a set of learned model parameters {w, b} where w is a weight vector matching the dimensionality of the input data and a bias term b, the (multi-class) prediction function for an arbitrary input x evaluates for class c as

It is apparent, that component i of the given input x contributes to the evaluation of fc together with the learned parameters as the quantity wicxi. Each decision made by a linear model is therefore transparent, while complex non-linear models are generally considered black box classifiers.

A technique called Layer-Wise Relevance Propagation (LRP)52 has generalized the explanation of linear models for non-linear models such as deep (convolutional) artificial neural networks and arbitrary pipelines of pre-processing steps and nonlinear predictions. As a principled and general approach, LRP decomposes the output of a given decision function fc for an input x and attributes “relevance scores” Ri to all components i of x, such that \({f}_{c}({\bf{x}})={\sum }_{i}\,{R}_{i}\). Similarly to how the prediction of a linear model can be “explained” LRP starts at the model output (after removing any terminating SoftMax layer) by selecting a class output c of interest, initiating fc(x) = Rj (and selecting 0 for all other model outputs) as the initial output neuron relevance value. Note that for two-class problems, there is often one shared model output, with the predicted class being determined by the sign of the prediction. Here, f(x) = Rj initially.

The method can best be described by considering a single output neuron j anywhere within the model. That neuron receives a quantity of relevance Rj from upper layer neurons (or is initiated with that value in case of a model output neuron), and redistributes that quantity to its immediate input neurons i, in proportion to the contribution of the inputs i to the activation of j in the forward pass:

Here, zij is a quantity measuring the contribution of the input neuron i to the output neuron j and zj is the aggregation thereof. This decomposition approach follows the semantic that the output neuron j holds a certain amount of relevance, due to its activation in the forward pass and its influence to consecutive layers and finally the model output. This relevance is then distributed across the neuron’s inputs in proportion of each input’s contribution to the activation of neuron: If a neuron i contributes as zij towards the overall trend zj, it shall receive a positively weighted fraction of Rj. If it fires against the overall trend, e.g. the amount or relevance attributed to it will be weighted negatively.

Usually, the layers of an ANN model implement an affine transformation function \({x}_{j}=({\sum }_{i}\,{x}_{i}{w}_{ij})+{b}_{j}\) or a (component-wise) non-linearity xj = σ(xi). In the former case, we then have zij = xiwij, for example, and zj is the output activation xj. In the latter case, zij = δijσ(xi) where δij is the Kronecker delta, since there is no mixing between inputs and outputs of different subscripts.

The relevance score Ri at input neuron i is then obtained by pooling all incoming relevance values \({R}_{i\leftarrow j}\) from the output neurons to which i contributes in the forward pass:

Together, both above relevance decomposition and pooling steps ensure a local relevance conservation property, i.e. \({\sum }_{i}\,{R}_{i}={\sum }_{j}\,{R}_{j}\) and thus \({\sum }_{i}\,{R}_{i}=f({\bf{x}})\) for all layers of the model. In case of a component-wise operating non-linear activation, e.g. a ReLU (\({\forall }_{i=j}:{{\bf{x}}}_{j}=\,{\rm{\max }}(0,{x}_{i})\)) or Tanh (\({\forall }_{i=j}:{x}_{j}=\,\tanh ({x}_{i})\)), then \({\forall }_{i=j}:{R}_{i}={R}_{j}\). since the top layer relevance values Rj only need to be attributed towards one single respective input i for each output neuron j.

After initiating the algorithm at the model output, it iterates over all the layers of the model towards the input, until relevance scores Ri for all input components xi are obtained. Assuming a (strong) positive model output represents the predicted presence of a class, then the input level relevance scores can be interpreted as follows: Values \({R}_{i}\gg 0\) indicate components xi of the input which, due to the models’ learned decision function, represent the presence of the “explained” class, while conversely \({R}_{i}\ll 0\) contradict the prediction of that class. Ri ≈ 0 identify inputs xi which have no or only little influence to the model’s decision.

Applying above decomposition rules to a linear classifier \(f({\bf{x}})={\sum }_{i}\,{x}_{i}{w}_{i}+b\) with only a single output results in zij = xiwi and zbj = b, since the bias b can be considered a constant always-on neuron, and \({z}_{j}={\sum }_{i}\,{x}_{i}{w}_{i}+b=f({\bf{x}})\). Initiating the (only) model output relevance as Rj = f(x) and substituting both zij and zj in above relevance decomposition and pooling rules in Equations (2) and (3) yields:

Since the model only has one output, the pooling at each input xi (or the bias) becomes \({R}_{i}={R}_{i\leftarrow j}\) (or \({R}_{b}={R}_{b\leftarrow j}\)). In short, the application of LRP to a model consisting of only a single linear layer collapses to Ri = xiwi, the inherent explanation of the decision of a linear model in terms of input variables and the bias. For further details, please refer to52.

Data Availability

The datasets generated and analysed during the current study are available in the Mendeley Data Repository80 (https://doi.org/10.17632/svx74xcrjr.1). The Layer-Wise Relevance Propagation Toolbox81 (https://github.com/sebastian-lapuschkin/lrp_toolbox) and the experimental code derivation thereof is available on GitHub (https://github.com/sebastian-lapuschkin/interpretable-deep-gait).

References

Verghese, J. et al. Epidemiology of gait disorders in community-residing older adults. Journal of the American Geriatrics Society 54, 255–261 (2006).

Verghese, J., Holtzer, R., Lipton, R. B. & Wang, C. Mobility stress test approach to predicting frailty, disability, and mortality in high-functioning older adults. Journal of the American Geriatrics Society 60, 1901–1905 (2012).

Soh, S.-E., Morris, M. E. & McGinley, J. L. Determinants of health-related quality of life in Parkinson’s disease: A systematic review. Parkinsonism & Related Disorders 17, 1–9 (2011).

Studenski, S. et al. Physical performance measures in the clinical setting. Journal of the American Geriatrics Society 51, 314–322 (2003).

Studenski, S. et al. Gait speed and survival in older adults. Jama 305, 50–58 (2011).

Hirsch, C. H., Bůžková, P., Robbins, J. A., Patel, K. V. & Newman, A. B. Predicting late-life disability and death by the rate of decline in physical performance measures. Age and Ageing 41, 155–161 (2011).

Fagerström, C. & Borglin, G. Mobility, functional ability and health-related quality of life among people of 60 years or older. Aging Clinical and Experimental Research 22, 387–394 (2010).

Mahlknecht, P. et al. Prevalence and burden of gait disorders in elderly men and women aged 60–97 years: A population-based study. PLoS One 8, e69627 (2013).

Rubenstein, L. Z., Powers, C. M. & MacLean, C. H. Quality indicators for the management and prevention of falls and mobility problems in vulnerable elders. Annals of Internal Medicine 135, 686–693 (2001).

Forte, R., Boreham, C. A., De Vito, G. & Pesce, C. Health and quality of life perception in older adults: The joint role of cognitive efficiency and functional mobility. International Journal of Environmental Research and Public Health 12, 11328–11344 (2015).

Schmid, A. et al. Improvements in speed-based gait classifications are meaningful. Stroke 38, 2096–2100 (2007).

Seale, J. Gait in Persons With Chronic Stroke: An Investigation of Overall Gait and Quality of Life (Texas Woman’s University, 2010).

Ellis, T. et al. Which measures of physical function and motor impairment best predict quality of life in Parkinson’s disease? Parkinsonism & Related Disorders 17, 693–697 (2011).

Giladi, N., Horak, F. B. & Hausdorff, J. M. Classification of gait disturbances: Distinguishing between continuous and episodic changes. Movement Disorders 28, 1469–1473 (2013).

Verghese, J. et al. Abnormality of gait as a predictor of non-Alzheimer’s dementia. New England Journal of Medicine 347, 1761–1768 (2002).

Baltadjieva, R., Giladi, N., Gruendlinger, L., Peretz, C. & Hausdorff, J. M. Marked alterations in the gait timing and rhythmicity of patients with de novo Parkinson’s disease. European Journal of Neuroscience 24, 1815–1820 (2006).

Buracchio, T., Dodge, H. H., Howieson, D., Wasserman, D. & Kaye, J. The trajectory of gait speed preceding mild cognitive impairment. Archives of Neurology 67, 980–986 (2010).

Valkanova, V. & Ebmeier, K. P. What can gait tell us about dementia? Review of epidemiological and neuropsychological evidence. Gait & Posture 53, 215–223 (2017).

Baker, R. Measuring walking: A Handbook of Clinical Gait Analysis (Mac Keith Press, 2013).

Mills, K., Hunt, M. A. & Ferber, R. Biomechanical deviations during level walking associated with knee osteoarthritis: A systematic review and meta-analysis. Arthritis Care & Research 65, 1643–1665 (2013).

Wegener, C., Hunt, A. E., Vanwanseele, B., Burns, J. & Smith, R. M. Effect of children’s shoes on gait: A systematic review and meta-analysis. Journal of Foot and Ankle Research 4, 3 (2011).

Schöllhorn, W., Nigg, B., Stefanyshyn, D. & Liu, W. Identification of individual walking patterns using time discrete and time continuous data sets. Gait & Posture 15, 180–186 (2002).

Federolf, P., Tecante, K. & Nigg, B. A holistic approach to study the temporal variability in gait. Journal of Biomechanics 45, 1127–1132 (2012).

Eskofier, B. M., Federolf, P., Kugler, P. F. & Nigg, B. M. Marker-based classification of young–elderly gait pattern differences via direct pca feature extraction and svms. Computer Methods in Biomechanics and Biomedical Engineering 16, 435–442 (2013).

Chau, T. A review of analytical techniques for gait data. Part 1: Fuzzy, statistical and fractal methods. Gait & Posture 13, 49–66 (2001).

Chau, T. A review of analytical techniques for gait data. Part 2: Neural network and wavelet methods. Gait & Posture 13, 102–120 (2001).

Schöllhorn, W. Applications of artificial neural nets in clinical biomechanics. Clinical Biomechanics 19, 876–898 (2004).

Wolf, S. et al. Automated feature assessment in instrumented gait analysis. Gait & Posture 23, 331–338 (2006).

Bishop, C. M. Neural Networks for Pattern Recognition (Oxford University Press, 1995).

Boser, B. E., Guyon, I. M. & Vapnik, V. N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, 144–152 (ACM, 1992).

Cortes, C. & Vapnik, V. Support-vector networks. Machine Learning 20, 273–297 (1995).

Müller, K.-R., Mika, S., Ratsch, G., Tsuda, K. & Schölkopf, B. An introduction to kernel-based learning algorithms. IEEE Transactions on Neural Networks 12, 181–201 (2001).

Schölkopf, B. et al. Learning with kernels: Support Vector Machines, Regularization, Optimization, and Beyond (MIT Press, 2002).

McKay, M. J. et al. 1000 norms project: Protocol of a cross-sectional study cataloging human variation. Physiotherapy 102, 50–56 (2016).

Phinyomark, A., Petri, G., Ibáñez-Marcelo, E., Osis, S. T. & Ferber, R. Analysis of big data in gait biomechanics: Current trends and future directions. Journal of Medical and Biological Engineering 38, 244–260 (2018).

Figueiredo, J., Santos, C. P. & Moreno, J. C. Automatic recognition of gait patterns in human motor disorders using machine learning: A review. Medical Engineering & Physics (2018).

Horst, F., Mildner, M. & Schöllhorn, W. One-year persistence of individual gait patterns identified in a follow-up study – A call for individualised diagnose and therapy. Gait & Posture 58, 476–480 (2017).

Horst, F. et al. Daily changes of individual gait patterns identified by means of support vector machines. Gait & Posture 49, 309–314 (2016).

Horst, F., Eekhoff, A., Newell, K. M. & Schöllhorn, W. I. Intra-individual gait patterns across different time-scales as revealed by means of a supervised learning model using kernel-based discriminant regression. PLoS One 12, e0179738 (2017).

Janssen, D. et al. Recognition of emotions in gait patterns by means of artificial neural nets. Journal of Nonverbal Behavior 32, 79–92 (2008).

Janssen, D. et al. Diagnosing fatigue in gait patterns by support vector machines and self-organizing maps. Human Movement Science 30, 966–975 (2011).

Begg, R. & Kamruzzaman, J. A machine learning approach for automated recognition of movement patterns using basic, kinetic and kinematic gait data. Journal of Biomechanics 38, 401–408 (2005).

Zeng, W. et al. Parkinson’s disease classification using gait analysis via deterministic learning. Neuroscience Letters 633, 268–278 (2016).

Alaqtash, M. et al. Automatic classification of pathological gait patterns using ground reaction forces and machine learning algorithms. In 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 453–457 (2011).

Williams, G., Lai, D., Schache, A. & Morris, M. E. Classification of gait disorders following traumatic brain injury. Journal of Head Trauma Rehabilitation 30, E13–E23 (2015).

Christian, J. et al. Computer aided analysis of gait patterns in patients with acute anterior cruciate ligament injury. Clinical Biomechanics 33, 55–60 (2016).

Baehrens, D. et al. How to explain individual classification decisions. Journal of Machine Learning Research 11, 1803–1831 (2010).

Montavon, G., Samek, W. & Müller, K.-R. Methods for interpreting and understanding deep neural networks. Digital Signal Processing 73, 1–15 (2018).

Gevrey, M., Dimopoulos, I. & Lek, S. Review and comparison of methods to study the contribution of variables in artificial neural network models. Ecological Modelling 160, 249–264 (2003).

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. CoRR abs/1312.6034 (2013).

Zeiler, M. D. & Fergus, R. Visualizing and understanding convolutional networks. In European Conference on Computer Vision (ECCV), 818–833 (2014).

Bach, S. et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One 10, e0130140 (2015).

Zintgraf, L. M., Cohen, T. S. & Welling, M. A new method to visualize deep neural networks. CoRR abs/1603.02518 (2016).

Ribeiro, M. T., Singh, S. & Guestrin, C. “Why should I trust you?”: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1135–1144 (2016).

Zhang, J., Lin, Z., Brandt, J., Shen, X. & Sclaroff, S. Top-down neural attention by excitation backprop. In European Conference on Computer Vision, 543–559 (2016).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In IEEE International Conference on Computer Vision (ICCV), 618–626 (2017).

Fong, R. C. & Vedaldi, A. Interpretable explanations of black boxes by meaningful perturbation. In IEEE International Conference on Computer Vision (ICCV), 3449–3457 (2017).

Montavon, G., Lapuschkin, S., Binder, A., Samek, W. & Müller, K.-R. Explaining nonlinear classification decisions with deep taylor decomposition. Pattern Recognition 65, 211–222 (2017).

Samek, W., Binder, A., Montavon, G., Lapuschkin, S. & Müller, K.-R. Evaluating the visualization of what a deep neural network has learned. IEEE Transactions on Neural Networks and Learning Systems 28, 2660–2673 (2017).

Lapuschkin, S., Binder, A., Müller, K.-R. & Samek, W. Understanding and comparing deep neural networks for age and gender classification. In IEEE International Conference on Computer Vision Workshops (ICCVW), 1629-1638 (2017).

Arras, L., Horn, F., Montavon, G., Müller, K.-R. & Samek, W. “What is relevant in a text document?”: An interpretable machine learning approach. PLoS One 12, e0181142 (2017).

Haufe, S. et al. On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 87, 96–110 (2014).

Sturm, I., Lapuschkin, S., Samek, W. & Müller, K.-R. Interpretable deep neural networks for single-trial eeg classification. Journal of Neuroscience Methods 274, 141–145 (2016).

Zien, A., Krämer, N., Sonnenburg, S. & Rätsch, G. The feature importance ranking measure. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 694–709 (2009).

Stegle, O., Parts, L., Piipari, M., Winn, J. & Durbin, R. Using probabilistic estimation of expression residuals (PEER) to obtain increased power and interpretability of gene expression analyses. Nature Protocols 7, 500 (2012).

Vidovic, M. M.-C., Görnitz, N., Müller, K.-R., Rätsch, G. & Kloft, M. Svm2Motif-- reconstructing overlapping DNA sequence motifs by mimicking an svm predictor. PLoS One 10, e0144782 (2015).

Schütt, K. T., Arbabzadah, F., Chmiela, S., Müller, K. R. & Tkatchenko, A. Quantum-chemical insights from deep tensor neural networks. Nature Communications 8, 13890 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436 (2015).

LeCun, Y. A., Bottou, L., Orr, G. B. & Müller, K.-R. Efficient backprop. In Neural Networks: Tricks of the Trade, 9–48 (Springer, 2012).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (NIPS), 1097–1105 (2012).

Szegedy, C. et al. Going deeper with convolutions. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1–9 (2015).

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Thirty-First AAAI Conference on Artificial Intelligence (AAAI), 4278-4284 (2017).

Kim, Y. Convolutional neural networks for sentence classification. In Empirical Methods in Natural Language Processing (EMNLP), 1746–1751 (2014).

Winter, D. A. Kinematic and kinetic patterns in human gait: variability and compensating effects. Human Movement Science 3, 51–76 (1984).

Lapuschkin, S., Binder, A., Montavon, G., Müller, K.-R. & Samek, W. Analyzing classifiers: Fisher vectors and deep neural networks. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2912–2920 (2016).

Sanderson, D. J., Franks, I. M. & Elliott, D. The effects of targeting on the ground reaction forces during level walking. Human Movement Science 12, 327–337 (1993).

Wearing, S. C., Urry, S. R. & Smeathers, J. E. The effect of visual targeting on ground reaction force and temporospatial parameters of gait. Clinical Biomechanics 15, 583–591 (2000).

Hsu, C.-W. et al. A practical guide to support vector classification (2003).

Pedregosa, F. et al. Scikit-learn: Machine learning in python. Journal of Machine Learning Research 12, 2825–2830 (2011).

Horst, F., Lapuschkin, S., Samek, W., Müller, K.-R. & Schöllhorn, W. I. A public dataset of overground walking kinetics and full-body kinematics in healthy individuals. Mendeley Data Repository, https://doi.org/10.17632/svx74xcrjr.1 (2018).

Lapuschkin, S., Binder, A., Montavon, G., Müller, K.-R. & Samek, W. The layer-wise relevance propagation toolbox for artificial neural networks. Journal of Machine Learning Research 17, 1–5 (2016).

Acknowledgements

The authors thank all the participating subjects for their time and patience as well as Christin Rupprecht and Eva Klein for her encouragement and support during the data collection. No benefits in any form have been received or will be received from a commercial party related directly or indirectly to the subject of this article. This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (No. 2017-0-00451). This work was also supported by the grant DFG (MU 987/17-1) and by the German Ministry for Education and Research as Berlin Big Data Centre (BBDC) (01IS14013A) and Berlin Center for Machine Learning under Grant 01IS18037I. This publication only reflects the authors views. Funding agencies are not liable for any use that may be made of the information contained herein.

Author information

Authors and Affiliations

Contributions

F.H. and W.I.S. conceived, designed and performed the experiment. F.H., S.L., W.S., K.R.M. and W.I.S. analysed the data. S.L., W.S. and K.R.M. contributed analysis tools. F.H., S.L., W.S., K.R.M. and W.I.S. wrote the paper and drafted the article or revised it critically for important intellectual content. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Horst, F., Lapuschkin, S., Samek, W. et al. Explaining the unique nature of individual gait patterns with deep learning. Sci Rep 9, 2391 (2019). https://doi.org/10.1038/s41598-019-38748-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-38748-8

This article is cited by

-

Identification and interpretation of gait analysis features and foot conditions by explainable AI

Scientific Reports (2024)

-

Dynamic modeling and closed-loop control design for humanoid robotic systems: Gibbs–Appell formulation and SDRE approach

Multibody System Dynamics (2024)

-

Normal variation in pelvic roll motion pattern during straight-line trot in hand in warmblood horses

Scientific Reports (2023)

-

High-density EMG, IMU, kinetic, and kinematic open-source data for comprehensive locomotion activities

Scientific Data (2023)

-

Identification of subject-specific responses to footwear during running

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.