Abstract

Alzheimer’s disease (AD) is a progressive neurodegenerative condition marked by a decline in cognitive functions with no validated disease-modifying treatment. For timely treatment, it is critical to detect AD at an early stage, before clinical manifestation. Mild cognitive impairment (MCI) is an intermediate stage between cognitively normal older adults and AD. To predict conversion from MCI to probable AD, we applied a deep learning approach, a multimodal recurrent neural network. We developed an integrative framework that combines not only cross-sectional neuroimaging biomarkers at baseline but also longitudinal cerebrospinal fluid (CSF) and cognitive performance biomarkers obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) cohort. The proposed framework integrated longitudinal multi-domain data. Our results showed that 1) our prediction model for MCI conversion to AD yielded up to 75% accuracy (area under the curve (AUC) = 0.83) when using only a single modality of data; and 2) our prediction model achieved the best performance, with 81% accuracy (AUC = 0.86), when incorporating longitudinal multi-domain data. A multi-modal deep learning approach has the potential to identify persons at risk of developing AD who might benefit most from a clinical trial, or to serve as a stratification approach within clinical trials.

Introduction

Alzheimer’s disease (AD) is an irreversible, progressive neurodegenerative disorder characterized by abnormal accumulation of amyloid plaques and neurofibrillary tangles in the brain, causing problems with memory, thinking, and behavior. AD is the most common form of dementia and has no validated disease-modifying treatment. An estimated 5.7 million Americans are living with AD in 2018. By 2050, this number is projected to rise to nearly 14 million1. Currently available treatments only slow the progression of AD, and no treatment developed so far can cure a patient who already has AD. Thus, to enable timely treatment and delay progression, it is of fundamental importance to develop strategies for detecting AD at early stages, before clinical manifestation. As a result, the concept of mild cognitive impairment (MCI) was introduced. MCI, a prodromal form of AD, describes people who have mild symptoms of brain malfunction but can still perform everyday tasks. Patients with MCI have an increased risk of progressing to dementia1,2,3,4. Some patients with MCI convert to AD within a limited time window after baseline, while others do not. It has been reported that MCI patients progress to AD at a rate of 10% to 15% per year and that 80% of these MCI patients will have converted to AD after approximately six years of follow-up5,6. Identifying biomarkers that distinguish patients with MCI who later progress to AD (MCI converters) from those with MCI who do not progress to AD (MCI non-converters) is an ongoing topic in AD-related research.

Various machine learning methods have been applied to identify biomarkers for MCI conversion prediction and to improve prediction performance. The support vector machine (SVM) is one of the methods frequently used for solving classification problems, and many studies have applied SVMs to MCI conversion prediction7,8,9,10,11,12. Multi-task learning combined with an SVM was used to identify AD-relevant features, showing 73.9% accuracy, 68.6% sensitivity, and 73.6% specificity7. To make use of additional subjects, a domain transfer learning method that uses auxiliary samples from AD and cognitively normal older adult (CN) subjects as well as MCI subjects showed 79.4% accuracy, 84.5% sensitivity, and 72.7% specificity8. Linear discriminant analysis (LDA) based on cortical thickness data showed 63% sensitivity and 76% specificity13. Furthermore, integrating multi-modality data improves the performance of MCI conversion prediction by extracting complementary AD-related biomarkers from each modality. Cerebrospinal fluid (CSF), MRI, and cognitive performance biomarkers were combined, resulting in 68.5% accuracy, 53.4% sensitivity, and 77% specificity14,15. APOE ε4 status was also integrated along with MRI and CSF biomarkers16.

In this study, to predict MCI to AD conversion, we proposed a multimodal recurrent neural network method, a deep learning approach, based on the integration of demographic information, longitudinal CSF biomarkers, longitudinal cognitive performance, and cross-sectional neuroimaging biomarkers at baseline obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) cohort. Our proposed deep learning method can incorporate longitudinal data from multiple domains and accept variable-length longitudinal data to capture temporal features at multiple time points. In particular, non-overlapping samples as well as overlapping samples from each modality can be used to build a prediction model.

Results

Study participants

All individuals used in the analysis were participants of the Alzheimer’s Disease Neuroimaging Initiative (ADNI)17,18. The overall goal of ADNI is to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment could be combined to measure the progression of MCI and early AD. Demographic information, raw neuroimaging scan data, APOE genotype, CSF measurements, neuropsychological test scores, and diagnostic information are publicly available from the ADNI data repository (http://adni.loni.usc.edu). Informed consent was obtained for all subjects, and the study was approved by the relevant institutional review board at each data acquisition site (for up-to-date information, see http://adni.loni.usc.edu/wp-content/themes/freshnews-dev-v2/documents/policy/ADNI_Acknowledgement_List%205-29-18.pdf). All methods were performed in accordance with the relevant guidelines and regulations. In this study, a total of 1,618 ADNI participants aged 55 to 91 were used, including 415 cognitively normal older adult controls (CN), 865 MCI patients (307 MCI converters and 558 MCI non-converters), and 338 AD patients (Table 1).

We used four different types, or modalities, of data: demographic information, neuroimaging phenotypes measured by MRI, cognitive performance, and CSF measurements. Demographic information includes age, sex, years of education, and APOE ε4 status. Cognitive performance includes composite scores for executive functioning (ADNI-EF) and memory (ADNI-MEM) derived from the ADNI neuropsychological battery using item response theory, as described in detail elsewhere19. CSF biomarkers for AD include amyloid-β 1–42 peptide (Aβ1–42), total tau (t-tau), and tau phosphorylated at threonine 181 (p-tau). AD-related neuroimaging biomarkers measured by MRI include hippocampal volume and entorhinal cortical thickness.

Experimental setting

To evaluate the performance and effectiveness of our proposed longitudinal multi-modal deep learning method, we used three schemes and compared their performances (Table 2). In the experiment named “baseline”, data from four modalities at the baseline visit (cognitive performance, CSF, demographic information, and MRI) were incorporated. In “single modal”, only longitudinal cognitive performance data were used as the predictor (we also tried every other single modality, and performance with cognitive scores was the best). Finally, in the experiment marked as “proposed”, the four modalities of longitudinal data were combined and used for training the classifier. Table 3 shows summary statistics for each modality of data and the hyperparameters used for training the GRUs.

For training our models, subjects in the CN and AD groups are used as well as MCI converters (MCI-C) and MCI non-converters (MCI-NC). This approach is motivated by previous studies8,10,11,20,21,22, which use CN and AD subjects to train a classifier such as an SVM23 or locally linear embedding (LLE)24 and then apply the classifier to distinguish MCI-C from MCI-NC. In our experiment, CN and AD subjects are used as an auxiliary dataset to pre-train the classifier, and then MCI-C and MCI-NC subjects are also used for training.

We tested the classifier on MCI patients to predict conversion at Δt after baseline (6, 12, 18, and 24 months), as shown in Fig. 1. Due to the nature of our data, the sample size available for training varies with Δt (Fig. 2). For example, if AD occurs soon after the baseline visit, then we have relatively fewer training samples because we have a smaller data window to predict on. At each prediction time (Δt), we ran 5-fold cross-validation 10 times, with every fold having the same ratio of MCI-C and MCI-NC subjects. MCI samples were partitioned into 5 subsets; one subset was selected for testing, while the remaining subsets were used for training.
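The repeated, class-balanced 5-fold procedure described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors' implementation; the function names, the round-robin assignment, and the toy label counts are assumptions made here.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_splits=5, seed=0):
    """Split sample indices into n_splits folds with near-equal class ratios."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(n_splits)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold receives its share.
        for pos, idx in enumerate(indices):
            folds[pos % n_splits].append(idx)
    return folds


def repeated_stratified_cv(labels, n_splits=5, n_repeats=10, seed=0):
    """Yield (repeat, fold, train_indices, test_indices) for every split."""
    for repeat in range(n_repeats):
        folds = stratified_folds(labels, n_splits, seed + repeat)
        for k, test in enumerate(folds):
            train = [i for j, fold in enumerate(folds) if j != k for i in fold]
            yield repeat, k, sorted(train), sorted(test)


# Hypothetical labels: 1 = MCI-C, 0 = MCI-NC (toy counts, not the ADNI data).
labels = [1] * 30 + [0] * 70
splits = list(repeated_stratified_cv(labels))
```

With these toy labels, each of the 50 splits holds out 20 subjects while preserving the 30:70 class ratio, and each repeat uses a different shuffle.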

An example using longitudinal data for MCI conversion prediction. In contrast to the experiment with baseline visit data, longitudinal data from individuals in all stages (CN, MCI, and AD) were used for training a classifier. Then, a portion of the longitudinal data was fed to the classifier to predict AD progression after Δt.

Comparison of prediction of MCI to AD conversion using cross-sectional data at baseline and longitudinal data

To evaluate the advantage of using longitudinal data, we first compared the performances of two schemes: “baseline” and “proposed” (Figs 3 and 4). Intuitively, data from multiple time points carry more information than data from a single time point. Thus, the GRU analyzes the temporal changes in cognitive performance and CSF to extract features (which are not contained in baseline visit data) for correct MCI conversion prediction. As shown in Table 4(a,b), the prediction model based on longitudinal data performs better than the model using only cross-sectional data at baseline. In particular, sensitivity is an important measure for prediction tasks in which identifying true positives is crucial25. In the prediction of MCI conversion, a classifier with a higher true positive rate is more useful for timely treatment.

Comparison of prediction of MCI to AD conversion using single modal and multimodal data

To evaluate the effectiveness of multimodal data integration, we compared the performances of the “proposed” and “single modal” experiments. Figure 3 shows the accuracies of “proposed” and of the models using a single modality of data. We omitted the accuracy of the model with demographic data because its prediction performance was too low. The model using cognitive performance was the most accurate among the single-modality models. Even though the sample size for neuroimaging data was larger than those for cognitive performance and CSF biomarkers (Fig. 3), the model with neuroimaging data was less accurate. This is because cognitive performance data are longitudinal and therefore provide records closer in time to the MCI conversion point. However, the model with cognitive performance shows extremely high variance in sensitivity when predicting at 18 and 24 months. Thus, the model with cognitive performance alone is not a stable predictor over long prediction periods, whereas integrating other biomarkers, as in “proposed”, can alleviate the high variance.

Discussion

We proposed an integrative approach for the prediction of MCI to AD conversion using a deep learning approach, more specifically, a multi-modal recurrent neural network. Our method takes advantage of the longitudinal and multi-modal nature of the available data to discover nonlinear patterns associated with MCI progression. To evaluate the advantages of our proposed method, we compared the performance of three schemes: “baseline”, “single modal”, and “proposed”. As observed in Fig. 4, “baseline” and “single modal” with cognitive test biomarkers show similar performance across prediction periods. Using longitudinal data and combining multimodal data are each effective ways of increasing predictive power; thus, it seems natural that combining longitudinal multimodal data (“proposed”) shows the best performance. In Table 5, as the prediction period lengthens, the reliability of the performance improvement decreases due to the lack of positive samples. However, the specificity of the proposed model was consistently higher than that of the competing methods. In addition, the prediction results of our model were compared to those of previous studies using machine learning approaches (Table 6). Our method showed comparable prediction ability even though we had a highly unbalanced ratio of positive and negative samples. Specifically, the sensitivity of our model is higher, while its specificity is lower. Moreover, the balanced accuracy26, a measure of accuracy that accounts for both sensitivity and specificity, is 0.82 for our model and 0.81 for the model in27.
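To make the role of balanced accuracy under class imbalance concrete, the sketch below computes it as the mean of sensitivity and specificity. The function names and the toy labels are illustrative assumptions, not the study's data, and the code assumes both classes are present in the true labels.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels, where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity; assumes both classes occur in y_true."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Toy example: 4 converters, 16 non-converters, classifier predicts "no conversion" for all.
y_true = [1] * 4 + [0] * 16
y_pred = [0] * 20
plain_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
balanced = balanced_accuracy(y_true, y_pred)
```

In this toy case plain accuracy is 0.8 even though no converter is detected, whereas balanced accuracy is 0.5, which is why the latter is the more informative summary when positives are scarce.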

The biggest advantage of our approach is that irregular longitudinal data can be used. One of the major problems when dealing with longitudinal data is that a preprocessing step is required to handle variable-length sequential data and missing values. In previous studies, a fixed number of time points was obtained by taking data that fell within a certain time window. In addition, a separate feature extraction phase is required to produce a fixed-size feature representation. In the first training step of our method, separate GRU components perform an encoding process in which longitudinal data are transformed into a vector containing AD-sensitive features. Thanks to the structure of the GRU, our approach can accept data of any irregular length as input without preprocessing.

In addition, our method can make full use of the available subjects from each modality for training our classifier. This is a major advantage in the face of data scarcity. As seen in Fig. 2, the number of subjects with CSF data is the smallest in the overlapping sample. Traditional approaches can use only the overlapping samples, while non-overlapping samples are discarded. In our case, non-overlapping samples contribute to training the individual GRU component they belong to, for better representation learning. Furthermore, additional modalities of data are easily integrated into the model. In contrast to kernel-based integration, the concatenation-based integration method can incorporate other domains of data, such as multi-modal neuroimaging and genomic data, without any prior knowledge. Thus, we will next integrate multi-modal neuroimaging and genomic data to learn features that might be useful in predicting early MCI to AD conversion.

Although it has the strengths described above, our approach has some limitations. In the first training step, the input of each modality is transformed into a feature vector that is optimized for MCI conversion prediction using that single modality only. Thus, features that are irrelevant to AD progression with respect to the single modality will be filtered out. However, if there are features that cannot be extracted from a single modality and can only be explained by a combination of multiple modalities of data, then those are also likely to be filtered out. This is because the parameters in the GRUs are not updated against the final prediction result. In other words, parameter optimization in the second training step does not affect the parameters of each GRU used for feature extraction; thus, each GRU cannot learn from the final prediction based on the combined features. To solve this problem, we will link the GRUs to the logistic regression at the second step so that each GRU learns a feature representation from multiple modalities as well as from its single modality. In addition, we plan to modify the structure of our model so that individual GRU components can extract integrative features. We are currently investigating this possibility as a sequel to this work.

Methods

Recurrent Neural Network

A recurrent neural network (RNN) is a class of deep learning architecture designed for sequential data. In natural language processing (NLP), speech recognition, and anomaly detection in time series, RNNs are widely used for analyzing sequences of words and time series data28. The advantage of applying an RNN is that variable-length sequences can be processed to exploit temporal patterns hidden in the given sequence. In the sentiment analysis task, for example, the goal is to classify the sentiment (good or bad) of a given sentence. The classifier needs to take a sentence (a sequence of words) as input, understand its context, and return the correct sentiment as output29. For the prediction task of detecting the initial diagnosis of heart failure in30, an RNN takes time series of electronic health records (EHRs) from a 12 to 18 month observation window. In such cases, where variable-length input must be handled, an RNN is an appropriate candidate.

An RNN processes one element of an input sequence at a time and updates its memory state that implicitly contains information about the history of all the past elements of the sequence31. The hidden state is represented as a Euclidean vector (i.e., a sequence of real numbers) and is updated recursively from the input at the given step and the previous value of the hidden state (Fig. 5).

Illustration of a recurrent neural network. The RNN is composed of an input, a memory state, and an output, each of which has a weight parameter to be learned for a given task. The memory state (blue box) takes the input and computes the output based on the memory state from the previous step and the current input (left). Since the RNN has a feedback loop, variable-length input and output sequences can be represented as an “unfolded” sequence (right).

Suppose we have N subjects, each of which has a sequence \(\{{x}_{1}^{n},{x}_{2}^{n},\ldots ,{x}_{t}^{n},\ldots ,{x}_{T}^{n}\}\), where \({x}_{t}^{n}\) is the data record of the n-th sample at the t-th element of the sequence and T is the length of the sequence. The corresponding sequence of outputs is recursively computed as:
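A form consistent with the symbol definitions that follow, written here as a reconstruction that assumes a standard RNN recursion without bias terms (the sample index n is dropped for readability), is:

\[
{h}_{t}=\tanh ({W}_{h}{h}_{t-1}+{W}_{x}{x}_{t}) \qquad (1)
\]

\[
{\hat{y}}_{t}=\sigma ({W}_{y}{h}_{t}) \qquad (2)
\]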

\({W}_{h}\), \({W}_{x}\), and \({W}_{y}\) are the weight matrices that extract task-specific features from the previous memory state \({h}_{t-1}\), the t-th input \({x}_{t}\), and the current memory state \({h}_{t}\), respectively. As can be seen from the equations, the memory states \({h}_{t}\) and the inputs \({x}_{t}\) are all represented as Euclidean vectors as well. Therefore, the dynamics of the entire RNN are captured by a sequence of matrix-vector multiplications, followed by elementwise non-linearity applications. The tanh function is a non-linear activation function taking the form of \(\tanh (x)=\frac{2}{1+{e}^{-2x}}-1\). The role of the non-linear activation function is to endow the RNN with higher representational power. \({\hat{y}}_{t}\) is the predicted output resulting from the computation of the network. This final result is computed by the function σ, which is known as the softmax function. The role of the softmax function is to turn an arbitrary vector into a probability vector via the following operation:
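Assuming the standard softmax definition, with j running over all elements of u, this operation is:

\[
\sigma {(u)}_{i}=\frac{{e}^{{u}_{i}}}{{\sum }_{j}{e}^{{u}_{j}}}
\]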

where \({u}_{i}\) is the i-th element of the vector u. In equation (2), we abuse notation to express elementwise application of the above expression.
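The recursion described in this section can be illustrated with a small forward pass. The sketch below assumes the standard form in which the memory state is the tanh of a weighted sum of the previous state and the current input, a zero initial state, and no bias terms; the weights and inputs are toy values chosen here, and the helper names are hypothetical.

```python
import math


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(u):
    """Turn an arbitrary vector into a probability vector."""
    m = max(u)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(x - m) for x in u]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(xs, W_h, W_x, W_y):
    """Assumed recursion: h_t = tanh(W_h h_{t-1} + W_x x_t); returns softmax(W_y h_T)."""
    h = [0.0] * len(W_h)  # assumed zero initial memory state
    for x in xs:
        pre = [a + b for a, b in zip(matvec(W_h, h), matvec(W_x, x))]
        h = [math.tanh(p) for p in pre]
    return softmax(matvec(W_y, h))


# Toy weights (hypothetical): 2 memory units, 3 input features, 2 output classes.
W_h = [[0.5, -0.1], [0.2, 0.3]]
W_x = [[0.1, 0.0, -0.2], [0.3, 0.1, 0.0]]
W_y = [[1.0, -1.0], [-1.0, 1.0]]

short_seq = [[1.0, 0.5, -0.3]]
long_seq = [[1.0, 0.5, -0.3], [0.8, 0.4, -0.1], [0.2, 0.1, 0.0]]
p_short = rnn_forward(short_seq, W_h, W_x, W_y)
p_long = rnn_forward(long_seq, W_h, W_x, W_y)
```

Both calls return a probability vector of the same size even though the input sequences have different lengths, which is the property that makes an RNN suitable for irregular longitudinal records.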

In our model, the last output sequence provided by the RNN is treated as the probability vector for classification, and the cross-entropy loss function (equation (3)) is used to quantify how “far away” our n-th prediction is from the n-th ground truth label yn. That is, we choose the optimal parameters \({{\rm{W}}}_{{\rm{h}}}^{\ast },{{\rm{W}}}_{{\rm{x}}}^{\ast },{{\rm{W}}}_{{\rm{y}}}^{\ast }\) that minimize the cross-entropy loss of the given data (equation (4)). The algorithm we use to optimize the parameters is Backpropagation Through Time (BPTT)32, which updates the weights in the RNN to minimize the given loss function.
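Under the assumption of one-hot labels over classes indexed by c (a notational choice made here, with \({\hat{y}}^{n}\) denoting the final output for the n-th subject), the cross-entropy loss and the optimization problem referred to as equations (3) and (4) can be written as:

\[
L({y}^{n},{\hat{y}}^{n})=-\sum _{c}{y}_{c}^{n}\,\log {\hat{y}}_{c}^{n} \qquad (3)
\]

\[
{{\rm{W}}}_{{\rm{h}}}^{\ast },{{\rm{W}}}_{{\rm{x}}}^{\ast },{{\rm{W}}}_{{\rm{y}}}^{\ast }=\mathop{{\rm{arg}}\,{\rm{min}}}\limits_{{{\rm{W}}}_{{\rm{h}}},{{\rm{W}}}_{{\rm{x}}},{{\rm{W}}}_{{\rm{y}}}}\sum _{n=1}^{N}L({y}^{n},{\hat{y}}^{n}) \qquad (4)
\]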

However, when the task requires long sequences of input to be processed, training an RNN is difficult33. This is called the long-term dependency problem. Variants of RNN such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) have been developed and practically used to solve this problem34,35. In the proposed model, we use GRU for each modality of data to process multiple time points of the input. The detailed structure of GRU is described in the supplementary.
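For orientation, a commonly used formulation of the GRU update, following Cho et al.34, is given below. It is not necessarily the exact variant described in the supplementary material; the symbols \({z}_{t}\) (update gate), \({r}_{t}\) (reset gate), \({\tilde{h}}_{t}\) (candidate state), the gate weight matrices, and sigm (the logistic sigmoid, written this way to avoid a clash with the softmax σ) are notation introduced here, and ⊙ denotes elementwise multiplication.

\[
\begin{aligned}
{z}_{t} &= {\rm{sigm}}({W}_{z}{x}_{t}+{U}_{z}{h}_{t-1}),\\
{r}_{t} &= {\rm{sigm}}({W}_{r}{x}_{t}+{U}_{r}{h}_{t-1}),\\
{\tilde{h}}_{t} &= \tanh (W{x}_{t}+U({r}_{t}\odot {h}_{t-1})),\\
{h}_{t} &= {z}_{t}\odot {h}_{t-1}+(1-{z}_{t})\odot {\tilde{h}}_{t}.
\end{aligned}
\]

The gates let the network decide how much of the previous memory state to keep at each step, which is what mitigates the long-term dependency problem.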

Multi-modal GRU for MCI conversion prediction

Our problem can be considered a sequential data classification problem. The classification objective is to predict whether an individual with MCI at baseline converts to AD or not using sequence data, which consist of four modalities: cognitive performance, CSF, and MRI biomarkers as well as demographic information. Even though demographic data and MRI biomarkers are not longitudinal, we treat them as length-one sequences.

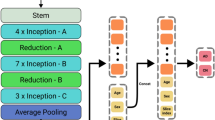

To apply a GRU-based classification algorithm to our problem, we need to design a model that can incorporate the four modalities of data. The main idea of our model is to build a separate GRU feature extractor for each modality and to integrate the four extracted feature vectors at the end. Our model comprises two training steps: (1) learning a single GRU for each modality of data, and (2) learning the integrative feature representation to make the final prediction. In the first training step, a single GRU is trained separately for each modality, with the classification objective of predicting conversion from MCI to AD. Using GRUs is essential for taking longitudinal data and transforming them into a fixed-size vector. This is quite similar to the approach proposed in36, which maps the input sequence into a fixed-length representation. In the second step, MCI conversion is predicted based on the four vectors produced by the GRU components. To merge the four vectors, we select concatenation-based data integration, which is conceptually the simplest method of integrating multiple sources of data into a single vector37. For the final prediction, l1-regularized logistic regression38 is used for the classification between MCI-C and MCI-NC. An overview of our proposed method is illustrated in Fig. 6.
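The second training step can be pictured with the short sketch below: the per-modality feature vectors are concatenated, and an l1-regularized logistic regression objective is evaluated on the combined vector. This is an illustrative sketch only; the feature values, vector sizes, function names, and the penalty weight `lam` are hypothetical, and no optimizer is shown.

```python
import math


def concatenate(feature_vectors):
    """Join per-modality feature vectors into one flat vector."""
    return [value for vec in feature_vectors for value in vec]


def predict_proba(weights, bias, features):
    """Logistic regression probability of the positive class (MCI-C)."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def l1_logistic_loss(weights, bias, X, y, lam):
    """Mean negative log-likelihood plus an l1 penalty on the weights."""
    nll = 0.0
    for features, label in zip(X, y):
        p = predict_proba(weights, bias, features)
        nll -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return nll / len(X) + lam * sum(abs(w) for w in weights)


# Hypothetical fixed-size outputs of the four step-one GRUs for one subject.
cognitive, csf = [0.2, -0.4], [0.1, 0.3]
mri, demographic = [-0.5, 0.0], [0.7, 0.1]
combined = concatenate([cognitive, csf, mri, demographic])

weights = [0.0] * len(combined)  # untrained starting point
loss = l1_logistic_loss(weights, 0.0, [combined], [1], lam=0.1)
```

The l1 penalty pushes the weights of uninformative concatenated features toward zero, so the final classifier effectively selects which modality features to rely on.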

Overview of the proposed method. Our proposed method contains multiple GRU components, each of which accepts one modality of the dataset. In the first training step (blue dashed rectangle), each GRU component takes either time series or non-time series data and produces a fixed-size feature vector. The vectors are then concatenated to form the input for the final prediction in the second training step (red dashed rectangle).

Conclusion

Here, we proposed a multi-modal deep learning approach to study the prediction of MCI to AD conversion using longitudinal cognitive performance and CSF biomarkers as well as cross-sectional neuroimaging and demographic data at baseline. We applied multiple GRUs to make use of longitudinal multi-domain data and of all subjects with data in each modality. Our results showed that we achieved better prediction accuracy for MCI to AD conversion by incorporating longitudinal multi-domain data. A multi-modal deep learning approach has the potential to identify persons at risk of developing AD who might benefit most from a clinical trial, or to serve as a stratification approach within clinical trials.

Data Availability

Demographic information, neuroimaging data, APOE genotype, CSF measurements, neuropsychological test scores, and diagnostic information are publicly available from the ADNI data repository (http://adni.loni.usc.edu).

Change history

01 August 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41598-023-39138-x

References

Alzheimer’s Association. 2015 Alzheimer’s disease facts and figures. Alzheimers Dement 11, 332–384 (2015).

Albert, M. S. et al. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement 7, 270–279, https://doi.org/10.1016/j.jalz.2011.03.008 (2011).

Jack, C. R. Jr. et al. Introduction to the recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement 7, 257–262, https://doi.org/10.1016/j.jalz.2011.03.004 (2011).

Sperling, R. A. et al. Toward defining the preclinical stages of Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement 7, 280–292, https://doi.org/10.1016/j.jalz.2011.03.003 (2011).

Petersen, R. C. et al. Mild cognitive impairment: clinical characterization and outcome. Archives of neurology 56, 303–308 (1999).

Tábuas-Pereira, M. et al. Prognosis of Early-Onset vs. Late-Onset Mild Cognitive Impairment: Comparison of Conversion Rates and Its Predictors. Geriatrics 1, 11 (2016).

Zhang, D., Shen, D. & Alzheimer’s Disease Neuroimaging, I. Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer’s disease. Neuroimage 59, 895–907, https://doi.org/10.1016/j.neuroimage.2011.09.069 (2012).

Cheng, B., Liu, M., Zhang, D., Munsell, B. C. & Shen, D. Domain Transfer Learning for MCI Conversion Prediction. IEEE Trans Biomed Eng 62, 1805–1817, https://doi.org/10.1109/TBME.2015.2404809 (2015).

Zhang, D., Shen, D. & Alzheimer’s Disease Neuroimaging, I. Predicting future clinical changes of MCI patients using longitudinal and multimodal biomarkers. PloS one 7, e33182 (2012).

Nho, K. et al. Automatic Prediction of Conversion from Mild Cognitive Impairment to Probable Alzheimer’s Disease using Structural Magnetic Resonance Imaging. AMIA Annu Symp Proc 2010, 542–546 (2010).

Wee, C. Y., Yap, P. T., Shen, D. & Alzheimer’s Disease Neuroimaging, I. Prediction of Alzheimer’s disease and mild cognitive impairment using cortical morphological patterns. Hum Brain Mapp 34, 3411–3425, https://doi.org/10.1002/hbm.22156 (2013).

Wolz, R. et al. Multi-method analysis of MRI images in early diagnostics of Alzheimer’s disease. PLoS One 6, e25446, https://doi.org/10.1371/journal.pone.0025446 (2011).

Cho, Y., Seong, J. K., Jeong, Y., Shin, S. Y. & Alzheimer’s Disease Neuroimaging, I. Individual subject classification for Alzheimer’s disease based on incremental learning using a spatial frequency representation of cortical thickness data. Neuroimage 59, 2217–2230, https://doi.org/10.1016/j.neuroimage.2011.09.085 (2012).

Kim, D. et al. A Graph-Based Integration of Multimodal Brain Imaging Data for the Detection of Early Mild Cognitive Impairment (E-MCI). Multimodal Brain Image Anal (2013) 8159, 159–169, https://doi.org/10.1007/978-3-319-02126-3_16 (2013).

Ewers, M. et al. Prediction of conversion from mild cognitive impairment to Alzheimer’s disease dementia based upon biomarkers and neuropsychological test performance. Neurobiol Aging 33, 1203–1214, https://doi.org/10.1016/j.neurobiolaging.2010.10.019 (2012).

Heister, D. et al. Predicting MCI outcome with clinically available MRI and CSF biomarkers. Neurology 77, 1619–1628, https://doi.org/10.1212/WNL.0b013e3182343314 (2011).

Saykin, A. J. et al. Genetic studies of quantitative MCI and AD phenotypes in ADNI: Progress, opportunities, and plans. Alzheimer’s & dementia: the journal of the Alzheimer’s Association 11, 792–814 (2015).

Saykin, A. J. et al. Alzheimer’s Disease Neuroimaging Initiative biomarkers as quantitative phenotypes: genetics core aims, progress, and plans. Alzheimer’s & dementia: the journal of the Alzheimer’s Association 6, 265–273 (2010).

Nho, K. et al. Voxel and surface-based topography of memory and executive deficits in mild cognitive impairment and Alzheimer’s disease. Brain imaging and behavior 6, 551–567 (2012).

Falahati, F., Westman, E. & Simmons, A. Multivariate data analysis and machine learning in Alzheimer’s disease with a focus on structural magnetic resonance imaging. J Alzheimers Dis 41, 685–708, https://doi.org/10.3233/JAD-131928 (2014).

Westman, E., Aguilar, C., Muehlboeck, J. S. & Simmons, A. Regional magnetic resonance imaging measures for multivariate analysis in Alzheimer’s disease and mild cognitive impairment. Brain Topogr 26, 9–23, https://doi.org/10.1007/s10548-012-0246-x (2013).

Liu, X., Tosun, D., Weiner, M. W., Schuff, N. & Alzheimer’s Disease Neuroimaging, I. Locally linear embedding (LLE) for MRI based Alzheimer’s disease classification. Neuroimage 83, 148–157, https://doi.org/10.1016/j.neuroimage.2013.06.033 (2013).

Cortes, C. & Vapnik, V. Support-vector networks. Machine learning 20, 273–297 (1995).

Roweis, S. T. & Saul, L. K. Nonlinear dimensionality reduction by locally linear embedding. science 290, 2323–2326 (2000).

Huang, M. et al. Longitudinal measurement and hierarchical classification framework for the prediction of Alzheimer’s disease. Scientific reports 7, 39880 (2017).

Brodersen, K. H., Ong, C. S., Stephan, K. E. & Buhmann, J. M. In Pattern recognition (ICPR), 2010 20th international conference on. 3121–3124 (IEEE).

Lu, D., Popuri, K., Ding, G. W., Balachandar, R. & Beg, M. F. Multimodal and Multiscale Deep Neural Networks for the Early Diagnosis of Alzheimer’s Disease using structural MR and FDG-PET images. Scientific reports 8, 5697 (2018).

Deng, L., Hinton, G. & Kingsbury, B. In Acoustics, Speech and Signal Processing (ICASSP), 2013 IEEE International Conference on. 8599–8603 (IEEE).

Tang, D., Qin, B. & Liu, T. In Proceedings of the 2015 conference on empirical methods in natural language processing. 1422–1432.

Choi, E., Schuetz, A., Stewart, W. F. & Sun, J. Using recurrent neural network models for early detection of heart failure onset. Journal of the American Medical Informatics Association 24, 361–370, https://doi.org/10.1093/jamia/ocw112 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444, https://doi.org/10.1038/nature14539 (2015).

Guo, J. Backpropagation through time. Unpubl. ms., Harbin Institute of Technology (2013).

Bengio, Y., Simard, P. & Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans Neural Netw 5, 157–166, https://doi.org/10.1109/72.279181 (1994).

Cho, K. et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078 (2014).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural computation 9, 1735–1780 (1997).

Srivastava, N., Mansimov, E., Salakhudinov, R. Unsupervised learning of video representations using LSTMs. In: 2015 International Conference on Machine Learning. 843–852 (2015).

Ritchie, M. D., Holzinger, E. R., Li, R., Pendergrass, S. A. & Kim, D. Methods of integrating data to uncover genotype-phenotype interactions. Nature reviews. Genetics 16, 85–97, https://doi.org/10.1038/nrg3868 (2015).

Tibshirani, R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), 267–288 (1996).

Young, J. et al. Accurate multimodal probabilistic prediction of conversion to Alzheimer’s disease in patients with mild cognitive impairment. Neuroimage Clin 2, 735–745, https://doi.org/10.1016/j.nicl.2013.05.004 (2013).

Acknowledgements

Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at http://adni.loni.usc.edu/wp-content/themes/freshnews-dev-v2/documents/policy/ADNI_Acknowledgement_List%205-29-18.pdf. Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; BioClinica, Inc.; Biogen Idec Inc.; Bristol-Myers Squibb Company; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; GE Healthcare; Innogenetics, N.V.; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Medpace, Inc.; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Synarc Inc.; and Takeda Pharmaceutical Company. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Disease Cooperative Study at the University of California, San Diego.
ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California. Samples from the National Cell Repository for AD (NCRAD), which receives government support under a cooperative agreement grant (U24 AG21886) awarded by the National Institute on Aging (NIA), were used in this study. Additional support for data analysis was provided by NLM R01 LM012535, NIA R03 AG054936, and the Pennsylvania Department of Health (#SAP 4100070267). The Department specifically disclaims responsibility for any analyses, interpretations or conclusions. This research was also supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education [NRF-2016R1D1A1B03933875].

Author information

Contributions

The study was conceived by Kim, Nho, Kang, and Sohn. Experiments were designed and performed by all authors. The manuscript was written by Lee and Nho. All authors revised the manuscript and approved the final version prior to submission.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

*A comprehensive list of consortium members appears at the end of the paper

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, G., Nho, K., Kang, B. et al. Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Sci Rep 9, 1952 (2019). https://doi.org/10.1038/s41598-018-37769-z

DOI: https://doi.org/10.1038/s41598-018-37769-z
