Abstract

Prior research using static facial stimuli (photographs) has identified diagnostic face regions (i.e., functional for recognition) of emotional expressions. In the current study, we aimed to determine attentional orienting to, engagement with, and the time course of fixation on diagnostic regions. To this end, we assessed the eye movements of observers inspecting dynamic expressions that changed from a neutral to an emotional face. A new stimulus set (KDEF-dyn) was developed, which comprises 240 video-clips of 40 human models portraying six basic emotions (happy, sad, angry, fearful, disgusted, and surprised). For validation purposes, 72 observers categorized the expressions while gaze behavior was measured (probability of first fixation, entry time, gaze duration, and number of fixations). Specific visual scanpath profiles characterized each emotional expression: The eye region was looked at earlier and longer for angry and sad faces; the mouth region, for happy faces; and the nose/cheek region, for disgusted faces; whereas the eye and mouth regions attracted attention in a more balanced manner for surprise and fear. These profiles reflected enhanced selective attention to expression-specific diagnostic face regions. The KDEF-dyn stimuli and the validation data will be available to the scientific community as a useful tool for research on emotional facial expression processing.


Introduction

Facial expressions are assumed to convey information about a person’s current feelings and motives, intentions and action tendencies. Most research on expression recognition has been conducted under a categorical view, using six basic expressions: happiness, anger, sadness, fear, disgust and surprise1 (for a review, see2). Emotion recognition relies on expression-specific diagnostic (i.e., distinctive) features, which are necessary or sufficient for recognizing the respective emotion: Anger and sadness are more recognizable from the eye region (e.g., frowning), happiness and disgust are more recognizable from the mouth region (e.g., smiling), and recognition of fear and surprise depends on both regions3,4,5,6,7,8. In the current study, we aimed to determine the profile of overt attentional orienting to and engagement with such expression-diagnostic features; that is, whether, when, and for how long they selectively attract eye fixations from observers. Importantly, we addressed this issue for dynamic facial expressions, thus extending typical approaches using photographic stimuli.

Prior eyetracking research using photographs of static expressions has provided inconclusive evidence regarding the pattern and role of selective visual attention to facial features. First, during expression recognition, gaze allocation is often biased towards diagnostic face regions (e.g., the eye region receives more attention in sad and angry faces, whereas the mouth region receives more attention in happy and disgusted faces3,8,9,10,11). However, in other studies, the proportion of fixation on the different face areas was modulated by expression less consistently, or not at all12,13,14,15. Second, increased visual attention to diagnostic facial features is correlated with improved recognition performance16. Looking at the mouth region contributes to recognition of happiness3,8 and disgust8, and looking at the eye/brow area contributes to recognition of sadness3 and anger8. However, results are less consistent for other emotions, and the role of fixation on diagnostic regions depends on expressive intensity: Recognition of subtle emotions is facilitated by fixations on the eyes (with a lesser contribution from the mouth), whereas recognition of extreme emotions is less dependent on fixations14.

However, in daily social interaction, facial expressions are generally dynamic. In addition, research has shown that motion benefits facial affect recognition (see17,18,19). Consistently, relative to static expressions, the viewing of dynamic expressions enhances brain activity in regions associated with processing of socially relevant (superior temporal sulci) and emotion-relevant (amygdala) information20,21, which might explain the dynamic expression recognition advantage. Accordingly, it is important to investigate oculomotor behavior during the recognition of this type of expression. To our knowledge, only a few studies have measured fixation patterns during dynamic facial expression processing, with non-convergent results. Lischke et al.22 reported an enhanced gaze duration bias towards the eye region of angry, sad, and fearful faces, while gaze duration was longer for the mouth region of happy faces (although differences were not statistically analyzed). In contrast, in the Blais, Fiset, Roy, Saumure-Régimbald, and Gosselin23 study, fixation patterns did not differ across six basic expressions and were not linked to a differential use of facial features during recognition.

It is, however, possible that the lack of fixation differences across expressions in the Blais et al.23 study was due to the use of (a) a short stimulus display (500 ms), thereby limiting the number of fixations (two fixations per trial); and (b) a small stimulus size (width: 5.72°), as the eyes and mouth were close (1.7° and 2.1°, respectively) to the center of the face (the initial fixation location), and thus they could be seen in parafoveal vision (which probably curtailed saccades). If so, such stimulus conditions might have reduced the sensitivity of measurement. Yet, it must be noted that, in the absence of differences as a function of expression, fixations did vary as a function of display mode, with more fixations on the left eye and the mouth in the static than in the dynamic condition23. To clarify this issue, first, we used longer stimulus displays (1,033 ms), thus approximating the typical duration of expression unfolding for most basic emotions19,24. Second, we used larger face stimuli (8.8° width × 11.6° height, at an 80-cm viewing distance), which approximates the size of a real face (i.e., 13.8 × 18.5 cm, viewed from 1 m). In fact, in the Lischke et al.22 study (where fixation differences did occur as a function of expression), the stimulus display was longer (800 ms) and the size was larger (17° × 23.6°) than in the Blais et al.23 study.
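The size comparison above rests on the standard visual-angle relation, θ = 2·atan(size / (2·distance)). The sketch below applies that formula to the figures given in the text, converting the stimulus angles into on-screen centimetres and the real-face dimensions into degrees; it is a worked check only, and the helper names are illustrative rather than part of any published toolkit.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (deg) subtended by an object of size_cm, centred on the
    line of sight at distance_cm: theta = 2 * atan(size / (2 * distance))."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse of visual_angle_deg: physical size (cm) that subtends angle_deg."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

# On-screen face stimulus: 8.8 deg (width) x 11.6 deg (height) at 80 cm.
face_w_cm = size_for_angle_cm(8.8, 80)
face_h_cm = size_for_angle_cm(11.6, 80)
# Real face of 13.8 x 18.5 cm viewed from 1 m.
real_w_deg = visual_angle_deg(13.8, 100)
real_h_deg = visual_angle_deg(18.5, 100)

print(f"stimulus: {face_w_cm:.1f} x {face_h_cm:.1f} cm on screen")  # 12.3 x 16.3 cm
print(f"real face: {real_w_deg:.1f} x {real_h_deg:.1f} deg")        # 7.9 x 10.6 deg
```

The stimulus is therefore of the same order as a real face at a conversational distance (slightly larger in angular terms), consistent with the approximation claimed in the text.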

An additional contribution of the current study involves the collection of norming eyetracking data for each of 240 video-clip stimuli that will be available as a new dynamic expression stimulus set (KDEF-dyn) for other researchers. A number of dynamic expression databases have been developed (for a review, see25). To our knowledge, however, eyetracking measures have not been obtained for any of them. Thus, we make a contribution by devising a facial expression database for which eye movements and fixations are assessed while observers scan faces during emotional expression categorization. The current approach will provide information about the time course of selective attention to face regions, in terms of both orienting (as measured by the probabilities of entry and of first fixation on each region) and engagement (as indicated by gaze duration and number of fixations). If observers move their eyes to face regions that maximize performance in determining the emotional state of a face26, then regions with expression-specific diagnostic features should receive selective attention, in the form of earlier orienting or longer engagement, relative to other regions. Thus, in a confirmatory approach, we predict enhanced attention to the eye region of angry and sad faces, to the mouth region of happy and disgusted faces, and a more balanced attention to the eyes and mouth of fearful and surprised faces. In an exploratory approach, we aim to examine how each attentional component, i.e., orienting and engagement, is affected.

We used a dynamic version (KDEF-dyn) of the original (static) Karolinska Directed Emotional Faces (KDEF) database27. The photographic KDEF stimuli have been examined in large norming studies28,29, and widely employed in behavioral30,31,32 and neurophysiological33,34,35 research (according to Google Scholar, the KDEF has been cited in over 2,000 publications). We built dynamic expressions by applying morphing animation to the KDEF photographs, whereby a neutral face changed towards a full-blown emotional face, aiming to mimic real-life expressions and the average natural speed of emotional expression unfolding24,36. This approach provides fine-grained control and standardization of duration, speed, and intensity. Further, dynamically morphed facial expression stimuli have often been employed in behavioral18,24,37,38 and neurophysiological39,40,41,42 research. Although this type of expression may not convey the same naturalness as online video recordings, some studies indicate that natural expressions unfold in a uniform and ballistic way43,44, thus actually sharing properties with morphed dynamic expressions.

Method

Participants

Seventy-two university undergraduates (40 female; 32 male; aged 18 to 30 years, M = 21.3) from different courses participated for course credit or payment, after providing written informed consent. A power calculation using G*Power (version 3.1.9.2)45 showed that 42 participants would be sufficient to detect a medium effect size (Cohen’s d = 0.60) at α = 0.05, with a power of 0.98, in an a priori analysis for a repeated-measures ANOVA with within-subjects factors (type of expression and face region). As this was a norming study of stimulus materials, a larger participant sample (i.e., 72) was used to obtain stable and representative mean scores. The study was approved by the University of La Laguna ethics committee (CEIBA, protocol number 2017–0227), and conducted in accordance with the WMA Declaration of Helsinki 2008.

Stimuli

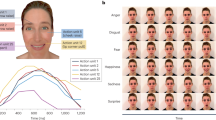

The color photographs of 40 people (20 female; 20 male) from the KDEF set27, each displaying six basic expressions (happiness, sadness, anger, fear, disgust, and surprise), were used (see the KDEF identities in Supplemental Datasets S1A and S1B). For the current study, 240 dynamic video-clip versions (1,033 ms duration) of the original photographs were constructed. The face stimuli were subjected to morphing by means of FantaMorph© software (v. 5.4.2, Abrosoft, Beijing, China). For each expression and poser, we created a sequence of 31 frames (33.33 ms each), with intensity increasing at a rate of 30 frames per second, starting with a neutral face as the first frame (frame 0; original KDEF), and ending with the peak of an emotional face (happy, sad, etc.) in the last frame (frame 30; original KDEF). A similar procedure and display duration have been used in prior research19,46,47. The stimuli and the norming data are available at http://kdef.se/versions.html (KDEF-dyn II).
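The frame timing described above can be made explicit with a short sketch. It assumes (this is not stated in the text) that morph intensity rises linearly from frame 0 to frame 30, and it uses a plain pixel cross-dissolve purely for illustration: FantaMorph also warps facial geometry between feature points, which this hypothetical `cross_dissolve` helper does not attempt.

```python
FPS = 30        # playback rate stated in the Method
N_FRAMES = 31   # frame 0 (neutral) ... frame 30 (emotional peak)

def frame_schedule(n_frames: int = N_FRAMES, fps: int = FPS):
    """List of (frame index, onset in ms, morph intensity in [0, 1]).
    ASSUMPTION: intensity rises linearly from the first to the last frame."""
    last = n_frames - 1
    return [(i, i * 1000.0 / fps, i / last) for i in range(n_frames)]

def clip_duration_ms(n_frames: int = N_FRAMES, fps: int = FPS) -> float:
    """Each frame stays on screen for 1000/fps ms, including the last one."""
    return n_frames * 1000.0 / fps

def cross_dissolve(neutral, peak, intensity):
    """Pixel-wise blend of two equal-length grayscale pixel lists.
    SIMPLIFICATION: real morphing also warps face shape, not just pixel values."""
    if len(neutral) != len(peak):
        raise ValueError("images must have the same number of pixels")
    return [(1 - intensity) * a + intensity * b for a, b in zip(neutral, peak)]

schedule = frame_schedule()
print(len(schedule), round(clip_duration_ms()))  # 31 1033
print(schedule[15])                               # (15, 500.0, 0.5)
```

Under these assumptions, the 31st frame appears at 1,000 ms and remains visible for one further frame period, which is where the 1,033-ms clip duration comes from.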

Procedure

All 72 participants were presented with all 240 video-clips (40 posers × 6 expressions) in six blocks of 40 trials each. Block order was counterbalanced, and trial order and type of expression were randomized for each participant. The stimuli were displayed on a computer screen by means of SMI Experiment Center™ 3.6 software (SensoMotoric Instruments GmbH, Teltow, Germany). Participants were asked to indicate which of the six basic expressions was shown on each trial by pressing one of six keys. Twelve video-clips served as practice trials, with two new models showing each expression.
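The text does not specify how block order was counterbalanced. One common scheme for six blocks is a balanced Latin square (Williams design), sketched below as an assumption rather than a description of the authors' procedure; the within-block shuffle stands in for the per-participant randomization of trial order. All function names are hypothetical.

```python
import random

def balanced_latin_square(n):
    """Williams design for an even number of conditions n: every condition occupies
    every serial position once, and every ordered pair of neighbours occurs once."""
    if n % 2:
        raise ValueError("this construction requires an even n")
    first = [0] + [(j + 1) // 2 if j % 2 else n - j // 2 for j in range(1, n)]
    return [[(c + r) % n for c in first] for r in range(n)]

def block_order(participant_idx, n_blocks=6):
    """Cycle participants through the rows of the square (72 / 6 = 12 per row)."""
    return balanced_latin_square(n_blocks)[participant_idx % n_blocks]

def shuffled_trials(trials, seed):
    """Independent random trial order within a block, reproducible per seed."""
    order = list(trials)
    random.Random(seed).shuffle(order)
    return order

print(balanced_latin_square(6)[0])  # [0, 1, 5, 2, 4, 3]
```

With 72 participants, each of the six rows would be used exactly 12 times, so every block appears equally often in every serial position.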

The sequence of events on each trial was as follows. After an initial 500-ms central fixation cross on a screen, a video-clip showed a facial expression unfolding for 1,033 ms. The face subtended a visual angle of 11.6° (height) × 8.8° (width) at an 80-cm viewing distance. Following face offset, six small boxes appeared horizontally on the screen for responding, with each box associated with a number/label (e.g., 4: happy; 5: sad, etc.). For expression categorization, participants pressed one key (from 4 to 9) in the upper row of a standard computer keyboard with their dominant index finger. The assignment of expressions to keys was counterbalanced. The chosen response and the reaction time (measured from video-clip offset) were recorded. There was a 1,500-ms intertrial interval.

Design and measures

A within-subjects experimental design was used, with expression (happiness, sadness, anger, fear, disgust, and surprise) as a factor. As dependent variables, we measured three aspects of expression categorization performance: (a) hits, i.e., the probability that responses coincided with the displayed expression (e.g., responding “happy” when the face stimulus was intended to convey happiness); (b) reaction times (RTs) for hits; and (c) type of confusions, i.e., the probability that each target stimulus (the displayed expression) was categorized as each of the other five, non-target expressions (e.g., if the target was anger in a trial, the five non-targets were happiness, sadness, disgust, fear, and surprise).
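Because the analyses are run by items, each video-clip receives its own hit probability, mean hit RT, and confusion profile, aggregated over the participants who saw it. A minimal sketch of that scoring, assuming responses are stored as `(target, response, rt_ms)` tuples (a hypothetical layout, with made-up example values):

```python
from collections import Counter

EXPRESSIONS = ("happy", "sad", "angry", "fearful", "disgusted", "surprised")

def score_clip(trials):
    """Item-level scores for ONE video clip.

    trials: (target, response, rt_ms) tuples, one per participant who saw the clip.
    Returns (hit probability, mean RT of hits, {non-target: response probability}).
    """
    targets = {t for t, _, _ in trials}
    if len(targets) != 1:
        raise ValueError("all trials must share the same target expression")
    target = targets.pop()
    n = len(trials)
    counts = Counter(resp for _, resp, _ in trials)
    hit_rts = [rt for _, resp, rt in trials if resp == target]
    hit_rate = counts[target] / n
    mean_hit_rt = sum(hit_rts) / len(hit_rts) if hit_rts else float("nan")
    # Confusions: probability of each of the five non-target responses.
    confusions = {e: counts[e] / n for e in EXPRESSIONS if e != target}
    return hit_rate, mean_hit_rt, confusions

# Illustrative (invented) responses to one angry clip from four viewers.
trials = [("angry", "angry", 900), ("angry", "disgusted", 1200),
          ("angry", "angry", 1100), ("angry", "fearful", 1300)]
hits, rt, conf = score_clip(trials)
print(hits, rt, conf["disgusted"], conf["fearful"])  # 0.5 1000.0 0.25 0.25
```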

Eye movements were recorded by means of a 500-Hz RED system eyetracker (binocular; spatial resolution: 0.03°; gaze position accuracy: 0.4°; SensoMotoric Instruments, SMI, Teltow, Germany). The following measures were obtained: (a) the probability that the first fixation on the face (following the initial fixation on the central fixation point on the nose) landed on each of three regions of interest (see below); (b) the probability of entry into each region during the display period (entry times are also reported in Supplemental Datasets S1A but were not analyzed, because some regions were not looked at by all viewers; thus, mean entry times are informative only when the probability of entry is taken into account); (c) the number of fixations (of ≥80 ms duration) on each region; and (d) gaze duration, or total fixation time, on each region. The probabilities of first fixation and of entry assessed attentional orienting. The number of fixations and gaze duration assessed attentional engagement. In addition, to examine the time course of selective attention to face regions as the expression unfolded, we computed the proportion of gaze duration for each face region during each of 10 consecutive 100-ms intervals (i.e., from 1 to 100 ms, from 101 to 200 ms, etc.) across the 1,033-ms display (the final 33 ms were not included). Net gaze duration was obtained and analyzed after saccades and blinks were excluded. For saccade and fixation detection, we used a velocity-based algorithm with a 40°/s peak velocity threshold and an 80-ms minimum fixation duration (for details, see48).
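To make the detection parameters concrete, here is a simplified velocity-threshold (I-VT) classifier using the values from the text: 500-Hz sampling, 40°/s, and an 80-ms minimum fixation. It is a generic sketch, not SMI's event-detection implementation: it thresholds sample-to-sample velocity rather than saccade peak velocity, assumes gaze is already expressed in degrees of visual angle, and treats lost samples (`None`, e.g. blinks) as ending a fixation.

```python
import math

def detect_fixations(samples, rate_hz=500, vel_thresh_deg_s=40.0, min_fix_ms=80.0):
    """Simplified velocity-threshold (I-VT) fixation detection.

    samples: gaze positions in degrees of visual angle, one (x, y) tuple per sample,
             or None for lost samples (e.g. blinks), which end the current fixation.
    Returns a list of dicts with start_ms, end_ms, x and y (mean position).
    """
    ms_per_sample = 1000.0 / rate_hz
    fixations, run = [], []  # run: (index, (x, y)) samples of the current fixation

    def close_run():
        # Keep the candidate fixation only if it lasts at least min_fix_ms.
        if run and len(run) * ms_per_sample >= min_fix_ms:
            fixations.append({
                "start_ms": run[0][0] * ms_per_sample,
                "end_ms": (run[-1][0] + 1) * ms_per_sample,
                "x": sum(p[0] for _, p in run) / len(run),
                "y": sum(p[1] for _, p in run) / len(run),
            })
        run.clear()

    prev = None
    for i, s in enumerate(samples):
        if s is None:  # data loss: close any open fixation
            close_run()
            prev = None
            continue
        # Point-to-point velocity in deg/s: distance per sample times samples per second.
        if prev is not None and math.hypot(s[0] - prev[0], s[1] - prev[1]) * rate_hz > vel_thresh_deg_s:
            close_run()  # saccade sample: not part of any fixation
        else:
            run.append((i, s))
        prev = s
    close_run()
    return fixations
```

At 500 Hz each sample spans 2 ms, so a fixation needs at least 40 consecutive sub-threshold samples to reach the 80-ms minimum.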

Three face regions of interest were defined: eye and eyebrow (henceforth, eye region), nose/cheek (henceforth, nose), and mouth (see their sizes and shapes in Fig. 1). About 97% of total fixations occurred within these three regions (the forehead and the chin were excluded because they received only 1.2% of fixations).
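Given a list of fixations, the four region measures and the per-interval proportions can be derived as sketched below. The rectangular regions are hypothetical stand-ins in face-normalised coordinates (the actual regions in Fig. 1 are irregular shapes), the first fixation in each list is assumed to be the initial central fixation, and each interval's proportion is taken relative to the 100-ms window length, which is one plausible reading of "proportion of gaze duration".

```python
# HYPOTHETICAL rectangular ROIs (x0, y0, x1, y1) in face-normalised coordinates,
# 0..1 with y increasing downwards; the real ROIs are the shapes shown in Fig. 1.
ROIS = {
    "eyes":  (0.10, 0.25, 0.90, 0.45),
    "nose":  (0.25, 0.45, 0.75, 0.65),
    "mouth": (0.25, 0.65, 0.75, 0.85),
}

def region_of(x, y, rois=ROIS):
    for name, (x0, y0, x1, y1) in rois.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return None  # forehead, chin, or outside the face

def trial_measures(fixations, rois=ROIS):
    """fixations: (start_ms, end_ms, x, y) in temporal order; the first one is
    ASSUMED to be the initial fixation on the central point (nose)."""
    labelled = [(s, e, region_of(x, y, rois)) for s, e, x, y in fixations]
    later = labelled[1:]
    first = later[0][2] if later else None
    out = {}
    for name in rois:
        hits = [(s, e) for s, e, r in labelled if r == name]
        out[name] = {
            "first_fixation": first == name,   # orienting
            "entered": bool(hits),             # orienting
            "n_fixations": len(hits),          # engagement
            "gaze_ms": sum(e - s for s, e in hits),  # engagement
        }
    return out

def interval_proportions(fixations, rois=ROIS, n_bins=10, bin_ms=100):
    """Share of each 100-ms window spent fixating each region; saccades and blinks
    simply contribute nothing, and time after n_bins * bin_ms is ignored."""
    props = {name: [0.0] * n_bins for name in rois}
    for s, e, x, y in fixations:
        r = region_of(x, y, rois)
        if r is None:
            continue
        for b in range(n_bins):
            overlap = min(e, (b + 1) * bin_ms) - max(s, b * bin_ms)
            if overlap > 0:
                props[r][b] += overlap / bin_ms
    return props
```

Averaging these per-trial values over participants for each clip yields item scores of the kind analyzed in the Results.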

Results

Given that one major aim of the study was to obtain and provide other researchers with validation measures for each stimulus in the KDEF-dyn database, the statistical analyses were performed by items, with the 240 video-clip stimuli as the units of analysis (and scores averaged for the 72 participants). For all the following analyses, the post hoc multiple comparisons across expressions used a familywise error rate (FWER) procedure, with single step (i.e., equivalent adjustments made to each p value) Bonferroni corrections (with a p < 0.05 threshold).
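The single-step Bonferroni procedure described above can be sketched as follows. The sketch assumes, for illustration, that all 15 pairwise contrasts among the six expressions form one family. Each raw p value is multiplied by the number of tests (capped at 1) and compared with 0.05, which is equivalent to testing each raw p against 0.05/15.

```python
from itertools import combinations

EXPRESSIONS = ("happy", "sad", "angry", "fearful", "disgusted", "surprised")

def bonferroni(pvals, alpha=0.05):
    """Single-step Bonferroni: the same adjustment for every test.
    pvals: {contrast: raw p}. Returns ({contrast: adjusted p}, {contrast: significant?})."""
    m = len(pvals)
    adjusted = {k: min(1.0, p * m) for k, p in pvals.items()}
    significant = {k: adj < alpha for k, adj in adjusted.items()}
    return adjusted, significant

# ASSUMPTION: one family of all pairwise expression contrasts for a given measure.
pairs = list(combinations(EXPRESSIONS, 2))
print(len(pairs))  # 15, so each raw p is effectively tested against 0.05 / 15
```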

Analyses of expression recognition performance and confusions

For the probability of accurate responses, a one-way (6: Expression stimulus: happiness, surprise, anger, sadness, disgust, and fear) ANOVA yielded significant effects, F(5, 234) = 39.34, p < 0.001, ηp2 = 0.46. Post hoc contrasts revealed better recognition of happiness, surprise, and anger (which did not differ from one another), relative to sadness and disgust (which did not differ), which were recognized better than fear (see Table 1, Hits row). The correct response reaction times, F(5, 234) = 50.26, p < 0.001, ηp2 = 0.52, were faster for happiness than for all the other expressions, followed by surprise, followed by disgust, anger, and sadness (which did not differ from one another), and fear was recognized most slowly (see Table 1, Hit RTs row).
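With clips as the units of analysis, each one-way test compares six groups of 40 item scores, hence F(5, 234). The sketch below computes a between-items one-way ANOVA and shows the standard conversion ηp² = F·df_effect / (F·df_effect + df_error). Applied to the reported F values, it reproduces the effect sizes given for hits (0.46) and hit RTs (0.52).

```python
def one_way_anova(groups):
    """Between-items one-way ANOVA. groups: list of lists of item scores.
    Returns F, df_between, df_within, and eta squared (equal to partial eta
    squared when there is a single factor). Assumes non-zero within-group variance."""
    scores = [v for g in groups for v in g]
    n, k = len(scores), len(groups)
    grand = sum(scores) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_b, df_w = k - 1, n - k
    f = (ss_between / df_b) / (ss_within / df_w)
    return f, df_b, df_w, ss_between / (ss_between + ss_within)

def partial_eta_sq_from_f(f, df_effect, df_error):
    """Partial eta squared recovered from a reported F ratio and its df."""
    return f * df_effect / (f * df_effect + df_error)

print(round(partial_eta_sq_from_f(39.34, 5, 234), 2))  # 0.46 (hits)
print(round(partial_eta_sq_from_f(50.26, 5, 234), 2))  # 0.52 (hit RTs)
```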

A 6 (Expression stimulus) × 6 (Expression response) ANOVA on confusions yielded interactive effects, F(25, 1170) = 581.13, p < 0.001, ηp2 = 0.92, which were decomposed by one-way (6: Expression response) ANOVAs for each expression stimulus separately (see Table 1). Facial happiness, F(5, 195) = 6489.44, p < 0.001, ηp2 = 0.99, was minimally confused. Surprise, F(5, 195) = 6781.17, p < 0.001, ηp2 = 0.99, was slightly confused with fear and happiness; anger, F(5, 195) = 2231.79, p < 0.001, ηp2 = 0.98, with disgust and fear; sadness, F(5, 195) = 241.36, p < 0.001, ηp2 = 0.86, with fear and disgust; disgust, F(5, 195) = 289.88, p < 0.001, ηp2 = 0.87, with anger, sadness, and fear; and fear, F(5, 195) = 94.24, p < 0.001, ηp2 = 0.71, was confused mainly with surprise.

Analyses of eye movement measures

A 6 (Expression stimulus) ×3 (Face region: eyes, nose/cheek, and mouth) ANOVA was conducted on each eye-movement measure. The significant interactions were decomposed by means of one-way (6: Expression) ANOVAs for each region. Post hoc multiple comparisons examined how much the processing of each expression relied on a face region more than other expressions did. The critical comparisons involved contrasts across expressions for each region (which was of identical size for all the expressions), rather than across regions for each expression (as regions were different in size, thus probably affecting gaze behavior). The first fixation on the nose was removed as uninformative, given that the initial fixation point was located on this region.
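In this items-based design, expression varies between items (each clip shows one expression) and face region varies within items, so the degrees of freedom follow standard split-plot ANOVA bookkeeping. The helper below is an illustrative sketch. It reproduces the df reported for the Expression × Region analyses (5, 234; 2, 468; 10, 468), the confusion analysis (25, 1170), the per-stimulus confusion tests on 40 clips (5, 195), and the per-region time-course analyses (9, 2106; 45, 2106).

```python
def mixed_anova_df(n_items, a_levels, b_levels):
    """Degrees of freedom for a split-plot ANOVA in which factor A varies between
    items (e.g. expression) and factor B varies within items (e.g. face region)."""
    df_a = a_levels - 1
    df_between_err = n_items - a_levels        # items nested within A
    df_b = b_levels - 1
    df_within_err = df_b * df_between_err      # B x items-within-A
    return {
        "A": (df_a, df_between_err),
        "B": (df_b, df_within_err),
        "AxB": (df_a * df_b, df_within_err),
    }

print(mixed_anova_df(240, 6, 3))
# {'A': (5, 234), 'B': (2, 468), 'AxB': (10, 468)}
```

By the same arithmetic, every Expression × Region interaction in this 6 × 3 design has (10, 468) degrees of freedom.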

For probability of first fixation, effects of region, F(2, 468) = 2361.70, p < 0.001, ηp2 = 0.91, but not of expression, F(5, 234) = 1.90, p = 0.095, ns, and an interaction, F(10, 468) = 9.75, p < 0.001, ηp2 = 0.17, emerged. The one-way (Expression) ANOVA yielded effects for the eye region, F(5, 234) = 10.26, p < 0.001, ηp2 = 0.18, and the mouth, F(5, 234) = 17.05, p < 0.001, ηp2 = 0.27, but not the nose, F(5, 234) = 1.56, p = 0.17, ns. As indicated in Table 2 (means and multiple contrasts), (a) the eye region was more likely to be fixated first in angry faces than in all the others except sad faces; sad faces, along with surprised, disgusted, and fearful faces, were in turn more likely than happy faces to receive the first fixation on the eyes; and (b) the mouth region of happy faces was more likely to be fixated first, relative to the other expressions.

For probability of entries, effects of region, F(2, 468) = 3274.54, p < 0.001, ηp2 = 0.93, expression, F(5, 234) = 4.66, p < 0.001, ηp2 = 0.09, and an interaction, F(10, 468) = 47.91, p < 0.001, ηp2 = 0.51, emerged. The one-way (Expression) ANOVA yielded effects for the eye region, F(5, 234) = 46.04, p < 0.001, ηp2 = 0.50, the nose, F(5, 234) = 20.03, p < 0.001, ηp2 = 0.30, and the mouth, F(5, 234) = 37.01, p < 0.001, ηp2 = 0.44. As indicated in Table 3 (means and multiple contrasts), (a) the probability of entry in the eye region was higher for the angry, sad, and surprised faces than for disgusted and happy faces; (b) it was higher in the nose region for happy and disgusted faces than for the others; and (c) it was highest in the mouth region for happy faces.

For gaze duration, effects of region, F(2, 468) = 2007.02, p < 0.001, ηp2 = 0.90, but not of expression (F < 1), and an interaction, F(10, 468) = 42.45, p < 0.001, ηp2 = 0.48, emerged. The one-way (Expression) ANOVA yielded effects for the eye region, F(5, 234) = 51.76, p < 0.001, ηp2 = 0.52, the nose, F(5, 234) = 10.60, p < 0.001, ηp2 = 0.19, and the mouth, F(5, 234) = 49.31, p < 0.001, ηp2 = 0.51. As indicated in Table 4 (means and multiple contrasts), (a) the eye region was fixated longer in angry and sad faces, relative to the others; (b) the nose region, in disgusted faces; and (c) the mouth, in happy faces.

For number of fixations, effects of region, F(2, 468) = 1624.24, p < 0.001, ηp2 = 0.87, but not of expression, F(5, 234) = 2.06, p = 0.071, ns, and an interaction, F(10, 468) = 39.71, p < 0.001, ηp2 = 0.46, appeared. The one-way (Expression) ANOVA yielded effects for the eye region, F(5, 234) = 46.11, p < 0.001, ηp2 = 0.50, the nose, F(5, 234) = 6.87, p < 0.001, ηp2 = 0.13, and the mouth, F(5, 234) = 36.63, p < 0.001, ηp2 = 0.45. As indicated in Table 5 (means and multiple contrasts), (a) the eye region was fixated more frequently in angry, sad, and surprised faces; (b) the nose, in disgusted and happy faces; and (c) the mouth, in happy faces.

Time course of selective attention to expression-diagnostic features

An overall ANOVA of Expression (6) by Region (3) by Interval (10) was performed on the proportion of gaze duration for each region during each of 10 consecutive 100-ms intervals across expression unfolding. Effects of region, F(2, 702) = 2818.37, p < 0.001, ηp2 = 0.89, and interval, F(9, 6818) = 8.79, p < 0.001, ηp2 = 0.01, were qualified by interactions of region by expression, F(10, 702) = 61.10, p < 0.001, ηp2 = 0.47, interval by region, F(18, 6318) = 2178.64, p < 0.001, ηp2 = 0.86, and a three-way interaction, F(90, 6318) = 39.62, p < 0.001, ηp2 = 0.36 (see Fig. 2a,b,c; see also Supplemental Datasets S1C Tables). To decompose the three-way interaction, two-way ANOVAs of Expression by Interval were run for each region, further followed by one-way ANOVAs testing the effect of Expression in each time window, with post hoc multiple comparisons (p < 0.05, Bonferroni corrected). This approach served to determine two aspects of the attentional time course: the threshold (i.e., the earliest interval) and the amplitude (i.e., for how many intervals) each face region was looked at more for an expression than for the others.
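Once the per-interval post hoc tests are available, threshold and amplitude reduce to simple bookkeeping over a row of significance flags. In the sketch below, each flag marks whether a region drew more fixation time for the target expression than for every other expression in that 100-ms interval. The amplitude is taken, as an assumption, to be the uninterrupted run of significant intervals starting at the threshold. The example reproduces the eye-region pattern reported for sad and angry faces: a threshold at 401 to 500 ms, with the advantage lasting until 900 ms.

```python
def interval_label(k, bin_ms=100):
    """Human-readable label for the k-th (0-based) analysis interval."""
    return f"{k * bin_ms + 1}-{(k + 1) * bin_ms} ms"

def threshold_and_amplitude(advantage):
    """advantage: one boolean per 100-ms interval, True when the region drew more
    fixation time for the target expression than for all others (Bonferroni-corrected).
    Returns (threshold interval index or None, amplitude in intervals).
    ASSUMPTION: amplitude = length of the uninterrupted run starting at threshold."""
    if True not in advantage:
        return None, 0
    start = advantage.index(True)
    run = 0
    for flag in advantage[start:]:
        if not flag:
            break
        run += 1
    return start, run

# Advantage from the 401-500 ms interval until 900 ms (intervals 4 to 8).
adv = [False] * 4 + [True] * 5 + [False]
start, amp = threshold_and_amplitude(adv)
print(interval_label(start), amp)  # 401-500 ms 5
```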

(a,b,c) Time course of fixation on each region. Proportion of fixation time on each region (a: Eyes; b: Nose/cheek; c: Mouth) for each facial expression across 10 consecutive 100-ms intervals. For each interval, expressions enclosed in different dotted circles/ovals differ significantly from one another (post hoc multiple contrasts, p < 0.05 after Bonferroni correction); expressions within the same circle/oval do not differ. For (b), the scale has been slightly stretched to make differences between expressions easier to see.

For the eye region, effects of expression, F(5, 234) = 51.76, p < 0.001, ηp2 = 0.53, and interval, F(9, 2106) = 556.98, p < 0.001, ηp2 = 0.70, and an interaction, F(45, 2106) = 35.06, p < 0.001, ηp2 = 0.43, appeared. Expression effects were significant for all the intervals from the 301-to-400 ms time window onwards, with statistical significance ranging between F(5, 234) = 6.86, p < 0.001, ηp2 = 0.13 and F(5, 234) = 72.25, p < 0.001, ηp2 = 0.61. The post hoc contrasts and the significant differences across expressions within each interval are shown in Fig. 2a. An advantage emerged for sad and angry expressions, with the threshold located at the 401-to-500-ms interval, where their eye regions attracted more fixation time than those of all the other expressions, and the amplitude of this advantage remained until 900 ms post-stimulus onset. Secondary advantages appeared for surprised and fearful faces, relative to disgusted and happy faces (see Fig. 2a).

For the nose/cheek region, effects of expression, F(5, 234) = 10.86, p < 0.001, ηp2 = 0.19, and interval, F(9, 2106) = 2910.16, p < 0.001, ηp2 = 0.93, were qualified by an interaction, F(45, 2106) = 4.58, p < 0.001, ηp2 = 0.09. Expression effects were significant for all the intervals from the 401-to-500 ms time window onwards, ranging between F(5, 234) = 5.67, p < 0.001, ηp2 = 0.11 and F(5, 234) = 15.62, p < 0.001, ηp2 = 0.25. The post hoc contrasts and the significant differences across expressions within each interval are shown in Fig. 2b. An advantage emerged for disgusted expressions over all the others, except for happy faces, with the threshold located at the 401-to-500-ms interval: The nose/cheek region attracted more fixation time for disgusted faces than for all the other expressions (except happy faces), and the amplitude of this advantage remained until the end of the analyzed 1,000-ms period.

For the mouth region, effects of expression, F(5, 234) = 49.96, p < 0.001, ηp2 = 0.52, interval, F(9, 2106) = 1206.45, p < 0.001, ηp2 = 0.84, and an interaction, F(45, 2106) = 38.87, p < 0.001, ηp2 = 0.45, emerged. Expression effects were significant from the 301-to-400 ms interval onwards, ranging between F(5, 234) = 6.99, p < 0.001, ηp2 = 0.13 and F(5, 234) = 70.02, p < 0.001, ηp2 = 0.60. The post hoc multiple contrasts and the significant differences across expressions within each interval are shown in Fig. 2c. An advantage emerged for happy expressions over all the others, with the threshold located at the 401-to-500-ms interval: The smiling mouth region attracted more fixation time than the mouth region of all the other expressions, and the amplitude of this advantage remained until the end of the analyzed 1,000-ms period. Secondary advantages appeared for surprised and fearful faces, relative to sad and angry faces (see Fig. 2c).

Potentially spurious results involving the nose/cheek region

The eye and the mouth regions are typically the most expressive sources in a face and, in fact, most of the statistical effects reported above emerged for these regions. Yet for disgusted (and, to a lesser extent, happy) expressions effects appeared also in the nose and cheek region (e.g., longer gaze duration). As indicated in the following analyses, these effects—rather than being spurious or irrelevant—can be explained as a function of morphological changes in the nose/cheek region of such expressions.

According to FACS (Facial Action Coding System) proposals49, facial disgust is typically characterized by AU9 (Action Unit 9: nose wrinkling or furrowing), which directly engages the nose/cheek region; and happiness is characterized by AU6 (cheek raiser) and AU12 (lip corner puller), which engage the mouth region and extend to the nose/cheek region. We used automated facial expression analysis50,51 by means of Emotient FACET SDK v6.1 software (iMotions; http://emotient.com/index.php) to assess these AUs in our stimuli. A one-way (6: Expression) ANOVA revealed higher AU9 scores for disgusted faces (M = 3.48) relative to all the others (ranging from −5.22 [surprise] to 0.19 [anger]), F(5, 234) = 134.46, p < 0.001, ηp2 = 0.74. Relatedly, for happy faces, AU6 scores (M = 2.88) and AU12 scores (M = 4.06) were higher than for all the others, F(5, 234) = 126.00, p < 0.001, ηp2 = 0.73 (AU6 ranging from −2.32 [surprise] to 1.02 [disgust]), and F(5, 234) = 204.85, p < 0.001, ηp2 = 0.81 (AU12 ranging from −1.80 [anger] to −0.76 [fear]), respectively.

Discussion

The major goal of the present study was to investigate gaze behavior during recognition of dynamic facial expressions changing from neutral to emotional (happy, sad, angry, fearful, disgusted, or surprised). We determined selective attentional orienting to and engagement with expression-diagnostic regions; that is, those that have been found to contribute to (in that they are sufficient or necessary for) recognition3,4,5,6,7,8. As a secondary goal, we also aimed to validate a new stimulus set (KDEF-dyn) of dynamic facial expressions, and provide other researchers with norming data of categorization performance and eye fixation profiles for this instrument.

The relative recognition accuracies, efficiency, and confusions across expressions in the current study are consistent with those in prior research on emotional expression categorization. With static face stimuli, (a) recognition performance is typically higher for facial happiness, followed by surprise, which are higher than for sadness and anger, followed by disgust and fear18,41,52,53; (b) happy faces are recognized faster, and fear is recognized most slowly, across different response systems4,53,54,55; and (c) confusions occur mainly between disgust and anger, surprise and fear, and sadness and fear28,38,55,56. Regarding dynamic expressions in on-line video recordings, a pattern of recognition accuracies and reaction times comparable to ours (except for the lack of confusion of sadness as fear) has been found in prior research19. In addition, in studies using facial expressions in dynamic morphing format18,38,41,57, the pattern of expression recognition accuracy and confusions was also comparable to those in the current study. Thus, our recognition performance data concur with prior research data from static and dynamic expressions. This validates the KDEF-dyn set, and allows us to go forward and examine the central issues of the present approach concerning selective attention to dynamic expression-diagnostic face regions.

Our major contribution dealt with selective overt attention during facial expression processing, as reflected by eye movements and fixations. These measures have been obtained in many prior studies using static faces3,15,58,59, but scarcely in studies using dynamic faces22,23. Lischke et al.22 reported a trend towards longer gaze durations for expression-specific regions (i.e., the eyes of angry, sad, and fearful faces, and the mouth of happy faces). Our own results generally agree with these findings (except for fear) and extend them to additional expressions (disgust and surprise) and other eye-movement measures. In contrast, Blais et al.23 found no differences across the six basic expressions of emotion. However, as we argued in the Introduction, the lack of fixation differences in the Blais et al.23 study could be due to the use of a short stimulus display (500 ms) and a small stimulus size (5.72° width). In the current study (as in Lischke et al.22), we used longer displays (1,033 ms) and larger stimuli (8.8° width) to increase the sensitivity of measurement, which probably allowed selective attention effects to emerge as a function of face region and expression.

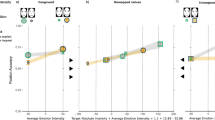

The current study addressed two aspects of selective visual attention to diagnostic features in dynamic expressions that were not considered previously: the distinction between, and the time course of, attentional orienting and engagement. As summarized in Fig. 3 (also Fig. 2a,b,c), the effects on orienting and engagement were generally convergent (except for minor discrepancies regarding disgusted faces): (a) happy faces were characterized by selective orienting to and engagement with the mouth region, which showed a time course advantage (i.e., both an earlier threshold and a longer amplitude of visual processing), relative to the other expressions; (b) angry and sad faces were characterized by orienting to and engagement with the eye region, with an earlier and longer time course advantage; (c) disgusted faces were characterized mainly by engagement with the nose/cheek region, with a time course advantage; and (d) for surprised and fearful faces, both orienting and engagement were attracted by the eyes and the mouth in a balanced manner, with no dominance. This suggests that facial happiness, anger, sadness, and disgust processing relies on the analysis of single features (the eyes, the mouth, or the nose), whereas facial surprise and fear processing would require a more holistic integration (see60,61). Further, our findings reveal a close relationship between expression-specific diagnostic regions3,4,5,7,8 and selective attention to them for dynamic (not only for static) facial expressions.

Summary of major findings of gaze behavior. Preferentially fixated face regions (probability of first fixation, probability of entry, gaze duration, and number of fixations), and time course of the fixation advantage (threshold, i.e., earliest time point; and amplitude, i.e., duration of advantage) across different expressions. Asterisks indicate a delayed and partial advantage for surprise and fear, relative to some, but not all, expressions (i.e., relative to disgust and happiness for the eye region, or to sadness and anger for the mouth region).

These findings have theoretical implications regarding the functional value of fixation profiles for expression categorization. It has been argued that fixation profiles reflect attention to the most diagnostic regions of a face for each emotion8. We have shown that the diagnostic facial features previously found to contribute to expression recognition3,4,5,6,7,8 are also the ones receiving earlier and longer overt attention during expression categorization. This allows us to infer that enhanced selective fixation on the diagnostic regions of the respective expressions is functional for (i.e., facilitates) recognition. This is consistent with the hypothesis that observers move their eyes to face regions that maximize performance in determining the emotional state of a face26, and with the hypothesis that fixation patterns have predictive value in recognizing emotional faces14. Nevertheless, a direct test of this issue, which is beyond the aims and scope of the current study, would require manipulating the visual availability of diagnostic face regions and examining how this affects actual expression recognition.

There are also practical implications for the effective use of the KDEF-dyn database: If the scanpath profiles when inspecting a face are functional (owing to the diagnostic value of face regions), then such profiles can serve as criteria for stimulus selection. We used a relatively large set of stimuli (40 different models; 240 video-clips), which allows researchers to select sub-samples for different purposes (e.g., expression categorization, attentional orienting or engagement, or their time course). Our stimuli vary in how strongly the respective scanpaths reflect the dominance of the diagnostic regions for each expression (e.g., earlier first fixation, longer gaze duration) and how closely the scanpaths match the ideal pattern (e.g., earlier and longer gaze on the eye region of angry faces). This information can be obtained from our datasets (Supplemental Datasets S1A and S1B). Researchers could thus choose the models whose diagnostic regions show enhanced attentional orienting or engagement, or a faster time course of attention (e.g., an earlier threshold). Of course, selection can also be based on recognition performance (hits, categorization efficiency, and type of confusions). Thus, the current study provides researchers with a useful methodological tool.
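Stimulus selection along these lines could be scripted. The Python sketch below assumes per-model, per-expression summary rows; the field names and model identifiers are hypothetical, since the column layout of Datasets S1A and S1B is not described in this section. Following the Discussion, it treats the eyes as diagnostic for anger and sadness, the mouth for happiness, and the nose/cheek region for disgust, and it skips surprise and fear, for which no single region dominated.

```python
from __future__ import annotations

from dataclasses import dataclass

# Diagnostic regions as summarized in the Discussion. Surprise and fear are
# omitted because the eyes and mouth attracted attention in a balanced manner.
DIAGNOSTIC_REGION = {
    "angry": "eyes",
    "sad": "eyes",
    "happy": "mouth",
    "disgusted": "nose_cheek",
}


@dataclass
class StimulusSummary:
    model_id: str                        # hypothetical model identifier
    expression: str
    gaze_duration_ms: dict[str, float]   # mean gaze duration per face region
    hit_rate: float                      # proportion of correct categorizations


def select_stimuli(rows: list[StimulusSummary], min_hit_rate: float = 0.0) -> list[str]:
    """Return IDs of stimuli whose diagnostic region received the longest gaze
    and whose recognition rate reaches `min_hit_rate` (assumed criteria)."""
    selected = []
    for row in rows:
        region = DIAGNOSTIC_REGION.get(row.expression)
        if region is None or row.hit_rate < min_hit_rate:
            continue  # no single diagnostic region, or poorly recognized
        target = row.gaze_duration_ms.get(region, 0.0)
        others = [v for k, v in row.gaze_duration_ms.items() if k != region]
        # Strict dominance: the diagnostic region must exceed every other region.
        if all(target > v for v in others):
            selected.append(row.model_id)
    return selected
```

The dominance criterion here is strict. Entry time or probability of first fixation could be substituted for gaze duration, with the comparison reversed for entry time, where earlier means stronger orienting.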

To conclude, we developed a set of morphed dynamic facial expressions of emotion (KDEF-dyn; see also62 for a complementary study using different measures). Expression recognition data were consistent with findings from prior research using static and other dynamic expressions. As a major contribution, eye-movement measures assessed selective attentional orienting and engagement, and their time course, for six basic emotions. Specific attentional profiles characterized each emotion: The eye region was looked at earlier and longer for angry and sad faces, the mouth region for happy faces, and the nose/cheek region for disgusted faces, whereas the eye and the mouth regions attracted attention in a more balanced manner for surprise and fear. This reveals selective visual attention to diagnostic features that typically facilitate expression recognition.

Data Availability

The authors declare that the data of the study are included in Supplemental Datasets S1A and S1B linked to this manuscript.

References

Ekman, P. & Cordaro, D. What is meant by calling emotions basic. Emotion Review 3(4), 364–370 (2011).

Calvo, M. G. & Nummenmaa, L. Perceptual and affective mechanisms in facial expression recognition: An integrative review. Cognition and Emotion 30(6), 1081–1106 (2016).

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I. & Tapp, R. Featural processing in recognition of emotional facial expressions. Cognition and Emotion 28(3), 416–432 (2014).

Calder, A. J., Young, A. W., Keane, J. & Dean, M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance 26(2), 527–551 (2000).

Calvo, M. G., Fernández-Martín, A. & Nummenmaa, L. Facial expression recognition in peripheral versus central vision: Role of the eyes and the mouth. Psychological Research 78(2), 180–195 (2014).

Kohler, C. G. et al. Differences in facial expressions of four universal emotions. Psychiatry Research 128(3), 235–244 (2004).

Smith, M. L., Cottrell, G. W., Gosselin, F. & Schyns, P. G. Transmitting and decoding facial expressions. Psychological Science 16(3), 184–189 (2005).

Schurgin, M. W. et al. Eye movements during emotion recognition in faces. Journal of Vision 14(13), 1–16 (2014).

Calvo, M. G. & Nummenmaa, L. Detection of emotional faces: salient physical features guide effective visual search. Journal of Experimental Psychology: General 137(3), 471–494 (2008).

Ebner, N. C., He, Y. & Johnson, M. K. Age and emotion affect how we look at a face: visual scan patterns differ for own-age versus other-age emotional faces. Cognition and Emotion 25(6), 983–997 (2011).

Eisenbarth, H. & Alpers, G. W. Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion 11(4), 860–865 (2011).

Bombari, D. et al. Emotion recognition: The role of featural and configural face information. Quarterly Journal of Experimental Psychology 66(12), 2426–2442 (2013).

Jack, R. E., Blais, C., Scheepers, C., Schyns, P. G. & Caldara, R. Cultural confusions show that facial expressions are not universal. Current Biology 19(18), 1543–1548 (2009).

Vaidya, A. R., Jin, C. & Fellows, L. K. Eye spy: The predictive value of fixation patterns in detecting subtle and extreme emotions from faces. Cognition 133(2), 443–456 (2014).

Wells, L. J., Gillespie, S. M. & Rotshtein, P. Identification of emotional facial expressions: effects of expression, intensity, and sex on eye gaze. PLoS ONE 11(12), e0168307 (2016).

Wong, B., Cronin-Golomb, A. & Neargarder, S. Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology 19(6), 739–749 (2005).

Krumhuber, E. G., Kappas, A. & Manstead, A. S. R. Effects of dynamic aspects of facial expressions: A review. Emotion Review 5(1), 41–46 (2013).

Calvo, M. G., Avero, P., Fernandez-Martin, A. & Recio, G. Recognition thresholds for static and dynamic emotional faces. Emotion 16(8), 1186–1200 (2016).

Wingenbach, T. S., Ashwin, C. & Brosnan, M. Validation of the Amsterdam Dynamic Facial Expression Set – Bath Intensity Variations (ADFES-BIV): A set of videos expressing low, intermediate, and high intensity emotions. PLoS ONE 11(12), e0168891 (2016).

Arsalidou, M., Morris, D. & Taylor, M. J. Converging evidence for the advantage of dynamic facial expressions. Brain Topography 24(2), 149–163 (2011).

Trautmann, S. A., Fehr, T. & Herrmann, M. Emotions in motion: Dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Research 1284, 100–115 (2009).

Lischke, A. et al. Intranasal oxytocin enhances emotion recognition from dynamic facial expressions and leaves eye-gaze unaffected. Psychoneuroendocrinology 37(4), 475–481 (2012).

Blais, C., Fiset, D., Roy, C., Saumure-Régimbald, C. & Gosselin, F. Eye fixation patterns for categorizing static and dynamic facial expressions. Emotion 17(7), 1107–1119 (2017).

Hoffmann, H., Traue, H. C., Bachmayr, F. & Kessler, H. Perceived realism of dynamic facial expressions of emotion: Optimal durations for the presentation of emotional onsets and offsets. Cognition and Emotion 24(8), 1369–1376 (2010).

Krumhuber, E. G., Skora, L., Küster, D. & Fou, L. A review of dynamic datasets for facial expression research. Emotion Review 9(3), 280–292 (2017).

Peterson, M. F. & Eckstein, M. P. Looking just below the eyes is optimal across face recognition tasks. Proceedings of the National Academy of Sciences USA 109(48), E3314–E3323 (2012).

Lundqvist, D., Flykt, A. & Öhman, A. The Karolinska Directed Emotional Faces–KDEF [CD-ROM]. Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, Stockholm, Sweden ISBN 91-630-7164-9 (1998).

Calvo, M. G. & Lundqvist, D. Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behavior Research Methods 40(1), 109–115 (2008).

Goeleven, E., De Raedt, R., Leyman, L. & Verschuere, B. The Karolinska Directed Emotional Faces: A validation study. Cognition and Emotion 22(6), 1094–1118 (2008).

Calvo, M. G., Gutiérrez-García, A., Avero, P. & Lundqvist, D. Attentional mechanisms in judging genuine and fake smiles: Eye-movement patterns. Emotion 13(4), 792–802 (2013).

Gupta, R., Hur, Y. J. & Lavie, N. Distracted by pleasure: Effects of positive versus negative valence on emotional capture under load. Emotion 16(3), 328–337 (2016).

Sanchez, A., Vazquez, C., Gómez, D. & Joormann, J. Gaze-fixation to happy faces predicts mood repair after a negative mood induction. Emotion 14(1), 85–94 (2014).

Adamaszek, M. et al. Neural correlates of impaired emotional face recognition in cerebellar lesions. Brain Research 1613, 1–12 (2015).

Bublatzky, F., Gerdes, A. B., White, A. J., Riemer, M. & Alpers, G. W. Social and emotional relevance in face processing: Happy faces of future interaction partners enhance the late positive potential. Frontiers in Human Neuroscience 8, 493 (2014).

Calvo, M. G. & Beltrán, D. Brain lateralization of holistic versus analytic processing of emotional facial expressions. NeuroImage 92, 237–247 (2014).

Pollick, F. E., Hill, H., Calder, A. & Paterson, H. Recognising facial expression from spatially and temporally modified movements. Perception 32(7), 813–826 (2003).

Fiorentini, C. & Viviani, P. Is there a dynamic advantage for facial expressions? Journal of Vision 11(3), 1–15 (2011).

Recio, G., Schacht, A. & Sommer, W. Classification of dynamic facial expressions of emotion presented briefly. Cognition and Emotion 27(8), 1486–1494 (2013).

Harris, R. J., Young, A. W. & Andrews, T. J. Dynamic stimuli demonstrate a categorical representation of facial expression in the amygdala. Neuropsychologia 56, 47–52 (2014).

Popov, T., Miller, G. A., Rockstroh, B. & Weisz, N. Modulation of alpha power and functional connectivity during facial affect recognition. Journal of Neuroscience 33(14), 6018–6026 (2013).

Recio, G., Schacht, A. & Sommer, W. Recognizing dynamic facial expressions of emotion: Specificity and intensity effects in event-related brain potentials. Biological Psychology 96, 111–125 (2014).

Vrticka, P., Lordier, L., Bediou, B. & Sander, D. Human amygdala response to dynamic facial expressions of positive and negative surprise. Emotion 14(1), 161–169 (2014).

Hess, U., Kappas, A., McHugo, G. J., Kleck, R. E. & Lanzetta, J. T. An analysis of the encoding and decoding of spontaneous and posed smiles: The use of facial electromyography. Journal of Nonverbal Behavior 13(2), 121–137 (1989).

Weiss, F., Blum, G. S. & Gleberman, L. Anatomically based measurement of facial expressions in simulated versus hypnotically induced affect. Motivation & Emotion 11(1), 67–81 (1987).

Faul, F., Erdfelder, E., Lang, A. G. & Buchner, A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods 39(2), 175–191 (2007).

Schultz, J. & Pilz, K. S. Natural facial motion enhances cortical responses to faces. Experimental Brain Research 194(3), 465–475 (2009).

Johnston, P., Mayes, A., Hughes, M. & Young, A. W. Brain networks subserving the evaluation of static and dynamic facial expressions. Cortex 49(9), 2462–2472 (2013).

Holmqvist, K., Nyström, M., Andersson, R., Dewhurst, R., Jarodzka, H. & Van de Weijer, J. Eye tracking: A comprehensive guide to methods and measures (Oxford University Press, Oxford, UK, 2011).

Ekman, P., Friesen, W. V. & Hager, J. C. Facial action coding system (A Human Face, Salt Lake City, 2002).

Cohn, J. F. & De la Torre, F. Automated face analysis for affective computing. In: Calvo, R. A., D’Mello, S., Gratch, J. & Kappas, A. (editors). The Oxford handbook of affective computing, 131–151 (Oxford University Press, New York, 2015).

Bartlett, M. & Whitehill, J. Automated facial expression measurement: Recent applications to basic research in human behavior, learning, and education. In: Calder, A., Rhodes, G., Johnson, M. & Haxby, J. (editors). Handbook of face perception, 489–513 (Oxford University Press, Oxford, UK, 2011).

Nelson, N. L. & Russell, J. A. Universality revisited. Emotion Review 5(1), 8–15 (2013).

Calvo, M. G. & Nummenmaa, L. Eye-movement assessment of the time course in facial expression recognition: Neurophysiological implications. Cognitive, Affective & Behavioral Neuroscience 9(4), 398–411 (2009).

Elfenbein, H. A. & Ambady, N. When familiarity breeds accuracy: Cultural exposure and facial emotion recognition. Journal of Personality and Social Psychology 85(2), 276–290 (2003).

Palermo, R. & Coltheart, M. Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behavior Research Methods, Instruments, & Computers 36(4), 634–638 (2004).

Tottenham, N. et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research 168(3), 242–249 (2009).

Langner, O. et al. Presentation and validation of the Radboud Faces Database. Cognition and Emotion 24(8), 1377–1388 (2010).

Hsiao, J. H. & Cottrell, G. W. Two fixations suffice in face recognition. Psychological Science 19(10), 998–1006 (2008).

Kanan, C., Bseiso, D. N., Ray, N. A., Hsiao, J. H. & Cottrell, G. W. Humans have idiosyncratic and task-specific scanpaths for judging faces. Vision Research 108, 67–76 (2015).

Meaux, E. & Vuilleumier, P. Facing mixed emotions: Analytic and holistic perception of facial emotion expressions engages separate brain networks. NeuroImage 141, 154–173 (2016).

Tanaka, J. W., Kaiser, M. D., Butler, S. & Le Grand, R. Mixed emotions: Holistic and analytic perception of facial expressions. Cognition and Emotion 26(6), 961–977 (2012).

Calvo, M. G., Fernández-Martín, A., Recio, G. & Lundqvist, D. Human observers and automated assessment of dynamic emotional facial expressions: KDEF-dyn database validation. Frontiers in Psychology 9, 2052 (2018).

Acknowledgements

This research was supported by Grant PSI2014-54720-P to MC from the Spanish Ministerio de Economía y Competitividad.

Author information

Contributions

M.C. designed the study and wrote the manuscript. A.F.M. developed the materials, conducted the experiment, and compiled the eye-movement data. A.G.G. developed the materials and performed the data analysis. D.L. wrote the manuscript. All authors reviewed the manuscript and approved the final version for submission.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Calvo, M.G., Fernández-Martín, A., Gutiérrez-García, A. et al. Selective eye fixations on diagnostic face regions of dynamic emotional expressions: KDEF-dyn database. Sci Rep 8, 17039 (2018). https://doi.org/10.1038/s41598-018-35259-w

DOI: https://doi.org/10.1038/s41598-018-35259-w
