Abstract

Growing evidence indicates that perceptual-motor codes may be associated with and influenced by actual bodily states. Following a spinal cord injury (SCI), for example, individuals exhibit reduced visual sensitivity to biological motion. However, little direct evidence exists on whether profound alterations in sensorimotor traffic between the body and brain influence audio-motor representations. We tested 20 wheelchair-bound individuals with thoracic or lumbar SCI who were unable to feel and move their lower limbs but had retained upper limb function. In a two-choice, matching-to-sample auditory discrimination task, the participants were asked to determine which of two action sounds matched a previously presented sample action sound. We tested aural discrimination ability using sounds that arose from wheelchair, upper limb, lower limb, and animal actions. Our results indicate that an inability to move the lower limbs did not impair the discrimination of lower limb-related action sounds in SCI patients. Importantly, patients with SCI discriminated wheelchair sounds more quickly than individuals with comparable auditory experience (i.e. physical therapists) and inexperienced, able-bodied subjects. In these patients, audio-motor associations appear to be modified and enhanced to incorporate salient external tools that now represent extensions of their body schemas.


Introduction

The notion of embodied cognition postulates that knowledge is grounded in actual bodily states and that higher-order processes, such as mind- and intention-reading or action- and perception-understanding, can be mapped onto modal sensorimotor cortices1. The bodily instantiation of cognitive operations (“embodiment”) and of perceptual-motor states (“simulation”) is thought to enable the inter-individual sharing of experiences2. Building on single-cell recordings in monkeys3,4, many neuroimaging and neurophysiological studies have proposed that the adult human brain is equipped with neural systems and mechanisms that code the perception and execution of actions in a common format5,6,7,8. Direct action perception strengthens motor representation9, and short-term motor experience with a particular action may influence its visual recognition10 and facilitate action prediction11.

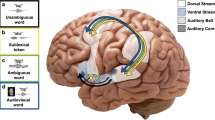

Both visual and auditory channels participate in perception-action coupling12. The mechanisms and neural structures involved in the motor coding of action-related sounds have been explored in able-bodied individuals using correlative and causative approaches13,14,15,16,17,18. These studies indicate that hearing the sounds of actions performed with specific body parts (e.g. ripping a sheet of paper) activates the left fronto-parietal network19 in a somatotopic arrangement16,20. Moreover, greater involvement of the left than the right inferior parietal lobe has been reported when an observer’s attention is explicitly directed toward action sounds21.

The inability to perform or perceive a given motor action may affect the structural integrity of the representation of that action22. In patients with apraxia, impaired execution of specific actions (e.g. inability to clap the hands) greatly reduces the capacity to recognize the corresponding motor event acoustically, and accurate discrimination critically depends on a properly functioning left fronto-parietal network23. Individuals with congenital blindness or deafness, who have total perceptual loss in one modality, rely less on implicit motor representations when perceiving human actions24,25,26. Blind people, however, may still code the sounds of tool actions via simulation, which could reflect the activity of an inherent motor system24,26. This pattern of results suggests that a failure to “capture” sensory or motor information hinders the auditory mapping of actions. Individuals with spinal cord injury (SCI) who are unable to move their lower limbs have a reduced ability to discriminate between different observed movements, suggesting that action mapping may be fully determined by immediate motor signals27,28. However, recent data suggest that the original representation of the deafferented limb persists in the sensorimotor cortex, even decades after deafferentation29,30,31. Thus, the origins of reduced perceptual sensitivity to biological motion remain unclear.

This functional imbalance of perceptual-motor states may be partially restored with active tool use32. A body-held tool, for example, may become essential to the user if it facilitates mobility or other essential functions33,34. When this is the case, the tool may be processed as a part of one’s own body35,36,37,38 and guide visual-motor39 and audio-motor33,40 interactions. Indeed, people with SCI who are paralyzed and wheelchair-bound may treat their most relevant artificial tool, the wheelchair, as an extension of, or substitute for, the functionality of the affected body part41.

In principle, individuals with SCI may be ideal for testing two fundamental, largely unaddressed simulation and embodiment issues: (i) how motor afference/efference influences the functional integrity of audio-motor mapping; and (ii) how relevant extracorporeal tools (e.g. wheelchairs) affect action representations.

We hypothesized that the perceptual and motor experiences induced by the sounds of wheelchair and lower limb actions would differ substantially between subjects with different levels of exposure to these actions. To test this hypothesis, we examined audio-motor mapping in three groups of participants. Wheelchair-bound patients with SCI have extensive motor and auditory experience of wheelchair sounds42 but no motor use of their legs. Physical therapists with normal limb function have extensive perceptual experience of wheelchairs but do not depend on them for mobility. The third group consisted of able-bodied controls who had no previous experience of wheelchairs. We devised a novel psychophysical task that evaluated the ability to discriminate sounds originating from wheelchair actions, upper and lower limb actions, and animal actions. Listening to sounds of actions performed with a tool or with the lower limbs allowed us to dissociate the perceptual and motor contributions of biological or artificial mobility entities18. Furthermore, the task allowed us to investigate the inverse relationship between the movements that the patients had previously possessed and lost and the mobility they regained with wheelchair use, which provides them with a way to move and act in the world again.

Methods

Participants

At the Santa Lucia Hospital in Rome, Italy, we recruited 20 subjects with established lumbar or thoracic SCI (17 men; mean age, 40.8 years; range, 19–56 years), 20 able-bodied participants who had worked exclusively with SCI patients as physical therapists (18 men; mean age, 39.9 years; range, 27–54 years), and 20 able-bodied subjects who were not physical therapists (12 men; mean age, 40.8 years; range, 20–66 years). The three groups did not differ in age or level of education (p > 0.95). The physical therapists were employed full-time and had an average of five years of experience in SCI patient rehabilitation (range, 1–25 years). All subjects were right-handed, as determined by the 10-item version of the Edinburgh Handedness Inventory43. No participant presented auditory discrimination deficits or signs of psychiatric disorders, and none had a history of substance abuse. Written informed consent was obtained from each participant for all procedures. The experimental protocol was approved by the ethics committee of the Fondazione Santa Lucia and was performed in accordance with the relevant guidelines and regulations and with the 1964 Declaration of Helsinki.

Assessment of individuals with SCI

All patients had a traumatic lesion at the thoracic or lumbar level of the spinal cord that caused paralysis of the lower limbs while sparing upper limb function. Lesions were located between T3 and L1, and the patients ranged from 6.3 to 219 months post-SCI (mean, 65 ± 75 months). Each patient was examined by a neurologist (G.S.) with specific, long-standing expertise in treating SCI patients. The neurological level of injury was determined using the American Spinal Injury Association (ASIA) standards for the classification of SCI44. Functional ability was quantified using the third version of the Spinal Cord Independence Measure (SCIM III)45; for the purposes of the experiment, the Self-care and the Management and Mobility subscales were considered. All patients were manual wheelchair users and were recruited from the physiotherapy programs of the Spinal Cord Unit. None of the patients had experienced head or brain lesions, as documented by MRI. Demographic and additional clinical data for the patients are presented in Table 1.

Sound-into-action translation test

Because the auditory system is an intact sensory channel in individuals with paraplegia, we used a sound-into-action translation task to explore the effects of a massive loss of motor function in the lower extremities on the ability to distinguish between different action sounds. In a two-choice, matching-to-sample auditory action discrimination task, the participants were asked to determine which of two probe sounds matched a single sample sound heard previously. The sounds included upper (URAS) and lower (LRAS) limb-related action sounds, wheelchair-related action sounds (WRAS), and non-human (animal) action-related sounds (NHRAS).

Stimuli and task

The auditory stimuli (44.1 kHz, 16 bit, and monophonic) included 120 real-world sounds compiled by a sound engineer using professional collections (Sound Cinecittà, Rome, Italy, and Sound Ideas, Richmond Hill, Ontario, Canada). Many of these sounds were identical to those used in our previous studies23,26. The sounds were trimmed to an average duration of 4 sec (range, 3–6.5 sec) and presented at a comfortable listening level through Sennheiser PC165 earphones, using Presentation software (version 12.2, Neurobehavioral Systems, Inc.) on a Windows operating system.

Each sound belonged to one of the following four categories:

(1) Upper limb actions: a group of 10 sets of three different sounds. In this category, the sample, the matching and the non-matching stimuli were sounds of meaningful actions executed by the hands (e.g. knocking on a door).

(2) Lower limb actions: a group of 10 sets of three different sounds. In this category, the stimuli were three sounds of meaningful actions executed by the feet (e.g. descending footsteps on stairs).

(3) Wheelchair actions: a group of 10 sets of three different sounds. In this category, the stimuli were sounds of meaningful actions produced with manual or electric wheelchairs (e.g. wheelchair (WHC) braking) and with manual or electric vehicles in motion (e.g. bicycle braking).

(4) Non-human animal actions: a group of 10 sets of three sounds related to animal physical actions, excluding vocalizations (e.g. a bird flying).

A list of the auditory stimuli and information on the preliminary psychophysical studies are provided in the Supplementary Material.

Procedures

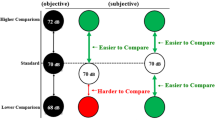

Each participant was tested in a single experimental session that lasted approximately 20 minutes. During the session, the subjects wore earphones and sat approximately 50 cm from a 17-inch computer monitor. Each trial began with the presentation of a sample action sound selected randomly from one of the four categories (i.e. URAS, LRAS, WRAS or NHRAS). At the end of the sample sound, a matching and a non-matching action sound were presented in quick succession, separated by an interval of approximately 100 msec. The matching action sound represented the same motor act as the sample but had different acoustic features. The non-matching action sound was acoustically similar to the sample but linked to a different action within the same category. The order of the matching and non-matching sounds was counterbalanced. For example, in the URAS category, a brief sample sound of an individual clapping three times was presented. The subjects then listened to two further sounds: one represented the same action as the sample but was produced by a different source (e.g. group applause), and the other represented a completely different but acoustically similar action performed with the same body part (e.g. knocking on a door three times). A schematic representation of the lower limb auditory stimuli and the procedure is shown in Fig. 1.

Figure 1. Action sound discrimination task. In each trial, following the presentation of a sample sound, two subsequent probe sounds were presented. Only one of the two probe sounds was specifically related to the sample sound. In the set of lower limb actions (e.g. “male footsteps on a glass surface” [the sample sound]), one probe sound represented the same action as the sample sound but was produced using a different source (e.g. “female footsteps on a wood surface”), whereas the other probe sound represented a totally different action produced using the same body part (e.g. “running”). No image associated with an aural action was provided.

The subjects were asked to choose which of the two probe sounds evoked the same action heard in the sample sound. To help them distinguish the three sounds, the words “action sound” (for the sample sound) and the numerals 1 (for the first probe sound) and 2 (for the second probe sound) appeared on a black screen while each respective sound was played. No image associated with the aural action was provided. After all three sounds had been presented, a final screen prompted the subject to respond by pressing a button. The participants were instructed to answer as accurately and quickly as possible, and their accuracy and response times after the prompt (i.e. latency) were recorded and analyzed. Before the test, the participants completed four practice trials with performance feedback. The practice stimuli differed from those used in the experimental phase, during which no feedback was provided.
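The trial structure described above can be made concrete with a short Python sketch: four categories, ten three-sound sets per category (120 sounds in total), one trial per set, and a counterbalanced position for the matching probe. This is a minimal illustration under stated assumptions, not the authors' Presentation script: the stimulus identifiers are hypothetical placeholders, counterbalancing is assumed to be an exact 5/5 split of correct-probe positions within each category, and trial order is assumed to be fully randomized across categories.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

CATEGORIES = ["URAS", "LRAS", "WRAS", "NHRAS"]
SETS_PER_CATEGORY = 10  # 10 sets of three sounds per category


@dataclass
class Trial:
    category: str
    sample: str
    probe1: str
    probe2: str
    correct: int  # 1 or 2: which probe matches the sample action


def build_trials(seed: int = 0) -> list[Trial]:
    """Build one randomized, counterbalanced session (hypothetical stimulus names)."""
    rng = random.Random(seed)
    trials = []
    for cat in CATEGORIES:
        # Assumed counterbalancing: half the sets put the match first, half second.
        positions = [1, 2] * (SETS_PER_CATEGORY // 2)
        rng.shuffle(positions)
        for i, pos in enumerate(positions):
            sample = f"{cat}_{i:02d}_sample"
            match = f"{cat}_{i:02d}_match"        # same action, different source
            nonmatch = f"{cat}_{i:02d}_nonmatch"  # different action, acoustically similar
            p1, p2 = (match, nonmatch) if pos == 1 else (nonmatch, match)
            trials.append(Trial(cat, sample, p1, p2, pos))
    rng.shuffle(trials)  # sample category varies randomly from trial to trial
    return trials


def score(trial: Trial, response: int) -> bool:
    """A response (1 or 2) is correct if it selects the matching probe."""
    return response == trial.correct


if __name__ == "__main__":
    session = build_trials(seed=42)
    print(len(session), "trials")  # 4 categories x 10 sets = 40 trials
    print(len({s for t in session for s in (t.sample, t.probe1, t.probe2)}), "sounds")
```

Storing the correct-probe position in each `Trial` reduces scoring to a single comparison, and the fixed seed makes a session reproducible; both are illustrative design choices rather than details reported in the text.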

To evaluate the subjective rating of each sound category, a post-test session was conducted in which the same participants rated each sound for familiarity and perceived motor intensity on a vertical 10-cm visual analog scale (VAS). The first question assessed their experience with each sound category (“How familiar is this sound to you?”), while the second assessed the amount of movement sensation triggered by each sound (“To what degree do you feel your own movement is based on the action you have just heard?”). For the first question, the lower and upper extremes of the VAS were “no familiarity” and “high familiarity,” respectively; for the second question, they were “no perceived movement” and “maximum perceived movement,” respectively. The sounds were presented in random, counterbalanced order, and the participants were explicitly asked to rate each one. Finally, we collected structured reports on the implicit and explicit introspective experiences of regular wheelchair use in patients with SCI, using a slightly modified version of an ad hoc questionnaire41.

Data analyses

Accuracy (raw scores) and mean latency were calculated for each participant in each experimental condition (10 trials per category). Trials with reaction times (RTs) two or more standard deviations above each subject's mean were eliminated prior to the analysis (2% of the trials)46; half of the eliminated trials were associated with URAS actions. Only RTs for correct responses were considered. Individual accuracy, mean latency and subjective ratings were entered into separate mixed-model analyses of variance (ANOVAs), with group (healthy subjects, patients with SCI, and physical therapists) as the between-subjects factor and sound category (URAS, LRAS, WRAS, and NHRAS) as the within-subjects factor. All pair-wise comparisons were performed using Duncan's post hoc test. Partial eta-squared (ηp²) was used as the index of effect size47. A significance threshold of p < 0.05 was set for all statistical analyses. Data are reported as mean ± standard error of the mean (SEM).
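The trimming and averaging rules above can be illustrated for a single participant. The sketch below uses a hypothetical input format (a list of `(category, rt_ms, correct)` tuples) and a hypothetical function name, and it makes two assumptions the text leaves open: the mean and SD used for the cutoff are computed across all of that participant's trials (a per-category cutoff is another possible reading of "for each subject"), and the population SD (`statistics.pstdev`) is used rather than the sample SD.

```python
from collections import defaultdict
from statistics import mean, pstdev


def summarize_participant(trials):
    """Summarize one participant's trials.

    trials: list of (category, rt_ms, correct) tuples (hypothetical format).
    Returns (accuracy, latency, n_trimmed): proportion correct and mean RT of
    correct responses per category, after outlier trimming.
    """
    rts = [rt for _, rt, _ in trials]
    # Assumption: cutoff computed over all of this participant's trials.
    cutoff = mean(rts) + 2 * pstdev(rts)

    # Drop trials with RT at or above mean + 2 SD before any analysis.
    kept = [t for t in trials if t[1] < cutoff]
    n_trimmed = len(trials) - len(kept)

    correct_by_cat = defaultdict(list)
    rts_by_cat = defaultdict(list)
    for cat, rt, correct in kept:
        correct_by_cat[cat].append(1 if correct else 0)
        if correct:  # only correct responses enter the latency analysis
            rts_by_cat[cat].append(rt)

    accuracy = {c: sum(v) / len(v) for c, v in correct_by_cat.items()}
    latency = {c: mean(v) for c, v in rts_by_cat.items()}
    return accuracy, latency, n_trimmed
```

The per-participant category means returned here are the quantities that would then enter the group × sound category ANOVA.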

Results

Sound-into-action translation test

Sound discrimination accuracy in patients with SCI was above 83% in all conditions. The ANOVA of accuracy revealed no significant main effect of group (F(2,57) = 1.17, p = 0.31) or of sound category (F(3,171) = 1.77, p < 0.15), and no group × sound category interaction (F(6,171) = 1.06, p = 0.4), indicating that the three groups performed comparably across the four sound categories.

The ANOVA of latency (Fig. 2) revealed no significant main effect of group (F(2,57) = 0.85, p = 0.43) but a significant main effect of sound category (F(3,171) = 8.3, p = 0.0001, ηp² > 0.13) and a significant group × sound category interaction (F(6,171) = 9.95, p = 0.0001, ηp² > 0.26). Latencies in patients with SCI were similar for sounds related to actions of the lower (LRAS = 760 msec) and upper (URAS = 703 msec) limbs (p > 0.19), suggesting that patients with SCI retained their ability to discriminate both upper and lower limb action sounds.
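For readers checking effect sizes, partial eta-squared is defined as ηp² = SS_effect / (SS_effect + SS_error). Because F = (SS_effect/df_effect) / (SS_error/df_error), it can also be recovered from a reported F statistic and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The sketch below applies that identity; it is a generic check, not part of the authors' analysis pipeline, and the values it yields for the latency ANOVA are close to the reported ηp².

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared recovered from an F ratio and its degrees of freedom.

    Follows from eta_p^2 = SS_effect / (SS_effect + SS_error) and
    F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    num = f * df_effect
    return num / (num + df_error)


# Latency ANOVA: sound category F(3,171) = 8.3; group x category F(6,171) = 9.95
print(round(partial_eta_squared(8.3, 3, 171), 3))   # → 0.127
print(round(partial_eta_squared(9.95, 6, 171), 3))  # → 0.259
```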

Figure 2. Latency in action-sound discrimination. The mean latency for each sound category (upper (URAS) and lower (LRAS) limb-related action sounds, wheelchair-related action sounds (WRAS), and animal action-related sounds (NHRAS)) in the three subject groups (healthy individuals, physical therapists and individuals with spinal cord injuries). The error bars indicate the standard error of the mean (SEM). The asterisk (*) indicates significant results from the post hoc comparisons (p < 0.05).

No significant latency differences were observed between the lower and upper limb sounds in any of the three groups: healthy subjects (LRAS = 653 msec, URAS = 695 msec), physical therapists (LRAS = 682 msec, URAS = 744 msec), and patients with SCI (all ps > 0.1). Importantly, post hoc comparisons revealed that patients with SCI discriminated the WRAS faster (652 msec) than able-bodied individuals with comparable auditory experience (physical therapists: 813 msec, p < 0.01) and those with no comparable perceptual experience (healthy subjects: 949 msec, p < 0.0001). The RT difference between the physical therapists and healthy subjects was also statistically significant (p < 0.01). Notably, in patients with SCI, RTs for WRAS were comparable to those for upper limb action sounds (URAS = 703 msec, p > 0.26) but not to those for lower limb action sounds (LRAS = 760 msec, p < 0.02). Thus, regular wheelchair use appears to contribute to a specific readiness to recognize the sounds produced by a wheelchair. Faster responses were not accompanied by changes in accuracy, ruling out a speed/accuracy trade-off.

There were also no differences among the three groups in their responses to animal action sounds (p > 0.60). Moreover, no differences in discrimination latency were observed between manual (WHCM) and electric (WHCE) wheelchair sounds or as a function of the position of the correct probe (all p > 0.80).

We also examined whether the time since injury influenced RTs for sound discrimination. No significant correlations were found between the injury-to-testing interval and discrimination latency for any sound category (Spearman correlation analyses, n = 20; LRAS, rs = −0.06, t(18) = −0.28, p > 0.78; URAS, rs = 0.04, t(18) = 0.20, p > 0.84; WRAS, rs = 0.12, t(18) = 0.5, p > 0.62). All patients were in the chronic phase of injury (at least six months post-injury), and the time since injury did not appear to play a major role in the discrimination of wheelchair action sounds, suggesting that the underlying plastic changes could occur rapidly and lead to behavioral gains.
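Each Spearman coefficient above is reported with a t statistic on 18 degrees of freedom (n − 2 with n = 20). A common way to attach such a t value to a correlation coefficient is t = r·√((n − 2)/(1 − r²)); the text does not state which procedure produced the reported t values, so the dependency-free sketch below (rank correlation with average ranks for ties, plus that conversion) should be read as a generic illustration with hypothetical function names.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for a correlation r."""
    return r * math.sqrt((n - 2) / (1 - r * r))


print(round(r_to_t(0.12, 20), 2))  # → 0.51
```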

Taken together, these findings suggest that active use of a sound-producing device, as opposed to mere exposure to its sounds, modulates the readiness to recognize the associated sounds. The inability of patients with SCI to move their lower limbs did not affect their ability to discriminate the sounds of lower limb movements.

Subjective ratings of familiarity and perceived motor reactivity when listening to sounds

At the end of the test, a VAS was used to measure each participant’s perceived motor reactivity and auditory familiarity ratings for each of the four sound categories. The mean VAS ratings are shown in Fig. 3.

Figure 3. Subjective ratings of action-sound familiarity and perceived motor intensity. The mean subjective Visual Analog Scale (VAS) ratings for auditory familiarity and perceived movement for each sound category (upper (URAS) and lower (LRAS) limb-related action sounds, wheelchair-related action sounds (WRAS), and animal action-related sounds (NHRAS)) in the three subject groups (healthy individuals, physical therapists and individuals with spinal cord injuries). The error bars indicate the standard error of the mean (SEM). The asterisks (*) indicate significant results from the post hoc comparisons (p < 0.05).

The ANOVA of perceived motor reactivity ratings (Fig. 3) yielded a significant main effect of sound category (F(3,171) = 13.6, p < 0.0001, ηp² > 0.19). Post hoc testing revealed that perceived motor reactivity during passive listening was higher for human action sounds (LRAS = 6.8, URAS = 5.6; p = 0.01) than for WRAS (4.6; p < 0.02) or NHRAS (4.7; p < 0.03). There was no significant main effect of group (F(2,57) = 1.61, p = 0.20). Crucially, the ANOVA revealed a significant group × sound category interaction (F(6,171) = 16.02, p < 0.0001, ηp² > 0.35). Patients with SCI rated their perceived motor reactivity to sounds implying lower limb movement (2.6 ± 2.4; p = 0.0005) lower than did able-bodied individuals (healthy individuals: LRAS = 7.3 ± 1.7; physical therapists: LRAS = 7.1 ± 2.7), and significantly lower than their own ratings for WRAS (6.9 ± 2.9) and URAS (6.5 ± 3.04) sounds. These results suggest that the absence of motor signals reduces the reactivity with which actions can be perceived from an associated sound48. Conversely, patients with SCI gave higher perceived motor reactivity ratings to WHC sounds (WRAS = 6.9, p < 0.01) than did able-bodied individuals (healthy subjects: WRAS = 2.3 ± 2.4; physical therapists: WRAS = 4.5 ± 2.7).

Despite their aural expertise, the physical therapists' perceived motor reactivity to WRAS sounds was significantly lower than their reactivity to human (URAS = 7.4 ± 2.5, LRAS = 7 ± 2.7; p = 0.0001) and NHRAS (5.1 ± 2.9; p = 0.002) action sounds. Unsurprisingly, healthy subjects were unaccustomed to WRAS sounds: their perceived motor reactivity to them was significantly lower than to human action sounds (URAS = 6.6 ± 2.5, LRAS = 7.3 ± 1.7; p = 0.0001) and comparable to that for NHRAS (3.9 ± 2.8; p = 0.16). No significant differences in perceived motor reactivity were observed between the two groups of able-bodied individuals (p > 0.24).

The ANOVA of subjective familiarity ratings (Fig. 3) revealed a significant main effect of sound category (F(3,171) = 34.8, p < 0.0001, ηp² > 0.37). Specifically, higher VAS ratings were found for human action sounds (LRAS = 8.4, URAS = 8.3) than for non-human action sounds (WRAS = 5.7, NHRAS = 6.7; p < 0.003). There was no significant main effect of group (F(2,57) = 0.66, p = 0.42). However, we did observe a significant group × sound category interaction (F(6,171) = 17.35, p < 0.0001, ηp² > 0.37). Physical therapists (7.1 ± 1.7) and SCI patients (6.9 ± 2.9) had similar levels of familiarity with WRAS action sounds (p > 0.37), and in both groups this level did not differ significantly from their familiarity with human lower limb (physical therapists: LRAS = 8.18 ± 2; SCI patients: LRAS = 7.6 ± 2.3) and upper limb (physical therapists: URAS = 8.17 ± 1.7; SCI patients: URAS = 8.2 ± 1.9) action sounds or with non-human action sounds (physical therapists: NHRAS = 6.9 ± 1.6; SCI patients: NHRAS = 6.7 ± 2.4; all p > 0.14).

As expected, in the group of healthy subjects, the familiarity ratings for WRAS action sounds (2.6 ± 1.9) were significantly lower than those for human (URAS = 8.9 ± 1.3, LRAS = 9.2 ± 0.8; p = 0.0001) and non-human (NHRAS = 6.4 ± 1.7; p = 0.0001) action sounds. Their familiarity rating for WRAS was also significantly lower than those of the physical therapists and SCI patients (all p < 0.0001).

The subjects were also briefly interviewed about their feelings regarding the auditory stimuli. A “yes” or “no” response was required for the following questions: (1) “Do you pay more attention to a specific category of auditory stimuli?” and (2) “Do you feel more emotional participation when hearing a particular sound category?” In the case of a “yes” response, the subject was asked to explain the answer. Sound discrimination does not appear to be explained by category-specific attentional biases: all subjects stated that they did not pay particular attention to any specific sound category. Presenting the auditory stimuli in random order may have prevented the subjects from focusing their attention on a particular category. Four SCI patients reported greater emotional participation when hearing lower limb sounds, and another three reported greater emotional participation when hearing animal sounds. Only one SCI patient and one physical therapist (who was married to an individual with SCI) experienced more emotional involvement when hearing the WHC sounds than the other sound categories.

Together, these findings suggest that although the SCI patients and auditory experts (i.e. physical therapists) reported the same degree of familiarity with WHC sounds, the three groups differed most in the subjective motor experiences associated with WHC and lower limb action sounds.

Discussion

Many theories have proposed an association between the perception and execution of actions, suggesting that both are coded in a common representational format49,50,51,52,53,54,55. Neural studies in healthy16,56,57 and brain-damaged58,59 individuals indicate that action perception and execution rely on largely overlapping neural substrates. Reduced corticospinal motor reactivity to the sight24,25 and sound25,26 of actions has been found in individuals with congenital deafness and blindness, respectively. Importantly, it is unclear whether lifelong (lower limb) and newly acquired (wheelchair) mobility experiences differentially affect the integrity of action-perception mapping. The present study investigated action sound mapping in SCI patients and revealed two key findings. First, SCIs that induce a total loss of lower limb function do not lead to a general reduction in the perceptual-motor mapping of lower limb action sounds. Second, we provided empirical evidence that sounds related to their wheelchairs were more salient to patients with SCI than to the physical therapists who worked with wheelchair users.

Multimodal coding of long-term action in the complete absence of motor mediation

Alterations of the action network involved in the perception of human motor actions may occur in the absence of a cortical lesion, as in blind24,26, deaf25 and SCI28,60 individuals. This prompted us to investigate whether somatosensory deafferentation and motor deefferentation of specific body parts alter the audio-motor mapping of actions performed with the affected body part. Thoracic and lumbar SCIs lead to a loss of movement in the legs while sparing arm function. Consequently, these injuries offer an ideal model for exploring how action sounds associated with the upper and lower limbs are processed.

As mentioned previously, patients with SCI exhibit reduced visual perceptual sensitivity to the biological motion of whole-body point-light displays28 and specific impairments in the visual perception of form and action involving disconnected body parts27. In this study, we expected that the processing of sounds depicting upper limb actions would be unimpaired, whereas the processing of sounds depicting lower limb actions might be degraded. However, we obtained psychophysical evidence that paraplegic patients recognize lower and upper limb actions as efficiently as able-bodied individuals. Moreover, in SCI individuals, the ability to discriminate upper and lower limb action sounds was comparable. The inability to move and feel the lower limbs did not lead to a deficit in the auditory discrimination of their actions, even several years after the injury.

Several mechanisms may explain the preservation of perceptual signaling referring to the paralyzed portion of the body following SCI. Even with a long history of absent sensation and movement after injury, accurate perceptual discrimination may be mediated by long-term motor representations that were learned before the injury. Studies of amputees have revealed that perceptual sensitivity associated with the missing limb remains accurate61, including in processes that require motor simulation62. Additionally, studies of expression recognition in clinical conditions such as Möbius sequence have provided evidence that feedback from facial movement is not necessary for recognizing facial expressions, contrary to the strongest form of embodied simulation theory63.

It is important to note that the sounds used in the present study are highly relevant to everyday motor functions that the patients had performed regularly before their injury. These representations could also be updated and reinforced through visual and acoustic experiences of ambulatory individuals encountered in daily life. Action-related networks may thus be activated to mediate the representation of limb action sounds even if the corresponding motor plans have not been used for years. Accordingly, the brain regions involved in foot movements appear to remain relatively preserved and active even years after the body has been massively deafferented/deefferented64,65,66,67,68,69.

All of the patients recruited into our study were also involved in a motor program at a rehabilitation center. As part of this program, which included motor imagery, they attempted natural movements and exercise training, including attempts at moving the foot and, to a lesser degree, walking. Consistent with this, recent neuroimaging studies have demonstrated that a common observation-execution network, including the ventral premotor cortex, parietal cortex and cerebellum, is activated at normal levels by attempts to move a given body part and by observation of other individuals' movements, long after the onset of complete SCI67,68,70,71,72.

Studies of either virtual (via transcranial magnetic stimulation) or natural lesions have demonstrated the essential role of fronto-parietal regions in mediating the auditory and visual processing of body actions13,15,73,74,75. The preservation of perceptual ability in SCI patients suggests that the cortical regions involved in action simulation could play a compensatory role, helping to maintain intact audio-motor resonance in patients with impaired lower limb motor function. However, the presence of intact audio-motor mapping in patients with profoundly impaired body-brain communication may conflict with findings from studies of visual-motor action translation in SCI patients27,28. One way to reconcile this potential discrepancy concerns the quality of the experience of actions mediated by visual vs. auditory inputs. Whereas vision allows one to directly simulate a specific action (e.g., grasping an object), auditory input may elicit the simulation of more than one action compatible with the sound heard (e.g., clapping performed in different ways), enabling the heard action to be simulated in multiple, indirect ways and allowing greater compensatory flexibility19. Moreover, whereas we used ecologically relevant sounds of daily human actions, the perceptual alterations reported in previous SCI studies emerged only with more demanding tasks requiring the recognition of unnatural stimuli (e.g., the direction of motion of a point-light display28 or a humanoid form assuming a sports posture27). Embodied simulation may not be necessary for the most basic perceptual processes63.

Does the auditory coding of actions evoked by assistive tools trigger embodied simulation?

The present study investigated action sound mapping in individuals with SCI who have functioning upper limbs and nonfunctioning lower limbs and who have regained mobility using an assistive tool, namely a wheelchair. We provide the first psychophysical evidence that patients with SCI distinguish WHC sounds from other distracting sounds more rapidly than individuals with no direct perceptual or motor experience with a wheelchair. Notably, the ability of the audio-motor system to distinguish wheelchair actions is consistent with the greater motor reactivity perceived when passively listening to WHC sounds. These findings suggest that active use of a wheelchair, as opposed to mere passive perceptual exposure to it, modulates the readiness to recognize its associated sounds. Visual and auditory familiarity with wheelchairs, although not essential, also appears to play a role in distinguishing their sounds, as indicated by the enhanced WHC sound discrimination of physical therapists compared with healthy subjects. However, acquiring motor skills through physical rather than merely perceptual practice may entail a highly selective coupling of perceptual and motor information.

Processing wheelchair sounds may involve associating them with motor representations of the upper limb manipulations that produce those sounds, in order to plan accurate wheelchair movements; this emphasizes the intimate relationship between perceptual and motor systems. Thus, these patients' ability to quickly extract and distinguish relevant WHC sounds may depend less on familiarity with the acoustic characteristics of their assistive tool than on the relationship between the sound and their experience with the probable actions involved in wheelchair-executed motion76. Additionally, when a tool extends the movement potential of a physically impaired individual, it may be incorporated into the internal representation of the body schema to meet the novel demands imposed by immobile limbs, a phenomenon called tool embodiment. Perceptual-motor practice with specific objects has been shown to induce long-term structural changes in monkey77 and human40,78 body representations. Thus, the faster responses to wheelchair sounds could be attributed to the integration of the signals (and their interactions) through which the person actively controls and perceives the tool, and to the embodiment of the instrument into the person's motor abilities, including the perceptual features of the wheelchair42,79,80. As posited by previous theoretical34,81 and quantitative38,41,82,83 studies, the acquisition of wheelchair skills by SCI patients alters their body schema by adding corporeal awareness of the device. That is, tools that have been in contact with the body recalibrate multisensory representations84,85 and induce short- and long-term neuroplastic changes in the motor system86 following active (rather than passive) use87,88,89.

In this sense, the tool becomes part of the body in action and of the person, modifying the way the person perceives38, moves in90 and relates to the world34. Although tool incorporation operates at different levels of complexity, the embodiment of and sense of agency over devices could enable intuitive control and could facilitate learning, user efficiency and the acceptance of new assistive devices7,12,13,14. At present, however, embodied technology remains a concept that needs to be explored experimentally in order to forge new rehabilitative opportunities33,40. Accordingly, multimodal modulation, and not only visual modulation, may be a viable intervention for tool rehabilitation and treatment following SCI91,92.

Data Availability

Data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Barsalou, L. W. Grounded cognition. Annu Rev Psychol 59, 617–645, https://doi.org/10.1146/annurev.psych.59.103006.093639 (2008).

Gallese, V. Embodied simulation: from mirror neuron systems to interpersonal relations. Novartis Found Symp 278, 3–12; discussion 12–19, 89–96, 216–221 (2007).

Gallese, V., Fadiga, L., Fogassi, L. & Rizzolatti, G. Action recognition in the premotor cortex. Brain 119(Pt 2), 593–609 (1996).

Fogassi, L. et al. Parietal lobe: from action organization to intention understanding. Science 308, 662–667, https://doi.org/10.1126/science.1106138 (2005).

Rizzolatti, G. & Craighero, L. The mirror-neuron system. Annual review of neuroscience 27, 169–192, https://doi.org/10.1146/annurev.neuro.27.070203.144230 (2004).

Van Overwalle, F. & Baetens, K. Understanding others’ actions and goals by mirror and mentalizing systems: a meta-analysis. NeuroImage 48, 564–584, https://doi.org/10.1016/j.neuroimage.2009.06.009 (2009).

Hommel, B., Musseler, J., Aschersleben, G. & Prinz, W. The Theory of Event Coding (TEC): a framework for perception and action planning. The Behavioral and brain sciences 24, 849–878; discussion 878–937 (2001).

Schutz-Bosbach, S. & Prinz, W. Perceptual resonance: action-induced modulation of perception. Trends in cognitive sciences 11, 349–355, https://doi.org/10.1016/j.tics.2007.06.005 (2007).

Stefan, K. et al. Formation of a motor memory by action observation. The Journal of neuroscience 25, 9339–9346, https://doi.org/10.1523/JNEUROSCI.2282-05.2005 (2005).

Casile, A. & Giese, M. A. Nonvisual motor training influences biological motion perception. Current biology 16, 69–74, https://doi.org/10.1016/j.cub.2005.10.071 (2006).

Pazzaglia, M. Does what you hear predict what you will do and say? The Behavioral and brain sciences, 42–43, https://doi.org/10.1017/S0140525X12002804 (2013).

Aglioti, S. M. & Pazzaglia, M. Representing actions through their sound. Exp Brain Res 206, 141–151, https://doi.org/10.1007/s00221-010-2344-x (2010).

Aziz-Zadeh, L., Iacoboni, M., Zaidel, E., Wilson, S. & Mazziotta, J. Left hemisphere motor facilitation in response to manual action sounds. The European journal of neuroscience 19, 2609–2612, https://doi.org/10.1111/j.0953-816X.2004.03348.x (2004).

Alaerts, K., Swinnen, S. P. & Wenderoth, N. Interaction of sound and sight during action perception: evidence for shared modality-dependent action representations. Neuropsychologia 47, 2593–2599, https://doi.org/10.1016/j.neuropsychologia.2009.05.006 (2009).

Ticini, L. F., Schutz-Bosbach, S., Weiss, C., Casile, A. & Waszak, F. When sounds become actions: higher-order representation of newly learned action sounds in the human motor system. Journal of cognitive neuroscience 24, 464–474, https://doi.org/10.1162/jocn_a_00134 (2012).

Gazzola, V., Aziz-Zadeh, L. & Keysers, C. Empathy and the somatotopic auditory mirror system in humans. Current biology 16, 1824–1829, https://doi.org/10.1016/j.cub.2006.07.072 (2006).

Lahav, A., Saltzman, E. & Schlaug, G. Action representation of sound: audiomotor recognition network while listening to newly acquired actions. The Journal of neuroscience 27, 308–314, https://doi.org/10.1523/JNEUROSCI.4822-06.2007 (2007).

De Lucia, M., Camen, C., Clarke, S. & Murray, M. M. The role of actions in auditory object discrimination. NeuroImage 48, 475–485, https://doi.org/10.1016/j.neuroimage.2009.06.041 (2009).

Aglioti, S. M. & Pazzaglia, M. Sounds and scents in (social) action. Trends in cognitive sciences 15, 47–55, https://doi.org/10.1016/j.tics.2010.12.003 (2011).

Schubotz, R. I., von Cramon, D. Y. & Lohmann, G. Auditory what, where, and when: a sensory somatotopy in lateral premotor cortex. NeuroImage 20, 173–185 (2003).

Lewis, J. W., Phinney, R. E., Brefczynski-Lewis, J. A. & DeYoe, E. A. Lefties get it “right” when hearing tool sounds. Journal of cognitive neuroscience 18, 1314–1330, https://doi.org/10.1162/jocn.2006.18.8.1314 (2006).

Pazzaglia, M. Impact Commentaries. Action discrimination: impact of apraxia. J Neurol Neurosurg Psychiatry 84, 477–478, https://doi.org/10.1136/jnnp-2012-304817 (2013).

Pazzaglia, M., Pizzamiglio, L., Pes, E. & Aglioti, S. M. The sound of actions in apraxia. Current biology 18, 1766–1772, https://doi.org/10.1016/j.cub.2008.09.061 (2008).

Ricciardi, E. et al. Do we really need vision? How blind people “see” the actions of others. The Journal of neuroscience 29, 9719–9724, https://doi.org/10.1523/JNEUROSCI.0274-09.2009 (2009).

Alaerts, K., Swinnen, S. P. & Wenderoth, N. Action perception in individuals with congenital blindness or deafness: how does the loss of a sensory modality from birth affect perception-induced motor facilitation? Journal of cognitive neuroscience 23, 1080–1087, https://doi.org/10.1162/jocn.2010.21517 (2011).

Lewis, J. W. et al. Cortical network differences in the sighted versus early blind for recognition of human-produced action sounds. Human brain mapping 32, 2241–2255, https://doi.org/10.1002/hbm.21185 (2011).

Pernigo, S. et al. Massive somatic deafferentation and motor deefferentation of the lower part of the body impair its visual recognition: a psychophysical study of patients with spinal cord injury. The European journal of neuroscience. https://doi.org/10.1111/j.1460-9568.2012.08266.x (2012).

Arrighi, R., Cartocci, G. & Burr, D. Reduced perceptual sensitivity for biological motion in paraplegia patients. Current biology 21, R910–911, https://doi.org/10.1016/j.cub.2011.09.048 (2011).

Makin, T. R. et al. Phantom pain is associated with preserved structure and function in the former hand area. Nature communications 4, 1570, https://doi.org/10.1038/ncomms2571 (2013).

Makin, T. R., Scholz, J., Henderson Slater, D., Johansen-Berg, H. & Tracey, I. Reassessing cortical reorganization in the primary sensorimotor cortex following arm amputation. Brain 138, 2140–2146, https://doi.org/10.1093/brain/awv161 (2015).

Makin, T. R. & Bensmaia, S. J. Stability of Sensory Topographies in Adult Cortex. Trends in cognitive sciences 21, 195–204, https://doi.org/10.1016/j.tics.2017.01.002 (2017).

Pereira, B. P., Kour, A. K., Leow, E. L. & Pho, R. W. Benefits and use of digital prostheses. The Journal of hand surgery 21, 222–228, https://doi.org/10.1016/S0363-5023(96)80104-3 (1996).

Serino, A., Bassolino, M., Farne, A. & Ladavas, E. Extended multisensory space in blind cane users. Psychological science 18, 642–648, https://doi.org/10.1111/j.1467-9280.2007.01952.x (2007).

Papadimitriou, C. Becoming en-wheeled: The situated accomplishment of re-embodiment as a wheelchair user after spinal cord injury. Disability & Society 23, 691–704 (2008).

Longo, M. R. & Serino, A. Tool use induces complex and flexible plasticity of human body representations. The Behavioral and brain sciences 35, 229–230, https://doi.org/10.1017/S0140525X11001907 (2012).

Farne, A. & Ladavas, E. Dynamic size-change of hand peripersonal space following tool use. Neuroreport 11, 1645–1649 (2000).

Lenggenhager, B., Pazzaglia, M., Scivoletto, G., Molinari, M. & Aglioti, S. M. The sense of the body in individuals with spinal cord injury. PLoS One 7, e50757, https://doi.org/10.1371/journal.pone.0050757 (2012).

Fuentes, C. T., Pazzaglia, M., Longo, M. R., Scivoletto, G. & Haggard, P. Body image distortions following spinal cord injury. J Neurol Neurosurg Psychiatry 84, 201–207, https://doi.org/10.1136/jnnp-2012-304001 (2013).

Iriki, A., Tanaka, M. & Iwamura, Y. Coding of modified body schema during tool use by macaque postcentral neurones. Neuroreport 7, 2325–2330 (1996).

Bassolino, M., Serino, A., Ubaldi, S. & Ladavas, E. Everyday use of the computer mouse extends peripersonal space representation. Neuropsychologia 48, 803–811, https://doi.org/10.1016/j.neuropsychologia.2009.11.009 (2010).

Pazzaglia, M., Galli, G., Scivoletto, G. & Molinari, M. A Functionally Relevant Tool for the Body following Spinal Cord Injury. PloS one 8, e58312, https://doi.org/10.1371/journal.pone.0058312 (2013).

Galli, G., Lenggenhager, B., Scivoletto, G., Molinari, M. & Pazzaglia, M. Don’t look at my wheelchair! The plasticity of longlasting prejudice. Med Educ 49, 1239–1247, https://doi.org/10.1111/medu.12834 (2015).

Oldfield, R. C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

Marino, R. J. et al. International standards for neurological classification of spinal cord injury. The journal of spinal cord medicine 26, Suppl 1, S50–56 (2003).

Catz, A. & Itzkovich, M. Spinal Cord Independence Measure: comprehensive ability rating scale for the spinal cord lesion patient. Journal of rehabilitation research and development 44, 65–68 (2007).

Ratcliff, R. Methods for dealing with reaction time outliers. Psychological bulletin 114, 510–532 (1993).

Cohen, J. Eta-squared and partial eta-squared in fixed factor ANOVA designs. Educational and Psychological Measurement 33, 107–112 (1973).

de Vignemont, F. Embodiment, ownership and disownership. Consciousness and cognition 20, 82–93, https://doi.org/10.1016/j.concog.2010.09.004 (2011).

Prinz, W. Perception and action planning. European Journal of Cognitive Psychology 9, 129–154 (1997).

Brass, M., Bekkering, H. & Prinz, W. Movement observation affects movement execution in a simple response task. Acta psychologica 106, 3–22 (2001).

Brass, M., Bekkering, H., Wohlschlager, A. & Prinz, W. Compatibility between observed and executed finger movements: comparing symbolic, spatial, and imitative cues. Brain Cogn 44, 124–143, https://doi.org/10.1006/brcg.2000.1225 (2000).

Craighero, L., Bello, A., Fadiga, L. & Rizzolatti, G. Hand action preparation influences the responses to hand pictures. Neuropsychologia 40, 492–502 (2002).

Kilner, J. M., Paulignan, Y. & Blakemore, S. J. An interference effect of observed biological movement on action. Current biology 13, 522–525 (2003).

Sturmer, B., Aschersleben, G. & Prinz, W. Correspondence effects with manual gestures and postures: a study of imitation. Journal of experimental psychology. Human perception and performance 26, 1746–1759 (2000).

Repp, B. H. & Knoblich, G. Action can affect auditory perception. Psychological science 18, 6–7, https://doi.org/10.1111/j.1467-9280.2007.01839.x (2007).

Rizzolatti, G., Fadiga, L., Gallese, V. & Fogassi, L. Premotor cortex and the recognition of motor actions. Cognitive brain research 3, 131–141 (1996).

Doehrmann, O., Weigelt, S., Altmann, C. F., Kaiser, J. & Naumer, M. J. Audiovisual functional magnetic resonance imaging adaptation reveals multisensory integration effects in object-related sensory cortices. The Journal of neuroscience 30, 3370–3379, https://doi.org/10.1523/JNEUROSCI.5074-09.2010 (2010).

Pazzaglia, M., Smania, N., Corato, E. & Aglioti, S. M. Neural underpinnings of gesture discrimination in patients with limb apraxia. The Journal of neuroscience 28, 3030–3041, https://doi.org/10.1523/JNEUROSCI.5748-07.2008 (2008).

Pazzaglia, M. & Galli, G. Translating novel findings of perceptual-motor codes into the neuro-rehabilitation of movement disorders. Front Behav Neurosci 9, 222, https://doi.org/10.3389/fnbeh.2015.00222 (2015).

Pazzaglia, M. et al. Phantom limb sensations in the ear of a patient with a brachial plexus lesion. Cortex, https://doi.org/10.1016/j.cortex.2018.08.020 (2018).

Nico, D., Daprati, E., Rigal, F., Parsons, L. & Sirigu, A. Left and right hand recognition in upper limb amputees. Brain 127, 120–132, https://doi.org/10.1093/brain/awh006 (2004).

Fiorio, M., Tinazzi, M. & Aglioti, S. M. Selective impairment of hand mental rotation in patients with focal hand dystonia. Brain 129, 47–54, https://doi.org/10.1093/brain/awh630 (2006).

Bate, S., Cook, S. J., Mole, J. & Cole, J. First report of generalized face processing difficulties in mobius sequence. PloS one 8, e62656, https://doi.org/10.1371/journal.pone.0062656 (2013).

Corbetta, M. et al. Functional reorganization and stability of somatosensory-motor cortical topography in a tetraplegic subject with late recovery. Proceedings of the National Academy of Sciences of the United States of America 99, 17066–17071, https://doi.org/10.1073/pnas.262669099 (2002).

Cramer, S. C., Lastra, L., Lacourse, M. G. & Cohen, M. J. Brain motor system function after chronic, complete spinal cord injury. Brain 128, 2941–2950, https://doi.org/10.1093/brain/awh648 (2005).

Cramer, S. C., Orr, E. L., Cohen, M. J. & Lacourse, M. G. Effects of motor imagery training after chronic, complete spinal cord injury. Experimental brain research 177, 233–242, https://doi.org/10.1007/s00221-006-0662-9 (2007).

Hotz-Boendermaker, S., Hepp-Reymond, M. C., Curt, A. & Kollias, S. S. Movement observation activates lower limb motor networks in chronic complete paraplegia. Neurorehabilitation and neural repair 25, 469–476, https://doi.org/10.1177/1545968310389184 (2011).

Hotz-Boendermaker, S. et al. Preservation of motor programs in paraplegics as demonstrated by attempted and imagined foot movements. NeuroImage 39, 383–394, https://doi.org/10.1016/j.neuroimage.2007.07.065 (2008).

Alkadhi, H. et al. What disconnection tells about motor imagery: evidence from paraplegic patients. Cereb Cortex 15, 131–140, https://doi.org/10.1093/cercor/bhh116 (2005).

Mattia, D. et al. Motor cortical responsiveness to attempted movements in tetraplegia: evidence from neuroelectrical imaging. Clinical neurophysiology 120, 181–189, https://doi.org/10.1016/j.clinph.2008.09.023 (2009).

Mattia, D. et al. Motor-related cortical dynamics to intact movements in tetraplegics as revealed by high-resolution EEG. Human brain mapping 27, 510–519, https://doi.org/10.1002/hbm.20195 (2006).

Truccolo, W., Friehs, G. M., Donoghue, J. P. & Hochberg, L. R. Primary motor cortex tuning to intended movement kinematics in humans with tetraplegia. The Journal of neuroscience 28, 1163–1178, https://doi.org/10.1523/JNEUROSCI.4415-07.2008 (2008).

Moro, V. et al. The neural basis of body form and body action agnosia. Neuron 60, 235–246, https://doi.org/10.1016/j.neuron.2008.09.022 (2008).

Fazio, P. et al. Encoding of human action in Broca’s area. Brain 132, 1980–1988, https://doi.org/10.1093/brain/awp118 (2009).

Pazzaglia, M. & Galli, G. Loss of agency in apraxia. Front Hum Neurosci 8, 751, https://doi.org/10.3389/fnhum.2014.00751 (2014).

Kording, K. P. & Wolpert, D. M. The loss function of sensorimotor learning. Proceedings of the National Academy of Sciences of the United States of America 101, 9839–9842, https://doi.org/10.1073/pnas.0308394101 (2004).

Quallo, M. M. et al. Gray and white matter changes associated with tool-use learning in macaque monkeys. Proceedings of the National Academy of Sciences of the United States of America 106, 18379–18384, https://doi.org/10.1073/pnas.0909751106 (2009).

Aglioti, S. M., Cesari, P., Romani, M. & Urgesi, C. Action anticipation and motor resonance in elite basketball players. Nature neuroscience 11, 1109–1116 (2008).

Galli, G. & Pazzaglia, M. Commentary on: “The body social: an enactive approach to the self”. A tool for merging bodily and social self in immobile individuals. Front Psychol 6, 305, https://doi.org/10.3389/fpsyg.2015.00305 (2015).

Pazzaglia, M. & Molinari, M. The embodiment of assistive devices-from wheelchair to exoskeleton. Phys Life Rev 16, 163–175, https://doi.org/10.1016/j.plrev.2015.11.006 (2016).

Standal, O. F. Re-embodiment: incorporation through embodied learning of wheelchair skills. Medicine, health care, and philosophy 14, 177–184, https://doi.org/10.1007/s11019-010-9286-8 (2011).

Arnhoff, F. & Mehl, M. Body image deterioration in paraplegia. Journal of Nervous & Mental Disease 137, 88–92 (1963).

Higuchi, T., Hatano, N., Soma, K. & Imanaka, K. Perception of spatial requirements for wheelchair locomotion in experienced users with tetraplegia. J Physiol Anthropol 28, 15–21 (2009).

Miller, L. E., Cawley-Bennett, A., Longo, M. R. & Saygin, A. P. The recalibration of tactile perception during tool use is body-part specific. Experimental brain research 235, 2917–2926, https://doi.org/10.1007/s00221-017-5028-y (2017).

Miller, L. E., Longo, M. R. & Saygin, A. P. Tool morphology constrains the effects of tool use on body representations. Journal of experimental psychology. Human perception and performance 40, 2143–2153, https://doi.org/10.1037/a0037777 (2014).

Giraux, P., Sirigu, A., Schneider, F. & Dubernard, J. M. Cortical reorganization in motor cortex after graft of both hands. Nature neuroscience 4, 691–692, https://doi.org/10.1038/89472 (2001).

Cardinali, L. et al. Tool-use induces morphological updating of the body schema. Current biology 19, R478–479, https://doi.org/10.1016/j.cub.2009.05.009 (2009).

Cardinali, L. et al. Grab an object with a tool and change your body: tool-use-dependent changes of body representation for action. Experimental brain research 218, 259–271, https://doi.org/10.1007/s00221-012-3028-5 (2012).

Pazzaglia, M. Body and Odors: Not Just Molecules, After All. Current Directions in Psychological Science 24, 329–333 (2015).

Olsson, C. J. Complex motor representations may not be preserved after complete spinal cord injury. Experimental neurology 236, 46–49, https://doi.org/10.1016/j.expneurol.2012.03.022 (2012).

Moseley, G. L. Using visual illusion to reduce at-level neuropathic pain in paraplegia. Pain 130, 294–298, https://doi.org/10.1016/j.pain.2007.01.007 (2007).

Pazzaglia, M., Haggard, P., Scivoletto, G., Molinari, M. & Lenggenhager, B. Pain and somatic sensation are transiently normalized by illusory body ownership in a patient with spinal cord injury. Restor Neurol Neurosci 34, 603–613, https://doi.org/10.3233/RNN-150611 (2016).

Acknowledgements

The authors acknowledge research support from the International Foundation for Research in Paraplegie [P133]; EU Information and Communication Technologies Grant [VERE project, FP7-ICT-2009-5, Prot. Num. 257695] and ANIA Foundation. We are profoundly grateful for the collaboration of all patients with spinal cord injury and physical therapists who participated in the study.

Author information

Contributions

M.P. study concept and experimental design, data analysis and interpretation, manuscript drafting, obtaining funding. G.G. data acquisition, data analysis and interpretation, manuscript drafting. J.W.L. selection of stimuli, data interpretation, manuscript drafting. G.S. performed acquisition of neurologic data, critical revision of manuscript for intellectual content. A.G. and M.M. critical revision of manuscript for intellectual content.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pazzaglia, M., Galli, G., Lewis, J.W. et al. Embodying functionally relevant action sounds in patients with spinal cord injury. Sci Rep 8, 15641 (2018). https://doi.org/10.1038/s41598-018-34133-z

DOI: https://doi.org/10.1038/s41598-018-34133-z