Abstract

Module or community structures widely exist in complex networks, and optimizing statistical measures is one of the most popular approaches for revealing and identifying such structures in real-world applications. In this paper, we focus on critical behaviors of (Quasi-)Surprise, a type of statistical measure of interest for community structure, accompanied by a series of comparisons with other measures. Specially, the effect of various network parameters on the measures is thoroughly investigated. The critical number of dense subgraphs in partition transition is derived, and a kind of phase diagrams is provided to display and compare the phase transitions of the measures. The effect of “potential well” for (Quasi-)Surprise is revealed, which may be difficult to get across by general greedy (agglomerative or divisive) algorithms. Finally, an extension of Quasi-Surprise is introduced for the study of multi-scale structures. Experimental results are of help for understanding the critical behaviors of (Quasi-)Surprise, and may provide useful insight for the design of effective tools for community detection.

Similar content being viewed by others

Introduction

Networks with complex architectures of connections are ubiquitous in nature. Biological, technological and social networks are found to exhibit many common topological properties, such as clustering, correlation and modularity1. The latter feature means that the networks often consist of communities, clusters or modules, i.e., groups of vertices within which connections are very dense while between which they are sparser1. Communities are closely related to real functional groupings in real-world systems2,3 and can affect dynamical behaviors such as information diffusions and synchronizations4,5,6. Because of the relevance in practical applications, many methods have been proposed to detect graph’s communities, such as spectral analysis7,8, random walks9,10,11,12, synchronization13,14, diffusion15, statistical models16,17, label propagation18,19,20,21, optimization22,23,24,25,26 and others27,28,29,30,31,32,33,34,35. Most of them consist in optimizing quality functions that can capture the intuition of community structures, such as Modularity36, Hamiltonians16,37, (Quasi-)Surprise (Sq)38,39,40, Significance (Sg)41 and “fitness” functions42,43.

For real applications, it becomes crucial to analyze the methods’ behavior in depth. For instance, some methods, e.g. based on modularity optimization and Bayesian inference, were shown to have phase transitions from detectable to undetectable structures, which actually constitute a limitation for their achievable performance44,45,46. On the other hand, the limits of Modularity, such as the resolution limit47,48,49, implies the possible existence of multi-scale structures in networks, and promoted the proposal of various (improved) methods, especially the multi-resolution Modularity or Potts-based Hamiltonians50,51,52,53,54. Various approaches were proposed to improve the Modularity-based methods55,56. For example, Lai et al. improved the Modularity-based belief propagation method by using the correlation between communities to improve the estimating of number of communities56.

Surprise (Sp) is a recently proposed statistical measure of interest for community structure, which is defined as the minus logarithm of the probability that the number of intra-community links larger or equal to the observed one is found in random networks, according to a cumulative hyper-geometric distribution38. While it has been shown to have good performance in many networks38,39,57, it is inherently applicable only to un-weighted networks and it is not easy to be optimized directly due to computational complexity caused by complex nonlinear factors. Recently, Traag et al.40 proposed a kind of accurate asymptotic approximation for Surprise (a method called Quasi-Surprise, Sq). This makes Surprise able to treat weighted networks naturally, and more accessible for theoretical analysis and efficient optimization.

So far, there is less work on the behaviors of (Quasi-)Surprise, though it has been applied to the investigation of module structures. In many networks, by comparing with Modularity as a reference, (Quasi-)Surprise seems to be immune to the resolution limit, because it has super high resolution, and thus it is able to discover the underlying community structure better. However, there may be misunderstanding needed to be clarified here. Moreover, a kind of “potential well” effect is observed in (Quasi-)Surprise, while not in Modularity and Significance. It may make (Quasi-)Surprise more difficult to be optimized than other measures such as Modularity, even if optimization for the measures remains NP-hard.

To analyze the critical behaviors of (Quasi-)Surprise in community detection, we firstly study the effect of the various network parameters on (Quasi-) Surprise, derive the critical number of dense subgraphs in merging/splitting of communities, and provide a kind of phase diagrams to display the parameter regions of dense subgraphs merging, accompanied by a series of comparisons with other methods, e.g. Modularity and Significance. And then, we will show that single group of dense subgraphs merging may be more difficult, due to the effect of “potential well” on optimization algorithms, where (Quasi-)Surprise is not a monotonic function of the number of dense subgraphs merging. This may lead to “false” optimal solutions for (Quasi-)Surprise. Moreover, we further show that the heterogeneity of degrees and community sizes will quicken the splitting of communities. Finally, we propose a kind of multi-scale version of Quasi-Surprise as well as Significance to deal with multi-scale networks.

Results

Effect of network parameters

For convenience, we have constructed a set of single-level networks with r “dense subgraphs” (or called “predefined communities”) that are placed at a circle and are connected to adjacent “dense subgraphs” (see Method and Fig. 1A). In those networks, let us consider a community partition that consists of r/x communities, where each of these communities contains x adjacent and dense subgraphs (or say, predefined communities), denoted by Partition X (see Fig. 1A for an example of a partition with X = 3). The pre-defined partition in the network is a special case for x = 1. For Partition X in the networks, the probability that a link exists within a community can be written as,

and the expected value of such probability can be written as,

Here, min is the number of existing intra-community links in the partition with r/x communities; m is the number of existing links in the network; nc is the number of vertices in each of dense subgraphs (or say, predefined communities); pin is the probability of linking vertices within the same dense subgraphs; pout is the probability of linking vertices respectively in two adjacent dense subgraphs; Mint is the maximal possible number of intra-community links in the partition; M is the maximal possible number of links in a network. As a result, \(S=mD({q}_{x}||{\bar{q}}_{x})\) for the partition, which is a multivariate function (See Methods for details).

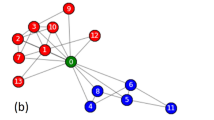

Illustration for networks. (A) A single-level network containing 12 dense subgraphs (i.e. 12 predefined communities), and a community partition consisting of 4 communities (Partition X = 3, marked by dash lines), where each of these communities is formed by the merging of x = 3 adjacent predefined communities. (B) Double-level networks and community structures. (C) LFR network and community structure.

Firstly, we show the relation between Quasi-Surprise and the number x of dense subgraphs being contained in each community of Partition X (Fig. 2). For small r-values, S(x)/S(1) is less than 1 and decreases with x, so there is no merging of dense subgraphs; and as expected, when r is large enough, S(x)/S(1) has a clear peak, so the merging of x dense subgraphs will appear. In the most modular networks (Fig. 2, Right), where clique-like dense subgraphs are connected by only one inter-community link, the merging is very difficult or even almost impossible (because it requires large r-values), but, for other parameters (e.g. Fig. 2, Left), the merging is easier obviously. The difficulty of dense subgraphs merging will greatly reduce with the increase of pout/pin.

Secondly, we compare the normalized S(r)-curves for different x-values (Fig. 3A,B). The S(r)-values increase with r. For small r-values, S(r, x = 1) is larger than others. With the increase of r, S(r, x = 2, 3 and 4) will be larger than others in turn, that is, the merging of (x = 2, 3 and 4) dense subgraphs will be preferred. As in Fig. 2, the merging is very difficult to appear in the most modular networks (Fig. 3A), but it may appear much more easily in other networks (e.g. Fig. 3B).

Thirdly, we show that for different x-values, the (normalized) S-curves decrease with pout/pin (Fig. 3C,D). For small pout/pin-values, S(x = 1) is larger than others, so there is no merging. With the increase of pout/pin, S(x = 2, 3 and 4) will be larger than others in turn, that is, the (x = 2, 3 and 4) merging will be preferred in turn. And for larger-size networks (i.e. larger r-values), the merging will appear more easily.

For the sake of comparison, we also analyzed the effect of network parameters on other quality functions, the original Surprise, Significance and Modularity, which have similar behaviors to Quasi-Surprise (see Figs S1–S3).

Phase transition in merging/disconnecting dense subgraphs

When the number r of dense subgraphs in the networks or pout/pin is large enough, the dense subgraphs may merge. To study the critical number \({r}_{x}^{\ast }\) of dense subgraphs, we firstly analyze the transition of Quasi-Surprise from one partition to another in the single-scale networks (see Method). When \({\rm{\Delta }}S=S(x)-S(1)\ge 0\), the predefined partition was not preferred, compared with Partition X. By solving ΔS = 0 for r, one can obtain,

where \(\beta =\frac{x-1}{x}\frac{2{p}_{out}}{{p}_{in}+2{p}_{out}}\). For comparison, we analytically derive the critical point of Modularity for r/x groups of x dense subgraphs merging in the networks (see Method),

See Fig. 4 and Supplementary information (SI) for the critical points of other measures in the networks.

(A) For Quasi-Surprise and Modularity, relation between critical r-values and x for different pout/pin-values (lines + symbol for Quasi-Surprise, while the corresponding lines for Modularity) in single-level networks. (B) For Quasi-Surprise and Modularity, relation between critical r-values and pout/pin for different x-values in single-level networks. The inset graph is for more explicitly comparison. (lines + symbol for Quasi-Surprise, while the corresponding lines for Modularity (Mod)). “(1 group)” denotes community partitions where there are just several (e.g. x = 2 or 3) “dense subgraphs” merging into 1 group, generating a community with 2 or 3 “dense subgraphs”, and other “dense subgraphs” are still considered as independent communities. (C) For comparison of different methods (Sq, Sp, Sg and Mod), the critical r-values in partition transition as a function of pout/pin in single-level networks. (D) For comparison of different methods, the critical r-values in partition transition as a function of pout1/pin in double-level networks.

For Quasi-Surprise, the critical number \({r}_{x}^{\ast }\) of dense subgraphs increases monotonically with the increase of x for different pout/pin-values (Fig. 4A), meaning that, the larger the x-values, the larger the critical \({r}_{x}^{\ast }\)-values. And \({r}_{x}^{\ast }\) decays quickly with the increase of pout/pin for all x–values (Fig. 4B), meaning that, the larger the pout/pin–values, the more easily the merging appears, in other words / namely, the lower the resolution of it. Moreover, the resolution of Quasi-Surprise is far higher than that of Modularity for small pout/pin–values.

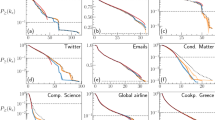

Figure 4C provides a comparison of the critical r-values of various methods (Quasi-Surprise, Surprise, Significance and Modularity) in the single-level networks (see SI). On one hand, the critical r-values of the methods as a function of pout/pin have similar behaviors; on the other hand, the curves of the critical r-values give a precise comparison of the resolutions of the methods.

Figure 4 could be regarded as a kind of phase diagrams in which, below the corresponding curve, the x-community-merging partition is not allowed compared with the pre-defined one. And the phase diagrams show that the appearance of community merging is not as difficult as imagined, e.g., in the most modular networks with small pout/pin-values. Note that, for simplicity, we have only considered the transition from the predefined partition to Partition X. Through more detailed analysis, e.g., comparing Partition X = 3 with Partition X = 2, one can find that the critical r-values will be larger than estimated above (see Method). Moreover, notice Fig. 4D and SI for phase transition of various methods in double-level networks.

We further verify the above conclusions directly by using Louvain algorithm to optimize the quality functions. Table 1 shows that, in the single-level networks, (a) for the fixing number r of dense subgraphs, dense subgraphs merging will appear when pout is large enough for all methods, because the increase of the links between dense subgraphs makes dense subgraphs more and more close together; (b) with the increase of r-values, the needed pout-values for dense subgraphs merging become smaller, meaning that dense subgraphs merging become easier; (c) the effect of “potential well” appears in some cases where f* is less than 1 but there is no merging (see the “potential well” in the following section); (d) Modularity is more inclined to merge dense subgraphs than other methods, while (Quasi-)Surprise and Significance display relatively high resolution (partly due to the effect of “potential” well). (e) By comparing results of different pin-values, the decrease of pin-values will make the needed pout-values and r-values for dense subgraphs merging become smaller (see Tables 1 and S1), and (f) in the double-level networks, there are similar results (see Tables S2 and S3).

Moreover, the above methods are applied to a set of real-word networks (Table S7). Similar to the results in the model networks, Quasi-Surprise has higher resolution than Modularity, and thus it tends to generate more communities in the real-world networks than Modularity. Original Surprise and Significance can find more communities in the networks than other methods, because they have higher resolution than others.

Effect of “potential well” on community detection

Because of nonlinearity of (Quasi-)Surprise, a kind of unexpected phenomenon may appear when one searches the optimal community structure for (Quasi-)Surprise in an agglomerative manner, e.g., by Louvain algorithm. Generally, for some statistical measures, e.g., Modularity, one (or two) set(s) of x-dense subgraphs merging will be allowed, or say it can lead to the increase of the statistical measure, when Partition X is allowed, namely, it can stimulate the increase of the measure. However, it may not be the case for (Quasi-)surprise in some cases, because (Quasi-) Surprise as a function of the number of dense subgraphs merging has obvious region of low S-value, which may be difficult to get across by general greedy (agglomerative or divisive) algorithms. We call the phenomenon as “potential well” effect by borrowing the concept in Quantum mechanics.

Now, consider a partition with only one group of x dense subgraphs merging in the single-level networks. For small increments of q and \(\bar{q}\), by comparing the original partition, the increments of Quasi-Surprise can be estimated by,

where \({\rm{\Delta }}q=\frac{2(x-1){p}_{out}}{{p}_{in}+2{p}_{out}}\cdot \frac{1}{r}\) and \({\rm{\Delta }}\bar{q}=({x}^{2}-x)/r\). By solving ΔS = 0 for r, one can obtain,

Figure 4B clearly shows that the line “x = 2(1 group)” is above the line “x = 2”, and the line “x = 3(1 group)” is above the line “x = 3”, where “(1 group)” denotes community partitions that have only several (e.g. x = 2 or 3) “dense subgraphs” merging into 1 group and other “dense subgraphs” are still separated. This is a counterintuitive result, because single group of x dense subgraphs merging is more difficult to happen than r/x groups of x dense subgraphs merging.

Figure 5A,B confirms the conclusion again. When r > r2, the partition for r/2 groups of 2 dense subgraphs merging already has larger S-value than the predefined one. However, only when \(r > {r}_{2^{\prime} }\), generating one group of 2 dense subgraphs merging is able to be approved in the agglomerative algorithms, because it will lead to the decrease of S before \(r > {r}_{2^{\prime} }\), even if r > r2 (see Fig. 5C–J). This may lead that the expected 2-community-merging partition cannot be found until \(r > {r}_{2^{\prime} }\).

(A,B) Critical points of communities merging in the networks with different parameters. r is the number of dense subgraphs (i.e. predefined communities). ΔS denotes the increment of S. For illustration of the critical points, define the function f as f(ΔS > 0) = 1 and f(ΔS > 0) = 0. “x = 2(1 group)” denotes the partitions where there are only 2 dense subgraphs merging into 1 group, generating a community with 2 dense subgraphs, and other dense subgraphs are considered to be separated communities. (C–J) For Quasi-Surprise, the longitudinal coordinates are the increment of S, normalized by the number of edges in the networks, where S0 is the S-value of the original partition. And the horizontal ordinates are the ratio of the number of communities with 2 dense subgraphs to the number of dense subgraphs in the networks (denoted by Ratio).

Figure 5C–J clearly shows that, the increment of S (ΔS) decreases monotonously with the number of groups of 2 dense subgraphs merging, when r < r2; while it increases monotonously when \(r > {r}_{2^{\prime} }\). In the two cases, generally agglomerative algorithms should be able to find the optimal partition for S. However, when \({r}_{2} < r < {r}_{2^{\prime} }\), there exists “potential well”, where ΔS will firstly decrease and then increase. It is also the case for 3-community merging. It may be difficult for general greedy algorithms to get across the “potential well”. This is frustrating, because this may lead “false” optimal results for some algorithms; while from another viewpoint, this may be a “good” news, because this means that S will be more difficult to encounter the resolution problem than estimated by Equation (3).

Whether could the “potential well” problem be solved by divisive algorithms? The answer may be negative. Because another kind of “potential well” still exists in some cases for the algorithms. As shown in Fig. 5F,J, S of Partition X = 2 is less than that of Partition X = 1, but the disconnecting of dense subgraphs or the decrease of fraction of dense subgraphs merging will lead to the decrease of S. Because of the existence of the “potential well”, general greedy divisive algorithms may be unable to get across the “potential well”, e.g., from Partition X = 2 to Partition X = 1. Maybe only some ergodic but time-consuming algorithms could solve the “potential well” problem to find the “true” optimal partition for Quasi-Surprise.

Does the “potential well” problem also exist in other methods? For comparison, we have confirmed that the original Surprise also has the “potential well”, while it was not found in Significance and Modularity, because of the additional property for communities (see Fig. S4). We have proved strictly that Significance have the same critical point for r/2 groups and single group of 2 dense subgraphs merging,

where \(H(y)=-\,y\,\mathrm{ln}(y)-(1-y)\,\mathrm{ln}(1-y)\), p1 = pi, p2 = (pi + po)/2 and p = (pi + 2po)/r. Modularity also has the same critical point \({r}_{2}={r}_{2^{\prime} }={p}_{in}/{p}_{out}+2\) in the two cases. Therefore they have no “potential well” effect (see SI for the proof).

Table 1 just shows a little sign of the “potential well” effect, therefore we have further tested the “potential well” effect of distinct measures by directly optimizing the measures (Fig. 6). As discussed above, when the number r of dense subgraphs is large enough, S2 (Q2) > S1 (Q1), dense subgraphs should merge. In the networks, for example, the identified partition should have a transition from the first-level partition to the second-level one. However, because of the effect of “potential” well, with the increase of r-values, Quasi-Surprise and Surprise have clear delays for the transition – the identified partition is still the first-level one, though S2 (Q2) > S1 (Q1). While this kind of delay is not observed for Significance and Modularity. This confirms that the effect of “potential well” really exists in Quasi-Surprise and Surprise, while not for significance and modularity.

Effect of “potential” well in community detection. S1/S2, Sd/S2, Q1/Q2 and Q/Q2 as a function of the number of (the first-level) communities in the two-level networks. S1 (Q1), S2 (Q2) and Sd (Qd) denote the values of quality functions respectively for the first-level partition (without communities merging), the second-level partition (with communities merging), and the identified partition by different methods. Each measure is tested in three networks, denoted by I, II and III respectively. The parameters in the networks are set as follows: (A) Quasi-Surprise: pi = 1, pout1 = 0.19(I), 0.20(II) and 0.21(III), pout2 = 0.01. (B) Surprise: pi = 1, pout1 = 0.53(I), 0.54(II) and 0.55(III), pout2 = 0.1. (C) Significance: pi = 1, pout1 = 0.38(I), 0.40(II) and 0.42(III), pout2 = 0.2. (D) Modularity: pi = 1, pout1 = 0.03(I), 0.04(II) and 0.05(III), pout2 = 0.01.

Effect of heterogeneity of vertex degree and community size

To study the effect of heterogeneity of vertex degrees and community sizes on Quasi-Surprise, we further apply various methods to a type of classical modular network, Lancichinetti-Fortunato- Rachicchi (LFR) networks58 (Fig. 1C), which are able to mimic the general properties of many real-world networks, such as the community structures, the heterogeneity of vertex degrees and community sizes.

Firstly, a set of networks with different heterogeneity of vertex degrees and community sizes are generated by the increase of maximal degree and maximal community size. The heterogeneity of the two kinds make the inhomogeneity of link density within communities emerges gradually due to the random fluctuations of links. When the inhomogeneity of link density is large enough in a community, the community may be split. The results (Tables 2 and S4 and Fig. S7) show that, (1) almost all methods work well in homogeneous networks; (2) the heterogeneity of community size leads the splitting of communities (see Sq, Sp, Sg and Mod); (3) while the heterogeneity of degree aggravates the splitting of communities further. (4) The partitions by Sq, Sp and Sg contain more groups of vertices than the pre-defined ones, because they (as well as LP19) are more inclined to split the communities, especially when comparing with other methods (such as Modularity22,36, Infomap10, Walktrap11 and OSLOM59).

Then, we study the effect of the mean degree of the networks. With the decrease of the mean degree of the networks, links within communities become more and more sparse. Thus, the inhomogeneity of link density will emerge gradually in the communities due to the random fluctuations. As a result, communities will also tend to split (see Tables S5 and S6, Figs S8–S11 and the details of analysis).

Further, we also analyzed the effect of the network size on the above results. For Quasi-Surprise, Surprise, Significance and Modularity, with the increase of network size, (1) the tendency to split is also weakened gradually; (2) the difference between identified and pre-defined partition gradually decreases; and (3) NMI gradually increases (see Tables 2 and S4–S6, Figs S8–S11).

Extension to multi-scale networks

Analysis of critical behaviors in multi-scale networks

We further study the critical behaviors of various measures in the networks with two-scale community structures, a generalization of the single-scale networks (Fig. 1B). In the networks, the critical r-value of Sq can be estimated by (see SI for the proof),

For only one group of 2 (first-level) communities merging in the networks,

When pout1 = pout2, the networks are equivalent to the single-scale networks. When pout1 > pout2, two-scale structures emerge. With the decrease of pout2/pin, \({r}_{2}^{\ast }\) decreases. When pout2 = 0, \({r}_{2}^{\ast }\approx \frac{{p}_{out1}}{{p}_{in}}\cdot {(\frac{2{p}_{in}}{{p}_{in}+{p}_{out1}})}^{\frac{{p}_{in}}{{p}_{out1}}+1}\), so the effect of pout2 is limited. \({r}_{2}^{\ast }\)-value is mainly determined by pout1/pin; also, equation (9) has similar behaviors (see Fig. S6). See SI for detailed analysis of critical behaviors of various measures in the networks. We provided a systematical comparison of phase diagrams of various measures (Sq, Sp, Sg and Mod) in the networks (See Figs 4D, S5 and S6).

In the networks, (Quasi-)Surprise also has \({r}_{2} < {r}_{2^{\prime} }\) (Figs 4D, S5 and S6). On the one hand, this means the “potential well” effect still exists when \({r}_{2} < r < {r}_{2^{\prime} }\), and thus general greedy optimization algorithm may prefer either the first-level partition (for agglomerative algorithms, see Fig. 6) or the second-level partition (for divisive algorithms), leading to “false” optimal solutions. So the identified level depends on applied algorithms.

On the other hand, the partition of which level is identified is closely related to the number of communities in the networks, partly because (Quasi-)Surprise is just single-scale method. When r < r2, the first level is found; when \({r}_{2^{\prime} } < r\), the second level is preferred; when \({r}_{2} < r < {r}_{2^{\prime} }\), the identified level depends on whether optimization algorithm could get across the “potential well” to find true optimal solutions. Moreover, the critical values of \({r}_{2^{\prime} }\) (as well as r2) will quickly decrease with the increase of pout1/pin, and therefore small pout1/pin-values will prefer the first level in a network with fixed r, while large pout1/pin-values will prefer the second level (in this case, the communities easily merge).

Because of the accumulative property, Modularity has the same critical \({r}_{2}^{\ast }\)-value for r/2 groups and single group of 2 communities merging in the networks: \({r}_{2}^{\ast }=({p}_{in}+{p}_{out1}+{p}_{out2})/{p}_{out1}\), and Significance is too: \({r}_{2}^{\ast }=({p}_{in}+{p}_{out1}+{p}_{out2})\cdot \exp \{(2H({p}_{2})-H({p}_{1}))/(2{p}_{2}-{p}_{1})\}\), where p1 = pin and p2 = (pin + pout1)/2 (see SI). They thus do not show the potential-well phenomenon, but there still is similar resolution problem in the networks: the second level is found when \(r > {r}_{2}^{\ast }\), otherwise the first level is found.

Extension to multi-scale community detection

As discussed above, (Quasi-) Surprise as well as many other methods are just single-scale methods with limited resolutions, so the identified partitions or levels closely depend on the network parameters such as the (inter- and intra-community) link densities and the number of communities in the networks (note that the “potential well” effect is also related to the number of communities). And multi-scale structures extensively exist in various complex networks. So, developing multi-resolution (or multi-scale) methods is of importance. Here, we proposed an extension of Quasi-Surprise to multi-scale networks, by adjusting the random model (See Methods;), because the original Quasi-Surprise closely depends on the difference between the probability of links existing within communities and the expected values in the random model. Similarly, other measures such as Significance and Modularity can also be extended to multi-scale networks (see Methods). The exact formulations of the methods are provided in the additional material.

To display the effectiveness of the multi-scale methods in detecting communities at different scales, the methods are applied to two kinds of networks with multi-scale community structures (Figs 7, S12 and S13). The results show that they are able to identify the partitions of the pre-defined scales in the networks. The proposed multi-scale extensions are simple while effective, which provide alternative approaches to analyze the community structures at different scales.

Community partitions identified by Quasi-Surprise in (A,B) double-level networks with nc = 10 and r = 20, and (C,D) hierarchical networks with 256 vertices and two-scale community structures. Nc is the number of identified communities in the networks. NMI-1 denotes the NMI between identified and level-1 partitions. NMI-2 denotes the NMI between identified and level-2 partitions.

Discussion and Conclusion

Community structure (or module structure) widely exists in various complex networks. Detecting the communities (or modules) in complex networks is an issue of interest in the research of complex networks. Many methods have been proposed to detect community structures in complex networks, and optimizing quality functions for community structure is one of the most important strategies for community detection. The existing methods could help in discovering the intrinsic structures in networks, but they also have respective scopes of application. Therefore, it is necessary to study the behaviors of the methods for the theoretical research and real applications. This is of help in understanding the methods themselves, and can promote the improvement of the methods or the development of more effective methods. However there is less work on the behaviors of (quasi-)Surprise–a kind of statistical measure of interest for community structure until now.

This paper provided the detailed study for the critical behaviors of (Quasi-)Surprise, accompanied by a series of comparison with other methods, including Significance, Modularity, Infomap, Walktrap, LP and OSLOM. We analyzed the effect of various network parameters on (Quasi-)Surprise, and derive the critical number of dense subgraphs in merging/splitting of dense subgraphs. To display the phase transitions of various measures from one partition to another one, we provided the phase diagrams of the critical points in merging/splitting of dense subgraphs, which give a clear comparison for the critical points of various measures. The critical number of dense subgraphs for (Quasi-)Surprise has a clearly super-exponential increase with the difference between inter- and intra-community link possibilities, but it is close to that of Modularity for small difference of link possibilities, for which the difference between the resolutions of (Quasi-)Surprise and Modularity is far less than in the most modular structures.

A kind of “potential well” effect for (Quasi-) Surprise was revealed in community detection. In some cases, just one group of x dense subgraphs merging may be more difficult to appear than all r/x groups of x dense subgraphs merging, because, when \({r}_{2} < r < {r}_{2^{\prime} }\), (Quasi-) Surprise as a function of the number of dense subgraphs merging has obvious “potential well”. The “potential well” is generally difficult to get across by greedy (agglomerative or divisive) algorithms, e.g. the popular Louvain. This may result in “false” optimal solutions for the algorithms, though this also may be able to implicitly mitigate the resolution problem or the excessive split of communities, to some extent. Maybe, only some ergodic but time-consuming algorithms, e.g., simulated annealing, can avoid the problem.

Overall, (Quasi-) Surprise tends to split communities due to such reasons as the heterogeneity of link density, degree and community size, often displays higher resolution, and thus identify more communities than other methods, e.g., Modularity, in community detection, but it also may lead to the excessive splitting of communities due to the density inhomogeneity inside communities, e.g., caused by the heterogeneity of degrees and community sizes.

Moreover, it is believed that multi-scale structures widely exist in various complex networks. In the multi-scale networks, e.g. the double-level networks above, different methods may identify structures at different scales. (Quasi-) Surprise is a kind of statistical measures of interest for community detection, but it is just a single-scale method. And the results suggest the necessity of developing multi-resolution methods, though it may be not easy for (Quasi-)Surprise. We proposed an extension of Quasi-Surprise to multi-scale networks, which provide alternative approaches for identifying the multi-scale structures.

Finally, we expected that the above analysis could be helpful for the understanding of the critical behaviors of the statistical measures and provide useful insight for developing more effective community-detection methods in complex networks.

Method

Networks

Single-level networks

For convenience of analysis, a set of community-loop networks with single-level community structure is constructed, where r “dense subgraphs” (predefined communities) are placed at a circle and are connected to adjacent ones. For each network, let nc the number of vertices in each (predefined) community, while n = r · nc the number of vertices in the network; pin is the probability of linking vertices within the same (predefined) community; pout is the probability of linking vertices respectively in two adjacent (predefined) communities; \(m=r\cdot {n}_{c}^{2}{p}_{in}+2r\cdot {n}_{c}^{2}{p}_{out}\) is the number of edges in the networks (Fig. 1A).

Double-level networks

To further analyze the phenomena in the merge and breakup of communities, we construct the community-loop networks with double-level community structures. Let r the number of communities and nc the number of vertices in each community at the first level, while n = r · nc the number of vertices in the network. pin the probability of linking vertices within the first-level community; pout1 the probability of linking vertices respectively in the first-level and adjacent communities contained in the same second-level community; pout2(<pout1) the probability of linking vertices respectively in two adjacent and first-level communities contained in two different second-level communities (Fig. 1B).

LFR networks

Lancichinetti-Fortunato-Rachicchi (LFR) networks is a type of classical modular networks, which are able to mimic the general properties of many real-world networks, such as the community structures, the heterogeneity of vertex degrees and community sizes58. In the networks, vertex degrees and community sizes follow power-law distributions with exponents τ1 and τ2 respectively, and a common mixing parameter μ controls the ratio between the external degree of each vertex with respect to its community and the total degree of the vertex. The smaller the μ-values, the more obvious the communities. Here, low μ-values are used, so communities are well separated from each other (Fig. 1C).

Hierarchical networks

The networks have 256 vertices and two-scale community structures14. The first scale consists of 4 groups of 64 vertices and the second scale consists of 16 groups of 16 vertices. The number of links of each vertex with the most internal community is kin0, the number of links of each vertex with the most external community is kin1, and the number of links with any other vertex at random in the network is 1.

Statistical measures for community structure

Surprise (S p)

Surprise is a statistical approach to assess the quality of community partition in a network, with higher values corresponding to better partitions38. It was shown that Surprise can give better characterization for community structures than modularity in several complex benchmarks. Given a community partition in a network, Surprise is defined as the minus logarithm of the probability that the observed number of intra-community links or more appears in Erdös-Rényi random networks. According to a cumulative hyper-geometric distribution, it can be written as,

where M is the maximal possible number of links in a network; Mint is the maximal possible number of intra-community links in a given partition; m is the number of existing links in the network; while mint is the number of existing intra-community links in the partition.

Quasi-Surprise (S q)

The original definition of Surprise is for un-weighted networks and it involves complex nonlinear factors, leading to the difficulties of the theoretical analysis and numerical computations. So it is very useful to provide a kind of effective approximate expression for Surprise. By only taking into account the dominant term and using Stirling’s approximation of the binomial coefficient, a kind of Quasi-Surprise reads,

where q = mint/m denotes the probability that a link exists within a community; \(\bar{q}={M}_{{\rm{int}}}/M\) denote the expected value of q; \(D(x||y)=x\,\mathrm{ln}\,\tfrac{x}{y}+(1-x)\mathrm{ln}\,\tfrac{1-x}{1-y}\) is the Kullback-Leibler divergence, which measures the distance between two probability distributions x and y40.

The original Quasi-Surprise is based on the difference between the probability of links existing within communities and the expected values in random model. We propose a kind of alternative approach to extend the Quasi-Surprise to multi-scale case, by using a resolution parameter to adjust the expected values in random model. This results in the multi-scale Quasi-Surprise,

where γ is the resolution parameter.

Significance (S g)

Significance also is a recently proposed measure for estimating the quality of community structures, which looks at how likely dense communities appear in random networks41. It is defined as

Here the sum runs over all communities; the density of community s, ps, is the ratio of the number of existing edges to the maximum in the community; the density of network, p, is the ratio of the number of existing edges to the maximum in the whole network. It cloud also be directly optimized as objective function to find the optimal community partitions.

To extend the original Significance to multi-scale cases, we use a parameter to adjust the density of network, because the original Significance is based on the difference between the density of community and the density of network. As a result, the multi-scale Significance can be written as,

where γ is the resolution parameter.

Modularity (Q or Mod)

For given community division of a network, it is defined as36

where M is the total number of edges in the network, \({k}_{s}^{in}\) the inner degree of group s, ks the total degree of group s, and the sum runs over all communities in the given network. Modularity evaluates the fraction of edges within communities in the network minus the expected value in a random graph (i.e. in the null model). In general, the larger the modularity, the better the division. In recent years, it has become one of the most popular quality functions for community detection.

To detect communities at different scales, the multi-scale Modularity can be defined as,

where γ is the resolution parameter.

Normalized mutual information (NMI)

This measure is taken from information theory and estimates the similarity between two community partitions42. When perfectly matched, NMI = 1. Otherwise, the less is the match, the smaller is the value of NMI. NMI is often used to evaluate the performance of methods by assessing the amount of community information correctly extracted by the methods in networks with known community structures.

Critical number of dense subgraphs in partition transition

For the single-level networks, we derive the critical number \({r}_{{x}_{1}\to {x}_{2}}^{\ast }\) of dense subgraphs from Partition X1 to Partition X2 (Partition X denotes the partition with r/x groups of x dense subgraphs merging). When \({\rm{\Delta }}S=S({x}_{2})-S({x}_{1}) > 0\), Partition X2 will be preferred, compared to Partition X1. Here, ΔS, divided by the number of links, can be written as,

where \(r-{x}_{1}\approx r-{x}_{2}\approx r\), \(D(x||y)=x\,\mathrm{ln}\,\tfrac{x}{y}+(1-x)\,\mathrm{ln}\,\tfrac{1-x}{1-y}\) and \(\beta =(\frac{{x}_{2}-1}{{x}_{2}}-\frac{{x}_{1}-1}{{x}_{1}})\frac{2{p}_{out}}{{p}_{in}+2{p}_{out}}\).

By solving ΔS = 0 for r, one can obtain the critical number of dense subgraphs,

For comparison, in the same networks, we derive the critical point of modularity for r/x groups of x dense subgraphs merging. For Partition X,

By solving Qx2 − Qx1 = 0 for r, one can obtain,

For x1 = 1, \({r}_{x}^{\ast }=\frac{{p}_{in}+2{p}_{out}}{2{p}_{out}}{x}_{2}\).

References

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Chen, P. & Redner, S. Community structure of the physical review citation network. Journal of Informetrics 4, 278–290 (2010).

Zhang, S.-H., Ning, X.-M., Ding, C. & Zhang, X.-S. Determining modular organization of protein interaction networks by maximizing modularity density. BMC Syst. Biol. 4, 1–12 (2010).

Stegehuis, C., van der Hofstad, R. & van Leeuwaarden, J. S. H. Epidemic spreading on complex networks with community structures. Sci. Rep. 6, 29748 (2016).

Nematzadeh, A., Ferrara, E., Flammini, A. & Ahn, Y.-Y. Optimal Network Modularity for Information Diffusion. Phys. Rev. Lett. 113, 088701 (2014).

Yan, S., Tang, S., Fang, W., Pei, S. & Zheng, Z. Global and local targeted immunization in networks with community structure. J. Stat. Mech. 2015, P08010 (2015).

Cheng, J.-J. et al. A divisive spectral method for network community detection. J. Stat. Mech. 2016, 033403 (2016).

Qin, X., Dai, W., Jiao, P., Wang, W. & Yuan, N. A multi-similarity spectral clustering method for community detection in dynamic networks. Sci. Rep. 6, 31454 (2016).

Su, Y., Wang, B. & Zhang, X. A seed-expanding method based on random walks for community detection in networks with ambiguous community structures. Sci. Rep. 7, 41830 (2017).

Rosvall, M. & Bergstrom, C. T. Maps of random walks on complex networks reveal community structure. Proc. Natl. Acad. Sci. USA 105, 1118–1123 (2008).

Pons, P. & Latapy, M. Computing communities in large networks using random walks. J. Graph Algorithms Appl. 10, 191–218 (2006).

Delvenne, J.-C., Yaliraki, S. N. & Barahona, M. Stability of graph communities across time scales. Proc. Natl. Acad. Sci. USA 107, 12755–12760 (2010).

Chen, J., Wang, H., Wang, L. & Liu, W. A dynamic evolutionary clustering perspective: Community detection in signed networks by reconstructing neighbor sets. Physica A 447, 482–492 (2016).

Arenas, A., Díaz-Guilera, A. & Pérez-Vicente, C. J. Synchronization Reveals Topological Scales in Complex Networks. Phys. Rev. Lett. 96, 114102 (2006).

Cheng, X.-Q. & Shen, H.-W. Uncovering the community structure associated with the diffusion dynamics on networks. J. Stat. Mech. 2010, P04024 (2010).

Reichardt, J. & Bornholdt, S. Statistical mechanics of community detection. Phys. Rev. E 74, 016110 (2006).

Karrer, B. & Newman, M. E. J. Stochastic blockmodels and community structure in networks. Phys. Rev. E 83, 016107 (2011).

Han, J., Li, W. & Deng, W. Multi-resolution community detection in massive networks. Sci. Rep. 6, 38998 (2016).

Steve, G. Finding overlapping communities in networks by label propagation. New J. Phys. 12, 103018 (2010).

Liu, W., Jiang, X., Pellegrini, M. & Wang, X. Discovering communities in complex networks by edge label propagation. Sci. Rep. 6, 22470 (2016).

Hou Chin, J. & Ratnavelu, K. A semi-synchronous label propagation algorithm with constraints for community detection in complex networks. Sci. Rep. 7, 45836 (2017).

Blondel, V. D., Guillaume, J.-L., Lambiotte, R. & Lefebvre, E. Fast unfolding of communities in large networks. J. Stat. Mech. 2008, P10008 (2008).

Li, H. J., Bu, Z., Li, A. H., Liu, Z. D. & Shi, Y. Fast and Accurate Mining the Community Structure: Integrating Center Locating and Membership Optimization. Ieee Transactions on Knowledge and Data Engineering 28, 2349–2362 (2016).

Li, H. J. & Daniels, J. J. Social significance of community structure: Statistical view. Phys. Rev. E 91, 10 (2015).

Mei, G., Wu, X., Chen, G. & Lu, J.-a Identifying structures of continuously-varying weighted networks. Sci. Rep. 6, 26649 (2016).

Sobolevsky, S., Campari, R., Belyi, A. & Ratti, C. General optimization technique for high-quality community detection in complex networks. Phys. Rev. E 90, 012811 (2014).

Jia, C., Li, Y., Carson, M. B., Wang, X. & Yu, J. Node Attribute-enhanced Community Detection in Complex Networks. Sci. Rep. 7, 2626 (2017).

Quiles, M. G., Macau, E. E. N. & Rubido, N. Dynamical detection of network communities. Sci. Rep. 6, 25570 (2016).

Fu, J., Zhang, W. & Wu, J. Identification of leader and self-organizing communities in complex networks. Sci. Rep. 7, 704 (2017).

Yang, Z., Algesheimer, R. & Tessone, C. J. A. Comparative Analysis of Community Detection Algorithms on Artificial Networks. Sci. Rep. 6, 30750 (2016).

Ju, Y., Zhang, S., Ding, N., Zeng, X. & Zhang, X. Complex Network Clustering by a Multi-objective Evolutionary Algorithm Based on Decomposition and Membrane Structure. Sci. Rep. 6, 33870 (2016).

Ding, Z., Zhang, X., Sun, D. & Luo, B. Overlapping Community Detection based on Network Decomposition. Sci. Rep. 6, 24115 (2016).

Chen, Y., Zhao, P., Li, P., Zhang, K. & Zhang, J. Finding Communities by Their Centers. Sci. Rep. 6, 24017 (2016).

Žalik, K. R. Maximal Neighbor Similarity Reveals Real Communities in Networks. Sci. Rep. 5, 18374 (2015).

Yang, L., Jin, D., Wang, X. & Cao, X. Active link selection for efficient semi-supervised community detection. Sci. Rep. 5, 9039 (2015).

Newman, M. E. J. & Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E 69, 026113 (2004).

Reichardt, J. & Bornholdt, S. Detecting Fuzzy Community Structures in Complex Networks with a Potts Model. Phys. Rev. Lett. 93, 218701 (2004).

Aldecoa, R. & Marín, I. Surprise maximization reveals the community structure of complex networks. Sci. Rep. 3, 1060 (2013).

Nicolini, C. & Bifone, A. Modular structure of brain functional networks: breaking the resolution limit by Surprise. Sci. Rep. 6, 19250 (2016).

Traag, V. A., Aldecoa, R. & Delvenne, J. C. Detecting communities using asymptotical surprise. Phys. Rev. E 92, 022816 (2015).

Traag, V. A., Krings, G. & Van Dooren, P. Significant Scales in Community Structure. Sci. Rep. 3, 2930 (2013).

Lancichinetti, A., Fortunato, S. & Kertész, J. Detecting the overlapping and hierarchical community structure in complex networks. New J. Phys. 11, 033015 (2009).

Havemann, F., Heinz, M., Struck, A. & Gläser, J. Identification of overlapping communities and their hierarchy by locally calculating community-changing resolution levels. J. Stat. Mech. 2011, P01023 (2011).

Nadakuditi, R. R. & Newman, M. E. J. Graph Spectra and the Detectability of Community Structure in Networks. Phys. Rev. Lett. 108, 188701 (2012).

Decelle, A., Krzakala, F., Moore, C. & Zdeborová, L. Inference and Phase Transitions in the Detection of Modules in Sparse Networks. Phys. Rev. Lett. 107, 065701 (2011).

Reichardt, J. & Leone, M. (Un)detectable Cluster Structure in Sparse Networks. Phys. Rev. Lett. 101, 078701 (2008).

Zhang, X. S. et al. Modularity optimization in community detection of complex networks. Europhys. Lett. 87, 38002 (2009).

Good, B. H., de Montjoye, Y.-A. & Clauset, A. Performance of modularity maximization in practical contexts. Phys. Rev. E 81, 046106 (2010).

Fortunato, S. & Barthélemy, M. Resolution limit in community detection. Proc. Natl. Acad. Sci. USA 104, 36–41 (2007).

Xiang, J. et al. Local modularity for community detection in complex networks. Physica A 443, 451–459 (2016).

Xiang, J. et al. Multi-resolution community detection based on generalized self-loop rescaling strategy. Physica A 432, 127–139 (2015).

Ronhovde, P. & Nussinov, Z. Local resolution-limit-free Potts model for community detection. Phys. Rev. E 81, 046114 (2010).

Arenas, A., Fernández, A. & Gómez, S. Analysis of the structure of complex networks at different resolution levels. New J. Phys. 10, 053039 (2008).

Li, H. J., Wang, Y., Wu, L. Y., Zhang, J. H. & Zhang, X. S. Potts model based on a Markov process computation solves the community structure problem effectively. Phys. Rev. E 86, 10 (2012).

Xiang, J. et al. Enhancing community detection by using local structural information. J. Stat. Mech. 2016, 033405 (2016).

Lai, D., Shu, X. & Nardini, C. Correlation enhanced modularity-based belief propagation method for community detection in networks. J. Stat. Mech. 2016, 053301 (2016).

Jiang, Y., Jia, C. & Yu, J. An efficient community detection algorithm using greedy surprise maximization. J. Phys. A 47, 165101 (2014).

Lancichinetti, A., Fortunato, S. & Radicchi, F. Benchmark graphs for testing community detection algorithms. Phys. Rev. E 78, 046110 (2008).

Lancichinetti, A., Radicchi, F., Ramasco, J. J. & Fortunato, S. Finding Statistically Significant Communities in Networks. Plos One 6, e18961 (2011).

Acknowledgements

This work was supported by the construct program of the key discipline in Hunan province, the Training Program for Excellent Innovative Youth of Changsha, the Beijing Natural Science Foundation (Grant No. 9182015), the National Natural Science Foundation of China (Grant No. 61702054 and Grant No. 71871233), the Hunan Provincial Natural Science Foundation of China (Grant No. 2018JJ3568), the Scientific Research Fund of Education Department of Hunan Province (Grant No. 17A024), the Scientific Research Project of Hunan Provincial Health and Family Planning Commission of China (Grant No. C2017013), and the Hunan key laboratory cultivation base of the research and development of novel pharmaceutical preparations(Grant No. 2016TP1029).

Author information

Authors and Affiliations

Contributions

J.X., H.J.L., Z.B., Z.W. and J.M.L. devised the study, analyzed the data and wrote the manuscript; H.J.L., L.T., M.H.B. and J.M.L. performed the experiments and prepared the figures. All authors contributed to the discussion of results and reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiang, J., Li, HJ., Bu, Z. et al. Critical analysis of (Quasi-)Surprise for community detection in complex networks. Sci Rep 8, 14459 (2018). https://doi.org/10.1038/s41598-018-32582-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-32582-0

Keywords

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.