Abstract

How are the myriad stimuli arriving at our senses transformed into conscious thought? To address this question, in a series of studies, we asked whether a common mechanism underlies loss of information processing in unconscious states across different conditions, which could shed light on the brain mechanisms of conscious cognition. With a novel approach, we brought together, for the first time, data from the same paradigm (a highly engaging auditory-only narrative) in three independent domains: anesthesia-induced unconsciousness, unconsciousness after brain injury, and individual differences in intellectual abilities during conscious cognition. During external stimulation in the unconscious state, the functional differentiation between the auditory and fronto-parietal systems decreased significantly relative to the conscious state. Conversely, we found that stronger functional differentiation between these systems in response to external stimulation predicted higher intellectual abilities during conscious cognition, in particular higher verbal acuity scores in an independent cognitive testing battery. These convergent findings suggest that the responsivity of sensory and higher-order brain systems to external stimulation, especially through the diversification of their functional responses, is an essential feature of conscious cognition and verbal intelligence.

Introduction

Understanding the brain mechanisms of conscious cognition is one of the great frontiers of cognitive neuroscience. A much-researched yet unresolved question is how the myriad sensory inputs arriving at our senses become integrated into meaningful representations that inform cognitive performance and give rise to individual differences in intellectual abilities. In the conscious brain, cognition is thought to arise from iterative interactions among brain regions of graded functional specialization. These include sensory-driven, e.g., auditory and visual, regions on one end of the functional hierarchy, and supramodal regions in frontal and parietal lobes that carry out higher-order cognition, such as executive function, on the other1,2,3. However, to fully understand how the interactions of these widespread brain systems give rise to conscious information processing, it is necessary to factor out brain processes that are not intrinsic to consciousness4. To this end, functional neuroimaging of individuals rendered unconscious under deep anesthesia or after severe brain injury provides a unique window for demarcating unconscious processes, and conversely, shedding light on brain mechanisms that are essential for conscious information processing and cognition in the healthy brain.

In a series of studies, we asked whether a common mechanism underlies loss of information processing in unconscious states across different conditions, which could shed light on the brain mechanisms of conscious cognition. To address this question we brought together, for the first time, data from the same paradigm—a highly engaging auditory-only narrative—in three independent domains: anesthesia-induced unconsciousness, unconsciousness after brain injury, and individual differences in intellectual abilities during conscious cognition.

Despite a growing number of anesthesia studies, it remains unknown how loss of consciousness affects synthesis of information across sensory and higher-order brain systems. To date, the majority of functional Magnetic Resonance Imaging (fMRI) studies of anesthesia have investigated the brain during a task- and stimulus-free condition, known as the “resting” state, because behavioral responses and eye opening are impaired by sedation prior to loss of consciousness5, which renders traditional experimental paradigms that probe complex information processing impossible to implement. However, because resting state studies do not use sensory stimulation, they cannot shed light on how the synthesis of external information breaks down with loss of consciousness. Several studies have used simple psychophysical stimuli and, therefore, have limited their investigation to well-circumscribed responses in sensory-specific cortex6. In the auditory domain, studies have used simple auditory stimuli to investigate the limits of auditory processing during anesthetic-induced sedation. Following light anesthesia with sevoflurane, activation to auditory word stimuli relative to silence was preserved in bilateral superior temporal gyri, right thalamus, bilateral parietal, left frontal, and right occipital cortices7. Parallel results have been found with both propofol and the short-acting barbiturate thiopental, suggesting that basic auditory processing remains intact during reduced or absent conscious awareness6,8,9,10.

By contrast, light anesthesia impairs more complex auditory processing11,12. For example, one study13 showed that the characteristic bilateral temporal-lobe responses to auditorily presented sentences were preserved during propofol-induced sedation, whereas ‘comprehension-related’ activity in inferior frontal and posterior temporal regions to ambiguous versus non-ambiguous sentences was abolished. However, this study did not achieve the unconscious state due to low anesthetic doses. Thus, to date, no anesthetic study has directly investigated how the loss of consciousness affects the processing of complex, real-world narratives across sensory-driven and higher-order brain systems.

Another group of individuals, patients who lose consciousness after severe brain injury, stand to shed light on the brain mechanism affected by loss of consciousness. Following serious brain injury, a proportion of patients manifest disorders of consciousness (DoC) and exhibit very limited responsivity to commands administered at the bedside by the clinical staff. If entirely behaviorally non-responsive, they are thought to lack consciousness, that is, to be in a vegetative state (VS)14, or, if they have reproducible but inconsistent willful responses, to be in a minimally conscious state (MCS)15. The clinical, behavioral assessment of behaviorally non-responsive patients is particularly difficult and can result in a high misdiagnosis rate (41%)16. Studies show that, despite the apparent absence of external signs of consciousness, a significant minority of patients (~19%)17,18,19, thought to be in a VS, can demonstrate conscious awareness by willful modulation of their brain activity20,21,22,23,24,25,26, a phenomenon captured by the recently proposed term ‘cognitive motor dissociation’ (CMD)27. In the present study, to circumvent the limitations of behavioral testing and ensure that patients categorized as unconscious showed no willful brain responses, each patient underwent an fMRI-based assessment with a previously established command-following protocol for detecting covert awareness22,28. Similarly to the deep anesthesia context, experimental paradigms that probe the processing of complex external information have, until recently, not been implemented in DoC patients29,30,31,32. Although the disrupted brain mechanism in patients who are genuinely unconscious has been studied in the resting state paradigm33,34,35,36,37,38,39, this, by default, cannot help to elucidate fully the mechanisms underlying loss of information processing in severely brain-injured unconscious patients.

The inherent limitations in testing unconscious individuals and the absence of identical sensory stimulation paradigms in anesthesia and severe brain injury investigations have hindered understanding of common mechanisms underlying loss of information processing across these conditions. To address this knowledge gap, in two different studies, we used the same paradigm and a novel approach30 for measuring complex information processing in unconsciousness from deep anesthesia and severe brain injury, as participants freely listened to richly evocative stimulation in the form of a plot-driven narrative: a brief (5-minute) auditory-only excerpt from the kidnapping scene in the movie ‘Taken’. This approach circumvents traditional limitations by requiring neither behavioral response nor eye opening, and, importantly, elicits both sensory and fronto-parietal brain responses that are known to support high-order cognition, such as executive function40,41,42,43,44,45,46,47. By their very nature, engaging narratives are designed to give listeners a common conscious experience driven, in part, by the recruitment of similar executive processes, as each listener continuously integrates their observations, analyses and predictions, while filtering out any distractions, leading to an ongoing involvement in the story’s plot. We have previously shown30,31,32 that when different individuals freely listen to the same narrative, stereotyped changes of brain activity across these frontal and parietal cortical regions are observed, which reflect a robust and similar recruitment of executive function across different individuals. Thus, this paradigm is particularly suited for investigating the extent of information processing in behaviorally non-responsive individuals in unconscious states.

Conversely, we asked whether the principles of information processing revealed by the anesthesia and severe brain-injury studies could predict conscious cognitive performance, an independent domain that relies on continuously efficient processing of external information. Understanding individual differences in intellectual abilities is profoundly important as it may, in the future, help facilitate their enhancement, yet the underlying brain mechanisms remain poorly understood. Previous studies have suggested that functional connectivity within the fronto-parietal network during executive or cognitive tasks is related to individual differences in intelligence40. This approach has been useful in identifying functionally segregated neural correlates of intelligence, i.e., the fronto-parietal network, but it does not reflect the role of sensory-driven networks or of their interactions with higher-order systems.

In the first study, we asked how information processing across the auditory and fronto-parietal systems during the story was affected by loss of consciousness in deep anesthesia in healthy participants (N = 16). In the second study, we tested whether the insights gleaned from the anesthesia study could generalize to loss of consciousness after severe brain injury, in a group of patients (N = 11) with disorders of consciousness who underwent fMRI scanning during the same audio story as the healthy participants from study one. In the third study, we investigated how the cognitive performance of individuals from the anesthesia study (N = 14), measured independently with a cognitive battery weeks after the sedation study, related to their synthesis of complex sensory information between auditory and fronto-parietal systems during the audio-story task.

Results

Information processing under deep anesthesia

To measure information processing during the story, we adopted a previously established method using the same audio story30, where we showed that the extent of stimulus-driven cross-subject correlation provided a measure of regional stimulus-driven information processing (Fig. 1A–C). In the wakeful condition of the anesthesia study, we observed widespread and significant (p < 0.05; FWE cor) cross-subject correlation between healthy participants within sensory-driven (primary and association) auditory cortex, as well as higher-order frontal and parietal regions (Fig. 1D), consistent with Naci et al.30. By contrast, in deep anesthesia, the significant (p < 0.05; FWE cor) cross-subject correlation was limited to the auditory cortex, with the exception of two small clusters in left prefrontal and right parietal cortex (Fig. 1E), suggesting that the processing of sensory information was preserved in the sensory, but almost entirely abolished in fronto-parietal regions.
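The cross-subject correlation measure described above can be illustrated with a minimal sketch. It assumes a leave-one-out scheme, in which each participant's time course in a region is correlated with the average time course of all other participants; the exact procedure is given in Naci et al.30 and may differ. The function names and the toy values are hypothetical and are not taken from the study.

```python
from math import sqrt
from statistics import fmean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def leave_one_out_isc(timecourses):
    """Correlate each subject's time course with the mean of the others.

    timecourses: list of per-subject lists, one value per time point,
    all taken from the same voxel or region (assumed layout).
    """
    scores = []
    for i, own in enumerate(timecourses):
        others = [tc for j, tc in enumerate(timecourses) if j != i]
        mean_others = [fmean(vals) for vals in zip(*others)]
        scores.append(pearson(own, mean_others))
    return scores


# Hypothetical stimulus-driven signal shared by three subjects, each with
# a different gain and offset (Pearson r ignores both).
story = [0.0, 1.0, 0.5, -1.0, -0.5, 1.5, 0.0, -1.5]
subjects = [[(1 + 0.5 * k) * s + k for s in story] for k in range(3)]
print(leave_one_out_isc(subjects))
```

In this toy case every subject follows the same underlying signal, so each leave-one-out correlation is close to 1; a region whose responses are not driven by the stimulus would yield values near zero.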

Brain-wide inter-subject correlation of neural activity during the audio story. (A) The audio story elicited significant (p < 0.05; FWE cor) inter-subject correlation across the brain, including frontal and parietal cortex, thought to support executive function. (B) The baseline elicited significant (p < 0.05; FWE cor) inter-subject correlation within primary and association auditory cortex. A small cluster was also observed in right inferior prefrontal cortex. None was observed in dorsal prefrontal and parietal cortex. (C) The audio story elicited significantly (p < 0.05; FWE cor) more inter-subject correlation than the auditory baseline derived from the same stimulus, in parietal, temporal, motor, and dorsal/ventral frontal/prefrontal cortex. A, B, C, adapted with permission from Naci et al.30. (D) The audio story elicited significant (p < 0.05; FWE cor) inter-subject correlation across the brain, including frontal and parietal cortex, in the wakeful state of the anesthesia study. (E) In the deep anesthesia state, significant (p < 0.05; FWE cor) inter-subject correlation was limited to the auditory cortex with the exception of two small clusters, one in left prefrontal and the other in right parietal cortex. (F) The audio story elicited significantly (p < 0.05; FWE cor) more cross-subject correlation in the awake than deeply sedated condition bilaterally in temporal, ventral prefrontal and frontal cortex, and further in parietal, motor, and dorsal frontal and prefrontal cortex in the right hemisphere. Warmer colors depict higher t-values of inter-subject correlation.

Subsequently, we investigated the impaired brain mechanism underlying loss of information processing in these higher-order regions. Current theories of consciousness48,49,50, such as the Integrated Information Theory (IIT), propose that conscious cognition relies on the brain’s capacity to efficiently integrate information across different specialized systems48, suggesting that both interconnectedness and functional differentiation of brain systems are important for information processing. However, different putative mechanisms are consistent with our results, including reduced/abolished connectivity among distinct brain systems49 and loss of functional differentiation48 (i.e., homogeneous connectivity across them). To directly investigate the underlying mechanism, we distinguished four possible impairment patterns consonant with theories of consciousness that could explain impaired information processing in deep anesthesia: (1) a loss of long-range connectivity between auditory and fronto-parietal networks (Fig. 2A); (2) a loss of connectivity between areas within each network, e.g., between frontal and parietal regions (Fig. 2B); (3) a combination of 1 and 2 (Fig. 2C); and (4) a loss of differentiation between auditory and fronto-parietal networks (Fig. 2D).

Candidate patterns of connectivity perturbations caused by deep propofol anesthesia. (A) Loss of long-range connectivity between different networks; (B) Loss of long-range connectivity within a specific network, e.g., between frontal and parietal regions; (C) A combination of patterns in A and B; (D) Loss of functional differentiation between different brain networks.

The global effect of anesthesia on brain networks’ connectivity

Initially, we investigated how deep propofol anesthesia perturbed the patterns of global connectivity. During the audio story, a two-way ANOVA with factors connectivity type (within, between) and state (wakeful, deep anesthesia) showed that connectivity across networks increased significantly (main effect of state: F(16) = 8.57; p = 0.01) (Fig. 3A,B) in deep anesthesia relative to wakefulness. Connectivity between increased more than within networks (interaction effect, state x connectivity type: F(15) = 5.58; p = 0.03) (Fig. 3E), driven by a significant increase in the between network connectivity (t(15) = 3.82, p = 0.002) and no overall change in the within connectivity (see SI for complete results; Figures S3, S4). By contrast, during the resting state, deep anesthesia showed the opposite effect on between and within network connectivity, with a larger impact on the within relative to between network connectivity (interaction effect, state x connectivity type: F(15) = 5.4; p = 0.03) (Fig. 3C,D). Connectivity within was significantly reduced, but no changes were observed in deep anesthesia in the between network connectivity (see SI for complete results).

Global within- and between-network functional connectivity perturbations by deep propofol anesthesia (A–D) Functional connectivity matrices for five brain networks in the story and resting state conditions, in the wakeful and deep anesthesia states. Each cell represents the correlation of the time-course of one region of interest (ROI) with another, or itself (in the center diagonal). Cells representing correlations of ROIs within each network are delineated by red squares. Warm/cool colors represent high/low correlations, as shown in heat-bar scale. (E) Average connectivity (z values) within- and between- networks in the wakeful (W) and the deep anesthesia (D) states, during the story and resting state conditions. DMN/DAN/ECN/VIS/AUD = Default Mode/Dorsal Attention/Executive Control/Visual/Auditory network.

A direct comparison between the audio story and resting state confirmed that anesthesia affected connectivity in the two conditions in opposite directions. A two-way ANOVA with factors condition (audio story, resting state) and state (wakeful, deep anesthesia) showed a condition x state interaction [F(15) = 7.01; p = 0.02] that was driven by an overall connectivity reduction during the resting state and connectivity increase during the audio story in deep anesthesia. The effects were the same when functional differentiation was measured as the ratio of between- to within-network connectivity.
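As an illustration of the within- and between-network measures used in this section, the following minimal sketch computes pairwise ROI correlations, applies the Fisher z transform, averages them within and between networks, and forms the between-to-within ratio. It is a sketch under stated assumptions, not the analysis pipeline of the study: the ROI names, the time courses, and the function names are hypothetical, and preprocessing steps are omitted.

```python
from itertools import combinations
from math import atanh, sqrt
from statistics import fmean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def network_connectivity(roi_timecourses, roi_network):
    """Mean Fisher z connectivity within and between networks.

    roi_timecourses: dict mapping ROI name -> list of values over time.
    roi_network: dict mapping ROI name -> network label (e.g. "AUD").
    Returns (within_mean, between_mean).
    """
    within, between = [], []
    for a, b in combinations(sorted(roi_timecourses), 2):
        # atanh is the Fisher z transform; it is undefined for |r| == 1.
        z = atanh(pearson(roi_timecourses[a], roi_timecourses[b]))
        if roi_network[a] == roi_network[b]:
            within.append(z)
        else:
            between.append(z)
    return fmean(within), fmean(between)


# Hypothetical time courses for two auditory and two dorsal attention ROIs.
timecourses = {
    "AUD_1": [1, 2, 3, 4, 5, 7],
    "AUD_2": [1, 3, 2, 4, 6, 5],
    "DAN_1": [3, 1, 4, 1, 5, 2],
    "DAN_2": [2, 1, 5, 1, 4, 3],
}
networks = {"AUD_1": "AUD", "AUD_2": "AUD", "DAN_1": "DAN", "DAN_2": "DAN"}

within, between = network_connectivity(timecourses, networks)
# A lower between-to-within ratio indicates stronger differentiation.
print(f"within={within:.2f} between={between:.2f} ratio={between / within:.2f}")
```

Under this reading, an increase in between-network connectivity with no change in within-network connectivity, as reported for the audio story in deep anesthesia, raises the ratio and therefore corresponds to reduced functional differentiation.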

These results suggest that, when the brain is at rest, reduced connectivity within brain networks, rather than loss of functional differentiation between them, characterizes the unconscious state. By contrast, when the brain is exposed to complex naturalistic stimuli from the environment, reduced functional differentiation between brain networks leads to loss of information processing in the unconscious state. However, these results must be interpreted with caution, in light of the consistent block order in deep sedation.

The effect of anesthesia on auditory and fronto-parietal networks’ connectivity

Next, we asked specifically whether reduced functional differentiation between the auditory and fronto-parietal networks drove the loss of information processing in the fronto-parietal regions during the story. Consistent with effects at the whole-brain level, we found a significant increase in the AUD–DAN and AUD–ECN connectivity [t(15) = 2.6, p = 0.02; t(15) = 4.98, p = 0.0002, respectively] (Fig. 4A–D), or a significant reduction of the functional differentiation between the AUD and DAN, ECN in deep anesthesia relative to wakefulness. By contrast, in the resting state, connectivity between these networks was not affected by sedation (Fig. 3). These results suggested that reduced functional differentiation between the auditory and fronto-parietal networks leads to loss of external information processing in the unconscious state. Conversely, they suggested that the functional differentiation between the auditory and fronto-parietal networks underlies conscious processing of complex auditory information.
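The state comparisons reported above are of the form t(15), that is, a within-participant comparison across 16 participants. A minimal sketch of a paired t statistic is given below, assuming one connectivity value per participant in each state; the values shown are hypothetical and the function name is illustrative only.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(condition_a, condition_b):
    """Paired t statistic for per-participant values in two conditions.

    Returns (t, degrees_of_freedom). A positive t means condition_b
    is larger than condition_a on average.
    """
    diffs = [b - a for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical Fisher z connectivity values for four participants.
wakeful = [0.10, 0.20, 0.15, 0.05]
deep = [0.20, 0.40, 0.45, 0.45]
t_value, dof = paired_t(wakeful, deep)
print(f"t({dof}) = {t_value:.2f}")
```

With 16 participants the same calculation has 15 degrees of freedom, matching the form of the statistics reported for the AUD–DAN and AUD–ECN comparisons.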

Perturbations of auditory and fronto-parietal connectivity by deep propofol anesthesia in the audio story condition. Only connectivity between the AUD and DAN/ECN, respectively, was significantly modulated by propofol, showing a significant reduction of functional differentiation between sensory and higher-order networks in deep anesthesia relative to wakefulness. (A,B) Functional connectivity matrices for ROIs comprising the DAN and AUD (A)/ECN and AUD (B) networks in the wakeful and deep anesthesia states of the audio story condition. (C,D) Average connectivity (z-values) within and between the DAN and AUD (C)/ECN and AUD (D), in the wakeful and deep anesthesia states.

To test specifically whether functional differentiation in the conscious state would be driven by the complex features of the audio story, including its narrative, rather than merely the presence of external stimulation, we compared the AUD–DAN and AUD–ECN pairwise connectivity in wakeful individuals during the audio story with that in the two baseline conditions: a scrambled version of the story that retained the sensory features but was devoid of the narrative, and the resting state. During the scrambled story, functional connectivity between the AUD and DAN, but not ECN, was significantly lower than in the resting state [AUD–DAN: t(14) = −3.4, p = 0.005]. Furthermore, during the intact story, functional connectivity between the AUD and both the DAN and ECN was significantly lower than in the scrambled story [AUD–DAN: t(14) = −11.2, p = 0.00000002; AUD–ECN: t(14) = −10.62, p < 0.00000004], and lower than in the resting state [AUD–DAN: t(14) = −7.3, p < 0.000004; AUD–ECN: t(14) = −2.7, p < 0.015] (Fig. 5A–H). These results suggested that the AUD–DAN connectivity was modulated by the presence of low-level sensory stimulation over the resting state baseline, and further by the presence of the high-order features of the story, including its narrative, over the scrambled baseline.

Functional connectivity between the auditory and fronto-parietal networks in healthy wakeful individuals, during the audio story and baseline conditions. Connectivity between the auditory and fronto-parietal networks was significantly modulated by the presence of complex meaningful stimuli, with the functional differentiation between the AUD and DAN/ECN increasing significantly in the audio story as compared to the scrambled story and resting state baseline conditions. (A–C) Connectivity between the ROIs within the AUD and DAN networks in the intact story (A), scrambled story (B), and resting state (C) baseline. (D) Average AUD–DAN connectivity (z values) for each condition. (E–G) Connectivity between the ROIs within the AUD and ECN networks in the intact story (E), scrambled story (F), and resting state (G) baseline. (H) Average AUD–ECN connectivity (z values) for each condition. A1: Primary auditory cortex; LFEF: Left frontal eye field; RFEF: Right frontal eye field; LPIPS: Left posterior IPS; RPIPS: Right posterior IPS; LAIPS: Left anterior IPS; RAIPS: Right anterior IPS; LMT: Left middle temporal area; RMT: Right middle temporal area; DMPFC: Dorsal medial PFC; LAPFC: Left anterior prefrontal cortex; RAPFC: Right anterior prefrontal cortex; LSP: Left superior parietal; RSP: Right superior parietal.

The effect of severe brain injury on the auditory and fronto-parietal networks’ connectivity

Results from both conscious and unconscious conditions in the previous study suggested that functional differentiation between the auditory and fronto-parietal networks underlies conscious processing of complex auditory information. In the next study, we further tested this claim in severe brain injury, which served as an independent manipulation of consciousness.

The structural profiles and full behavioral description of the convenience sample of brain-injured patients (N = 11) are shown in Figure S1 and Tables S2, S3. Patients who showed willful brain responses in the independent command-following assessment22,28 were considered covertly aware and labeled DoC+ (N = 6), and those who showed no signs of conscious awareness were labeled DoC− (N = 5) (Fig. 6), for subsequent analyses. Similarly to conscious individuals (Fig. 5), we expected the DoC+ patient group to show a heightened differentiation/down-regulation of the AUD and DAN, ECN pairwise connectivity during the audio story relative to resting state baseline connectivity. By contrast, we did not expect a down-regulation of the connectivity between these networks during the audio story in the DoC− patient group.

Summary of DoC patients’ clinical and fMRI assessment data. Auditory processing. In the fMRI assessment, three patients clinically diagnosed to be in a VS did not show evidence of auditory processing. Of the other eight patients, who showed evidence of auditory processing, two patients clinically diagnosed as VS did not show evidence of brain-based command-following, and the other six, including two diagnosed as VS, showed evidence of brain-based command-following, and thus, of covert awareness. Command-following. 6/11 patients followed task commands by willfully modulating their brain activity as requested, and thus, provided evidence of conscious awareness. Two of these (P2, P5) presented a CMD profile, or a behavioral diagnosis of VS that was inconsistent with their positive fMRI results. 5/11 patients showed no evidence of willful responses in the fMRI command-following task, and, thus, provided no neuroimaging evidence of awareness. One (P7) showed no neuroimaging evidence of awareness despite an MCS diagnosis, due to falling asleep in the scanner for the entirety of the session (Materials and Methods).

The DoC+ group showed a significant down-regulation of the connectivity between the auditory and fronto-parietal networks in the audio story relative to the resting state [AUD–DAN: t(5) = −1.9, p = 0.05; AUD–ECN: t(5) = −3.4, p = 0.02] (Fig. 7A–H, E–L), and was significantly different from the DoC− (N = 5) group, who did not show this effect (Fig. 7C–J, E–L) [AUD–DAN: t(9) = −3.6, p = 0.005; AUD–ECN: t(9) = −3.4, p = 0.008]. The predicted effect pattern was also observed for individual patients, with 5/6 DoC+ patients showing a down-regulation of the connectivity between AUD and DAN, ECN (Fig. 7F,L). The effect in the DoC+ group was consistent with the effect observed in healthy conscious individuals (Fig. 5). By contrast, the DoC− group showed significantly enhanced AUD–DAN connectivity during the audio story relative to resting state [t(4) = 4.52; p = 0.01] (Fig. 7E). This was consistent with the up-regulation of the AUD–DAN connectivity observed in the anesthesia-induced unconscious state in the previous study.

Modulation of auditory to fronto-parietal connectivity by meaningful stimulation in DoC patients. Similarly to healthy individuals, connectivity between the auditory and fronto-parietal networks in DoC+ patients was significantly modulated by the presence of complex meaningful stimuli, with the functional differentiation between the AUD and DAN/ECN increasing significantly in the audio story as compared to the resting state baseline condition. (A–D) Connectivity between the ROIs within the AUD and DAN networks, during the audio story and resting state baseline, in the DoC+ (A,B) and DoC− (C,D) patients. (E) Differential averaged AUD–DAN connectivity (z values) for each patient group. (F) Differential averaged AUD–DAN connectivity (z values) for each individual patient. (G–J) Connectivity between the ROIs within the AUD and ECN networks, during the audio story and resting state baseline, in the DoC+ (G,H) and DoC− (I,J) patients. (K) Differential averaged AUD–ECN connectivity (z values) for each patient group. (L) Differential averaged AUD–ECN connectivity (z values) for each individual patient. A1: Primary auditory cortex; LFEF: Left frontal eye field; RFEF: Right frontal eye field; LPIPS: Left posterior IPS; RPIPS: Right posterior IPS; LAIPS: Left anterior IPS; RAIPS: Right anterior IPS; LMT: Left middle temporal area; RMT: Right middle temporal area; DMPFC: Dorsal medial PFC; LAPFC: Left anterior prefrontal cortex; RAPFC: Right anterior prefrontal cortex; LSP: Left superior parietal; RSP: Right superior parietal.

Network connectivity and individual differences in conscious cognition

Taken together, the results from the two previous studies suggested that heightened differentiation between the auditory and fronto-parietal networks supports conscious processing of complex auditory information, and more broadly, conscious cognition. In the third study, we further tested this claim directly, by asking whether it predicted individual differences in cognitive performance. We assessed the cognitive performance of a participant subset (14/16) from the anesthesia study, who came back to the laboratory weeks later, with a battery comprising 12 cognitive tests51 that measured short-term memory, reasoning, and verbal acuity (SI, Table S4). Based on converging results from studies 1 and 2, we expected stronger differentiation between the auditory and fronto-parietal networks during the audio story to predict stronger cognitive performance, or, a negative relationship between the AUD and DAN, ECN connectivity and the independently measured cognitive performance in the same individuals.

The individuals’ AUD–DAN connectivity during the audio story was significantly negatively correlated (r = −0.66; p < 0.007) with their cognitive performance in the verbal acuity (Fig. 8A,B) component of the battery, which accounted for the variance of tasks that used verbal stimuli (i.e., digit span, verbal reasoning, color-word remapping; Supporting Information). The AUD–DAN connectivity did not predict performance in the other two components, and the AUD–ECN connectivity did not predict performance in any of the three (Fig. 8A,C). Pairwise connectivity between these networks in the resting state did not predict cognitive performance in any of the domains (Fig. 8A). Further, we found no relationship between the connectivity of the AUD and default mode network (DMN), included as a control high-order network, and cognitive performance.
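For readers who wish to relate the reported correlation to a test statistic, the following minimal sketch computes a Pearson correlation and converts a correlation coefficient into a t value with n − 2 degrees of freedom. The inputs r = −0.66 and n = 14 are taken from the text; the conversion itself is the standard textbook formula and is not stated in the source to be the procedure used in the study.

```python
from math import sqrt
from statistics import fmean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for a Pearson r from n pairs."""
    return r * sqrt((n - 2) / (1.0 - r * r))


# r and n as reported for AUD-DAN connectivity versus verbal acuity.
print(f"t(12) = {t_from_r(-0.66, 14):.2f}")
```

A negative value here reflects the direction of the reported effect: lower AUD–DAN connectivity during the story, that is, stronger differentiation, accompanied higher verbal acuity scores.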

The relationship between brain network connectivity during the audio story and independently-measured cognitive performance. The functional connectivity between AUD and DAN, but not ECN (or DMN, used here as a high-level control network) during the story, and not the resting state baseline condition, was significantly inversely related to verbal performance. (A) Group-averaged correlation between the functional connectivity (FC) of the AUD and the DMN/DAN/ECN networks during the audio story and resting state conditions and verbal performance. (B,C) For each participant, the correlation between their AUD–DAN (B)/AUD–ECN (C) connectivity during the story and their verbal performance is displayed.

In summary, the results of the third study converged with the other two, and suggested that the extent to which the functional responses of the auditory and fronto-parietal networks to complex auditory stimuli dissociated from one another predicted independent cognitive performance in the verbal domain, and thus, may be a determining factor in individual differences in verbal acuity.

Discussion

In a series of studies, we asked whether a common mechanism underlies loss of information processing in unconscious states across different conditions, which could shed light on the brain mechanisms of conscious cognition. To this end, for the first time, we brought together two very disparate conditions where consciousness is lost—deep anesthesia and severe brain injury—to investigate the modulation of functional connectivity between the auditory and fronto-parietal networks by identical complex stimulation in identical paradigms. Subsequently, we tested whether findings from these studies predicted individual differences in intellectual abilities during conscious information processing.

Common mechanism for loss of information processing in unconsciousness during anesthesia and after severe brain injury

We used a novel approach30,31,32 to measure external information processing in response to richly evocative stimulation portraying real-world events, during deep anesthesia and severe brain injury. In the anesthesia study, we found that the processing of the story information was preserved in auditory cortex, but almost entirely abolished in fronto-parietal regions. Deep anesthesia led to a significant reduction in the functional differentiation of several networks across the brain, and specifically, between the auditory and fronto-parietal networks, during the story condition. These results suggested that anesthesia impaired the processing of complex external information in fronto-parietal regions by eroding their functional differentiation from sensory (e.g., auditory) systems, and not by impairing connections between or within them. Propofol was used here as a common anesthetic agent, and future studies that employ the same paradigm across different agents will help elucidate whether specific agents vary in their effect on connectivity during complex stimulation. Our results are consistent with previous findings from resting state studies, suggesting that anesthesia reduces the repertoire of discriminable brain states52,53, and that during loss of consciousness global synchrony impairs information processing by leading to a breakdown of causal interactions between brain areas54,55,56. Further, they are consistent with resting state studies using sleep-induced altered states of consciousness, which show that hyper-synchrony perturbs the feed-forward propagation of auditory information57, as well as feedback projections58, and more broadly, the stable patterns of causal interactions in response to external stimulation across the brain59. While these previous resting state studies suggest that global synchrony breaks down causal interactions, the investigation of causal cortico-cortical interactions was outside the scope of this work. We did not find an effect of deep sedation on thalamo-cortical connectivity in any of the five brain networks (SI), and while outside of our scope here, a potential causal role of thalamic inputs to cortico-cortical connectivity in deep sedation remains to be investigated further.

Our findings from the resting state condition in the deep anesthesia manipulation agree with a previously reported reduction of brain connectivity in deep propofol anesthesia during the resting state49,54,60, in particular with a reduction of connectivity within the default-mode (60,61,62; but see 53,63) and the executive control networks60,64. Although consistency with previous studies suggests otherwise, we note that differences between the story and resting state conditions in deep sedation must be interpreted with caution, in light of the consistent block order. Nevertheless, the results from the sensory stimulation condition reveal a different mechanism underlying the loss of external information processing than suggested by resting state studies. First, in the audio-story condition, the connectivity within networks was affected by sedation in the opposite direction to the resting state. Second, in the resting state condition, we observed no effect of deep anesthesia on connectivity between distinct networks, which, by contrast, increased significantly during the auditory stimulation condition, suggesting loss of functional differentiation across the cortex. Another type of stimulation, transcranial magnetic stimulation, has previously been used to directly perturb the cortex in unconscious states and demonstrate that responses across the cortex become undifferentiated from one another55,65. In summary, these results suggest that deep anesthesia affects the brain differently when it is exposed to complex external stimulation relative to rest, with the stimulus-evoked feed-forward processing cascade being echoed undifferentiated throughout the brain, thus overcoming the inhibitory effect of propofol on neural connectivity that has been reported in resting state studies66.

Similarly to deeply anesthetized unconscious individuals, severely brain-injured patients who were not consciously aware during the study showed significantly reduced differentiation between the auditory and fronto-parietal networks during the story relative to their resting baseline. Conversely, similarly to healthy wakeful individuals, severely brain-injured patients who were covertly aware showed the opposite effect: significantly enhanced differentiation between the auditory and fronto-parietal networks during the story relative to their resting baseline. The modulation of the sensory to higher-order networks’ relationship by environmental stimuli in severely brain-damaged (albeit conscious) patients suggests this is a fundamental feature of the conscious brain, which is resilient to substantial metabolic dysfunction following brain injury67. We caution that our results do not suggest that each DoC+ patient understood or processed the story in the same way as healthy individuals. Foremost, DoC patients who retain covert awareness vary widely in their arousal level throughout the day. Further, although the individual DoC+ patients discussed here retained the functional brain architecture to support covert conscious awareness, the absence of a sensory baseline and the lack of individual-level statistics render it impossible to ascertain the extent of conscious processing of the story or its understanding in individual patients.

Previous studies that have compared anesthetized and unconscious brain-injured patients have highlighted that, similarly to the effect of common anesthetic agents including propofol60,68, brain dysfunction in this population is prevalent within the fronto-parietal network69,70. They have indicated preserved sensory processing (e.g., responses to noxious stimulation, auditory or speech perception) in the absence of higher-order components (e.g., neural evidence of pain perception, language comprehension)5, and suggested that disconnection between sensory and fronto-parietal systems is common to both populations. By contrast, our findings suggest that, when the brain is exposed to complex external information, these systems do not disconnect from one another in these unconscious states, as previously suggested by aforementioned resting state studies. Rather, our findings demonstrate that the erosion of functional differentiation among these systems underlies impaired information processing when consciousness is lost. Conversely, the significant increase of functional differentiation between these systems by low-level sensory stimulation relative to the resting state baseline in different conscious populations (i.e., healthy and brain-injured individuals) suggests that functional responsivity to external stimulation is a robust feature of the conscious brain.

Although loss of consciousness is common to both deeply anesthetized and some severely brain-injured patients, these two populations differ greatly. In the former, no structural changes occur, and the functional brain response is altered pharmacologically. In the latter, an array of structural damage, greatly varying across patients, is present and alters brain responses, leading to complete functional loss in some domains and potential functional re-organization and preservation in others. Given the large differences between these two, the similarity of the functional response to previously validated targeted stimulation30 across these populations provides strong evidence for a common mechanism underlying loss of information processing in the unconscious state. These results are consistent with current theories of consciousness, which suggest that it requires both differentiation and integration of information in neural circuits48,54,71, and elucidate the underlying brain mechanism by showing the critical role of functional differentiation between sensory and higher-order systems when information processing is required.

Mechanism for conscious information processing and cognition

The third study further confirmed the role of the functional differentiation between the sensory and higher-order systems in conscious cognition. Individuals who showed higher differentiation between the auditory and dorsal attention network (DAN) in response to the audio story had higher verbal acuity scores than individuals who showed lower differentiation. The story elicited a range of cognitive processes, such as the orientation and modulation of attention to the saliency of incoming auditory inputs (a function subserved primarily by the DAN72) and language perception and comprehension, which corresponded to those engaged by the verbal acuity tasks of the cognitive battery. The functional relationship between the auditory and executive control network (ECN) was not predictive of cognitive performance, which is likely accounted for by the nature of the stimulus and fMRI paradigm, which did not require behavioral response planning or monitoring (a function subserved primarily by the ECN73). There was no relationship between the auditory and fronto-parietal connectivity in response to the story and performance in the short-term memory or reasoning components of the cognitive battery, likely because the story’s cognitive demands loaded weakly on these components. We note that the verbal component of the cognitive battery, which comprised 12 tasks, accounted for the majority of variance in a subset of different tasks that used verbal stimuli (digit span, verbal reasoning, color-word remapping; full description in SI). Thus, in capturing a cross-section of processes employed in these different tasks, the verbal component represented a robust example of varied domain-specific processes, which are abstracted away from the demands of particular tasks.
Therefore, although these results suggested that the relationship between brain connectivity and intelligence is domain-specific, future studies are required to further test the sensory–higher-order networks’ relationship and other cognitive domains/processes.

Further, these results agree with a previous proposal that the relationship between brain connectivity and intelligence is context specific74. In contrast to the a priori predicted relationship between these networks’ connectivity and intelligence during complex sensory stimulation, we found no relationship between them in the resting state. Notably, these results were predicted from two different populations where loss of information processing in unconsciousness suggested a common mechanism for information processing during conscious cognition. Consistent with a recent emerging view in the field75, they suggested that individual differences in intellectual abilities rely on the dynamic reconfigurations of connectivity in response to incoming sensory information76, within a widespread system comprising sensory-specific and extra-modal cortices in fronto-parietal cortex.

In summary, findings herein suggest that the dissolution of functional differentiation is a common basis for loss of information processing across widely different conditions where consciousness is lost. Conversely, they suggest that the responsivity of sensory and higher-order brain systems to external stimulation, especially through the diversification of their functional responses, is an essential feature of conscious cognition and domain-specific intelligence.

Material and Methods

Participants

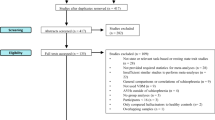

Ethical approval was obtained from the Health Sciences Research Ethics Board and Psychology Research Ethics Board of Western University. All experiments were performed in accordance with the relevant guidelines and regulations set out by the research ethics boards. All healthy volunteers were right-handed, native English speakers, and had no history of neurological disorders. They signed informed consent before participating and were remunerated for their time. The respective substitute decision makers gave informed written consent for each patient’s participation. Nineteen healthy volunteers (18–40 years; 13 males), 11 DoC patients (19–55 years; 5 males), and 14 healthy volunteers (18–40 years; 12 males) participated in studies 1, 2, and 3, respectively. Three volunteers (1 male) were excluded from data analyses of study 1, due to headphone malfunction or physiological impediments to reaching deep anesthesia in the scanner.

Stimuli and Design

In study 1, a plot-driven audio story (5 minutes) was presented in the fMRI scanner to healthy volunteers, who were asked to simply listen with their eyes closed. A resting state scan (8 minutes) was also acquired, during which volunteers were asked to relax with their eyes closed and not fall asleep. A novel re-analysis of data from the scrambled story condition from Naci et al.30 (SI) was performed, as a baseline condition for the intact audio story. In study 2, severely brain-injured patients were scanned as they listened to the same audio story as healthy volunteers, and also during the resting state. In study 3, 14 of the 16 volunteers from the anesthesia study completed a cognitive battery comprising 12 tasks based on classical cognitive psychology paradigms (www.CambridgeBrainSciences.com) (SI). The stimuli and design for each task were reported in Hampshire et al.51.

Sedation procedure

fMRI data was acquired during the audio story and resting state conditions while participants were awake (non-sedated) and deeply anesthetized with propofol (Ramsay score 5)77. Prior to acquiring fMRI data for the wakeful and deeply anesthetized states, 3 independent assessors (two anesthesiologists and one anesthesia nurse) evaluated each participant’s Ramsay level by communicating with them in person inside the fMRI scanner room, as follows. Awake Non-sedated. Volunteers were fully awake, alert and communicated appropriately. For the wakeful session, they were not scored on the Ramsay sedation scale, which is intended for patients in critical care settings or patients requiring sedation. During the wakeful audio story and resting state conditions, wakefulness was monitored with an infrared camera placed inside the scanner. Deep anesthesia. Intravenous propofol was administered with a Baxter AS 50 (Singapore). We used an effect-site/plasma steering algorithm in combination with the computer-controlled infusion pump to achieve step-wise increments in the sedative effect of propofol. The infusion pump was manually adjusted to achieve desired levels of sedation, guided by targeted concentrations of propofol, as predicted by the TIVA Trainer (the European Society for Intravenous Anaesthesia, eurosiva.eu) pharmacokinetic simulation program. The pharmacokinetic model provided target-controlled infusion by adjusting infusion rates of propofol over time to achieve and maintain the target blood concentrations as specified by the Marsh 3-compartment algorithm78 for each participant, as incorporated in the TIVA Trainer software. Propofol infusion commenced with a target effect-site concentration of 0.6 µg/ml and oxygen was titrated to maintain SpO2 above 96%. If the Ramsay level was lower than 5, the concentration was slowly increased by increments of 0.3 µg/ml with repeated assessments of responsiveness between increments to obtain a Ramsay score of 5.
Once participants stopped responding to verbal commands, were unable to engage in conversation, and were rousable only to physical stimulation, they were considered to be at Ramsay level 5. The mean estimated effect-site propofol concentration was 2.48 (1.82–3.14) µg/ml, and the mean estimated plasma propofol concentration was 2.68 (1.92–3.44) µg/ml. Mean total mass of propofol administered was 486.58 (373.30–599.86) mg. The variability of these concentrations and doses is typical for studies of the pharmacokinetics and pharmacodynamics of propofol (SI). For both sessions, prior to the scanning, volunteers were asked to perform a basic verbal recall memory test and a computerized (4-minute) auditory target detection task (SI), which further assessed each individual’s wakefulness/deep anesthesia level independently of the anesthesia team. Scanning commenced only once the agreement among the 3 anesthesia assessors on Ramsay level 5 was consistent with the lack of response in both verbal and computerized behavioral tests.
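The step-wise titration described above (start at a target effect-site concentration of 0.6 µg/ml, then raise it in 0.3 µg/ml increments until a Ramsay score of 5 is reached) can be sketched as a simple loop. This is an illustration of the schedule logic only, not the software used in the study: the function name `titration_targets`, the `assess_ramsay` callback, and the `max_conc` stopping bound are all hypothetical and introduced here for the example.

```python
from typing import Callable, List


def titration_targets(
    assess_ramsay: Callable[[float], int],
    start: float = 0.6,       # initial target effect-site concentration (µg/ml), from the text
    step: float = 0.3,        # increment between assessments (µg/ml), from the text
    target_score: int = 5,    # Ramsay level required before scanning, from the text
    max_conc: float = 4.0,    # hypothetical bound so the loop always terminates
) -> List[float]:
    """Return the sequence of target concentrations visited until the
    assessed Ramsay score reaches `target_score`.

    `assess_ramsay` stands in for the in-person assessment by the
    anesthesia team; here it is just a function of the current target.
    """
    targets: List[float] = []
    k = 0
    while True:
        # Compute each target from the start value to avoid accumulating
        # floating-point error over repeated additions.
        conc = round(start + k * step, 2)
        if conc > max_conc:
            raise RuntimeError("target score not reached within max_conc")
        targets.append(conc)
        if assess_ramsay(conc) >= target_score:
            return targets
        k += 1


if __name__ == "__main__":
    # Toy participant who reaches Ramsay 5 once the target is at least 2.4 µg/ml.
    schedule = titration_targets(lambda c: 5 if c >= 2.4 else 3)
    print(schedule)
```

In the toy run, the response threshold of 2.4 µg/ml is an arbitrary value chosen for the demonstration; in the study, the level was judged by three independent assessors and confirmed with behavioral tests.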

Scanning took place in a research, not a hospital, setting; thus, breathing in the deeply anesthetized individuals could not be protected by intubation and was kept under spontaneous individual control. Therefore, although individuals were monitored closely by two anesthesiologists, airway security was at risk during scanning and time inside the scanner was kept to a minimum to ensure return to normal breathing. Thus, safety concerns for the deeply anesthetized individuals dictated that the novel condition of the naturalistic audio story be presented first. The baseline condition of the resting state was considered of secondary importance, as it has been reported previously in the deep sedation condition of clinical studies. Therefore, this condition was acquired after the story condition across participants. However, the mean estimated effect-site propofol concentration and the mean estimated plasma propofol concentrations were kept stable by the pharmacokinetic model delivered via the TIVA Trainer infusion pump throughout the deep sedation session, and the lack of significant differences in the frame-wise movement parameters (assessed according to Power et al.)79 between the story and the resting state conditions further suggested no difference in the level of sedation between the two conditions. For similar safety reasons, data on the meaningless baseline (scrambled version of the audio story) that was designed to clarify processing mechanisms in wakeful individuals was not collected in deeply anesthetized individuals. Throughout the deep sedation scanning session, the participant’s behavioral profile was monitored inside the scanner room by the anesthesia nurse and one of the anesthesiologists, and outside from the scanner control room, with an infrared camera that displayed the participant’s face. No movement, fluctuation of sedation, or any other state change was observed during the deep sedation scanning for any of the participants included in the study.

Patients

The severely brain-injured patients were selected based on their clinical diagnoses (i.e., VS/MCS/LIS, at the time of fMRI data acquisition) to form a convenience sample of the disorders of consciousness (DoC) population. No previous fMRI data was available for any of the patients at the time of scanning. Prior to commencing the scanning sessions, all VS/MCS patients were tested behaviorally at their bedside (outside of the scanner) with the Coma Recovery Scale-Revised (CRS-R)80. At the bedside behavioral testing, six patients met the recognized criteria for the vegetative state (VS), four for the minimally conscious state (MCS), and one for the locked-in syndrome (LIS) (full description of behavioral scores in Table S3). LIS describes an individual who, as a result of acute injury to the brain stem, has (almost) entirely lost the ability to produce motor actions, apart from small but reproducible eye movements that confirm the presence of consciousness81. The patients’ demographic and clinical data are summarized in Tables S2, S3, and the structural and functional MRI assessment data in Figs 6 and S1, S5, S6.

Inside the scanner, each patient underwent a previously established fMRI-based protocol for assessing auditory perception and detecting covert awareness22,28 (Figs 6 and S5), in the same visit as the audio story scan to help establish the genuine status of consciousness. Prior to assessing command-following, we assessed auditory perception to ensure that it could not have been a limiting factor to producing willful brain responses. Patients had complex underlying medical states, including head flexion and overall muscle rigidity, tracheal tubes for assisted feeding and suctioning, etc., and the highly physically constraining scanning environment compromised their comfort. Some could not lie flat for long periods, others needed frequent suctioning, and others still became agitated after an initial brief period in the scanner. Therefore, to limit patient discomfort, time in the scanner was kept to a minimum and data on the meaningless baseline (scrambled version of the audio story) was not collected.

fMRI Acquisition and Analysis

Healthy individuals

Functional images were acquired on a 3 Tesla Siemens Tim Trio system, with a 32-channel head coil. Standard preprocessing procedures and data analyses were performed with SPM8 and the AA pipeline software82. In the processing pipeline, a temporal high-pass filter with a cut-off of 1/128 Hz was applied and movement was accounted for by regressing out the 6 motion parameters (x, y, z, roll, pitch, yaw). Additionally, frame-wise movement parameters according to Power et al.79 were computed. Prior to analyses, the first five scans of each session were discarded to achieve T1 equilibrium and to allow participants to adjust to the noise of the scanner. To avoid the formation of artificial anti-correlations, a confounding effect previously reported by Murphy and others83,84, we performed no global signal regression. Group-level correlational analyses explored, for each voxel, the inter-subject correlation in brain activity, by measuring the correlation of each subject’s time-course with the mean time-course of all other subjects. Significant clusters/voxels survived the p < 0.05 threshold, corrected for multiple comparisons with the family-wise error (FWE) rate. Functional connectivity (FC) was measured by computing, via Pearson correlation, the similarity of the fMRI time-courses of regions of interest (ROIs), based on well-established landmark ROIs from the resting state literature85 (Table S1), within and between different networks86 (SI). As this measure of connectivity reflected the degree of similarity between the networks’ functional time-courses, an increase/up-regulation of connectivity indicated more similar time-courses between networks, and thus a loss of functional differentiation. Thus, ‘differentiation’ in this context is measured as the inverse of the Pearson correlation value and must not be confused with measures used in other approaches48.
We note that Pearson correlation is a simple FC measure that, while advantageous for its minimal assumptions regarding the true nature of brain interactions and the breadth of its use in the neuroscientific literature, does not directly imply causal relations between neural regions. However, it is an adequate measure of FC for our purposes, because the time-course and spatial extent of the auditory and fronto-parietal networks encompassed a vast swath of the hierarchical processing cascade and, thus, many regions of cause-effect space were triggered by the stimulus. Their FC, as measured through Pearson correlation, reflected their interactions over several minutes and the resulting computations on the information content of the auditory inputs. Future studies will also investigate the connectivity between these regions by using direct measures of causal relationships87,88. T-tests used to explore effects of interest between functional connectivity and cognitive performance were Bonferroni corrected for multiple comparisons.
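The two correlational measures described above, leave-one-out inter-subject correlation and Pearson-based functional connectivity within and between networks, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the function names, the network labels "AUD" and "FP", and the choice to average raw correlation values over ROI pairs (rather than, for example, Fisher-transformed values) are all assumptions made for the example.

```python
import numpy as np


def leave_one_out_isc(data):
    """Inter-subject correlation for one voxel.

    data: array of shape (n_subjects, n_timepoints).
    Returns, for each subject, the Pearson correlation between that
    subject's time-course and the mean time-course of all other subjects.
    """
    data = np.asarray(data, dtype=float)
    n_subjects = data.shape[0]
    total = data.sum(axis=0)
    isc = np.empty(n_subjects)
    for i in range(n_subjects):
        mean_of_others = (total - data[i]) / (n_subjects - 1)
        isc[i] = np.corrcoef(data[i], mean_of_others)[0, 1]
    return isc


def network_fc(roi_timecourses, labels):
    """Mean Pearson correlation within and between networks.

    roi_timecourses: array of shape (n_rois, n_timepoints).
    labels: one network label per ROI (hypothetical names in this sketch).
    Returns a dict mapping (network_a, network_b) to the mean correlation
    over ROI pairs. Within-network entries use distinct ROI pairs only, so
    a network with a single ROI yields NaN for its within-network value.
    """
    r = np.corrcoef(np.asarray(roi_timecourses, dtype=float))
    labels = np.asarray([str(label) for label in labels])
    networks = sorted(set(labels.tolist()))
    fc = {}
    for idx, a in enumerate(networks):
        for b in networks[idx:]:
            block = r[np.ix_(labels == a, labels == b)]
            if a == b:
                # Upper triangle without the diagonal: each pair counted once.
                values = block[np.triu_indices(block.shape[0], k=1)]
            else:
                values = block.ravel()
            fc[(a, b)] = float(values.mean())
    return fc


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_t = 200
    shared = rng.standard_normal(n_t)   # stimulus-driven signal
    other = rng.standard_normal(n_t)    # independent higher-order signal
    labels = ["AUD"] * 3 + ["FP"] * 3

    def noisy(signal):
        return signal + 0.5 * rng.standard_normal((3, n_t))

    differentiated = np.vstack([noisy(shared), noisy(other)])
    undifferentiated = np.vstack([noisy(shared), noisy(shared)])
    print(network_fc(differentiated, labels)[("AUD", "FP")])
    print(network_fc(undifferentiated, labels)[("AUD", "FP")])
```

In the synthetic demonstration, the "undifferentiated" case gives both networks the same underlying signal, so the between-network correlation rises; in the terms used in this section, higher between-network correlation corresponds to lower functional differentiation.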

Severely brain-injured patients

Patient scanning was performed using the same 3 Tesla Siemens Tim Trio system, 32-channel head coil, and data acquisition parameters as for the healthy participants. The same data preprocessing and analyses procedures as for healthy participants were applied to patient data. The patients’ spontaneous arousal during the audio story condition was monitored with an infrared camera placed inside the scanner. One patient (P7) fell asleep in the scanner for the entirety of the session and thus showed no neuroimaging evidence of awareness despite an MCS diagnosis. The extent of information processing in individual patients (Fig. S6) was investigated with a novel technique developed by Naci and colleagues30,32. This approach did not involve normalization to a healthy template, nor did it constrain the patient’s expected brain activity based on the localization of the effect in healthy controls. Instead, the time-course of brain activity in healthy controls served to build a strong prediction for the temporal evolution of brain activity in the patients. The precise location of a patient’s brain activity was expected to deviate from that of the healthy controls. Not only is this naturally the case for individual healthy participants, but also, importantly, it is to be expected in brain-injured patients as a result of structural and concomitant functional re-organization of the brain. Nevertheless, a spatial heuristic based on the controls’ data informed the interpretation of the patients’ results, helping to infer the nature of the underlying residual brain function. In summary, drawing comparisons in the temporal domain enabled direct relation of the healthy controls’ activation to that of brain-injured patients, while avoiding stringent spatial constraints on the patients’ functional anatomy (Fig. S6).
By contrast, for the analysis of functional connectivity based on a set of network nodes pre-defined in the healthy literature in the MNI standard neurological space, each patient’s brain was normalized to the healthy template. We reasoned that any damage within the regions of interest in each patient’s brain would add noise to the brain activity measurement and reduce the power to detect an effect. Therefore, any results in brain-injured patients that aligned with a priori hypotheses based on the anesthesia study were highly unlikely given the heterogeneous structural preservation, and would present a conservative estimate of the underlying effect.
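The idea of using the healthy controls' time-course as a temporal prediction for each patient, without imposing where in the patient's brain the effect should appear, can be illustrated with a simple voxel-wise correlation. This is a deliberately simplified sketch and an assumption on our part: the published technique (refs 30,32) may construct and test the predictor differently, and the function name `temporal_prediction_map` is hypothetical.

```python
import numpy as np


def temporal_prediction_map(patient_voxels, healthy_timecourses):
    """Correlate every patient voxel with the healthy group-mean time-course.

    patient_voxels: array (n_voxels, n_timepoints), in the patient's own space.
    healthy_timecourses: array (n_controls, n_timepoints) from a region or
        component of interest in the healthy group.
    Returns an array (n_voxels,) of Pearson correlations. No spatial
    constraint is applied: any voxel may follow the predicted time-course.
    Voxels with a constant time-course produce NaN.
    """
    voxels = np.asarray(patient_voxels, dtype=float)
    predictor = np.asarray(healthy_timecourses, dtype=float).mean(axis=0)

    # z-score with the population standard deviation so that the mean
    # product of z-scores equals the Pearson correlation coefficient.
    pz = (predictor - predictor.mean()) / predictor.std()
    vz = (voxels - voxels.mean(axis=1, keepdims=True)) / voxels.std(axis=1, keepdims=True)
    return vz @ pz / pz.size


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n_t = 300
    story_signal = rng.standard_normal(n_t)
    controls = story_signal + 0.5 * rng.standard_normal((10, n_t))
    patient = np.vstack([
        story_signal + 0.5 * rng.standard_normal(n_t),  # voxel tracking the story
        rng.standard_normal(n_t),                       # unrelated voxel
    ])
    print(temporal_prediction_map(patient, controls))
```

The resulting map can then be inspected against a spatial heuristic from the controls' data, which is the role that heuristic plays in the description above.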

Data Availability Statement

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Dehaene, S. & Changeux, J. P. Experimental and theoretical approaches to conscious processing. Neuron 70(2), 200–27 (2011).

Dehaene, S., Changeux, J., Naccache, L., Sackur, J. & Sergent, C. Conscious, preconscious, and subliminal processing: a testable taxonomy. Trends In Cognitive Sciences 10, 204–211 (2006).

Lamme, V. A. F. & Roelfsema, P. R. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 23, 571–579 (2000).

Dehaene, S., Charles, L., King, J. R. & Marti, S. Toward a computational theory of conscious processing. Curr. Opin. Neurobiol. 25, 76–84 (2014).

MacDonald, A., Naci, L., MacDonald, P. & Owen, A. M. Anesthesia and neuroimaging: Investigating the neural correlates of unconsciousness. TICS 19(2), 100–107 (2015).

Dueck, M. H. et al. Propofol attenuates responses of the auditory cortex to acoustic stimulation in a dose-dependent manner: A FMRI study. Acta Anaesthesiol. Scand. 49, 784–791 (2005).

Kerssens, C. et al. Attenuated brain response to auditory word stimulation with sevoflurane: a functional magnetic resonance imaging study in humans. Anesthesiology 103, 11–19 (2005).

Veselis, R. A. et al. Thiopental and propofol affect different regions of the brain at similar pharmacologic effects. Anesth. Analg. 99, 399–408 (2004).

Plourde, G. et al. Cortical processing of complex auditory stimuli during alterations of consciousness with the general anesthetic propofol. Anesthesiology 104, 448–457 (2006).

Liu, X. et al. Propofol disrupts functional interactions between sensory and high-order processing of auditory verbal memory. Hum. Brain Mapp. 33, 2487–2498 (2012).

Heinke, W. et al. Sequential effects of propofol on functional brain activation induced by auditory language processing: an event-related functional magnetic resonance imaging study. Br. J. Anaesth. 92, 641–650 (2004).

Adapa, R. M. et al. Neural correlates of successful semantic processing during propofol sedation. Hum. Brain Mapp. 35, 2935–2949 (2014).

Davis, M. H. et al. Dissociating speech perception and comprehension at reduced levels of awareness. Proc. Natl. Acad. Sci. USA 104, 16032–16037 (2007).

The Multi-Society Task Force on PVS. Medical aspects of the persistent vegetative state. New England Journal of Medicine 330, 1499–1508 (1994).

Giacino, J. T. et al. The minimally conscious state: Definition and diagnostic criteria. Neurology 58(3), 349–353 (2002).

Schnakers, C. et al. Diagnostic accuracy of the vegetative and minimally conscious state: clinical consensus versus standardized neurobehavioral assessment. BMC Neurology 9(1), 35 (2009).

Kondziella, D., Friberg, C. K., Frokjaer, V. G., Fabricius, M. & Møller, K. Preserved consciousness in vegetative and minimal conscious states: systematic review and meta-analysis. J Neurol Neurosurg Psychiatry, jnnp-2015.

Cruse, D. et al. Bedside detection of awareness in the vegetative state: a cohort study. The Lancet 378(9809), 2088–2094 (2012).

Monti, M. M. et al. Willful modulation of brain activity in disorders of consciousness. N. Engl. J. Med. 362, 579–589 (2010).

Bodien, Y. G., Giacino, J. T. & Edlow, B. L. Functional MRI Motor Imagery Tasks to Detect Command Following in Traumatic Disorders of Consciousness. Frontiers in Neurology 8, 688 (2017).

Gibson, R. M. et al. Somatosensory attention identifies both overt and covert awareness in disorders of consciousness. Annals of Neurology, In Press, https://doi.org/10.1002/ana.24726 (2016).

Naci, L. & Owen, A. M. Making every word count for nonresponsive patients. JAMA Neurol. 70, 1235–41 (2013).

Chennu, S. et al. Dissociable endogenous and exogenous attention in disorders of consciousness. Neuroimage Clin. 3, 450–61 (2013).

Fernández-Espejo, D. & Owen, A. M. Detecting awareness after severe brain injury. Nat. Rev. Neurosci. 14, 801–9 (2013).

Bardin, J. C. et al. Dissociations between behavioural and functional magnetic resonance imaging-based evaluations of cognitive function after brain injury. Brain 134, 769–82 (2011).

Owen, A. M. et al. Detecting Awareness in the Vegetative State. Science. 313, 1402–1402 (2006).

Schiff, N. D. Altered consciousness. In: Winn R, ed. Youmans and Winn’s Neurological Surgery. 7th ed. New York, NY: Elsevier Saunders. In press (2016).

Naci, L., Cusack, R., Jia, V. Z. & Owen, A. M. The brain’s silent messenger: using selective attention to decode human thought for brain-based communication. The Journal of Neuroscience 33(22), 9385–9393 (2013).

Haugg, A. et al. Do patients thought to lack consciousness retain the capacity for internal as well external awareness? Frontiers in Neurology. In Press (2018).

Naci, L., Sinai, L. & Owen, A. M. Detecting and interpreting conscious experiences in behaviorally non-responsive patients. Neuroimage 145(Pt B), 304–313 (2017).

Sinai, L., Owen, A. M. & Naci, L. Mapping preserved real-world cognition in brain-injured patients. Frontiers in Bioscience. 22, 815–823 (2017).

Naci, L., Cusack, R., Anello, M. & Owen, A. M. A common neural code for similar conscious experiences in different individuals. Proc. Natl. Acad. Sci. USA 111, 14277–82 (2014).

Fischer, D. B. et al. A human brain network derived from coma-causing brainstem lesions. Neurology 87(23), 2427–2434 (2016).

Demertzi, A. et al. Intrinsic functional connectivity differentiates minimally conscious from unresponsive patients. Brain. 138(Pt 9), 2619–31 (2015).

Demertzi, A. et al. Multiple fMRI system-level baseline connectivity is disrupted in patients with consciousness alterations. Cortex 52, 35–46 (2014).

Soddu, A. et al. Resting state activity in patients with disorders of consciousness. Funct Neurol. 26(1), 37–43 (2011).

Soddu, A. et al. Reaching across the abyss: recent advances in functional magnetic resonance imaging and their potential relevance to disorders of consciousness. Prog Brain Res. 177, 261–74 (2009).

Vanhaudenhuyse, A. et al. Default network connectivity reflects the level of consciousness in non-communicative brain-damaged patients. Brain 133(Pt 1), 161–71 (2010).

Boly, M. et al. Functional connectivity in the default network during resting state is preserved in a vegetative but not in a brain dead patient. Hum Brain Mapp. 30(8), 2393–400 (2009).

Duncan, J. The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn. Sci. 14, 172–179 (2010).

Elliot, R. Executive functions and their disorders. Br Med Bull 65, 49–59 (2003).

Shallice, T. From neuropsychology to mental structure (Cambridge University Press, 1988).

Ptak, R. The Frontoparietal Attention Network of the Human Brain: Action, Saliency, and a Priority Map of the Environment. Neurosci. 18, 502–515 (2012).

Woolgar, A. et al. Fluid intelligence loss linked to restricted regions of damage within frontal and parietal cortex. Proc. Natl. Acad. Sci. USA 107, 14899–14902 (2010).

Barbey, A. K. et al. An integrative architecture for general intelligence and executive function revealed by lesion mapping. Brain 135, 1154–1164 (2012).

Hampshire, A. & Owen, A. M. Fractionating attentional control using event-related fMRI. Cereb Cortex 16, 1679–1689 (2006).

Sauseng, P., Klimesch, W., Schabus, M. & Doppelmayr, M. Fronto-parietal EEG coherence in theta and upper alpha reflect central executive functions of working memory. Int J Psychophysiol 57, 97–103 (2005).

Tononi, G., Boly, M., Massimini, M. & Koch, C. Integrated information theory: from consciousness to its physical substrate. Nat rev Neurosci 17, 450–61 (2016).

Alkire, M. T., Hudetz, A. G. & Tononi, G. Consciousness and anesthesia. Science 322, 876–880 (2008).

Singer, W. Consciousness and the binding problem. Ann N Y Acad Sci. 929, 123–46 (2001).

Hampshire, A., Highfield, R. R., Parkin, B. L. & Owen, A. M. Fractionating Human Intelligence. Neuron 76, 1225–1237 (2012).

Barttfeld, P. et al. Signature of consciousness in the dynamics of resting-state brain activity. Proc. Natl. Acad. Sci. 112, 887–892 (2015).

Stamatakis, E. A., Adapa, R. M., Absalom, A. R. & Menon, D. K. Changes in resting neural connectivity during propofol sedation. PLoS One 5(12), e14224 (2010).

Sarasso, S. et al. Consciousness and Complexity during Unresponsiveness Induced by Propofol, Xenon, and Ketamine. Curr Biol. 25, 3099–105 (2015).

Casali, A. G. et al. A theoretically based index of consciousness independent of sensory processing and behavior. Sci Transl Med. 5(198), 198ra105 (2013).

Massimini, M., Ferrarelli, F., Sarasso, S. & Tononi, G. Cortical mechanisms of loss of consciousness: insight from TMS/EEG studies. Arch Ital Biol. 150(2–3), 44–55 (2012).

Wilf, M. et al. Diminished Auditory Responses during NREM Sleep Correlate with the Hierarchy of Language Processing. PLoS One. 11(6), e0157143 (2016).

Strauss, M. et al. Disruption of hierarchical predictive coding during sleep. Proc Natl Acad Sci USA 112(11), E1353–62 (2015).

Pigorini, A. et al. Bistability breaks-off deterministic responses to intracortical stimulation during non-REM sleep. Neuroimage. 112, 105–13 (2015).

Boveroux, P. et al. Breakdown of within- and between-network resting state functional magnetic resonance imaging connectivity during propofol-induced loss of consciousness. Anesthesiology 113, 1038–1053 (2010).

Jordan, D. et al. Simultaneous electroencephalographic and functional magnetic resonance imaging indicate impaired cortical top-down processing in association with anesthetic-induced unconsciousness. Anesthesiology 119, 1031–1042 (2013).

Greicius, M. D. et al. Persistent default-mode network connectivity during light sedation. Hum. Brain Mapp. 29, 839–847 (2008).

Martuzzi, R., Ramani, R., Qiu, M., Rajeevan, N. & Constable, R. T. Functional connectivity and alterations in baseline brain state in humans. Neuroimage 49, 823–834 (2010).

Boly, M. et al. Connectivity Changes Underlying Spectral EEG Changes during Propofol-Induced Loss of Consciousness. Journal of Neuroscience 32, 7082–7090 (2012).

Casarotto, S. et al. Stratification of unresponsive patients by an independently validated index of brain complexity. Ann Neurol. 80(5), 718–729 (2016).

Brown, E. N., Lydic, R. & Schiff, N. D. General anesthesia, sleep, and coma. N Engl J Med. 363(27), 2638–50 (2010).

Laureys, S. The neural correlate of (un)awareness: lessons from the vegetative state. Trends Cogn. Sci. 9, 556 (2005).

Ku, S. W., Lee, U., Noh, G. J., Jun, I. G. & Mashour, G. A. Preferential Inhibition of Frontal-to-Parietal Feedback Connectivity Is a Neurophysiologic Correlate of General Anesthesia in Surgical Patients. PLoS ONE 6, e25155 (2011).

Thibaut, A. et al. Metabolic activity in external and internal awareness networks in severely brain-damaged patients. J. Rehabil. Med. 44, 487–494 (2012).

Laureys, S. et al. Brain function in the vegetative state. Adv. Exp. Med. Biol. 550, 229–238 (2004).

Seth, A. K., Izhikevich, E., Reeke, G. N. & Edelman, G. M. Theories and measures of consciousness: an extended framework. Proc Natl Acad Sci USA 103(28), 10799–804 (2006).

Corbetta, M. & Shulman, G. L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215 (2002).

Kroger, J. K. et al. Recruitment of anterior dorsolateral prefrontal cortex in human reasoning: a parametric study of relational complexity. Cerebral cortex 12(5), 477–485 (2002).

Hearne, L. J., Mattingley, J. B. & Cocchi, L. Functional brain networks related to individual differences in human intelligence at rest. Sci Rep. 6, 32328 (2016).

Barbey, A. K. Network Neuroscience Theory of Human Intelligence. Trends Cogn Sci. 22(1), 8–20 (2018).

Cusack, R., Veldsman, M., Naci, L., Mitchell, D. J. & Linke, A. Seeing different objects in different ways: measuring ventral visual tuning to sensory and semantic features with dynamically adaptive imaging. Hum Brain Mapp 33(2), 387–397 (2012).

Ramsay, M., Savage, T., Simpson, B. & Goodwin, R. Controlled sedation with alphaxalone-alphadolone. Br. Med. J. 2(5920), 656–9 (1974).

Marsh, B. M., White, M., Morton, N. & Kenny, G. N. Pharmacokinetic model driven infusion of propofol in children. British journal of anaesthesia 67(1), 41–48 (1991).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59(3), 2142–2154 (2012).

Giacino, J. T., Kalmar, K. & Whyte, J. The JFK Coma Recovery Scale-Revised: measurement characteristics and diagnostic utility. Archives of physical medicine and rehabilitation 85(12), 2020–2029 (2004).

Schnakers, C. et al. Cognitive function in the locked-in syndrome. J. Neurol. 255, 323–30 (2008).

Cusack, R. et al. Automatic analysis (aa): efficient neuroimaging workflows and parallel processing using Matlab and XML. Frontiers in neuroinformatics 8, 90 (2015).

Murphy, K., Birn, R. M., Handwerker, D. A., Jones, T. B. & Bandettini, P. A. The impact of global signal regression on resting state correlations: are anti-correlated networks introduced? Neuroimage 44(3), 893–905 (2009).

Anderson, J. S. et al. Network anticorrelations, global regression, and phase‐shifted soft tissue correction. Human brain mapping 32(6), 919–934 (2011).

Raichle, M. E. The Restless Brain. Brain Connect. 1, 3–12 (2011).

van den Heuvel, M. P. & Pol, H. E. H. Exploring the brain network: A review on resting-state fMRI functional connectivity. European Neuropsychopharmacology 20(8), 519–534 (2010).

Smith, S. M. et al. Network modelling methods for FMRI. Neuroimage 54, 875–891 (2011).

Ramsey, J. D. et al. Six problems for causal inference from fMRI. Neuroimage 49, 1545–1558 (2010).

Acknowledgements

We thank Dr. Conor Wild and Ms. Leah Sinai for their help with the scrambled audio stimulus generation and data acquisition, and Dr. Tim Bayne and the anonymous reviewers for their valuable feedback. This research was supported by the L’Oréal-UNESCO for Women in Science Excellence Research Fellowship and the Wellcome Trust Institutional Strategic Support Fund to L.N., and by a Canada Excellence Research Chair to A.M.O.

Author information

Contributions

Conceptualization, L.N. Methodology, L.N., A.H., R.C. Recruitment, E.H., A.M., M.A., L.G. Data Acquisition, L.N., E.H., A.M., M.A., S.N., M.A., C.H. Formal Analyses, L.N., A.H., E.H., R.C. Writing, L.N. Supervision, A.M.O. Funding and resource acquisition, M.A., C.H., A.M.O.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Naci, L., Haugg, A., MacDonald, A. et al. Functional diversity of brain networks supports consciousness and verbal intelligence. Sci Rep 8, 13259 (2018). https://doi.org/10.1038/s41598-018-31525-z
