Abstract

Incomplete or partial observations of network structures pose a serious challenge to theoretical and engineering studies of real networks. To remedy the missing links in real datasets, topology-based link prediction is introduced into the studies of various networks. Due to the complexity of network structures, the accuracy and robustness of most link prediction algorithms are not satisfying enough. In this paper, we propose a quantum-inspired ant colony optimization algorithm that integrates ant colony optimization and quantum computing to predict links in networks. Extensive experiments on both synthetic and real networks show that the accuracy and robustness of the new algorithm is competitive in respect to most of the state of the art algorithms. This result suggests that the application of intelligent optimization to link prediction is promising for boosting its accuracy and robustness.

Similar content being viewed by others

Introduction

Many complex systems, including social, biological, information and technological systems can be well described as networks where nodes represent individuals or organizations and edges represent the relationships or interactions between them. But real-world networks are typically incomplete or inaccurate, which makes it hard to completely and precisely depict the properties of these networks. To handle this problem, researchers turn to link prediction to refine the networks before their specific studies on them1,2.

Link prediction in complex networks concerns the probability of establishing a link between two disconnected nodes. In the past decade, there are many applications of link prediction for various networks3,4,5,6,7,8,9,10,11,12,13,14,15,16. For instance, in online social networks, such as Facebook, Weibo and Twitter, link prediction is used to recommend registered users to connect with someone they know but not recognized in the network5,6,7. As a personalized service, an accurate recommendation is likely to promote its users’ loyalty5. In biological networks, such as protein-protein interaction networks and metabolic networks, the links are typically neither complete (high false positives) nor highly reliable (high false negative)8,9,10. Accurate link prediction based on known network structure and some specific biological information is helpful for designing targeted experiments, which may substantially reduce experimental time and cost11,12,13,14. In the application to monitoring the network of criminals, link prediction is used to discover the possible connections among criminals (including potential criminals), which is useful for locating some specific criminals and thus detecting and disrupting terror attacks15,16.

So far, topology-based link prediction algorithms can be roughly divided into three categories. The first category is based on various generating mechanisms, for instance the common neighbours (CN) algorithm17,18,19 and the cannistraci-resouce-allocation algorithm (CRA, which can be also called Cannistraci-Hebb network automata model (CH) based on local community paradigm)20,21,22. Their computational complexity is relatively low, while precision is not so satisfying. The second category is based on probabilistic models, for instance the stochastic block model (SBM)23,24 and the fast probability block model (FBM)25. Relatively, their precision is generally higher, while computational complexity is likewise higher. Thus, they are not suitable for large-scale networks. The third category is model-free, such as the structural perturbation method (SPM)26,27. Among them, the SBM and FBM usually perform poorly because of the issues of inference in the maximum likelihood procedure, which may fall in local optimum or in the configuration of maximum likelihood that are unrealistic. The CRA (or CH) algorithm performs the best specifically on the networks that are generated by a hyperbolic geometry because its generative mechanism (which is a local community paradigm) is associated to that geometry. The SPM algorithm is the most robust and well-performed in general regardless of the hidden generative model of the network. This may originate from its model free property, which is adaptive since it does not assume any underlying mechanism.

In this paper, we propose a quantum-inspired ant colony optimization algorithm (QACO) to predict missing links in networks, which integrates ant colony optimization and quantum computing28. To sum up, our contributions are three-folds. First, based on the assumption that the pheromone of artificial ants can reflect the importance of a path, we introduce a biased ant colony optimization algorithm to remedy incomplete datasets. In our algorithm, the visibility integrates the quasi-local information of nodes. Second, quantum bits and quantum logic gates are introduced into the ant colony optimization algorithm, which can effectively prevent the optimization process from falling into a local optimum. Third, extensive experiments are conducted on many benchmark networks. Our experimental results show that the accuracy and robustness of the QACO algorithm is competitive in respect to most of the state of the art algorithms.

Results

The QACO algorithm

Overview

Consider an undirected network G = (V, E), the corresponding complete network G′ is constructed by connecting all the disconnected node pairs in G. Let all artificial ants randomly walk in G′, where links and nodes are allocated some amount of pheromone. The probability that a link or node is visited by ants is proportional to its pheromone.

Assume an ant visits n nodes, which results in a walking path. The probability that an ant travels from node vi to node vj is pij. The value of pij depends on the pheromone of the path, the visibility of link (vi, vj) and the quantum pheromone of vj. After an ant reaches its destination (say vj), the pheromone on the links and nodes in the path will be updated according to a certain rule. In turn, the updated pheromone on the links and nodes will affect the paths of the ants in the next iteration. Generally, the pheromone and visibility of the links will lead the ants to the globally optimal paths, since following the quantum pheromone is an effective way to avoid local optimums. Finally, the pheromone τij and visibility ηij on link (vi, vj) can, to some extent, reflect the similarity between vi and vj.

Quantum Pheromone

-

Quantum computation: The basic principle of quantum computation is that the relative phase and the probability amplitude of each ground state of the superposition state are not constant. The occurrence probability of each ground state varies with time, leading to the corresponding variations of the superposition state. Quantum computation is based on a device called quantum gate, which can realize a logical transformation in a certain time interval. The properties of quantum computation include interference, superposition and parallelism. In this paper, we apply quantum bits to representing the quantum pheromone of nodes and quantum rotation gate to updating quantum pheromone of ants29,30.

-

Quantum pheromone representation: In quantum computation, |0〉 and |1〉 denote two basic states of microscopic particles. An arbitrary state of a single quantum bit can be represented by a linear combination of the two basic states. The sign |〉 is called Dirac mark, which represents an eigenstate in quantum mechanics. Each quantum bit is typically in a superposition state, which is a combination of the two eigenstates. Thus, it can be represented by |φ〉 = α|0〉 + β|1〉, where α and β are a pair of complex numbers, which denote the probability amplitudes of quantum states. For the quantum state |φ〉, the probabilities of collapsing to |0〉 and |1〉 are |α|2 and |β|2 respectively, where |α|2 + |β|2 = 1.

A quantum bit represents two states |0〉 and |1〉. Naturally, a quantum bit of length m can represent 2m different states. The probability amplitude of individual j with m quantum bits is thus defined as \({P}_{j}{=[}_{{\beta }_{1}}^{{\alpha }_{1}}{|}_{{\beta }_{2}}^{{\alpha }_{2}}{|}_{\cdots }^{\cdots }{|}_{{\beta }_{m}}^{{\alpha }_{m}}]\), where |αi|2 + |βi|2 = 1 for i = 1, 2, …, m.

In the QACO, the quantum pheromone is represented by the quantum bit. Let the size of population be n. The quantum pheromone is defined as p = (p1, p2, p3, …, pn), where pj (j = 1, 2, …, n) is the quantum pheromone of individual j31,32.

-

Quantum pheromone updating: The core of ant colony optimization algorithm is that the ants select their paths according to the density of pheromone, which can be applied to link prediction. For each ant, the probability of choosing a node is proportional to the density of pheromone left on the node. The update of the quantum pheromone intensity of a node can be implemented by updating the rotation angle of the quantum rotating gate in QACO. Concretely, the quantum bit of a node is updated by tuning the probability amplitude of quantum bit in the quantum rotating gate. Here, the quantum rotating gate is defined as:

with i = 1, 2, …, m. Here, m is the number of ants used, \({[{\alpha }_{i}^{t+1},{\beta }_{i}^{t+1}]}^{T}\) is the probability amplitude of the i-th quantum bit at the t-th iteration. θi is the rotation angle of the i-th quantum bit. Its size and orientation can be determined according to the formula θi = Δθ * sign(αi * βi). sign(αI * βi) is a sign function. For αi * βi > 0, sign(αi * βi) = 1; for αi * βi < 0, sign(αi * βi) = −1; and for αi * βi = 0, sign(αi * βi) = 0. Δθ usually falls in the range of [0.01π, 0.08π]33.

Parameters setting

-

Parameter initialization: At the beginning, we uniformly set the pheromone on all links to

$${\tau }_{ij}=\delta ,$$(2)where δ is a constant.

-

On the other hand, we set the initial value of visibility of link (vi, vj) to

$${\eta }_{ij}=\sum _{z\in {\rm{\Gamma }}(i)\cap {\rm{\Gamma }}(j)}\frac{1}{{[k(z)]}^{\iota }}+\omega \sum _{x,y\in {l}_{i\to j}}\frac{1}{{[k(x)k(y)]}^{\iota }},$$(3)where ι is a constant, varying with the topology of network. Following the settings of previous studies34, we set ω = 0.01. Γ(i) and Γ(j) denote the sets of i and j’s neighbors, respectively. kx, ky and kz denote the degrees of x, y and z, respectively. li→j denotes the node set in the paths from node i to node j, the length of which is 3. x and y are intermediate nodes in the set li→j.

Finally, we set the intensity of quantum pheromone at node vj to

$${\mu }_{j}=\frac{1}{|{\alpha }_{j}{|}^{2}},$$(4)where |αj|2 denotes the probability that the quantum state of the j-th quantum bit collapses to |0〉, while 1 − |αj|2 denotes the probability collapsing to |1〉.

-

State transition rule: In each iteration, the transition probability that an ant k moves from vi to vj is defined as

$${p}_{ij}^{k}=\frac{{[{\tau }_{ij}]}^{\lambda }{[{\eta }_{ij}]}^{\kappa }{[{\mu }_{j}]}^{\nu }}{\sum _{l}{[{\tau }_{il}]}^{\lambda }{[{\eta }_{il}]}^{\kappa }{[{\mu }_{l}]}^{\nu }},$$(5)where τij and ηij are the pheromone and visibility of link (vi, vj), respectively. μj is the quantum pheromone intensity of node vj. The parameters λ, κ and ν are used to control the impact of the link pheromone, visibility and node pheromone intensity, respectively.

-

Fitness function: In each iteration, an ant walks through a path of N nodes. The path of the k-th ant is denoted by S(k) = (v1, v2, v3, …, vn), where vi ∈ V is the i-th node in the path. The fitness function is defined to evaluate each path and update the pheromone. Here, the fitness function is defined as \(Q(S)=C\ast \frac{1}{n}{\sum }_{i=1}^{n}d({v}_{i})\), where C is a positive constant and d(vi) is the degree of node vi. Generally, a path with more densely-connected nodes gets a higher score.

-

Pheromone updating: After each iteration, the QACO algorithm updates the pheromone of each link according to the following formula:

$${\tau }_{ij}^{new}=\rho \cdot {\tau }_{ij}^{old}+{\rm{\Delta }}\tau (i,j),$$(6)where Δτ(i, j) is the variation of pheromone on link (vi, vj), which is defined as \({\sum }_{k=1}^{m}{\rm{\Delta }}{\tau }_{k}(i,j)\). Here, Δτk(i, j) is the pheromone released by ant k on link (vi, vj), ρ is the trajectory persistence, which is in the range of [0, 1). Obviously, Δτ(i, j) is proportional to the frequency that the link is visited by ants. If we consider only the fitness Q in the update of pheromone, the gaps of pheromone among links may grow. In this case, the paths of ants are likely to fall into local optimums. To remedy this drawback, βk is introduced to Eq. (6). In Eq. (6), Δτk(i, j) is defined as Q⋅(1 − |βk|2), where the probability that ant k selects node vj is proportional to |βk|2. In this way, we can effectively prevent that the gap of the pheromone among links drastically grows. In other words, it can keep the optimizing process from falling into local optimums.

-

Termination conditions and evaluations: Once the number of iterations exceeds a prespecified threshold Nc, the optimizing process will be terminated. Finally, we can obtain a matrix τ + ε · η, which is normally referred to as the score matrix in some ref.35. To evaluate the performance of the QACO algorithm for link prediction, we will compare the precision of our algorithm to that of a number of existing algorithms in the performance evaluation section.

The Algorithm

The proposed algorithm is outline in Algorithm 1, where the major steps are explained as follows:

-

1.

Step 1 (Line 1–2): In the network G of n nodes, m ants are used. For node j, its status in the t-th iteration is \({P}_{j}^{t}=[\begin{array}{c}{\alpha }_{1}^{t}\\ {\beta }_{1}^{t}\end{array}|\begin{array}{c}{\alpha }_{2}^{t}\\ {\beta }_{2}^{t}\end{array}|\begin{array}{c}\cdots \\ \cdots \end{array}|\begin{array}{c}{\alpha }_{m}^{t}\\ {\beta }_{m}^{t}\end{array}]\), t = 1, 2, …, Nc (the number of iterations). In the first iteration, \({\alpha }_{i}={\beta }_{i}=\frac{1}{\sqrt{2}}\) for all i (i = 1, 2, …, m). The initial settings of the pheromone matrix τ and the visibility matrix η follow Eq. (2) and Eq. (3), respectively. In the experiments, we set δ, λ, κ, ν, ρ and ε to 1, 1, 2, 1, 0.9 and 0.2, respectively. ι is determined by the topology of networks, which is set to 0 or 1.

-

2.

Step 2 (Line 4–7): The m ants are randomly assigned to m nodes after shuffling the order of nodes. For example, to assign 3 ants to 3 of 5 nodes. We first shuffle the order of 5 nodes, say the result is 3, 1, 5, 2, 4. Then the 3 ants are assigned to the nodes of 3, 1 and 5, respectively.

-

3.

Step 3 (Line 8–19): The transition probability of ant k from vi to vj, \({p}_{ij}^{k}=\frac{{p}_{ij}^{k}}{{\sum }_{l}{p}_{il}^{k}}\). In each iteration, m ants simultaneously walk for n steps without interaction.

-

4.

Step 4 (Line 20–34): After each iteration, the pheromone matrix τ of links will be updated according to Eq. (6). Similarly, the pheromone of nodes will be updated too, according to Eq. (1).

-

5.

Step 5 (Line 36–37): Let t = t + 1. If t < Nc, then go to Step 2. Otherwise, output the matrix τ + ε · η, which will be used to calculate the precision.

-

Computational Complexity: Let n be the number of nodes in the network, and 〈k〉 be the average degree of nodes. Firstly, in order to calculate the visibility matrix η, all the neighbours of the second-order neighbours of each node are required to loop over. Therefore, the time complexity of calculating visibility matrix η is O(n〈k〉3). Secondly, the number of qubits on each node is the same as the number of ants m. Therefore, the time complexity of initializing quantum pheromone μ is O(nm). Thirdly, the paths are the traces that the ants traverse in the network. The time complexity for forming a path in each iteration is O(n2). Then the time complexity for recording m paths in Nc iterations is O(Ncmn2). In conclusion, the overall time complexity of the QACO algorithm can be estimated as O(n〈k〉3) + O(nm) + O(Ncmn2). Since 〈k〉, m and Nc are constants, the time complexity of the algorithm is O(n2).

Experimental results and analysis

In our experiments, we provide the performance comparison of the proposed algorithm against four state-of-the-art algorithms mentioned in Section Methods, including the algorithms based on the indices of SPM, SBM, FBM and CH. Among them, the SPM, SBM and FBM algorithms are three global algorithms for topological link prediction, while the CH algorithm is a local algorithm for topological link prediction. The recent studies have confirmed that the SPM and CH algorithms are actually one of the best-performing state-of-the-art global and local algorithms, respectively20. In order to verify the robustness of our algorithm, many different types of networks (the artificial, small-size, large-size and time-evolving networks) introduced in Table SI of the Supplementary Information (SI) are used. In addition, considering the high time complexity of the SBM and FBM algorithms, we only compare the SPM and CH algorithms on the large-size and time-evolving networks. Their performance will be shown as the following.

Evaluation on artificial networks

Figures 1 and 2 show the precision of the tested algorithms on the nPSO and WS networks, respectively. Figure 1 shows the average precision on the nPSO networks with 8 communities. We select the parameters (r, m, T and N) of the nPSO model, based on the topological features of the small-size real networks mentioned above. In Fig. 1, one can observe that the algorithms achieve fluctuating performances for low temperature (high clustering), relatively stable performances for medium temperature (medium clustering) and pretty stable performance for high temperature (low clustering). Furthermore, the performance of these algorithms generally decays with temperature. In general, the CH algorithm outperforms the other algorithms for most of the parameter domain. Meanwhile, as the network size N expands, the advantage of CH algorithm becomes more pronounced. Whereas, our algorithm is ranked the second. This result also confirms the robustness of our algorithm.

The precision evaluation of six algorithms with 90% of the links used as the training set on the nPSO networks with 8 communities. Synthetic networks are generated by the nonuniform PSO model with parameters γ = 3 (power-law degree distribution exponent), m = [10, 12, 14] (half of average degree), T = [0.1, 0.3, 0.5] (temperature, inversely related to the clustering coefficient), N = [100, 500, 1000] (network size) and 8 communities. For each combination of parameters, 100 networks are generated. For each parameter combination, the plots report the mean precision and standard error over the random iterations. Note that for SBM only 10 networks are considered due to the high time complexity. In addition, HD is the hyperbolic distances between the nodes in the original network.

The precision evaluation of three algorithms with 90% of the links used as the training set on the Watts-Strogatz networks. Synthetic networks are generated by the Watts-Strogatz model with parameters N = [100, 500, 1000] (network size), m = [10, 12, 14] (half of average degree) and β = [0.001, 0.01, 0.1] (rewiring probability). For each combination of parameters, 100 networks are generated. For each parameter combination, the plots report the mean precision and standard error over the random iterations.

Figure 2 shows the average precision on the Watts-Strogatz networks. The values of N and m are the same as what they are in the nPSO networks. The values of the parameter β are adjusted to build a network sharing a similar clustering coefficient and average shortest path length with the nPSO networks. In Fig. 2, one can observe that the performance of the SPM algorithm is ranked first, while that of our algorithm is ranked second again. Generally speaking, the robustness of our algorithm is better than that of the SPM and CH algorithms on both artificial networks.

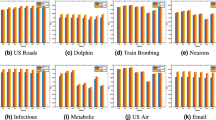

Evaluation on small-size complex networks

In order to test the robustness of our algorithm, 20 small-size network datasets are collected, which covers social networks, biological networks and technology networks, etc.

Table 1 shows the precision of the tested algorithms on the 20 small-size networks. One can observe that the SPM algorithm achieves the highest performance in 12 out of 20 networks, tying for the first place in two of them, whereas our algorithm receives the best result in four networks, tying for the first place in one of them. In Table 1, the mean precision of our algorithm over the tested datasets is tied with the SBM algorithm for second place, just following the SPM algorithm. The last row in Table 1 also shows the mean ranking of the tested algorithms on the networks. The precision-ranking metric is a more robust and reliable metric for assessing the overall performance. The mean ranking of the algorithms over all the networks represents the final evaluation score. One can observe that the SPM algorithm is still the best performing approach, as already deducible from mean precision, with an average ranking of 2.15. Our algorithm is ranked second with 2.78. The third approach is CH with 3.20. The SBM and FBM algorithms are ranked the fourth and fifth, respectively (The result of ranking score for each network can refer to Table SII in the SI). In short, compared with these state-of-the-art algorithms, the performance of our algorithm basically exceeds the CH, SBM and FBM algorithm, while lags behind the global algorithm, SPM. In addition, the performance of the CH algorithm (a local approach) exceeds that of the SBM and FBM algorithm (two global algorithms), which demonstrates its capability of prediction.

In order to check the statistical significance of the difference in performance between the algorithms, we perform the pairwise permutation tests (10,000 iterations), based on the precision-ranking values of each algorithm over the networks (columns of Table SII in the SI). Table SIII in the SI presents the pairwise p-values, adjusted for multiple hypothesis comparison by the Benjamini-Hochberg correction. The p-values lower than the significance level 0.05 are highlighted in bold. We find that the mean performances of the SPM-CH, SPM-SBM, SPM-FBM and QACO-FBM pairs are significantly different. In the sense, the result confirms the advantage of the SPM in performance, on the other hand, confirms that our algorithm is competitive in respect to the four state of the art algorithms. Note that the SPM is a global algorithm with time complexity, N3, while our algorithm is a quasi-local algorithm with time complexity, N2.

Comparing the performance of our algorithm in a variety of networks, we find that our algorithm is suitable for the networks with disassortative mixing36. For example, for the four networks, mouse neural, ACM2009 contacts, physicians innovation and haggle contacts networks, our algorithm is all ranked first in terms of precision-ranking. Their Pearson coefficients are −0.52, −0.12, −0.08 and −0.47, respectively. Conversely, when the Pearson coefficient is positive, our algorithm typically performs poorly. For example, the precision-ranking values of our algorithm are 4, 3, 4, 4.5 and 4 on the football, jazz, residence hall friends, infectious contacts and email networks, respectively. All of the networks are assortative mixing (The details can refer to Table SI and Table SII in the SI.).

Evaluation on large-size real complex networks

Aiming to test the scalability of our algorithm, 12 large-size network datasets of different type are selected, which covers social networks, internet networks and technology networks, etc. Their topological features of common interest are likewise shown in Table SI. Table 2 shows the precision of the tested algorithms on the networks. It is evident that the CH algorithm obtains the highest performance in 6 out of 12 networks, tying the first position in two of them. The overall performance can be measured by the mean ranking. The mean ranking of the CH algorithm is 1.88, which is higher than its counterparts. With respect to the value of precision, the CH algorithm performs very poorly on the router network, which drags its mean precision. This result may be induced by the sparsity of the router network (its average clustering coefficient is 0.01), which restricts the formation of local community and ultimately leads to poor performance of CH algorithm on this network. Instead, the SPM algorithm performs very well on the yeast and arxiv astroph network. The remarkable advance leads to its mean precision is ranked first. However, its mean ranking is at the bottom, since its performance on the other networks is not desirable. The performance of the QACO algorithm is ranked second in both rankings.

We also perform the pairwise permutation tests (10,000 iterations), based on the precision-ranking values of each algorithm over the large-size real networks (columns of Table SIV). From Table SV, one can find that the mean performances of the three algorithms are not significantly different on these networks. Relatively speaking, the CH algorithm performs better on most of the large-size real networks.

Evaluation on time-evolving real networks

In order to maintain the diversity of evaluation framework, we adopt the evaluation framework mentioned in previous study20, which considers the link-growth evolution of a real network over time. Six Autonomous systems (AS) Internet topologies collected by CAIDA are selected, which is from September 2009 to December 2010, spanning 3 months in total. Their topological features of common concern are shown in Table SI.

In Table 3, one can observe that the QACO algorithm outperforms the CH and SPM algorithm, with a mean precision of 0.15. One can see that the precision grows with time, going from 0.13 to 0.16 for the QACO, from 0.11 to 0.14 for the CH and from 0.07 to 0.11 for the SPM, respectively.

In conclusion, different from the removal and re-prediction framework in which the set of missing links is artificially generated by a random procedure, here the set of links that will appear after two consecutive time points is given by ground-truth information, which makes the result even more truthful and significant, confirming the effectiveness of the QACO algorithm.

In summary, our algorithm is competitive in respect to most of the state-of-the-art algorithms in balancing precision and robustness on the tested networks.

Discussions

Inspired by ant colony optimization and quantum computing, we propose a quantum-inspired ant colony optimization algorithm for link prediction in networks. By utilizing visibility, the algorithm integrates the quasi-local structural information of individuals. By using quantum bits and quantum logic gates, it can effectively keep the optimization process from being trapped in local optimums. Compared with a series of the state-of-the-art algorithms on the artificial, small-size, large-size and time-evolving networks, our algorithm exhibits a satisfying robustness and scalability. Especially on the time-evolving real networks, our algorithm outperforms all the tested algorithms. We believe that the quantum-inspired ant colony optimization algorithm provides a new paradigm for the future studies of link prediction.

Admittedly, there may be other definitions of pheromone and visibility that can improve the performance of our algorithm. The pheromone updating strategy and the parameter selecting procedure can also be further optimized. Apart from the ant colony optimization algorithm, other intelligent optimization algorithms may be more effective on some networks. All these will be explored in our future work.

Methods

Link prediction problem

Consider an undirected network G(V, E) where V is a set of nodes and E is a set of links. Here, self-connections and multiple links are not considered. For each pair of disconnected nodes x, y ∈ V in the network, a link prediction algorithm assigns a score Sxy, which indicates the probability of x and y connecting with each other. By sorting the scores of all disconnected node pairs, those pairs at the top of the list are more likely to be connected.

In order to test the performance of the algorithm, the existing links in the network, E, are randomly divided into two sets: the training set ET and the probe set EP. Here, E = ET ∪ EP and ET ∩ EP = ∅. The algorithm estimates the scores of disconnected node pairs in G based on the information of the training set, and EP is used as the benchmark for evaluating the prediction result.

Evaluation metrics

In order to measure the accuracy of link prediction, we use the following metrics: Precision and Precision-ranking37,38,39,40.

-

Precision refers to the fraction of correctly predicted links in the predicted links. It is defined as:

where m denotes the number of correctly predicted links, L denotes the number of predicted links. For a given L, the greater precision is, the better the performance of an algorithm is.

-

Precision-ranking is a more robust and reliable metric for assessing the overall performance. For each network, all the algorithms are ranked by precision in descending order. The mean ranking of the algorithms over all the networks represents the final score.

Benchmark algorithms

For a comprehensive comparison, three global algorithms (SPM, SBM and FBM) and a local algorithm (CH) are considered.

Let x and y be two randomly selected nodes in a network. Γ(X) and Γ(y) denote the sets of x and y’s neighbors, respectively. In the following, we will briefly introduce the definitions of the algorithms mentioned above.

The SPM algorithm is a structural perturbation method that relies on a theory similar to the first-order perturbation in quantum mechanics. The idea behind this algorithm is that a missing part of the network is predictable if it does not significantly change the structural features of the observable part26,41. It is thus defined as:

where λk, xk and Δλk are the eigenvalue of the observed matrix, the corresponding orthogonal normalized eigenvector and the eigenvalue of a perturbation set, respectively.

The SBM algorithm is based on the assumption that the probability that two nodes are connected depends only on the groups to which they belong, and it is one of the most general network models23.

The FBM algorithm is a global algorithm based on the same network partitioning theory as the SBM algorithm, but it introduces a greedy strategy for an efficient sampling over the space of the possible partitions, which leads to high improvements in the computational time25.

The CH index is based on the assumption that two nodes are more likely to be connected if their common neighbours are densely connected20,42. It is thus defined as:

where γ(z) refers to the sub-set of z′s neighbours which are also common neighbours of x and y. |γ(z)| is defined as the local community degree of z.

Data sets

In order to validate the proposed algorithm, we provide the performance comparison over two groups of network datasets. The first group composes of two synthetic networks that are Watts-Strogatz (WS)’s small-world network43 and Cannistraci’s nonuniform popularity-similarity optimization (nPSO) network44. The WS small-world network is generated by rewiring r * |E| links on a regular lattice with n nodes, where r denotes the randomized rewiring probability and |E| denotes the total number of links45,46. The nPSO model generates synthetic networks in the hyperbolic space where heterogeneous angular node attractiveness is forced by sampling the angular coordinates from a tailored nonuniform probability distribution, and the nPSO model allows to explicitly control the size, the mixing property and the number of communities of the generated network47.

The second group composes of three types of real-world networks: 20 small-size, 12 large-size and 6 time-evolving real networks, which are described in detail in the SI. In addition, all real networks have been transformed into undirected, unweighted and no self-loops. Moreover, we consider only the largest component of each of the all real-world networks. The basic topological properties of the largest component in each tested network are shown in Table SI.

References

Li, F. H. et al. Node-coupling clustering approaches for link prediction. Knowledge-Based Systems 89, 669–680 (2015).

Tan, S. Y., Wu, J. & Lü, L. Y. Efficient network disintegration under incomplete information: the comic effect of link prediction. Sci. Rep. 6, 22916 (2016).

Zhang, Q. M., Shang, M. S. & Lü, L. Y. Similarity-based classification in partially labeled networks. International Journal of Modern Physics C 21, 813–824 (2010).

Vinayagam, A., Stelzl, U. & Foulle, R. A directed protein interaction network for investigating intracellular signal transduction. Science Signaling 4, rs8 (2011).

Zhang, Y., Zheng, Z. & Lyu, M. R. An online performance prediction framework for service-oriented systems. IEEE Transactions on Systems, Man, and Cybernetics: Systems 44, 1169–1181 (2014).

Aiello, L. M. et al. Friendship prediction and homophily in social media. ACM Transactions on the Web 6, 1–33 (2012).

Zhang, Z. K., Zhou, T. & Zhang, Y. C. Tag-aware recommender systems: a state-of-the-art survey. Journal of Computer Science and Technology 26, 767–777 (2011).

Maslov, S. & Sneppen, K. Specificity and stability in topology of protein networks. Science 296, 910–913 (2002).

Yu, H. Y. et al. High-quality binary protein interaction map of the yeast interactome network. Science 322, 104–110 (2008).

Jeong, H., Mason, S. P. & Barabási, A. L. Lethality and centrality in protein networks. Nature 411, 41–42 (2001).

Bu, D. B., Zhao, Y. & Cai, L. Topological structure analysis of the protein-protein interaction network in budding yeast. Nucleic Acids Research 31, 2443–2450 (2003).

Stumpf, M. P. H. et al. Estimating the size of the human interactome. Proceedings of the National Academy of Sciences of the United States of America 105, 6959–6964 (2008).

Cannistraci, C. V., Alanis-Lobato, G. & Ravasi, T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Sci. Rep. 3, 1613 (2013).

Cannistraci, C. V., Alanis-Lobato, G. & Ravasi, T. Minimum curvilinearity to enhance topological prediction of protein interactions by network embedding. Bioinformatics 29, 199–209 (2013).

Knoke, D. Emerging Trends in Social Network Analysis of Terrorism and Counterterrorism. John Wiley & Sons (2015).

Li, F. et al. A Clustering-based Link Prediction Method in Social Networks. Procedia Computer Science 29, 432–442 (2014).

Tan, F., Xia, Y. X. & Zhu, B. Y. Link Prediction in Complex Networks: A Mutual Information Perspective. PLoS One 9, e107056 (2014).

Lü, L. Y. & Zhou, T. Link Prediction. BeiJing: Higher education press (2013).

Zhou, T., Lü, L. Y. & Zhang, Y. C. Predicting missing links via local information. Eur Phys J B 71, 623–630 (2009).

Muscoloni, A. & Cannistraci, C. V. Local-ring network automata and the impact of hyperbolic geometry in complex network link-prediction. arXiv preprint arXiv:1707.09496 (2017).

Daminelli, S., Thomas, J. M., Durán, C. & Cannistraci, C. V. Common neighbours and the local-community-paradigm for topological link prediction in bipartite networks. New Journal of Physics 17, 113037 (2015).

Liu, W. P. & Lü, L. Y. Link prediction based on local random walk. Europhysics Letters 89, 58007 (2010).

Airoldi, E. M. et al. Mixed-membership stochastic block models. Journal of Machine Learning Research Jmlr 9, 1981–2014 (2007).

Pan, L. M., Zhou, T. & Lü, L. Y. Predicting missing links and identifying spurious links via likelihood analysis. Sci. Rep. 6, 22955 (2016).

Liu, Z. et al. Correlations between Community Structure and Link Formation in Complex Networks. PLoS One 8, e72908 (2013).

Lü, L. Y., Pan, L. M. & Zhou, T. Toward link predictability of complex networks. PNAS 112, 2325–2330 (2015).

Lü, L. Y. & Zhou, T. Link prediction in complex networks: A survey. Physica A: statistical mechanics and its applications 390, 1150–1170 (2011).

He, X. F. & Ma, L. A quantum-inspired ant colony algorithm for graph coloring problem. Operations Research Transactions 17, 19–26 (2013).

Yang, J., Xu, Q. & Zhang, J. R. A novel quantum ant colony optimizing algorithm. Acta Scientiarum Naturalium Universitaties Sunyatseni 48, 22–27 (2009).

Chen, W. N. & Zhang, J. An Ant Colony Optimization Approach to a Grid Workflow Scheduling Problem with Various QoS Requirements. IEEE Transactions on Systems, Man, and Cybernetics, Part C 39, 29–43 (2009).

He, X. F. & Ma, L. Quantum-inspired ant algorithm for solving 0–1 knapsack problem. Computer Engineering and Applications 47, 29–31 (2011).

Olawale, T. & Alan, C. Quantum annealing of the graph coloring problem. Discrete Optimization 8, 376–384 (2011).

Chen, X. F., Xia, X. Y. & Yu, R. Y. Improved quantum ant colony algorithm based on bloch coordinates. Journal of Computers 8, 1536–1543 (2013).

Lü, L. Y., Jin, C. H. & Zhou, T. Similarity index based on local paths for link prediction of complex networks. Physical Review E 80, 046122 (2009).

Chen, B. L. & Chen, L. A link prediction algorithm based on ant colony optimization. Applied Intelligence 41, 694–708 (2014).

Newman, M. E. J. Assortative mixing in networks. Physical review letters 89, 208701 (2002).

Davis, J. & Goadrich, M. The relationship between Precision-Recall and ROC curves. ICML ’06 Proceedings of the 23rd international conference on Machine learning, 233–240 (2006).

Yang, Y., Lichtenwalter, R. N. & Chawla, N. V. Evaluating link prediction methods. Knowledge and Information Systems 45, 751–782 (2015).

Bai, M., Hu, K. & Tang, Y. Link prediction based on a semi-local similarity index. Chin.Phys.B 20, 498–504 (2011).

Zhu, B. Y. & Xia, Y. X. An information-theoretic model for link prediction in complex networks. Sci. Rep. 5, 13707 (2015).

Zhao, J. et al. Prediction of Links and Weights in Networks by Reliable Routes. Sci. Rep. 5, 12261 (2015).

Liu, J., Xu, B. M. & Xu, X. A link prediction algorithm based on label propagation. Journal of Computational Science 16, 43–50 (2016).

Watts, D. J. & Strogatz, S. H. Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).

Muscoloni, A. & Cannistraci, C. V. A nonuniform popularity-similarity optimization (nPSO) model to efficiently generate realistic complex networks with communities. New Journal of Physics 20, 052002 (2018).

Ahn, M. W. & Jung, W. S. Accuracy test for link prediction in terms of similarity index: The case of WS and BA models. Physica A 429, 177–183 (2015).

Zhu, B. Y., Xia, Y. X. & Zhang, X. J. Weight prediction in complex networks based on neighbor set. Sci. Rep. 6, 38080 (2016).

Muscoloni, A. & Cannistraci, C. V. Leveraging the nonuniform PSO network model as a benchmark for performance evaluation in community detection and link prediction. New Journal of Physics 20, 063022 (2018).

Acknowledgements

The authors sincerely thank the anonymous reviewers for their valuable comments that have led to the present improved version of the original manuscript. Y. Z. was supported by the National Natural Science Foundation of China (No. 61503285), Municipal Natural Science Foundation of Shanghai (No. 17ZR1446000). J. G. was supported by the National Natural Science Foundation of China (No. 61772367 and 61373036), Program of Shanghai Subject Chief Scientist (15XD1503600), and Program of Shanghai Science and Technology Committee (16511105200). S. Z. was supported by the Key Projects of Fundamental Research Program of Shanghai Municipal Commission of Science and Technology under Grant No. 14JC1400300 and the Program of Science and Technology Innovation Action of Science and Technology Commission of Shanghai Municipality (STCSM) (No. 17511105204).

Author information

Authors and Affiliations

Contributions

All authors designed the experiments together. Z.C. implement the experiments and prepared all the figures. Z.C. and Y.Z. wrote the main manuscript text together. J.G. and S.Z. reviewed the manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cao, Z., Zhang, Y., Guan, J. et al. Link Prediction based on Quantum-Inspired Ant Colony Optimization. Sci Rep 8, 13389 (2018). https://doi.org/10.1038/s41598-018-31254-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-31254-3

This article is cited by

-

Implementable hybrid quantum ant colony optimization algorithm

Quantum Machine Intelligence (2022)

-

Nature inspired link prediction and community detection algorithms for social networks: a survey

International Journal of System Assurance Engineering and Management (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.