Abstract

The interconnectedness of financial institutions affects instability and credit crises. To quantify systemic risk we introduce here the PD model, a dynamic model that combines credit risk techniques with a contagion mechanism on the network of exposures among banks. A potential loss distribution is obtained through a multi-period Monte Carlo simulation that considers the probability of default (PD) of the banks and their tendency to default in the same time interval. A contagion process increases the PD of banks exposed toward distressed counterparties. The systemic risk is measured by statistics of the loss distribution, while the contribution of each node is quantified by the new measures PDRank and PDImpact. We illustrate how the model works on the network of the European Global Systemically Important Banks. For a certain range of the banks’ capital and of their asset volatility, our results reveal the emergence of a strong contagion regime where lower default correlation between banks corresponds to higher losses. This is the opposite of the diversification benefits postulated by standard credit risk models used by banks and regulators, who could therefore underestimate the capital needed to overcome a period of crisis, thereby contributing to the instability of the financial system.

Introduction

One lesson learned from the recent credit crisis is that the stability of the financial system cannot be assessed by focussing exclusively on each individual bank or financial institution. A broader approach to systemic risk, defined as the risk that a considerable part of the financial system is disrupted1, is required, as interconnections and interactions are at least as important in contributing to the overall dynamics2,3,4,5,6,7,8,9. A number of regulatory boards and committees, such as the Financial Policy Committee (FPC) at the Bank of England, the European Systemic Risk Board (ESRB) and the Financial Stability Oversight Council in the United States, have been created in order to identify, monitor and take action to remove or reduce systemic risk. They are looking at new methodologies and ideas from different disciplines to deepen their understanding of the complex phenomena involved in financial crises10. In particular, techniques borrowed from network science11,12 have been successfully applied to the study of network resilience to external shocks13,14,15 and have proven useful in the analysis of financial systemic risk16,17,18,19,20,21. In this context, financial institutions are described as nodes in a network, connected by different kinds of edges indicating cross ownership22, investments in the same set of assets (overlapping portfolios)23,24,25 or credit exposures (for example loans)26,27,28,29.

In this article, we focus the analysis on the propagation of financial distress through direct credit exposures, where the distress event is the insolvency of a financial institution. We introduce a new hybrid framework, the so-called PD model, which constructively combines two different and almost complementary approaches to assess the risk of insolvency of financial institutions.

The first approach, from now on referred to as the network theory approach, analyses the spread of the contagion of an external stress in the network of exposures between banks. The banks can use their capital as a buffer to absorb the shocks, but they default if the loss is greater than the capital. Through a cascade mechanism of sequential defaults over the network, the initial external stress can lead to the disruption of a substantial part of the system.

The second approach, the credit risk approach, is normally used by banks to estimate their economic capital (i.e. the capital that is necessary to overcome a period of crisis without major disruption for the business) against the risk of default of their counterparties in lending transactions. It is based on assigning a probability of default to each counterparty and using a model to describe the tendency of some of them to default together. A time horizon is chosen for the analysis and a potential loss distribution is obtained via Monte Carlo simulation. Typically the economic capital is obtained as the difference between a quantile of the loss distribution and its mean. This approach can be used in the financial systemic risk context imagining the financial institutions as a portfolio of risky assets owned by the regulators30.

The two approaches have been developed by two different communities of researchers that have pursued their research independently, with no significant interaction or cross-pollination until now. Our model, the so-called PD model, aims at creating a bridge between the two approaches, making valuable use of all the available information about the system to analyse and quantify its systemic risk. At its core, the PD model is a credit risk model with a contagion mechanism that increases the probability of default of nodes affected by defaults in their neighbourhood, defined by the exposure network.

One of the main and somewhat counter-intuitive results of the PD model is that there are situations for which lower correlations between nodes correspond to higher risk. As far as we are aware, this fact is not known within the financial risk management community, which is used to thinking that a diversified (less correlated) portfolio always requires less capital. As a result, the economic capital calculations might not be conservative enough, exposing banks and the financial system to the next severe crisis.

Two modelling approaches to financial risk

The Network Theory approach

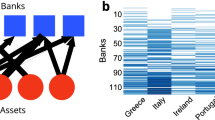

Financial institutions are described as the N nodes of a network as shown in Fig. 1a. The links of the network are directed and their topology is described by the matrix a = {a ij }, where the weight a ij equals the sum of the exposures of node i to the default of node j. Examples of exposures are loans, bonds, share ownership and derivative contracts. Each node i = 1, ..., N is characterised by its total asset A i , i.e. the set of anything a financial institution owns and that can be converted to cash, by a threshold E i , denoting the capital of the bank that can be used to absorb losses, and by a loss given default LGD i , representing the percentage of the total asset that would be lost in case of default. A node i is considered insolvent and in default if E i (t) ≤ 0. To start a contagion process, the system is initially perturbed with a sudden loss, and a model that simulates financial contagion is used to estimate the total loss of the network.

Figure 1. The PD model merges network theory and credit risk approaches. (a) In the network theory approach, the focus is on the propagation of the stress on the network of exposures {a ij } between financial institutions, and the capital E i represents the buffer that can be used by bank i to absorb shocks. (b) The credit risk approach focuses on the default probabilities PD i and on the tendency of the nodes to default together as described by a Gaussian latent variable model with correlation matrix ρ = {ρ ij }. The objective is to obtain a loss distribution and its quantiles. (c) The PD model takes into account both the matrices {a ij } and {ρ ij }, and all the available information about the nodes, namely total asset A i , capital E i , loss given default LGD i and probability of default PD i . For visualization purposes, only the maximum spanning tree of the correlation network is shown in panels (b) and (c).

The models originally proposed to study network stability18,31 relied on a variant of the ‘domino effect’ to propagate the stress and, if the original shock was not big enough to start the chain reaction, no quantifiable effect could be calculated. To overcome this limitation Battiston et al.32 introduced DebtRank, a new measure of systemic risk. The DebtRank of node i is a number measuring the fraction of the total economic value in the network that is potentially affected by the distress or the default of node i. The measure presented interesting characteristics such as being expressed in monetary terms and being able to ‘feel’ the stress in the network also in the absence of actual defaults. However, it is not evident how, in the real world, the propagation of the stress postulated by the model would happen and how it would translate into an actual loss for the banks. In order to fill this gap Bardoscia et al.33 proposed a slightly modified model and a derivation of the dynamics for the shock propagation using basic accounting principles. To obtain their results, the authors had to make the not fully financially justified assumption that the exposures towards other banks lose their value proportionally to the loss in capital suffered by the borrowing banks, namely:

\[ a_{ij}(t+1) = a_{ij}(t)\,\frac{E_j(t)}{E_j(t-1)} \tag{1} \]

where E j (t) and a ij (t) are, respectively, the capital of bank j and the exposure that bank i has with bank j at time t. The above updating equation is used when bank j has not defaulted in the previous time period, otherwise a ij (t + 1) is set to zero. In such an approach it is also crucial to understand how the time step is defined: is it a year, a quarter or a minute? The answer is not irrelevant because one of the findings of ref.33 is that, no matter how small the initial shock is, if the modulus of the largest eigenvalue of the interbank leverage matrix \(\Lambda_{ij} = a_{ij}/E_i\) is greater than one, at least one bank fails. This is clearly unrealistic in actual financial networks. In reality, even if it is tempting to interpret t as a time, it should be considered just as an index identifying a step in the algorithm. No well-defined time length is specified and the process can be thought of as instantaneous.

The Credit Risk approach

Within this approach the system is considered as a portfolio of N investments A i (i = 1, ..., N) and the goal is to obtain an estimate of the risk, expressed as statistics (usually quantiles) of the potential loss distribution within a chosen time period (in the financial industry, it is usually taken as one year)34,35. As shown in Fig. 1b, each investment i is associated with a probability of default within the time interval PD i and a loss given default LGD i , while a correlation matrix ρ = {ρ ij } describes the stochastic process of the asset returns. The focus of the credit risk approach, with respect to the network theory one, is on the probabilities of default PD, as in this case the nodes are intrinsically unstable and can default even in the absence of any externally applied stress. The evaluation of the probability of default of a counterparty is a crucial activity performed routinely by banks when assessing the risk involved in lending transactions. The probability of default can also be obtained from credit rating agencies (Moody’s, Standard & Poor’s, Fitch, etc.) that apply their estimation models to historical default data.

In order to define a random process to simulate the default of banks, which takes into account their tendency to default during the same time step, the basic idea is to use correlated random variables X i (i = 1, ..., N) drawn from a multivariate distribution to drive the defaults. The loss distribution is obtained by performing a Monte Carlo simulation of the random variables X i for one time period. The asset k defaults when the simulated X k = x k falls below a numeric value that is a function of PD k .

Gaussian latent variable model

In the so-called Gaussian latent variable model36, a multivariate Gaussian distribution with zero mean, unit variance and correlation matrix {ρ ij } is used to sample X i (i = 1, ..., N). The condition for the default of asset k is chosen as:

\[ X_k < \Phi^{-1}(PD_k) \tag{2} \]

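where Φ is the cumulative distribution function of the univariate standard Gaussian, while, for example, the implied probability PD ij of double default of nodes i and j is:

\[ PD_{ij} = \Phi_2\left(\Phi^{-1}(PD_i),\, \Phi^{-1}(PD_j),\, \rho_{ij}\right) \tag{3} \]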

where Φ2 is the bivariate standard Gaussian distribution. Statistics of the loss distribution are then used to estimate the capital that is needed to remain solvent during the chosen time period at a certain level of confidence. The simulation is repeated a sufficient number of times to lower the Monte Carlo error below a level that is deemed acceptable. The Gaussian latent variable model was introduced for the first time by Vasicek37 in 1987. It has then been adopted by the portfolio credit risk methodology called CreditMetrics35 and used as the underlying methodology for the capital requirements of loan positions by the Basel committee38,39.
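The one-period simulation described above can be illustrated with a minimal Python sketch. It assumes a single pairwise correlation ρ for all nodes, so that the correlated Gaussian variables can be generated from one common factor. The function names, the 99.9% confidence level and the toy portfolio are illustrative assumptions, not part of the model specification.

```python
import random
from statistics import NormalDist


def simulate_one_period_losses(pd, exposure, lgd, rho, n_trials, seed=0):
    """Sample one-period portfolio losses (requires 0 < PD_k < 1, 0 <= rho <= 1)."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Default thresholds Phi^{-1}(PD_k) of the latent variables
    thresholds = [std_normal.inv_cdf(p) for p in pd]
    losses = []
    for _ in range(n_trials):
        z = rng.gauss(0.0, 1.0)  # common factor shared by all assets
        loss = 0.0
        for k, threshold in enumerate(thresholds):
            eps = rng.gauss(0.0, 1.0)  # idiosyncratic component
            # Assumption: equicorrelated case, corr(X_i, X_j) = rho for i != j
            x_k = rho ** 0.5 * z + (1.0 - rho) ** 0.5 * eps
            if x_k < threshold:  # asset k defaults
                loss += exposure[k] * lgd[k]
        losses.append(loss)
    return losses


def economic_capital(losses, confidence=0.999):
    """Empirical quantile of the loss distribution minus its mean."""
    ordered = sorted(losses)
    idx = min(len(ordered) - 1, int(confidence * len(ordered)))
    return ordered[idx] - sum(ordered) / len(ordered)


# Toy portfolio (hypothetical numbers): three identical assets
toy_losses = simulate_one_period_losses(
    pd=[0.1, 0.1, 0.1], exposure=[100.0] * 3, lgd=[0.6] * 3,
    rho=0.5, n_trials=20000, seed=1,
)
print(economic_capital(toy_losses))
```

A general correlation matrix would require a Cholesky factor instead of the single common factor used here.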

The main ideas behind the Gaussian latent variable model were already introduced in 1974 by Merton40 with his option model for corporate default based on the capital structure of a company (see Methods). The Gaussian latent variable model can be seen as a proxy of a “multi-company” generalization of the Merton model where companies default if they experience a high negative asset return described by the random variables X i (i = 1, ..., N) with asset return correlation {ρ ij }41. Instead of using the asset return correlations for calibrating the matrix ρ, it is an accepted industry practice to use equity return correlations42, for which daily time series data are available (a brief explanation of why equity return correlations can be used as a substitute for asset return correlations can be found in ref.43).

Merging the two approaches: the PD model framework

Our model combines network theory and credit risk approaches, using all the available information about the system. As shown in Fig. 1c, we consider the financial system as a portfolio of risky assets as if it were “owned” by the regulators, and we use credit risk techniques to calculate its loss distribution. At the same time, as in the network theory approach, we consider each individual bank as a node in a network of exposures. In order to include a contagion mechanism we use a multi-period Gaussian latent variable model with M time steps43. The length Δt of the time step is chosen coherently with the available data about the probabilities PD ≡ PD(t, t + Δt) of having a default between t and t + Δt. Usually the Δt for which PD data is available is one year. The total length of time T = MΔt is an input of the model and depends on the type of analysis to be performed. For analysing a systemic crisis we found that T = 7 years is a reasonable choice. The contagion mechanism is particularly intuitive and simple: the default of one node increases the probability of default of the neighbouring nodes in subsequent time steps44,45,46,47 according to the characteristics of the network of exposures {a ij }. In particular, a node i experiences an impact I i (t) at time t:

\[ I_i(t) = \sum_{j} a_{ij}(t)\, LGD_j\, \delta_j(t) \tag{4} \]

where δ j (t) is equal to 1 if node j has defaulted at time t, and is 0 otherwise. The quantity a ij (t) represents the exposure of node i to the default of node j, and the index j in the sum includes all the nodes that have not defaulted at the previous times 0, .., t − Δt. The impact I i (t) increases the probability of default PD i (t + Δt) at the successive time step. In our framework, t is a proper time variable and not just an identifier for a step of an algorithm, hence it is possible to write an updating equation for all the basic variables of the system as a function of the impact I(t):

In general, it is also possible to introduce updating equations for the matrix ρ, for LGD i and for the network a = {a ij }, to add dependencies on evolving macroeconomic scenarios, and to model financial institutions as complex agents reacting to the contingent situation of the system.
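The impact experienced by a node can be sketched in a few lines of Python. The sketch assumes that the loss on each exposure is scaled by the loss given default of the defaulting counterparty, in line with the quantity â ij = a ij ⋅ LGD j used later for the two-bank case; the function name and the toy data are hypothetical.

```python
def impact(i, exposures, lgd, defaulted_now):
    """Impact on node i from the nodes that default in the current step.

    exposures[i][j] is the exposure a_ij of node i to the default of node j.
    Assumption: each exposure is weighted by LGD_j of the defaulting node.
    """
    return sum(exposures[i][j] * lgd[j] for j in defaulted_now if j != i)


# Toy network (hypothetical numbers): node 1 defaults in this step
toy_exposures = [
    [0.0, 10.0, 5.0],
    [2.0, 0.0, 0.0],
    [0.0, 4.0, 0.0],
]
toy_lgd = [0.6, 0.6, 0.6]
print(impact(0, toy_exposures, toy_lgd, defaulted_now={1}))
```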

In this paper, we specialize to the case with ρ ij , a ij and LGD i as constant in time. For updating the probabilities of default we use two alternative equations that we have called respectively “Merton update” and “Linear update”.

Merton update

In the Merton update we use Eq. (20) to update the probabilities of default. Assuming Δt = 1 year, B i (t) = B i = A i (0) − E i (0) and σ i as constants and with the further assumption that μ i = 0, we can write:

We have also set PD i (t + Δt) = 1 if I i (t) ≥ E i , i.e. the bank defaults if the impact is greater than or equal to the capital. The parameters σ i can be obtained by inverting Eq. (6) at time t = 0 given PD i (0). Other choices of μ and σ are also possible. For example, the assumption of constant σ is not completely satisfactory, as it is reasonable to expect that the volatility increases when the company approaches default. It is possible to devise a more complex implementation of the model that includes a dynamics for σ(t) and μ(t).
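As an illustration, the following Python sketch implements a Merton-type update under stated assumptions: the textbook Merton expression for the one-year default probability with zero drift, PD = Φ((ln(B/A) + σ²/2)/σ), is used in place of the Methods equation, and the impact is assumed to reduce the asset value. The function names and the numbers are hypothetical.

```python
from math import log, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def calibrate_sigma(pd0, assets0, capital0):
    """Volatility that reproduces PD(0), with debt B = A(0) - E(0).

    Solves Phi((ln(B/A) + sigma^2 / 2) / sigma) = PD(0) in closed form
    (positive root of a quadratic in sigma). Requires 0 < pd0 < 1.
    """
    c = log(assets0 / (assets0 - capital0))
    z = _STD_NORMAL.inv_cdf(pd0)
    return z + sqrt(z * z + 2.0 * c)


def merton_update(assets, debt, sigma, impact, capital):
    """PD for the next period after an impact (assumed to reduce the assets)."""
    if impact >= capital:
        return 1.0  # the impact wipes out the capital
    return _STD_NORMAL.cdf(
        (log(debt / (assets - impact)) + 0.5 * sigma ** 2) / sigma
    )


# Toy bank (hypothetical numbers, bn EUR)
toy_sigma = calibrate_sigma(pd0=0.001, assets0=200.0, capital0=10.0)
print(merton_update(200.0, 190.0, toy_sigma, impact=5.0, capital=10.0))
```

With the volatility calibrated on a small initial PD, a loss of half the capital already raises the PD by more than an order of magnitude in this toy setting.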

Linear update

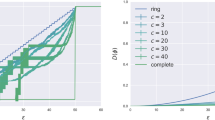

The Merton update is the financially “correct” way to update the probabilities of default. However, we have found it useful to introduce an alternative updating equation for PD i (t + Δt) where the increase in PD i is directly proportional to the impact I i (t). This can be thought of as a proxy version of the Merton update when the volatility σ is extremely large (see Fig. 2).

with PD i (t + Δt) being capped to 1 when the impact I i (t) is greater than or equal to E i (t).

Figure 2. Probability of default PD of a node as a function of the impact I expressed as a fraction of the capital E. When the ratio I/E is equal to or greater than 1 we set PD = 1 as the financial institution is insolvent and it will default during the next time period. The continuous line describes the Linear update while the dots represent the Merton update with different values of the asset volatility σ.
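A possible form of the Linear update can be sketched as follows. The specific interpolation used here, PD + (1 − PD) ⋅ I/E, is an assumption chosen only because it grows proportionally to the impact and reaches 1 when I = E; the function name is hypothetical.

```python
def linear_update(pd, impact, capital):
    """PD for the next period, increasing linearly with impact / capital.

    Assumption: straight line from the current PD (no impact) to 1
    (impact equal to the capital), capped at 1 beyond that point.
    """
    if impact >= capital:
        return 1.0
    return pd + (1.0 - pd) * impact / capital


for ratio in (0.0, 0.25, 0.5, 1.0):
    print(ratio, linear_update(pd=0.001, impact=ratio * 10.0, capital=10.0))
```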

Calculation of the loss distribution

As described in the paragraph on the credit risk approach, the default of financial institution i at time t corresponds to a drawn value x i of the random variable X i in the sampling (X1 = x1, X2 = x2, …, X N = x N ) smaller than Φ−1(PD i (t)). If at least one node has defaulted at time t, we update the variables of the system for the next time t + Δt as in Eq. (5). Defaulted nodes are then removed with their respective edges. If instead no node has defaulted at time t, we proceed to the following time step and to a new sampling with the same network and stochastic process parameters. The simulation is then continued for M temporal iterations. The loss L(t) for the entire network at time t is calculated as:

\[ L(t) = \sum_{i} A_i\, LGD_i\, \delta_i(t) \tag{8} \]

while the total loss Ltot is obtained by summing up the discounted values of the losses at the different time periods:

\[ L_{\rm tot} = \sum_{t} D(t)\, L(t) \tag{9} \]

where D(t) is the discount factor relative to time t. In the following we indicate with the symbol \(\overline{\bullet }\) averages of the loss distribution.
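The simulation loop described in this section can be sketched in Python for a single Monte Carlo path. The sketch relies on several assumptions that are not taken from the text: a single correlation ρ for all pairs (one-factor sampling), a linear PD update of the form PD + (1 − PD) ⋅ I/E, capital reduced by the impact, constant assets, and a discount factor D(t) = (1 + r)^(−t). All names are hypothetical.

```python
import random
from statistics import NormalDist


def simulate_total_loss(pd0, assets, capital, lgd, exposures, rho,
                        n_steps=7, rate=0.0, rng=None):
    """Return one sampled value of the discounted total loss L_tot."""
    if rng is None:
        rng = random.Random()
    inv_cdf = NormalDist().inv_cdf
    pd, cap = list(pd0), list(capital)
    alive = set(range(len(pd0)))
    total = 0.0
    for step in range(1, n_steps + 1):
        z = rng.gauss(0.0, 1.0)  # common factor shared by all nodes
        defaulted = set()
        for i in sorted(alive):
            x = rho ** 0.5 * z + (1.0 - rho) ** 0.5 * rng.gauss(0.0, 1.0)
            # PD = 0 and PD = 1 are handled explicitly: inv_cdf needs 0 < p < 1
            if pd[i] >= 1.0 or (pd[i] > 0.0 and x < inv_cdf(pd[i])):
                defaulted.add(i)
        alive -= defaulted  # defaulted nodes leave the network with their edges
        loss = sum(assets[j] * lgd[j] for j in defaulted)
        total += loss / (1.0 + rate) ** step  # assumed discount factor
        for i in alive:
            hit = sum(exposures[i][j] * lgd[j] for j in defaulted)
            if hit >= cap[i]:
                pd[i] = 1.0  # insolvent: defaults in the next period
            else:
                pd[i] += (1.0 - pd[i]) * hit / cap[i]  # assumed linear update
                cap[i] -= hit
    return total


# Toy two-node network (hypothetical numbers): node 1 is exposed to node 0
toy = dict(assets=[100.0, 50.0], capital=[5.0, 3.0], lgd=[0.6, 0.6],
           exposures=[[0.0, 0.0], [10.0, 0.0]], rho=0.5)
paths = [simulate_total_loss([0.05, 0.01], rng=random.Random(s), **toy)
         for s in range(2000)]
print(sum(paths) / len(paths))  # Monte Carlo estimate of the average loss
```

Repeating the call over many paths yields the empirical loss distribution from which averages and quantiles can be read.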

Risk measures: PDImpact and PDRank

In our framework, the nodes are characterized by an initial probability of default PD(t) ≡ (PD1, PD2, …, PD N ) at time t = 0. Hence, even in the absence of any external shock, the system can suffer losses during the simulations within the considered time frame of M time periods. The loss distribution so obtained, and in particular the expected loss \(\overline{L}_{\rm tot}(\mathbf{PD})\), can be used as the baseline for comparison with the losses in the presence of stress. Since a distress of the network is described as an increased probability of default of a set of nodes, δPD, we can introduce the so-called Probability of Default Impact (PDImpact), indicated as C(δPD), of the stressing perturbation δPD onto the initial probability of default PD as:

\[ C(\delta\mathbf{PD}) = \overline{L}_{\rm tot}(\mathbf{PD} + \delta\mathbf{PD}) - \overline{L}_{\rm tot}(\mathbf{PD}) \tag{10} \]

where the two terms on the right hand side are respectively the average loss of the network in the presence and absence of the additional stress δPD.

Analogously, we can also introduce a node centrality measure, which we name the Probability of Default Rank, or PDRank, for assessing the relative importance of each financial institution. The PDRank of node i is obtained by multiplying the probability of default of node i by the additional average loss experienced by the network due to the default of node i:

\[ \mathrm{PDRank}_i = PD_i \left[ \overline{L}_{\rm tot}(\mathbf{PD}^{Di}) - \overline{L}_{\rm tot}(\mathbf{PD}^{Ii}) \right] \tag{11} \]

where \(\mathbf{PD}^{Di}\) is the initial probability vector in which the probability corresponding to node i has been set to 1 at t = 0, while \(\mathbf{PD}^{Ii}\) is the initial probability vector where the probability corresponding to node i has been set to 0 and kept at the value 0 for each time t ≥ 0 (the node cannot default during the simulation). Therefore, the quantities \(\overline{L}_{\rm tot}(\mathbf{PD}^{Di})\) and \(\overline{L}_{\rm tot}(\mathbf{PD}^{Ii})\) represent respectively the average loss, during the simulation, when node i defaults at time 1, and when node i cannot default (the average loss that the network would suffer anyway irrespective of the node i). In practice, PDRank i of node i measures the expected loss “due” to node i. Like the already known DebtRank, it is expressed as a monetary value and can be used to rank the nodes in terms of their ‘systemic risk’. By introducing PDRank and PDImpact we maintain the characteristics of DebtRank: a monetary value for the ‘centrality measure’ of a node and the sensitivity to a distress of the network also in the absence of actual defaults.
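Both measures are differences of average losses, so they can be wrapped around any routine that returns the expected total loss for a given vector of initial default probabilities. The Python sketch below assumes such a routine is passed in as `expected_loss(pd, immune)`; this interface, and the contagion-free toy loss used to exercise it, are hypothetical.

```python
def pd_impact(expected_loss, pd, delta_pd):
    """Average loss with the stress delta_pd minus the baseline average loss."""
    stressed = [min(1.0, p + d) for p, d in zip(pd, delta_pd)]
    return expected_loss(stressed, frozenset()) - expected_loss(pd, frozenset())


def pd_rank(expected_loss, pd, i):
    """PD_i times the extra average loss caused by the default of node i."""
    forced = list(pd)
    forced[i] = 1.0  # node i defaults at the first step
    spared = list(pd)
    spared[i] = 0.0  # node i can never default (kept immune at all times)
    extra = expected_loss(forced, frozenset()) - expected_loss(spared, frozenset({i}))
    return pd[i] * extra


# Toy stand-in for the simulator: one period, no contagion (hypothetical)
TOY_ASSETS = [100.0, 50.0]
TOY_LGD = [0.6, 0.6]


def toy_expected_loss(pd, immune):
    return sum(p * a * l
               for k, (p, a, l) in enumerate(zip(pd, TOY_ASSETS, TOY_LGD))
               if k not in immune)


print(pd_rank(toy_expected_loss, [0.01, 0.02], 0))
print(pd_impact(toy_expected_loss, [0.01, 0.02], [0.01, 0.0]))
```

In a full simulation the `immune` argument would be used to keep the PD of the spared node at zero after every update.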

A further characterization of a network, which we name PDBeta, can be obtained by quantifying the sensitivity of the system to a percentage increase of all the initial probabilities of default. Assuming an approximately linear relationship between the PDImpact C(δPD*) obtained for an increase of the probabilities of default δPD* ≡ PD ⋅ x/100 and the percentage of increase x, we can define PDBeta as follows:

\[ \mathrm{PDBeta} = \frac{C(\delta\mathbf{PD}^{*})}{x} \tag{12} \]

In this way PDBeta represents the variation of PDImpact for a unitary percentage variation of the probabilities of default.

Results

To illustrate how our model works and its differences with respect to the standard approaches, we will study the case of a network with only two nodes, which can easily be treated within a Markov chain approach. We will then present the results of numerical simulations of the PD model for the network of the European Global Systemically Important Banks. Among the main findings, we will show cases where the system presents “strong contagion” effects characterized by an increased risk when the average correlation between nodes is lower. Such behaviour is counter-intuitive: the lower the correlation, the lower should be the tendency to default together and trigger large losses. This is indeed what happens in standard credit risk models where only one time period is taken into account. When multiple time periods are analysed, as in the PD model, the contagion effects start playing a role and, in appropriate circumstances, they dominate the dynamics. When this happens, a lower correlation increases the probability of single node defaults in the first time steps. The network then experiences an increase of the probability of default of the remaining nodes, and severe losses follow in the subsequent time steps.

The two banks case

Let us consider the network with two nodes shown in Fig. 3. The network is described by the exposure a12 of node 1 to node 2, the exposure a21 of node 2 to node 1, and by the correlation ρ between the two nodes. Moreover, we have the following quantities associated with the nodes: the capitals E1 and E2, the total assets A1 and A2, and the probabilities of default PD1 and PD2.

Figure 3. A network with two nodes is used to show the characteristics of different models. The system is characterized by nodes with a total asset A, a capital E, a loss given default LGD and a probability of default PD. The correlation between the nodes is ρ, while the exposures of a node to the default of the other are respectively a12 and a21.

Network theory approaches

The first network theory approach that we consider is the so-called Furfine model31, which is based on a domino effect mechanism that propagates the stress of a node if and only if it is severe enough to wipe out the entire capital of the neighbouring nodes. The process starts with the application of an external shock (a loss) S to, let us say, node 1, but the stress is not propagated over the network if S ≤ E1. If instead S > E1, node 1 defaults with a loss A1 ⋅ LGD1. Node 2 will in turn default if and only if a21 ⋅ LGD1 > E2, with an additional loss A2 ⋅ LGD2. The problem with this model is that it does not feel the stress on the network. For example, even if S is just below E1, nothing happens as the capital can absorb the stress, and similarly if the impact a21 ⋅ LGD1 is just below E2.
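The threshold behaviour of the domino mechanism in the two-node case can be made explicit with a short Python sketch. The function name and the numbers are illustrative assumptions.

```python
def furfine_two_nodes(shock, a21, assets, capital, lgd):
    """Total loss when an external shock hits node 1 of a two-node network."""
    (a1, a2), (e1, e2), (lgd1, lgd2) = assets, capital, lgd
    if shock <= e1:
        return 0.0  # the capital of node 1 absorbs the shock: no propagation
    loss = a1 * lgd1  # node 1 defaults
    if a21 * lgd1 > e2:
        loss += a2 * lgd2  # node 2 defaults by contagion
    return loss


# Toy values (hypothetical): a shock just below E1 produces no loss at all
for s in (4.9, 5.1):
    print(s, furfine_two_nodes(s, a21=10.0, assets=(100.0, 50.0),
                               capital=(5.0, 3.0), lgd=(0.6, 0.6)))
```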

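In order to overcome this limitation, the Generalized DebtRank model can be used instead. In this model, the stress applied to node i is described by a continuous variable h i (t) = 1 − E i (t)/E i representing the percentage loss of the capital at iteration t, with h i = 1 corresponding to a default33. In our network with two nodes, the node variables are updated at each iteration as:

\[ h_1(t+1) = \min\left\{1,\; h_1(t) + \frac{\hat{a}_{12}}{E_1}\left[h_2(t) - h_2(t-1)\right]\right\}, \qquad h_2(t+1) = \min\left\{1,\; h_2(t) + \frac{\hat{a}_{21}}{E_2}\left[h_1(t) - h_1(t-1)\right]\right\} \tag{13} \]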

where we have introduced the quantities \(\hat{a}_{ij} = a_{ij} \cdot LGD_j\). Again, the process is started by the application of an initial stress 0 < S < 1 to node 1 at iteration t = 0. Thus, we set h1(0) = S and h2(0) = 0, with h1(−1) = h2(−1) = 0. The algorithm is iterated until the stress on the nodes converges to the values \(\tilde{h}_1\) and \(\tilde{h}_2\), and the loss is calculated as:

This algorithm presents the opposite problem with respect to the Furfine model as it can be extremely sensitive to external shocks. For example, in the case of \(\hat{a}_{21}/E_2 > 1\) and \(\hat{a}_{12}/E_1 > 1\), the shock is amplified at each iteration until at least one of the two nodes defaults. This unrealistic outcome occurs no matter how small the initial loss is. In the actual financial system, the capital of a bank is usually greater than its exposure toward any other bank; however, instabilities as outlined above can nevertheless arise in financial networks, as described in Bardoscia et al.16.
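The contrast between the damped and the amplified case can be reproduced with a minimal Python sketch of the two-node iteration. It assumes the update h i (t + 1) = min{1, h i (t) + (â ij /E i ) [h j (t) − h j (t − 1)]}, i.e. the form of the Generalized DebtRank dynamics of ref.33 specialised to two nodes; the function name, tolerance and inputs are hypothetical.

```python
def generalized_debtrank_two_nodes(shock, lev12, lev21, tol=1e-12, max_iter=10000):
    """Iterate the stress levels (h1, h2) until they stop changing.

    lev12 = a_hat_12 / E_1 and lev21 = a_hat_21 / E_2 are the interbank
    leverages; `shock` is the initial relative capital loss of node 1.
    """
    previous = (0.0, 0.0)  # h(-1)
    current = (shock, 0.0)  # h(0)
    for _ in range(max_iter):
        h1 = min(1.0, current[0] + lev12 * (current[1] - previous[1]))
        h2 = min(1.0, current[1] + lev21 * (current[0] - previous[0]))
        previous, current = current, (h1, h2)
        if (abs(current[0] - previous[0]) < tol
                and abs(current[1] - previous[1]) < tol):
            break
    return current


print(generalized_debtrank_two_nodes(0.1, 0.5, 0.5))   # damped: stress converges
print(generalized_debtrank_two_nodes(0.01, 1.5, 1.5))  # amplified: nodes default
```

When the product of the two leverages is below one, the stress on node 1 converges to S/(1 − Λ12 Λ21); when both leverages exceed one, even a 1% shock grows until the stress is capped at 1.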

Standard credit risk approach

The example with two nodes is particularly convenient as Monte Carlo simulations are not necessary and the system can be described in terms of a four-state Markov chain48. In order to further simplify the treatment we study the case where the nodes are symmetric. In particular we assume they have the same numerical values for the parameters A, PD and LGD. The four states of the Markov chain are named according to the defaulted nodes: {0} no node has defaulted, {1} node 1 has defaulted, {2} node 2 has defaulted and {12} both nodes have defaulted. Starting with the system in state {0}, in the following time step, it will move to state {1} with probability p0→1, to state {2} with probability p0→2 and to state {12} with probability p0→12. Examining Fig. 4 it is evident that standard credit risk calculations performed by credit risk managers cannot probe the entire chain because they use only a single time step. For example, the probability of default of node 2 given the default of node 1, indicated as p1→12, would start playing a role only from the second time step. The transition probabilities of the Markov chain can be obtained from the parameters of the Gaussian latent variable model as follows:

\[ p_{0\to 12} = \Phi_2\left(\Phi^{-1}(PD),\, \Phi^{-1}(PD),\, \rho\right), \qquad p_{0\to 1} = p_{0\to 2} = PD - p_{0\to 12}, \qquad p_{0\to 0} = 1 - 2\,PD + p_{0\to 12} \tag{15} \]

Calling π0(t), π1(t), π2(t), π12(t) the probabilities of being, respectively, in state {0}, {1}, {2} and {12} at time t, we can see from Fig. 5a that π12(t = 1) ≡ p0→12 is an increasing function of ρ and, according to Eq. (15), it only depends on ρ and PD, and not on other network parameters. This is what bank risk managers would expect as their credit risk model would normally consider only one time step. In order to calculate a loss distribution at time t, which is the goal of any model to assess credit risk, we need to consider the loss associated with each of the four states of the Markov chain: L0 = 0, L1 = L2 = A ⋅ LGD, L12 = 2A ⋅ LGD and their corresponding probabilities π0(t), π1(t), π2(t) and π12(t). The average loss and the quantiles at the desired confidence level can be calculated from the loss distribution and can be used to assess the risk of the system. For example, in a standard credit risk model with t = 1, the average loss is given by \(\overline{L}_{\rm tot}(t=1) = \sum_{s=\{0\},\ldots,\{12\}} L_s\, \pi_s(t=1)\). In the analysis above we have neglected the discount factor from time t to time 0.
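The one-step transition probabilities out of state {0} for the symmetric two-node case can be evaluated numerically as in the following Python sketch. The bivariate Gaussian probability is computed with Simpson's rule applied to the one-dimensional integral of φ(x) Φ((b − ρx)/√(1 − ρ²)); this numerical scheme, the function names and the dictionary keys are illustrative choices.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def bivariate_cdf(a, b, rho, n=2000, lower=-10.0):
    """P(X < a, Y < b) for standard Gaussians with correlation |rho| < 1."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    if a <= lower:
        return 0.0
    scale = sqrt(1.0 - rho * rho)

    def integrand(x):
        # density of X times the conditional probability P(Y < b | X = x)
        return _STD_NORMAL.pdf(x) * _STD_NORMAL.cdf((b - rho * x) / scale)

    h = (a - lower) / n  # n must be even for Simpson's rule
    total = integrand(lower) + integrand(a)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * integrand(lower + k * h)
    return total * h / 3.0


def transition_probabilities(pd, rho):
    """One-step transition probabilities out of state {0} (symmetric nodes)."""
    threshold = _STD_NORMAL.inv_cdf(pd)
    p_both = bivariate_cdf(threshold, threshold, rho)
    return {
        "0->0": 1.0 - 2.0 * pd + p_both,
        "0->1": pd - p_both,
        "0->2": pd - p_both,
        "0->12": p_both,
    }


for rho in (0.0, 0.5, 0.9):
    print(rho, transition_probabilities(0.001, rho)["0->12"])
```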

Figure 4. The Markov chain corresponding to a two-bank network. The chain has four states: {0} no node has defaulted, {1} node 1 has defaulted, {2} node 2 has defaulted, {12} both nodes have defaulted. The arrows represent the possible transitions between states with their associated transition probabilities. The continuous black ones connect the states that can be reached from state {0} in a single time step as in a standard credit risk model, while the dashed arrows refer to transitions that are taken into account only by the PD model.

Figure 5. Probability of double default versus ρ in a standard credit risk approach and in the PD model. We consider a symmetric two-node network, with parameters A = 200 bn EUR, PD1 = PD2 = 0.001 and \(\hat{a} = 1\) bn EUR. (a) In the standard credit risk approach, the probability π12 of being in state {12} after one time step increases monotonically with the correlation ρ between the two nodes. (b) In the PD model with 7 time periods of one year each, the probability π12 of being in state {12} is an increasing or decreasing function of ρ depending on the initial capital E. The chart can also be interpreted as the probability of undergoing a loss L tot = 2A ⋅ LGD, which is approximately the loss corresponding to a double default.

The PD model

In analysing the system with the PD model, we extend the parameters that we take into consideration, and maintaining the symmetry of the two nodes we also set E1 = E2 = E, with a12 = a21 = a and \(\hat{a}=a\cdot LGD\). In order to calculate the loss distribution, we use the Markov chain as in Fig. 4, with multiple time steps M. M is an input of the analysis and depends on the total time length that we want to investigate. It should be chosen to be large enough so that the probability of being in a particular state is sufficiently spread along the chain, but small enough so that the probability of being in the absorbing state, with all the nodes that have defaulted, is not overwhelming. We are going to use M = 7 periods Δt of one year each. We will consider different values of the capital E and the corresponding values of σ obtained inverting Eq. (6). Using the PD model with the Merton update described in Section “Merging the two approaches: the PD model framework” it is possible to obtain p1→12 and p2→12 as updating equations depending on the network parameter a, on the node characteristics A and E and on the volatility σ:

The additional transition probabilities of the Markov chain can be obtained considering that {12} is an absorbing state, hence p12→12 = 1, and from the fact that the sum of the transition probabilities from one state to all the states that can be reached with one time step must add up to 1. For example, p1→1 can be obtained as p1→1 = 1−p1→12.

Results at odds with the common intuition appear in the PD model, where we find the emergence of what we have called a strong contagion regime, in which the probability of suffering the maximum loss (double default) decreases with increasing correlation between the two banks. This is shown in Fig. 5b where we plot the probability of a double default π12(t) after t = 7 time periods versus ρ, and we explore different values of the initial capital E. We notice that, for the three largest values of E, the probability π12(t = 7) increases with increasing correlation ρ. This behaviour is not different from that found in the single-period simulation reported in Fig. 5a. Conversely, for the three smallest values of E, the probability π12(t = 7) is a decreasing function of ρ. This occurs because the probabilities p1→12 and p2→12 are larger for small values of E and the “contagion” paths [p0→1, p1→12] and [p0→2, p2→12] become dominant compared to the direct route [p0→12]. When this happens, we have a strong contagion regime, where the probability π12(t = 7) decreases with increasing correlation ρ, as it is less likely that the system moves to states {1} or {2} during the first iterations hence it cannot take “advantage” of the high transfer probability links p1→12 and p2→12.
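The mechanism can be checked with a small Python sketch that propagates the four-state chain for M = 7 steps. To keep it self-contained, the sketch compares only the two limiting correlations, for which the joint default probability is known exactly (p0→12 = PD² for independent nodes and p0→12 = PD for perfectly correlated nodes), and it treats the contagion probability q = p1→12 = p2→12 as a free input rather than deriving it from the Merton update. The function name and the values of q are hypothetical.

```python
def double_default_probability(pd, p_both, q, n_steps=7):
    """pi_12 after n_steps for the symmetric two-bank Markov chain.

    p_both is the one-step probability p_{0->12}; q stands in for the
    contagion probabilities p_{1->12} = p_{2->12} (assumed input).
    """
    p_single = pd - p_both  # p_{0->1} = p_{0->2}
    p_stay = 1.0 - 2.0 * pd + p_both  # p_{0->0}
    pi0, pi1, pi2, pi12 = 1.0, 0.0, 0.0, 0.0
    for _ in range(n_steps):
        pi0, pi1, pi2, pi12 = (
            pi0 * p_stay,
            pi0 * p_single + pi1 * (1.0 - q),
            pi0 * p_single + pi2 * (1.0 - q),
            pi12 + pi0 * p_both + (pi1 + pi2) * q,  # {12} is absorbing
        )
    return pi12


PD = 0.001
for q in (0.001, 1.0):  # no extra contagion vs. certain contagion
    independent = double_default_probability(PD, PD * PD, q)  # rho = 0
    comonotonic = double_default_probability(PD, PD, q)  # rho = 1
    print(q, independent, comonotonic)
```

With q equal to the baseline PD the double default is far more likely for perfectly correlated nodes, whereas with q = 1 the ordering is reversed, which is the signature of the strong contagion regime.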

The loss distribution can be derived analogously to the standard credit risk approach, by associating each state with its corresponding loss. In particular, Fig. 5b can be interpreted as showing that the probability of experiencing the loss corresponding to the double default state {12}, Ltot = 2A ⋅ LGD, is a decreasing function of ρ for sufficiently small capital E.

The network of European Global Systemically Important Banks

We have applied our model to analyse the data collected by the European Banking Authority (EBA) relative to the European Global Systemically Important Banks (GSIB). As is standard in this field of investigation, a complete set of data relative to the exposure matrix {a ij } is not available, as it is sensitive information and usually not even the regulators have it. We have followed the practice commonly accepted in the research community16,28,29 of inferring the bilateral network of exposures using the data that we do have, namely, for each node i, the total exposures ∑ j a ij and the total liabilities ∑ j a ji toward the other financial institutions. We have used a new algorithm, described in Methods, to create a set of ten bilateral networks and we have used averages over the ensemble to perform our analysis. The initial values of the probabilities of default have been obtained from public information about the credit rating of the banks and from statistics available on the Fitch website (see Methods), while the other characteristics of the banks, such as the capital E and the total asset A, are available from the EBA data set. Following ref. 43, we use a single value of the pairwise correlation coefficient ρ for each non-diagonal entry of the correlation matrix and, where not otherwise specified, we assume ρ = 0.5, which can be interpreted as the average correlation between banks. To complete the set of parameters, we have assigned LGD = 0.6 to each financial institution. Setting the same LGD for all the GSIB banks is a reasonable approximation given that they pertain to the same industry sector and geographic area. The value LGD = 0.6 has been chosen according to the analysis in ref. 49. The numerical results obtained in this section reflect the approximations and assumptions made and are conservative, as we have not included the likely reaction of regulators and banks after the first defaults (replenishing their capital, for example).

The strong contagion regime

We have used a PD model with 7 periods of one year each, performing 100,000 Monte Carlo simulations for each period and updating the network from one year to the next using both the Merton and the Linear update rules. We have repeated the process for different values of ρ and for each of the ten networks of the ensemble created by the algorithm in Methods. The distributions of the total loss Ltot experienced by the network under different values of the average correlation ρ are shown in Fig. 6. While the distribution relative to the Merton update presents a relatively low risk of severe losses, for the Linear update the risk is considerably higher. This is to be expected as, in the Linear update, the probability of default of the nodes increases substantially after the first defaults, triggering further losses in the following time steps. The Linear update distribution also shows the defining characteristic of what we have called the strong contagion regime, i.e. a regime where the probability of extreme losses decreases with increasing correlation, as it is less likely to have defaulting nodes during the initial time steps that would act as catalysts for the contagion process. As described for the two-bank case, these effects are not present in standard credit risk models and, if not properly taken into account, could lead to an underestimation of the risk. The PD model with the Merton update is the financially relevant model and, for the GSIB data, it does not exhibit strong contagion effects, so it is reasonable to question whether they can arise in actual financial networks. The answer is affirmative, as the Linear update can be seen as an approximation of the Merton update when the asset volatilities are extremely high (see Fig. 2). The same effects can also occur in the Merton update when the capitalization of the banks is insufficient. To show this, in Fig. 7 we report the loss distribution obtained by reducing the capital of the banks by 50% and using the Merton update. In this case, the capital is not enough and any shock can drastically increase the probability of default, hence the strong contagion effect of decreasing risk with increasing correlation.
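A multi-period simulation of this kind can be sketched as follows. The sketch is not the authors' implementation: it assumes a one-factor Gaussian latent variable with a single ρ, and a Merton-style update in which the capital of each surviving bank is reduced by LGD times its exposure to the newly defaulted counterparties before its probability of default is recomputed. All names, the three-bank network and the parameter values are hypothetical.

```python
import math
import random
from statistics import NormalDist

N01 = NormalDist()


def merton_pd(A, E, sigma, mu=0.0, dt=1.0):
    """One-period Merton probability of default (assumes E < A)."""
    if E <= 0.0:
        return 1.0  # no capital left
    d = (math.log(A / (A - E)) + (mu - 0.5 * sigma ** 2) * dt) / (sigma * math.sqrt(dt))
    return N01.cdf(-d)


def simulate_losses(A, E, sigma, a, rho, lgd=0.6, periods=7, n_sims=1000, seed=0):
    """Return one total loss per simulated path.

    a[i][j] is the exposure of bank i toward bank j.
    """
    rng = random.Random(seed)
    n = len(A)
    losses = []
    for _ in range(n_sims):
        capital = list(E)
        alive = [True] * n
        for _ in range(periods):
            z = rng.gauss(0.0, 1.0)  # common factor shared by all banks
            newly_defaulted = []
            for i in range(n):
                if not alive[i]:
                    continue
                x = math.sqrt(rho) * z + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
                pd = merton_pd(A[i], capital[i], sigma[i])
                if pd >= 1.0 or N01.cdf(x) < pd:
                    newly_defaulted.append(i)
            for j in newly_defaulted:
                alive[j] = False
            # Contagion: survivors absorb LGD times their exposure to new defaults.
            for i in range(n):
                if alive[i]:
                    capital[i] -= lgd * sum(a[i][j] for j in newly_defaulted)
        losses.append(lgd * sum(A[k] for k in range(n) if not alive[k]))
    return losses


# Hypothetical three-bank system, for illustration only.
A = [100.0, 100.0, 100.0]
E = [5.0, 5.0, 5.0]
sigma = [0.03, 0.03, 0.03]
a = [[0.0, 4.0, 4.0], [4.0, 0.0, 4.0], [4.0, 4.0, 0.0]]
sample = simulate_losses(A, E, sigma, a, rho=0.5, n_sims=2000)
print("mean loss:", sum(sample) / len(sample))
```

A histogram of the returned losses, repeated for several values of ρ, gives the kind of loss distribution discussed in this section.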

Figure 6. Loss distribution obtained with the PD model for the GSIB bank network and for different values of average correlation ρ. The plot reports the number of counts (relative to 100,000 simulations) with a given value of loss at the final time interval of M = 7 years. The counts with loss equal to 0 are not shown. We have assigned a loss given default LGD = 0.60 to each bank, so the maximum loss is 60% of the total asset of the system, defined as the sum of the total assets of the banks. The panel on the right represents the loss distribution obtained using the Merton update, where it can be seen that the risk of a complete collapse of the system increases with increasing ρ, as in standard credit risk models. In the left panel, relative to the Linear update, the tail of the distribution is a decreasing function of ρ due to strong contagion effects. The error bars represent the maximum and minimum number of counts with respect to an ensemble of networks inferred from the available GSIB data.

Figure 7. Loss distribution obtained with the Merton update when the capital of all the GSIB banks has been halved. All the other parameters of the PD model have been set as in the case considered in Fig. 6. The tail of the distribution is a decreasing function of ρ due to strong contagion effects, which arise because the network is weakened by having only half of the capital to absorb the shocks. The error bars represent the maximum and minimum number of counts with respect to an ensemble of networks inferred from the available GSIB data.

Furthermore, the error bars in Figs 6 and 7 do not substantially modify the shape of the loss distributions from which the risk measures are derived, implying that the results of the PD model are robust with respect to the uncertainties in the network construction. It appears that the constraints imposed by knowing the total financial exposures and liabilities are quite stringent, so that our analysis is robust and representative of the actual and unknown matrix of exposures. For this reason, in the following we will focus our analysis on a single network of the ensemble.

To investigate the sensitivity of the system to a variation in the probability of default of the banks, we have calculated the PDImpact as defined in Section “Merging the two approaches: the PD model framework”. Figure 8 confirms the existence of an approximate linear relationship between PDImpact and a percentage increase of the initial probabilities of default, allowing the definition of the measure PDBeta in Eq. (12) that can be used, together with the expected loss \(\overline{L}_{tot}\), to gauge the riskiness of the network. Defining the global asset of the network as \(A_{glob}=\sum_{k=1}^{N}A_{k}\), we have found \(\overline{L}_{tot}/A_{glob}=0.93\%\) and PDBeta/\(A_{glob}\) = 0.0124% for the Merton update, and \(\overline{L}_{tot}/A_{glob}=5.125\%\) and PDBeta/\(A_{glob}\) = 0.0318% for the Linear update. As expected, the average loss and the PDBeta for the Linear update are larger than the ones relative to the Merton update, reflecting its greater capability of spreading the contagion.

Figure 8. PDImpact vs a percentage increase in the PD of each node. Approximate linear dependence between the PDImpact C(δPD*), obtained for an increase of the probabilities of default δPD* ≡ PD ⋅ x/100, and the percentage increase x. In the analysed network, the average loss increase per 1% increase in the probability of default is about 3.5 and 9 billion EUR for the Merton and the Linear update, respectively.

The critical nodes of the network

We can now analyse the relative contribution of the nodes to the systemic risk of the network. Table 1 reports the values of PDRank in billions (bn) of EUR obtained using the Merton update, while Table 2 reports those obtained using the Linear update. The ranking of the most important nodes is different in the two cases and the corresponding values can vary by more than one order of magnitude for nodes with a high probability of default, such as BFA with PD = 0.0116 and MPS with PD = 0.0093. These nodes can act as a catalyst for a chain reaction of losses, especially in a “strong contagion” regime: relatively small losses can have a dramatic effect on the probability of default of the impacted nodes, and this explains why they are at the top of the PDRank table in the Linear update case. The ranking implied by PDRank is different from the one that takes into consideration only the total asset of the financial institutions (as in a “too big to fail” approach). This is to be expected, as the PDRank definition includes the probability of default, which is not related to the total asset. We can then ask whether PDRank can be explained by the probability of default multiplied by the total asset. Figure 9 shows that this is not the case, even if there is a positive correlation. It is interesting to note that BFA and MPS are well above the regression line in the case of the Linear update (Fig. 9b), which reflects once again the increased role of the probability of default in a strong contagion regime.

Figure 9. PDRank as a function of Total Asset ⋅ PD. The PDRank (m EUR) of a financial institution is shown as a function of the probability of default of the corresponding node times its Total Asset. Panels (a) and (b) refer to the Merton and the Linear update, respectively. While there is a positive correlation, PDRank cannot be explained completely with a linear regression and the differences can be attributed to network effects.

Discussion

Our model, the PD model, can be used by regulators to quantify the systemic risk of a financial network in terms of statistics of a loss distribution, in a language that is familiar to financial risk managers. The banks can be classified according to their contribution to systemic risk using the measure that we have called PDRank, while the resilience of the financial system to external stress can be estimated with PDImpact. The PD model is a dynamic model that allows following the evolution of the system in time, hence it can be used for scenario analysis and for assessing the likely outcome of policy measures introduced by regulators. The data relative to the network of bilateral exposures between banks, used by our model, are usually not available. However, we have found that our analysis is robust and only weakly dependent on the specific network inferred, given the constraints imposed by the available data, namely the aggregated total exposures and the total liabilities of each bank to the others. When the capitalization of the banks is insufficient, or in periods of extreme volatility, we have identified a strong contagion regime where initial losses substantially increase the probability of default of the nodes, so that it is likely that further losses ensue in the remaining time steps. Crucially, we have shown that the system can change its behaviour when the parameters of the network vary, as illustrated with the data relative to the European Global Systemically Important Banks, where halving the capital would move the system to a strong contagion regime (see Fig. 7). One of the striking characteristics of the strong contagion regime is that lower average correlation between nodes corresponds to larger losses. Diversification in this context increases the risk. This is of extreme importance for banks and, as far as we know, the community of risk managers is not aware of these effects, as their credit risk models cannot capture strong contagion effects. This in turn can cause banks to underestimate the capital needed to overcome periods of crisis, with severe consequences for the stability of the financial system.

Methods

Default correlation vs correlation matrix in the Gaussian latent variable model

The default correlation \({\hat{\rho }}_{ij}\) represents the tendency of two assets i and j to default together:

where δi = 1 if node i defaults during the unit time interval and 0 otherwise. The symbol 〈⋅〉 indicates the expectation value of a quantity, so that 〈δi〉 is equal to the probability of default PDi previously defined, while 〈δiδj〉 is equal to the probability PDij of simultaneous default of nodes i and j. In practice, however, the matrix of default correlations defined above is rarely used: in the general case it does not span the whole range between −1 and 1, and it is difficult to calibrate with the available financial data, given the scarcity of default events. What is used instead are models, such as the Gaussian latent variable model introduced in Subsection “The Credit Risk approach”, which describe correlated events and imply a value for PDij, hence indirectly, via Eq. (19), a value for \({\hat{\rho }}_{ij}\).
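Assuming, as is usual, that the default correlation is the Pearson correlation of the indicators δi and δj (this form is taken here as an assumption), the definitions above give:

\[{\hat{\rho }}_{ij}=\frac{P{D}_{ij}-P{D}_{i}\,P{D}_{j}}{\sqrt{P{D}_{i}(1-P{D}_{i})\,P{D}_{j}(1-P{D}_{j})}}\]

As a worked example with purely illustrative numbers, PDi = PDj = 0.01 and PDij = 0.001 give \({\hat{\rho }}_{ij}\) = (0.001 − 0.0001)/(0.01 ⋅ 0.99) ≈ 0.091. Since PDij cannot exceed the smaller of PDi and PDj, the value \({\hat{\rho }}_{ij}\) = 1 can be reached only when PDi = PDj, which is one way of seeing that the default correlation does not in general cover the whole interval between −1 and 1.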

Merton model

The Merton model is an option model for corporate default based on the capital structure of a company40. Merton considered a simplified model of a company having total assets A(t) and capital E(t) at time t and a single liability B(t) = A(t)−E(t) expiring at time T = t + Δt. The value of the total assets A of the company is assumed to follow a lognormal random process with drift μ and volatility σ. A default occurs if the asset value A(T) at time T falls below the value B(T). In that case the assets of the company are not enough to pay back the liability B(T), the capital E(T) = A(T)−B(T) is negative and the shareholders would declare bankruptcy to avoid the payment of the difference. With standard stochastic calculus techniques it is possible to calculate the probability of default as36:

where Φ is the cumulative Gaussian distribution.
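The calculation, and the inversion for σ used in the two-bank analysis, can be sketched numerically. The sketch assumes the standard lognormal result PD = Φ(−d) with d = [ln(A/B) + (μ − σ²/2)Δt]/(σ√Δt) and B = A − E; this textbook form, the function names and the numerical values are assumptions made for illustration.

```python
import math
from statistics import NormalDist

N01 = NormalDist()


def merton_pd(A, E, sigma, mu=0.0, dt=1.0):
    """Probability that lognormal assets end below the liability B = A - E."""
    B = A - E
    d = (math.log(A / B) + (mu - 0.5 * sigma ** 2) * dt) / (sigma * math.sqrt(dt))
    return N01.cdf(-d)


def implied_sigma(A, E, pd, mu=0.0, dt=1.0, lo=1e-6, hi=5.0, tol=1e-12):
    """Volatility that reproduces a target PD, found by bisection.

    Relies on the PD increasing with sigma, which holds when
    ln(A / B) + mu * dt > 0, and on the target lying within [lo, hi].
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if merton_pd(A, E, mid, mu, dt) < pd:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Hypothetical bank: total assets 100, capital 5, one-year PD of 1%.
sigma = implied_sigma(A=100.0, E=5.0, pd=0.01)
print("implied volatility:", sigma)
print("check PD:", merton_pd(100.0, 5.0, sigma))
```

For a fixed target PD, a smaller capital E corresponds to a smaller implied σ, which is how each value of E is paired with a volatility in the two-bank example.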

Inferring the network

We describe a new algorithm to infer the network of bilateral exposures from the aggregated total assets and liabilities of each bank toward the other banks. In the literature, a maximum entropy algorithm50 has often been used, but it is known that it might not represent the best choice for recreating a realistic interbank network51 and different alternatives have been proposed26,52,53. We want to capture the fact that small financial institutions are more inclined to have connections with a small number of bigger banks. The level of exposure tends to be above a certain minimum value, as the creation of a credit relationship involves a maintenance cost. This was already addressed by Anand et al.54, but here we propose an alternative algorithm that we find more intuitive and that allows control over the minimum exposure amount and the “degree of attraction” between smaller nodes and bigger ones. The main idea is to match assets with liabilities, building the adjacency matrix in steps: (1) The smaller borrower nodes choose first where to get the money from. (2) The lender (a different node) is chosen randomly with a probability that is proportional to its remaining assets to the power of alpha (alpha being the parameter that tunes the degree of attraction between heterogeneous nodes, set to 1 for the calculations in this paper). (3) The loan amount is chosen as a percentage of the total liabilities of the borrower node and represents the minimum exposure that it is convenient to exchange, constrained by the ‘residual’ assets of the lender and the ‘residual’ liabilities of the borrower. (4) The adjacency matrix and the residual asset and liability amounts are updated. (5) The process continues until all the assets are matched with all the liabilities. (6) If at the end one node remains that can borrow money only from itself, the procedure re-routes some of the previous loans so that the adjacency matrix is completed with zero values on the diagonal.
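Steps (1) to (5) can be sketched as follows. This is one possible reading of the procedure rather than the authors' code: borrowers are visited in ascending order of total liabilities, one loan per visit, and the visits repeat until no further loan is possible. The re-routing of step (6) is not implemented, so the function also returns any unmatched residuals. The names infer_network and min_frac and the toy balance sheets are hypothetical.

```python
import random


def infer_network(assets, liabilities, min_frac=0.1, alpha=1.0, seed=0):
    """Match interbank assets with liabilities.

    Returns (a, residual_assets, residual_liabilities), where a[i][j] is
    the exposure of lender i toward borrower j.
    """
    rng = random.Random(seed)
    n = len(assets)
    res_a = list(assets)       # lending capacity still to be placed
    res_l = list(liabilities)  # borrowing need still to be covered
    a = [[0.0] * n for _ in range(n)]
    eps = 1e-9
    # Step 1: smaller borrowers choose first.
    order = sorted(range(n), key=lambda j: liabilities[j])
    progress = True
    while progress:  # Step 5: repeat until nothing more can be matched
        progress = False
        for j in order:
            if res_l[j] <= eps:
                continue
            lenders = [i for i in range(n) if i != j and res_a[i] > eps]
            if not lenders:
                continue  # only self-lending left: step 6 would re-route here
            # Step 2: lender drawn with probability ~ (remaining assets)^alpha.
            weights = [res_a[i] ** alpha for i in lenders]
            i = rng.choices(lenders, weights=weights, k=1)[0]
            # Step 3: minimum exposure, capped by both residuals.
            amount = min(min_frac * liabilities[j], res_a[i], res_l[j])
            # Step 4: update the adjacency matrix and the residuals.
            a[i][j] += amount
            res_a[i] -= amount
            res_l[j] -= amount
            progress = True
    return a, res_a, res_l


# Toy balance sheets with equal total assets and liabilities (illustrative).
a, left_assets, left_liabilities = infer_network(
    assets=[50.0, 30.0, 20.0], liabilities=[20.0, 30.0, 50.0], min_frac=0.25
)
for row in a:
    print(row)
print("unmatched assets:", left_assets)
```

Raising alpha above 1 concentrates borrowing on the lenders with the largest remaining assets, while min_frac sets the size of the smallest loan relative to the borrower's total liabilities.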

Data availability

The datasets analyzed during the current study are available online as follows. As part of its mandate, the European Banking Authority collects data annually from the Global Systemically Important Banks (GSIB) in the European Union and publishes the results on its website, from which we have chosen the data for the year 2014 (https://www.eba.europa.eu/risk-analysis-and-data/global-systemically-important-institutions/2015). The data set contains the fields “Intra-financial system assets” and “Intra-financial system liabilities” that we use in our model to recreate the individual exposures using the algorithm described in the previous paragraph. The field “Total exposures” provides a proxy for the total assets A i . The capital has been obtained from another study performed by EBA in cooperation with the European Systemic Risk Board (ESRB), ‘The EU-wide stress test’, which aims at ‘assessing the resilience of financial institutions to adverse market developments’ (http://www.eba.europa.eu/risk-analysis-and-data/eu-wide-stress-testing/2014/results). We have selected the banks that were in both exercises, identifying 35 institutions. The initial probability of default has been obtained from the table “Financial Institutions Average Annual Transition Matrix: 1990–2014” in the document “2015 Form NRSRO Annual Certification”, obtained from the Fitch website www.fitchratings.com.

References

1. Bank of England - financial policy committee. Available at https://www.bankofengland.co.uk/about/people/financial-policy-committee (2017).

2. Allen, F. & Gale, D. Systemic risk and regulation. The Risks of Financial Institutions 341–376 (University of Chicago Press, 2007).

3. Eisenberg, L. & Noe, T. H. Systemic risk in financial systems. Manag. Sci. 47, 236–249 (2001).

4. Georg, C. P. The effect of the interbank network structure on contagion and common shocks. J. Bank. Finance 37, 2216–2228 (2013).

5. Glasserman, P. & Young, P. How likely is contagion in financial networks. J. Bank. Finance 50, 383–399 (2015).

6. Haldane, A. G. Managing global finance as a system. Available at https://www.bankofengland.co.uk/speech/2014/managing-global-finance-as-a-system (2014).

7. Iori, G., De Masi, G., Precup, O. V., Gabbi, G. & Caldarelli, G. A network analysis of the italian overnight money market. J. Econ. Dyn. Control 32, 259–278 (2008).

8. Kenett, D., Perc, M. & Boccaletti, S. Networks of networks – An introduction. Chaos Solit. Fractals 80, 1–6 (2015).

9. Jalili, M. & Perc, M. Information cascades in complex networks. J. Complex Netw. 5, 665–693 (2017).

10. Approaches to monetary policy revisited - lessons from the crisis. Sixth ECB Central Banking Conference 18–19 november 2010. Available at http://www.ecb.europa.eu/press/pr/date/2011/html/pr110603.en.html (2011).

11. Boccaletti, S., Latora, V., Moreno, Y., Chavez, M. & Hwang, D. Complex networks: Structure and dynamics. Phys. Rep. 424, 175–308 (2006).

12. Strogatz, S. H. Exploring complex networks. Nature 410, 268–276 (2001).

13. Crucitti, P., Latora, V. & Marchiori, M. Model for cascading failures in complex networks. Phys. Rev. E 69, 045104/1–045104/4 (2004).

14. Leduc, M. V. & Thurner, S. Incentivizing resilience in financial networks. J. Econ. Dyn. Control 82, 44–66 (2017).

15. Podobnik, B. et al. The cost of attack in competing networks. J. Royal Soc. Interface 12, 20150770, https://doi.org/10.1098/rsif.2015.0770 (2015).

16. Bardoscia, M., Battiston, S., Caccioli, F. & Caldarelli, G. Pathways towards instability in financial networks. Nat. Commun. 8, 14416, https://doi.org/10.1038/ncomms14416 (2017).

17. Birch, A. & Aste, T. Systemic losses due to counterparty risk in a stylized banking system. J. Stat. Phys. 156, 998–1024 (2014).

18. Gai, P. & Kapadia, S. Contagion in financial networks. Proc. Royal Soc. A 466, 2401–2423 (2010).

19. Haldane, A. G. & May, R. M. Systemic risk in banking ecosystems. Nature 469, 351–355 (2011).

20. Leduc, M. V., Poledna, S. & Thurner, S. Systemic risk management in financial networks with credit default swaps. J. Netw. Theory Finance 32, 19–39 (2017).

21. Poledna, S. & Thurner, S. Elimination of systemic risk in financial networks by means of a systemic risk transaction tax. Quant. Finance 16, 1599–1613 (2016).

22. Vitali, S., Glattfelder, J. B. & Battiston, S. The network of global corporate control. PLoS ONE 6, e25995 (2011).

23. Huang, X., Vodenska, I., Havlin, S. & Stanley, H. E. Cascading failures in bi-partite graphs: Model for systemic risk propagation. Sci. Rep. 3, 1219, https://doi.org/10.1038/srep01219 (2013).

24. Sakamoto, Y. & Vodenska, I. Systemic risk propagation in bank-asset network: new perspective on japanese banking crisis in 1990s. J. Complex Netw. 5, 315–333 (2017).

25. Cont, R. & Wagalath, L. Running for the exit: distressed selling and endogenous correlation in financial markets. Math. Finance 23, 718–741 (2013).

26. De Masi, G., Iori, G. & Caldarelli, G. Fitness model for the italian interbank money market. Phys. Rev. E 74, 066112/1–066112/5 (2006).

27. Poledna, S., Molina-Borboa, J. L., Martinez-Jaramillo, S., van der Leij, M. & Thurner, S. The multi-layer network nature of systemic risk and its implications for the costs of financial crises. J. Financial Stab. 20, 70–81 (2015).

28. Upper, C. & Worms, A. Estimating bilateral exposures in the german interbank market: Is there a danger of contagion? Eur. Econ. Rev. 48, 827–849 (2004).

29. Wells, S. UK interbank exposures: systemic risk implications. Financial Stab. Rev. 13, 175–182 (2002).

30. Lehar, A. Measuring systemic risk: A risk management approach. J. Bank. Finance 29, 2577–2603 (2005).

31. Furfine, C. Interbank exposures: Quantifying the risk of contagion. J. Money Credit Bank. 35, 111–128 (2003).

32. Battiston, S., Puliga, M., Kaushik, R., Tasca, P. & Caldarelli, G. DebtRank: too central to fail? financial networks, the FED and systemic risk. Sci. Rep. 2, 541 (2012).

33. Bardoscia, M., Battiston, S., Caccioli, F. & Caldarelli, G. DebtRank: a microscopic foundation for shock propagation. PLoS ONE 10, e0134888 (2015).

34. Alam, M., Hao, C. & Carling, K. Review of the literature on credit risk modeling: development of the past 10 years. Banks and Bank Systems 5, 43–60 (2010).

35. Gupton, G. M., Finger, C. C. & Bhatia, M. CreditMetrics: Technical Document. (J.P. Morgan & Co., 1997).

36. O’Kane, D. The gaussian latent variable model in Modelling Single-name and Multi-name Credit Derivatives 241–259 (Wiley Finance, 2008).

37. Vasicek, O. A. Probability of Loss on Loan Portfolio in Finance, Economics and Mathematics 143–146 (John Wiley & Sons, 2015).

38. Gordy, M. B. A risk-factor model foundation for ratings-based bank capital rules. J. Financ. Intermed. 12, 199–232 (2003).

39. Gordy, M. B. A comparative anatomy of credit risk models. J. Bank. Finance 24, 119–149 (2000).

40. Merton, R. C. On the pricing of corporate debt: The risk structure of interest rates. J. Finance 29, 449–470 (1974).

41. Hull, J. C., Predescu, M. & White, A. The valuation of correlation-dependent credit derivatives using a structural model. J. Credit Risk 6, 99–132 (2010).

42. Duellmann, K., Kull, J. & Kunisch, M. Estimating asset correlations from stock prices or default rates - which method is superior? J. Econ. Dyn. Control 34, 2341–2357 (2010).

43. Huang, X., Zhou, H. & Zhu, H. A framework for assessing the systemic risk of major financial institutions. J. Bank. Finance 33, 2036–2049 (2009).

44. Cousin, A., Dorobantu, D. & Rulliere, D. An extension of davis and lo’s contagion model. Quant. Finance 13, 407–420 (2013).

45. Davis, M. A. & Lo, V. Modelling default correlation in bond portfolios in Mastering Risk, Volume 2: Applications 141–151 (FinancialTimes, 2001).

46. Herbertsson, A. & Rootzen, H. Pricing kth-to-default swaps under default contagion: the matrix-analytic approach. J. Comput. Finance 12, 49–78 (2008).

47. Yu, F. Correlated defaults in intensity-based models. Math. Finance 17, 155–173 (2007).

48. Avellaneda, M. & Wu, L. Credit contagion: Price cross-country risk in brady debt markets. Int. J. Theor. Appl. Finan. 04, 921–938 (2001).

49. Altman, E., Resti, A. & Sironi, A. Default recovery rates in credit risk modelling: a review of the literature and empirical evidence. Econ. Notes 33, 183–208 (2004).

50. Upper, C. Simulation methods to assess the danger of contagion in interbank markets. J. Financial Stab. 7, 111–125 (2011).

51. Mistrulli, P. E. Assessing financial contagion in the interbank market: Maximum entropy versus observed interbank lending patterns. J. Bank. Finance 35, 1114–1127 (2011).

52. Drehmann, M. & Tarashev, N. Measuring the systemic importance of interconnected banks. J. Financ. Intermed. 22, 586–607 (2013).

53. Halaj, G. & Kok, C. Assessing interbank contagion using simulated networks. Comput. Manag. Sci. 10, 157–186 (2013).

54. Anand, K., Craig, B. & Von Peter, G. Filling in the blanks: Network structure and interbank contagion. Quant. Finance 15, 625–636 (2015).

Acknowledgements

We are grateful for discussions with Alessandro Fiasconaro, Neofytos Rodosthenous, Fabio Caccioli, Gerardo Ferrara and Pedro Gurrola-Perez. V. L. acknowledges support from the EPSRC project EP/N013492/1.

Author information

Contributions

V.L. and D.P. designed the research, analysed the data and wrote the paper; D.P. performed the research and the computer simulations.

Ethics declarations

Competing Interests

Vito Latora declares no competing interest. Daniele Petrone has been working as a consultant for major financial institutions for the past twenty years.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Petrone, D., Latora, V. A dynamic approach merging network theory and credit risk techniques to assess systemic risk in financial networks. Sci Rep 8, 5561 (2018). https://doi.org/10.1038/s41598-018-23689-5

DOI: https://doi.org/10.1038/s41598-018-23689-5
