Abstract

Reconstruction of the seismic wavefield from sub-sampled data is important and necessary in seismic image processing; this is partly due to limitations of the observations which usually yield incomplete data. To make the best of the observed seismic signals, we propose a joint matrix minimization model to recover the seismic wavefield. Employing matrix instead of vector as weight variable can express all the sub-sampled traces simultaneously. This scheme utilizes the collective representation rather than an individual one to recover a given set of sub-samples. The matrix model takes the interrelation of the multiple observations into account to facilitate recovery, for example, the similarity of the same seismic trace and distinctions of different ones. Hence an l2, p(0 < p ≤ 1)-regularized joint matrix minimization is formulated which has some computational challenges especially when p is in (0, 1). For solving the involved matrix optimization problem, a unified algorithm is developed and the convergence analysis is accordingly demonstrated for a range of parameters. Numerical experiments on synthetic and field data examples exhibit the efficient performance of the joint technique. Both reconstruction accuracy and computational cost indicate that the new strategy achieves good performance in seismic wavefield recovery and has potential for practical applications.

Similar content being viewed by others

Introduction

Reconstruction of the seismic wavefield has recently attracted increasing attentions in geophysical community. This is due to the fact that seismic acquisition often violates the Shannon sampling theorem because of the restrictions of investment, topography, noise, bad traces and so on. The under-sampled data will bring aliasing and artifacts which will influence results of migration1, de-noising2, multiple elimination3 and AVO analysis4. In addition, huge storage of the massive data is also a problem, lossless compression methods are desirable5. An important branch of these methods is the sparse transform based method combined with a regularization strategy6,7. For this method, seismic interpolation is treated as an inverse problem, and seismic events are assumed to be sparse in some transformed domain, such as the Fourier transform1,8,9,10,11, or the linear Radon transform12. Usually the acquired geophysical data is subsampled due to the variations of landform1,13,14, hence the seismic wavefield recovery is an ill-posed inverse problem. Therefore, a key issue is how to invert the mathematical model using only incomplete, sub-sampled data1,13,14. Variety of regularization methods has been developed to improve the quality of image and seismic wavefield recovery6,15,16,17.

Previous methods for such a recovery problem are based on the l q -norm minimization, e.g., the basis pursuit denoising (BPDN) criterion using (orthogonal) matching pursuit method18,19 and the least absolute shrinkage and selection operator (LASSO)20 for l1-norm constrained minimization problems. Efficient optimization algorithms include conjugate gradient methods with preconditioning techniques21 and gradient projection methods22,23,24,25,26. For solving the l q -norm minimization problem, people usually convert the matrix form of the wavefield into the vector form and solve the corresponding matrix-vector equations. We observed that the seismic wavefield can be represented using matrix instead of vector as weight variable to express all the signals simultaneously, which takes the interrelation of the sampled observations into account. This is more reasonable as the seismic signals are correlated transversely. Therefore, in this paper we propose a matrix optimization model for the seismic wavefield recovery and study the related properties. The mixed matrix minimization models have been used in machine learning. Rakotomamonjy et al.27 proposed to use the mixed matrix norm lq, p (1 ≤ q < 2, 0 < p ≤ 1) in multi-kernel and multi-task learning. But the induced optimization problems in27 have to be solved separately by different algorithms with respect to p = 1 and 0 < p < 1. For grouped feature selection, Suvrit28 addressed a fast projection technique onto l1, p-norm balls particularly for p = 2, ∞. But the derived method in28 does not match the proposed matrix optimization problem (11). Similar joint sparse representation has been used for robust multi-modal biometrics recognition in29. Sumit et al.29 employed the traditional alternating direction method of multipliers to solve the involved optimization problem. Wang et al.30 applied l2, 0 + -norm to semi-supervised robust dictionary learning, while the optimization algorithm has not displayed definite convergence analysis30.

Recently, matrix-minimization methods with nuclear norm have been developed for seismic wavefield recovery31,32,33,34 which mainly considers the rank reduction as the sparse pattern in 2D cases. To avoid the expensive computations in solving the involved matrix completion optimization problems, a matrix factorization strategy was developed in31,32. This paper proposes a different matrix minimization approach based on l2, q−l2, p norm which naturally generalizes the representative vector to matrix in joint distribution sense. A unified method is developed to solve the matrix optimization problem with mixed norm for any q = 2 and 0 < p ≤ 1. The innovations of this paper can be listed as follows:

-

1)

A jointly sparse matrix minimization model is developed for seismic wavefield recovery. This approach employs matrix to expresses multiple signals simultaneously. The measurement of matrix row coefficients are expected to exhibit the compact priori of multiple observations which is different from the existed methods based on matrix nuclear-norm minimization31,32,33,34.

-

2)

A unified algorithm is developed to solve the mixed matrix optimization problem (7) for any p \(\in \) (0, 1]. This algorithm needs only matrix-vector operations but not matrix factorization which can be easily adapted to large-scale cases. The convergence analysis is also demonstrated.

-

3)

Numerical experiments on synthetic and field data are carried out. The results on seismic wavefield recovery exhibit the efficient recovery performance of the joint sparse expression strategy.

Modeling

Given a set of seismic signals (traces) x1, x2, …, x l in n-dimensional space, each signal x j (j = 1, 2, …, l) is sensed by m sensors to yield seismic wavefield records as

where Ai is a row vector representing the impulse response of the i-th sensor. Denote A = [(A1)T, (A2)T, …, (Am)T]T, then the seismic observations d j = [d1j, d2j, …, d mj ]T \(\in \) Rm can be reformulated as d j = Ax j (j = 1, 2, …, l). Sparse expression is a popular strategy to restore x j with m much less than n of the mapping operator A.

Suppose that the original seismic signal x j can be spanned by a series of orthogonal bases \({\{{{\rm{\Psi }}}_{k}\}}_{k=1}^{K}\) such that

where \({m}_{j}^{k}=({x}_{j},{{\rm{\Psi }}}_{k})\). Denote Ψ the orthogonal matrix constituted by the orthogonal bases, then we have a more compact transformation L = AΨ \(\in \) Rm × K. Consequently the systems (1) and (2) can be incorporated to

where \({m}_{j}={\rm{\Psi }}\ast {x}_{j}={\{{m}_{j}^{k}\}}_{k=1}^{K}\) is the coefficient vector (weighting factor) corresponding to the seismic signal x j . Usually, problem (3) is ill-posed due to the limitation of acquisition and violation of sampling requirements. Sparse regularization is preferred to restore the operation coefficients from the under-determined linear combination system (3). A general l q −l p (q > 0, p > 0) model was presented in [16]

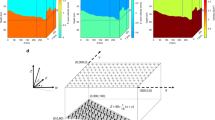

where \(\parallel {m}_{j}{\parallel }_{p}^{p}=\sum _{k=1}^{K}|{m}_{j}^{k}{|}^{p}\) is the stabilizer bearing prior information with respect to d j and α j > 0 is a regularization parameter. When 0 < p ≤ 1, the minimization model (4) tries to find a sparse recovery coefficient m j with the least nonzero entries. However, the framework (4) recovers the weight factor m j only using the j-th seismic trace record d j independently which totally ignores the correlation with other sampled data \({d}_{\hat{j}}\) (\(\hat{j}\ne j\)). Generally, multiple seismic wavefield traces are related to each other. The similarity and difference hidden in the given group of seismic traces are expected to improve the recovery performance. To detailedly demonstrate the correlationship among multiple seismic traces, we randomly choose three trace observations from a seismogram generated from a seven layers geologic velocity model (see Experimental Section for details). Two neighboring traces are denoted by d1 and d2 while the third one d3 is relatively far from them. We separately recover the representation coefficients \({m}_{j}^{\ast },j=1,2,3\) by solving

where \({m}_{j}^{k}\) is k-th entry of m j . The weight values of recovered coefficients are plotted in Fig. 1(a–c). The horizontal axis denotes the coordinates of the representation vector while the vertical axis shows the weight quantities of representation coefficients, namely \(|{({m}_{j}^{\ast })}^{k}|,k=1,2,\cdots ,256;j=1,2,3\). The curves clearly display the similar clustering and sparse pattern of three recovered coefficients. The correlations inspire us to assume that the multiple traces coefficients share the same distribution. For comparison, we jointly recover three coefficients simultaneously from D1, 2, 3 = [d1, d2, d3] \(\in \) Rm × 3 by a matrix minimization problem

where \({M}_{1,2,3}^{\ast }\in {R}^{K\times 3}\) and \({m}_{1,2,3}^{k}\in {R}^{3}\) is the k-th row of M1, 2, 3. Since three vector minimizations as (5) are integrated to a matrix one (6), each entry \({m}_{j}^{k}\) of representative vector is spanned to a row vector \({m}_{1,2,3}^{k}\in {R}^{3}\). Hence the absolute values of weight entries in (5) are naturally generalized to l2 norm of row vector for its smoothness, that is \(|{m}_{j}^{k}|\to \parallel {m}_{1,2,3}^{k}{\parallel }_{2}\). To illustrate the jointly recovered coefficient matrix \({M}_{1,2,3}^{\ast }\) of (6) also follows the similar variation as in Fig. 1(a–c), we measure the l2 norm of each row vector in the joint sense corresponding to \(|{({m}_{j}^{\ast })}^{k}|\),

Clearly, the joint representation coefficients also exhibit similar sparse pattern and weight concentration to the individual models (see Fig. 1(d)).

Under the assumption that multiple seismic wavefield traces jointly share the similar weight parameter pattern, we propose to express all the sub-sampled observations over the same bases simultaneously as

where D = [d1, d2, …, d l ] is composed of l seismic observations and M = [m1, m2, …, m l ] denotes the corresponding coefficient matrix. As far as the columns are concerned, the equation (8) is an easy consequence of the equation (3). Figure 1 has demonstrated that the multiple seismic traces are related to each other, especially when the samples are obtained in the similar fields. We reasonably measure the joint compactness and correlation of the multiple observations in row sense. By reviewing l q −l p (q > 0, p > 0) model (4), we notice that the expression errors e j = Lm j −d j , j = 1, 2, …, l and the priori of representation coefficients are assumed to submit to the independent identically distribution,

where \({m}_{j}^{k}\) is the k-th entry of representation vector m j \(\in \) RK. The solution \({m}_{j}^{\ast }\) to (4) can be rewritten as the maximum likelihood estimation

Because each coefficient component \({m}_{j}^{k}\) in (3) is spanned to a row vector in the joint expression system (8), the absolute value of the scalar component is naturally replaced by a vector norm. Euclidean norm is preferred for its smoothness and easiness. Based on the analysis (9) and (10), the joint sparse priori of coefficient matrix M and fidelity error matrix E = LM−D can be considered

where mk, ek are the k-th row vectors of M \(\in \) Rk × l and E \(\in \) Rm × l respectively.α k > 0 is a constant and \({\Vert .\Vert }_{2}\) stands for the Euclidean norm. In the similar relationship between (4) and (9), the joint matrix minimization approach for the ill-posed linear system (8) can be generally formulated as

where the l2, p norm of the priori matrix M is defined as

Here \(\parallel LM-D{\parallel }_{2,q}(q > 0)\) denotes the l2, q matrix norm of LM−D, \({\rm{\Lambda }}=diag{\{{\alpha }_{k}\}}_{k=1}^{K}\) is a regularization matrix and its diagonal entry α k > 0 is the regularization parameter for the k-th row of M. Especially, if M contains only one column m j , each \(\parallel {m}^{k}{\parallel }_{2}\) is reduced to \(|{m}_{j}^{k}|\) while \(\parallel M{\parallel }_{2,p}\) is equivalent to \(\parallel {m}_{j}{\parallel }_{p}\). When Λ takes scalar identity, the joint system (11) is exactly reduced to (4).

There are different choices of the parameter pair q > 0 and p > 0. Here we are interested in q = 2 and p \(\in \) (0, 1] for the practical purpose. Extensive studies have illustrated that the fractional norm l p (p \(\in \) (0, 1)) has better sparsity than l1 norm35,36,37,38,39. But the l p norm is neither Lipschitz nor convex which brings computational challenge. This paper presents a unified algorithm to solve the mixed l2, p regularized matrix minimization problem (11) for any p \(\in \) (0, 1]. The computational results in seismic wavefield recovery validate the efficient performance of the joint matrix minimization approach. The convergence properties of our new algorithm are also analyzed.

Algorithms

In this section, a unified method will be developed to solve the l2, q−l2, p matrix minimization problem for any q = 2 and 0 < p ≤ 1. Especially when p is fractional, (11) is neither convex nor Lipschitz continuous which brings many computational difficulties. Actually the unconstrained l q -l p minimization is strongly NP-hard for any 0 < q or p < 140. Reweighed minimization algorithm35,41,42 is an efficient algorithm for solving the l2-l p (0 < p < 1) vector minimization problem which has been extended by Wang et al.43 to solve matrix minimization problem. Even the problem considered in43 is the special case of (11) with q = p \(\in \) (0, 1], the idea motivates us to develop an iteratively quadratic algorithm for the generalized l2, p matrix minimization for p \(\in \) (0, 1]. Moreover, the convergence analysis will be uniformly demonstrated.

After simple transformation, \(\parallel {\rm{\Lambda }}M{\parallel }_{2,p}^{p}\) can be rewritten as

where \(Tr(\cdot )\) stands for the trace operation and

where mk (k = 1, 2, …, K) is the k-th row vector of M.

Hence the objective function of (11) for q = 2, p \(\in \) (0, 1] can be reformulated as

It is well known that the KKT point of the unconstrained optimization problem (11) is also the stationary point of J(M)44. Compute the derivative of J(M) with respect to matrix M and set it to zero, we get the KKT equation of the problem (11) as follows

Thus solving (11) is reduced to finding the solution of the nonlinear equation (16). If H is fixed and the matrix \(N={L}^{T}L+\frac{p}{2}H\) is invertible, equation (16) can be solved by

We notice that if some row of M is zero, the diagonal entries of H cannot be generated, nor can N. Then the iteration breaks down. In view of the seismic wavefield recovery, the zero row means the corresponding basis function has no contribution to reconstruct all the observed seismic traces. For example, if mk = 0, then L k (the k-th column of transformation matrix L) is nothing with the observations D in the representation system (8). To avoid the possible breakdown of the matrix N in (17) and reasonably explain this numerical behavior, we apply the Sherman-Morrison-Woodbury formula45 to N−1. Denote

then the formula (17) can be rewritten as

where I m is m-dimensional identity operator. If matrices G and M are computed alternatively corresponding to equations (18) and (19) respectively, then an iterative procedure can be naturally developed

The iterative algorithm is outlined in Algorithm 1.

Algorithm 1. An iterative procedure for solving problem (16)

Step 1. Input L \(\in \) Rm × K, D \(\in \) Rm × l. Set the sparse parameter p \(\in \) (0, 1] and diagonal matrix \({\rm{\Lambda }}=diag\{{\alpha }_{1},{\alpha }_{2},\cdots ,{\alpha }_{K}\}\,\succ \,0\) (here \(\succ \) refers to the positive definite). Given the stopping criterion \(\epsilon > 0\).

Step 2. Set t = 1 and initialize M1 \(\in \) RK × l.

Step 3. For t = 1, 2, … until \({\rho }_{t}\le \epsilon \) do:

The \({m}_{t}^{k}\) (k = 1, 2, …, K) means the k-th row vector of M t . Algorithm 1 aims to solve the fixed-point system (16) which is the stationary equation of the matrix function (15). Based on the iterative procedure of Algorithm 1, the iterative point M k is the solution of the nonlinear equation (16) if and only if M t = [G t −G t LT(I m + LG t LT)−1LG t ]LTD which is equivalent to M k = Mk + 1. From this iteration on, the iteration point will not update which indicates that a stationary point has been found. Hence the stopping criterion of Algorithm 1 can be chosen as \({\rho }_{t}:=\frac{\parallel {M}_{t+1}-{M}_{t}{\parallel }_{F}}{\parallel {M}_{t}{\parallel }_{F}}\le \epsilon \), where \(\parallel \cdot {\parallel }_{F}\) stands for the Frobenius norm46.

Based on the definition (12) of \(\parallel M{\parallel }_{2,p}\), the sparse parameter p \(\in \) (0, 1] aims to find a solution with many zero row vectors of the l2, p-regularized matrix minimization problem (11). This means that many basis functions have no contribution to reconstruct the seismic wavefields which accords with the prior knowledge. Therefore (m t )k = 0 might frequently occur during the iterations of Algorithm 1. We may formulate the following statement.

Remark. In Algorithm 1, if \({m}_{{t}_{0}}^{k}=0\) happens for some iteration \({M}_{{t}_{0}}\), then \({m}_{t}^{k}=0\) for t ≥ t0.

We give explanations of the above remark as follow. If \({m}_{{t}_{0}}^{k}=0\) in the t0-th iteration, then the diagonal entry of \({G}_{{t}_{0}}\) is zero, namely \({({G}_{{t}_{0}})}_{kk}=0\). From the update formula \({M}_{{t}_{0}+1}={G}_{{t}_{0}}[{I}_{K}-{L}^{T}{({I}_{m}+L{G}_{{t}_{0}}{L}^{T})}^{-1}L{G}_{{t}_{0}}]{L}^{T}D\), we know that \({m}_{{t}_{0}+1}^{k}=0\) holds, so does \({m}_{t}^{k}=0\) for t ≥ t0. After t0 iterations with \({m}_{{t}_{0}}^{k}=0\), the k-th column of the matrix L is unnecessary in the linear system (8) and the variational function J(M) in (15). So we can discard the k-th column of the matrix L to reduce the system without any loss. The improvement of Algorithm 1 can be concluded as Algorithm 2.

Algorithm 2. Solving problem (16) for any p \(\in \) (0, 1]

Step 1. Input L \(\in \) Rm × K, D \(\in \) Rm × l. Set the sparse parameter p \(\in \) (0, 1] and the diagonal matrix \({\rm{\Lambda }}=diag\{{\alpha }_{1},{\alpha }_{2},\cdots ,{\alpha }_{K}\}\,\succ \,0\). Given stopping criterion \(\epsilon > 0\).

Step 2. Set t = 1 and initialize \({\hat{M}}_{1}\in {R}^{K\times l}\). Let Ω0 = {1, 2, …, K}.

Step 3. For t = 1, 2, … until \({\rho }_{t}\le \epsilon \) do:

In Algorithm 2, \({M}_{t}={\hat{M}}_{t}({{\rm{\Omega }}}_{t};:)\) means to keep the rows of \({\hat{M}}_{t}\) corresponding to the index set Ω t while L t = L(:;Ω t ) keeps the column of L corresponding to Ω t . Compared with Algorithm 1, Algorithm 2 removes the zero rows of the approximation solution in each iteration and the corresponding columns of the bases matrix L. This technique iteratively reduces the inactive set of data.

Based on the procedure of Algorithm 2, \({N}_{t}={L}_{t}^{T}{L}_{t}+\frac{p}{2}{H}_{t}\) is well defined and \({\hat{M}}_{t+1}\) is the solution of the linear system \({N}_{t}M={L}_{t}^{T}D\). Since N t is symmetric and positive definite, \({\hat{M}}_{t+1}\) is also the optimal matrix solution of the following quadratic subproblem

We would have \({Q}_{t}({\hat{M}}_{t+1})\le {Q}_{t}({M}_{t})\), which is equivalent to

It is noticed that \(J({M}_{t})=\parallel {L}_{t}{M}_{t}-D{\parallel }_{F}^{2}+\parallel {{\rm{\Lambda }}}_{t}{M}_{t}{\parallel }_{2,p}^{p}\) and \(J({M}_{t+1})=J({\hat{M}}_{t+1})\). Using inequalities (A-2) (see the Appendix A) and (22), we can derive that

which means {J(M t )} will decrease with respect to iterations for any p \(\in \) (0, 1].

Once J(Mt + 1) = J(M t ) happens for some t, the equalities in (A-2) (see the Appendix A) and (22) hold simultaneously. From Proposition 2 of the Appendix A, we obtain \(\parallel {\hat{m}}_{t+1}^{k}{\parallel }_{2}=\parallel {m}_{t}^{k}{\parallel }_{2}\) for all k \(\in \) Ω t . Thus Gt + 1 = G t and Ht + 1 = H t , which implies that \({\hat{M}}_{t+1}\) is a solution of the equation (17). Since the objective function sequence {J(M t )} for all t is strictly decreasing and lower bounded, any accumulation of the set {M t } is a stationary point of the equation (11). At the same time, the descending quantity of {J(M t )} measures the convergence precision of the matrix sequence {M t }.

Once the nonzero set of the t-th iteration has been fixed, the subproblem (21) can be solved in a variety of ways such as preconditioned conjugate gradient methods46, nonmonotone gradient descent methods47,48, and so on. The framework can be concluded as Algorithm 3.

Algorithm 3. A unified algorithm for solving problem (16) for any p \(\in \) (0, 1]

Step 1. Input L \(\in \) Rm × K, D\({\rm{\Lambda }}=diag\{{\alpha }_{1},{\alpha }_{2},\cdots ,{\alpha }_{K}\}\,\succ \,0\) \(\in \) Rm × l. Set the sparse parameter p \(\in \) (0, 1] and the diagonal matrix . Given stopping criterion \(\epsilon > 0\).

Step 2. Set t = 1 and initialize \({\hat{M}}_{1}\in {R}^{K\times l}\). Let Ω0 = {1, 2, …, K}.

Step 3. For t = 1, 2, … until \({\rho }_{t}\le \epsilon \) do:

Solve \({N}_{t}M={L}_{t}^{T}D\) for the solution \({\hat{M}}_{t+1}\);

Experimental results

To validate the efficiency of the joint matrix minimization approach and the unified algorithm for the problem (11), we perform three tests: (1) restoration of the input one-dimensional random signal with the randomly generated matrix L; (2) restoration of the synthetic seismic data with random loss of traces; (3) restoration of the field data.

One-dimensional signal reconstruction

We randomly take samples to generate the matrix L. For implementation, we try to restore the signal by the model (11) with q = 2 and p \(\in \) (0, 1].

The stopping precision in Algorithm 3 is set to \(\epsilon ={10}^{-3}\). The sparse parameter p and regularization parameter α k are typically chosen in (0, 1]. Results for other values of p are similar. The relative error of the recovered signal Mrec to the true (given) signal Mtrue is defined by

To quantify the results, we define the signal-to-noise ratio (SNR) as \({\rm{SNR}}=10{\mathrm{log}}_{10}\frac{\parallel {d}_{{\rm{org}}}{\parallel }_{2}^{2}}{\parallel {d}_{{\rm{org}}}-{d}_{{\rm{rec}}}{\parallel }_{2}^{2}}\), where dorg refers to the original data and drec is the restored data.

For the one-dimensional case, the matrix M is reduced to a vector, hence the unified Algorithm 3 can be used for solving (11). For comparison, we also apply spectral projected gradient (SPG) method49 to solve the l1-regularization problem. The code of SPG is downloaded from http://www.cs.ubc.ca/~mpf/spgl1/index.html. Two algorithms are carried out in the same environment and choose their best regularization parameters. The comparison items include errrel value, SNR and CPU running time (second). Each experiment is repeated five times and the average values are reported in Table 1. It indicates that both methods perform well for one-dimensional signal reconstruction problem.

Apart from the regular data, we also consider the noisy cases to show the robustness of two methods. Different noise levels are added to the simulated data. Noise level 0.001 means the noise is randomly generated with zero mean and 0.001 variance. The results of Algorithm 3 with sparse parameters p = 1 and p = 0.5 are displayed in Table 1. Compared with the l1-regularized minimization model, the half-norm regularized minimization behaves better in reconstruction. Figure 2 plots the recovery performance of the Algorithm 3 with p = 0.5 on noisy data. Figure 2(a) is the comparison of the real signal and the recovered signal, Fig. 2(b) illustrates the difference between the recovered signal and the input (true) signal. The recovery images of other cases are similar. The figures reveal that our model and algorithm perform well for one-dimensional seismic wavefield reconstruction problem even in noisy cases.

Reconstruction of seismograms from a layered model

Now we consider a seismogram generated from a seven layers geologic velocity model where the spatial sampling interval is 15 meters and the time sampling interval is 0.002 second. The velocity varies from 2500 m/s to 5500 m/s. The seismogram is generated using a source function given by a Ricker wavelet with central-frequency of 25 Hz. The dataset contains 256 traces with 256 time samples in each trace. Different percentages of missing traces in original data, 10%, 25% and 50%, are used to test the limitation of recovery methods. The joint matrix model (11) with Algorithm 3 is applied to reconstruct the seismic wavefield. Since the spectral projected gradient method only solves an l1-regularized vector minimization problem, we decompose the matrix representation system (11) into the l1-regularized vector minimization problem. Each column is considered as a subproblem to reconstruct its weight vector separately. Then all the solutions of the subproblems are sequentially aligned into a weighted matrix to evaluate the reconstruction performance. The experimental results on missing percentages 10% and 25% can be seen in Tables 2 and 3.

As for the data without noise but missing 50% traces, the reconstruction performance of joint matrix model with Algorithm 3 is much worse than missing percentages of 10% and 25%. The errrel value is 0.5414 and SNR is around 5.1904dB, almost the same for any p \(\in \) (0, 1]. These results mean that our method may not completely recover the seismic wavefield well if the missing trace signals are more than 50%. Actually, the sub-sampled data missing 50% itself is a failed collection of seismic recodes.

The original shot gathers are shown in Fig. 3(a). The data with 25% traces missing are shown in Fig. 3(b). In forming the under-determined matrix L, a Haar wavelet orthogonal base is used to form the transform matrix Ψ. The unified Algorithm 3 is applied to solve the joint matrix minimization problems (11) with q = 2 and typical parameters p \(\in \) (0, 1]. Good recovery performance is observed and the result is demonstrated in Fig. 3(c). The error of the original and the recovered data shown in Fig. 3(d) illustrates the efficient recovery performance of joint matrix minimization approach. In displaying the results, the amplitude scale of the error map is the same as the amplitude scale of the data. Of course, other values of the sparse parameter p can be chosen, the results in visualization are similar. So, we only list the quantitative results in Tables 2 and 3.

Comparatively, the recovery image of the SPG algorithm for the case of 25% traces missing is presented in Fig. 4. Figure 4(a) is the reconstruction and Fig. 4(b) displays the difference between the original and reconstructed seismic signals. It is noticed that SPG algorithm for the l1-regularization vector minimization restores the seismic wavefield as accurate as the joint matrix approach with Algorithm 3. These results are obtained using the same code from http://www.cs.ubc.ca/~mpf/spgl1/index.html.

To show the anti-noise property of our algorithm, we add random noise with noise level 0.001 to the simulated data. The unified Algorithm 3 is applied to solve the joint matrix minimization problems. The errrel value, SNR and CPU running time (second) are listed in Table 2 for 3 sparse parameters. The recovery image and the error of the original and the recovered data are shown in Fig. 5(a and b) respectively. The low relative error and high SNR indicate that our algorithm is stable for seismic data restoration.

To save memory requirement of large-scale data, we have observed the restoration behavior of our method on patch of the input synthetic data. We evenly partition the collection of trace signals D into several blocks, such as D = [D1, D2, …, D f ], where \({D}_{g}\in {R}^{m\times {l}_{g}}\) and \(\sum _{g=1}^{f}{l}_{g}=l\). Each D g is input separately to recover the seismic signals by system (11). Then all the sub-solutions M g , g = 1, 2, …, f are combined into M = [M1, M2, …, M f ]. When the number of segments is two or three, the recovered errrel values and SNR are almost the same as the integral case. When each column is considered as a segment, the joint matrix model is reduced to a sequence of vector recoveries, the recovery errrel values and SNR are similar to the integral case but the computational time is around 50 times more.

Reconstruction of seismograms from a heterogeneous model

Next we consider a seismogram generated from a velocity model varying both vertically and transversely (Wang et al.5). The original seismic wavefield, sub-sampled data (37% traces are randomly removed) and recovered data are shown in Fig. 6(a–c), respectively. The difference of the original data and the recovered data is illustrated in Fig. 6(d). In displaying the results, the amplitude scale of the error map is the same as the amplitude scale of the data. It illustrates that all the initial seismic energy is recovered with minor errors. Though the reconstruction is not perfect, most of the details of the wavefield are preserved. Again, to test the quality of our algorithm in seismic data restoration for complex structure, we calculate the signal-to-noise ratio and the relative error. From our calculation, for p = 0.5, the values of SNR and errrel are 26.9792 and 0.0448, respectively; for p = 1, the values of SNR and errrel are 25.6940 and 0.0519, respectively. The high value of SNR and low value of errrel indicate our algorithm works for seismic data restoration even with complex structure.

To show the robustness of our algorithm to interference, we add random noise with level 0.001 and 0.01 to the simulated data respectively. The unified Algorithm 3 with p = 0.5 is applied to solve the joint matrix minimization problems. The values of SNR and errrel for noise level equaling 0.001 are 26.9074 and 0.0451, and for noise level equaling 0.001 are 18.0355 and 0.1254, respectively.

In the noisy case, e.g., noise level equaling 0.01, the frequency information of the original data, sub-sampled data and the recovered data are shown in Fig. 7(a–c), respectively. Again, the aliasing of the sub-sampled data is reduced greatly in the recovered data.

Field data

Finally, we examine the efficiency of the new method with field data. The seismic data is a marine shot gather shown in Fig. 8(a) which consists of 256 traces with spacing 25 m and time sampling interval 2 ms. There are damaged traces in the original gather. The subsampled gather is shown in Fig. 8(b) with 42% of the original traces randomly removed. This sub-sampled gather was used to restore the original gather with suitable solution methods. Again, the unified Algorithm 3 is applied to solve the joint matrix minimization problems (11) with q = 2 and p = 0.5. The recovery result is demonstrated in Fig. 8(c). The error of the original and the recovered data shown in Fig. 8(d) illustrates the efficient recovery performance of joint matrix minimization approach. In displaying the results, the amplitude scale of the error map is the same as the amplitude scale of the data. Comparing the subsampled image with the original image, the restored image can reconstruct most of the details. In addition the damaged trace in the original gather was restored as a good trace. Using the same definition of SNR as above, for p = 0.5, the value of SNR equals 19.7301; for p = 1 the value of SNR equals 19.7919. We only show figures for p = 1, since in visualization the results are similar for p = 0.5.

The frequency information of the original data, sub-sampled data and the recovered data are shown in Fig. 9(a–c), respectively. It indicates that the aliasing of the sub-sampled data is reduced greatly in the recovered data.

Conclusion

Sparse optimization has broad applications in seismic data processing. In this paper we focus on data restoration problem. Noticing that the seismic wavefield can be represented using matrix instead of vector as weight variable to express all the signals simultaneously, in this paper we propose a matrix optimization model to the seismic wavefield recovery. We first reformulate the data restoration problem using an l2, p-norm constrained matrix minimization model for any p \(\in \) (0, 1], which is a nonconvex and non-Lipschitz continuous minimization problem. Then we develop a unified algorithm to solve the mixed matrix optimization problem for any p \(\in \) (0, 1]. Convergence analysis of the new algorithm is also addressed. Numerical results on synthetic problems and the field data example indicate potential usage of our method for practical applications.

Appendix Properties of the new algorithms

In this section, we will analyze the convergence property of the Algorithm 2. The main result is that the objective function J(M t ) strictly decreases with respect to iterations until the matrix sequence {M t } converges to a stationary point of J(M).

Proposition 1. Let \(\phi (\tau )=\tau -a{\tau }^{\frac{1}{a}}\) be a function of the variable τ, where a \(\in \) (0, 1). Then for any τ > 0, φ(τ) ≤ 1−a, and τ = 1 is the unique maximizer.

To verify the above statements, let us take the derivative of φ(τ) and set it to be zero, that is

then φ′(τ) = 0 has the unique solution τ = 1 for any a \(\in \) (0, 1) which is just the maximizer of φ(τ) in (0, +∞).

Based on Proposition 1, for a given a \(\in \) (0, 1),

holds for τ \(\in \) (0, +∞) and “=’’ is active if and only if τ = 1. Let a takes special values such as \(a=\frac{p}{2}\,(p\in (0,1])\), the inequality (A-1) will result in the following formula associated with \(||M|{|}_{2,p}^{p}(0 < p\le 1)\).

Proposition 2. Suppose that M t and \({\hat{M}}_{t+1}\) are generated in the t-th iteration by Algorithm 2, the following inequality holds,

where \({{\rm{\Lambda }}}_{t}=diag{\{{\alpha }_{k}\}}_{k\in {{\rm{\Omega }}}_{t}}\). Moreover, the equality in (A-2) holds if and only if \(\parallel {\hat{m}}_{t+1}^{k}{\parallel }_{2}=\parallel {m}_{t}^{k}{\parallel }_{2}\) for k \(\in \) Ω t .

Consider the approximate value M t . Since each \({\Vert {m}_{t}^{k}\Vert }_{2}\ne 0\) for k \(\in \) Ω t , so we can r \(\tau =\frac{{\Vert {\hat{m}}_{t+1}^{k}\Vert }_{2}^{p}}{{\Vert {m}_{t}^{k}\Vert }_{2}^{p}}\) and \(a=\frac{p}{2}\) in Proposition 1. It can be obtained that

Multiplying equation (A-3) by \({\alpha }_{k}\parallel {m}_{t}^{k}{\parallel }_{2}^{p}\), we have the following inequality

Summing up k \(\in \) Ω t in formula (A-4}), we can derive at (A-2).

Based on Proposition 1, τ = 1 is the unique minimizers for φ(τ) in (0, +∞) when \(a=\frac{p}{2}\). Namely, \({\Vert {\hat{m}}_{t+1}^{k}\Vert }_{2}={\Vert {m}_{t}^{k}\Vert }_{2}(k\in {{\rm{\Omega }}}_{t})\) is necessary and sufficient for equality holding in (A-4). Now, we can establish the following convergence property of the Algorithm 2.

Proposition 3. Suppose that {M t } is the matrix sequence generated by Algorithm 2. Then J(M t ) strictly decreases with respect to t for any 0 < p ≤ 1 until {M t } converges to a stationary point of J(M).

Proposition 4. Based on the derivation of Proposition 3, so long as the subproblem (16) is solved with \({Q}_{t}({\hat{M}}_{t+1})\le {Q}_{t}({M}_{t})\), the convergence of Algorithm 3 will be guaranteed for any p \(\in \) (0, 1].

References

Liu, B. & Sacchi, M. D. Minimum weighted norm interpolation of seismic records. Geophysics 69, 1560–1568 (2004).

Soubaras, R. Spatial interpolation of aliased seismic data. Expanded Abstracts, 74th Annual Meeting SEG, Denver, USA (Denver, Oct. 2004), 1167–1170 (2004).

Naghizadeh, M. & Sacchi, M. D. Beyond alias hierarchical scale curvelet interpolation of regularly and irregularly sampled seismic data. Geophysics 75, WB189–202 (2010).

Sacchi, M. D. & Liu, B. Minimum weighted norm wavefield reconstruction for AVA imaging. Geophysical Prospecting 53, 787–801 (2005).

Wang, Y. F., Stepanova, I. E., Titarenko, V. N. & Yagola, A. G. Inversion problems in geophysics and solution methods (Higher Education Press, Beijing, 2011).

Wang, Y. F., Cao, J. J. & Yang, C. C. Recovery of seismic wavefields based on compressive sensing by l 1 an-norm constrained trust region method and the piecewise random subsampling. Geophys. J. Int. 187, 199–213 (2011).

Wang, Y. F., Yang, C. C. & Cao, J. J. On Tikhonov regularization and compressive sensing for seismic signal processing. Mathematical Models and Methods in Applied Sciences. 22, 1150008-1–1150008-24 (2012).

Sacchi, M. D. & Ulrych, T. J. Estimation of the discrete Fourier transform, a linear inversion approach. Geophysics 61, 1128–36 (1996).

Sacchi, M. D., Ulrych, T. J. & Walker, C. J. Interpolation and extrapolation using a high-resolution discrete Fourier transform. IEEE Transactions on Signal Processing 46, 31–38 (1998).

Duijndam, A. J. W. & Schonewille, M. A. Non-uniform fast Fourier transform. Geophysics 64, 539–551 (1999).

Xu, S., Zhang, Y., Pham, D. & Lambare, G. Anti-leakage Fourier transform for seismic data regularization. Geophysics 70, V87–V95 (2005).

Trad, D., Ulrych, T. & Sacchi, M. Accurate interpolation with high-resolution time-variant Radon transforms. Geophysics 67, 644–656 (2002).

Herrmann, F. J. & Hennenfent, G. Non-parametric seismic data recovery with curvelet frames. Geophysical Journal International 173, 233–248 (2008).

Sacchi, M. D., Verschuur, D. J. & Zwartjes, P. M. Data reconstruction by generalized deconvolution. Expanded Abstracts 74th Annual Meeting SEG, Denver, USA (Denver, Oct. 2004), 1989–1992 (2004).

Wang, Y. F., Cao, J. J., Yuan, Y. X., Yang, C. C. & Xiu, N. H. Regularization active set method for nonnegatively constrained ill-posed multichannel image restoration problem. Appl. Opt. 48, 1389–1401 (2009).

Wang, Y. F. Sparse optimization methods for seismic wavefields recovery. Proc. Inst. Math. Mech. 18(1), 42–55 (2011).

Cao, J. J. & Wang, Y. F. Seismic data restoration with a fast L1 norm trust region method. J. Geophys. Eng. \bf 11(4), 045010 (2015).

Chen, S., Donoho, D. & Saunders, M. Atomic decomposition by basis pursuit. SIAM Journal on Scientific Computing 20, 33–61 (1998).

Tropp, J. A. & Gilbert, A. C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Transactions on Information Theory 53, 4655–4666 (2007).

Tibshirani, R. Regression shrinkage and selection via the lasso. Journal Royal Statistical Society B 58, 267–288 (1996).

Kim, S.-J., Koh, K., Lustig, M., Boyd, S. & Gorinevsky, D. An interior-point method for large-scale l 1-regularized least squares. IEEE Journal on Selected Topics in Signal Processing 1, 606–617 (2007).

Dai, Y. H. & Fletcher, R. Projected Barzilai-Borwein methods for large-scale box-constrained quadratic programming. Numerische Mathematik 100, 21–47 (2005).

Wang, Y. F. & Ma, S. Q. Projected Barzilai-Borwein methods for large scale nonnegative image restorations. Inverse Problems in Science and Engineering 15, 559–583 (2007).

Figueiredo, M. A. T., Nowak, R. D. & Wright, S. J. Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE Journal of Selected Topics in Signal Processing 1, 586–597 (2007).

Ewout, V. B. & Michael, P. F. Probing the pareto frontier for basis pursuit solutions. SIAM Journal on Scientific Computing 31, 890–912 (2008).

Cao, J. J., Wang, Y. F. & Wang, B. F. Accelerating seismic interpolation with a gradient projection method based on tight frame property of curvelet. Exploration Geophysics 46, 253–260 (2015).

Rakotomamonjy, A., Flamary, R., Gasso, G. & Canu, S. l p -l p Penalty for sparse linear and sparse multiple kernel multitask learning. IEEE Transactions on Neural Networks 22(8), 1307–1320 (2011).

Suvrit, S. Fast projection onto l 1, q-norm balls for grouped feature selection. Proceeding of Machine Learning and Knowledge Discovery in Databases, Athens, Greece (2011).

Sumit, S., Vishal, M. P., Nasser, M. N. & Rama, C. Joint sparse representation for robust multimodal biometrics recognition. IEEE Trans. PAMI 36(1), 113–126 (2014).

Wang, H., Nie, F. P., Cai, W. D. & Huang, H.Semi-supervised robust dictionary learning via efficient l 2, 0+-norms minimizations. IEEE International Conference on Computer Vision, 1145–1152 (2013).

Aravkin, A., Kumar, R., Mansour, H., Recht, B. & Herrmann, F. J. Fast methods for denoising matrix completion formulations, with applications to robust seismic data interpolation. SIAM J. Sci. Comput. 36(5), S237–S266 (2014).

Kumar, R. et al. Efficient matrix completion for seismic data reconstruction. Geophysics 80(5), V97–V114 (2015).

Rodriguez, I. V., Sacchi, M. D. & Gu, Y. J. Simultaneous recovery of origin time, hypocentre location and seismic moment tensor using sparse representation theory. Geophys. J. Int. 188, 1188–1202 (2012).

Kreimer, N., Stanton, A. & Sacchi, M. D. Tensor completion based on nuclear norm minimization for 5D seismic data reconstruction. Geophysics 78(6), V273–V284 (2013).

Candés, E. J., Wakin, M. B. & Boyd, S. P. Enhancing sparsity by reweighed l 1 minimization. Journal of Fourier Analysis and Applications 14(5), 877–905 (2008).

Chartrand, R. Exact reconstructions of sparse signals via nonconvex minimization. IEEE Signal Processing Letters 14(10), 707–710 (2007).

Chartrand, R. & Yin, W. Iteratively reweighed algorithms for compressive sensing. 33rd International Conference on Acoustics, Speech, and Signal Processing, 3869–3872 (2008).

Chen, X. J., Xu, F. M. & Ye, Y. Y. Lower bound theory of nonzero entries in solutions of l 2-l p minimization. SIAM J. Scientific Computing 32(5), 2832–2852 (2010).

Xu, Z. B., Zhang, H., Wang, Y., Chang, X. Y. & Liang, Y. L 1/2 regularizer. Science in China (Series F). 52(6), 1159–1169 (2010).

Chen, X. J., Ge, D. D., Wang, Z. Z. & Ye, Y. Y. Complexity of unconstrained L 2 -L p minimization. Math. Program. (Ser. A) 143, 371–383 (2014).

Chen, X. J. & Zhou, W. J. Convergence of the reweighted l 1 minimization algorithm for l 2-l p minimization. Computational Optimization and Applications 59, 47–61 (2014).

Lu, Z. S. Iterative reweighted minimization methods for regularized unconstrained nonlinear programming. Mathematical Programming 147, 277–307 (2014).

Wang, L. P., Chen, S. C. & Wang, Y. P. A unified algorithm for mixed l 2, p-minimizations and its application in feature selection. Computational Optimization and Applications 58, 409–421 (2014).

Yuan, Y. X. Numerical Methods for Nonlinear Programming (Shanghai Science and Technology Publication, Shanghai, 1993).

Dai, H. Matrix Theory (Science Press, Beijing, 2004).

Golub, G. H. & Loan, C. F. Matrix Computation (The Johns Hopkins University Press (3rd), 1996).

Barzilai, J. & Borwein, J. M. Two-point step size gradient methods. IMA Journal of Numerical Analysis 8, 141–148 (1988).

Wang, Y. F. & Yang, C. C. Accelerating migration deconvolution using a non-monotone gradient method. Geophysics 75, S131–S137 (2010).

van den, Berg, E. & Friedlander, M. P. Probing the Pareto frontier for basis pursuit solutions. SIAM Journal on Scientific Computing 31(2), 890–912 (2008).

Acknowledgements

We thank reviewers very much for their valuable suggestions and comments which help us improve our paper. This work is supported by National Natural Science Foundation of China under grant numbers 91630202, 11471159 and 61661136001.

Author information

Authors and Affiliations

Contributions

Y.F. designed the study. Y.F. and W. conducted experiments. Y.F. and W. wrote the paper. All authors contributed to synthetic data interpretation and provided significant input to the final manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, L., Wang, Y. A joint matrix minimization approach for seismic wavefield recovery. Sci Rep 8, 2188 (2018). https://doi.org/10.1038/s41598-018-20556-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-20556-1

This article is cited by

-

Deep learning for irregularly and regularly missing data reconstruction

Scientific Reports (2020)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.