Abstract

Quantum machine learning witnesses an increasing number of quantum algorithms for data-driven decision making, a problem with potential applications ranging from automated image recognition to medical diagnosis. Many of those algorithms are implementations of quantum classifiers, or models for the classification of data inputs with a quantum computer. Following the success of collective decision making with ensembles in classical machine learning, this paper introduces the concept of quantum ensembles of quantum classifiers. Creating the ensemble corresponds to a state preparation routine, after which the quantum classifiers are evaluated in parallel and their combined decision is accessed by a single-qubit measurement. This framework naturally allows for exponentially large ensembles in which, similar to Bayesian learning, the individual classifiers do not have to be trained. As an example, we analyse an exponentially large quantum ensemble in which each classifier is weighed according to its performance in classifying the training data, leading to new results for quantum as well as classical machine learning.

Introduction

In machine learning, a classifier can be understood as a mathematical model or computer algorithm that takes input vectors of features and assigns them to classes or ‘labels’. For example, the features could be derived from an image and the label assigns the image to the classes “shows a cat” or “shows no cat”. Such a classifier can be written as a function \(f:{\mathscr{X}}\to {\mathscr{Y}}\) mapping from an input space \({\mathscr{X}}\) to the space of possible labels \({\mathscr{Y}}\). The function can depend on a set of parameters θ, and training the classifier refers to fitting the parameters to sample data of input-label pairs in order to get a model \(f({\bf{x}};\theta ),{\bf{x}}\in {\mathscr{X}}\) that generalises from the data how to classify future inputs. It is by now common practise to consult not only one trained model but to train an ensemble of models \(f({\bf{x}};{\theta }_{i})\), i = 1, ..., E, and derive a collective prediction whose predictive power exceeds that of a single classifier1.

In the emerging discipline of quantum machine learning, a number of quantum algorithms for classification have been proposed2,3,4 and demonstrate how to train and use models for classification on a quantum computer. It is an open question how to cast such quantum classifiers into an ensemble framework that likewise harvests the strengths of quantum computing, and this article is a first step to answering this question. We will focus on a special type of quantum classifier here, namely a model where a set of parameters θ can be expressed by an n-qubit state |θ〉, and the classification result is encoded in a separate register of qubits. This format allows us to create a ‘superposition of quantum models’ \({\sum }_{\theta }|\theta \rangle \) and evaluate them in parallel. A state preparation scheme can be used to weigh each classifier, thereby creating the ensemble. This allows for the instantaneous evaluation of exponentially large quantum ensembles of quantum classifiers.

Exponentially large ensembles do not only have the potential to increase the predictive power of single quantum classifiers, they also offer an interesting perspective on how to circumvent the training problem in quantum machine learning. Training in the quantum regime relies on methods that range from sampling from quantum states4 to quantum matrix inversion2 and Grover search5. However, for complex optimisation problems where little mathematical structure is given (an important example being feed-forward neural networks), the translation of iterative methods such as backpropagation to efficient quantum algorithms is less straightforward (see ref.6). It is known from classical machine learning that one can avoid optimisation by exchanging it for integration: In Bayesian learning one has to solve an integral over all possible parameters instead of searching for a single optimal candidate. The idea of integrating over parameters can be understood as forming a collective decision by consulting all possible models from a certain family and weighing them according to their desired influence, which is the approach of the ensemble framework. In other words, quantum ensembles of quantum classifiers offer an interesting perspective on optimisation-free learning with quantum computers.

In order to illustrate the concept of quantum ensembles of quantum classifiers, we investigate an exponentially large ensemble inspired by Bayesian learning, in which every ensemble member is weighed according to its accuracy on the data set. We give a quantum circuit to prepare such a quantum ensemble for general quantum classifiers, from which the collective decision can be computed in parallel and evaluated from a single qubit measurement. It turns out that for certain models this procedure effectively constructs an ensemble of accurate classifiers (those that perform on the training set better than random guessing). It has been shown that in some cases, accurate but weak classifiers can build a strong classifier, and analytical and numerical investigations show that this may work. To our knowledge, this result has not been established in the classical machine learning literature and shows how quantum machine learning can stimulate new approaches for traditional machine learning as well.

Background and related results

Before introducing the quantum ensemble framework as well as the example of an accuracy-weighed ensemble, we provide a review of classical results on ensemble methods. An emphasis lies on studies that analyse asymptotically large ensembles of accurate classifiers, and although no rigorous proofs are available, we find strong arguments for the high predictive power of large collections of weak learners. The problem we will focus on here is a supervised binary pattern classification task: given a dataset \({\mathscr{D}}=\{({{\bf{x}}}^{\mathrm{(1)}},{y}^{\mathrm{(1)}}),...,\,({{\bf{x}}}^{(M)},{y}^{(M)})\}\) with inputs \({{\bf{x}}}^{(m)}\,\in \,{{\mathbb{R}}}^{N}\) and outputs or labels \({y}^{(m)}\in \{-1,1\}\) for m = 1, ..., M, as well as a new input \(\tilde{{\bf{x}}}\), the goal is to predict the unknown label \(\tilde{y}\). Consider a classifier

\[y=f({\bf{x}};\theta ) \qquad (1)\]

with input \({\bf{x}}\in {\mathscr{X}}\) and parameters θ. As mentioned above, the common approach in machine learning is to choose a model by fitting the parameters to the data \({\mathscr{D}}\). Ensemble methods are based on the notion that allowing only one final model θ for prediction, however intricate the training procedure is, will neglect the strengths of other candidates even if they have an overall worse performance. For example, one model might have learned how to deal with outliers very well, but at the expense of being slightly worse in predicting the rest of the inputs. This ‘expert knowledge’ is lost if only one winner is selected. The idea is to allow for an ensemble or committee \( {\mathcal E} \) of trained models (sometimes called ‘experts’ or ‘members’) that take the decision for a new prediction together. Considering how familiar this principle is in our societies, it is surprising that this thought only gained widespread attention as late as the 1990s.

Many different proposals have been put forward of how to use more than one model for prediction. The proposals can be categorised along two dimensions7, first the selection procedure they apply to obtain the ensemble members, and second the decision procedure defined to compute the final output (see Fig. 1). Note that here we will not discuss ensembles built from different machine learning methods but consider a parametrised model family as given by Eq. (1) with fixed hyperparameters. An example is a neural network with a fixed architecture and adjustable weight parameters. A very straight-forward strategy of constructing an ensemble is to train several models and choose a prediction according to their majority vote. More intricate variations are popular in practice and have interesting theoretical foundations. Bagging8 trains classifiers on different subsamples of the training set, thereby reducing the variance of the prediction. AdaBoost9,10 trains subsequent models on the part of the training set that was misclassified previously and applies a given weighing scheme, which can be understood to reduce the bias of the prediction. Mixtures of experts11 train a number of classifiers using a specific error function and in a second step train a ‘gating network’ that defines the weighing scheme. For all these methods, the ensemble classifier can be written as

\[\tilde{y}={\rm{sgn}}\left(\sum _{\theta \in {\mathcal E} }{w}_{\theta }\,f(\tilde{x};\theta )\right). \qquad (2)\]

The principle of ensemble methods is to select a set of classifiers and combine their predictions to obtain a better performance in generalising from the data. Here, the N classifiers are considered to be parametrised functions from a family {f (x; θ)}, where the set of parameters θ solely defines the individual model. The dataset \({\mathscr{D}}\) is consulted in the selection procedure and sometimes also plays a role in the decision procedure where a label \(\tilde{y}\) for a new input \(\tilde{x}\) is chosen.

The coefficients w θ weigh the decision \(f(\tilde{x};\theta )\in \{-\mathrm{1,}\,\mathrm{1\}}\) of each model in the ensemble \( {\mathcal E} \) specified by θ, while the sign function assigns class 1 to the new input if the weighed sum is positive and −1 otherwise. It is important for the following to rewrite this as a sum over all E possible parameters. Here we will use a finite number representation and limit the parameters to a certain interval to get the discrete sum

\[\tilde{y}={\rm{sgn}}\left(\sum _{\theta =0}^{E-1}{w}_{\theta }\,f(\tilde{x};\theta )\right). \qquad (3)\]

In the continuous limit, the sum has to be replaced by an integral. In order to obtain the ensemble classifier of Eq. (2), the weights \({w}_{\theta }\) which correspond to models that are not part of the ensemble \( {\mathcal E} \) are set to zero. Given a model family f, an interval for the parameters as well as a precision to which they are represented, an ensemble is therefore fully defined by the set of weights \(\{{w}_{0},\ldots ,{w}_{E-1}\}\).
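The weighted vote over a discretised parameter range can be sketched classically as follows. This is a minimal illustration rather than the authors' implementation: the one-parameter base model `perceptron`, the helper names and the equally spaced grid are assumptions made for the example.

```python
def sgn(z):
    """Sign convention of the text: class 1 for a positive sum, -1 otherwise."""
    return 1 if z > 0 else -1

def perceptron(x, theta):
    """Hypothetical 1-D base model f(x; theta) = sgn(theta * x)."""
    return sgn(theta * x)

def parameter_grid(low, high, num):
    """Finite-precision representation: num equally spaced values in [low, high]."""
    step = (high - low) / (num - 1)
    return [low + i * step for i in range(num)]

def ensemble_predict(x_new, thetas, weights, model=perceptron):
    """Weighted vote over all E parameter values; a zero weight removes a model."""
    total = sum(w * model(x_new, t) for t, w in zip(thetas, weights))
    return sgn(total)

thetas = parameter_grid(-1.0, 1.0, 8)
# Example selection rule (an assumption): keep only models with positive slope.
weights = [1.0 if t > 0 else 0.0 for t in thetas]
prediction = ensemble_predict(0.7, thetas, weights)
```

Setting a weight to zero is all that is needed to exclude a model, so any selection procedure reduces to a choice of the weight list.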

Writing a sum over all possible models provides a framework to think about asymptotically large ensembles which can be realised by quantum parallelism. Interestingly enough, this formulation is also very close to another paradigm of classification, the Bayesian learning approach12,13. Given a training dataset \({\mathscr{D}}\) as before and understanding x as well as y as random variables, the goal of classification in the Bayesian setting is to find the conditional probability distribution

\[p(x,y|{\mathscr{D}})=\int p(x,y|\theta )\,p(\theta |{\mathscr{D}})\,d\theta , \qquad (4)\]

from which the prediction can be derived, for example by a Maximum A Posteriori estimate. The first part of the integrand, p(x, y|θ), is the probability of an input-label pair to be observed given the set of parameters for the chosen model. The correspondence of Eqs (3) and (4) becomes apparent if one associates f(x; θ) with p(x, y|θ) and interprets \({w}_{\theta }\) as an estimator for the posterior \(p(\theta |{\mathscr{D}})\) of θ being the true model given the observed data. If we also consider different model families specified by the hyperparameters, this method turns into Bayesian Model Averaging, which is sometimes included in the canon of ensemble methods (although being based on a rather different theoretical foundation14).

Beyond the transition to a Bayesian framework, increasing the size of the ensemble to include all possible parameters has been studied in different contexts. In some cases adding accurate classifiers has been shown to increase the performance of the ensemble decision. Accurate classifiers have an accuracy a (estimated by the number of correctly classified test samples divided by the total samples of the test set) of more than 0.5, and are thus better than random guessing, which means that they have ‘learned’ the pattern of the training set to at least a small extent. The most well-known case has been developed by Schapire9 leading to the aforementioned AdaBoost algorithm where a collection of weak classifiers with accuracy slightly better than 0.5 can be turned into a strong classifier that is expected to have a high predictive power. The advantage here is that weak classifiers are comparably easy to train and combine. But people thought about the power of weak learners long before AdaBoost. The Condorcet Jury Theorem from 1785 states that considering a committee of judges where each judge has a probability p with p > 0.5 to reach a correct decision, the probability of a correct collective decision by majority vote will converge to 1 as the number of judges approaches infinity. This idea has been applied to ensembles of neural networks by Hansen and Salamon15. If all ensemble members have a likelihood of p to classify a new instance correctly, and their errors are uncorrelated, the probability that the majority rule classifies the new instance incorrectly is given by

\[\sum _{k > E/2}^{E}\binom{E}{k}{p}^{E-k}{(1-p)}^{k},\]

where E is again the ensemble size. The convergence behaviour is plotted in Fig. 2 (left) for different values of p. The assumption of uncorrelated errors is idealistic, since some data points will be more difficult to classify than others and therefore tend to be misclassified by a large proportion of the ensemble members. Hansen and Salamon argue that for the highly overparametrised neural network models they consider as base classifiers, training will get stuck in different local minima, so that the ensemble members will be sufficiently diverse in their errors.
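The convergence of the majority-vote error can be checked numerically. The sketch below evaluates the binomial tail for independent members that are each correct with probability p; the function name is an assumption made for the example, and the independence of errors is the idealisation discussed in the text.

```python
from math import comb

def majority_vote_error(p, E):
    """Probability that more than half of E independent members are wrong,
    when each member is correct with probability p."""
    return sum(comb(E, k) * (1 - p) ** k * p ** (E - k)
               for k in range(E // 2 + 1, E + 1))

# Error of the majority vote for growing (odd) ensemble sizes at p = 0.6
errors = [majority_vote_error(0.6, E) for E in (1, 11, 101)]
```

For p = 0.6 the entries of `errors` decrease with the ensemble size, while for p < 0.5 the same function yields an error that grows towards 1.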

Left: Prediction error when increasing the size of an ensemble of classifiers each of which has an accuracy p. Asymptotically, the error converges to zero if p > 0.5. Right: For p > 0.5, the odds ratio p/(1 − p) grows slower than its square. Together with the results from Lam et al. described in the text, this is an indication that adding accurate classifiers to an ensemble has a high chance to increase its predictive power.

A more realistic setting would also assume that each model has a different prediction probability p (that we can measure by the accuracy a), which has been investigated by Lam and Suen16. The change in prediction power with the growth of the ensemble obviously depends on the predictive power of the new ensemble member, but its sign can be determined. Roughly stated, adding two classifiers with accuracies \({a}_{1}\), \({a}_{2}\) to an ensemble of size 2n will increase the prediction power if the value of \(\frac{{a}_{1}{a}_{2}}{(1-{a}_{1})(1-{a}_{2})}\) is not less than the odds ratio \(\frac{{a}_{i}}{(1-{a}_{i})}\) of any ensemble member, i = 1, …, E. When plotting the odds ratio and its square in Fig. 2 (right), it becomes apparent that for all \({a}_{i}\) > 0.5 chances are high to increase the predictive power of the ensemble by adding a new weak learner. Together, the results from the literature suggest that constructing large ensembles of accurate classifiers can lead to a strong combined classifier.

Before proceeding to quantum models, another result is important to mention. If we consider all possible parameters θ in the sum of Eq. (3) and assume that the model defined by θ has an accuracy a θ on the training set, the optimal weighing scheme17 is given by

\[{w}_{\theta }=\,\mathrm{log}\,\frac{{a}_{\theta }}{1-{a}_{\theta }}. \qquad (5)\]

It is interesting to note that this weighing scheme corresponds to the weights chosen in AdaBoost for each trained model, where they are derived from what seems to be a different theoretical objective.
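To get a feeling for such a weighing rule, the sketch below assumes the log-odds form w = log(a / (1 − a)), which is how this optimal rule is commonly stated; the natural logarithm and the function name are assumptions made for the example.

```python
import math

def log_odds_weight(a):
    """Assumed optimal weight for a model with training accuracy 0 < a < 1."""
    return math.log(a / (1.0 - a))

# Weights for a poor, a random-guessing and a good classifier
weights = {a: log_odds_weight(a) for a in (0.3, 0.5, 0.7)}
```

Under this assumption a random guesser receives weight zero, and a model with accuracy below 0.5 receives a negative weight, which flips its vote.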

Results

The main idea of this paper is to cast the notion of ensembles into a quantum algorithmic framework, based on the idea that quantum parallelism can be used to evaluate ensemble members of an exponentially large ensemble in one step.

The quantum ensemble

Consider a quantum routine \({\mathscr{A}}\) which ‘computes’ a model function f(x; θ),

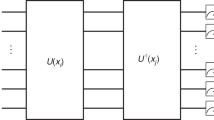

which we will call a quantum classifier in the following. The last qubit |f(x; θ)〉 encodes class f(x; θ) = −1 in state |0〉 and class 1 in state |1〉. Note that it is not important whether the registers |x〉, |θ〉 encode the classical vectors x, θ in the amplitudes or qubits of the quantum state. If encoding classical information into the binary sequence of computational basis states (i.e. x = 2 → 010 → |010〉), every function f(x; θ) a classical computer can compute efficiently could in principle be translated into a quantum circuit \({\mathscr{A}}\). This means that every efficiently computable classifier leads to an efficient quantum classifier (possibly with large polynomial overhead). An example for a quantum perceptron classifier can be found in ref.18, while feed-forward neural networks have been considered in ref.19. With this definition of a quantum classifier, \({\mathscr{A}}\) can be applied in parallel to a superposition of parameter states,

\[{\mathscr{A}}\,|{\bf{x}}\rangle \frac{1}{\sqrt{E}}\sum _{\theta }|\theta \rangle |0\rangle \to |{\bf{x}}\rangle \frac{1}{\sqrt{E}}\sum _{\theta }|\theta \rangle |f({\bf{x}};\theta )\rangle .\]

For example, given θ ∈ [a, b], the expression \(\frac{1}{\sqrt{E}}{\sum }_{{\boldsymbol{\theta }}}|\theta \rangle \) could be a uniform superposition,

\[\frac{1}{\sqrt{{2}^{\tau }}}\sum _{i=0}^{{2}^{\tau }-1}|i\rangle ,\]

where each computational basis state |i〉 corresponds to a τ-bit binary representation of the parameter, dividing the interval that limits the parameter values into \({2}^{\tau }\) candidates (see Fig. 3).
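The correspondence between basis states and parameter values can be made concrete with a short sketch. The text does not fix the exact discretisation, so the equally spaced grid that includes both interval endpoints, as well as the function names, are assumptions made for the example.

```python
def basis_label(i, tau):
    """Binary string labelling the computational basis state |i> of tau qubits."""
    return format(i, "0{}b".format(tau))

def index_to_parameter(i, tau, low, high):
    """Map index i in {0, ..., 2**tau - 1} to a parameter value in [low, high]."""
    num = 2 ** tau
    if not 0 <= i < num:
        raise ValueError("index outside the tau-qubit register")
    return low + i * (high - low) / (num - 1)

tau = 3
candidates = [(basis_label(i, tau), index_to_parameter(i, tau, -1.0, 1.0))
              for i in range(2 ** tau)]
```

With three qubits the register holds eight candidate parameters; every additional qubit doubles the resolution of the interval.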

As explained before, an ensemble method can be understood as a weighing rule for each model in the sum of Eq. (3). We will therefore require a second quantum routine, \({\mathscr{W}}\), which turns the uniform superposition into a non-uniform one,

\[{\mathscr{W}}\,|{\bf{x}}\rangle \frac{1}{\sqrt{E}}\sum _{\theta }|\theta \rangle |0\rangle \to |{\bf{x}}\rangle \frac{1}{\sqrt{\chi E}}\sum _{\theta }\sqrt{{w}_{\theta }}|\theta \rangle |0\rangle ,\]

weighing each model θ by a classical probability \({w}_{\theta }\). We call this routine a quantum ensemble since the weights define which model contributes to what extent to the combined decision. The normalisation factor χ ensures that \({\sum }_{\theta }\frac{{w}_{\theta }}{\chi E}=1\). Note that this routine can be understood as a state preparation protocol of a qsample.

Together, the quantum routines (\({\mathscr{A}}\), \({\mathscr{W}}\)) define the quantum ensemble of quantum classifiers, by first weighing the superposition of models (in other words the basis states in the parameter register) and then computing the model predictions for the new input \(\tilde{{\bf{x}}}\) in parallel. The final quantum state is given by

\[|\tilde{{\bf{x}}}\rangle \frac{1}{\sqrt{\chi E}}\sum _{\theta }\sqrt{{w}_{\theta }}|\theta \rangle |f(\tilde{{\bf{x}}};\theta )\rangle .\]

The measurement statistics of the last qubit contain the ensemble prediction: The chance of measuring the qubit in state 0 is the probability of the ensemble deciding for class −1 and is given by

\[p(\tilde{y}=-1)=\sum _{\theta \in { {\mathcal E} }^{-}}\frac{{w}_{\theta }}{\chi E},\]

while the chance of measuring the qubit in state 1 reveals \(p(\tilde{y}=1)\) and is given by

\[p(\tilde{y}=1)=\sum _{\theta \in { {\mathcal E} }^{+}}\frac{{w}_{\theta }}{\chi E},\]

where \({ {\mathcal E} }^{\pm }\) is the subset of \( {\mathcal E} \) containing only models with \(f(\tilde{{\bf{x}}};\theta )=\pm 1\). After describing the general template, we will now look at how to implement a specific weighing scheme with a quantum routine \({\mathscr{W}}\) for general models \({\mathscr{A}}\) and analyse the resulting classifier in more detail.
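The measurement statistics of the output qubit can be mimicked classically by summing the normalised weights of all models that vote for each class. The following sketch assumes a hypothetical one-parameter base model and example weights; it illustrates the bookkeeping only and does not simulate the quantum circuit.

```python
def model(x, theta):
    """Hypothetical base classifier f(x; theta) = sgn(theta * x)."""
    return 1 if theta * x > 0 else -1

def class_probabilities(x_new, thetas, weights):
    """Return (p(y = -1), p(y = 1)) for the weighted ensemble."""
    norm = sum(weights)  # plays the role of chi * E
    p_plus = sum(w for t, w in zip(thetas, weights) if model(x_new, t) == 1) / norm
    p_minus = sum(w for t, w in zip(thetas, weights) if model(x_new, t) == -1) / norm
    return p_minus, p_plus

thetas = [-1.0, -0.5, 0.5, 1.0]
weights = [0.1, 0.2, 0.3, 0.4]  # example weights, chosen arbitrarily
p_minus, p_plus = class_probabilities(1.0, thetas, weights)
prediction = 1 if p_plus > p_minus else -1
```

On a quantum device these two numbers would be estimated from repeated measurements of the last qubit rather than computed by enumerating all models.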

Choosing the weights proportional to the accuracy

As an illustrative case study, we choose weights that are proportional to the accuracy a θ of each model, as measured on the training set. More precisely, this is the proportion of correct classifications over the number of data points

\[{a}_{\theta }=\frac{1}{M}\sum _{m=1}^{M}\frac{1}{2}|f({{\bf{x}}}^{(m)};\theta )+{y}^{(m)}|.\]

Note that while usually an estimate for the accuracy of a trained model is measured on a separate test or validation set, we require the accuracy here to build the classifier in the first place and therefore have to measure it on the training set. The goal of the quantum algorithm is to prepare a quantum state where each model represented by state |θ〉 has a probability to be measured that is proportional to its accuracy, w θ ∝ a θ .
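The training accuracy used as a weight can be computed as in the sketch below. For labels in {−1, 1}, the term |f + y| / 2 equals 1 for a correct classification and 0 otherwise. The base model and the toy dataset are assumptions made for the example.

```python
def model(x, theta):
    """Hypothetical base classifier f(x; theta) = sgn(theta * x)."""
    return 1 if theta * x > 0 else -1

def accuracy(theta, data):
    """Fraction of training pairs (x, y), y in {-1, 1}, classified correctly."""
    return sum(abs(model(x, theta) + y) / 2 for x, y in data) / len(data)

# Toy training set with one point (0.2, -1) on the 'wrong' side of the origin
data = [(-1.0, -1), (-0.5, -1), (0.2, -1), (0.5, 1), (1.0, 1)]
a_pos = accuracy(1.0, data)
a_neg = accuracy(-1.0, data)
```

In this toy example the two accuracies add up to one, because flipping the sign of the parameter flips every decision of the model.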

The weighing routine \({\mathscr{W}}\) can be constructed as follows. Required for computation is a system of (δ + 1) + τ + 1 + 1 qubits divided into four registers: the data register, the parameter register, the output register and the accuracy register,

Assume a quantum classification routine \({\mathscr{A}}\) is given. As a first step, τ Hadamards bring the parameter register into a uniform superposition, and a Hadamard is applied to the accuracy qubit:

Each |i〉 thereby encodes a set of parameters θ. We now ‘load’ the training pairs successively into the data register, compute the outputs in superposition by applying the core routine \({\mathscr{A}}\) and rotate the accuracy qubit by a small amount towards |0〉 or |1〉 depending on whether target output and actual output have the same value (i.e. by an XOR gate on the respective qubits together with a conditional rotation of the last qubit). The core routine and loading step are then uncomputed by applying the inverse operations. Note that one could alternatively prepare a training superposition \(\frac{1}{\sqrt{M}}{\sum }_{m}|{{\bf{x}}}^{(m)}\rangle \) and trace the training register out in the end. The costs remain linear in the number of training vectors times the bit-depth for each training vector for both strategies.

After all training points have been processed, the accuracy qubit is entangled with the parameter register and in state \(|{q}_{\theta }\rangle =\sqrt{{a}_{\theta }}|0\rangle +\sqrt{1-{a}_{\theta }}|1\rangle \). A postselective measurement on the accuracy qubit only accepts when it is in state |0〉 and repeats the routine otherwise. This selects the |0〉-branch of the superposition and leaves us with the state

where the normalisation factor χ is equal to the acceptance probability \({p}_{{\rm{acc}}}=\frac{1}{E}{\sum }_{\theta }{a}_{\theta }\). The probability of acceptance influences the runtime of the algorithm, since a measurement of the ancilla in 1 means we have to abandon the result and start the routine from scratch. We expect that choices of the parameter intervals and data pre-processing allow us to keep the acceptance probability sufficiently high for many machine learning applications, as most of the \({a}_{\theta }\) can be expected to be distributed around 0.5. This hints towards rejection sampling as a promising tool to translate the accuracy-weighted quantum ensemble into a classical method.
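A classical analogue of the postselection step is rejection sampling: draw a model uniformly, then accept it with probability equal to its accuracy. The sketch below is an illustration under that assumption, with hypothetical model labels and accuracies, and is not the quantum routine itself.

```python
import random

def acceptance_probability(accuracies):
    """Average accuracy, i.e. the chance that a uniformly drawn model is accepted."""
    return sum(accuracies) / len(accuracies)

def sample_accuracy_weighted(thetas, accuracies, rng):
    """Draw a model with probability proportional to its accuracy."""
    attempts = 0
    while True:
        attempts += 1
        i = rng.randrange(len(thetas))    # uniform draw over all models
        if rng.random() < accuracies[i]:  # accept, analogous to measuring |0>
            return thetas[i], attempts

rng = random.Random(0)                    # fixed seed for reproducibility
thetas = ["model_a", "model_b", "model_c"]
accuracies = [0.0, 0.25, 0.75]            # hypothetical training accuracies
counts = {t: 0 for t in thetas}
for _ in range(2000):
    theta, _ = sample_accuracy_weighted(thetas, accuracies, rng)
    counts[theta] += 1
```

The expected number of attempts per accepted sample is the inverse of the acceptance probability, which mirrors the repeat-until-success cost of the quantum routine.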

Now load the new input into the first δ qubits of the data register, apply the routine \({\mathscr{A}}\) once more and uncompute (and disregard) the data register to obtain

The measurement statistics of the last qubit now contain the desired value. More precisely, the expectation value of \({\mathbb{I}}\alpha \otimes {\sigma }_{z}\) is given by

and corresponds to the classifier in Eq. (3). Repeated measurements reveal this expectation value to the desired precision.

Why accuracies may be good weights

We will now turn to the question why the accuracies might be a good weighing scheme. Recall that there is a lot of evidence that ensembles of weak but accurate classifiers (meaning that \({a}_{\theta }\) > 0.5 for all θ) can lead to a strong classifier. The ensemble constructed in the last section, however, contains all sorts of models which did not undergo a selection procedure, and it may therefore contain a large, or even exponential, share of models with low accuracy or random guessing. It turns out that for a large class of model families, the ensemble effectively only contains accurate models. To our knowledge, this is a new result that is also interesting for classical ensemble methods.

Assume the core machine learning model has the property to be point symmetric in the parameters θ,

\[f({\bf{x}};-\theta )=-f({\bf{x}};\theta ).\]

This is true for linear models and neural networks with an odd number of layers such as a simple perceptron or an architecture with 2 hidden layers. Let us furthermore assume that the parameter space Θ is symmetric around zero, meaning that for each θ ∈ Θ there is also −θ ∈ Θ. These pairs are denoted by θ+ ∈ Θ+, θ− ∈ Θ−. With this notation one can write

From there it follows that \({\int }_{\theta \in {\rm{\Theta }}}f(x;\theta )\,d\theta =0\) and \(a({\theta }^{+})=1-a({\theta }^{-})\). To get an intuition, consider a linear model imposing a linear decision boundary in the input space. The parameters define the vector orthogonal to the decision boundary (in addition to a bias term that defines where the boundary intersects with the y-axis which we ignore for the moment). A sign change of all parameters flips the vector around; the linear decision boundary remains at exactly the same position, meanwhile all decisions are turned around (see Fig. 4).

For point symmetric models the expectation can be expressed as a sum over one half of the parameter space:

The result of this ‘effective transformation’ is to shift the weights from the interval [0, 1] to [−0.5, 0.5], with profound consequences. The transformation is plotted in Fig. 5. One can see that accurate models get a positive weight, while non-accurate models get a negative weight and random guessers vanish from the sum. The negative weight consequently flips the decision f (x; θ) of the ‘bad’ models and turns them into accurate classifiers. This is a linearisation of the rule mentioned in Eq. (5) as the optimal weight distribution for large ensembles (plotted in black for comparison).

Comparison of the effective weight transformation described in the text and the optimal weight rule17. Both rules flip the prediction of a classifier that has an accuracy of less than 0.5.

With this in mind we can rewrite the expectation value as

with the new weights

for parameters θ+ as well as θ−.
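The effect of point symmetry can be verified numerically. For f(x; −θ) = −f(x; θ) and a(−θ) = 1 − a(θ), each pair contributes a(θ)f(x; θ) + (1 − a(θ))(−f(x; θ)) = (2a(θ) − 1)f(x; θ), which is twice the shifted weight a(θ) − 1/2. The sketch below checks this identity for a two-parameter perceptron; the grid, the toy data and all names are assumptions made for the example, and normalisation constants are omitted.

```python
from itertools import product

def f(x, theta):
    """Perceptron sgn(w * x + w0); point symmetric as long as w * x + w0 != 0."""
    w, w0 = theta
    return 1 if w * x + w0 > 0 else -1

def accuracy(theta, data):
    return sum(1 for x, y in data if f(x, theta) == y) / len(data)

grid = [-0.75, -0.25, 0.25, 0.75]          # symmetric around zero, zero excluded
thetas = list(product(grid, grid))
data = [(-1.3, -1), (-0.6, -1), (0.4, 1), (1.1, 1), (0.9, -1)]
x_new = 0.1

# Sum over the full parameter space with the accuracies as weights
full_sum = sum(accuracy(t, data) * f(x_new, t) for t in thetas)

# Sum over one representative of every pair (theta, -theta), shifted weights
half = [t for t in thetas if t[0] > 0]
half_sum = sum((2 * accuracy(t, data) - 1) * f(x_new, t) for t in half)
```

Both sums agree up to rounding, and models with accuracy below 0.5 enter the second sum with a negative coefficient, so their decisions are effectively flipped.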

If one cannot assume a point-symmetric parameter space but still wants to construct an ensemble of all accurate models (i.e., \({a}_{\theta }\) > 0.5), an alternative to the above sketched routine \({\mathscr{W}}\) could be to construct an oracle that marks such models and then use amplitude amplification to create the desired distribution. A way to mark the models is to load every training vector into a predefined register and compute \(|f({{\bf{x}}}^{(m)};\theta )\rangle \) as before, but perform a binary addition on a separate register that “counts” the number of correctly classified training inputs. The register would be a binary encoding of the count, and hence require \(\lceil \,\mathrm{log}\,M\rceil \) qubits (as well as garbage qubits for the addition operation). If \(\mathrm{log}\,M=\mu \) for an integer μ, then the first qubit would be one if the number of correct classifications is larger than M/2 and zero otherwise. In other words, this qubit would flag the accurate models and can be used as an oracle for amplitude amplification. The optimal number of Grover iterations depends on the number of flagged models. In the best case, around a quarter of all models are accurate so that the optimal number of iterations is of the order of \(\sqrt{E/(\frac{1}{4}E)}=2\). Of course, this number has to be estimated before performing the Grover iterations. (If one can analytically prove that \(\frac{1}{2}E\) of all possible models will be accurate as in the case of point-symmetric functions, one can artificially extend the superposition to twice the size, prevent half of the subspace from being flagged and thereby achieve the optimal amplitude amplification scheme).
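The dependence on the share of flagged models can be illustrated with the standard amplitude amplification formula: starting from a uniform superposition in which a fraction s of the states is marked, k iterations yield a success probability of sin²((2k + 1) arcsin √s). The sketch below only evaluates this textbook expression; the function names are assumptions made for the example.

```python
import math

def success_probability(fraction_marked, iterations):
    """Chance of measuring a marked state after the given number of iterations."""
    angle = math.asin(math.sqrt(fraction_marked))
    return math.sin((2 * iterations + 1) * angle) ** 2

def best_iterations(fraction_marked):
    """Iteration count that brings the rotation angle closest to pi / 2."""
    angle = math.asin(math.sqrt(fraction_marked))
    return max(0, round(math.pi / (4 * angle) - 0.5))

quarter = [success_probability(0.25, k) for k in range(3)]
half = [success_probability(0.5, k) for k in range(3)]
```

When a quarter of the models is flagged, a single iteration reaches a marked state with certainty. When half of them are flagged, the success probability stays at one half for every number of iterations, which is why the text suggests doubling the superposition so that the flagged share becomes a quarter.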

Analytical investigation of the accuracy-weighted ensemble

In order to explore the accuracy-weighted ensemble classifier further, we conduct some analytical and numerical investigations for the remainder of the article. It is convenient to assume that we know the probability distribution p (x, y) from which the data is picked (that is either the ‘true’ probability distribution with which data is generated, or the approximate distribution inferred by some data mining technique). Furthermore, we consider the continuous limit ∑ → ∫. Each parameter θ defines decision regions in the input space, \({ {\mathcal R} }_{-1}^{\theta }\) for class −1 and \({ {\mathcal R} }_{1}^{\theta }\) for class 1 (i.e. regions of inputs that are mapped to the respective classes). The accuracy can then be expressed as

In words, this expression measures how much of the density of a class falls into the decision region proposed by the model for that class. Good parameters will propose decision regions that contain the high-density areas of a class distribution. The factor of 1/2 is necessary to ensure that the accuracy is always in the interval [0, 1] since the two probability densities are each normalised to 1.

The probability distributions we consider here will be of the form p(x, y = ±1) = g (x; μ±, σ±) = g± (x). They are normalised, \({\int }_{-\infty }^{\infty }{g}_{\pm }(x)dx=1\), and vanish asymptotically, g± (x) → 0 for x → −∞ and x → +∞. The hyperparameters μ−, σ− and μ+, σ+ define the mean or ‘position’ and the variance or ‘width’ of the distribution for the two classes −1 and 1. Prominent examples are Gaussians or box functions. Let G (x; μ±, σ±) = G± (x) be the integral of g (x; μ±, σ±), which fulfils G± (x) → 0 for x → −∞ and G± (x) → 1 for x → +∞. Two expressions following from this property which will become important later are

and

We consider a minimal toy example for a classifier, namely a perceptron model on a one-dimensional input space, f (x; w, w0) = sgn (wx + w0) with \(x,w,{w}_{0}\,\in \,{\mathbb{R}}\). While one parameter would be sufficient to mark the position of the point-like ‘decision boundary’, a second one is required to define its orientation. One can simplify the model even further by letting the bias w0 define the position of the decision boundary and introducing a binary ‘orientation’ parameter o ∈ {−1, 1} (as illustrated in Fig. 6),

For this simplified perceptron model the decision regions are given by

for the orientation o = 1 and

for o = −1. Our goal is to compute the expectation value

of which the sign function evaluates the desired prediction \(\tilde{y}\).

Inserting the definitions from above, as well as some calculus using the properties of p (x) brings this expression to

and evaluating the sign function for the two cases \(\tilde{x} > {w}_{0},\tilde{x} < {w}_{0}\) leads to

To analyse this expression further, consider the two class densities to be Gaussian probability distributions

The indefinite integral over the Gaussian distributions is given by

Inserting this into Eq. (12) we get an expectation value of

Figure 7 plots the expectation value for different inputs \(\tilde{x}\) for the case of two Gaussians with σ− = σ+ = 0.5, and μ− = −1, μ+ = 1. The decision boundary is at the point where the expectation value changes from a negative to a positive value, \({\mathbb{E}}[f(\hat{x};{w}_{0},o)]=0\). One can see that for this simple case, the decision boundary will be in between the two means, which we would naturally expect. This is an important finding, since it implies that the accuracy-weighted ensemble classifier works - arguably only for a very simple model and dataset. A quick calculation shows that we can always find the decision boundary midway between the two means if σ− = σ+. In this case the integrals in Eq. (13) evaluate to

and

with the integral over the error function

The black line plots the expectation value of the accuracy-weighed ensemble decision discussed in the text for the two class densities and for different new inputs x. A positive expectation value yields the prediction 1 while a negative expectation value predicts class −1. At \({\mathbb{E}}[f(x;{w}_{0},o)]=0\) lies the decision boundary. The plot shows that the model predicts the decision boundary where we would expect it, namely exactly between the two distributions.

Assuming that the mean and variance of the two class distributions are of reasonable (i.e. finite) value, the error function evaluates to 0 or 1 before the limit process becomes important, and one can therefore write

The expectation value for the case of equal variances therefore becomes

Setting \(\tilde{x}=\hat{\mu }={\mu }_{-}+0.5({\mu }_{+}-{\mu }_{-})\) turns the expectation value to zero; the point \(\hat{\mu }\) midway between the two means is shown to be the decision boundary. Simulations confirm that this is also true for other distributions, such as a square, exponential or Lorentz distribution, as well as for two-dimensional data (see Fig. 8).
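The position of the decision boundary for equal variances can be checked with a small numerical integration. The sketch assumes the simplified perceptron has the form sgn(o(x − w0)), that both classes are equally likely, and that the bias is integrated over a finite window [−10, 10] with the midpoint rule; these choices and all names are assumptions made for the example, and the result is left unnormalised.

```python
import math

def gaussian_cdf(x, mu, sigma):
    """Integral G(x) of a normalised Gaussian density."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def accuracy(w0, o, mu_minus, sigma_minus, mu_plus, sigma_plus):
    """Accuracy of sgn(o * (x - w0)) on the two class densities."""
    # For o = 1, inputs left of w0 are labelled -1 and inputs right of w0 are labelled 1.
    a_up = 0.5 * (gaussian_cdf(w0, mu_minus, sigma_minus)
                  + 1.0 - gaussian_cdf(w0, mu_plus, sigma_plus))
    return a_up if o == 1 else 1.0 - a_up

def ensemble_expectation(x_new, mu_minus, sigma_minus, mu_plus, sigma_plus,
                         low=-10.0, high=10.0, steps=4000):
    """Unnormalised accuracy-weighted vote, integrated over w0 and summed over o."""
    dw = (high - low) / steps
    total = 0.0
    for k in range(steps):
        w0 = low + (k + 0.5) * dw
        for o in (1, -1):
            vote = 1 if o * (x_new - w0) > 0 else -1
            a = accuracy(w0, o, mu_minus, sigma_minus, mu_plus, sigma_plus)
            total += a * vote * dw
    return total

def expectation_equal_widths(x_new):
    """Setting of the text: means -1 and 1, both widths equal to 0.5."""
    return ensemble_expectation(x_new, -1.0, 0.5, 1.0, 0.5)
```

Evaluating `expectation_equal_widths` on both sides of the midpoint gives values of opposite sign, and the value at the midpoint itself vanishes up to numerical error. Passing different widths to `ensemble_expectation` allows one to explore how the boundary moves in the asymmetric case.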

Perceptron classifier with 2-dimensional inputs and a bias. The ensemble consists of 8000 models, each of the three parameters taking 20 equally distributed values from [−1, 1]. The resolution to compute the decision regions was Δx1 = Δx2 = 0.05. The dataset was generated with Python scikit-learn’s blob function and both classes have the same variance parameter. One can see that in two dimensions, the decision boundary still lies in between the two means of \({\mu }_{-1}\) = [−1, 1] and \({\mu }_{1}\) = [1, −1].

The simplicity of the core model allows us to have a look into the structure of the expectation value. Figure 9 shows the components of the integrand in Eq. (11) for the expectation value, namely the accuracy, the core model function as well as their product. Example 1 shows the same variances σ+ = σ−, while Example 2 plots different variances. The plots show that for equal variances, the accuracy is a symmetric function centred between the two means, while for different variances, the function is highly asymmetric. If the two distributions are sufficiently close to each other, this has a noticeable impact on the position of the decision boundary, which will be shifted towards the flatter distribution. This might be a desired behaviour in some contexts, but is first and foremost an interesting result of the analytical investigation.
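The symmetry statement can be illustrated with a short numerical check. As a hedged sketch, it again assumes the threshold core model f(x; w0, o) = sgn(o(x − w0)), equal class priors and means fixed at ∓1; the helper names are hypothetical.

```python
import math

def gaussian_cdf(x, mu, sigma):
    """Cumulative distribution function of N(mu, sigma^2)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def accuracy(w0, o, sigma_neg, sigma_pos, mu_neg=-1.0, mu_pos=1.0):
    """Accuracy a(w0, o) of the assumed threshold model under equal priors."""
    acc_plus = 0.5 * ((1.0 - gaussian_cdf(w0, mu_pos, sigma_pos))
                      + gaussian_cdf(w0, mu_neg, sigma_neg))
    return acc_plus if o == 1 else 1.0 - acc_plus

def core_model(x, w0, o):
    """Assumed core model f(x; w0, o) = sgn(o * (x - w0))."""
    value = o * (x - w0)
    return (value > 0) - (value < 0)

def integrand(x_new, w0, sigma_neg, sigma_pos):
    """Sum over o of a(w0, o) * f(x_new; w0, o), the function under the integral."""
    return sum(accuracy(w0, o, sigma_neg, sigma_pos) * core_model(x_new, w0, o)
               for o in (1, -1))

def asymmetry(sigma_neg, sigma_pos, offsets=(0.25, 0.5, 1.0, 2.0)):
    """Largest |a(m + d, 1) - a(m - d, 1)| about the midpoint m = 0 of the means."""
    return max(abs(accuracy(d, 1, sigma_neg, sigma_pos)
                   - accuracy(-d, 1, sigma_neg, sigma_pos)) for d in offsets)

example_1 = asymmetry(0.5, 0.5)   # equal spreads, as in Example 1
example_2 = asymmetry(0.5, 2.0)   # class +1 is flatter, as in Example 2
print(example_1, example_2)
print(integrand(1.0, 0.0, 0.5, 0.5))
```

With equal spreads the asymmetry should vanish up to rounding error, whereas the second setting gives a clearly non-zero value, in line with the loss of symmetry described above.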

Detailed analysis of the classification of \(\tilde{x}\,\mathrm{=}\,1\) for two examples of normal data distributions. The upper Example 1 shows \(p(x,y=-1)={\mathscr{N}}(-1,0.5)\) and \(p(x,y=1)={\mathscr{N}}(1,0.5)\) while the lower Example 2 shows \(p(x,y=-1)={\mathscr{N}}(-1,0.5)\) and \(p(x,y=1)={\mathscr{N}}(1,2)\) (plotted each in the top left figure of the four). The top right figure in each block shows the classification of a given new input \(\tilde{x}\) for varying parameters b, plotted for o = 1 and o = −1 respectively. The bottom left shows the accuracy or classification performance a(w0, o = 1) and a(w0, o = −1) on the data distribution. The bottom right plots the product of the previous two for o = 1 and o = −1, as well as the resulting function under the integral. The prediction outcome is the integral over the black curve, or the total of the gray shaded areas. One can see that for distributions with different variances, the accuracies lose their symmetry and the decision boundary will therefore not lie in the middle between the two means.

In summary, analysing a very simple classifier in relation to one-dimensional Gaussian data distributions gives evidence that the weighted average ensemble classifier can lead to meaningful predictions for separated data, but there seems to be a sensitive dependency on the shape of the distributions. Further investigations would have to show how the accuracy-weighted ensemble classifier behaves with more complex base classifiers and/or realistic datasets. Low-resolution simulations with one-dimensional inputs confirm that nonlinear decision boundaries can in fact be learnt. However, computing the exact expectation value requires a huge computational effort. For example, the next more complex neural network model requires two hidden layers to be point symmetric, and with one bias neuron and for two-dimensional inputs the architecture already has seven or more weights. If each weight is encoded in only three qubits (including one qubit for the sign), we get an ensemble of \({2}^{21}\) members whose collective decision needs to be computed for an entire input space in order to determine the decision boundaries. Sampling methods could help to obtain approximations to this result, and would open these methods to classical applications as well.
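The size of this ensemble follows from simple counting, spelled out below for the minimal case of exactly seven weights. The grid used for the cost estimate (an input window of [−3, 3] in each dimension at a resolution of 0.05) is a hypothetical choice for illustration only.

```python
# Counting the ensemble members for the small network described above.
n_weights = 7            # minimal case: "seven or more weights"
qubits_per_weight = 3    # including one qubit for the sign

n_qubits = n_weights * qubits_per_weight        # qubits in the parameter register
values_per_weight = 2 ** qubits_per_weight      # distinct values of a single weight
ensemble_size = 2 ** n_qubits                   # one model per basis state

# Hypothetical evaluation grid: [-3, 3] x [-3, 3] at resolution 0.05.
points_per_axis = round(6 / 0.05)
model_evaluations = ensemble_size * points_per_axis ** 2

print(n_qubits, ensemble_size, model_evaluations)
```

Every additional weight multiplies the ensemble size by eight, which is why exhaustive classical evaluation quickly becomes impractical and sampling-based approximations become attractive.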

Discussion

This article proposed a framework to construct quantum ensembles of quantum classifiers which use parallelism in order to evaluate the predictions of exponentially large ensembles. The proposal leaves a lot of space for further research. First, as mentioned above, the quantum ensemble has interesting extensions to classical methods when considering approximations to compute the weighted sum over all its members’ predictions. Recent results on the quantum supremacy of Boson sampling show that the distributions of some state preparation routines (and therefore some quantum ensembles) cannot be computed efficiently on classical computers. Are there meaningful quantum ensembles for which a speedup can be proven? Are the rules of combining weak learners into a strong learner different in the quantum regime? Which types of ensembles can be generically prepared by quantum devices? Second, an important issue that we did not touch upon is overfitting. In AdaBoost, regularisation is equivalent to early stopping20, while Bayesian learning has inbuilt regularisation mechanisms. How does the accuracy-based ensemble relate to these cases? Is it likely to overfit when considering more flexible models? A third question is whether there are other (coherent) ways of defining a quantum ensemble for quantum machine learning algorithms that do not have the format of a quantum classifier as defined above. Can mixed states be used in this context?

As a final remark, we want to comment on how the quantum ensemble could be interesting in the context of near-term quantum computing with devices of the order of 100 qubits and limited circuit depth without fault-tolerance. While inference with quantum computing can be done with as few resources as a swap test, coherent training is usually a much more resource-intensive part of quantum machine learning algorithms2,21,22. Responses to this challenge include hybrid quantum-classical training schemes23,24 and quantum feedback25. This article suggests another path, namely to replace optimisation by an integration problem in which quantum parallelism can be leveraged. The only requirement is that a) the preparation of the weighted superposition of parameters as well as b) inference with the core quantum classifier can be implemented with reasonable error with a low-depth and low-width circuit. In the example of an accuracy-weighted ensemble this means that the training data as well as the parameters of a model must both be representable with the number of qubits accessible to the device, which makes the approach unsuitable for big data processing. However, the ensemble method as such has no further resource requirements, while we expect it to boost the performance of a quantum classifier similarly to ensemble methods in classical machine learning.

Data availability

Data from the numerical simulation can be made available upon request.

References

Dietterich, T. G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems, 1–15 (Springer 2000).

Rebentrost, P., Mohseni, M. & Lloyd, S. Quantum support vector machine for big data classification. Physical Review Letters 113, 130503 (2014).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum algorithms for supervised and unsupervised machine learning. arXiv preprint arXiv:1307.0411 (2013).

Amin, M. H., Andriyash, E., Rolfe, J., Kulchytskyy, B. & Melko, R. Quantum Boltzmann machine. arXiv preprint arXiv:1601.02036 (2016).

Kapoor, A., Wiebe, N. & Svore, K. Quantum perceptron models. In Advances In Neural Information Processing Systems, 3999–4007 (2016).

Rebentrost, P., Schuld, M., Petruccione, F. & Lloyd, S. Quantum gradient descent and Newton’s method for constrained polynomial optimization. arXiv preprint arXiv:1612.01789 (2016).

Kuncheva, L. I. Combining pattern classifiers: Methods and algorithms (John Wiley & Sons 2004).

Breiman, L. Random forests. Machine Learning 45, 5–32 (2001).

Schapire, R. E. The strength of weak learnability. Machine Learning 5, 197–227 (1990).

Freund, Y. & Schapire, R. E. A decision-theoretic generalization of on-line learning and an application to boosting. In European Conference on Computational Learning Theory, 23–37 (Springer, 1995).

Jacobs, R. A., Jordan, M. I., Nowlan, S. J. & Hinton, G. E. Adaptive mixtures of local experts. Neural computation 3, 79–87 (1991).

Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 521, 452–459 (2015).

Duda, R. O., Hart, P. E. & Stork, D. G. Pattern classification. (John Wiley & Sons, New York, 2012).

Minka, T. P. Bayesian model averaging is not model combination. Comment available electronically at http://www.stat.cmu.edu/minka/papers/bma.html (2000)

Hansen, L. K. & Salamon, P. Neural network ensembles. IEEE transactions on Pattern Analysis and Machine Intelligence 12, 993–1001 (1990).

Lam, L. & Suen, S. Application of majority voting to pattern recognition: An analysis of its behavior and performance. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans 27, 553–568 (1997).

Shapley, L. & Grofman, B. Optimizing group judgmental accuracy in the presence of interdependencies. Public Choice 43, 329–343 (1984).

Schuld, M., Sinayskiy, I. & Petruccione, F. How to simulate a perceptron using quantum circuits. Physics Letters A 379, 660–663 (2015).

Wan, K. H., Dahlsten, O., Kristjánsson, H., Gardner, R. & Kim, M. Quantum generalisation of feedforward neural networks. npj Quantum Information 3, 36 (2017).

Schapire, R. E. Explaining Adaboost. In Empirical inference, 37–52 (Springer 2013).

Wiebe, N., Braun, D. & Lloyd, S. Quantum algorithm for data fitting. Physical Review Letters 109, 050505 (2012).

Schuld, M., Fingerhuth, M. & Petruccione, F. Implementing a distance-based classifier with a quantum interference circuit. EPL (Europhysics Letters) 119, 60002 (2017).

Benedetti, M., Realpe-Gómez, J., Biswas, R. & Perdomo-Ortiz, A. Quantum-assisted learning of graphical models with arbitrary pairwise connectivity. arXiv preprint arXiv:1609.02542 (2016).

Romero, J., Olson, J. P. & Aspuru-Guzik, A. Quantum autoencoders for efficient compression of quantum data. Quantum Science and Technology 2, 045001 (2017).

Alvarez-Rodriguez, U., Lamata, L., Escandell-Montero, P., Martín-Guerrero, J. D. & Solano, E. Supervised quantum learning without measurements. Scientific Reports 7, 13645 (2017).

Acknowledgements

This work was supported by the South African Research Chair Initiative of the Department of Science and Technology and the National Research Foundation. We thank Ludmila Kuncheva and Thomas Dietterich for their helpful comments.

Author information

Contributions

M.S. and F.P. both contributed towards the design of the model. M.S. was responsible for analytical and numerical investigations. Both authors reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schuld, M., Petruccione, F. Quantum ensembles of quantum classifiers. Sci Rep 8, 2772 (2018). https://doi.org/10.1038/s41598-018-20403-3

DOI: https://doi.org/10.1038/s41598-018-20403-3
