Abstract

An optimization method to reconstruct the object profile is performed by using a flexible laser plane and bi-planar references. The bi-planar references are considered as flexible benchmarks to realize the transforms among two world coordinate systems on the bi-planar references, the camera coordinate system and the image coordinate system. The laser plane is confirmed by the intersection points between the bi-planar references and laser plane. The 3D camera coordinates of the intersection points between the laser plane and a measured object are initially reconstructed by the image coordinates of the intersection points, the intrinsic parameter matrix and the laser plane. Meanwhile, an optimization function is designed by the parameterized differences of the reconstruction distances with the help of a target with eight markers, and the parameterized reprojection errors of feature points on the bi-planar references. The reconstruction method with the bi-planar references is evaluated by the difference comparisons between true distances and standard distances. The mean of the reconstruction errors of the initial method is 1.01 mm. Moreover, the mean of the reconstruction errors of the optimization method is 0.93 mm. Therefore, the optimization method with the bi-planar references has great application prospects in the profile reconstruction.

Similar content being viewed by others

Introduction

The vision measurement including structured light is an effective non-contact 3D measurement method1,2,3,4,5,6,7. As a laser projector provides more strong and narrowband illumination than a normal digital projector, the laser projector is considered as a flexible symbol for projecting a laser plane onto the object to be measured8,9. The laser curve between the measured object and the laser plane contains the depth information about both the intersection position and the measured object. Therefore, the measurement system based on the structured light is favored by many researchers.

The vision measurement based on the structured light is widely studied due to the advantages of wide measurement range, reasonable test speed and precision in visual measurement approaches10,11,12. Huynh13 presents a calibration method for the structured light system derived from the projector. Three collinear world points contribute the cross ratio value in the image, which is the same with the cross ratio calculated by the world points. The recovery matrix of the stripe plane is provided according to the projection from the structured light to the image. A double cross ratio method is also reported by Wei14 to achieve the calibration of the structured-light-stripe vision sensor. Wei15 employs the vanishing points and vanishing lines, which are derived from a target with parallel lines, to improve the laser plane calibration. Li16 designs a flexible laser scanning system including an industry robot arm and a laser scanner. The rotations and translations of the robot arm are considered in the scanning model. Niola17 describes a calibration for the laser scanner in robotic applications. A target with the known movement is employed to model the geometry of the laser emitter and camera. An approach is illustrated by Le18 by fusing the structured illumination with the data to reconstruct the 3D sharp edge and realize the automatic inspection and reconstruction. In addition, an algorithm is introduced to reconstruct the 3D object profile with the sharp edge. A reconstruction method of 3D profile is proposed by Ma19 on the basis of a nonlinear iterative optimization to reduce the errors from the lens distortion. According to the shape of projection light, the vision measurement methods based on the structured light can be divided into four parts: point structured light, line structured light, grating structured light and coded structured light. The point structured light method can obtain the 3D data of a point. However, it is slow for the large object measurement. The methods of grating or coded structured light are generally based on the digital light processing (DLP) projector and the camera20,21. Villa22 proposes a reference-plane based method to reconstruct the 3D data on the object. The depth is performed by moving the flat plate on a linear stage. Then, a pattern of crossed gratings along the x and y directions is projected on the measured object. The x and y coordinates are determined by the fringe phases generated from the Fourier transform and the inverse Fourier transform. Zhang23 presents a method considering the digital projector as a camera. The digital micro-mirror device (DMD) image is established by the mapping between the (Charge Coupled Device) CCD pixels and DMD pixels. Then the intrinsic parameter matrix of the digital projector is calibrated as the matrix of a camera. The extrinsic parameter matrices are derived from the Zhang’s method. Finally, the 3D coordinates are reconstructed by the image coordinates and the extrinsic parameter matrices. Hu24 introduces an approach to calibrate the projector-camera system. The absolute phase map is solved by a three-step algorithm. Then, a flat plate is designed on a linear stage and driven by a stepper motor. By moving the flat plate and the projection images, four unknown parameters are calibrated for the measurement system. Finally, the system parameters are optimized by a coordinate measurement machine (CMM), a plate with holes and an iterative algorithm. The digital-projector-based methods take the advantages of high efficiency and plenty of data. However, as the pattern of the digital projector is generated from a light bulb, the coded light pattern of the digital projector tends to be impacted by the illuminations from environment, such as the sunlight. After the calibration, the relative position between the digital projector and the camera is fixed. As the planar laser projector contributes the narrow-band and high-density structured light, it is appropriate to measure the complex surface with a moderate test speed, by avoiding the environmental interference illuminations.

Camera calibration is the basis of the accuracy of a vision measurement system. 3D recovery depends on the single or multi-view images captured by a camera. Therefore, the camera calibration has also attracted researchers to improve the 3D reconstruction accuracy. At present, the calibration methods are mainly divided into 1D calibration25,26, 2D calibration27,28,29 and 3D calibration30. The 1D calibration reference is simple in structure and easy to be manufactured, but the calibration accuracy is the lowest due to the lack of information on the 1D reference. Although the structure of the 2D calibration reference is a little more complicated than the one of the 1D reference, it is convenient to be made and moved to different places with the advantage of high calibration accuracy. The 3D calibration reference provides the highest accuracy for the camera calibration. Nevertheless, the 3D calibration reference is complex to be made and suitable for specific situations. Although a 2D reference can be used to calibrate the projection laser plane31, many images of the planar reference in different positions should be captured to calibrate only one laser plane. One target can provide sufficient number of features for solving the intersection points, when the relative position between the camera and the projector is fixed in previous work. We focus on the other situation, which is to reconstruct the intersection points from a flexible laser plane. There is only one intersection line between the flexible laser plane and the target. As the flexible laser plane is determined by at least two lines on the plane, a reconstruction method adopting the bi-planar references is proposed to perform the laser plane calibration in the camera coordinate system. The bi-planar method with two planar references contributes four main advantages that belong to the 2D reference and 3D reference. As the two planar references locate on different planes, the feature points on the bi-planar references are similar to the ones on the 3D reference. Thus, it should be noted that the calibration and reconstruction are achieved by only one image of the camera. Moreover, the calibration reference consists of two planar references, which are easier to be manufactured than the 3D references. Then, the large 3D or 2D references are complicated to be prepared for the profile measurement in a large view field. The reconstruction method of the bi-planar references is easy to be extended to the method of n-planar references. The n-planar references method extends the effective measurement in a larger view field. However, the large 3D or 2D references for a larger view field are replaced by the small 2D references that are convenient to be realized in the reconstruction. Finally, the laser plane is flexible, e.g. hand-held, as the laser plane that is projected to the bi-planar references can be solved in only one image.

An optimization reconstruction method of the object profile is proposed for the vision measurement using the flexible planar laser and bi-planar references. Two planar references are separately distributed at the left and right sides of the measured object. The two planar references are non-coplanar in space. First, the laser plane, generated from a laser projector, is projected onto the measured object. Therefore, the laser plane intersects with the object as well as the planar references providing an intersection curve and two intersection lines. Then, in the camera coordinate system, the flexible laser plane is modeled by the projections of feature points on the two planar references and the image coordinates of the laser intersection points. Finally, the 3D coordinates of the measured object are determined by the projection of the intersection curve and further enhanced by the optimization function. The optimization method is compared with the initial method by the reconstructed distance errors to verify the accuracy of the reconstruction method.

Methods

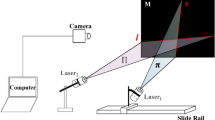

For the 3D profile reconstruction problem, a random laser plane is projected to the measured object and two planar references in Fig. 1. Two world coordinate systems OW1-XW1YW1ZW1, OW2-XW2YW2ZW2, the camera coordinate system OC-X CY CZ C and the image coordinate system OI-XIYIZI are defined on the two planar references, the camera and the image, respectively. Two checker-board-pattern references are considered as the transform bridges among the measured object, the laser plane and the camera.

The flexible laser plane intersects two planar references with two lines. According to the camera pinhole model32, the 3D points on the intersection lines and the projected image points are represented by

where \({{\bf{X}}}_{i}^{({\rm{W}},{k})}={[{X}_{i}^{({\rm{W}},{k})},{Y}_{i}^{({\rm{W}},{k})},0,1]}^{{\rm{T}}}\) is the 3D point of the intersection laser line on the planar reference. \({{\bf{x}}}_{i}^{({\rm{IW}},{k})}={[{x}_{i}^{({\rm{IW}},{k})},{y}_{i}^{({\rm{IW}},{k})},1]}^{{\rm{T}}}\) is the projection point of \({{\bf{X}}}_{i}^{({\rm{W}},{k})}\) in the image coordinate system. \({{\rm{R}}}^{({\rm{W}},{k})}={[{r}_{pq}^{({\rm{W}},{k})}]}_{3\times 3}\), \({{\bf{t}}}^{({\rm{W}},{k})}={[{t}_{1}^{({\rm{W}},{k})},{t}_{2}^{({\rm{W}},{k})},{t}_{3}^{({\rm{W}},{k})}]}^{{\rm{T}}}\) are the rotation matrix and translation vector of the reference, from two world coordinate systems to the camera coordinate system. \({\rm{A}}=[\begin{array}{ccc}\alpha & \gamma & {u}_{0}\\ 0 & \beta & {v}_{0}\\ 0 & 0 & 1\end{array}]\) is the intrinsic parameters of the camera. \({{\rm{R}}}^{({\rm{W}},{k})},{{\bf{t}}}^{({\rm{W}},{k})}\) and A are generated from the Zhang’s method33. s(k) is the scale factor. k = 1, 2 correspond to the coordinate systems Ow1-X w1Y w1Z w1, Ow2-X w2Y w2Z w2, respectively.

According to the projectivity in Eq. (1), the first and second coordinates of \({{\bf{X}}}_{i}^{({\rm{W}},{k})}\) are given by33

where \({{\rm{R}}}^{({\rm{W}},{k})}=[{{\bf{r}}}_{p1}^{({\rm{W}},{k})}\quad {{\bf{r}}}_{p2}^{({\rm{W}},{k})}\quad {{\bf{r}}}_{p3}^{({\rm{W}},{k})}]\). Thus, \({{\bf{X}}}_{i}^{({\rm{W}},{k})}={[{X}_{i}^{({\rm{W}},{k})},{Y}_{i}^{({\rm{W}},{k})},0,1]}^{{\rm{T}}}\), and \({X}_{i}^{({\rm{W}},{k})},{Y}_{i}^{({\rm{W}},{k})}\) are derived from Eq. (2).

Therefore, the 3D point of the intersection laser line in the camera coordinate system is expressed by34

where \({{\bf{X}}}_{i}^{({\rm{C}},{k})}\) is the 3D point on the intersection laser line.

Let a point set \({{\bf{X}}}_{i}^{({\rm{C}})}=[{{\bf{X}}}_{i}^{({\rm{C}},1)},{{\bf{X}}}_{i}^{({\rm{C}},2)}]\). The points \({{\bf{X}}}_{i}^{({\rm{C}},1)}\) and \({{\bf{X}}}_{i}^{({\rm{C}},2)}\) are derived from different lines. Moreover, the 3D points \({{\bf{X}}}_{i}^{({\rm{C}})}\) of the intersection laser lines locate on the laser plane. Therefore,

where Π = [Π1, Π2, Π3, 1]T is the coordinate of the laser plane, which is determined by the point sets \({{\bf{X}}}_{i}^{({\rm{C}},1)}\) and \({{\bf{X}}}_{i}^{({\rm{C}},2)}\) on the two intersection laser lines. 02 is a 2-dimensional zero vector.

The set of the 3D points \({{\bf{X}}}_{i}^{({\rm{C}})}\) of the intersection laser lines is \({\rm{Q}}={[{{\bf{X}}}_{1}^{({\rm{C}})},{{\bf{X}}}_{2}^{({\rm{C}})},...{{\bf{X}}}_{i}^{({\rm{C}})},...,{{\bf{X}}}_{n}^{({\rm{C}})}]}^{T}\). As the points in Q are located on two different intersection lines, from Eq. (4), we have

where 02n is a 2n-dimensional zero vector. The laser plane Π can be solved by the singular value decomposition (SVD)35.

The 3D point \({{\bf{X}}}_{i}^{({\rm{M}})}\) on the measured object as well as on the laser plane obeys to34

Moreover, in view of the camera pinhole model32, the 3D point \({{\bf{X}}}_{i}^{({\rm{M}})}\) on the measured object in the camera coordinate system also satisfies the projection

where \({{\bf{x}}}_{i}^{({\rm{IM}})}={[{x}_{i}^{({\rm{IM}})},{y}_{i}^{({\rm{IM}})},1]}^{{\rm{T}}}\) are the 2D projection points of \({{\bf{X}}}_{i}^{({\rm{M}},{k})}\) in the image coordinate system. s(M) is a scale factor.

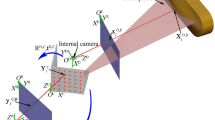

The closed form solution of the 3D point \({{\bf{X}}}_{i}^{({\rm{M}})}\) on the measured object can be generated from stacking Eqs (6) and (7). The diagram of the closed form solution is shown in Fig. 2.

In order to improve the reconstruction accuracy of the points on the measured object, the standard distance is employed as an optimization object to enhance the measurement accuracy24. In Fig. 3, we design a target for the benchmarks of the standard distances. There are eight different markers with the coordinate \({{\bf{X}}}_{j}^{({\rm{M}})}\) on the target. Four standard distances are given by the eight markers. The laser plane intersects two markers on the target. As the distance reconstructed by the laser plane should be equal to the real distance between two markers, the parameterized function is constructed by the difference between the distance on the target and the parameterized reconstruction distance given by

where\(\begin{array}{rl}{X}_{j}^{({\rm{M}})}= & [\beta \alpha ({u}_{0}-{x}_{j}^{({\rm{I}}{\rm{M}})})-\gamma \alpha ({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})})]\{[\gamma \alpha ({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})})-\beta \alpha ({u}_{0}-{x}_{j}^{({\rm{I}}{\rm{M}})})]{{\Pi }}_{1}\\ & {-{\alpha }^{2}({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})}){{\Pi }}_{2}+{\alpha }^{2}\beta {{\Pi }}_{3}\}}^{-1}\\ {Y}_{j}^{({\rm{M}})}= & \alpha ({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})}){[({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})})(\gamma {{\Pi }}_{1}-\alpha {{\Pi }}_{2})-\beta {{\Pi }}_{1}({u}_{0}-{x}_{j}^{({\rm{I}}{\rm{M}})})+\alpha \beta {{\Pi }}_{3}]}^{-1}\\ & {Z}_{j}^{({\rm{M}})}=\alpha \beta {[\beta {{\Pi }}_{1}({u}_{0}-{x}_{j}^{({\rm{I}}{\rm{M}})})-\alpha \beta {{\Pi }}_{3}-({v}_{0}-{y}_{j}^{({\rm{I}}{\rm{M}})})(\gamma {{\Pi }}_{1}-\alpha {{\Pi }}_{2})]}^{-1}\end{array}\) are the parameterized coordinates of the reconstruction point on the target.

Equation (8) covers the intrinsic parameters of the camera and the laser plane. However, the laser plane depends on the intersection laser points on the target, which is also determined by the extrinsic parameters R(W,k) and t(W,k) of the camera. Furthermore, the extrinsic parameters R(W,k) and t(W,k) represent the relative positions among the two planar references and the camera, as well as the coordinate of the laser plane. Therefore, on the basis of Eq. (8), we enhance the objective function by the parameterized reprojection errors with the extrinsic parameters. The final parameterized function is

where \({{\bf{X}}}_{i}^{({\rm{R}},{k})}\), \({{\bf{x}}}_{i}^{({\rm{IR}},{k})}\) are the known 3D world coordinates and 2D image coordinates of the feature points on the two references. The optimized solutions of R(W,k), t(W,k), α, β, γ, u0, v0, Π1, Π2, Π3 are related to the minimized value of the parameterized function in Eq. (9). The optimized points on the measured object are solved by the optimized solutions. The diagram of the optimization solution is shown in Fig. 4.

Results

Two 280 mm × 400 mm planar references are employed in the experiments. The references are covered with a check board pattern. The distance between the adjacent corner points on the planar reference is 20 mm. Each planar reference includes 247 feature points. The resolution of the captured images is 2048 × 1536 in the experiments. In the camera calibration, Harris corner recognition36 is adopted to acquire the coordinates of the feature points in the images of two planar references.

The reconstruction experiment results of the flexible laser plane and two planar references are shown in Fig. 5. Figure 5(a,c,e and g) are the experimental photographs in the four groups of reconstruction experiments. A cylindrical tube, a vehicle model, a cup and a box are selected as the objects to be reconstructed. Figure 5(b,d,f and h) represent the point reconstruction results that are related to the Fig. 5(a,c,e and g). The red tetrahedrons show the distribution of feature points on the two planar references. The green spheres represent the points on the intersection lines between the flexible laser plane and the planar references in the camera coordinate system. The intersection line between the laser plane and the planar reference includes 40 green spheres. Two intersection lines determine the position of the laser plane. 10 different positions of the laser plane are selected in the four groups of the reconstruction experiments. The blue spheres illustrate the points on the intersection lines between the measured object and the flexible laser plane in the camera coordinate system. The blue curve is formed by 20 blue spheres on the intersection between the measured object and the laser plane.

The reconstruction experiment results of the flexible laser plane and two planar references. (a) The reconstruction image of the cylindrical tube. (b) The point reconstruction results of the cylindrical tube. (c) The reconstruction image of the vehicle model. (d) The point reconstruction results of the vehicle. (e) The reconstruction image of the cup. (f) The point reconstruction results of the cup. (g) The reconstruction image of the box. (h) The point reconstruction results of the box.

In order to evaluate the precision of the reconstruction method, the eight different markers on the target in Fig. 3 are also considered as the benchmarks of the standard test distances. The relationships between the reconstruction errors and the standard test distances are analyzed by varying the standard distance. The standard test distance is determined by 20 mm, 30 mm, 40 mm and 50 mm, respectively. Four measurement distances between the camera and the object being measured are 900 mm, 1000 mm, 1100 mm and 1200 mm, respectively. The differences between the reconstructed distances and standard test distances are used to verify the accuracy of the profile reconstruction method. It is defined by37

where E k is the reconstruction error of the test distance, D k and Dk−1 are the reconstruction points of the markers, D0 is the standard test distance. 40 images of the target with the eight different markers are captured by the camera. 20 images of them are conducted to the optimization function. The other 20 images are chosen to evaluate the reconstruction error of the test distance.

The reconstruction error results of the proposed optimization method are compared with ones of the initial closed-form-solution method in Fig. 6. The corresponding statistics are summarized in Table 1. The scopes of the reconstruction errors of the initial method are 0.21 mm–2.31 mm, 0.20–2.26 mm, 0.18 mm–2.22 mm and 0.17 mm–2.39 mm when the measurement distances between the camera and the measured object are 900 mm, 1000 mm, 1100 mm and 1200 mm. Moreover, the corresponding scopes of the optimization method are 0.16 mm–2.26 mm, 0.13 mm–2.21 mm, 0.13 mm–2.18 mm, 0.10 mm–2.28 mm. The average errors of the initial method are 1.02 mm, 0.98 mm, 1.00 mm and 1.04 mm when the measurement distances between the camera and the measured object are 900 mm, 1000 mm, 1100 mm and 1200 mm. The corresponding average errors of the optimization method are 0.94 mm, 0.90 mm, 0.92 mm and 0.95 mm. The root mean squares of errors of the initial method are 1.13 mm, 1.10 mm, 1.11 mm and 1.16 mm when the measurement distances between the camera and the measured object are 900 mm, 1000 mm, 1100 mm and 1200 mm. The corresponding root mean squares of the errors of the optimization method are 1.05 mm, 1.03 mm, 1.04 mm and 1.08 mm.

The experimental results of the reconstruction errors obtained from the initial method and the optimization method. The standard test distances are 20 mm, 30 mm, 40 mm and 50 mm, respectively. The measurement distances between the camera and the measured object are 900 mm, 1000 mm, 1100 mm and 1200 mm, which correspond to (a) to (d).

In the test with the standard distance of 20 mm, the ranges of the minimum value, the maximum value, the mean and the root mean square of the reconstruction errors of the initial method are 0.17 mm–0.21 mm, 0.70 mm–0.82 mm, 0.48 mm–0.53 mm and 0.50 mm–0.56 mm. However, the ones of the optimization method are 0.10 mm–0.16 mm, 0.65 mm–0.78 mm, 0.41 mm–0.45 mm and 0.43 mm–0.48 mm. In the test with the standard distance of 30 mm, the scopes of the minimum value, the maximum value, the mean and the root mean square of the reconstruction errors of the initial method are 0.33 mm–0.42 mm, 1.30 mm–1.40 mm, 0.83 mm–0.88 mm and 0.87 mm–0.92 mm. Then, the ones of the optimization method are 0.31 mm–0.35 mm, 1.28 mm–1.33 mm, 0.76 mm–0.81 mm and 0.81 mm–0.85 mm. In the test with the standard distance of 40 mm, the ranges of the minimum value, the maximum value, the mean and the root mean square of the reconstruction errors of the initial method are 0.42 mm–0.57 mm, 1.73 mm–1.88 mm, 1.12 mm–1.22 mm and 1.19 mm–1.27 mm. However, the ones of the optimization method are 0.29 mm–0.47 mm, 1.67 mm–1.72 mm, 1.02 mm–1.09 mm and 1.09 mm–1.16 mm. The scopes of the minimum value, the maximum value, the mean and the root mean square of the reconstruction errors of the initial method are 0.64 mm–0.83 mm, 2.22 mm–2.39 mm, 1.49 mm–1.55 mm and 1.55 mm–1.62 mm with the standard distance of 50 mm. Moreover, the ones of the optimization method are 0.59 mm–0.77 mm, 2.18 mm–2.28 mm, 1.41 mm–1.47 mm and 1.48 mm–1.53 mm.

The relative errors of the initial method are 2.59%, 2.88%, 2.96% and 3.04% while the relative errors of the optimization method are 2.19%, 2.62%, 2.69% and 2.91%, when the measurement distance between the camera and the measured object is 900 mm and the standard test distances are 20 mm, 30 mm, 40 mm and 50 mm. When the measurement distance between the camera and the measured object are 1000 mm, the relative errors of the initial method are 2.40%, 2.78%, 2.79% and 2.99% while the relative errors of the optimization method are 2.03%, 2.54%, 2.55% and 2.83%, in the test with the standard distances of 20 mm, 30 mm, 40 mm and 50 mm. The relative errors of the initial method are 2.47%, 2.81%, 2.91% and 3.02% while the relative errors of the optimization method are 2.13%, 2.58%, 2.64% and 2.88% when the measurement distance between the camera and the measured object is 1100 mm. The relative errors of the initial method are 2.65%, 2.92%, 3.04% and 3.11% while the relative errors of the optimization method are 2.25%, 2.69%, 2.71% and 2.94%, with the measurement distance of 1200 mm and the standard test distances of 20 mm, 30 mm, 40 mm and 50 mm.

Two examples in Fig. 7 are provided for the measurement of mechanical parts by using the described method. The true values of the two mechanical parts, which are measured by a vernier caliper, are 90.16 mm and 49.50 mm, respectively. Nevertheless, the mean errors of the initial method and the optimization method obtained from the different 20 images of the first measured part are 2.94 mm and 2.77 mm. The average relative errors of the initial method and the optimization method are 3.26% and 3.07%. The average errors of the initial method and the optimization method of the second measured mechanical part are 1.55 mm and 1.40 mm. The average relative errors of the initial method and the optimization method are 3.12% and 2.84%, respectively.

The reconstruction examples of two mechanical parts with the flexible laser plane and two planar references. (a) The reconstruction example of the first mechanical part. (b) The point reconstruction errors of the first example. (c) The reconstruction example of the second mechanical part. (d) The point reconstruction errors of the second example.

Discussion

In this paper, a 3D reconstruction process is realized by the flexible laser plane without position limitation relative to the camera and the bi-planar references. In order to calibrate the flexible laser plane relative to the camera, 3D and 2D targets are naturally considered as the references to solve the flexible laser plane. The 3D target could be designed with the combination of two orthogonal planes or the two planes with the known relative position. This kind of target is difficult to be manufactured. And then, we solve the flexible laser plane by the 2D target. However, a flexible laser plane cannot be reconstructed from only one planar target. It is because there is only one intersection line between the laser plane and the planar target, and a laser plane cannot be determined by only one line. In view of the above reasons, two planar targets are chosen as the references to reconstruct the flexible laser plane. As there are two intersection lines between the laser plane and the two planar targets, the flexible laser plane is derived from the two intersection lines. In this method, the laser plane is flexible to the camera and there is no strict restriction of the position between two planar targets.

From the experimental results, we naturally come to the conclusion that the reconstruction errors of the optimization method are generally smaller than those of the initial method. It is to say, the optimization method is closer to the real 3D value than the initial method. This proves that the optimization function effectively reduces the reconstruction experimental error and contributes good stability and reliability. In addition, the relative errors of the optimization method are smaller than those of the initial method. Furthermore, the relative errors of the two methods are less than 4%. The averages, the root mean squares and relative errors present steady growing trends with the increasing standard test distance from 20 mm to 50 mm. As the measurement distance between the camera and the measured object rises from 900 mm to 1200 mm, the distance reconstruction errors decrease firstly, then increase gradually. It is worth noting that the averages and the root mean squares of the distance reconstruction errors achieve the smallest values when the measurement distance between the camera and the measured object is 1000 mm. The averages and the root mean squares of the distance reconstruction errors are the largest values when the camera is 1200 mm away from the object being measured in the experiments. The averages and the root mean squares of the distance reconstruction errors under the measurement distance of 1100 mm are slightly smaller than those under the distance of 900 mm. Therefore, the experimental results show that the reconstruction results are the closest to the true values when the camera is 1000 mm away from the object being measured and the standard test distance is 20 mm. Two mechanical parts are measured by the method. The average relative errors of the initial method and the optimization method are less than 5%. As the laser projector is small and flexible to the camera, the measurement just requires the laser projector to approach the measured object. Therefore, the measurement can be achieved in a small space by the laser projector. The method has potential applications in the object profile measurement fields, such as automotive industry and aviation industry.

Summary

An optimization reconstruction method of object profile is realized in this paper by using a flexible laser plane and bi-planar references. Two planar references are adopted to build the transformations among the measured object, the laser plane and the camera. Two world coordinate systems are determined by the two planar references. Therefore, the camera internal parameters, the rotation matrix and the translation vector are determined by the projections from the feature points on the two planar references to ones on the image. The laser plane is modeled by the camera coordinates of the points on the intersection lines between the laser plane and two planar references. The camera coordinates of the points on the intersection curves of the measured object are obtained by the laser plane and the projection relationship of the camera. A target taking the markers with different distances is adopted to evaluate the reconstruction accuracy of the 3D points on the measured object. Then, an optimization function is established to enhance the accuracy of the reconstruction results by minimizing the parameterized differences of the standard test distances and the reconstruction distances and minimizing the parameterized reprojection errors of the feature points on two planar references. The effects of the measurement distance between the camera and the measured object, and the ones of the standard test distance on the target are investigated by the experiments. The mean of the reconstruction error of the initial method is 1.01 mm while the mean of the reconstruction error of the optimization method is 0.93 mm. The root mean square of the reconstruction errors of the initial method is 1.12 mm while the root mean square of the reconstruction errors of the optimization method is 1.05 mm. Furthermore, the means of the relative errors of the initial method and the optimization method are 2.84% and 2.57%, respectively. Therefore, the optimization method effectively improves the accuracy of the reconstruction method and provides the relative errors smaller than 4%. Two mechanical parts are measured by the method to explain the applications. The reconstructed values are compared with the true values from a vernier caliper. The average relative errors of the initial method and the optimization method are less than 5%. Consequently, the proposed optimization reconstruction method, using the bi-planar references and a flexible laser plane, has potential applications in the object profile measurement fields.

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Kiddee, P., Fang, Z. & Tan, M. A practical and intuitive calibration technique for cross-line structured light. Optik 127, 9582–9602 (2016).

Santolaria, J., Pastor, J., Brosed, F. & Aguilar, J. A one-step intrinsic and extrinsic calibration method for laser line scanner operation in coordinate measuring machines. Meas. Sci. Technol. 20, 045107 (2009).

Wang, P. et al. Calibration method for a large-scale structured light measurement system. Appl. Optics 56, 3995–4002 (2017).

Li, D., Chi, F. C., Ren, M., Zhou, L. & Zhao, X. Autostereoscopy-based three-dimensional on-machine measuring system for micro-structured surfaces. Opt. Express 22, 25635–25650 (2014).

Mcclatchy, D. I. et al. Calibration and analysis of a multimodal micro-CT and structured light imaging system for the evaluation of excised breast tissue. Phys. Med. Biol. 62, 9893 (2017).

Głowacz, A. & Głowacz, Z. Diagnosis of the three-phase induction motor using thermal imaging. Infrared Phys. Techn. 81, 7–16 (2017).

Bakirman, T. et al. Comparison of low cost 3D structured light scanners for face modeling. Appl. Optics 56, 985–992 (2017).

Kiddee, P., Fang, Z. & Tan, M. An automated weld seam tracking system for thick plate using cross mark structured light. Int. J. Adv. Manuf. Tech. 87, 1–15 (2016).

Sitnik, R. Four-dimensional measurement by a single-frame structured light method. Appl. Optics 48, 3344–3354 (2009).

Zhu, Y., Gu, Y., Jin, Y. & Zhai, C. Flexible calibration method for an inner surface detector based on circle structured light. Appl. Optics 55, 1034–1039 (2016).

Rodriguez-Martin, M., Rodriguez-Gonzalvez, P., Gonzalez-Aguilera, D. & Fernandez-Hernandez, J. Feasibility study of a structured light system applied to welding inspection based on articulated coordinate measure machine data. IEEE Sens. J. 17, 4217–4224 (2017).

Liu, K. et al. Optimized stereo matching in binocular three-dimensional measurement system using structured light. Appl. Optics 53, 6083–6090 (2014).

Huynh, D. Q., Owens, R. A. & Hartmann, P. E. Calibrating a structured light stripe system: a novel approach. Int. J. Comput. Vision 33, 73–86 (1999).

Wei, Z., Zhang, G. & Xu, Y. Calibration approach for structured-light-stripe vision sensor based on the invariance of double cross-ratio. Opt. Eng. 42, 2956–2966 (2003).

Wei, Z., Shao, M., Zhang, G. & Wang, Y. Parallel-based calibration method for line-structured light vision sensor. Opt. Eng. 53, 033101 (2014).

Li, J. et al. Calibration of a multiple axes 3-D laser scanning system consisting of robot, portable laser scanner and turntable. Optik 122, 324–329 (2011).

Niola, V., Rossi, C., Savino, S. & Strano, S. A method for the calibration of a 3-d laser scanner. Robot. & Com-Int. Manuf. 27, 479–484 (2011).

Le, M. T., Chen, L. C. & Lin, C. J. Reconstruction of accurate 3-D surfaces with sharp edges using digital structured light projection and multi-dimensional image fusion. Opt. Laser. Eng. 96, 17–34 (2017).

Ma, S. et al. Flexible structured-light-based three-dimensional profile reconstruction method considering lens projection-imaging distortion. Appl. Optics 51, 2419–2428 (2012).

Zhu, L., Zhang, X. & Li, Y. Color code identification in coded structured light. Appl. Optics 51, 5340–5356 (2012).

Huang, X. et al. Target enhanced 3D reconstruction based on polarization-coded structured light. Optics Express 25, 1173–1184 (2017).

Villa, Y., Araiza, M., Alaniz, D., Ivanov, R. & Ortiz, M. Transformation of phase to (x, y, z)-coordinates for the calibration of a fringe projection profilometer. Opt. Laser. Eng. 50, 256–261 (2012).

Zhang, S. & Huang, P. “Novel method for structured light system calibration,”. Opt. Eng. 45, 083601 (2006).

Hu, Q., Huang, P., Fu, Q. & Chiang, F. Calibration of a three dimensional shape measurement system. Opt. Eng. 42, 487–493 (2003).

Zhao, Y., Li, X. & Li, W. Binocular vision system calibration based on a one-dimensional target. Appl. Optics 51, 3338–3345 (2012).

Peng, E. & Li, L. Camera calibration using one-dimensional information and its applications in both controlled and uncontrolled environments. Pattern Recogn. 43, 1188–1198 (2010).

Ricolfeviala, C. & Sanchezsalmeron, A. J. Camera calibration under optimal conditions. Optics Express 19, 10769–10775 (2011).

Xu, G., Zheng, A., Li, X. & Su, J. Position and orientation measurement adopting camera calibrated by projection geometry of Plücker matrices of three-dimensional lines. Sci. Rep. 7, 44092 (2017).

Wang, Z., Wu, Z., Zhen, X., Yang, R. & Xi, J. An onsite structure parameters calibration of large FOV binocular stereovision based on small-size 2D target. Optik 124, 5164–5169 (2013).

Xu, G. et al. An optimization solution of a laser plane in vision measurement with the distance object between global origin and calibration points. Sci. Rep. 5, 11928 (2015).

Xu, G., Zhang, X., Su, J., Li, X. & Zheng, A. Solution approach of a laser plane based on plücker matrices of the projective lines on a flexible 2D target. Appl. Optics 55, 2653–2656 (2016).

David, A. F. & Jean, P. Computer Vision, A Modern Approach (Prentice Hall, 2003).

Zhang, Z. A flexible new technique for camera calibration. IEEE T. Pattern Anal. 22, 1330–1334 (2000).

Hartley, R. & Zisserman, A. Multiple View Geometry in Computer Vision (Cambridge University Press, 2003).

Horn, R. A. & Johnson, C. R. Matrix Analysis (Cambridge University Press, 2012).

Ryu, J. B., Lee, C. G. & Park, H. H. Formula for Harris corner detector. Electron. Lett. 47, 180–181 (2011).

Xu, G., Yuan, J., Li, X. & Su, J. 3D reconstruction of laser projective point with projection invariant generated from five points on 2D target. Sci. Rep. 7, 7049 (2017).

Acknowledgements

This work was funded by National Natural Science Foundation of China under Grant Nos 51478204, 51205164, and Natural Science Foundation of Jilin Province under Grant Nos 20170101214JC, 20150101027JC.

Author information

Authors and Affiliations

Contributions

G.X. provided the idea, G.X., J.Y. and X.T.L. performed the data analysis, writing and editing of the manuscript, J.Y. and J.S. contributed the program and experiments, J.Y. prepared the figures. All authors contributed to the discussions.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, G., Yuan, J., Li, X. et al. Optimization reconstruction method of object profile using flexible laser plane and bi-planar references. Sci Rep 8, 1526 (2018). https://doi.org/10.1038/s41598-018-19928-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-19928-4

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.