Abstract

Although electro-holography can reconstruct three-dimensional (3D) motion pictures, its computational cost is too heavy to allow for real-time reconstruction of 3D motion pictures. This study explores accelerating colour hologram generation using light-ray information on a ray-sampling (RS) plane with a graphics processing unit (GPU) to realise a real-time holographic display system. We refer to an image corresponding to light-ray information as an RS image. Colour holograms were generated from three RS images with resolutions of 2,048 × 2,048; 3,072 × 3,072 and 4,096 × 4,096 pixels. The computational results indicate that the generation of the colour holograms using multiple GPUs (NVIDIA Geforce GTX 1080) was approximately 300–500 times faster than those generated using a central processing unit. In addition, the results demonstrate that 3D motion pictures were successfully reconstructed from RS images of 3,072 × 3,072 pixels at approximately 15 frames per second using an electro-holographic reconstruction system in which colour holograms were generated from RS images in real time.

Similar content being viewed by others

Introduction

Electro-holography is a technique that can reconstruct three-dimensional (3D) motion pictures by switching holograms using a spatial light modulator (SLM)1,2,3,4,5,6,7,8,9,10,11,12. This technique uses a computer-generated hologram (CGH), and several methods are available for hologram generation. The point-based method generates holograms from a 3D object based on point cloud data13,14,15,16,17,18. Although this method involves simple calculations, the computation time typically depends on the number of point clouds and the number of pixels in a hologram. This indicates that it is significantly difficult to realise real-time hologram generation when large holograms are generated from complex 3D objects. In addition, hidden surface removal and gloss reproduction processes are required in hologram generation19,20,21, which results in increased computation time. Conversely, previous studies have proposed a hologram generation method based on light-ray reproduction22,23. This method can realise hidden surface removal and gloss reproduction by rendering computer graphics (CG) in a pre-process. However, the rendering and hologram planes are in the same position, and the rendered image becomes blurred when the object is distant from the hologram plane. Subsequently, the ray-sampling (RS) plane method has been proposed24. The RS plane method suppresses blurring of the rendered image by rendering on the RS plane set up near the object. The computational cost of this method is lower than that of other point-based methods because it employs fast Fourier transform (FFT). However, the computational cost is still too high for real-time reconstruction of 3D motion pictures. Thus, we focus on the computation time of the aforementioned method and specifically aim at speeding up colour hologram generation from 3D polygon data based on an RS plane using a graphic processing unit (GPU) to reconstruct 3D motion pictures in real time. In addition, GPU memory buffer overflows is one of the technical difficulties in implementing the RS plane method onto a GPU. We also aim to reduce the amount of GPU memory used in order to achieve high-quality 3D images.

Results

We used the following environment to generate a hologram: Microsoft Windows 10 Enterprise; 3.40-GHz Intel Core i7-6800K central processing unit (CPU) (full use of six cores) with 32 GB memory; Microsoft Visual C++ 2013; NVIDIA Geforce GTX 1080 GPUs (1,823-MHz GPU clock, 5,005-MHz memory clock, 8,192 MB memory and 2,560 cores) and compute unified device architecture (CUDA) version 8.0 as an integrated development environment for GPU. For the reconstruction environment, we set the hologram resolution to 1,920 × 1,080 pixels, the pixel pitch to 8.0 μm, the blue wavelength to 450 nm, the green wavelength to 532 nm, the red wavelength to 650 nm and the propagation distance to 0.7 m. We used phase-modulation SLMs (Holoeye Photonics AG, ‘PLUTO’) to display holograms. Table 1 shows the computation time of the RS plane method by the CPU and GPU. Here, we arranged three GPUs on the PC to generate holograms. The values in Table 1 represent the total computation time involved in acquiring wavefront information, Fresnel diffraction and hologram calculation. Here the transfer time HtoD is the transfer time from the host PC to the device (i.e. the GPU), while the transfer time DtoH is the transfer time from the device to the host PC. As shown in Table 1, the computation time required by the GPU is approximately 300–500 times faster than that of the CPU, and the frame-rate of hologram generation from 3,072 × 3,072-pixel RS images corresponds to approximately 15 frames per second.

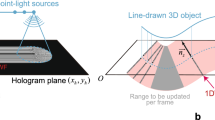

Figure 1a shows the optical system to reconstruct colour 3D images by electro-holography15. Figure 1b shows a schematic of the electro-holographic reconstruction system in this experiment. Figure 1c–e show images reconstructed from three RS images at resolutions of 2,048 × 2,048, 3,072 × 3,072 and 4,096 × 4,096 pixels with zero padding of 2 N × 2 N pixels (described in the Methods section). Figure 1f–h show images reconstructed from three RS images at resolutions of 3,072 × 3,072, 3,072 × 3,072 and 6,144 × 6,144 pixels with zero paddings of 4.096 × 4,096, 8,192 × 8,192 and 8,192 × 8,192 pixels, respectively (described in the Discussion section). A comparison of the enlarged view of each reconstructed image reveals that the resolution of the RS image increases when the quality of the reconstructed images improves. This is because reconstructed image quality in the RS plane method improves with an increase in the number of elemental images of the RS image. Because we fixed the resolution of each elemental image at 16 × 16 pixels, the number of elemental images is proportional to the resolution of the RS images. Figure 2 and Supplementary Video 1 shows the real-time reconstructed moving pictures from eighty 3,072 × 3,072 RS images obtained using the optical system. Supplementary Video 2 shows the manner in which the program generates holograms and reconstructs 3D images in real time. Figures 1c–h, 2, Supplementary Videos 1 and 2 demonstrate that the CGH calculation is successfully performed in real time.

(a) Optical system to reconstruct colour 3D images by electro-holography, (b) overview of the hologram setup. Image reconstructed from six RS images at resolutions of (c) 2,048 × 2,048, (d) 3,072 × 3,072, (e) 4,096 × 4,096, (f) 3,072 × 3,072, (g) 3,072 × 3,072 and (h) 6,144 × 6,144, and the resolution after expanding by zero padding is (c) 4,096 × 4,096, (d) 6,144 × 6,144, (e) 8,192 × 8,192 pixels, (f) 4,096 × 4,096, (g) 8,192 × 8,192 and (h) 8,192 × 8,192.

Reconstructed images (several frames in Supplementary Video 1).

Even in the image shown in Fig. 1e, the depth difference and volume effect in the 3D space are not clearly observed. Next, we placed an additional object containing the text “3D” ~2 cm behind the dinosaur and generated an RS image and a hologram from the two objects. We captured two reconstructed images by focusing a digital camera on the dinosaur (Fig. 3a) and the “3D” text (Fig. 3b); the “3D” text and dinosaur images were blurred. These images indicate that the reconstructed holographic images showed a depth difference and volume effect in the 3D space.

Discussion

The difference in computation time is small between the 3,072 × 3,072 and 4,096 × 4,096 pixel RS images (Table 1). In other words, there is little difference in the computation time of the wavefront propagation, which requires the most time for hologram generation, between 3,072 × 3,072 and 4,096 × 4,096 RS images. This results from the computational efficiency of the two-dimensional (2D) FFTs. A convolution-based Fresnel diffraction calculation was employed to perform wavefront propagation (described in the Methods section); therefore, it takes more time to calculate a 2D FFT and inverse 2D FFT than other calculations. In addition, we employed zero padding to expand the resolution of the RS images from N × N pixels to 2 N × 2 N pixels (described in the Methods section). Table 2 shows the FFT computation time with a GPU and image resolution changes by zero padding. The computational efficiency of FFT reaches a maximum value when the number of elements is a power of two because the number of FFT calculations equals O(2n log n), where n is the number of elements. There is little difference in computational time between images with 3,072 × 3,072 pixels and those with 4,096 × 4,096 pixels. Moreover, the computational time at a resolution of 8,192 × 8,192 pixels is shorter than that at 6,144 × 6,144 pixels. Therefore, we consider that the reduction of wavefront propagation computation cost is effective for accelerating hologram generation. Figure 1f and g show the images reconstructed from the RS image at a resolution of 3,072 × 3,072 pixels. The resolution of the RS image was expanded to 4,096 × 4,096 (Fig. 1f) and 8,192 × 8,192 (Fig. 1g) pixels by zero padding. As shown in Table 2, a resolution of 4,096 × 4,096 is appropriate from a computational efficiency perspective. In addition, comparing the enlarged view of each reconstructed image, both Fig. 1f and g have the same image quality as Fig. 1d, which was obtained by expanding the RS image with a resolution of 3,072 × 3,072 pixels to 6,144 × 6,144 pixels. This indicates that the number of pixels required for zero padding is practically less than 2 N × 2 N, and it is possible to calculate the hologram and reconstruct the desired image from 3,072 × 3,072 expanded to 4,096 × 4,096 pixels using zero padding without practical problems. Supplementary Video 3 shows real-time reconstructed moving pictures from eighty 3,072 × 3,072 RS images expanded to 4,096 × 4,096 pixels. Table 3 shows the hologram generation computation time for expanding the RS image from 3,072 × 3,072 pixels to 4,096 × 4,096 pixels. We achieved approximately 30 frames per second by setting the resolution after conducting zero padding to an efficient resolution for FFT.

In addition, it is impossible to calculate a hologram from a 6,144 × 6,144-pixel RS image by performing 2 N × 2 N zero padding due to GPU memory buffer overflows that occur in FFT for wavefront propagation when the RS image resolution is expanded to 12,288 × 12,288 pixels. On the other hand, a hologram can be calculated from a 6,144 × 6,144 RS image by setting the zero padding resolution to 8,192 × 8,192 pixels, which reduces the amount of memory used. Figure 1h shows an image reconstructed from a 6,144 × 6,144 RS image. We confirm that the image reconstructed from the 6,144 × 6,144 RS image in Fig. 3c demonstrates higher image quality than the 4,096 × 4,096 RS image in Fig. 1e.

Methods

Hologram generation from RS plane

This section discusses the hologram generation method based on the RS plane24. Figure 4a shows the flow of the method. Here, a method that involves placing an RS plane near an object and placing a hologram plane distant from the object is used24. As shown in Fig. 4b, in this method, the first elemental images p i j [m, n] are sampled from the object at the (x i , y j ) coordinates on the RS plane. Here, m and n denote the x- and y-coordinates in each elemental image comprising M × N pixels, respectively, and I and J denote the number of elemental images in the horizontal and vertical directions, respectively. As shown in Fig. 4c, the elemental images are considered 2D images with different viewpoint positions of the object as obtained by a camera array. As a result, each pixel of the elemental image maintains information related to different light-rays with different intensities and directions; thus, it is possible to represent the light-ray using plane waves, i.e. the angular spectra24. However, the number of elemental images is equivalent to the resolution of the reconstructed image, and the resolution of the elemental image is equivalent to the angular resolution of the reconstructed image. Therefore, when the resolution of the elemental image is fixed, the resolution of the reconstructed image increases depending on the resolution of the RS image.

Figure 4d shows the relationship between the positions of the RS and hologram planes. The previous section discussed the acquisition of angular spectra by sampling light-ray information from the object. However, as shown in Fig. 4b, the RS plane is separate from the hologram plane; therefore, it is necessary to calculate the wavefront propagation from the RS plane to the hologram plane24. This section explains the method used to transform angular spectra to wavefront propagation. Here, the angular spectra acquired in the previous section are denoted by A(f X , f Y , 0). Subsequently, the complex optical field U(x, y, 0) required for wavefront information is expressed as follows:

here f X and f Y denote spatial frequencies in x and y directions. The FFT is expressed as follows:

furthermore, by comparing Eqs. (1) and (2), it can be seen that U(x, y, 0) is equivalent to the Fourier-transformed A(f X , f Y , 0). Therefore, U (x, y, 0) can be expressed as follows:

Figure 4e summarises the procedure used to transform light-ray information to wavefront information. The left side of the figure shows the elemental images maintaining light-ray information on the RS plane, and the right side represents the transformed light-ray information (i.e. the wavefront information). Here, the elemental image p i j [m, n] at (xi, y j ) was added by a random phase φ i j [m, n] to diffuse light. The range of the random phase corresponds to (0, 2π). Subsequently, the wavefront information P i j [k, l] is obtained via FFT as follows:

here j denotes the imaginary unit. Subsequently, wavefront information (i.e. the complex optical field) on the hologram plane is calculated by a propagation calculation from the RS plane to the hologram plane. Here, a convolution-based Fresnel diffraction calculation is used. An aperture plane (i.e. the complex optical field g(x, y)) and a screen u(X, Y) are assumed as shown in Fig. 4f, and the complex optical field u(X, Y) is represented by Fresnel diffraction and expressed as follows:

here, k = 2π/λ denotes the wave number and λ denotes the wavelength. Note that Eq. (5) is a convolution integral and can be represented using FFT as follows:

here, Fresnel diffraction is used, and the complex optical field W H [k H , l H ] on the hologram from the wavefront information W RS [k RS , l RS ] on the RS plane is expressed as follows:

where k H is 0, 1, …, IM − 1 and l H is 0, 1, …, JN − 1. In Eq. (7), the propagation calculation is represented by only a 2D FFT. Subsequently, zero padding is performed to prevent any aliasing noise from overlapping the desired reconstructed image. Here, we expand the resolution of RS images of N × N pixels to 2 N × 2 N pixels by employing zero padding, as shown in Fig. 5.

The complex optical field on the hologram is then converted to a phase-only hologram because a phase-modulated SLM was used. Therefore, a kinoform-type phase hologram H[k H , l H ] can be calculated as follows:

3D model specifications

Elemental images were acquired by scanning with a virtual camera using the Blender 3DCG modelling tool25. Here, the 3DCG object data are created by us. Figure 6 shows the front, side and top views of the virtual objects.

Note that the unit length is defined as 1 in Blender. A unit length of 1 in real space depends on the pixel pitch p, camera scanning distance d and the resolution of RS image s, and is obtained as follows:

We scanned the virtual camera for 4.0 on the x- and y-axis when the elemental images were acquired. Figure 6d shows the elemental images. Here, the resolutions of the RS images are 2,048 × 2,048, 3,072 × 3,072 and 4,096 × 4,096 pixels, and the number of elemental images for each RS image is 128 × 128 ply, 192 × 192 ply and 256 × 256 ply. The resolution for all elemental images is 16 × 16 pixels, which is the same for all RS images.

Implementing GPU method

This section explains the manner in which the hologram generation method based on the RS plane was implemented. The implementation was executed using three GPUs, and red, green and blue holograms were calculated by each GPU. Then, we used one of three GPUs for delivering a hologram to the SLMs. We transferred the colour holograms to the RGB splitter, which divided the colour-synthesized input signal into three output signals (red, green and blue) via digital visual interface cables, and monochromatic holograms were displayed on the SLMs.

Note that acquiring wavefront information from light-ray information requires a similar number of FFTs as the number of elemental images. The process of 2D FFT for each elemental image corresponds to one of the heaviest computational processes in this method. This is followed by using the cuFFT library, an FFT CUDA library. Moreover, cufftPlanMany26, which is one of the function of cuFFT library, is used to accelerate the 2D FFT process of elemental images as cufftPlanMany can parallelise several 2D FFTs and can realise speedy execution of the 2D FFT process.

Note that the calculations in Eq. (8) are independent relative to each pixel. The calculation is parallelised by allotting the calculation of each pixel to each GPU thread. The numbers of blocks and threads of a GPU correspond to those of the elemental images and pixels of each elemental image, respectively.

References

Hilaire, P. S. et al. Electronic display system for computational holography. Proc. SPIE 1212, Practical Holography IV, 174, Los Angeles, CA. https://doi.org/10.1117/12.17980, May 1 (1990).

Hashimoto, N., Morokawa, S. & Kitamura, K. Real-time holography using the high-resolution LCTV-SLM. Proc. SPIE 1461, Practical Holography V, 291, https://doi.org/10.1117/12.44740, July 1 (1991).

Lucente, M. Optimization of hologram computation for real-time display. Proc. SPIE 1667, Practical Holography VI, 32, San Jose, CA. https://doi.org/10.1117/12.59617, May 1 (1992).

Senoh, T., Mishina, T., Yamamoto, K., Oi, R. & Kurita, T. Viewing-zone-angle-expanded color electronic holography system using ultra-high-definition liquid crystal displays with undesirable light elimination. J. Display Technology 7, 382–390 (2011).

Tsang, P., Cheung, W.-K., Poon, T.-C. & Zhou, C. Holographic video at 40 frames per second for 4-million object points. Opt. Express 19, 15205–15211 (2011).

Seo, Y. et al. A New System Implementation for Generating Holographic Video using Natural Color Scene. J. Broadcast Eng. 18, 149–158 (2013).

Son, J.-Y., Lee, B.-R., Chernyshov, O. O., Moon, K.-A. & Lee, H. Holographic display based on a spatial DMD array. Opt. Lett. 38, 3173–3176 (2013).

Kujawinska, M. et al. Multiwavefront digital holographic television. Opt. Express 22, 2324–2336 (2014).

Xue, G. et al. Multiplexing encoding method for full-color dynamic 3D holographic display. Opt. Express 22, 18473–18482 (2014).

Kakue, T. et al. Aerial projection of three-dimensional motion-picture by electro-holography and parabolic mirrors. Sci. Rep. 5, 11750 (2015).

Takaki, Y. & Nakaoka, M. Scalable screen-size enlargement by multi-channel viewing-zone scanning holography. Opt. Express 24, 18772–18781 (2016).

Lim, Y. et al. 360-degree tabletop electronic holographic display. Opt. Express 24, 24999–25009 (2016).

Waters, J. P. Holographic image synthesis utilizing theoretical methods. Appl. Phys. Lett. 9, 405–406 (1966).

Ichihashi, Y. et al. HORN-6 special-purpose clustered computing system for electroholography. Opt. Express 17, 13895–13903 (2009).

Nakayama, H. et al. Real-time color electroholography using multiple graphics processing units and multiple high-definition liquid-crystal display panels. Appl. Opt. 49, 5993–5996 (2010).

Niwase, H. et al. Real-time electroholography using a multiple-graphics processing unit cluster system with a single spatial light modulator and the InfiniBand network. Opt. Eng. 55, 093108 (2016).

Hasegawa, N., Shimobaba, T., Kakue, T. & Ito, T. Acceleration of Hologram Generation by Optimizing the Arrangement of Wavefront Recording Planes. Appl. Opt. 56, A97–A103 (2017).

Nishitsuji, T., Shimobaba, T., Kakue, T. & Ito, T. Review of Fast Calculation Techniques for Computer-generated Holograms with the Point Light Source-based Model. IEEE Trans. Industr. Inform. (2017).

Underkoffler, J. S. Occlusion processing and smooth surface shading for fully computed synthetic holography. Proc. SPIE 3011, Practical Holography XI and Holographic Materials III, 19, San Jose, CA. https://doi.org/10.1117/12.271359, April 10 (1997).

Matsushima, K. Exact hidden-surface removal in digitally synthetic full-parallax holograms. Proc. SPIE 5742, Practical Holography XIX: Materials and Applications, 25, San Jose, California, United States. https://doi.org/10.1117/12.592520, May 5 (2005).

Yamaguchi, K. & Sakamoto, Y. Computer generated hologram with characteristics of reflection: reflectance distributions and reflected images. Applied Optics 48, H203–H211 (2009).

Yatagai, T. Stereoscopic approach to 3-D display using computer-generated holograms. Appl. Opt. 15, 2722–2729 (1976).

Mishina, T., Okui, M. & Okano, F. Calculation of holograms from elemental images captured by integral photography. Appl. Opt. 45, 4026–4036 (2006).

Wakunami, K. & Yamaguchi, M. Calculation for computer generated hologram using ray-sampling plane. Opt. Express 19, 9086–9101 (2011).

Blender https://www.blender.org/ (2017).

cuFFT:: CUDA Toolkit Documentation - NVIDIA Documentation. http://docs.nvidia.com/cuda/cufft/#axzz4HlSjFAVV (2017).

Acknowledgements

This work was partially supported by JSPS Grant-in-Aid No. 25240015 and the Institute for Global Prominent Research, Chiba University.

Author information

Authors and Affiliations

Contributions

T.K., Y.I., K.Y. and T.I. directed the project. H.N. created the 3DCG object data. H.S. calculated the holograms with T.K., Y.I., K.W., R.O. and K.Y., H.S. accelerated the hologram calculation processing with T.K., Y.E., T.S. and T.I., H.S. and T.K. designed and performed the hologram reconstruction experiments. All authors contributed to discussions.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sato, H., Kakue, T., Ichihashi, Y. et al. Real-time colour hologram generation based on ray-sampling plane with multi-GPU acceleration. Sci Rep 8, 1500 (2018). https://doi.org/10.1038/s41598-018-19361-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-19361-7

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.