Abstract

Accurate time perception is critical for a number of human behaviours, such as understanding speech and appreciating music. However, it remains unresolved whether sensory time perception is mediated by a central timing component regulating all senses, or by a set of distributed mechanisms, each dedicated to a single sensory modality and operating largely independently. To address this issue, we conducted a range of unimodal and cross-modal rate adaptation experiments to establish how specific to sensory modality the classic after-effects of adaptation are. Adapting to a fast rate of sensory stimulation typically makes a moderate rate appear slower (a repulsive after-effect), and vice versa. A central timing hypothesis predicts general transfer of adaptation effects across modalities, whilst distributed mechanisms predict a high degree of sensory selectivity. Rate perception was quantified by temporal reproduction across all combinations of the visual, auditory and tactile senses. Robust repulsive after-effects were observed in all unimodal rate conditions, but not in any cross-modal pairing. Our results show that sensory timing abilities are adaptable but, crucially, that this change is modality-specific: an outcome consistent with a distributed sensory timing hypothesis.

Introduction

In recent decades many attempts have been made to understand the processes underlying timing judgements. Notable theoretical developments include proposals for internal clock mechanisms1,2 that convert objective time into subjective time3. At the heart of the internal clock model is an internal regulator or ‘pacemaker’ that emits a steady series of pulses. The number of pulses emitted during a particular time window is then counted by an ‘accumulator’, which determines the perceived duration2. In contrast, the channels hypothesis suggests that, analogous to visual modules for motion and orientation, distinct subcortical channels exist that are dedicated to processing specific features of time4,5. Efforts to deconstruct the components of subjective time have used duration judgements of intervals6,7, compared timing abilities across senses using cross-modal interval discrimination8,9, and assessed the accuracy and flexibility of timing judgements by inducing asynchrony adaptation10.

Fundamentally, it is not yet clear whether sensory timing mechanisms operate on a centralised, supramodal basis, with one generalised timing faculty regulating timing across sensory systems, or whether distributed mechanisms exist, with multiple internal clocks each overseeing an individual sense11,12,13,14. Evidence from rate perception experiments shows that concurrently presented auditory stimuli bias judgements of visual flicker, suggesting that mechanisms exist that allow temporal information to be shared (and to exert influence) across the senses, consistent with a centralised rate processing mechanism15,16. Conversely, studies of duration adaptation have found that, after repeated presentations of specific durations, contingent after-effects occur in unimodal conditions yet are absent cross-modally. These results are at odds with a centralised theory of timing and instead suggest distinct, distributed mechanisms, each separately modulating the perception of time17.

The judgement of temporal rate is one area that has produced substantial disagreement in the literature. Becker and Rasmussen18 demonstrated strong repulsive after-effects on a test beat following adaptation to trains of beats of varying temporal frequency: adapting to a fast train of beats made a moderate train appear slower, and vice versa. Crucially, however, adapting to an auditory beat had no effect on a subsequent test train of visual flashes; in other words, the after-effect was limited to the sensory modality of the adapting stimulus. A very different outcome was reported in a more recent study by Levitan and colleagues19. After exposure to a 5 Hz temporal frequency, a 4 Hz presentation was perceived as slower than its physical rate. The effect was again bi-directional: after exposure to a 3 Hz temporal frequency modulation, the same 4 Hz test stimulus appeared much faster. Critically, Levitan and colleagues reported that these after-effects transferred across modalities (bi-directionally between audition and vision). This result is somewhat surprising, as temporal frequency perception is generally thought to be a low-level process operating during the early stages of sensory analysis, perhaps as early as the receptor surfaces. A temporal frequency after-effect that transfers across modalities would therefore imply that these early sensory pathways are also cross-modal in nature. Controversy over the existence of cross-modal adaptation after-effects can also be found in other studies of sensory timing15,20,21,22. Disagreement over such a fundamental issue as centralised versus distributed timing mechanisms significantly limits progress in developing models of how humans quantify sensory time. It is currently unclear whether we should favour a supramodal, central timing mechanism or a series of timing mechanisms that co-exist and operate similarly to one another in different senses23.

Here we address this issue by mapping out the magnitude and temporal extent of rate adaptation effects in the auditory and visual systems, and by extending these measurements to the previously unexplored tactile modality. In particular, using a method of rate reproduction, we ask whether adaptation in one sense carries over to the representation of rate in a different sensory modality.

Results

Mean reproduced rates and their standard errors were calculated for each adapting temporal frequency in each sensory combination. As in Heron et al.5, a curve with the form of the first derivative of a Gaussian was fitted to the data to extract the magnitude and temporal extent of any adaptation effects.
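
Equation 1 is referred to in the example-data figure legend but is not reproduced in this text. As a minimal sketch, assuming one common parameterisation of the first derivative of a Gaussian, such a fit could be written in Python as below. These choices are assumptions rather than the authors' method: adaptor rates are expressed in octaves relative to the 3 Hz test, the curve is centred on the test rate, and it is scaled so that its peak deviation from baseline equals the amplitude μ, reached at ±σ, matching the arrows described in the figure legend. Instead of a general nonlinear optimiser, the hypothetical `fit_dog` helper grid-searches σ and solves for μ and the baseline by ordinary least squares, which is exact for each fixed σ because the model is linear in those two parameters.

```python
import math

def dog(x, amplitude, sigma, baseline):
    """Assumed derivative-of-Gaussian model of reproduced rate (Hz).

    x is the adaptor-test difference in octaves, log2(adapt_hz / test_hz).
    The curve reaches baseline + amplitude at x = -sigma and
    baseline - amplitude at x = +sigma, so a positive amplitude is a
    repulsive effect (faster adaptors lower the reproduced rate).
    """
    return baseline - amplitude * (x / sigma) * math.exp(0.5 - x * x / (2 * sigma * sigma))

def fit_dog(adapt_hz, reproduced_hz, test_hz=3.0):
    """Least-squares fit: grid search over sigma, closed-form OLS for the rest."""
    xs = [math.log2(a / test_hz) for a in adapt_hz]
    ys = list(reproduced_hz)
    n = len(xs)
    y_mean = sum(ys) / n
    best = None
    for i in range(1, 401):
        sigma = 0.01 * i  # candidate spreads from 0.01 to 4 octaves
        # Shape regressor h(x), so that y = baseline + amplitude * h(x).
        h = [-(x / sigma) * math.exp(0.5 - x * x / (2 * sigma * sigma)) for x in xs]
        h_mean = sum(h) / n
        sxx = sum((hi - h_mean) ** 2 for hi in h)
        if sxx == 0:
            continue  # degenerate shape; amplitude not identifiable
        sxy = sum((hi - h_mean) * (yi - y_mean) for hi, yi in zip(h, ys))
        amplitude = sxy / sxx
        baseline = y_mean - amplitude * h_mean
        sse = sum((yi - baseline - amplitude * hi) ** 2 for hi, yi in zip(h, ys))
        if best is None or sse < best[0]:
            best = (sse, amplitude, sigma, baseline)
    _, amplitude, sigma, baseline = best
    return amplitude, sigma, baseline
```

With noise-free synthetic data generated from the model itself, the fit recovers the generating parameters; real reproduction data would leave non-zero residuals.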

Figure 1 shows this function fitted to data from one observer in a condition where the adapting and test stimuli were of the same modality (both visual). Adapting to a rate slower than 3 Hz makes a 3 Hz test stimulus appear faster than it actually is (close to 3.5 Hz for this observer), whereas adapting to a rate faster than 3 Hz makes the same 3 Hz presentation appear markedly slower than it actually is: a bi-directional after-effect. This adaptive shift is band-limited, in that the matched frequency tends to return to baseline (or at least level off in magnitude) when the temporal difference between adaptor and test is large. This pattern is entirely consistent with the rate after-effects reported by Becker and Rasmussen18, and is typical of other classic repulsive after-effects of visual adaptation24,25,26,27.

Example data for the unimodal visual condition. The subject was exposed to a range of adapting temporal frequencies in the visual modality and was required to match the perceived rate of a subsequent 3 Hz test stimulus, also in the visual modality. The curve is the best (least-squares) fit of Equation 1 to the data. Vertical blue arrows indicate the amplitude of the effect (μ), horizontal red arrows indicate its spread (σ), and error bars indicate standard error.

Data for the same subject are now plotted for all nine possible adapt/test combinations of the three stimulus modalities (Table 1, Fig. 2). Each combination is labelled by its adapting modality followed by its test modality (‘A’ denotes auditory, ‘T’ tactile and ‘V’ visual); for example, ‘AV’ denotes auditory adaptation followed by a visual test.

Data for all nine adapt/test stimulus pairings for subject DW. The sensory combination is shown at the top of each plot and follows the key presented previously. The three unimodal conditions are shown diagonally from top left to bottom right. Error bars indicate standard error. See text for further description.

Note first that the reproduced rates of the 3 Hz test stimulus are not consistently veridical. This subject tends to overestimate the rate of tactile test stimuli (right-hand column) and, to a lesser extent, auditory test stimuli (left-hand column). Individual variation of this type in perceived rate across sensory domains has been reported previously28,29. Of more interest, however, is how perceived rate is modulated when a rate difference is introduced between the adapting and test stimuli. Two clear effects emerge. First, robust repulsive after-effects are found in each of the unimodal conditions. Second, in all other (cross-modal) conditions, the reproduced rate shows no systematic variation across the range of adapting frequencies. Equivalent plots for two other observers (Figs 3 and 4) show a very similar pattern of results.

As for Fig. 2 but for subject AM.

As for Fig. 2 but for subject YL.

Table 2 gives the amplitude (μ) and temporal extent, or spread (σ), of the adaptation effects for each of the unimodal conditions. Subject AM shows the largest adaptation effects, averaging 0.66 Hz (22% of the 3 Hz test frequency). There is no consistent difference in effect amplitude across the three sensory conditions. Subject DW shows the tightest tuning (smallest spread), and the naïve observer YL the broadest. Again, no clear pattern of inter-sensory differences in spread is evident. The significance of the effect in each of the nine sensory combinations was assessed by dividing the amplitude (μ) by its standard error and evaluating the result with a two-tailed, one-sample t-test (df = 6) for each subject in each condition; a Holm-Bonferroni correction was then applied across subjects. All unimodal conditions show highly significant effects (p < 0.01), whereas no cross-modal pairing does (p > 0.05) (Table 2).
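
The significance test described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' analysis code: the t statistic is taken as μ divided by its standard error, the two-tailed p-value for df = 6 uses the exact closed form of Student's t distribution for even degrees of freedom, and the hypothetical `holm_bonferroni` helper applies Holm's step-down procedure to whatever family of p-values it is given (the text states only that the correction spanned subjects).

```python
import math

def two_tailed_p_df6(t):
    """Two-tailed p-value of Student's t with 6 degrees of freedom.

    Closed form for even degrees of freedom: with theta = atan(|t| / sqrt(6)),
    P(|T| < |t|) = sin(theta) * (1 + cos^2(theta) / 2 + 3 * cos^4(theta) / 8).
    """
    theta = math.atan(abs(t) / math.sqrt(6))
    c2 = math.cos(theta) ** 2
    central = math.sin(theta) * (1 + c2 / 2 + 3 * c2 * c2 / 8)
    return 1.0 - central

def holm_bonferroni(p_values, alpha=0.05):
    """Holm's step-down procedure; returns one reject flag per p-value."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p-value against alpha / (m - k).
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject
```

For example, `two_tailed_p_df6(0.66 / 0.2)` would give the p-value for a hypothetical amplitude of 0.66 Hz with a standard error of 0.2 Hz.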

A potential problem with the data presented so far is that observers always knew the modality of the test stimulus they would subsequently have to reproduce. Observers might therefore have failed to attend to the adapting stimulus when they knew that the test would be in a different modality. Becker and Rasmussen18 acknowledged this possibility, whilst considering it unlikely to have affected their findings. Levitan et al.19 went a step further and actively controlled attention to the adapting stimulus using a gap-counting task during the adaptation phase. We therefore ran a control experiment using the auditory/visual pairing in which the modality of the test stimulus was unknown to the observer: 50% of trials were auditory and the other 50% were visual. The logic is simple. Any deliberate attentional strategy during the adaptation phase would affect auditory and visual test stimuli alike, so adaptation effects should be either present or absent in both test conditions. Conversely, if adaptation persists in the unimodal condition yet remains absent in the cross-modal pairing, attention during the adaptation phase can be ruled out as a confound.
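
One way to make the test modality unpredictable, as in this control, is to shuffle a balanced list of test modalities within a block. The sketch below assumes such a balanced shuffle; the text states only the 50/50 split, so the `control_schedule` helper and the blocking scheme are hypothetical.

```python
import random

def control_schedule(adapt_modality, n_trials, seed=None):
    """Hypothetical trial list for the audio-visual control.

    The adaptor modality is fixed, while the test modality is 'A' on exactly
    half of the trials and 'V' on the other half, in shuffled order so that
    the observer cannot predict it.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even for an exact 50/50 split")
    tests = ["A"] * (n_trials // 2) + ["V"] * (n_trials // 2)
    rng = random.Random(seed)
    rng.shuffle(tests)
    # Each label is adapt modality + test modality, e.g. 'AV'.
    return [adapt_modality + t for t in tests]
```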

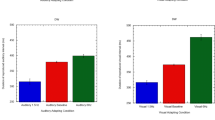

Results for subject DW are shown in Fig. 5 (for the other subjects, see Supplementary Figures S1 and S2 and Supplementary Table S1). The findings are clear: for both adaptation conditions (auditory, left column; visual, right column), marked after-effects occur only when the test stimulus is of the same modality as the adaptor (upper plots), and not when it is of a different modality (lower plots).

Data from the control experiment using the auditory/visual pairing (subject DW). Left-hand plots represent the auditory adaptation condition, right-hand plots visual adaptation. Upper plots represent unimodal conditions (adapt and test same modality), lower plots cross-modal conditions; error bars indicate standard error. See text for a description of the control methodology.

Discussion

Our findings reveal strong band-limited repulsive after-effects for rate perception in all three sensory systems of audition, touch and vision. However, rate perception is unchanged when the modality of the adapting stimulus differs from that of the test. Adaptation is thought to result from the sensory history of neural populations; when the adapting and test stimuli activate overlapping populations, the effects on perception are revealed as repulsive after-effects. Thus, evidence that rate after-effects fail to transfer across sensory modalities suggests that rate adaptation occurs relatively early in the sensory processing hierarchy, perhaps in the sensory cortices themselves. There is physiological evidence for temporal frequency-selective neurons within the respective cortices30,31 and these may well form the basis for temporal ‘channels’32,33. The processing of temporal rate therefore appears to share a strong commonality with other channel-based temporal judgments such as perceived duration5,17 despite suggested differences in neural substrates34.

Neural evidence for ‘tuned’ temporal representations in humans was recently presented by Hayashi et al.35 using an fMRI duration adaptation paradigm. When subjects were repeatedly presented with stimuli of the same duration, activity in the right inferior parietal lobule (IPL) decreased substantially. Testing across a range of subsecond durations produced the same result, suggesting that neurons in the human IPL are preferentially tuned to specific subsecond durations35. Studies of perceptual learning in the auditory domain also show that learning effects are ‘tuned’ to the trained temporal interval36,37, and similar temporally specific practice effects are found in somatosensory interval discrimination38,39. These findings suggest dedicated circuitry for processing specific time ranges, reinforcing the band-limited tuning found in the current rate adaptation experiments.

There is some evidence that the processing of sensory time is spatially specific38,40. In addition, some multisensory interactions are extinguished when the component unisensory stimuli are presented at sufficiently disparate spatial locations41. It may be, therefore, that cross-modal after-effects are present only when the spatial locations of the sensory stimuli overlap. Our main experiments presented auditory stimuli over headphones and visual stimuli on a display; could this explain why we found no cross-modal adaptation effects? To address this potential criticism, we conducted a further control experiment in which auditory and visual stimuli were spatially coincident. Visual stimuli were projected onto a thin, acoustically transparent fabric sheet, and auditory stimuli were presented via a speaker placed directly behind the screen. All other features of the set-up were kept the same as in the main experiment. Data were gathered for all four pairings of the auditory and visual modalities (AA, VV, AV, VA) over a minimum of 105 trials for each observer. Sample data from one observer are shown in Fig. 6; plots for a second observer and the results of all statistical tests are given in the Supplementary Material (Supplementary Figure S3 and Supplementary Table S2).

Data for all four adapt/test stimulus pairings for subject AM, where stimuli were spatially and temporally overlapped. The sensory combination is shown at the top of each plot. The two unimodal conditions are shown in the upper panels (left, AA; right, VV) and the cross-modal conditions in the lower panels (left, AV; right, VA). Error bars indicate standard error. See text for further description.

The results clearly follow the same pattern as our main and control experiments: adapting to a given rate significantly affects the perception of a test rate, but only when the adapting and test stimuli belong to the same sensory modality. Our findings indicate that, whilst some temporal after-effects become manifest only when adapting and test stimuli are co-localised in space, co-localisation is not sufficient to produce cross-modal effects in sensory rate adaptation.

Our data are consistent with those of Becker and Rasmussen18, even when extended to a new sensory modality (touch), and show that temporal rate perception is highly specific to sensory modality. Why, then, did Levitan et al.19 find significant cross-modal effects in rate perception? Their experimental approach used a method of single stimuli, in which the frequency of a test sequence was compared with an internal mean generated during an initial testing phase. This is a very efficient method, but it has the much-criticised disadvantage42 that the internal mean can readily be corrupted by subsequent stimulus presentations. Levitan and colleagues employed a lengthy period of rate adaptation, but they also employed a lengthy period of test stimulus presentation with no ‘top-up’ adaptation, potentially neglecting both the decay of adaptation after-effects and corruption of the internal mean by the test stimuli themselves. Inconsistent with this explanation, however, is the fact that their effects, like ours, were tuned: a 4 Hz signal in one sense was distorted by a 5 Hz adaptor in another, less affected by an 8 Hz adaptor, and not affected at all by a 12 Hz adaptor. This suggests that their effects were genuine sensory distortions resulting from adaptation rather than higher-level distortions of an internal cognitive representation.

Some studies suggest the existence of both low-level, modality-specific timing mechanisms and higher-level, modality-independent processes. Stauffer et al.43 modelled the accuracy of rhythm perception in different senses and found that their data were best described by a two-stage model. Further support for a common, amodal timing mechanism comes from the duration literature44. Results from interval timing also suggest a degree of interaction between otherwise independent auditory and visual processing systems45: when subjects were instructed to compare standards of continuously presented and flickering visual stimuli whilst ignoring simultaneously presented auditory flutter, the auditory flutter influenced their judgements of the visual stimuli. These results suggest that, whilst interval timing is largely governed by modality-dependent mechanisms, interactions between the auditory and visual modalities can on occasion modulate perception45. The very fact that we can compare temporal rate in one sense with that in another indicates that some higher-level analysis of multisensory rate must exist, but our data indicate that this comparison stage is not susceptible to cross-modal rate adaptation. Levitan’s data, however, seem to suggest that these after-effects are bandwidth-limited both for low-level, modality-specific processes and for their higher-level, modality-independent counterparts.

Our perceptual system appears to deal with sensory input on a dynamic basis. When sensory stimuli are presented concurrently, temporal information from one sense interferes with that from the other in subsequent rate discrimination tasks46, yet when the same stimuli are presented successively, as in the present tasks, rate perception appears to remain segregated by sensory modality. Thus, the structure of sensory presentation influences how segregated temporal processing is, suggesting that adaptation mechanisms precede multisensory integration.

In summary, using a sensory adaptation paradigm we demonstrate that our senses adapt rapidly in their perception of temporal rate, but only when the adapting and test stimuli are of the same modality. This supports the existence of distributed timing mechanisms, each specific to a particular sensory modality. Future work might examine whether similar adaptation effects occur in the perception of single intervals (gaps between brief sensory pairs). This would tell us whether there is something special about ‘rate’ or whether a rate is simply equivalent to a train of sensory ‘gaps’.

Methods

Subjects

Three participants (two female, one male; mean age 33 years) took part, all with self-reported normal hearing and vision. Following initial practice sessions, a lengthy period of data collection (20–25 hours) took place in a series of sessions spread over several weeks. Two of the participants (authors) had previous experience of psychophysical data collection; the third had no such experience and was naïve to the purpose of the experiments. The experiments received ethical approval from the Research Ethics Committee at the School of Optometry and Vision Sciences, University of Cardiff, and all experiments were performed in accordance with relevant guidelines and regulations. Informed consent was obtained from all participants.

Stimuli

Brief (16 ms) sensory stimuli were presented in the auditory, visual or somatosensory modality; all stimuli were grossly suprathreshold. Stimulus generation and presentation were controlled by an Intel® Core™ i5-4460 desktop computer running Microsoft Windows 7, using MATLAB 8.6 (MathWorks, USA) in combination with Psychophysics Toolbox 3 (http://www.psychtoolbox.org). Stimulus timing was verified using a dual-channel oscilloscope.

Visual

Visual stimuli were presented on an Eizo EV2436W monitor. They were bright (274 cd/m²) white circular flashes presented centrally against a uniform dark background (0.32 cd/m²). Stimulus duration was a single frame (approximately 16.7 ms at the monitor frame rate of 60 Hz). At a viewing distance of 60 cm, the circular flash subtended a diameter of approximately 10.5 degrees of visual angle.
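
The stimulus geometry and timing follow from two standard relations: one frame lasts 1000/60 ≈ 16.7 ms at 60 Hz, and an extent s viewed at distance d subtends 2·atan(s / 2d). The short Python sketch below applies them; the resulting on-screen diameter of roughly 11 cm is derived from the reported angle and viewing distance, not a value stated in the text.

```python
import math

def size_for_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

def angle_for_size(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

frame_ms = 1000 / 60                 # one frame at 60 Hz, about 16.7 ms
flash_cm = size_for_angle(10.5, 60)  # derived flash diameter, about 11.0 cm
```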

Auditory

Auditory stimuli consisted of brief (16 ms) bursts of white noise generated by a Xonar Essence STX (ASUS) sound card (https://www.asus.com/us/Sound-Cards/Xonar_Essence_STX/) at a sampling rate of 44,100 Hz. Stimuli were delivered through Sennheiser HD 280 Pro headphones at 70 dB SPL. In the second control experiment, in which auditory and visual stimuli arose from the same spatial location, auditory stimuli were delivered through a TEAC two-way speaker system (http://www.teac-audio.eu/en/products/ls-101hr-129505.html).
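
At 44,100 Hz, a 16 ms burst spans 0.016 × 44,100 = 705.6 samples, which rounds to 706. The sketch below generates such a burst, assuming uniformly distributed samples in [−1, 1]; the text does not state the noise distribution, and calibration to 70 dB SPL depends on the hardware chain and is not modelled. The `white_noise_burst` helper is hypothetical.

```python
import random

SAMPLE_RATE_HZ = 44_100
BURST_S = 0.016

def white_noise_burst(duration_s=BURST_S, fs=SAMPLE_RATE_HZ, seed=None):
    """Uniform white-noise samples in [-1, 1] for one brief burst.

    Output level calibration (e.g. to 70 dB SPL) is not modelled here.
    """
    n = round(duration_s * fs)  # 0.016 s * 44100 Hz = 705.6 -> 706 samples
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]
```

Per the Tactile subsection, the same kind of 16 ms audio burst, amplified, also drove the tactile stimulator.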

Tactile

Tactile stimuli were produced by amplifying the sound card’s ‘audio-out’ voltage (LP-2020A + Lepai Tripath Class-T Hi-Fi Audio Mini Amplifier) to drive a miniature electromagnetic solenoid-type stimulator (Dancer Design Tactor, http://www.dancerdesign.co.uk/products/tactor.html). Driven by brief (16 ms) audio bursts of white noise, the Tactor delivered taps to the index finger of the left hand. The Tactor was enclosed within a fabric occluder to eliminate possible auditory cues.

Responses

Subjects were required to reproduce a given sensory rate by tapping with their right forefinger on a piezoelectric transducer (https://www.amazon.co.uk/Piezo-electric-disk-transducer-15mm/dp/B01K8X9E5K). The transducer’s voltage output was recorded through the sound card’s ‘audio in’ and analysed in MATLAB to extract the average temporal frequency of tapping. The transducer was enclosed in a sound-dampening environment and hidden from the subject’s view. To further eliminate auditory feedback, white noise was played through the headphones throughout the tapping response phase.
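
The text does not describe how the average tapping frequency was extracted from the recording. A minimal sketch, assuming simple threshold detection of tap onsets with a refractory period, is shown below; `tap_rate_hz`, its threshold and the 100 ms refractory default are hypothetical choices, and the rate is taken as the reciprocal of the mean inter-tap interval.

```python
def tap_rate_hz(signal, fs, threshold, refractory_s=0.1):
    """Estimate the mean tapping rate (Hz) from a sampled transducer trace.

    A tap onset is the first sample whose absolute value reaches `threshold`
    at least `refractory_s` seconds after the previous onset, so the ringing
    within a single tap is counted once.
    """
    onsets = []
    last = None
    for i, v in enumerate(signal):
        if abs(v) >= threshold and (last is None or (i - last) / fs >= refractory_s):
            onsets.append(i)
            last = i
    if len(onsets) < 2:
        raise ValueError("need at least two taps to estimate a rate")
    # Mean inter-tap interval in seconds over all detected taps.
    mean_interval_s = (onsets[-1] - onsets[0]) / fs / (len(onsets) - 1)
    return 1.0 / mean_interval_s
```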

Procedure

Each trial began with an adaptation period (8–10 seconds) at a pre-chosen temporal frequency (one of seven logarithmically spaced steps between 1.06 Hz and 8.46 Hz). This was followed by a test phase (2–2.5 seconds) of 3 Hz stimulation, after which subjects reproduced the perceived rate of the test phase by tapping on the piezoelectric transducer for approximately 5 seconds. Subjects began responding when they heard the white-noise mask start, so that any auditory cue from tapping was masked (Fig. 7).
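
The seven adapting rates can be generated as a geometric sequence between the stated endpoints. Under that assumption the step ratio is (8.46/1.06)^(1/6) ≈ 1.41, roughly half an octave, and the middle value falls close to the 3 Hz test rate. The sketch also builds an illustrative onset schedule for one trial; drawing phase durations uniformly within the reported ranges and starting the test immediately after adaptation are assumptions, since the text gives only the ranges. Both helpers are hypothetical.

```python
import math
import random

def adaptor_frequencies(lo_hz=1.06, hi_hz=8.46, n=7):
    """n logarithmically spaced adapting rates from lo_hz to hi_hz inclusive."""
    ratio = (hi_hz / lo_hz) ** (1 / (n - 1))  # constant step ratio, about 1.41 here
    return [lo_hz * ratio ** k for k in range(n)]

def trial_onsets(adapt_hz, test_hz=3.0, rng=None):
    """Stimulus onset times (s) for one illustrative trial.

    Assumptions: adaptation lasts 8-10 s and the test 2-2.5 s, each drawn
    uniformly; the test train starts as soon as the adaptation phase ends.
    """
    rng = rng or random.Random()
    adapt_dur = rng.uniform(8.0, 10.0)
    test_dur = rng.uniform(2.0, 2.5)
    # Keep every onset k / f that falls strictly inside its phase.
    adapt = [k / adapt_hz for k in range(math.ceil(adapt_dur * adapt_hz))]
    test = [adapt_dur + k / test_hz for k in range(math.ceil(test_dur * test_hz))]
    return adapt, test
```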

Schematic demonstrating the three phases of each trial (see text for details). Response phase drawing accessed from www.iconsmind.com.

After every 5 trials, the mean tapping rate across their response phases was displayed on the computer monitor and recorded. A break of at least 3 minutes was then taken before the next run, which used a different adapting frequency and adapt/test pairing; this ensured that no adaptation carried over from one run to the next. Each adapting temporal frequency was repeated 5 times for each adapt/test pairing, giving a mean and standard error of the mean. The order of testing conditions was randomised. In addition, a control experiment was conducted using the audio-visual pairing, in which the modality of the test phase was unknown to the observer on any trial.
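
The summary statistics can be sketched as below, assuming that each run's five response phases are averaged into one run mean and that the standard error is taken across the five repeated run means (sample standard deviation divided by √n). The text does not state whether the standard error was computed over runs or over individual trials, so this is an assumption, and both helper names are hypothetical.

```python
import math
import statistics

def run_mean(trial_rates_hz):
    """Mean reproduced rate over the trials of one run (five per run in the text)."""
    return statistics.mean(trial_rates_hz)

def mean_and_sem(run_means):
    """Mean and standard error of the mean across repeated runs.

    Uses the sample standard deviation (n - 1 denominator) divided by sqrt(n).
    """
    n = len(run_means)
    if n < 2:
        raise ValueError("need at least two runs")
    return statistics.mean(run_means), statistics.stdev(run_means) / math.sqrt(n)
```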

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

References

Gibbon, J., Malapani, C., Dale, C. L. & Gallistel, C. Toward a neurobiology of temporal cognition: Advances and challenges. Current Opinion in Neurobiology 7, 170–184 (1997).

Treisman, M. Temporal discrimination and the indifference interval: Implications for a model of the “internal clock”. Psychological Monographs: General and Applied 77, 1–31 (1963).

Danckert, J. A. & Allman, A. A. Time flies when you’re having fun: Temporal estimation and the experience of boredom. Brain and Cognition 59, 236–245 (2005).

Ivry, R. B. The representation of temporal information in perception and motor control. Current Opinion in Neurobiology 6, 851–857 (1996).

Heron, J. et al. Duration channels mediate human time perception. Proceedings of the Royal Society of London B: Biological Sciences 279, 690–698 (2012).

Grondin, S., Roussel, M. E., Gamache, P. L., Roy, M. & Ouellet, B. The structure of sensory events and the accuracy of time judgments. Perception 34, 45–58 (2005).

Drake, C. & Botte, M. C. Tempo sensitivity in auditory sequences: Evidence for a multiple-look model. Perception & Psychophysics 54, 277–286 (1993).

Penney, T. B., Gibbon, J. & Meck, W. H. Differential effects of auditory and visual signals on clock speed and temporal memory. Journal of Experimental Psychology: Human Perception and Performance 26, 1770–1787 (2000).

Wearden, J. H., Edwards, H., Fakhri, M. & Percival, A. Why “sounds are judged longer than lights”: Application of a model of the internal clock in humans. Quarterly Journal of Experimental Psychology: Section B 51, 97–120 (1998).

Roach, N. W., Heron, J., Whitaker, D. & McGraw, P. V. Asynchrony adaptation reveals neural population code for audio-visual timing. Proceedings of the Royal Society B: Biological Sciences 278, 1314–1322 (2010).

Ivry, R. B. & Schlerf, J. E. Dedicated and intrinsic models of time perception. Trends in Cognitive Sciences 12, 273–280 (2008).

Merchant, H., Harrington, D. L. & Meck, W. H. Neural basis of the perception and estimation of time. Annual Review of Neuroscience 36, 313–336 (2013).

Bruno, A. & Cicchini, G. M. Multiple channels of visual time perception. Current Opinion in Behavioral Sciences 8, 131–139 (2016).

Murai, Y., Whitaker, D. & Yotsumoto, Y. The centralized and distributed nature of adaptation-induced misjudgments of time. Current Opinion in Behavioral Sciences 8, 117–123 (2016).

Recanzone, G. H. Auditory influences on visual temporal rate perception. Journal of Neurophysiology 89, 1078–1093 (2003).

Shipley, T. Auditory flutter-driving of visual flicker. Science 145, 1328–1330 (1964).

Walker, J. T., Irion, A. L. & Gordon, D. G. Simple and contingent aftereffects of perceived duration in vision and audition. Perception & Psychophysics 29, 475–486 (1981).

Becker, M. W. & Rasmussen, I. P. The rhythm aftereffect: Support for time sensitive neurons with broad overlapping tuning curves. Brain and Cognition 64, 274–281 (2007).

Levitan, C. A., Ban, Y. H. A., Stiles, N. R. B. & Shimojo, S. Rate perception adapts across the senses: Evidence for a unified timing mechanism. Scientific Reports 5, 8857 (2015).

Chen, L. & Zhou, X. Fast transfer of crossmodal time interval training. Experimental Brain Research 232, 1855–1864 (2014).

Welch, R. B., DuttonHurt, L. D. & Warren, D. H. Contributions of audition and vision to temporal rate perception. Perception & Psychophysics 39, 294–300 (1986).

Li, B., Yuan, X. & Huang, X. The aftereffect of perceived duration is contingent on auditory frequency but not visual orientation. Scientific Reports 5, 10124 (2015).

van Wassenhove, V., Buonomano, D. V., Shimojo, S. & Shams, L. Distortions of subjective time perception within and across senses. PLoS ONE 3, e1437 (2008).

Blakemore, C. T. & Campbell, F. On the existence of neurones in the human visual system selectively sensitive to the orientation and size of retinal images. Journal of Physiology 203, 237–260 (1969).

Barlow, H. B. & Hill, R. M. Evidence for a physiological explanation of the waterfall phenomenon and figural after-effects. Nature 200, 1345–1347 (1963).

Maffei, L., Fiorentini, A. & Bisti, S. Neural correlate of perceptual adaptation to gratings. Science 182, 1036–1038 (1973).

Thompson, P. & Burr, D. Visual aftereffects. Current Biology 19, R11–R14 (2009).

Grondin, S. & Rousseau, R. Judging the relative duration of multimodal short empty time intervals. Perception & Psychophysics 49, 245–256 (1991).

Grondin, S. Overloading temporal memory. Journal of Experimental Psychology: Human Perception and Performance 31, 869–879 (2005).

Hawken, M. J., Shapley, R. M. & Grosof, D. H. Temporal-frequency selectivity in monkey visual cortex. Visual Neuroscience 13, 477–492 (1996).

Mendelson, J. R. & Cynader, M. S. Sensitivity of cat primary auditory cortex (AI) neurons to the direction and rate of frequency modulation. Brain Research 327, 331–335 (1985).

Smith, R. A. Studies of temporal frequency adaptation in visual contrast sensitivity. Journal of Physiology 216, 531–552 (1971).

Kay, R. H. & Matthews, D. R. On the existence in human auditory pathways of channels selectively tuned to the modulation present in frequency-modulated tones. Journal of Physiology 225, 657–677 (1972).

Teki, S., Grube, M., Kumar, S. & Griffiths, T. D. Distinct neural substrates of duration-based and beat-based auditory timing. Journal of Neuroscience 31, 3805–3812 (2011).

Hayashi, M. J. et al. Time adaptation shows duration selectivity in the human parietal cortex. PLoS Biology 13, e1002262 (2015).

Karmarkar, U. R. & Buonomano, D. V. Temporal specificity of perceptual learning in an auditory discrimination task. Learning & Memory 10, 141–147 (2003).

Wright, B. A., Buonomano, D. V., Mahncke, H. W. & Merzenich, M. M. Learning and generalization of auditory temporal–interval discrimination in humans. Journal of Neuroscience 17, 3956–3963 (1997).

Johnston, A., Arnold, D. H. & Nishida, S. Spatially localized distortions of event time. Current Biology 16, 472–479 (2006).

Nagarajan, S. S., Blake, D. T., Wright, B. A., Byl, N. & Merzenich, M. M. Practice-related improvements in somatosensory interval discrimination are temporally specific but generalize across skin location, hemisphere, and modality. Journal of Neuroscience 18, 1559–1570 (1998).

Burr, D., Tozzi, A. & Morrone, M. C. Neural mechanisms for timing visual events are spatially selective in real-world coordinates. Nature Neuroscience 10, 423–425 (2007).

Meredith, M. A. & Stein, B. E. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Research 365, 350–354 (1986).

García-Pérez, M. A. Does time ever fly or slow down? The difficult interpretation of psychophysical data on time perception. Frontiers in Human Neuroscience 8, 415 (2014).

Stauffer, C. C., Haldemann, J., Troche, S. J. & Rammsayer, T. H. Auditory and visual temporal sensitivity: Evidence for a hierarchical structure of modality-specific and modality-independent levels of temporal information processing. Psychological Research 76, 20–31 (2012).

Filippopoulos, P. C., Hallworth, P., Lee, S. & Wearden, J. H. Interference between auditory and visual duration judgements suggests a common code for time. Psychological Research 77, 708–715 (2013).

Yuasa, K. & Yotsumoto, Y. Opposite distortions in interval timing perception for visual and auditory stimuli with temporal modulations. PLoS ONE 10, e0135646 (2015).

Roach, N. W., Heron, J. & McGraw, P. V. Resolving multisensory conflict: A strategy for balancing the costs and benefits of audio-visual integration. Proceedings of the Royal Society B: Biological Sciences 273, 2159–2168 (2006).

Author information

Contributions

A.M., J.H., P.V.M., N.W.R. & D.W. contributed to the concept and design of the study; A.M. & D.W. collected and analysed the data; A.M., J.H., P.V.M., N.W.R. & D.W. contributed to preparation of the manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Motala, A., Heron, J., McGraw, P.V. et al. Rate after-effects fail to transfer cross-modally: Evidence for distributed sensory timing mechanisms. Sci Rep 8, 924 (2018). https://doi.org/10.1038/s41598-018-19218-z
