Abstract

We report optical super-resolution microscopy based on an array of dielectric microspheres. A dielectric microsphere placed on a sample is known to generate a virtual image with a resolution better than the optical diffraction limit. However, a limitation of this type of super-resolution microscopy is its restricted field-of-view, which is essentially limited to the central area of the microsphere-generated image. We overcame this limitation by scanning a micro-fabricated array of ordered microspheres over the sample: a customized algorithm moved a motorized stage step by step, while the microscope-mounted camera took a picture at every step. Finally, we stitched the extracted central parts of the virtual images, which showed super-resolution, into a mosaic image. We demonstrated 130 nm lateral resolution (~λ/4) and a scanned surface area of 5 × 10⁵ µm² using a two-by-one array of barium titanate glass microspheres in an oil-immersion environment. Our findings may serve as a basis for widespread applications of affordable optical super-resolution microscopy.

Introduction

In 2004, the discovery of the photonic nanojet phenomenon generated by a lens-like dielectric micro-object1 opened a new chapter in optical microscopy. Placing such a micro-object over a sample allowed imaging with a resolution better than that predicted by Abbe’s law of diffraction2. Since then, many groups have investigated the nature of the photonic nanojet phenomenon3,4,5,6,7,8. It is now generally accepted9 that the photonic nanojet is a narrow, high-intensity light beam that extends over a length of ~2λ from the micro-object that creates it, with a full-width-at-half-maximum (FWHM) of ~λ/3. In parallel with investigations aimed at understanding and imaging the phenomenon itself, different micro-objects capable of creating such a nanojet for imaging purposes were studied10,11, the most common being nano-scale lenses2,12, polymer microdroplets13 and dielectric spheres in both the nanometer14 and the micrometer range15,16. Subsequently, the research focus shifted towards applications17,18,19,20,21,22,23,24,25,26, but in these papers super-resolution was typically achieved over a very small area, comparable to the size of the micro-object used. More recently, it was demonstrated that the super-resolved area can be extended by various scanning methods27,28,29,30,31, including the use of dielectric microspheres mounted in an atomic force microscopy (AFM) setup32,33. This technique extended the field-of-view of the imaging system, but it also carried the drawbacks of an AFM system, namely an extreme sensitivity to vibration that requires a dedicated setup. Here, we have engineered the scanning principle and the super-resolution imaging capability of an array of dielectric microspheres into a robust experimental setup, thereby upgrading a normal optical microscope to a super-resolution one.

Experimental setup

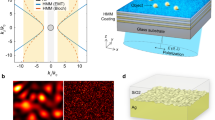

The working principle of our imaging system is explained in Fig. 1. As shown in Fig. 1a, if a dielectric microsphere with refractive index $n_{\mathrm{sphere}}$, surrounded by a medium with refractive index $n_{\mathrm{medium}}$, is placed underneath the objective of a light microscope, a photonic nanojet is created right under the microsphere. The position, shape and size of this photonic nanojet are determined by the wavelength of the illumination light (visible light in our study, 400 nm < λ < 700 nm, with a peak at λ = 600 nm), the shape of the micro-object (spherical in our case) and the ratio between the two refractive indices ($n_{\mathrm{sphere}}$ = 1.95 for the barium titanate glass (BTG) microspheres used and $n_{\mathrm{medium}}$ = 1.56 for immersion oil). These parameters must be tuned to generate a photonic nanojet that exits exactly at the surface of the microsphere, which enables imaging of sub-diffraction-limited features of the sample34,35. When a sample is placed just underneath the microsphere and the incident light is reflected back from the sample (Fig. 1b), the modulation pattern of the sample is transferred through the microsphere towards the microscope objective. However, besides the formation of the photonic nanojet, the near-field interaction with the sample underneath the microsphere also matters, so that the microsphere-based imaging resolution may become sample-dependent36. Therefore, in certain cases the resolution of such a system may be only slightly better than the diffraction limit. Because the microsphere also acts as a lens, geometrical optics predicts that a virtual image is formed about half a microsphere diameter below the sample plane. This virtual image plane can be brought into the focus of the microscope objective. An image recorded while observing this plane contains information about the sub-diffraction features and therefore enables super-resolution microscopy.
The major drawback of this type of imaging is that the field-of-view is limited to the size of the central part of the microsphere. To overcome this limitation, we established a scanning mechanism with which the imaged area can be extended to the full field-of-view of the microscope objective.
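As a small worked example of the numbers above, the index ratio is 1.95/1.56 = 1.25, and with the 38–45 µm BTG spheres described in the next section, the d/2 rule places the virtual image plane roughly 19–22.5 µm below the sample. The sketch only evaluates these two quantities; the helper names are hypothetical, and the d/2 depth is the approximation stated in the text, not a full optical model of the nanojet.

```python
# Refractive indices quoted in the text
N_SPHERE = 1.95  # barium titanate glass (BTG) microspheres
N_MEDIUM = 1.56  # immersion oil


def relative_index(n_sphere: float = N_SPHERE, n_medium: float = N_MEDIUM) -> float:
    """Ratio of sphere to medium refractive index, one of the nanojet-shaping parameters."""
    return n_sphere / n_medium


def virtual_image_depth_um(diameter_um: float) -> float:
    """Approximate depth of the virtual image plane below the sample (~d/2, as stated in the text)."""
    return diameter_um / 2.0


if __name__ == "__main__":
    print(f"relative index: {relative_index():.2f}")
    for d in (38.0, 45.0):  # diameter range of the BTG spheres used in this work
        print(f"d = {d:.0f} um -> virtual image ~{virtual_image_depth_um(d):.1f} um below the sample")
```

The depth range is roughly how far the objective focus has to be lowered below the sample plane to record the virtual image.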

Figure 1. Operation principle of the imaging system. (a) Excitation: light approaches through the microscope objective towards the dielectric microsphere with diameter d. In the absence of an object in the light path, the dielectric microsphere generates a photonic nanojet on its shadow side, as shown by the finite-element simulation of the electric field in the inset. (b) If an object is present underneath the microsphere, reflection occurs: the simulation shows reflection from a sample consisting of a modulated pattern of eleven lines and spaces with dimensions below the diffraction limit. The modulation is preserved, and the near-field information of the sub-diffraction sample is propagated into the far field within the microsphere. At the same time, the microsphere acts as a lens and generates a virtual image at a distance d/2 below the sample plane, as illustrated by the green cone.

Our setup consists of two major components, as shown in Fig. 2. The first is a metal frame, composed of 30 mm cage system parts (Thorlabs, Germany) including an SM1Z Z-axis translator, which is fixed to the microscope objective (Fig. 2a,b). An in-house designed and fabricated aluminum element is attached to the inner thread of the SM1Z translator (Fig. 2c); its purpose is to fix a glass-based microsphere array chip onto the objective (Fig. 2d). The Z-axis translator between the objective revolver and the chip holder enables focus adjustment along the Z-axis, which is needed to position the chip in the correct focal plane prior to imaging, following our previous study on this topic37. The second component, the array chip, was fabricated in a clean room using negative-photoresist-based photolithography (Fig. 2e). The chip substrate measures 22 × 22 × 0.15 mm³ and is made of D 263 M borosilicate glass (Menzel-Gläser, Germany). After oxygen plasma cleaning, it was coated with a 20 µm thick layer of SU8 3025 (MicroChem, USA). The glass-chromium mask used for the lithography defined an array of 40 µm diameter wells with a pitch of 60 µm. After development, a 4 µL droplet of Norland Optical Adhesive 63 (NOA63, Norland Products, USA) was spread on top of the well array. The chip was then placed in a vacuum chamber for 20 minutes to remove the air bubbles trapped in the wells of the SU8 layer. Subsequently, we placed 38–45 µm diameter BTG microspheres (Cospheric, USA) on the NOA63 layer and swept them over the surface until they settled in the wells. Excess microspheres were removed to prevent them from acting as spacers during imaging. Finally, the chip was exposed to UV light up to an accumulated dose of 4.5 J/cm², as required for curing the NOA63 glue.
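The well geometry is what makes the spheres protrude from the chip surface (Fig. 2d). The sketch below estimates the height of a sphere's apex above the SU8 surface from the dimensions given above (40 µm wells, 20 µm SU8, 38–45 µm spheres). It is an idealized, hypothetical helper: it assumes a rigid sphere and a sharp-edged cylindrical well, and it ignores the NOA63 layer, which in reality also fills the wells and lowers the spheres.

```python
import math


def protrusion_um(d_sphere, well_diameter=40.0, well_depth=20.0):
    """Height (um) of a sphere's apex above the SU8 surface for an idealized cylindrical well.

    Hypothetical helper: ignores the NOA63 layer and assumes a rigid sphere and a sharp rim.
    """
    r = d_sphere / 2.0
    a = well_diameter / 2.0
    if d_sphere <= well_diameter:
        # The sphere passes through the opening and rests on the well bottom.
        return d_sphere - well_depth
    # Otherwise it rests on the circular rim; its centre lies sqrt(r^2 - a^2) above the rim plane.
    centre_height = math.sqrt(r * r - a * a)
    sag = r - centre_height  # depth to which the sphere dips below the rim plane
    if sag >= well_depth:
        # It would touch the bottom before the rim (not the case for the dimensions used here).
        return d_sphere - well_depth
    return r + centre_height


for d in (38.0, 40.0, 45.0):
    print(f"d = {d:.0f} um -> apex {protrusion_um(d):.1f} um above the SU8 surface")
```

Under these idealized assumptions, the apex height varies by roughly 15 µm across the nominal 38–45 µm size range, which is one way to see why the size distribution matters when several spheres must touch the sample at the same time.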

Figure 2. Imaging setup. (a) Schematic of the optical microscope with our imaging setup attached. 1: Microscope revolver. 2: Optical cage (black) with spacers (metallic rods). 3: Microscope objective. 4: Aluminum adapter. 5: Immersion oil. 6: Chip template. 7: BTG microsphere. 8: Sample to be imaged. (b) Photograph of the optical cage system and the Z-axis translator. (c) Photograph of the bottom part of the optical cage with a custom aluminum holder, attached to the Z-axis translator, that clamps a glass microsphere array chip. (d) Photograph of the fabricated microsphere array chip, showing that the microspheres emerge from the surface plane of the chip. (e) Schematic of the fabrication process of the microsphere array chip. 1: A cover glass chip is treated with oxygen plasma. 2: A 20 µm SU8 photoresist layer is spin-coated on the chip. 3: An array of microwells is patterned into the SU8 by photolithography. 4: A layer of NOA63 optical glue is placed on top, trapping air bubbles in the microwells. 5: The air bubbles are removed by vacuum treatment. 6: BTG microspheres are placed into the microwells and fixed by UV curing of the optical glue.

We placed our sample on a motorized microscope stage (Axio Imager M2m with HAL100 halogen light source, Zeiss, Germany), which was controlled by our custom algorithm. The scanning protocol was as follows. After an initial focus setting along the Z-axis, the microscope-attached camera (AxioCam MRm, Zeiss, Germany) took a picture while focused on the virtual image plane of the sample. To make a single scanning step, the stage moved 5 µm downwards along the Z-axis to prevent scratching the sample, took one step along either the X- or the Y-axis, with the in-plane step size set by the user before scanning, and finally moved back to the original Z-axis position, ready for taking the next picture. This process was repeated until the pre-set sample area was fully scanned. Afterwards, the saved pictures were cropped to the region of interest (ROI) and stitched together to create a large field-of-view, super-resolution image.

Our stitching algorithm overlapped the image regions just outside the ROIs, so as to maximize the number of useful super-resolution pixels. To achieve this, we used the fact that the scanning followed a predefined path and that the useful area of each photograph was always at the same position, so that its size could be calculated in advance. We therefore did not need conventional stitching algorithms, in which the edges of the tiles are compared pixel-by-pixel; such algorithms were in any case unsuitable here, since each ROI was confined to the central part of a microsphere. During the experiments we used a 63×, oil immersion, NA = 1.4 objective, which limited the field-of-view to a 2 × 2 array of microspheres, so that there were up to four ROIs per picture.
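The scan-and-stitch procedure described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the serpentine scan order, the command tuples, the `frames` dictionary and the mapping of stage +X/+Y to image columns/rows are all hypothetical. What it does take from the text is the lower, step and raise sequence between pictures, and the fact that, because the ROI sits at a fixed position in every frame and the stage path is known, each tile's place in the mosaic can be computed in advance instead of being found by pixel-wise edge matching.

```python
import numpy as np


def serpentine_grid(n_x, n_y):
    """Yield (ix, iy) grid indices row by row, reversing direction on every other row (assumed path)."""
    for iy in range(n_y):
        cols = range(n_x) if iy % 2 == 0 else range(n_x - 1, -1, -1)
        for ix in cols:
            yield ix, iy


def move_sequence(n_x, n_y, step_um, lift_um=5.0):
    """Expand the grid into stage commands: lower the stage, step in X or Y, raise it back, capture."""
    commands, prev = [], None
    for ix, iy in serpentine_grid(n_x, n_y):
        if prev is not None:
            dx = (ix - prev[0]) * step_um
            dy = (iy - prev[1]) * step_um
            commands += [("z", -lift_um), ("xy", dx, dy), ("z", lift_um)]
        commands.append(("capture", ix, iy))
        prev = (ix, iy)
    return commands


def stitch(frames, roi, step_px):
    """Place the fixed ROI of each frame at a position computed from its grid index.

    frames:  dict {(ix, iy): 2D image} for one microsphere
    roi:     (row0, col0, height, width) of the super-resolved region, identical in every frame
    step_px: stage step expressed in image pixels; should not exceed the ROI size, or gaps appear
    """
    r0, c0, h, w = roi
    n_x = 1 + max(ix for ix, _ in frames)
    n_y = 1 + max(iy for _, iy in frames)
    mosaic = np.zeros(((n_y - 1) * step_px + h, (n_x - 1) * step_px + w))
    for (ix, iy), image in frames.items():
        y, x = iy * step_px, ix * step_px
        # Overlapping pixels are simply overwritten by the later tile; no feature matching is needed.
        mosaic[y:y + h, x:x + w] = image[r0:r0 + h, c0:c0 + w]
    return mosaic
```

For example, `move_sequence(3, 2, 5.0)` yields six `capture` commands separated by five lower/step/raise triplets. For two spheres, the second mosaic would be placed at an additional offset equal to the array pitch (60 µm) converted to pixels. Overwriting in the overlap regions is the simplest choice; averaging or blending would soften visible tile edges at extra computational cost.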

Results and Discussion

In Fig. 3a, one can see a typical image captured from the virtual image plane. In principle, up to four microspheres could fit into the field-of-view of the camera. In practice, because of the size distribution of the microspheres and because the sensitivity of the detection depends on the local distance between the sample and the microsphere surface, we chose to image with two microspheres simultaneously. Super-resolution imaging is enabled in the central region of each of the two microspheres (marked with green dashed circles in Fig. 3a). The yellow and blue rectangles mark the ROIs that are extracted for the final image. During imaging, the microspheres occupy fixed positions in the pictures, while the sample is scanned (Fig. 3b). Figure 3c shows a composed image of a silicon-based microscope calibration target (MetroBoost, USA). The calibration target contains L-shaped line-space patterns with 130, 140 and 150 nm line widths, from left to right. The patterns are repeated in every row; therefore, the patterns in row nine (marked R9 S) are nominally the same as those in row eight (marked R8 S). One can observe the individual tiles used for stitching (yellow and blue correspond to the two microspheres) and the overlap between the two scanned areas. This overlap results from the pre-set scanning parameters: the step size was 5 µm along both the X- and Y-axes, while the full scanned area was 100 µm × 100 µm. Since the pitch of the microspheres is 60 µm, the two scanned areas overlap over a width of 100 µm − 60 µm = 40 µm. These results illustrate the two major advantages of scanning with multiple microspheres: the scanning time can be reduced or the imaged area can be increased, in both cases by a factor proportional to the number of microspheres used.
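The overlap width quoted above follows from the stage travel and the array pitch. The sketch below, a hypothetical helper named `scan_budget`, reproduces the 40 µm overlap and the resulting 160 µm width imaged along the row of two spheres, and counts the pictures of a square scan. Counting travel/step + 1 positions per axis (both end points imaged) is our assumption; the text does not state it for this scan.

```python
def scan_budget(travel_um, step_um, pitch_um, n_spheres):
    """Pictures, overlap and imaged width for a square scan with a row of microspheres.

    travel_um: stage travel along each axis (100 um in Fig. 3)
    step_um:   in-plane step size (5 um in Fig. 3)
    pitch_um:  centre-to-centre distance of neighbouring spheres (60 um)
    """
    positions_per_axis = round(travel_um / step_um) + 1  # assumes both end points are imaged
    n_pictures = positions_per_axis ** 2
    overlap_um = max(0.0, float(travel_um - pitch_um))  # width scanned by two neighbouring spheres
    # Total width imaged along the row of spheres (union of the strips; disjoint if pitch > travel)
    width_um = n_spheres * travel_um - (n_spheres - 1) * overlap_um
    return n_pictures, overlap_um, width_um


print(scan_budget(100, 5, 60, 2))  # -> (441, 40.0, 160.0)
```

The full proportional gain is reached only when the strips scanned by neighbouring spheres do not overlap; in the Fig. 3 configuration, the 40 µm overlap reduces the imaged width from 200 µm to 160 µm for the same number of pictures.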

Figure 3. Demonstration of the microsphere scanning process. (a) Two microspheres of the array that are in the field-of-view of the microscope-mounted camera generate virtual images of the sample. The central regions of the microsphere-generated images (marked with green circles) show super-resolution. At every step of the scanning process, the inner squares (yellow for the first and blue for the second microsphere) are retained for generating the final image. (b) Schematic of the step-by-step scanning, carried out using a motorized stage controlled by an in-house developed scanning algorithm. (c) Final image at the end of the process. First, individual tiles, two of which are indicated by white squares, are extracted from the centers of the microsphere images and stitched together to form a mosaic image. Next, the mosaic images of the individual microspheres, indicated by yellow and blue tiles, are combined. Since the pitch of the microsphere array is smaller than the width of the scanned area, the yellow tiles from the first microsphere and the blue tiles from the second microsphere overlap. Scale bar 5 µm.

To determine the imaging performance of our system, we measured the modulation of line-space patterns with different lateral dimensions, as shown in Fig. 4. Figure 4a is a typical image of a 140 nm line-space pattern, showing that the lines are better resolved towards the center of the microsphere and that the image becomes less sharp with increasing radial distance r. In these experiments, we placed a 524–565 nm band-pass filter (AHF, Germany) in the optical path and quantified the imaging performance by measuring the variation of the pixel intensity along seven dashed lines, each 2 µm (22 pixels) wide. The line-space pattern was repositioned so that the complete range 0 ≤ r ≤ 12 µm could be studied. The extracted pixel gray values were normalized, taking the lighter region outside the line pattern as 100% and the darkest pixel intensity of the micro-patterned structures as 0%. The peak-to-valley amplitude of each resulting curve was taken as the modulation. The graphs of Fig. 4b were obtained by placing line-space patterns with 260 nm, 280 nm and 300 nm pitch, respectively, in the center of a single microsphere. Seven measurement lines were placed along the horizontal axis of the images (shown in Fig. 4a), starting from the center in 2 µm increments. Because the microsphere surface curves away from the flat sample, the local distance between the sample and the microsphere surface grows with r, and the modulation decreases rapidly as this distance increases, in good agreement with theoretical calculations of the evanescent behavior of sub-diffraction-sized nanostructures37. In Fig. 4c, the modulation obtained with the microscope objective with (yellow) and without (purple) the microsphere array is compared for line-space patterns with pitches in the 240–400 nm range. The data show a significant gain from the microsphere when the lateral dimension of the sample features is below 180 nm, i.e. precisely in the sub-diffraction regime.
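The normalization and modulation measurement described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: it averages the gray values across the width of a measurement band (22 pixels for 2 µm), maps the bright background to 1 and the darkest value of the averaged profile to 0, and then, for every dark line enclosed by two bright peaks, takes the peak-to-valley difference and averages over the lines. Using the mean of the two neighbouring peaks and a plain local-extremum detection are our assumptions; real, noisy profiles would need smoothing or a prominence threshold.

```python
import numpy as np


def normalize_profile(band, background):
    """Average a measurement band across its width and rescale it.

    band:       2D gray values; rows span the band width (e.g. 22 px for 2 um),
                columns run along the measurement line.
    background: mean gray value of the bright area next to the pattern (mapped to 1).
    The darkest value of the averaged profile is mapped to 0.
    """
    profile = np.asarray(band, dtype=float).mean(axis=0)
    darkest = profile.min()
    return (profile - darkest) / (background - darkest)


def line_modulation(profile):
    """Mean peak-to-valley depth of the dark lines in a normalized profile."""
    p = np.asarray(profile, dtype=float)
    i = np.arange(1, len(p) - 1)
    minima = i[(p[i] < p[i - 1]) & (p[i] <= p[i + 1])]
    maxima = i[(p[i] > p[i - 1]) & (p[i] >= p[i + 1])]
    depths = []
    for m in minima:
        left, right = maxima[maxima < m], maxima[maxima > m]
        if left.size and right.size:  # only valleys enclosed by two bright peaks
            peak = 0.5 * (p[left[-1]] + p[right[0]])
            depths.append(peak - p[m])
    return float(np.mean(depths)) if depths else 0.0
```

Applied to the bands at r = 0, 2, …, 12 µm, this yields one modulation value per band, i.e. one point of a curve of the kind shown in Fig. 4b.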

Figure 4. Analysis of the modulation of the imaged line-space patterns. (a) Micrograph of an eleven-line, 140 nm-wide line-space pattern imaged by a microsphere, showing that the modulation pattern is best resolved towards the center of the microsphere and is attenuated with increasing radial distance r. (b) Modulation as a function of r for 260 nm, 280 nm and 300 nm pitch, measured along the dashed lines indicated in (a). (c) Modulation of differently sized line-space patterns at r = 0. Circles show the values measured with the microsphere array chip present; squares show the performance of the microscope objective without the array chip. Points are averages over the eleven lines at a given r; error bars: ±SD.

To benchmark the performance of our approach, we compared the composed picture with the image taken by the microscope camera without a microsphere (Fig. 5). Figure 5a shows the line-space patterns of row nine of the sample of Fig. 3 in the upper part and those of row eight in the lower part. The white dashed rectangle shows a single field-of-view of the microscope-mounted camera. For a fair comparison with our composed image, we took two photographs with the microscope and stitched them together. The insets show enlarged images of the line-space patterns, clearly indicating that the microscope cannot resolve features below the diffraction limit. To further support this statement, we drew five-pixel-wide measurement lines on the photographs (blue lines for the patterns of row nine, orange lines for those of row eight) and evaluated the pixel gray values along them. We positioned these lines at exactly the same spot for every pattern, except for the 150 nm wide lines, where they are shifted up by a few micrometers because of a damaged region in the pattern of row eight. To exclude effects of possible variations in the brightness of the light source, we normalized all pixel gray values, resulting in a modulation pattern as discussed for Fig. 4. Therefore, on the plots in the center of Fig. 5a, a value of zero corresponds to the darkest pixel and a value of one to the lightest region next to the line-space pattern, i.e. the down-pointing peaks correspond to the dark lines in the pattern. The peaks are distinguishable in the rightmost plot (150 nm wide lines), but they disappear as the line width decreases to 140 nm (center plot) and finally to 130 nm (leftmost plot).

Figure 5. Resolution analysis of the image. (a) Picture of the sample taken with the microscope objective without the microsphere array. Since the field-of-view of the camera is smaller than the demonstrated scanned surface of the sample, two pictures were stitched together, one of which is marked by a white dashed rectangle. Nanostructures within the upper field-of-view are identical to those in the lower field-of-view and consist of 130 nm, 140 nm and 150 nm L-shaped line-space patterns, from left to right. Black squares are zooms on these patterns. The optical signals are evaluated along the five-pixel-wide horizontal lines (blue for the upper part and orange for the lower part) and plotted in the center as normalized gray values (a black pixel generating zero signal and a white pixel generating a signal of one). The modulation patterns of the 150 nm nanostructures are resolvable, whereas no modulation is observed for the 130 nm and 140 nm nanostructures. (b) Picture of the same sample as in (a) and Fig. 3c, taken with the microscope objective using the microsphere array. Yellow tiles were recorded by the first microsphere and blue ones by the second microsphere. Insets show zooms on the recorded modulation patterns. Optical signals are evaluated along the same five-pixel-wide lines as in (a). The modulation plots in the center, generated with the same method as in (a), show that all nanostructures are resolvable. Scale bar 5 µm.

In Fig. 5b, we show the image of the same area, but this time created with our microsphere array. Yellow and blue colors indicate which part of the picture was created by the first and which by the second microsphere of the array. The insets show enlarged stitched images of the line-space patterns, with the positions of our measurement lines marked. Even by eye, the lines, independently of their size, are clearly more visible than in Fig. 5a. The gray values along the measurement lines were evaluated with the same method as described in the previous paragraph. The plots in the center of Fig. 5b show that the peaks corresponding to the dark lines on the sample are sharper and that the modulation amplitude is larger. Importantly, the modulation did not change significantly between the widest (150 nm) and the narrowest (130 nm) lines, i.e. our imaging system resolved features down to 130 nm in width using a halogen light source.

Finally, to demonstrate the robustness and full potential of our imaging technique, Fig. 6 shows super-resolution imaging of a large surface area (0.5 mm × 1.0 mm). During scanning, 20 301 individual pictures were collected using our custom algorithm, resulting in ~60 GB of raw data. Our stitching algorithm composed the final image, which had ~175 MPixel and a file size of ~530 MB. Due to the shear stress generated during scanning, a slight systematic tilt occurred in the picture, which was corrected by our image reconstruction algorithm. Our algorithm could not compensate for the shadow effect at the tile edges, so the stitching quality could be improved, e.g. by using seamless stitching in ImageJ; it is important to note, however, that our solution completed the stitching ~100× faster than the ImageJ algorithm. As the insets in Fig. 6 show, the 130 nm lateral resolution was preserved over the whole scanned surface.
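The figures quoted for the large-area scan are mutually consistent, as the following back-of-the-envelope check shows. Assuming the same 5 µm step as in Fig. 3 (not stated for this scan) and counting both end points, a 500 µm × 1000 µm travel gives 101 × 201 = 20 301 positions, exactly the number of pictures collected. The raw data then amount to about 3 MB per picture, and the final mosaic to about 3 bytes per pixel, which would fit an uncompressed 24-bit image; the file format is our inference and the helper name is hypothetical.

```python
# Values quoted in the text for the 0.5 mm x 1.0 mm scan
N_PICTURES = 20_301
RAW_BYTES = 60e9       # ~60 GB of raw data
MOSAIC_PIXELS = 175e6  # ~175 MPixel final image
MOSAIC_BYTES = 530e6   # ~530 MB final file


def grid_positions(width_um, height_um, step_um):
    """Number of stage positions for a raster that includes both end points (assumption)."""
    return (round(width_um / step_um) + 1) * (round(height_um / step_um) + 1)


if __name__ == "__main__":
    print(grid_positions(500, 1000, 5))  # 5 um step assumed, as in Fig. 3
    print(f"{RAW_BYTES / N_PICTURES / 1e6:.2f} MB per picture")
    print(f"{MOSAIC_BYTES / MOSAIC_PIXELS:.2f} bytes per mosaic pixel")
```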

Conclusion

We demonstrated an advanced implementation of an optical super-resolution microscopy technique using an ordered array of dielectric microspheres. We explained the imaging principle in terms of the photonic nanojet generated upon illumination of a microsphere and the near-field interaction between the sample and the microsphere. We showed that some of the field-of-view limitations of previously published microsphere-based super-resolution imaging techniques can be overcome by implementing a scanning and stitching process. Our simple system achieved a lateral resolution of 240 nm pitch in static mode. Furthermore, a resolution of 260 nm pitch was demonstrated together with a total field-of-view much larger than that of the microscope-mounted camera. To show the robustness of the system, a surface scan of 5 × 10⁵ µm² was presented. We believe that even larger areas can be imaged, since our process has no intrinsic limits. In the future, the scanning system could be optimized for mass production with the help of 3D printing, as this technique enables very flexible microfabrication of customized parts, as shown earlier31. We therefore hope that our findings will help move dielectric microsphere-based optical super-resolution microscopy beyond the proof-of-concept stage towards fully operational real-life applications.

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding author upon reasonable request.

References

Chen, Z., Taflove, A. & Backman, V. Photonic nanojet enhancement of backscattering of light by nanoparticles: a potential novel visible-light ultramicroscopy technique. Opt. Express 12, 1214–1220 (2004).

Lee, J. Y. et al. Near-field focusing and magnification through self-assembled nanoscale spherical lenses. Nature 460, 498–501 (2009).

Itagi, A. V. & Challener, W. A. Optics of photonic nanojets. JOSA A 22, 2847–2858 (2005).

Heifetz, A. et al. Experimental confirmation of backscattering enhancement induced by a photonic jet. Appl. Phys. Lett. 89, 221118 (2006).

Heifetz, A., Kong, S.-C., Sahakian, A. V., Taflove, A. & Backman, V. Photonic Nanojets. J. Comput. Theor. Nanosci. 6, 1979–1992 (2009).

Devilez, A., Stout, B., Bonod, N. & Popov, E. Spectral analysis of three-dimensional photonic jets. Opt. Express 16, 14200–14212 (2008).

Geints, Y. E., Panina, E. K. & Zemlyanov, A. A. Control over parameters of photonic nanojets of dielectric microspheres. Opt. Commun. 283, 4775–4781 (2010).

Ruiz, C. M. & Simpson, J. J. Detection of embedded ultra-subwavelength-thin dielectric features using elongated photonic nanojets. Opt. Express 18, 16805–16812 (2010).

Yang, H., Trouillon, R., Huszka, G. & Gijs, M. A. M. Super-resolution imaging of a dielectric microsphere is governed by the waist of its photonic nanojet. Nano Lett. 4862–4870 (2016).

Ferrand, P. et al. Direct imaging of photonic nanojets. Opt. Express 16, 6930–6940 (2008).

Zhao, L. & Ong, C. K. Direct observation of photonic jets and corresponding backscattering enhancement at microwave frequencies. J. Appl. Phys. 105, 123512 (2009).

Kim, M.-S. et al. Advanced optical characterization of micro solid immersion lens. In (eds Gorecki, C., Asundi, A. K. & Osten, W.) 84300E (2012).

Kang, D. et al. Shape-Controllable Microlens Arrays via Direct Transfer of Photocurable Polymer Droplets. Adv. Mater. 24, 1709–1715 (2012).

Fan, W., Yan, B., Wang, Z. & Wu, L. Three-dimensional all-dielectric metamaterial solid immersion lens for subwavelength imaging at visible frequencies. Sci. Adv. 2, e1600901 (2016).

Devilez, A. et al. Three-dimensional subwavelength confinement of light with dielectric microspheres. Opt. Express 17, 2089–2094 (2009).

Darafsheh, A., Limberopoulos, N. I., Derov, J. S., Walker, D. E. & Astratov, V. N. Advantages of microsphere-assisted super-resolution imaging technique over solid immersion lens and confocal microscopies. Appl. Phys. Lett. 104, 061117 (2014).

Wang, Z. et al. Optical virtual imaging at 50 nm lateral resolution with a white-light nanoscope. Nat. Commun. 2, 218 (2011).

Darafsheh, A., Walsh, G. F., Dal Negro, L. & Astratov, V. N. Optical super-resolution by high-index liquid-immersed microspheres. Appl. Phys. Lett. 101, 141128 (2012).

Li, L., Guo, W., Yan, Y., Lee, S. & Wang, T. Label-free super-resolution imaging of adenoviruses by submerged microsphere optical nanoscopy. Light Sci. Appl. 2, e104 (2013).

Yang, H., Moullan, N., Auwerx, J. & Gijs, M. A. M. Fluorescence Imaging: Super-Resolution Biological Microscopy Using Virtual Imaging by a Microsphere Nanoscope (Small 9/2014). Small 10, 1876 (2014).

Yan, Y. et al. Microsphere-Coupled Scanning Laser Confocal Nanoscope for Sub-Diffraction-Limited Imaging at 25 nm Lateral Resolution in the Visible Spectrum. ACS Nano 8, 1809–1816 (2014).

Darafsheh, A., Guardiola, C., Palovcak, A., Finlay, J. C. & Cárabe, A. Optical super-resolution imaging by high-index microspheres embedded in elastomers. Opt. Lett. 40, 5 (2015).

Yang, H. & Gijs, M. A. M. Optical microscopy using a glass microsphere for metrology of sub-wavelength nanostructures. Microelectron. Eng. 143, 86–90 (2015).

Darafsheh, A., Guardiola, C., Finlay, J., Cárabe, A. & Nihalani, D. Simple super-resolution biological imaging. SPIE Newsroom (2015).

Darafsheh, A. et al. Biological super-resolution imaging by using novel microsphere-embedded coverslips. In (eds Cartwright, A. N. & Nicolau, D. V.) 933705 (2015).

Wang, F. et al. Three-Dimensional Super-Resolution Morphology by Near-Field Assisted White-Light Interferometry. Sci. Rep. 6, 24703 (2016).

Krivitsky, L. A., Wang, J. J., Wang, Z. & Luk’yanchuk, B. Locomotion of microspheres for super-resolution imaging. Sci. Rep. 3 (2013).

Li, Y., Shi, Z., Shuai, S. & Wang, L. Widefield scanning imaging with optical super-resolution. J. Mod. Opt. 62, 1193–1197 (2015).

Li, J. et al. Swimming Microrobot Optical Nanoscopy. Nano Lett. 16, 6604–6609 (2016).

Du, B., Ye, Y.-H., Hou, J., Guo, M. & Wang, T. Sub-wavelength image stitching with removable microsphere-embedded thin film. Appl. Phys. A 122 (2016).

Yan, B. et al. Superlensing microscope objective lens. Appl. Opt. 56, 3142 (2017).

Wang, F. et al. Scanning superlens microscopy for non-invasive large field-of-view visible light nanoscale imaging. Nat. Commun. 7, 13748 (2016).

Duocastella, M. et al. Combination of scanning probe technology with photonic nanojets. Sci. Rep. 7 (2017).

Darafsheh, A. Influence of the background medium on imaging performance of microsphere-assisted super-resolution microscopy. Opt. Lett. 42, 735 (2017).

Darafsheh, A. & Bollinger, D. Systematic study of the characteristics of the photonic nanojets formed by dielectric microcylinders. Opt. Commun. 402, 270–275 (2017).

Luk’yanchuk, B. S., Paniagua-Domínguez, R., Minin, I., Minin, O. & Wang, Z. Refractive index less than two: photonic nanojets yesterday, today and tomorrow [Invited]. Opt. Mater. Express 7, 1820 (2017).

Huszka, G., Yang, H. & Gijs, M. A. M. Microsphere-based super-resolution scanning optical microscope. Opt. Express 25, 15079 (2017).

Acknowledgements

The authors thank the Swiss National Science Foundation for funding this project (Grant 200021–152948).

Author information

Contributions

G.H. built the setup, conducted the imaging experiments and prepared Figures 1–6. G.H. and M.A.M.G. wrote the manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huszka, G., Gijs, M.A.M. Turning a normal microscope into a super-resolution instrument using a scanning microlens array. Sci Rep 8, 601 (2018). https://doi.org/10.1038/s41598-017-19039-6

DOI: https://doi.org/10.1038/s41598-017-19039-6
