Abstract

Single-pixel imaging is an indirect imaging technique which utilizes simplified optical hardware and advanced computational methods. It offers novel solutions for hyper-spectral imaging, polarimetric imaging, three-dimensional imaging, holographic imaging, optical encryption and imaging through scattering media. The main limitations for its use come from relatively high measurement and reconstruction times. In this paper we propose to reduce the required signal acquisition time by using a novel sampling scheme based on a random selection of Morlet wavelets convolved with white noise. While such functions exhibit random properties, they are locally determined by Morlet wavelet parameters. The proposed method is equivalent to random sampling of the properly selected part of the feature space, which maps the measured images accurately both in the spatial and spatial frequency domains. We compare both numerically and experimentally the image quality obtained with our sampling protocol against widely-used sampling with Walsh-Hadamard or noiselet functions. The results show considerable improvement over the former methods, enabling single-pixel imaging at low compression rates on the order of a few percent.

Similar content being viewed by others

Introduction

High resolution detector arrays together with high quality optics constitute the most important parts of any classical camera. Nonetheless, these components, which in some cases tend to be very sophisticated and costly, are not indispensable elements of imaging systems. Simplifying the optoelectronic hardware of cameras is one of the reasons for the development of indirect imaging techniques. Single-pixel imaging1,2 is a technique which makes use of a single detector, such as a photodiode or photomultiplier, and utilizes spatial and temporal modulation of the optical signal to measure an indirect, compressed and encrypted representation of an image. Currently single-pixel cameras can not compete with the low-cost widely available cameras for the visible wavelength range, however their development offers new possibilities for hyperspectral maging3,4, polarimetric imaging5,6, holographic imaging4,7,8 THz imaging9, 3D imaging10,11,12 or imaging though scattering media13, to mention just some applications. Indirect imaging is mostly limited by the increased time of image acquisition and by the high computational requirements for image reconstruction after the measurement. The branch of mathematics known as compressive sensing1,14,15,16(CS) brings the tools needed to restore the image from an indirect lower dimensional measurement. The reconstruction problem of the full-dimensional image from such a compressive measurement is an ambiguous inverse problem consisting in solving an underdetermined system of linear equations.

Images measured by a single-pixel detector are modulated either with structured illumination or using a structured aperture within the detector. As a result, the detector captures a sequence of average intensities of the modulated image. Mathematically, this is a sequence of dot-products of the measured image X with some sampling functions ψ i which are used for modulation. Usually, the size of measurement is much smaller than the number of pixels of the image at full resolution. This may be seen as a way to capture an encoded and compressed representation of the image which is useful for transmission or storage, and at the same time to deal with the relatively low operation frequencies of current spatial light modulators. For instance, in this work we are using a state-of the art binary spatial light modulator with a maximum resolution of 1024 × 768 and the maximum frame rate of 22 kHz. A simple calculation shows that a full measurement with the dimension equal to the number of pixels would take more than half a minute and require 77 GB of memory to store the binary representation of the sampling functions ψ i , which is impractical. Fortunately, the information content of most real-world images is much lower than that theoretically possible to obtain at the same resolution. In other words, most images are well compressible, and an incomplete measurement may carry enough data to obtain an accurate reconstruction of the image at the original resolution.

Most widespread digital image compression methods are adaptive, which means that the compression algorithm is adjusted to the image contents. A different algorithm may be run on various segments of the image, the algorithm may detect constant parts of the image, and after representing the image in a wavelet or other basis, only the highest resulting coefficient are retained. In effect, digital compression algorithms are usually nonlinear, which is difficult to obtain with single-pixel imaging at the stage of image acquisition. If some a priori information is available on the measured image on top of its compressibility, it makes sense to include this information in the measurement method and to modify the sampling functions accordingly. For instance, a geometrical transformation of the measurement patterns could lead to a nonuniform rate of collecting information from various parts of the image, with the area of interest measured more accurately than the rest17. Another possibility is to select the subset of measurement patterns that belong to a given basis, for instance consisting of Walsh-Hadamard functions, not in a random way but rather according to their expected similarity with the image18. A dynamic adaptive choice of the sampling patterns may lead to a significant decrease of the size of the measurement19,20. The theory of compressive sensing suggests to use sampling functions which have the smallest coherence with a basis, in which the image has a sparse representation21. In simple words, most images are well compressible in the wavelet or cosine basis, thus one should use sampling functions which can not be compressed in these bases. Random sampling is a universal choice. For practical reasons, Walsh-Hadamard or noiselet22,23 functions are usually used instead, since they are discrete, simple to generate, and a respective representation of the image can be calculated with a fast algorithm, which facilitates image reconstruction. Binary sampling has been also recently proposed for the Fourier basis24. In our approach, we develop random sampling functions which sample a specific part of the feature space that we expect to be important for representing a broad class of images.

Morlet wavelet based nonergodic random sampling

Incoherent sampling is based on patterns dissimilar to image contents. On the other hand, a sparse wavelet representation of the image could be also found rapidly by probing the image directly with wavelet functions, if the most probable elements of the wavelet representation are known beforehand. What we propose here, is to combine these two contradictory lines of reasoning into a novel sampling scheme which is both random and based on a wavelet representation at the same time. We propose to apply a novel kind of sampling, equivalent to random sampling in the feature space. A feature space is built out of vectors, whose elements correspond to specific features of images. Simple features may be associated with spatial and frequency contents of an image. For instance, a feature space may be constructed using Gabor filters which are defined as Gaussian functions modulated with a linear phase dependence. A two-dimensional Gabor filter f(x,y) has the following form25,

where N is a normalization constant such that |f | = 1. A feature vector is constructed out of a set of dot-products of the image X with Gabor filters \({X}_{{x}_{0},{y}_{0},a,{u}_{0},{v}_{0}}=\langle X,{f}_{{x}_{0},{y}_{0},a,{u}_{0},{v}_{0}}\rangle \) where the parameters x 0, y 0 are related to probing a certain location of the image, parameter a determines the characteristic scale of the feature, and u 0, v 0 select the part of the probed spatial spectrum. Gabor functions allow for probing images in the spatial domain and in the frequency domain at the same time with the highest possible resolution25. In fact, the Fourier representation of the Gabor function is also a Gabor function, and both functions optimize the uncertainty relation for the two-dimensional Fourier transform. In other words, it is not possible to construct narrower probing functions (with smaller variances) in the spatial and frequency domains at the same time. A zero-mean and normalized Gabor filter is known as the Morlet wavelet or Gabor wavelet. In a two-dimensional situation, a Morlet wavelet is equal to

where the constants κ and N assure that the wavelet function g is normalized |f | = 1 and has zero mean \(\bar{f}=0\). Parameters σ, n p , θ are related to the size of the Gaussian envelope, number of periods within the envelope, and the orientation of modulation. A feature vector is obtained by convolving the wavelets with the image \({X}_{{x}_{0},{y}_{0},\sigma ,{n}_{p},\theta }=X\ast {g}_{\sigma ,{n}_{p},\theta }\).

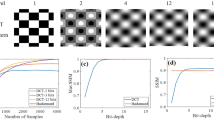

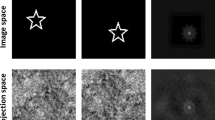

Taking a measurement with a single-pixel detector consists in probing the measured image X with a set of sampling functions ψ i . The set of measured dot-products Y i = 〈X, ψ i 〉 is later used to reconstruct the image X. Now, let us probe the feature space with random functions ψ i . Since \(\langle X\ast {g}_{\sigma ,{n}_{p},\theta },{\psi }_{i}\rangle =\langle X,{\psi }_{i}\ast {g}_{a,{u}_{0},{v}_{0}}\rangle \) instead of probing the feature space, we propose to probe the image X directly with modified sampling functions \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }(x,y)={g}_{\sigma ,{n}_{p},\theta }(x,y)\ast {\psi }_{i}(x,y)\). These sampling functions are obtained as a convolution of Morlet wavelets with realizations of white Gaussian noise. Some examples of sampling functions \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }\) and the procedure for their calculation are illustrated in (Fig. 1a,b).

The proposed Morlet wavelet based nonergodic random sampling functions. (a) Schematic of the generation method: the sampling function is calculated by convolving a Morlet wavelet (see Equation (2)) with white zero-mean Gaussian noise. (b) Examples of sampling functions with varying parameters σ, n p , θ. (c) Examples of binarized sampling functions.

Many interesting natural phenomena in physics, biology or artificial intelligence arise on the verge of random and deterministic behavior of a system. In this work, the proposed sampling functions \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }\) are calculated by convolving random functions ψ i and deterministic Morlet wavelets \({g}_{\sigma ,{n}_{p},\theta }\), and they clearly combine random and deterministic properties. Mathematically, they are zero-mean random matrices with multivariate Gaussian probability density distributions. Each has a distinct power spectrum, dependent on the wavelet used for its generation. As opposed to the wavelets, the sampling functions have a similar random shape at any location, which reflects the property of stationarity. However, their ensemble properties are distinct from the properties of every single realization. This means they do not satisfy the statistical property of ergodicity. This makes them a lot different from the uncorrelated random sampling often used in compressive sensing applications, as well as from the deterministic sampling with noiselet, Walsh-Hadamard or cosine functions as well as from localized functions such as wavelets.

The choice of parameters σ, n p , θ for the random wavelet-based sampling functions has an obvious influence on the quality of a compressive measurement. By decomposing an image database with 49 images of various content, we found that there exists a common parameter range that may be successfully used to represent most of the images with our sampling functions. This decomposition is not unique and finding an optimal decomposition is challenging from the computational viewpoint. Instead, we have used a simplified approach. We generated a large number of sampling functions with randomly selected parameters σ, n p , θ, placed them into a rectangular matrix, and decomposed every image into these sampling functions by left-multiplying the image by the pseudoinverse of this matrix. The Moore-Penrose pseudoinverse is a generalization of matrix inverse for rectangular and singular matrices, it finds application in image reconstruction from compressive measurements26, and further we also use it as one of the methods for image reconstruction in this paper. In this way, we found a typical distribution of coefficients σ, n p , θ required to represent a large variety of real-world images. A graphical representation of the decomposition projected onto the parameter space σ, n p is shown in Fig. 2. The technical details of the calculation method are included in the supplementary materials section S1 (online). Please note, that the units of σ are given in proportion to the image size, and 3σ = 512 pixels. As we can see, the interesting part of the feature space spanned with σ, n p is easily identified from this plot. A method with an adaptive choice of sampling functions could be further developed through a more in-depth analysis of how the decomposition varies for different images. However, in our case the range of obtained parameters was similar for the whole image database and the non-adaptive approach taken in this paper is certainly easier to implement, especially in experimental conditions.

Results

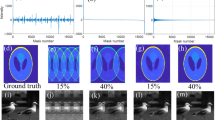

The practical benefit of using the proposed sampling functions becomes clear from a simple comparison with classical sampling methods based on randomly selected Walsh-Hadamard or noiselet functions. Figure 3 presents results of a simulated measurement from a single-pixel detector conducted at a low compression rate of 4% with the use of three different sampling protocols, including the proposed Morlet wavelet-based random functions. The simulation was performed for two 512 × 512 images with different properties, such as spatial frequency spectrum, contrast, or richness of details. The images have been reconstructed by minimizing the total variation norm (TV), which is one of the basic image reconstruction approaches used in CS. Figure 3b,f show the reconstructions from the measurements with Walsh-Hadamard functions, which do not at all resemble the original images. The average quality of the image recovery, measured by the PSNR criterion (see Methods section), for the Walsh-Hadamard sampling is on the level of 14 dB. This is not surprising at such a low compression rate, although noiselet sampling gives considerably better results (PSNR ≈ 22 dB). On the other hand, the Morlet wavelet-based sampling, also measured at the compression rate of only 4% allowed us to reconstruct high quality images with the PSNR of 25 dB on average (see Fig. 3). We would like to emphasize that these measurements are not adaptive, and the sampling functions are randomly selected from the previously estimated range of values of σ, n p , θ, as well as that images shown in (Fig. 3a,e) were not included in the training database. Moreover, the quality and resolution of these reconstructions are uniform within the entire image areas and it is not possible to notice any characteristic resolution, orientation or region of interest of the images, which is enhanced at the cost of some other property. We think that this impressive result comes from the efficient sampling of the properly selected part of the feature space which maps the images accurately both in the spatial and spatial frequency domains.

CS-based reconstruction of two 512 × 512 test images from a compressive measurement simulation at the compression rate of 4%; (a,e) original images; (b,f) reconstruction from a compressive measurement, where sampling was based on a randomly selected set of Walsh-Hadamard functions; (c,g) reconstruction from a compressive measurement, where sampling was based on a randomly selected set of discrete noiselet functions; (d,h) reconstruction from a compressive measurement, where sampling was based on the proposed Morlet wavelet-based random functions.

Morlet wavelet-based random sampling functions allow to reconstruct images from smaller number of measurements. However, these patterns are neither binary nor orthogonal. We will now discuss the practical consequences of these important limitations and show how to overcome them. In optical single-pixel detectors, light modulation is usually accomplished by using spatial light modulators, such as digital micromirror devices (DMD). The DMDs are capable of displaying binary patterns at a rate of over 20 kHz. Gray-scale modulation could be achieved with time multiplexing or would require using a different spatial modulator, for instance based on liquid crystals. However, both of these approaches offer much lower effective modulation rate. Therefore, we have decided to binarize the real-valued Morlet wavelet-based random sampling functions to retain the high measurement performance with a binary DMD. Some examples of binarized sampling functions are shown in (Fig. 1c). Although binary sampling functions are advantageous for displaying on DMD modulators, they however make the reconstruction of the image more problematic, since the CS algorithms require the basis of sampling functions to be orthogonal. While orthogonalization of a basis of continuous functions is rather straightforward, it cannot be obtained with the binarity constraint placed on the functions. To cope with this problem we use matrices precalculated with the singular value decomposition, which has been explained in the Methods section.

We have analyzed the influence of the binarization procedure on the quality of the reconstruction of compressively measured images, and we have found it negligible in the case of image recovery with the use of CS optimization method. The reconstruction quality, measured with the peak signal-to-noise (PSNR) criterion is shown in Fig. 4 as a function of compression. As an alternative to compressive sensing image reconstruction methods we also make use of a direct recovery from the precalculated pseudoinverse of the measurement matrix. The mathematical details of both kinds of methods are briefly summarized in the Methods section. Sample pseudoinverse reconstructions are included in the Supplementary Information (See Supplementary section S2, online). The CS-based recovery offers better quality of the image reconstruction than the pseudoinverse method, especially when binarized sampling patterns are used for image acquisition. However, it can not be obtained in real time. On the other hand, the pseudoinverse-based recovery with the precalculated pseudoinverse matrix requires only the evaluation of a single matrix-vector multiplication, and therefore is very fast. For images of the size of 256 × 256 sampled at the compression rate of a few percent, the reconstruction stage is faster than the measurement with the DMD, and may be also realized on-the-fly in parallel with the measurement.

Comparison of the image reconstruction quality (PSNR) as a function of compression rate for the fast pseudoinverse-based reconstruction (Pinv) with the slower CS-based reconstruction obtained by minimizing the total variation (TV). PSNR is averaged over a set of ten 512 × 512 test images, which have been sampled in a numerical simulation using both real and binary Morlet wavelet-based random sampling functions.

Our single-pixel camera set-up shown in Fig. 5 includes a state-of-the-art DMD with 1024 × 768 pixel resolution and maximum sampling rate of 22 kHz. Signal-to-noise ratio of the measurement is enhanced using the technique of differential photodetection23,27,28. The signals measured by two large-area photodiodes are then collected at the rate of 17 MS/s and digitized with 14-bit resolution using a PC oscilloscope.

A complete measurement at the resolution of 256 × 256 takes 3 s and enables us to reconstruct images with a high quality (see Fig. 6a), restricted only by the imperfections of the experimental set-up and the presence of optical and electronic noise. Compressive measurements take proportionally less time, however the choice of the sampling protocol is crucial for the feasibility of reconstructing the images with a reasonable quality. For instance, at the compression rate of 6%, sampling with a random set of Walsh-Hadamard functions allows to obtain a reconstruction with PSNR on the order of 18 dB on average (see Fig. 6b). At the same time, using the nonergodic Morlet wavelet-based random binary sampling functions leads to the reconstructions with PSNR of over 27 dB at the same compression rate (Fig. 6d), while the PSNR for sampling with discrete noiselet functions is on the order of 25.5 dB (Fig. 6c). Additionally, an image reconstruction obtained with the proposed sampling using pseudoinverse method yields an improved image quality over Walsh-Hadamard sampling with PSNR of 20 dB. While the noise level is increased in comparison with the iterative CS reconstruction, the pseudoinverse enables rapid image recovery by a single matrix multiplication. The sampling scheme proposed in this paper is clearly superior to both other methods also for different compression rates (see Fig. 7).

Experimental comparison of different binary sampling methods. The results are reconstructed at the resolution of 256 × 256 from (a,f) a complete measurement, or (b–e,g–j) from a compressive measurement conducted at the compression rate of 6%. (b–d,g–i) have been obtained with a CS algorithm and (e,j) with the pseudoinverse. Sampling with: (b,g) randomly selected Walsh-Hadamard patterns; (c,h) randomly selected noiselet patterns; (d,e,i,j) Morlet wavelet-based random binary patterns.

Experimental comparison of the image reconstruction quality (PSNR) as a function of compression rate for compressively sensed 256 × 256 image using Walsh-Hadamard, discrete noiselet, and Morlet wavelet correlated random sampling patterns. For the last case, a fast method of reconstruction based on pseudoinverse (Pinv) is also included, in addition to the CS-based recovery obtained by minimizing the total variation (TV).

Discussion

In this work, we proposed a novel random sampling method for single-pixel imaging. It utilizes nonergodic and stationary Morlet-wavelet-based random patterns that may also be binarized for use with binary spatial light modulators. These sampling functions are obtained as a convolution of Morlet wavelets with realizations of white Gaussian noise.

The proposed sampling functions have a rich spatial and frequency content. Individually, each is a realization of a multivariate Gaussian noise with a characteristic feature size, orientation and modulation frequency. Combined together, the sampling functions uniformly probe the feature space spanned over these image features. We have selected a subset of the feature space through the analysis of an image database.

We have tested this kind of sampling with a large variety of images, and the proposed method enabled us to reconstruct these images with a good quality at compression rates of just a few percent. Both theoretical and experimental results show that the proposed sampling is a lot better than random Walsh-Hadamard sampling. It is also better than noiselet sampling.

At such low compression rates it is still possible to use the direct and fast reconstruction method based on the pseudoinverse of the measurement matrix. A direct reconstruction based on a precalculated pseudoinverse matrix may be implemented on-the fly in parallel with image acquisition on a multicore processor. CS-based reconstruction with a better quality requires much longer reconstruction times on the order of seconds.

Methods

CS-based image recovery

We calculate the singular value decomposition of the measurement matrix and following use the total variation (TV) image recovery method implemented in the NESTA 29 numerical package. When the k × n measurement matrix M (with k < n, and the compression ratio denoted as CR = k/n) consists of rows with nonorthogonal sampling functions \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }(x,y)\), it is first decomposed with the singular-value decomposition (SVD) into a product of small k × k square orthogonal matrix U, diagonal k × k matrix D and rectangular semiorthogonal complex conjugate transposed n × k matrix V, i.e. M = U·D·V *. In effect the TV method operates on orthogonal matrices, as is required to reach convergence. The mathematical model of the measurement M·X = Y (where X is the captured image, M is the measurement matrix, and Y is the compressive measurement) is replaced with M′·X = Y′ (where M′ = V *, and Y′ = D −1·U *·Y), with a semiorthogonal matrix M′. SVD calculation is computationally costly and working on the full measurement matrix is memory demanding. Still, a laptop with a 8 GB memory is enough to precalculate the measurement matrix used by us in the optical experiment.

Pseudoinverse-based image recovery

The pseudoinverse of the measurement matrix is calculated through the singular value decomposition M + = V·D −1·U *. For the Morlet-based random sampling functions, the measurement matrix and its pseudoinverse have been precalculated before the measurement. In effect, image recovery has been based on a simple matrix-vector multiplication X ≈ M +·Y, which even for a very large matrix takes a fraction of a second to calculate. Since \({[{M}^{+}\cdot Y]}_{i}={\sum }_{j=1}^{k}{[{M}^{+}]}_{i,j}\cdot {Y}_{j}\), it is possible to calculate this expression on-the-fly during the measurement, as subsequent components Y j become available.

Selection and binarization of Morlet-based random sampling functions

selection of the parameters (n p , σ, θ) for the construction of the sampling functions \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }\) was random. Based on the results from Fig. 2, we assumed continuous normal probability distributions for n p and σ (\({\mu }_{{n}_{p}}=6\), \({\sigma }_{{n}_{p}}=4\), μ σ = 33%, σ σ = 16.5%). We assumed a uniform distribution for θ over [0, 2π]. The units of σ are such that 3σ is the width of the image (512 or 256 pixels). Additionally, a constant function \({{\rm{\Psi }}}_{1}^{bin}(x,y)\equiv 1\) has been always included as well to measure the mean value of the image.

The binarization is based on testing the sign of the real-valued functions with the Heaviside step function Θ H , i.e. \({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }^{bin}(x,y)={{\rm{\Theta }}}_{H}({{\rm{\Psi }}}_{\sigma ,{n}_{p},\theta }(x,y))\).

Differential photodetection

The two states of the DMD mirrors direct the reflected light at two different angles. Then two photodiodes measure both \({Y}_{i}=\langle X,{{\rm{\Psi }}}_{i}^{bin}\rangle \) and \({\bar{Y}}_{i}=\langle X,\,1-{{\rm{\Psi }}}_{i}^{bin}\rangle \) at the same time. Their difference is used to eliminate the influence of background light and intensity fluctuations of the source from the measurement Y.

Peak signal to noise ratio

we use a standard definition of the PSNR for the noisy image X and reference image R, \(PSNR(X,R)(dB)=10{\mathrm{log}}_{10}(max{(R)}^{2}/MSE(X,R))\), where MSE is the mean square error.

Discrete noiselet functions

Noiselet sampling22 is a lot less popular than Walsh-Hadamard sampling so we include the definition of discrete noiselets. Let H m denote a m×m Hadamard or noiselet transformation matrix whose rows consist of the basis functions. These matrices may be defined recursively as H 2m = H 2 ⊗ H m where ⊗ denotes the Kronecker product, H 1 = 1, and \({{\bf{H}}}_{2}=\frac{1}{\sqrt{2}}[\begin{array}{ll}1 & 1\\ 1 & -1\end{array}]\) for Walsh-Hadamard matrices, and \({{\bf{H}}}_{2}=\frac{1-i}{2}[\begin{array}{ll}1 & i\\ i & 1\end{array}]\) for noiselet matrices. Apart from the normalization, Hadamard basis consist of binary values {−1, 1}, while noiselet basis, depending on m, consist of values {exp(ipπ/4)} with p = 0, 2, 4, 6 when m is an odd power of 2, and p = 1, 3, 5, 7 when m is an even power of 2. In the first case the real and imaginary parts of noiselet functions are binary, and in the second the sum and difference of their real and imaginary parts are binary23. Two-dimensional transforms are obtained through the Kronecker product of one-dimensional transforms, i.e. \({H}_{m\times m}^{2D}={H}_{m}\otimes {H}_{m}\).

Data availability

The files generated in this work are available from the corresponding author on reasonable request.

References

Candes, E. J. & Wakin, M. B. An introduction to compressive sampling. IEEE Sign. Proc. Mag. 25, 21–30 (2008).

Duarte, M. et al. Single-pixel imaging via compressive sampling. IEEE Sign. Process. Mag. 25, 83–91 (2008).

Bian, L. et al. Multispectral imaging using a single bucket detector. Sci. Rep. 6, 24752 (2016).

Li, Z. et al. Efficient single-pixel multispectral imaging via non-mechanical spatio-spectral modulation. Sci. Rep. 7, 41435 (2016).

Duran, V., Clemente, P., Fernandez-Alonso, M., Tajahuerce, E. & Lancis, J. Single-pixel polarimetric imaging. Opt. Lett. 37, 824 (2012).

Soldevila, F. et al. Single-pixel polarimetric imaging spectrometer by compressive sensing. Applied Physics B 113, 551–558 (2013).

Li, J., Li, H., Li, J., Pan, Y. & Li, R. Compressive optical image encryption with two-step-only quadrature phase-shifting digital holography. Opt. Commun. 344, 166–171 (2015).

Ramachandran, P., Alex, Z. C. & Nelleri, A. Compressive Fresnel digital holography using Fresnelet based sparse representation. Opt. Commun. 340, 110–115 (2015).

Watts, C. M. et al. Terahertz compressive imaging with metamaterial spatial light modulators. Nature Photon. 8, 605–609 (2014).

Sun, B. et al. 3D computational imaging with single-pixel detectors. Science 340, 844 (2013).

Sun, M. et al. Single-pixel three-dimentional imaging with time-based depth resolution. Nat. Commun. 7, 12010 (2016).

Li, L., Xiao, W. & Jian, W. Three-dimensional imaging reconstruction algorithm of gated-viewing laser imaging with compressive sensing. Appl. Opt. 53, 7992 (2014).

Durán, V. et al. Compressive imaging in scattering media. Opt. Express 23, 14424–14433 (2015).

Baraniuk, R. Compressive sensing. IEEE Sign. Proc. Mag. 24, 118–121 (2007).

Romberg, J. Imaging via compressive sampling. IEEE Sign. Proc. Mag. 25, 14–20 (2008).

Eldar, Y. C. Sampling theory, Beyond Bandlimted Systems (Cambridge Univ. Press, 2015).

Phillips, D. et al. Adaptive foveated single-pixel imaging with dynamic supersampling. Sci. Adv. 3, e1601782 (2017).

Sun, M. J., Meng, L. T., Edgar, M. P., Padgett, M. J. & Radwell, N. A Russian dolls ordering of the hadamard basis for compressive single-pixel imaging. Sci. Rep. 7, 3464 (2017).

Huo, Y., He, H. & Chen, F. Compressive adaptive ghost imaging via sharing mechanism and fellow relationship. Appl. Opt. 55, 3356 (2016).

Aßmann, M. & Bayer, M. Compressive adaptive computational ghost imaging. Sci. Rep. 3, 1545 (2013).

Candes, E. & Romberg, J. Sparsity and incoherence in compressive sampling. Inverse Probl. 23, 969 (2007).

Coifman, R., Geshwind, F. & Meyer, Y. Noiselets. Appl. Comput. Harm. Anal. 27–44, 10 (2001).

Pastuszczak, A., Szczygieł, B., Mikołajczyk, M. & Kotyński, R. Efficient adaptation of complex-valued noiselet sensing matrices for compressed single-pixel imaging. Appl. Opt. 55, 5141–5148 (2016).

Zhang, Z., Wang, X., Zheng, G. & Zhong, J. Fast Fourier single-pixel imaging via binary illumination. Sci. Rep. 7, 12029 (2017).

Daugman, J. G. Uncertainity relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. J.Opt.Soc.Am.A 2, 1160 (1985).

Zhang, C., Guo, S., Cao, J., Guan, J. & Gao, F. Object reconstitution using pseudo-inverse for ghost imaging. Opt. Express 22, 30063–30073 (2014).

Sun, B., Welsh, S. S., Edgar, M. P., Shapiro, J. H. & Padgett, M. J. Normalized ghost imaging. Opt. Express 20, 16892 (2012).

Yu, W. et al. Complementary compressive imaging for the telescopic system. Sci. Rep. 4, 5834 (2014).

Becker, S., Bobin, J. & Candés, E. J. Nesta: a fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 4, 1–39 (2009).

Acknowledgements

The authors acknowledge financial support from the Polish National Science Center (grant No. UMO-2014/15/B/ST7/03107).

Author information

Authors and Affiliations

Contributions

K.C. introduced the Morlet wavelet-based sampling functions and conducted numerical analysis. R.K. proposed the idea of nonergodic random sampling. A.P. constructed the optical set-up and conducted the measurements. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Czajkowski, K.M., Pastuszczak, A. & Kotyński, R. Single-pixel imaging with Morlet wavelet correlated random patterns. Sci Rep 8, 466 (2018). https://doi.org/10.1038/s41598-017-18968-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-18968-6

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.