Abstract

In this paper, we propose a strategy for the control of mobile chaotic oscillators by adaptively rewiring connections between nearby agents with local information. In contrast to the dominant adaptive control schemes, where the coupling strength is adjusted continuously according to the states of the oscillators, our method does not require adaptation of the coupling strength. As the interaction structure generated by the proposed strategy is strongly related to unidirectional chains, we investigate the synchronization properties of such chains and reveal that there exists a certain coupling range in which the agents can be controlled regardless of the length of the chain. This feature enables the adaptive strategy to control the mobile oscillators regardless of their moving speed. Compared with existing adaptive control strategies for networked mobile agents, our proposed strategy is simpler to implement, since the resulting interaction networks remain unweighted at all times.

Introduction

Synchronization is a ubiquitous collective behavior, widely found in biological, physical, and social systems1,2,3,4,5,6. An issue of particular relevance is the control of networked systems, so as to steer them towards specific, desired collective functioning. When the topology of connections among the units of a network is fixed, various strategies can be adopted, such as: i) regulating the strength of the coupling between the graph’s components7,8,9,10,11,12,13, ii) applying a time delay14,15, iii) adaptively changing the nodes’ interaction16, iv) pinning specific sequences of the network’s components17,18,19. With the rapid development of mobile-device technology, however, the synchronization and control of mobile agents has become an issue of primary importance20,21,22. Conditions for the synchronization of networks with time-varying structure have been studied under various circumstances, including cases where the time-varying structures are always small-world23,24, where oscillators perform random walks on a networked infrastructure25,26,27, where oscillators move on a one-dimensional ring28, and where oscillators are allowed to jump randomly in space29. A study of the conditions for stable synchronization in fast-switching networks, where the network structure changes much faster than the unit dynamics, can be found in ref.30, while slow-switching networks are treated in ref.31. Further, the compound effect of different time scales between structure dynamics and unit dynamics has been studied in ref.32.

The problem of controlling networked mobile oscillators has attracted considerable research interest33,34,35,36,37. However, moving-agent systems are commonly characterized by spatial distribution, communication constraints, and limited sensing capacity, which make centralized control strategies generally too expensive or even infeasible to implement in practice. Therefore, many adaptive control strategies that require only local information, termed decentralized strategies, have been proposed in the last decade. For instance, an adaptive strategy has been designed for the consensus of networked moving agents38, and the case of input saturation has also been taken into consideration39. Because of the advantages of the decentralized approach, adaptive control strategies have also been extensively developed for networks with static structures40,41,42.

However, most existing adaptive control strategies are realized by continuously adjusting the coupling strength between agents, which we refer to here as the coupling adaptation strategy. In this paper, we propose a strategy in which agents adaptively choose neighboring agents to establish temporary connections without tuning the coupling strength, which we refer to as the connection adaptation strategy. We prove that the temporary networks generated by our strategy have the same synchronization stability as static networks with the same Laplacian spectrum. We further show that the resulting structure is closely related to unidirectional chains. Our study suggests that for such chains there exists a certain range of coupling strength within which the whole chain can be controlled regardless of its length. This feature further indicates that, in this coupling range, the networked mobile chaotic agents can be controlled regardless of their speed. Since the networks generated by our strategy are always unweighted, the connection adaptation strategy may be simpler to implement than coupling adaptation strategies.

Model and theory

We start from an ensemble of N moving agents networked on a unit planar space \(\Gamma ={[0,1]}^{2}\) (with periodic boundary conditions). Agent i moves with velocity \({{\bf{v}}}_{i}(t)=(v\,\cos \,{\theta }_{i},v\,\sin \,{\theta }_{i})\). The modulus v (equal for all agents) is constant, and the direction \({\theta }_{i}\) is drawn at each time step from the interval [−π, π) with uniform probability. The position \({y}_{i}(t)\) of agent i therefore evolves as \({y}_{i}(t+{\rm{\Delta }}t)={y}_{i}(t)+{{\bf{v}}}_{i}(t){\rm{\Delta }}t\), where Δt is the integration step size.
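The motion rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `move_agents`, the uniform initial placement, and the use of NumPy's random generator are not taken from the paper.

```python
import numpy as np

def move_agents(positions, v, dt, rng):
    """Advance all agents by one step of the random-direction walk on the unit torus."""
    n = positions.shape[0]
    # A fresh heading is drawn for every agent at every step, uniformly in [-pi, pi).
    theta = rng.uniform(-np.pi, np.pi, size=n)
    velocity = v * np.column_stack((np.cos(theta), np.sin(theta)))
    # Periodic boundary conditions: wrap the coordinates back into the unit square.
    return (positions + velocity * dt) % 1.0

rng = np.random.default_rng(0)
positions = rng.random((100, 2))   # N = 100 agents; uniform initial placement is assumed
new_positions = move_agents(positions, v=1.0, dt=0.001, rng=rng)
```

Each agent is displaced by exactly v Δt per step; only the heading is random, so larger v means the neighborhood of each agent is reshuffled more quickly.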

All agents carry an identical chaotic dynamics, described by \({\dot{{\bf{x}}}}^{i}={\bf{F}}({{\bf{x}}}^{i})\), with \({{\bf{x}}}^{i}\in {{\mathbb{R}}}^{m}\), i = 1, 2, …, N, the dot denoting the temporal derivative, and \({\bf{F}}:{{\mathbb{R}}}^{m}\to {{\mathbb{R}}}^{m}\). Each agent builds its connections based on the state and position information that it collects from other agents located within a neighborhood of radius r. The interaction structure is described by the unweighted elements of the (generically asymmetric) coupling matrix G(t), where \({g}_{ij}(t)=-1\) when agent i forms a directed connection with agent j, and \({g}_{ij}(t)=0\) otherwise. The diagonal elements \({g}_{ii}(t)=-{\sum }_{j\ne i}{g}_{ij}(t)\) guarantee the zero-row-sum property of G(t). The dynamics of each agent is ruled by

\[{\dot{{\bf{x}}}}^{i}={\bf{F}}({{\bf{x}}}^{i})-\sigma \sum _{j}{g}_{ij}(t)\,{\bf{H}}({{\bf{x}}}^{j}), \tag{1}\]

where σ is the coupling strength, and \({\bf{H}}:{{\mathbb{R}}}^{m}\to {{\mathbb{R}}}^{m}\) is a coupling function.

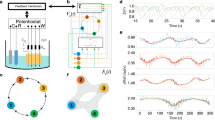

The purpose is to steer the dynamics of all agents towards a desired solution \({{\bf{x}}}^{1}={{\bf{x}}}^{2}=\cdots ={{\bf{x}}}^{N}={{\bf{x}}}^{S}\). To this aim, we first put a unit (carrying the desired state \({{\bf{x}}}^{S}\)) at an arbitrary position in the space. Such a unit (which can be regarded as a virtual agent) plays the role of a reference guide for all other agents, so that we refer to it in the following as the guide agent (GA). The position of the GA is fixed once and for all, and is known to all other agents. At each time step t, agent i chooses one of its neighbors (say agent j) to form a directed connection (so that \({g}_{ij}=-1\)). The chosen neighbor is the one that satisfies two conditions: i) it is the nearest one to the GA among all neighbors of agent i within a disk of radius r (if the GA is a neighbor of agent i, it will therefore be chosen); ii) the distance between the connected agent and the GA is smaller than that between agent i and the GA. The latter condition ensures that all formed connections point from the periphery towards the GA, and therefore that the interaction structure is acyclic. Further, if no agent in the disk satisfies the two conditions above, agent i expands its interaction radius until it finds a valid connection. The resulting structure is schematically sketched in Fig. 1. Note that, though the GA is located at the center of the square in Fig. 1, the position of the GA can be arbitrary.

Figure 1. The control scheme. For clarity, the guide agent (GA) is located at the center of the square. The gray circles indicate the agents and the dashed arrows the directed connections. Agent i only detects the state and position of other agents that are within the dashed circle of radius r. In the example, agent i builds a connection to agent j, since agent j is the neighbor with the smallest distance to the GA and is closer to the GA than agent i. The resulting structure is a directed tree, with each agent having one outgoing connection, converging from the periphery to the GA.
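The connection rule can be sketched as follows. This is a minimal illustration, not the authors' implementation: the helper names, the use of periodic (torus) distances for neighbor detection, and the geometric radius growth factor `growth` are assumptions, since the text only states that the radius is expanded until a valid connection is found.

```python
import numpy as np

def torus_distance(a, b):
    """Euclidean distance on the unit square with periodic boundaries (assumed metric)."""
    d = np.abs(a - b)
    d = np.minimum(d, 1.0 - d)
    return np.sqrt((d ** 2).sum(axis=-1))

def build_coupling_matrix(positions, ga_position, r, growth=1.5):
    """Return the (N+1)x(N+1) matrix G; index 0 is the GA, indices 1..N are the agents."""
    n = positions.shape[0]
    nodes = np.vstack((ga_position, positions))
    dist_to_ga = torus_distance(nodes, ga_position)
    G = np.zeros((n + 1, n + 1))
    for i in range(1, n + 1):
        dist_to_i = torus_distance(nodes, nodes[i])
        closer = dist_to_ga < dist_to_ga[i]      # condition ii); this also excludes i itself
        closer[0] = True                         # guard: the GA is always an admissible target
        radius = r
        while True:
            candidates = np.flatnonzero(closer & (dist_to_i <= radius))
            if candidates.size > 0:
                break
            radius *= growth                     # expand the radius (growth rate is assumed)
        j = candidates[np.argmin(dist_to_ga[candidates])]   # condition i)
        G[i, j] = -1.0
        G[i, i] = 1.0                            # enforces the zero-row-sum property
    return G

rng = np.random.default_rng(0)
positions = rng.random((100, 2))
G = build_coupling_matrix(positions, np.array([0.5, 0.5]), r=0.1)
```

Because every agent points to a node strictly closer to the GA, following the connections from any agent always terminates at the GA, which is why the structure is a directed tree. The row of the GA is left empty, since the GA receives no input.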

The resulting coupling matrix G(t) adaptively changes in time. After a proper relabeling of the agents [assigning the index 1 to the GA at all times, while giving smaller (larger) indices to agents closer to (further from) the GA], an orthogonal transformation M(t) can be defined, by means of which one gets a lower triangular matrix \({\bf{L}}(t)=\{{l}_{ij}(t)\}\in {{\mathbb{R}}}^{(N+1)\times (N+1)}\) with \({\bf{L}}(t)={{\bf{M}}}^{{\rm{T}}}(t){\bf{G}}(t){\bf{M}}(t)\) as follows,

\[{\bf{L}}(t)=\begin{pmatrix} 0 & 0 & \cdots & 0 \\ {l}_{21} & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ {l}_{N+1,1} & {l}_{N+1,2} & \cdots & 1 \end{pmatrix}, \tag{2}\]

where \({l}_{11}=0\) and \({l}_{ii}=1\) (i ≠ 1). The values of the elements \({l}_{ij}\) (i > j) [the lower part of L(t)] are either 0 or −1. Solving the related secular equation gives the spectrum of L(t) [and also of G(t)] as \({\lambda }_{1}=0\) and \({\lambda }_{2}=\cdots ={\lambda }_{N+1}=1\). The eigenvector of \({\lambda }_{1}\) corresponds to the synchronization manifold \({{\bf{x}}}^{S}\), while the other eigenvectors are associated with eigenmodes spanning the space transverse to the synchronization manifold. As all such eigenmodes always have the same eigenvalue, they form a unique subspace that is invariant in time. It can be proved that the condition for stable synchronization in this time-varying structure is the same as that for a static network with the same spectrum (see Methods for details on both slow- and fast-varying networks).
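The relabeling argument can be checked numerically on a small example. The sketch below is only illustrative: the distances `dist` are hypothetical random values, and each agent is wired to a randomly chosen node closer to the GA (a looser rule than the nearest-to-GA rule of the text, but one that preserves the property used in the argument).

```python
import numpy as np

rng = np.random.default_rng(1)
n = 8                                              # number of agents, GA excluded
# Hypothetical distances to the GA; node 0 is the GA itself.
dist = np.concatenate(([0.0], rng.random(n) + 0.1))

G = np.zeros((n + 1, n + 1))
for i in range(1, n + 1):
    closer = np.flatnonzero(dist < dist[i])        # admissible targets for agent i
    j = rng.choice(closer)
    G[i, j] = -1.0
    G[i, i] = 1.0

order = np.argsort(dist)                           # closer nodes receive smaller indices
M = np.eye(n + 1)[:, order]                        # permutation matrix, hence orthogonal
L = M.T @ G @ M

is_lower_triangular = np.allclose(L, np.tril(L))
spectrum = np.diag(L)                              # eigenvalues of a triangular matrix
```

The spectrum is read from the diagonal of L rather than from a numerical eigen-solver, because the eigenvalues of a triangular matrix are its diagonal entries and G is generally non-normal, so a general-purpose solver may return slightly perturbed values.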

For a time-independent coupling matrix, a necessary condition for synchronization is that the Master Stability Function (MSF) \({\rm{\Phi }}(\sigma {\lambda }_{i})\) be strictly negative in each transverse mode43. For our control scheme, where all transverse eigenvalues are equal to 1, the expectation is therefore that \({{\bf{x}}}^{S}\) is attained at those values of the coupling strength σ for which Φ(σ) < 0.

For the sake of illustration, let us now refer to the case of agents carrying the chaotic Rössler oscillator. The dynamics of each unit is therefore described by \({\dot{x}}_{1}^{i}=-({x}_{2}^{i}+{x}_{3}^{i})\), \({\dot{x}}_{2}^{i}={x}_{1}^{i}+a{x}_{2}^{i}\), \({\dot{x}}_{3}^{i}=b+{x}_{3}^{i}({x}_{1}^{i}-c)\), with \({{\bf{x}}}^{i}={({x}_{1}^{i},{x}_{2}^{i},{x}_{3}^{i})}^{{\rm{T}}}\), \(a=b=0.2\) and c = 7. The coupling function is \({\bf{H}}({\bf{x}})=({x}_{1},0,0)\), which realizes a linear coupling on the \({x}_{1}\) variable of the agents. The dynamics is integrated with a fixed integration time step Δt = 0.001. Unless otherwise specified, the network parameters are N = 100 and r = 0.1. The error function \({\delta }_{i}(t)=\frac{1}{3}(|{x}_{1}^{i}-{x}_{1}^{1}|+|{x}_{2}^{i}-{x}_{2}^{1}|+|{x}_{3}^{i}-{x}_{3}^{1}|)\) is monitored to evaluate the control performance on agent i. The quantities \(\delta (t)=\frac{1}{N}{\sum }_{i=2}^{N+1}{\delta }_{i}(t)\) (the average error over all the agents) and 〈δ〉 (the time average of δ(t) over the last \({10}^{5}\) integration steps) are evaluated after a suitable transient time, to quantify the global control performance.
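A minimal simulation sketch of this setup is given below. Several choices are assumptions rather than statements from the paper: the coupling is taken to enter as \(-\sigma {\sum }_{j}{g}_{ij}{\bf{H}}({{\bf{x}}}^{j})\) (the standard diffusive form consistent with the sign convention of G), a fourth-order Runge-Kutta integrator is used (the text gives only the step size), and the function names and initial conditions are hypothetical. Row 0 of the state array holds the GA, which corresponds to agent 1 in the error function above.

```python
import numpy as np

A, B, C = 0.2, 0.2, 7.0                      # Rössler parameters a, b, c from the text

def rossler(x):
    """Rössler vector field F applied row-wise; x has shape (n_nodes, 3)."""
    x1, x2, x3 = x[:, 0], x[:, 1], x[:, 2]
    return np.column_stack((-(x2 + x3), x1 + A * x2, B + x3 * (x1 - C)))

def rhs(x, G, sigma):
    """F(x^i) - sigma * sum_j g_ij H(x^j), with H(x) = (x_1, 0, 0) (assumed coupling form)."""
    dx = rossler(x)
    dx[:, 0] -= sigma * (G @ x[:, 0])
    return dx

def rk4_step(x, G, sigma, dt):
    """One classical Runge-Kutta step (the integrator choice is an assumption)."""
    k1 = rhs(x, G, sigma)
    k2 = rhs(x + 0.5 * dt * k1, G, sigma)
    k3 = rhs(x + 0.5 * dt * k2, G, sigma)
    k4 = rhs(x + dt * k3, G, sigma)
    return x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

def control_error(x):
    """delta_i for every agent (rows 1..N), measured against the GA in row 0."""
    return np.abs(x[1:] - x[0]).mean(axis=1)

# Static chain GA -> agent 1 -> agent 2; the GA row is empty because it receives no input.
G = np.array([[0.0, 0.0, 0.0],
              [-1.0, 1.0, 0.0],
              [0.0, -1.0, 1.0]])
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(3, 3))      # arbitrary initial states (assumed)
for _ in range(1000):
    x = rk4_step(x, G, sigma=1.0, dt=0.001)
delta_i = control_error(x)                   # one error value per agent
delta = delta_i.mean()                       # average error over the agents
```

The run above covers only one time unit and is meant to show the bookkeeping, not to reproduce the reported results, which require a long transient followed by averaging over the last \({10}^{5}\) steps.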

With such a choice of parameters (and of output function H), the Rössler system belongs to class III MSF3, i.e. Φ is negative within the range \([{\alpha }_{1},{\alpha }_{2}]\) (with \({\alpha }_{1}\simeq 0.2\) and \({\alpha }_{2}\simeq 4.3\)). Since all transverse eigenvalues are equal to 1, the necessary condition for stability of the synchronized solution \({{\bf{x}}}^{S}\) is therefore:

\[{\alpha }_{1} < \sigma < {\alpha }_{2}. \tag{3}\]

Results

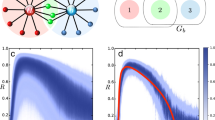

Figure 2 reports 〈δ〉 (the control performance) vs. σ for various values of the agents’ velocity. Remarkably [and in addition to the necessary condition of Eq. (3)], the agents’ velocity impacts the control performance: the range of σ allowing control shrinks monotonically with decreasing v (see the inset of Fig. 2, from which it is clear that the whole range allowed by condition (3) is attained only when v is large enough). A major conclusion is the existence of a range of the coupling strength (the non-vanishing range of σ at v = 0) where the system can be controlled for all agents’ velocities.

In order to better elucidate the control mechanism, we first concentrate on the case v = 0, where the structure of connections is static and can be regarded as the overlap of a group of unidirectional chains, converging towards the GA and bifurcating at some intermediate points. Since agents closer to the GA are not influenced by those further from it, the chains can be considered as independent of each other. Therefore, controlling the case v = 0 is tantamount to finding the conditions under which unidirectional chains can be controlled to \({{\bf{x}}}^{S}\). In the following, therefore, we focus on the performance of a typical unidirectional chain [sketched in Fig. 3(a)], for which the only structural factor is its length m, i.e. the number of agents to be controlled [indexed by their distance to the GA, and denoted by the gray circles in Fig. 3(a)]. Figure 3(b) reports the relation between the error function 〈δ〉 and m, at σ = 3.5. Remarkably, a threshold value \({m}_{{\rm{th}}}=5\) exists, such that the chain cannot be controlled for \(m > {m}_{{\rm{th}}}\). Notice that σ = 3.5 still satisfies the necessary condition (3), which means that the distance of an agent to the GA fundamentally affects the control performance. Figure 3(c) reports the behavior of \({m}_{{\rm{th}}}\) as a function of σ. The shape of the curve is reminiscent of the V-shape of the MSF for such coupled Rössler oscillators: \({m}_{{\rm{th}}}\) is negatively correlated with the MSF, and reaches 0 at \({\alpha }_{1}\) and \({\alpha }_{2}\). As shown in Fig. 3(b), for a given coupling strength σ the feasible length of a chain for control is restricted by the threshold \({m}_{{\rm{th}}}\); the control performance of a static network is therefore determined by its longest chain: if the length of the longest chain, \({l}_{{\rm{\max }}}\), is smaller (larger) than \({m}_{{\rm{th}}}(\sigma )\), the network can (cannot) be controlled. We verified that \({l}_{{\rm{\max }}}\sim 10\) (for our network with v = 0 and r = 0.1), and the corresponding range of σ satisfying \({m}_{{\rm{th}}}(\sigma ) > 10\) is marked by the horizontal green bar in Fig. 3(c). The range of coupling spanned by the green bar is consistent with that for the case of v = 0 in Fig. 2. We have also compared \({l}_{{\rm{\max }}}\) with \({m}_{{\rm{th}}}\) for different radii r, and good consistency appears in all cases (see Supplemental Information).

Figure 3. (a) Diagram of a unidirectional chain. Black arrows indicate directed links between the agents. As in Fig. 1, the black circle denotes the GA and the gray circles denote the agents. (b) Control error 〈δ〉 vs. the length m of the chain, for σ = 3.5. A threshold \({m}_{{\rm{th}}}\) is observed, above which 〈δ〉 diverges. (c) \({m}_{{\rm{th}}}\) vs. σ. The green bar indicates the feasible range of σ for \({m}_{{\rm{th}}}=10\). The dashed line is a guide to the eye. The red curve is, instead, the result of our analysis (see text).

Furthermore, it is seen in Fig. 3(c) that \({m}_{{\rm{th}}}(\sigma )\) diverges when σ is in the range [0.6, 2.2] (we have verified this divergence with extensive simulations where the length of a 1D chain is increased up to 1,000, and synchronization still occurs in that range). In order to gather more detailed information, we monitor the time evolution of \({\delta }_{i}(t)\), and find that \({\delta }_{i}(t)\) features intermittent spikes (see Fig. 4(a–c)), which are caused by sudden surges in the \({x}_{3}\) variable of the Rössler oscillator. In order to capture the main profile of the evolution, one can then use a smoothing process to remove the spikes. More precisely, a smoothed function \({\bar{\delta }}_{i}(t)=\frac{1}{2\tau }{\int }_{t-\tau }^{t+\tau }{\delta }_{i}(t^{\prime} )dt^{\prime} \) is defined. As the typical time interval spanned by the spikes is about 2 time units (i.e. \(2\times {10}^{3}{\rm{\Delta }}t\)), the spikes are eliminated when τ is larger than 2. Figure 4(d–f) shows the resulting profile after applying the smoothing function, for τ = 10. One observes that, before vanishing, \({\bar{\delta }}_{i}(t)\) features a characteristic hump, whose height \({\bar{\delta }}_{i}^{h}\) (indicated by the black dots) changes with the index i. For instance, for σ = 3, \({\bar{\delta }}_{i}^{h}\) increases with increasing i, leading eventually to a divergence of \({\bar{\delta }}_{i}\). For σ = 2, instead, \({\bar{\delta }}_{i}^{h}\) does not increase with i, suggesting that the whole chain can be controlled even when it is very long. These humps can be regarded as transient fluctuations that are delivered from one agent to the next. For some values of the coupling strength σ, these fluctuations are scaled up during the delivery. To accurately describe how \({\bar{\delta }}_{i}^{h}\) evolves with i, one then defines the ratio between the hump heights of two adjacent agents as \({\gamma }_{i}(\sigma )={\bar{\delta }}_{i+1}^{h}/{\bar{\delta }}_{i}^{h}\), and the average of such ratios along the chain as \(\gamma (\sigma )=\frac{1}{{m}_{{\rm{th}}}-1}{\sum }_{i=1}^{{m}_{{\rm{th}}}-1}{\gamma }_{i}(\sigma )\), which then measures the converging (or diverging) tendency of the whole chain.
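The smoothing and the hump ratios can be sketched as below, assuming the error signal is sampled on a uniform time grid. The function names and the sample hump heights are hypothetical, and the ratio is averaged over all supplied agents rather than over the first \({m}_{{\rm{th}}}-1\) pairs.

```python
import numpy as np

def smooth(delta, dt, tau):
    """Centered moving average over [t - tau, t + tau] of a uniformly sampled signal."""
    half = int(round(tau / dt))
    kernel = np.ones(2 * half + 1) / (2 * half + 1)
    # 'valid' drops the first and last `half` samples, where the window is incomplete.
    return np.convolve(delta, kernel, mode="valid")

def hump_ratios(hump_heights):
    """Return gamma_i = h_{i+1} / h_i for consecutive agents, and the mean of these ratios."""
    h = np.asarray(hump_heights, dtype=float)
    gamma_i = h[1:] / h[:-1]
    return gamma_i, gamma_i.mean()

# Hypothetical hump heights for three consecutive agents of a chain.
gamma_i, gamma = hump_ratios([1.0, 1.5, 2.25])
```

With the paper's values τ = 10 and Δt = 0.001, the averaging window would span 20,001 samples; the hump height of each agent can then be read off as the maximum of the smoothed signal before it vanishes.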

Figure 4. (Left panels) Time evolution of \({\delta }_{i}(t)\) (i = 1, …, 8) for σ = 2.0 (a), 2.2 (b), and 3.0 (c); (Right panels) Time evolution of \({\bar{\delta }}_{i}(t)\) (i = 1, …, 8) for σ = 2.0 (d), 2.2 (e), and 3.0 (f). Distinct humps appear before \({\bar{\delta }}_{i}(t)\) vanishes. Black dots mark the peaks of the humps of \({\bar{\delta }}_{i}(t)\) as a guide to the eye.

γ(σ) is reported in Fig. 5. It is seen that, when 0.6 < σ < 2.2, \(\gamma \simeq 1\), and therefore \({\bar{\delta }}_{i}^{h}\) does not scale up with increasing i. When instead σ is outside this range, \({\bar{\delta }}_{i}^{h}\) is amplified as the index i increases, and eventually exceeds a certain domain, leading to divergence of the state. Calling U the size of this domain, one has \({\bar{\delta }}_{1}^{h}{\langle \gamma (\sigma )\rangle }^{{m^{\prime} }_{{\rm{th}}}-1}=U\), where \({\bar{\delta }}_{1}^{h}\) is the height of the hump of the first agent (i.e. the initial value), and \({m^{\prime} }_{{\rm{th}}}\) denotes the number of amplifications needed to reach U. As a consequence, \({m^{\prime} }_{{\rm{th}}}=\mathrm{ln}(U/{\bar{\delta }}_{1}^{h})/\mathrm{ln}\langle \gamma (\sigma )\rangle +1\). The value \(U/{\bar{\delta }}_{1}^{h}=200\) is the result of a fit, and one can further insert the dependence of \(\gamma (\sigma )\) on σ, so as to obtain a prediction for \({m^{\prime} }_{{\rm{th}}}\). \({m^{\prime} }_{{\rm{th}}}\) as a function of σ is reported as a red dashed curve in Fig. 3(c). One can easily see that the two curves for \({m^{\prime} }_{{\rm{th}}}\) and \({m}_{{\rm{th}}}\) match closely, indicating that the parameter γ is a proper indicator of the tendency of \({\bar{\delta }}_{i}^{h}\).
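The threshold estimate can be evaluated directly. The sketch below uses the fitted ratio of 200 quoted above; the function name is hypothetical, and returning infinity for γ ≤ 1 is an interpretive assumption (no amplification means the bound U is never reached).

```python
import math

def predicted_threshold(gamma, ratio=200.0):
    """Chain-length estimate ln(U / hump_1) / ln(gamma) + 1; infinite when gamma <= 1."""
    if gamma <= 1.0:
        return math.inf          # no amplification along the chain (assumed convention)
    return math.log(ratio) / math.log(gamma) + 1.0

m_th_estimate = predicted_threshold(2.0)   # about 8.64 for gamma = 2
```

For example, if each link doubled the hump height (γ = 2), the fluctuation would exceed the admissible domain after roughly eight to nine agents, whereas values of γ only slightly above 1 give very long controllable chains.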

Figure 5. γ (see text for definition) as a function of σ. The entire range of σ can be separated into three regions: I. Strongly controllable region, where \({\rm{\Phi }}(\sigma ) < 0\) and \(\gamma \lesssim 1\) (light gray); II. Conditionally controllable region, where \({\rm{\Phi }}(\sigma ) < 0\) and γ > 1 (dark gray); III. Uncontrollable region, where Φ(σ) > 0 (white).

As a conclusion of all the above reasoning, our results suggest that one can partition the whole range of σ into three distinct regions (reported in Fig. 5). Region I is characterized by \({\rm{\Phi }}(\sigma ) < 0\) and \(\gamma \lesssim 1\). In this region synchronization occurs regardless of the length of the chain, so that we can safely call it the Strongly controllable region (SCR). In Region II, \({\rm{\Phi }}(\sigma ) < 0\) but γ > 1. Here, though the necessary condition of the MSF is satisfied, synchronization is restricted to a finite chain length, so that the region is called the Conditionally controllable region. In Region III, Φ(σ) > 0. Here the necessary condition of a negative MSF is not satisfied. Therefore, synchronization is intrinsically unstable, and we call this region the Uncontrollable region.
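The partition can be written as a small decision rule. This is only a sketch: the tolerance `tol` used to express \(\gamma \lesssim 1\) and the treatment of the boundary Φ = 0 as uncontrollable are assumptions, as is the function name.

```python
def classify_region(msf_value, gamma, tol=0.05):
    """Map the pair (Phi(sigma), gamma(sigma)) to one of the three regions of the text."""
    if msf_value >= 0.0:
        return "III: uncontrollable"            # necessary MSF condition violated
    if gamma <= 1.0 + tol:
        return "I: strongly controllable"       # fluctuations are not amplified along the chain
    return "II: conditionally controllable"     # controllable only up to a finite chain length

region = classify_region(msf_value=-0.1, gamma=1.4)
```

The inputs are the measured values of the MSF and of γ at a given σ; both would come from separate computations and are not derived here.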

Finally, we briefly elaborate on the case where v ≠ 0. There, the network’s chains are continuously broken and regrouped, with such a process occurring more often at higher agents’ velocities. When a chain is broken, the fluctuation delivery process is interrupted and current fluctuations may be defused on the newly formed chain. Therefore, increasing the velocity v is equivalent to reducing \({l}_{{\rm{\max }}}\). For instance, at v = 10, the network can be controlled when σ < 3.6 (see Fig. 2), which indicates that chains longer than 5 (compare with the data and discussion on Fig. 3) cannot remain unchanged long enough (at this speed) to induce an effective divergence of the state variables. On the other hand, when v is small, long chains are more likely to remain unmodified, and control therefore fails.

Conclusion

In this paper, we have proposed an adaptive strategy for controlling networked mobile chaotic oscillators with local information. The key idea of this strategy is to allow each agent to select one of its neighbors and form a connection with it. The temporary structures generated by this strategy are sets of directed trees, and their Laplacian spectra always remain the same, with all eigenvalues of the transverse modes equal to 1. We have proved that such a time-varying structure has the same synchronization stability as a static network with the same Laplacian spectrum. Thus, the networks may be controlled without changing the coupling strength. We have further shown that the temporary structure generated by the proposed strategy is closely related to a unidirectional chain. By investigating this structure, we observe a specific region of coupling strength, called the strongly controllable region, within which the whole chain can be controlled regardless of its length. This feature is of value for our strategy, since in this region the whole network can be controlled for arbitrary moving speed of the oscillators.

Compared to the dominant adaptive control scheme for related mobile systems, where the coupling strength is adjusted continuously, our strategy may be regarded as a favorable simplification in which the coupling ratio only needs to be set to either 0 or 1 regardless of the states of the oscillators, which significantly reduces the implementation effort. We expect that our work may inspire more research on simple and efficient decentralized strategies for the control of networked mobile systems. Though the results in this work are presented for a simple model, the proposed scheme can be extended to more realistic situations, e.g., where there are noise, errors, or non-negligible delays in the communication between agents, or where systems have complicated spatial structures. Such extensions will be the subject of our future research.

Methods

The necessary condition for control

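The linearized equation of the system for deviations around the solution \({{\bf{x}}}^{S}\equiv {{\bf{x}}}^{1}={{\bf{x}}}^{2}=\cdots ={{\bf{x}}}^{N+1}\) can be written as

\[\delta \dot{{\bf{x}}}=\left[{{\bf{I}}}_{N+1}\otimes D{\bf{F}}({{\bf{x}}}^{S})-\sigma \,{\bf{G}}\otimes D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {\bf{x}}, \tag{4}\]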

where \(\delta \dot{{\bf{x}}}={(\delta {\dot{{\bf{x}}}}^{1},\cdots ,\delta {\dot{{\bf{x}}}}^{N+1})}^{{\rm{T}}}\), \({{\bf{I}}}_{N+1}\) is the identity matrix of order N + 1, and \(D{\bf{F}}\) and \(D{\bf{H}}\) are the Jacobians of F and H, respectively. This equation is in accordance with Eq. (1), and we here label the GA as agent 1 and the other N agents with indices from 2 to N + 1. At time t, the Laplacian G is decomposed as \({\bf{G}}={\sum }_{i=1}^{N+1}{\lambda }_{i}{{\bf{u}}}_{i}{{\bf{u}}}_{i}^{{\rm{T}}}\), where the spectrum \(\{{\lambda }_{i}\}\) is such that \({\lambda }_{1}=0\) and \({\lambda }_{2}=\cdots ={\lambda }_{N+1}=1\). The eigenvectors \(\{{{\bf{u}}}_{i}={({u}_{i1},\cdots ,{u}_{i,N+1})}^{{\rm{T}}}\}\) form an orthonormal basis, with \({{\bf{u}}}_{1}=\frac{1}{\sqrt{N+1}}{(1,\cdots ,1)}^{{\rm{T}}}\) corresponding to the synchronization manifold \({{\bf{x}}}^{S}\). Taking \({{\bf{I}}}_{N+1}={\sum }_{i=1}^{N+1}{{\bf{u}}}_{i}{{\bf{u}}}_{i}^{{\rm{T}}}\), one gets

\[\delta \dot{{\bf{x}}}=\sum _{i=1}^{N+1}{{\bf{u}}}_{i}{{\bf{u}}}_{i}^{{\rm{T}}}\otimes \left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma {\lambda }_{i}D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {\bf{x}}. \tag{5}\]

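Setting \(\delta {\bf{x}}(t)={\bf{Q}}\delta {\bf{y}}(t)\) with \({\bf{Q}}=({{\bf{u}}}_{1},\ldots ,{{\bf{u}}}_{N+1})\otimes {{\bf{I}}}_{m}\), where \({{\bf{I}}}_{m}\) is the identity matrix of order m (m being the number of degrees of freedom of the dynamics carried by each agent), Eq. (5) becomes

\[\delta \dot{{\bf{y}}}=\sum _{i=1}^{N+1}{{\bf{e}}}_{i}{{\bf{e}}}_{i}^{{\rm{T}}}\otimes \left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma {\lambda }_{i}D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {\bf{y}}, \tag{6}\]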

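where \({{\bf{e}}}_{i}\) is the unit vector whose i-th element is 1 and all other elements are 0. The matrix in Eq. (6) is block diagonal with m × m blocks. Writing \(\delta {\bf{y}}={(\delta {{\bf{y}}}^{1},\ldots ,\delta {{\bf{y}}}^{N+1})}^{{\rm{T}}}\) with \(\delta {{\bf{y}}}^{i}\in {{\mathbb{R}}}^{m}\), one has

\[\delta {\dot{{\bf{y}}}}^{i}=\left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma {\lambda }_{i}D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {{\bf{y}}}^{i},\quad i=1,\ldots ,N+1. \tag{7}\]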

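Since \(\delta {\bf{y}}={{\bf{Q}}}^{{\rm{T}}}\delta {\bf{x}}\) (and noting that \({\lambda }_{i}=1\) for i ≥ 2), Eq. (7) gives

\[\sum _{j=1}^{N+1}{u}_{ij}\,\delta {\dot{{\bf{x}}}}^{j}=\left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma D{\bf{H}}({{\bf{x}}}^{S})\right]\sum _{j=1}^{N+1}{u}_{ij}\,\delta {{\bf{x}}}^{j},\quad i\ge 2. \tag{8}\]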

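Since here the \(\{{u}_{ij}\}\) (i, j ≥ 2) can be chosen arbitrarily as long as they form an orthonormal basis of eigenvectors, the solution for \(\delta {{\bf{x}}}^{j}\) can only be

\[\delta {\dot{{\bf{x}}}}^{j}=\left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {{\bf{x}}}^{j}. \tag{9}\]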

Therefore, when the spectrum of G(t) satisfies \({\lambda }_{1}=0\) and \({\lambda }_{2}=\cdots ={\lambda }_{N+1}=1\), the deviations around \({{\bf{x}}}^{S}\) of all the agents (except for the GA) evolve uniformly following Eq. (9).

The above argument reveals how \(\delta {\bf{x}}\) evolves during a time window in which the structure is fixed. Now, let us discuss what happens, instead, when the structure switches from one topology to another due to the movement of the agents in the square plane. When this happens, say at time \(t^{\prime} \), then \(\delta {\bf{x}}(t^{\prime} )\) (the final value of the deviation under the previous structure of connections) becomes the initial condition for the evolution of the deviation under the new structure. It is important to remark that the eigenvectors of the new structure may be different from the old ones (though the eigenvalue spectra are the same). Specifically, we suppose that the new structure can be decomposed as \({\bf{G}}={\sum }_{i=1}^{N+1}{\lambda }_{i}{{\bf{v}}}_{i}{{\bf{v}}}_{i}^{{\rm{T}}}\) with eigenvectors \({{\bf{v}}}_{i}={({v}_{i1},\cdots ,{v}_{i,N+1})}^{{\rm{T}}}\). Similarly, setting \(\delta {\bf{x}}(t^{\prime} )={\bf{R}}\delta {\bf{z}}(t^{\prime} )\) with \({\bf{R}}=({{\bf{v}}}_{1},\cdots ,{{\bf{v}}}_{N+1})\otimes {{\bf{I}}}_{m}\), one obtains

\[\delta {\dot{{\bf{z}}}}^{i}=\left[D{\bf{F}}({{\bf{x}}}^{S})-\sigma {\lambda }_{i}D{\bf{H}}({{\bf{x}}}^{S})\right]\delta {{\bf{z}}}^{i}. \tag{10}\]

Owing to the difference between Q and R (i.e. the difference between the eigenvectors), \(\delta {{\bf{y}}}^{i}(t^{\prime} )\) and \(\delta {{\bf{z}}}^{i}(t^{\prime} )\) may differ. That is to say, the change of eigenvectors may change the deviations along the transverse eigenmodes when the structure switches, so that \(\delta {{\bf{y}}}^{i}(t^{\prime} )\) changes to \(\delta {{\bf{z}}}^{i}(t^{\prime} )\).

However, since both Eqs (7) and (10) lead to Eq. (9), and \(\delta {\bf{x}}(t^{\prime} )\) is preserved at the moment at which the structure switches, we conclude that Eq. (9) is valid at all times during the structure switching process. Therefore, since in our method the spectrum of the temporal Laplacians always satisfies this condition, we conclude that the criteria for stability of the solution where all agents are synchronized with the GA are equivalent to those for the same solution in a static network featuring the same eigenvalue spectrum.

References

Boccaletti, S., Kurths, J., Osipov, G., Valladares, D. L. & Zhou, C. S. The synchronization of chaotic systems. Phys. Rep. 366, 1 (2002).

Pikovsky, A., Rosenblum, M. & Kurths, J. Synchronization (Cambridge University Press, Cambridge, England) (2003).

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M. & Hwang, D.-U. Complex networks: structure and dynamics. Phys. Rep. 424, 175 (2006).

Dorogovtsev, S. N., Goltsev, A. V. & Mendes, J. F. F. Critical phenomena in complex networks. Rev. Mod. Phys. 80, 1275 (2008).

Zou, Y., Pereira, T., Small, M., Liu, Z. & Kurths, J. Basin of Attraction Determines Hysteresis in Explosive Synchronization. Phys. Rev. Lett. 112, 114102 (2014).

Zhang, X., Boccaletti, S., Guan, S. & Liu, Z. Explosive synchronization in adaptive and multilayer networks. Phys. Rev. Lett. 114, 038701 (2015).

Huang, D. Stabilizing Near-Nonhyperbolic Chaotic Systems with Applications. Phys. Rev. Lett. 93, 214101 (2004).

Huang, D. Simple adaptive-feedback controller for identical chaos synchronization. Phys. Rev. E 71, 037203 (2005).

Huang, D. Adaptive-feedback control algorithm. Phys. Rev. E 73, 066204 (2006).

Zhou, J., Lu, J.-A. & Lü, J. Pinning adaptive synchronization of a general complex dynamical network. Automatica 44, 996 (2008).

Wang, L., Dai, H. P., Dong, H., Cao, Y. Y. & Sun, Y. X. Adaptive synchronization of weighted complex dynamical networks through pinning. Euro. Phys. J. B 61, 335 (2008).

Schröder, M., Mannattil, M., Dutta, D., Chakraborty, S. & Timme, M. Transient Uncoupling Induces Synchronization. Phys. Rev. Lett. 115, 054101 (2015).

Yu, W., Lü, J., Yu, X. & Chen, G. Distributed Adaptive Control for Synchronization in Directed Complex Networks. SIAM J. Control Optim. 53, 2980 (2015).

Hövel, P. Control of Complex Nonlinear Systems with Delay (Springer, Berlin) (2010).

Flunkert, V., Yanchuk, S., Dahms, T. & Schöll, E. Synchronizing Distant Nodes: A Universal Classification of Networks. Phys. Rev. Lett. 105, 254101 (2010).

Nishikawa, T. & Motter, A. E. Network synchronization landscape reveals compensatory structures, quantization, and the positive effect of negative interactions. Proc. Natl. Acad. Sci. USA 107, 10342 (2010).

Wang, X. & Chen, G. Pinning control of scale-free dynamical networks. Physica A 310, 521 (2002).

Li, X., Wang, X. & Chen, G. Pinning a complex dynamical network to its equilibrium. IEEE Transactions on Circuits and Systems 51, 2074 (2004).

Chen, T., Liu, X. & Lu, W. Pinning Complex Networks by a Single Controller. Circuits and Systems I: Regular Papers, IEEE Transactions 54, 1317 (2007).

Porfiri, M., Stilwell, D. J. & Bollt, E. M. Synchronization in random weighted directed networks. IEEE Transactions on Circuits and Systems I 55, 3170 (2008).

Wang, L., Shi, H. & Sun, Y.-X. Induced synchronization of a mobile agent network by phase locking. Phys. Rev. E 82, 046222 (2010).

Kim, B., Do, Y. & Lai, Y.-C. Emergence and scaling of synchronization in moving-agent networks with restrictive interactions. Phys. Rev. E 88, 042818 (2013).

Belykh, I. V., Belykh, V. N. & Hasler, M. Blinking Model and Synchronization in Small-World Networks with a Time-Varying Coupling. Physica D 195, 188 (2004).

Belykh, I., Belykh, V. & Hasler, M. Synchronization in complex networks with blinking interactions. Physics and Control 86 (2005).

Skufca, J. D. & Bollt, E. M. Communication and Synchronization in Disconnected Networks with Dynamic Topology: Moving Neighborhood Networks. Math. Bio. and Eng. 1, 347 (2004).

Porfiri, M., Stilwell, D. J., Bollt, E. M. & Skufca, J. D. Random talk: Random walk and synchronizability in a moving neighborhood network. Physica D: Nonlinear Phenomena 224, 102 (2006).

Porfiri, M., Stilwell, D. J., Bollt, E. M. & Skufca, J. D. Stochastic synchronization over a moving neighborhood network. ACC 1413 (2007).

Peruani, F., Nicola, E. M. & Morelli, L. G. Mobility induces global synchronization of oscillators in periodic extended systems. New J. Phys. 12, 093029 (2010).

Frasca, M., Buscarino, A., Rizzo, A., Fortuna, L. & Boccaletti, S. Synchronization of Moving Chaotic Agents. Phys. Rev. Lett. 100, 044102 (2008).

Stilwell, D. J., Bollt, E. M. & Roberson, D. G. Sufficient Conditions for Fast Switching Synchronization in Time Varying Network Topologies. SIAM J. Dynamical Systems 5, 140 (2006).

Zhou, J., Zou, Y., Guan, S., Liu, Z. & Boccaletti, S. Synchronization in slowly switching networks of coupled oscillators. Sci. Rep. 6, 35979 (2016).

Fujiwara, N., Kurths, J. & Díaz-Guilera, A. Synchronization in networks of mobile oscillators. Phys. Rev. E 83, 025101 (2011).

Wang, L. & Sun, Y.-X. Pinning synchronization of a mobile agent network. J. Stat. Mech. P11005 (2009).

Dariani, R., Buscarino, A., Fortuna, L. & Frasca, M. Pinning Control in a System of Mobile Chaotic Oscillators. AIP Conference Proceedings 1389, 1023 (2011).

Frasca, M., Buscarino, A., Rizzo, A. & Fortuna, L. Spatial Pinning Control. Phys. Rev. Lett. 108, 204102 (2012).

Klinglmayr, J., Kirst, C., Bettstetter, C. & Timme, M. Guaranteeing global synchronization in networks with stochastic interactions. New J. Phys. 14, 073103 (2012).

Klinglmayr, J., Bettstetter, C., Timme, M. & Kirst, C. Convergence of Self-Organizing Pulse-Coupled Oscillator Synchronization in Dynamic Networks. IEEE Transactions on Automatic Control, https://doi.org/10.1109/TAC.2016.2593642 (2016).

Su, H., Chen, G., Wang, X. & Lin, Z. Adaptive second-order consensus of networked mobile agents with nonlinear dynamics. Automatica 47, 368 (2011).

Wang, X., Wang, X., Su, H. & Chen, G. Fully distributed event-triggered consensus of multi-agent systems with input saturation. IEEE Transactions on Industrial Electronics 64, 5055 (2017).

Li, Z., Duan, Z., Xie, L. & Liu, X. Distributed robust control of linear multi-agent systems with parameter uncertainties. International J. Control 85, 1039 (2012).

Li, Z., Ren, W., Liu, X. & Fu, M. Consensus of multi-agent systems with general linear and Lipschitz nonlinear dynamics using distributed adaptive protocols. IEEE Trans. Autom. Control 58, 1786 (2013).

Li, Z., Chen, M. Z. Q. & Ding, Z. Distributed Adaptive Controllers for Cooperative Output Regulation of Heterogeneous Agents over Directed Graphs. Automatica 68, 179 (2016).

Pecora, L. M. & Carroll, T. L. Master Stability Functions for Synchronized Coupled Systems. Phys. Rev. Lett. 80, 2109 (1998).

Acknowledgements

Work partially supported by the National Natural Scientific Foundation of China (Grant Nos. 11405059, 11575041, and 61503110), Natural Science Foundation of Shanghai (Grant No. 17ZR1444800) and Ministry of Education, Singapore, under contract MOE2016-T2-1-119.

Author information

Contributions

J.Z., Y.Z., S.G.G., Z.H.L., G.X.X. and S.B. conceived the methods and the research; J.Z. performed the numerical simulations; J.Z. and S.B. wrote the paper. All authors reviewed the Manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, J., Zou, Y., Guan, S. et al. Connection adaption for control of networked mobile chaotic agents. Sci Rep 7, 16069 (2017). https://doi.org/10.1038/s41598-017-16235-2

DOI: https://doi.org/10.1038/s41598-017-16235-2
