Abstract

The ability to discern the target of another person’s gaze is critical for social and linguistic development, but functions atypically in autism spectrum disorder (ASD). A multi-pronged approach allowed us to deconstruct this complex ability, to uncover the fundamental bases of this impairment. We analyzed performance on a novel gaze perception task with classical psychophysical metrics (precision and accuracy), principal component analysis (in the analysis of spatial biases), and Bayesian computational modeling (in the analysis of individual subjects’ use of contextual salience cues). Compared to controls, adults with ASD were less precise and less accurate in their judgments of gaze direction. Further, although nearly all controls exhibited a prototypical pattern of spatial bias in their judgments, this spatial prior was severely disrupted among a large subset of ASD participants. By contrast, Bayesian computational modeling revealed that both groups exploited contextual salience cues in their gaze judgments, and that the average strength of this contextual prior was similar for both groups. This comprehensive study revealed that although most ASD participants performed atypically in at least one aspect of gaze perception, the particular aspects disrupted varied idiosyncratically across individuals. Impairment in gaze perception in ASD likely arises via heterogeneous underlying mechanisms.

Similar content being viewed by others

Introduction

Impairment in the ability to discern the target of another person’s gaze is one of several documented social difficulties associated with autism spectrum disorder (ASD)1,2,3. Gaze is a critical social cue for dynamically tracking the attention of others, and additionally alerts one to potentially important objects or happenings in the shared environment4,5,6,7. Further, gaze perception facilitates linguistic development, as tracking the attention of the speaker allows for inference about the referent8. Thus, atypical development of this complex social ability9,10 can have pervasive and persistent effects on perception and cognition in the context of ASD.

Although perception of eye gaze is often considered as a singular ability, like many complex social abilities it can be broken down into several dissociable and more fundamental processes11,12. Disruption to any of these underlying mechanisms could therefore result in atypical gaze perception as it manifests in ASD. This study systematically deconstructs eye gaze perception into four more fundamental constructs (precision, accuracy, spatial bias, and integration with context), to ask what specific aspect (or aspects) of this ability lie at the root of of this impairment, and whether the answer to this question is consistent across affected individuals.

Eye gaze perception is partially modulated by relatively low-level perceptual processes13, and the human visual system typically extracts the direction of another person’s eye fixation with remarkable precision14. In ASD, disruption to early visual processing could lead to affected individuals simply extracting this social perceptual cue in a noisier manner—i.e. with less precision. Alternatively, a participant could be highly precise—i.e., reliably reproduce the same response to any respective stimulus—but inaccurate, with these responses being poorly calibrated with respect to correspondence with the underlying ground truth. Importantly, precision and accuracy can potentially be dissociated from one another, but this has only rarely been explicitly examined in the context of gaze perception15.

Even if eye fixation cues were perceptually extracted with typical fidelity in ASD, the signal would still be accompanied by some noise, creating conditions of partial uncertainty and ambiguity. In the face of these conditions, people rely on spatial biases (or priors) to help infer the mostly likely direction of gaze. Notably, people are biased to judge another’s gaze as being in the direction of one’s self16,17), and it has recently been posited that this prior is largely intact in high-functioning adults with ASD18.

Context also helps to resolve this uncertainty and ambiguity; for example, people take into account how salient the potential targets of gaze are—that is, how a priori likely various locations are to be looked at11. Broadly speaking, that individuals with ASD would weight cues atypically (in both social and non-social domains) has been the topic of much recent theoretical speculation19,20. With respect to salience in particular, at least one study has found that adults with ASD may be susceptible to letting salience cues override gaze cues when attempting to discern the referent of a novel word21.

This study comprehensively analyzes performance on a novel gaze perception task with two classical psychophysical metrics (precision and accuracy), principal component analysis (PCA; in the analysis of spatial biases), and Bayesian computational modeling (in the analysis of individual subjects’ use of contextual salience cues). This quantitatively rigorous approach—emblematic of the emergent interdisciplinary field of computational psychiatry22—allows us to not only uncover mean group differences (control vs. ASD) where they exist, but also to investigate whether disruption to gaze perception in ASD stems from a consistent group deficit, or if the fundamental mechanism of impairment idiosyncratically varies among individuals. Given the high degree of heterogeneity characteristic of ASD, we expect the latter, and this approach will further allow us to parse this heterogeneous performance.

Methods

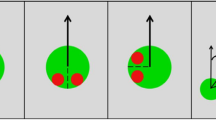

27 adults with ASD and 31 approximately age-, gender-, and IQ-matched controls (see Supplementary Table 1S for full descriptions of the samples) viewed photographs of a person gazing at various target locations on a partially transparent surface, situated between the “gazer” and the camera. Participants judged where on this continuous 2-dimensional surface the gazer was looking (Fig. 1). On most trials (Blocks 2–5), this surface was colored a uniform gray, offering no informative context for participants’ judgments. From these trials, we analyzed the precision, accuracy, and spatial biases exhibited by each participant’s judgments. During Block 1, this surface contained an arbitrary photographic image, allowing us to examine the extent to which individual participants used contextual salience cues to inform their judgments.

After the presentation of a fixation cross for 1000 ms, the scene appeared, with a mouse cursor appearing as a red square at a random location within the projected image (this image was a photograph in block 1, and uniform gray in blocks 2–5). After 2500 ms, the cursor turned green and the participant indicated with a mouse click where he or she thought the gazer was looking. (Note: The fixation crosses and red mouse cursors are enlarged in this figure to be more visible. The projected photograph originates from the LabelMe dataset42, made available to the research community without restrictions).

This experiment employed stimuli used in a previous study11. Unabridged methodological details appear in Supplemental Material. The study was performed in accordance with the tenets of the Declaration of Helsinki, and the experimental methods were approved by the Institutional Review Board of Indiana University. The data generated and analyzed during the current study are available from the corresponding author on reasonable request.

Participants

Participants were recruited from the Bloomington, Indiana area, and responded to flyers (posted around the community, on the university campus, or on Craigslist) or were referred by word of mouth. All participants gave written informed consent and were compensated at a rate of $15 per hour.

The ASD sample consisted of 27 adults (age 17–55, M = 25.1; 22 male) of average or above-average IQ (80–133, M = 116.0), all of whom had previously received clinical diagnoses of autism, Asperger’s Syndrome, or Pervasive Developmental Disability-Not Otherwise Specified (PDD-NOS). DSM-IV-TR diagnoses were confirmed using the Autism Diagnostic Observation Schedule-2 (ADOS-2) Module 4—administered by research reliable personnel23—and a review of background information and neuropsychological assessments (including IQ testing, Autism Spectrum Quotient [AQ], Beck Depression Inventory, State-Trait Anxiety Inventory, and self-reported clinical history), together with clinical judgment. The suggested ADOS cutoff score is 7; however, in light of empirical evidence that the ADOS Module 4 has very similar specificities at thresholds of 6 and 7 when administered to high-functioning adults24, we included participants with an ADOS score of 6 if all other available information supported an ASD diagnosis (n = 3).

The control sample consisted of 31 approximately age- (19–42, M = 25.7), gender- (25 male), and IQ- (99–153, M = 117.0) matched participants. One additional control was excluded from the final sample because of technical problems with the experiment; another was excluded after participation because he later reported a past diagnosis of schizophrenia.

Stimuli

Photographs were taken of a young man seated behind (and gazing upon) a glass surface, which was situated between him and the camera. One of these photographs was taken with the gazer fixating on the origin (i.e. straight ahead, and directly into the camera), and the other 32 photographs were taken with the gazer fixating on 32 respective locations arranged in a lattice of 7 rows and 9 columns (see green dots in Fig. 2). The gazer maintained minimal head and body movement across these 33 photographs.

The possible targets of gaze are represented here with green circles; the target of gaze corresponding to this specific photograph is shown with the filled-in green circle. Each stimulus was shown to each participant 20 times; the 20 judgments this individual participant made in response to this stimulus are shown with white circles. The distance of these responses to their mean (white lines connected to the magenta circle) enters into the calculation of the participant’s precision. The angle between the mean response to this stimulus (magenta line) and the ground truth target (green line) enters into the calculation of the participant’s accuracy.

All fixated locations fell within a rectangular frame superimposed on presented scenes. Either an image (Block 1) or uniform gray (Blocks 2–5) was presented within the frame in each scene, and alpha blended with the background photograph of the gazer. This created a perceptual effect akin to the participant and gazer being on opposite sides of a semitransparent surface, with the gazer’s silhouette faintly visible through it. Only a tight ellipse around the gazer’s eyes was fully visible through the surface, with the area around the eyes smoothly transitioning to greater opacity. Thus, in all conditions the gazer’s eyes were fully visible to the participant, and presented simultaneously with the supposed target of gaze.

The 165 color images used in Block 1 conveyed a variety of indoor and outdoor scenes, and were selected from a larger, publicly available set25.

Procedure

The experiment was programmed in MATLAB using the Psychophysics Toolbox26. It consisted of 5 blocks, each consisting of 165 trials. Over the course of each block, each of the 33 photographs of the gazer (fixating on the 33 respective locations) was featured 5 times, with these 165 total trials being randomly ordered. For Blocks 2–5, the frame in front of the gazer was filled with a uniform gray. For Block 1, one of 165 color images was randomly assigned to each of these 165 trials and projected into the frame; thus, the projected images and the actual target of the gaze were randomly paired. 2.5 s after the onset of the stimulus, the color of the cursor changed from red to green, indicating when the participant was permitted to respond. The participant clicked where he or she believed that the gazer was looking. The scene remained on the screen until the participant responded, or for 2.5 s more (whichever came first). The scene was then replaced with a Gaussian noise mask, and the participant pressed the spacebar to move on.

Analyses

Precision and Accuracy

Precision is defined as how consistently (or inversely, how noisily) a participant responds when presented with the same stimulus over repeated trials. Precision was calculated as the average (squared) error with respect to the overall mean of judgments made by the participant, divided by the average (squared) error with respect to the mean of the condition (i.e. the mean of all responses to a respective gaze pose; see Fig. 2). This yielded a single summary measure of precision for each participant. In Supplementary Figure 1S we also display average (and group differences in) precision with respect to every location in the gazed-upon space.

Accuracy is defined as deviation from a ground truth reference. On a trial-by-trial basis, precision will limit accuracy. But an individual can be accurate and imprecise, to the extent that the mean of his or her repeated responses to the same stimulus tends to converge toward ground truth.

In other words, calculating accuracy on a trial-to-trial basis does not satisfactorily decouple precision from accuracy. We therefore first averaged over the individual’s 20 responses to each single gaze pose (e.g., the magenta dot in Fig. 2), and then calculated the accuracy of this response compared to the ground truth target of gaze (e.g., the green dot in Fig. 2). To the extent that this average persistently deviated from ground truth, it reflected inaccuracy (or bias), and not random noise. We further note that whereas the precision of responses (as we have measured it) could also be potentially influenced by individual differences in visuomotor noise, this factor is likely to cancel out after averaging over repeated trials (i.e., as in our calculation of response accuracy).

We further observed that some participants’ responses were much more spread out than others, overall. This was likely related to how near the participant assumed the gazer was to the gazed-upon surface; this depth dimension was difficult to perceptually infer from the stimuli we used. The closer the participant assumed the gazer was to the surface, the more clustered the responses toward the center, and nearly all participants tended to produce judgments that were much more crowded toward the center of the space than the actual targets of gaze (see Fig. 3 and Supplementary Figure 2S). Further, this tendency appears to have been a trivial individual difference, rather than a meaningful measure of performance, and did not significantly vary by group (operationalized specifically with respect to the mean distance of the subject’s responses from the center of the space; t[56] = 0.86, p = 0.39). For these reasons, calculating accuracy with respect to discrepancy from ground truth in 2-D space was unlikely to be a useful performance metric, and we determined that angular accuracy—calculated as the cosine similarity between the response and the ground truth target of gaze (e.g., the cosine of θ in Fig. 2)—was a more meaningful measure of performance than spatial accuracy.

Spatial Bias

Although the ground truth targets of gaze were distributed approximately uniformly over the gazed-upon 2-D surface, participants’ judgments were not. Errors with respect to ground truth showed systematic, non-random biases. A bias toward the vertical center line was typically observed (see also15), perhaps resulting from the consistent cue of the gazer’s head being oriented straight ahead in each photo, or perhaps from some version of the “looking at you” effect17. A typical participant’s responses tended, overall, to conform to a symmetrical “butterfly” pattern (see Fig. 3 and Supplementary Fig. 2S).

We performed a principal component analysis (PCA) to quantify the extent to which individual participants conformed to similar spatial biases (The details of how input features were selected for the PCA and how participants’ responses were preprocessed have been excerpted to Supplementary Material). If individuals with ASD showed lower accuracy, this approach would further allow us to ask whether all of the individuals with ASD tended to be inaccurate in a similar way, or whether the patterns of individuals’ errors varied idiosyncratically. Using PCA, we placed each individual in a reduced dimensionality space (defined by the first 4 principal components, which explained 63.8% of the variance), in which the similarities and differences among the different participants’ spatial biases could be visualized and quantified.

As a metric of the idiosyncrasy of an individual participants’ biases (i.e. the extent that the participants’ response pattern was not like the others), we calculated the leverage of each participant in the reduced space. Leverage, by analogy from linear regression, is a measure of how much influence an individual point has on the configuration of the space, and was recently shown to be an effective means for labeling outliers in PCA27.

Use of Salience Cues

With minor adjustments, our analysis of participants’ use of contextual salience cues is adopted from an earlier study11, which used Bayesian computational modeling to determine the extent to which each individual participant took this information into account when making gaze judgments. For extensive details of this modeling approach, the reader is directed to Supplemental Material.

Salience was computed by each of two methods25,28, and served as a proxy for the participant’s reasonable expectation—implicit or explicit—of which locations in a scene would be more or less likely to draw the gazer’s visual attention. The visual salience map for each image was treated as the prior in our Bayesian model for participants’ gaze judgments. The extent to which each participant used the salience prior was estimated by fitting a parameter (δ), expressing how much the prior should be weighted to optimize the Bayesian model’s fit to his or her data.

Results

Precision and Accuracy

Control participants were significantly more precise (M = 5.53) than ASD participants (M = 3.66; t[56] = 3.16, p = 0.003). This effect was of similar magnitude toward the center (Cohen’s d = 0.90) and in the periphery (Cohen’s d = 0.77) of the gazed targets. Controls were also more accurate (\({M}_{control}=\mathrm{0.957,}{M}_{ASD}=0.914\); because of left skew in both groups’ distributions, we used a non-parametric permutation test to demonstrate a significant group difference; \(p=0.013\)).

Nearly all control participants and about half of the ASD participants performed in a cluster of high accuracy, with substantial individual differences in precision (see Fig. 4). Though precision and accuracy were correlated (r[56] = 0.378, p = 0.003), there were notable examples of participants for whom these two measures were not in agreement (e.g., participants #1, 9, 12, and 22).

Spatial Bias

Figure 3 displays the first two principal components resulting from PCA analysis of participants’ spatial tendencies. The first component (25.0% variance explained) expresses a tendency toward the typical spatial prototype; participants high on this dimension showed strong conformity to the “butterfly” pattern. The second component (19.5% variance explained) represents a tendency to cluster responses toward the horizontal axis (low) versus the vertical axis (high).

Almost all control participants and about half of ASD participants were clustered closely in the space, showing a tendency toward the butterfly pattern (Dim. 1), and not much additional bias toward the x-axis or y-axis (Dim. 2). Leverage (i.e. idiosyncrasy in spatial bias) was strongly correlated with accuracy (\(r\mathrm{[56]}=-\mathrm{0.737,}\,p < 0.001\)), and also showed a weaker (but significant) correlation with precision (\(r\mathrm{[56]}=-\mathrm{0.302,}\,p=0.021\)). Although half of ASD participants showed typical spatial biases, ASD participants were much more likely to exhibit idiosyncrasy than controls. The distribution of leverage had a heavy tail in the ASD group, and ASD participants on average tended to exhibit higher leverage than controls (\(p=0.005\) by permutation test). Figure 5 shows data from 4 example participants from the ASD sample, one of whom conformed strongly to the prototype (#5), and three of whom exhibited drastically different spatial patterns of gaze judgments.

Examples of response patterns produced by four ASD participants. Raw responses are represented with the smaller dots; the large magenta dots represent the mean responses of the participant to each of the 33 respective gaze stimuli. Participant #5 (top left) produced the prototypical “butterfly” pattern. The other three participants shown here produced patterns of responses that not only deviated strongly from the prototype, but from one another.

Use of Salience Cues

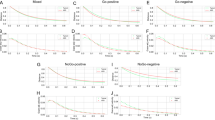

Both groups weighted contextual salience25 significantly above zero (\({M}_{control}=1.4\), \(\,t\mathrm{[28]}=6.60\), \(p < \mathrm{0.001;}\,{M}_{ASD}=\mathrm{1.2,}\,t\mathrm{[26]}=\mathrm{3.88,}\,p < .001\)). There was no significant group difference in the use of the salience cue (\(t\mathrm{[53]}=\mathrm{0.53,}\,p=0.596\)), though the individuals who weighted this cue the most and least tended to be from the ASD sample (Fig. 6). This pattern of results replicated when utilizing an alternative salience algorithm28.

Each of the participants (ASD participants in red, controls in blue) is represented as an increment in these empirical cumulative distribution functions, which illustrate the extent to which the best model fit to each participant’s data exploited a salience map as a prior. Group means are shown with dotted lines.

Participants’ use of salience was not correlated with any of the other measures (accuracy, precision, spatial bias; all p > 0.25).

Heterogeneity of impairments in ASD

Figure 7 summarizes the performance of every individual in the sample, along each of the four metrics. A few ASD participants showed no discernible impairment on any aspect of the task (e.g. #5 and 27). Likewise, very few were impaired across the board (e.g. #7). Most ASD participants performed atypically in one or more aspects of the task, but not in all of them (e.g. #3, 18, and 19). After ranking all participants across both samples along each of these dimensions, 77.8% of ASD individuals were in the bottom quartile on at least one of the measured dimensions, compared to 29.0% of controls. 33.0% of ASD individuals were in the bottom 5% of the sample on at least one of the measured dimensions; this was only true of one of the controls (#40).

Each of the 27 ASD participants (left) and 31 controls (right) are profiled in this summary figure. Four circles arranged in a row represent respective performance on the four metrics (precision, accuracy, spatial bias [i.e. leverage], and use of contextual salience). The 58 participants in the sample were rank ordered, and their performance percentile along each metric is represented with a circle colored on a gradient from red (with thick outlines, meaning impaired or atypical performance) to yellow (with thin outlines, meaning typical or unimpaired performance). Note: 3 participants were excluded from the salience analysis (see Supplementary Material for rationale).

An important aspect of understanding this variability in performance is to examine whether variation along respective metrics reflects meaningful individual differences that relate to other stable traits in individuals with ASD. As an example of this approach, and as an exploratory analysis (especially given the limited sample size), we correlated Autism Spectrum Quotient (AQ) with our four performance metrics. Among our participants with ASD, AQ was not correlated with any of these performance measures (all p > 0.12).

Discussion

The ability to infer the target of another’s gaze—and the nature of its impairment in autism spectrum disorder (ASD)—has been the focus of a great deal of recent scientific attention1,13,18,19,29 and deservedly so: eye gaze perception is fundamental to dynamic social interaction and communication, and critically supports linguistic development. However, as we demonstrate in this paper, gaze perception is not an irreducible social ability, but rather can be unpacked into a variety of more fundamental components. Using an experimental method that relied on the analysis of hundreds of trials from each individual subject, viewed through the lenses of many different performance metrics, we established that performance among control participants tended to cluster within a relatively narrow range. Controls were precise, accurate, exhibited similar spatial biases, and exploited contextual salience cues in the service of their gaze judgments. By contrast, many or most ASD participants performed atypically in one or more aspect of the eye gaze perception task.

However, which particular aspect was disrupted was not necessarily consistent throughout the sample. In other words, idiosyncratic aspects of gaze perception seem to underlie impairments in gaze perception in ASD. Further, a subset of the ASD sample exhibited no discernible impairment at all. We observed nothing systematic that explains this; for example, unlike in an earlier study1, we did not find our female participants with ASD—#4, 8, 11, 16, 24—to be specifically spared.

Our approach contrasted with most previous investigations of gaze perception in ASD in several ways. First, our methodology enabled us to successfully dissociate accuracy from precision. In doing so, we discovered that for a subset of participants, impairment on this task was well characterized as arising from noisy (i.e. imprecise) processing of the eye gaze cue. For an overlapping subset of participants, even after averaging over 20 noisy responses to each repeated stimulus, the judgment of gaze never converged toward accuracy with respect to ground truth. In other words, the mapping of the eye gaze stimulus to the perceived direction of gaze was biased, or miscalibrated, in these individuals.

Second, our stimuli were sampled exhaustively from the space of possible gaze directions. This allowed us to uncover fascinating and highly idiosyncratic spatial patterns in the data that would not have been easily discernible in data derived from tasks with less thorough spatial sampling, or those that use adaptive procedures that are typically geared toward the efficient estimation of discrimination thresholds along a given perceptual parameter (e.g.1).

Our study was motivated in part to test theories that biases or priors might be attenuated in ASD. As in a previous study18, we observed a tendency for individuals from both groups to judge the lateral direction of gaze as being in the direction of the camera. This tendency was captured by Dimension 2 of our PCA solution, which did not systematically differ between the ASD and control samples. That said, spatial biases were plainly idiosyncratic for a subset of the ASD sample (Figs 3 and 5). This same previous study18 speculated that individuals with ASD might exhibit biases that develop differently compared to controls; indeed, we provide evidence here that for certain adults with ASD, these spatial priors may diverge quite drastically from the prototype. Whether this bias occurs at the perceptual or decisional level was not resolvable with these data, though it has been previously demonstrated that eye movements themselves tend to differ in this population during gaze discrimination tasks30.

A related theoretical debate surrounds the hypothesis that in ASD, the processing of incoming sensory information is less noisy, leading to relatively less reliance on priors31. In the analysis of the precision of participants’ gaze judgments, we found contrary evidence. On average, participants with ASD made judgments that reflected diminished precision, suggesting noisier extraction of the social cue. However, it is also possible that group differences in visuomotor precision when indicating a response with the mouse could have contributed to this increased response noise. Unfortunately, we do not have the necessary data to test between these possibilities directly, but this is something that could easily be included in future studies (e.g., with probe trials where participants are simply asked to click a specified location on the screen).

Via computational modeling, we also estimated the extent to which each individual participant used contextual salience information present in the visual scene to infer the direction of gaze. Though salience was a critical cue for the task, there was no overall group difference in its use. There was, however, somewhat greater variability in the ASD sample in the extent to which individuals weighed this cue. Further research—perhaps with a still larger ASD sample—might be necessary to uncover whether a true subset of the ASD group consistently weighs this cue more (or less) than is typical. For an even more sensitive analysis of the nature of this cue integration, it would also be quite useful to derive subject-specific salience maps for each individual. Evidence suggests that individuals with ASD might interpret the relative salience of locations in the environment somewhat differently32,33, and therefore could have subtly different expectations (implicitly or explicitly) about where other people are likely to look, indirectly influencing the resultant inference of gaze direction. Alternately, it is possible that certain individuals with ASD interpret salience typically, but weight this salience context more or less than is typical.

Such an approach could better discern the extent to which these individuals find different locations in a scene salient, and to what extent they weigh this contextual cue differently in the service of gaze perception. More broadly, follow-up experimentation like this can help to answer a deeper question: When one finds performance to be variable among individuals with ASD with respect to dissociable aspects of a social task, to what extent is this variation meaningful? In the example of our gaze perception study, does how the individual performs with respect to one of our four constructs meaningfully correlate with analogous aspects of other social and non-social tasks? After all, there are many social and non-social tasks that rely on the accurate and precise processing of a cue, its context, and the integration thereof, in a manner that reveals prior biases. And do relationships exist with other traits or behavioral phenotypes? We argue that uncovering these correlations will allow for great strides in parsing the heterogeneity characteristic of this disorder.

In other words, whether any of the results observed in this study is specific to gaze perception, or reverses in the context of other social and non-social domains, is an empirical question that should be addressed in future experiments. Especially in light of this limitation, we also note that these results do not provide evidence for a causal role of gaze perception in social difficulties of individuals with ASD. Rather than gaze perception difficulties causing impairment, they could also arise as a consequence of reduced and atypical social or other perceptual experiences. Resolving cause and effect would likely require younger participants and longitudinal experimental designs.

That performance in a social task would be heterogeneous in ASD is consistent with previous findings with this population at the behavioral, neural, and genetic levels34,35,36,37,38. As we have demonstrated, even when one limits analysis to one specific social task—gaze perception—there are many potential paths to the impairment that manifests in ASD. We analyzed several dissociable aspects of gaze perception, but in naturalistic contexts there remain even other social cues—like head and body position16,39,40 or facial expression41–that demonstrably inform the judgment of where another person is looking. This study did not directly investigate the processing of these secondary social cues (we instead isolated eye direction as the primary social cue, while intentionally keeping all other constant), but each additional cue that comes to bear on gaze perception presents yet another possible route to impairment.

In sum, we have deconstructed the task of gaze perception into a number of underlying component processes, and have shown that these can be heterogeneously affected across different individuals sharing a diagnosis of ASD. Disruption to any of these processes can account for atypical gaze processing. Overlaid onto this within-task heterogeneity is the fact that social impairment in ASD can result from disruption to any number of social abilities, including those distinct from gaze perception. Those individuals with ASD who showed no discernible impairment to any aspect of our gaze discrimination task no doubt have difficulty with some other social faculty—hence, their diagnosis. The diversity of underlying abilities that contribute to social behavior—any of which may be disrupted in an individual—creates another layer of possible routes to the global social impairment evident in these individuals’ everyday lives. The extent to which individuals with ASD may be “subtyped” in the service of more precise scientific description or clinical intervention will likely depend on the success of research aimed at deconstructing complex social faculties into corresponding components, and connecting the dots12.

References

d’Arc, B. F. et al. Gaze direction detection in autism spectrum disorder. Autism 1–8 (2016).

Leekam, S., Hunnisett, E. & Moore, C. Targets and cues: Gaze-following in children with autism. Journal of Child Psychology and Psychiatry 39, 951–962 (1998).

Wallace, S., Coleman, M., Pascalis, O. & Bailey, A. A study of impaired judgments of eye-gaze direction and related face-processing deficits in autism spectrum disorders. Perception 35, 1651–1664 (2006).

Borji, A., Parks, D. & Itti, L. Complementary effects of gaze direction and early saliency in guiding fixations during free viewing. Journal of Vision 14, 1–32 (2014).

Frieschen, A., Bayliss, A. & Tipper, S. P. Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychological Bulletin 133, 694–724 (2007).

Kylliäianen, A. & Hietanan, J. K. Attention orienting by another’s gaze direction in children with autism. Journal of Child Psychology and Psychiatry 45, 435–444 (2004).

Mackworth, N. H. & Morandi, A. J. The gaze selects informative details within pictures. Perception & Psychophysics 2, 547–552 (1967).

D’Entremont, B., Yazbeck, A., Morgan, A. & MacAulay, S. Early gaze-following and the understanding of others. In Flomm, R., Lee, K. & Muir, D. (eds.) Gaze-following: Its development and significance, 77–94 (Lawrence Erlbaum Associates, Mahwah, NJ, 2007).

Freeth, M., Chapman, P., Ropar, D. & Mitchell, P. Do gaze cues in complex scenes capture and direct the attention of high functioning adolescents with asd? evidence from eye-tracking. Journal of Autism and Developmental Disorders 40, 534–547 (2010).

Senju, A., Tojo, Y., Dairoku, H. & Hasegawa, T. Reflexive orienting in response to eye gaze and an arrow in children with and without autism. Journal of Child Psychology and Psychiatry 45, 445–458 (2004).

Pantelis, P. & Kennedy, D. P. Prior expectations about where other people are likely to direct their attention systematically influence human gaze perception. Journal of Vision 16, 1–12 (2016).

Schaafsma, S. M., Pfaff, D. W., Spunt, R. P. & Adolphs, R. Deconstructing and reconstructing theory of mind. Trends in Cognitive Sciences 19, 65–72 (2015).

Pellicano, E., Rhodes, G. & Calder, A. J. Reduced gaze aftereffects are related to difficulties categorising gaze driection in children with autism. Neuropsychologia 51, 1504–1509 (2013).

Cline, M. G. The perception of where a person is looking. The American Journal of Psychology 80, 41–50 (1967).

Bock, S. W., Dicke, P. & Thier, P. How precise is gaze following in humans? Vision Research 48, 946–957 (2008).

Masame, K. Perception of where a person is looking: Overestimation and underestimation of gaze direction. Tohoku Psychologica Folia 49, 33–41 (1990).

Mareschal, I. & Calder, A. J. & Clifford, C. W. G. Humans have an expectation that gaze is directed toward them. Current Biology 23, 717–721 (2013).

Pell, P. J. et al. Intact priors for gaze direction in adults with high-functioning autism spectrum conditions. Molecular Autism 7, 1–10 (2016).

Friston, K. J., Lawson, R. & Frith, C. D. On hyperpriors and hypopriors: comment on pellicano and burr. Trends in Cognitive Sciences 17, 1 (2013).

Pellicano, E. & Burr, D. When the world becomes ‘too real’: a Bayesian explanation of autistic perception. Trends in Cognitive Sciences 16, 504–510 (2012).

Aldaqre, I., Paulus, M. & Sodian, B. Referential gaze and word learning in adults with autism. Autism 19, 944–955 (2015).

Sevgi, M., Diaconescu, A. O., Tittgemeyer, M. & Schilbach, L. Social bayes: Using Bayesian modeling to study autistic trait-related differences in social cognition. Biological Psychiatry (2016).

Lord, C. et al. The autism diagnostic observation schedule—generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders 30, 205–223 (2000).

Bastiaansen, J. A. et al. Diagnosing autism spectrum disorders in adults: the use of autism diagnostic observation schedule (ados) module 4. Journal of Autism and Developmental Disorders 41 (2011).

Judd, T., Ehinger, K., Durand, F. & Torralba, A. Learning to predict where humans look. In IEEE International Conference on Computer Vision (ICCV) (2009).

Brainard, D. H. The psychophysics toolbox. Spatial Vision 10, 443–446 (1997).

Mejia, A., Nebel, M. B., Eloyan, A., Caffo, B. & Lindquist, M. A. PCA leverage: outlier detection for high-dimensional functional magnetic resonance imaging data. Biostatistics 18, 521–536 (2017).

Zhang, J. & Sclaroff, S. Saliency detection: A boolean map approach. Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2013).

Ashwin, C., Hietanen, J. K. & Baron-Cohen, S. Atypical integration of social cues for orienting to gaze direction in adults with autism. Molecular Autism 6 (2015).

Riby, D. M. & Doherty, M. J. Tracking eye movements proves informative for the study of gaze direction detection in autism. Research in Autism Spectrum Disorders 3, 723–733 (2009).

Brock, J. Alternative Bayesian accounts of autistic perception: comment on Pellicano and Burr. Trends in Cognitive Science 16, 573–574 (2012).

Amso, D., Haas, S., Tenenbaum, E., Markant, J. & Sheinkopf, S. J. Bottom-up attention orienting in young children with autism. Journal of Autism and Developmental Disorders 44, 664–673 (2014).

Wang, S. et al. Atypical visual saliency in autism spectrum disorder quantified through model-based eye tracking. Neuron 88, 604–616 (2015).

Beaz, S. et al. Integrating intention and context: assessing social cognition in adults with Asperger syndrome. Frontiers in Human Neuroscience 6, 1–21 (2012).

Betancur, C. Etiological heterogeneity in autism spectrum disorders: More than 100 genetic and genomic disorders and still counting. Brain Research 1380, 42–77 (2011).

Byrge, L., Dubois, J., Tyszka, J. M., Adolphs, R. & Kennedy, D. P. Idiosyncratic brain activation patterns are associated with poor social comprehension in autism. Journal of Neuroscience 35, 5837–5850 (2015).

Hahamy, A., Behrmann, M. & Malach, R. The idiosyncratic brain: distortion of spontaneous connectivity patterns in autism spectrum disorder. Nature Neuroscience 18, 302–309 (2015).

Lombardo, M. V. et al. Unsupervised data-driven stratification of mentalizing heterogenity in autism. Scientific Reports 6 (2016).

Langton, S. R. H. The mutual influence of gaze and head orientation in the analysis of social attention direction. The Quarterly Journal of Experimental Psychology 53, 825–845 (2000).

Moors, P., Germeys, F., Pomianowska, I. & Verfaillie, K. Perceiving where another person is looking: the integration of head and body information in estimating another person’s gaze. Frontiers in Psychology 6, 1–12 (2015).

Lobmaier, J. S., Tiddeman, B. P. & Perrett, D. I. Emotional expression modulates perceived gaze direction. Emotion 8, 573–577 (2008).

Russell, B., Torralba, A., Murphy, K. & Freeman, W. T. LabelMe: a database and web-based tool for image annotation. International Journal of Computer Vision 77, 157–173 (2008).

Acknowledgements

This research was funded in part by the National Institute of Mental Health (R00-MH094409 and R01-MH110630) and a NARSAD Young Investigator Award from the Brain & Behavior Research Foundation. Thank you to Susannah Burkholder and Brad Caron for help recruiting and running participants, and to Sebastian Kagemann for assistance in creating the stimuli.

Author information

Authors and Affiliations

Contributions

P.C.P. and D.P.K. conceived and designed the experiments and analyses, P.C.P. conducted the experiments and analyses, and P.C.P. and D.P.K. wrote the manuscript.

Corresponding authors

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pantelis, P.C., Kennedy, D.P. Deconstructing atypical eye gaze perception in autism spectrum disorder. Sci Rep 7, 14990 (2017). https://doi.org/10.1038/s41598-017-14919-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-14919-3

This article is cited by

-

Application of Eye Tracker to Detect Visual Processing of Children with Autism Spectrum Disorder

Current Developmental Disorders Reports (2022)

-

Social attention to activities in children and adults with autism spectrum disorder: effects of context and age

Molecular Autism (2020)

-

Contraction of distance and duration production in autism spectrum disorder

Scientific Reports (2019)

-

Early behavioral indices of inherited liability to autism

Pediatric Research (2019)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.