Abstract

Link prediction in complex networks aims at predicting the missing links from available datasets which are always incomplete and subject to interfering noises. To obtain high prediction accuracy one should try to complete the missing information and at the same time eliminate the interfering noise from the datasets. Given that the global topological information of the networks can be exploited by the adjacent matrix, the missing information can be completed by generalizing the observed structure according to some consistency rule, and the noise can be eliminated by some proper decomposition techniques. Recently, two related works have been done that focused on each of the individual aspect and obtained satisfactory performances. Motivated by their complementary nature, here we proposed a new link prediction method that combines them together. Moreover, by extracting the symmetric part of the adjacent matrix, we also generalized the original perturbation method and extended our new method to weighted directed networks. Experimental studies on real networks from disparate fields indicate that the prediction accuracy of our method was considerably improved compared with either of the individual method as well as some other typical local indices.

Similar content being viewed by others

Introduction

Link prediction aims at revealing missing or potential relations between data entries from large volumes of data sets which are subject to dynamical changes and uncertainty. With the rapid development of studies on complex networks, the problem of link prediction has received extensive attention from researchers in various fields including physics, mathematics, computer science, social science and so on1,2. On the one hand, the investigation of complex networks may provide some novel insights on real-world linking patterns which is helpful to link prediction. On the other hand, by trying to predict the missing or potential links with a high accuracy, link prediction may also help to provide us with a deeper understanding of the organization of real world networks, which is a longstanding challenge in many branches of science3. From a practical point of view, link prediction is also a fundamental issue for many modern world applications in disparate fields such as recommending friends in online social networks, recommending products in e-commerce web sites4,5,6, and uncovering missing parts of social and biological networks7,8,9.

In link prediction, the central issue is how to predict the missing links effectively and accurately. Of the two aspects the accuracy problem is more fundamental from a theoretical point of view when computational cost is not a major concern. In literature this is termed as the “predictability problem”, i.e., to what extent can the missing or potential links be predicted, which to our knowledge was first proposed and investigated in10. Although the exact predictability can never be obtained due to the complexity and uncertainty intrinsic to real world networks, the practical predictability depends on the extent to which the available information can be exploited and the noise be eliminated. In principle, information is represented by something of regularity or consistency in the network structure when the network is dynamically changing due to, say, some evolution or perturbation processes. On the other hand, noise is represented by something irregular or inconsistent which is always unavoidable in real data sets. From this point of view, to improve link predictability, one should first find some proper description of the information and noise in the available data sets and then design some effective method accordingly.

In the context of complex networks which are usually described as graphs, the global topological information always lies in their adjacency matrices, in which nonzero entries denote links between corresponding nodes, while zero ones denote missing or nonexistent links. The adjacent matrix provides fundamental information for link prediction. And many existing link prediction algorithms are actually based on some kind of manipulations of the adjacent matrices or some of their variants. For example, the CN index11 of a node pair is the inner product of their corresponding rows of the adjacent matrix, and the RA index11 of some weighted adjacent matrix whose column sum is assigned as 1. These are local indices that have explicit physical meanings, while the Katz index12 uses the global information obtained from some series of the adjacent matrices. Motivated by these observations, new link prediction methods based on different kinds of manipulations of the adjacent matrices have been developed. Since the network structure can be well reflected by the eigenvectors of its adjacent matrix13, it is natural to make use of them in link prediction. Following the idea that the consistency in network structure can be represented by the eigenvectors of its adjacent matrices, the authors10 proposed a structural perturbation method (SPM) in which a new matrix was constructed for prediction by perturbing the eigenvalues of the adjacent matrix while fixing the eigenvectors. On the other hand, since the real data of complex networks all are always subjected to interfering noise, it is necessary to eliminate the noise to uncover the unobserved links. In14, by introducing the robust PCA technique, the authors developed a novel global information-based link prediction algorithm which decomposes the adjacent matrix into a low rank backbone structure and a sparse noise matrix.

Although the two aforementioned works have achieved considerable improvements compared with many existing methods, they still have some limitations. An important issue is that each of them only focuses on one of the two complementary aspects to accurate link prediction: information completion and noise reduction. Motivated by these observations, here we propose a novel link prediction method that combines these two complementary methods. That is, we first exploit the information from the adjacent matrix by some perturbation on its eigenvalues, then by the decomposition technique from robust PCA, we remove the sparse noise from the resulting matrix to reveal the backbone matrix for final prediction. Furthermore, for weighted directed networks which may have asymmetric adjacent matrices, we extract its symmetric part by introducing some new decomposition technique so that the original SPM is still applicable. Thus, our new method can be extended to weighted directed networks. Experimental studies indicate that this new method achieves considerable improvement when compared to each of the individual method in most of the networks.

Results

Consider a weighted directed network G(V, E, A), where V = {v 1, …, v n }, \(E\subseteq V\times V\) are the set of nodes and links, respectively, and \(A={[{a}_{ij}]}_{i,j=1}^{n}\) is the weighted directed adjacent matrix such that a ij > 0 if node (v j , v i ) \(\in \) E and a ij = 0 otherwise. If the network is undirected and unweighted, then A is a real symmetric matrix, i.e., a ij = a ji for each i, j = 1, 2, …, n. Otherwise, A may be an asymmetric matrix that has complex eigenvalues and is not diagonalizable. In such case the original SPM is not applicable. To test the accuracy of our new prediction algorithm, we randomly divide the link set E into a training set E T and a probe set E P. Here E T is treated as known information or observed information, while E P is considered as the set of missing links. Obviously, \({E}^{T}\cap {E}^{P}=\varnothing \) and \({E}^{T}\cup {E}^{P}=E\). Our purpose is to try to predict the links in E P based on the information in E T.

In the experiment, we first apply the perturbation procedure to A T, which is the adjacent matrix of G(V, E T). To implement the perturbation, we randomly select a fraction of links from E T to constitute the perturbation set ΔE T, whose adjacent matrix ΔA T acts as the perturbation to A T − ΔA T. As in10, in each prediction the final perturbed matrix \({\tilde{A}}_{T}\) is obtained by averaging over 10 independent selections of ΔE T. Then we apply the robust PCA technique to \({\tilde{A}}_{T}\) to obtain the backbone structure \({\tilde{A}}_{B}\). In this procedure, the parameter λ for each network is chosen as the optimal value in the simulations. Particularly, based on some preliminary simulations, we found that is almost all cases, the optimal values of λ always fall in the interval (0, 0.4). Thus in the experiment, we simulate different values of λ from 0.01 to 0.39 at a step 0.01 and select the value that has the best performance. In the final prediction, we use the matrix \({\tilde{A}}_{B}\) as the score matrix, whose entries corresponding to the unconnected nodes are explained as their connection likelihood.

To measure the prediction accuracy of the algorithm, we use two standard metrics: precision 15 and AUC 16 which are defined as follows.

Given the ranking of the non-observed links according to their scores in descending order, if L r of the top-L links, which are taken as the predicted ones, appear in the probe set, then precision = L r /L. In the experiment, we take L as the number of links in the probe set.

Given the ranking of the non-observed links, AUC is the probability that a randomly chosen missing link has a higher score than a randomly chosen nonexistent link. In the algorithmic implementation, instead of computing its exact value, AUC is usually approximated by the comparison of scores between node pairs randomly chosen from the set of missing links and of nonexistent links. If among n times of independent comparisons, there are n′ times the scores of the missing links are higher than those of the non-observed links, and n″ times they are the same, then

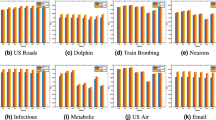

In the experiment, we test our algorithm on both undirected and directed networks from disparate fields. And we compared the results of our method with the original individual methods SPM and Low Rank (LR), as well as some other typical local indices including Common Neighbors(CN)11, Adamic-Adar (AA)17 and Resource Allocation(RA)11. The prediction results in precision and AUC are respectively presented in Tables 1 and 2 for undirected networks and in Tables 3 and 4 for directed networks, where for each network the result is obtained by averaging over 100 independent runs. Since the original SPM only applies to symmetric matrices, here the results for directed networks are obtained by the generalized one described in stage 1 of our method.

From the experimental results, it can be seen that compared to each of the original individual methods, the new method achieves considerable improvements both in precision and in AUC. It gives the best results in most of the networks, especially in the directed ones. Even in the case it is not the best, it is still quite near the best in most cases. In some sparse networks such as CollegeMsg, the precision given by our method is more than two times of those given by others. It is also remarkable to note that in some cases, even when one or both of the two individual methods performs very poor, our method still works very well. This implies that the new method has not only a very competitive but also a very robust performance, which in our opinion is at least partly due to the complementary nature of SPM and LR and justifies the significance of their combination.

Discussion

In this work, by generalizing and combining two previous link prediction methods which are of complementary nature, we propose a new algorithm for link prediction via perturbation and decomposition of the adjacent matrices of the networks. By exploiting the useful information and eliminating the interfering noise simultaneously, the new method takes advantage of both previous methods and robustly achieves considerable improvements on most of real world networks from disparate fields in experimental studies.

Beyond its competitive performances, the significance of this work, as well as that of those previous ones which it depends upon, is that they opened up a new direction for link prediction by directly manipulating the adjacent matrices as a whole. Compared to the classical methods such as similarity indices, link prediction algorithm in this new direction can no doubt take advantage of the rich tools and results available in matrix theory. And it is predictable that many new works in this direction will be done in the near future.

Since the new method is a combination of two existing methods, its computational complexity is roughly the summation of theirs. At stage 1, the time-consuming part is the computation of the eigenvalues and eigenvectors of the adjacent matrix whose complexity is O(n 3)18. At stage 2, it is the singular value decomposition (SVD) of the perturbed matrix whose complexity is O(kn 2)14 where k is the estimated rank of the matrix. Thus in summary, the computational complexity of the proposed algorithm is O(n 3).

Despite these advantages, the new method also faces some difficulties, among which the major one is how to determine the parameter λ. As many other parameterized methods, the parameter plays an important role in the performance of the algorithms, yet there is no explicit rule to determine its optimal value in advance. In the experiment we can choose for each network an optimal value based on the empirical simulations, yet this is not realistic for real-world applications. In that case, as in some learning algorithms, we can only obtain an estimated value of λ based on the training data. That is, we can divide the existent links into training set and probe set as we do in the experiment. Then we can obtain the “optimal” value of λ based on the simulations. Although this value generally is not the true optimal of λ, it should be at least an acceptable approximation, especially when the network is large enough. Moreover, to be safer, when it is possible, we can repeat this process many times and then determine an optimal value of λ based on the distribution of the outputs.

Methods

Given a weighted directed network G(V, E, A), where V, E, and A are defined as before. In the following, we consider undirected networks as special cases of directed networks and will present the method in terms of directed networks in general.

In summary, our method consists of two stages: the perturbation stage and the decomposition one, which are described as follows.

Stage 1: Structural perturbation

This stage can be divided into the following three steps.

Step 1: Preprocessing. Given the weight matrix A of a directed network, to apply the structural perturbation method to it, we first decompose it into the following two parts:

where A S = (A + A Τ)/2 is the symmetric part of A, while A AS = (A − A Τ)/2 is the antisymmetric part of A, with A Τ being the transpose of A. Intuitively, we explain the entries of A S as the average linking tendency between corresponding nodes, while the entries of A AS as the bias in the distribution of this tendency to the two links in opposite directions.

Step 2: Structural perturbation. Apply the structural perturbation method to A S as described in10, which for integrity will be briefly presented as follows. Since A S is a symmetric matrix, it can be written as

where λ k , x k , k = 1, 2, …, n are the eigenvalues and corresponding eigenvectors of A S, respectively.

After some perturbation ΔA S to A S, then the eigenvalue of A S + ΔA S will change to λ k + Δλ k and its corresponding eigenvector to x k + Δx k . Thus we have

Left-multiplying the eigenfunction by \({x}_{k}^{{\rm{T}}}\), and neglecting second-order terms \({\rm{\Delta }}{\lambda }_{k}{x}_{k}^{{\rm{T}}}{\rm{\Delta }}{x}_{k}\) and \({x}_{k}^{{\rm{T}}}{\rm{\Delta }}{A}^{S}{\rm{\Delta }}{x}_{k}\), we can obtain

Fixing the eigenvectors and using the perturbed eigenvalues, we can obtain the perturbed matrix,

Step 3: Postprocessing. Add the antisymmetric part of A to \({\tilde{A}}^{S}\) to get the final perturbed matrix:

Here we fix the antisymmetric part of A based on the idea that the difference between the linking tendency of opposite directions are not changed obviously during the perturbation process.

Stage 2: Noise reduction

In this stage we will remove the supposed noise from the perturbation matrix \(\tilde{A}\) and recover the backbone structure for prediction. For this purpose we introduce the robust principal component analysis (robust PCA) as in14. For the sake of integrity we briefly present it in the following. If the network is highly regularly organized, then its backbone structure should have some low rank property, and the noise should be sparse. Thus we should decompose the matrix \(\tilde{A}\) into two parts: a low rank part \({\tilde{A}}_{B}\) as the backbone structure and a sparse part \({\tilde{A}}_{N}\) as the noise. Mathematically, this can be transformed into the following optimization problem:

where rank(·) denotes the rank of a matrix, \({\parallel \cdot \parallel }_{0}\) is the l 0-norm of a matrix, and γ is the parameter that balances these two expressions. Since this is a highly nonconvex optimization problem that is hard to solve, we use some approximate solution based on robust PCA19, which is the solution of the following optimization problem:

where \({\parallel \cdot \parallel }_{* }\) is the nuclear norm of a matrix, \({\parallel \cdot \parallel }_{1}\) is the l 1-norm, and λ is the parameter that balances the two expressions.

At last, we predict some missing or potential links based on the approximated backbone structure matrix \({\tilde{A}}_{B}\) as in14. That is, we take the entries in \({\tilde{A}}_{B}\) corresponding to the unobserved links as their similarity scores and sort them in a descending order. Then we select the top L links as our prediction result, where L is determined by some other rules.

Data

For experimental studies, we have collected 36 real-world networks from disparate fields, including 19 undirected networks and 17 directed ones. These networks were carefully selected to cover a wide range of properties, including different sizes, average degrees, clustering coefficients, and heterogeneity indices. The basic topological features of the networks are summarized in Tables 5 and 6, respectively. A brief description of these networks are as follows:

Undirected network

-

Karate20: The network of relationship among the members in the karate club.

-

Football21: The network of American football games consisting of Division IA colleges during the regular season, Fall in 2000.

-

Dolphin22: network of bottlenose dolphins living in Doubtful Sound (New Zealand).

-

Everglades23: A network of foodweb in Everglades Graminoids during wet season.

-

WorldTrade24: the network of miscellaneous manufactures of metal among 80 countries in 1994.

-

Macaca25: cortical networks of the macaque monkey.

-

FWM26: the food web in Mangrove Estuary during the wet season.

-

BUP27: A network of political blogs.

-

WorldAdj28: An adjacency network of common adjectives and nouns in the novel David Copperfield by Charles Dickens.

-

FWF29: the network of predator-prey interactions in Florida Bay in the dry season.

-

Jazz30: jazz musician network, the link denotes the relationship between two persons if they used to play together in the same band at least once.

-

Contact31: a contact network between people measured by carried wireless devices.

-

C. elegans32: A neural network of the nematode worm C. elegans compiled by D. Watts and S. Strogatz.

-

USAir23: A network of US air transportation system, which contains 332 airports and 2126 airlines.

-

INF27: A network of face-to-face contacts in an exhibition.

-

Metabolic32: the metabolic network of the nematode worm C. elegans.

-

Email33: the network of email interchanges between the members of the University of Rovira I Virgili.

-

PB34: the network of hyperlinks between weblogs on US politics.

-

Yeast35: the network of protein-protein interaction.

Directed network

-

Bison36: This directed network contains dominance between American bisons in 1972 on the National Bison Range in Moiese (Montana).

-

Cattle37: This directed network contains dominance behaviours observed between dairy cattles at the Iberia Livestock Experiment Station in Jenerette, Louisiana.

-

Football38: the network of American football games consisting of Division IA colleges during the regular season, Fall in 2000;

-

Gramdry39: the network of predator-prey interactions in Everglades Graminoids in the dry season.

-

Gramwet39: the network of predator-prey interactions in Everglades Graminoids in the wet season.

-

Cypdry39: the network of predator-prey interactions in Cypress in the dry season.

-

Cypwet39: the network of predator-prey interactions in Cypress in the wet season.

-

Mangdry39: the network of predator-prey interactions in Mangrove Estuary in the dry season.

-

Mangwet39: the network of predator-prey interactions in Mangrove Estuary in the wet season.

-

Polbooks40: A network of books about US politics published around the time of the 2004 presidential election and sold by the online bookseller Amazon.com.

-

Baydry39: the network of predator-prey interactions in Florida Bay in the dry season.

-

Baywet39: the network of predator-prey interactions in Florida Bay in the wet season.

-

C. elegans32: A neural network of the nematode worm C. elegans compiled by D. Watts and S. Strogatz;

-

USAir23: A network of US air transportation system, which contains 332 airports and 2126 airlines;

-

Email-Eu41: the network was generated using email data from a large European research institution.

-

PB34: A directed network of hyperlinks between weblogs on US politics, recorded in 2005 by Adamic and Glance.

-

CollegeMsg42: This dataset is comprised of private messages sent on an online social network at the University of California, Irvine.

Benchmarks

For comparison, we introduce three benchmarks similarity indices based on structural information, including Common Neighbors(CN), Adamic-Adar Index(AA), and Resource Allocation Index(RA).

-

Common Neighbors(CN). It supposes that two nodes are more likely to be connected if they have more common neighbors, so the number of their common neighbors can be regarded as a measurement of their similarity. Let Γ(x) denote the set of neighbors of x, |Q| denote the cardinality of the set Q, then CN is defined as

$${S}_{xy}^{CN}=| {\rm{\Gamma }}(x)\cap {\rm{\Gamma }}(y)| .$$(10) -

Adamic-Adar(AA). It can be seen as a refined CN index by assigning different weights to different nodes in the set of common neighbors. The larger degree of the common neighbor, the less weight it can contribute, then AA can be calculated by

$${S}_{xy}^{AA}=\sum _{z\in {\rm{\Gamma }}(x)\cap {\rm{\Gamma }}(y)}\,\frac{1}{{\rm{log}}(| {\rm{\Gamma }}(z)| )}{\rm{.}}$$(11) -

Resource Allocation(RA). It is similar to AA, but motivated by the resource allocation process on complex networks. It models the transmission of resources between two unconnected nodes through neighborhood nodes, then RA can be written as

References

Albert, R. & Barabási, A.-L. Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47–97 (2002).

Newman, M. Networks: An Introduction (Oxford University Press, 2010).

Barabási, A.-L. Scale-free networks: a decade and beyond. Science 325, 412–413 (2009).

Schifanella, R., Barrat, A., Cattuto, C., Markines, B. & Menczer, F. Folks in folksonomies: social link prediction from shared metadata. In Proceedings of the third ACM international conference on Web search and data mining, 271–280 (ACM, 2010).

Aiello, L. M. et al. Friendship prediction and homophily in social media. ACM Trans. Web 6, 9 (2012).

Lü, L. et al. Recommender systems. Phys. Rep. 519, 1–49 (2012).

Mamitsuka, H. Mining from protein–protein interactions. Wiley Interdiscip. Rev.-Data Mining Knowl. Discov. 2, 400–410 (2012).

Cannistraci, C. V., Alanis-Lobato, G. & Ravasi, T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Sci. Rep. 3, 1613 (2013).

Barzel, B. & Barabási, A.-L. Network link prediction by global silencing of indirect correlations. Nat. Biotechnol. 31, 720–725 (2013).

Lü, L., Pan, L., Zhou, T., Zhang, Y.-C. & Stanley, H. E. Toward link predictability of complex networks. Proc. Natl. Acad. Sci. USA 112, 2325–2330l (2015).

Zhou, T., Lü, L. & Zhang, Y.-C. Predicting missing links via local information. Eur. Phys. J. B 71, 623–630 (2009).

Lü, L. & Zhou, T. Link prediction in complex networks: a survey. Physica A 390, 1150–1170 (2011).

Godsil, C. & Royle, G. Algebraic Graph Theory (Springer, New York, 2001).

Pech, R., Hao, D., Pan, L., Cheng, H. & Zhou, T. Link prediction via matrix completion. EPL 117, 38002 (2017).

Herlocker, J. L., Konstan, J. A., Terveen, L. G. & Riedl, J. T. Evaluating collaborative filtering recommender systems. ACM Trans. Inf. Syst. 22, 5–53 (2004).

Hanley, J. A. & McNeil, B. J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143, 29–36 (1982).

Adamic, L. A. & Adar, E. Friends and neighbors on the web. Social Networks 25, 211–230 (2003).

Horn, R. & Johnson, C. Topics in matrix analysis (Cambridge University Press, 1991).

Candès, E. J., Li, X., Ma, Y. & Wright, J. Robust principal component analysis? J. ACM 58, (11 (2011).

Zachary, W. W. An information flow model for conflict and fission in small groups. Journal of Anthropological Research 33, 452–473 (1977).

Newman, M. E. Assortative mixing in networks. Physical Review Letters 89, 208701 (2002).

Cohen, J. E., Schittler, D. N., Raffaelli, D. G. & Reuman, D. C. Food webs are more than the sum of their tritrophic parts. Proc. Natl. Acad. Sci. USA 106, 22335–22340 (2009).

Batagelj, V. & Mrvar, A. Pajek datasets. http://vlado.fmf.uni-lj.si/pub/networks/data/mix/USAir97.net (2006).

De Nooy, W., Mrvar, A. & Batagelj, V. Exploratory social network analysis with Pajek (structural analysis in the social sciences) 71, 605–606 (Cambridge University Press, 2011).

Costa, L. F., Kaiser, M. & Hilgetag, C. C. Predicting the connectivity of primate cortical networks from topological and spatial node properties. BMC Systems Biology 1, 16 (2007).

Baird, D., Luczkovich, J. & Christian, R. R. Assessment of spatial and temporal variability in ecosystem attributes of the st marks national wildlife refuge, apalachee bay, florida. Estuar. Coast. Shelf Sci. 47, 329–349 (1998).

Martnez, V., Berzal, F. & Cubero, J.-C. Adaptive degree penalization for link prediction. Journal of Computational Science 13, 1–9 (2016).

Newman, M. E. Finding community structure in networks using the eigenvectors of matrices. Physical Review E 74, 036104 (2006).

Ulanowicz, R. E. & DeAngelis, D. L. Network analysis of trophic dynamics in south florida ecosystems, FY 97: The Florida Bay Ecosystem. Tech. Rep. CBL 98–123 (1998).

Gleiser, P. M. & Danon, L. Community structure in jazz. Advances in Complex Systems 6, 565–573 (2003).

Kunegis, J. KONECT: the Koblenz network collection. http://konect.uni-koblenz.de/ (2013).

White, J. G., Southgate, E., Thomson, J. N. & Brenner, S. The structure of the nervous system of the nematode caenorhabditis elegans: the mind of a worm. Philos. Trans. R. Soc. Lond. Ser. B-Biol. Sci. 314, 1–340 (1986).

Guimera, R., Danon, L., Diaz-Guilera, A., Giralt, F. & Arenas, A. Self-similar community structure in a network of human interactions. Physical Review E 68, 065103 (2003).

Adamic, L. A. & Glance, N. The political blogosphere and the2004 us election: divided they blog. In Proceedings of the 3rd International Workshop on Link Discovery 36–43, Chicago, Illinois, ACM (Aug, 2005).

Von Mering, C. et al. Comparative assessment of large-scale data sets of protein–protein interactions. Nature 417, 399–403 (2002).

Lott, D. F. Dominance relations and breeding rate in mature male american bison. Ethology 49, 418–432 (1979).

Schein, M. W. & Fohrman, M. H. Social dominance relationships in a herd of dairy cattle. Brit. J. Anim. Behav. 3, 45–55 (1955).

Girvan, M. & Newman, M. E. Community structure in social and biological networks. Proc. Natl. Acad. Sci. USA 99, 7821–7826 (2002).

Melián, C. J. & Bascompte, J. Food web cohesion. Ecology 85, 352–358 (2004).

Newman, M. E. Modularity and community structure in networks. Proc. Natl. Acad. Sci. USA 103, 8577–8582 (2006).

Hall, M. et al. The WEKA data mining software: an update. ACM SIGKDD Explor. Newsl. 11, 10–18 (2009).

Panzarasa, P., Opsahl, T. & Carley, K. M. Patterns and dynamics of users’ behavior and interaction: network analysis of an online community. J. Assoc. Inf. Sci. Tech. 60, 911–932 (2009).

Watts, D. J. & Strogatz, S. H. Collective dynamics of’small-world’ networks. Nature 393, 440–442 (1998).

Acknowledgements

This work was supported by the National Basic Research Program (973 Program) of China(No. 2013CB329402) and the National Natural Science Foundation of China (Nos 61573015, 61472306).

Author information

Authors and Affiliations

Contributions

B.L. designed the research, X.X. performed the research, X.X. and B.L. analyzed the data, X.X., B.L., J.W. and L.J. wrote the paper.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, X., Liu, B., Wu, J. et al. Link prediction in complex networks via matrix perturbation and decomposition. Sci Rep 7, 14724 (2017). https://doi.org/10.1038/s41598-017-14847-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-14847-2

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.