Abstract

Far-from-equilibrium thermodynamics underpins the emergence of life, but how has been a long-outstanding puzzle. Best candidate theories based on the maximum entropy production principle could not be unequivocally proven, in part due to complicated physics, unintuitive stochastic thermodynamics, and the existence of alternative theories such as the minimum entropy production principle. Here, we use a simple, analytically solvable, one-dimensional bistable chemical system to demonstrate the validity of the maximum entropy production principle. To generalize to multistable stochastic system, we use the stochastic least-action principle to derive the entropy production and its role in the stability of nonequilibrium steady states. This shows that in a multistable system, all else being equal, the steady state with the highest entropy production is favored, with a number of implications for the evolution of biological, physical, and geological systems.

Similar content being viewed by others

Introduction

The second law of thermodynamics is often misused to explain that life’s order, e.g. that of DNA, proteins, and cells, cannot emerge by chance (or at least is infinitesimally unlikely)1. In an isolated system, e.g. the whole universe, the entropy (‘disorder’) has to either stay constant or increase. However, in open systems, characterized by fluxes of energy and matter, order can arise as long as the entropy of the surrounding system increases enough so that the total entropy from the two parts of the system together increases2. Note also that the second law does not make any predictions about how fast a system approaches equilibrium, except at the stationary nonequilibrium steady state. Under this circumstance, the Carnot efficiency limits the rate at which entropy is produced by the heat flux into the system3,4. This qualitative nature of the second law makes the prediction of dynamical systems based on thermodynamics notoriously difficult.

For the last 150 years, there has been speculation that universal extremal principles determine what happens in nature5,6,7, most prominent being the maximum entropy production principle (MaxEPP) by Paltridge, Ziegler and others8,9 (see also10,11 for reviews). Its most important conclusion is that there is life on Earth, or the biosphere as a whole, because ordered living structures help dissipate the energy from the sun on our planet more quickly as heat than just absorption of light by rocks and water12,13,14. However, such principles have never rigorously been proven, and conflicting results exist. MaxEPP apparently explains Rayleigh-Bénard convection, flow regimes in plasma physics, the laminar-turbulent flow transition in pipes, crystallization of ice, certain planetary climates, and ecosystems10,15,16,17. For instance, in plasma physics large-scale dissipative structures can increase the impedance and thus sustain high temperature gradients while producing large amounts of entropy at a smaller scale3.

Confusingly, MaxEPP seems to contradict the minimum entropy production principle (MinEPP) as promoted by Onsager, Prigogine and others7,18. MinEPP applies close to equilibrium as an extension of Rayleigh’s principle, and predicts the current distribution in parallel circuits (Kirchhoff’s law) and the viscous flow in Newtonian fluids19. To make things worse, both MinEPP and MaxEPP can apply simultaneously20, or one of the two can be selected depending on the boundary conditions, i.e. whether fluxes (e.g. currents) or forces (e.g. temperature gradients) are constrained3,4,21 (this issue is revisited in the Discussion section). These wide-ranging results, along with the broad range of applications from biochemistry, fluid mechanics, ecosystems, and whole planets, leave the question of extremal principles wide open22.

What about more general theoretical approaches? A promising direction for proving the MaxEPP is based on information-theoretic approaches related to the maximum-entropy inference method23,24, but previous attempts relied on overly strong assumptions (reviewed in25,26,27). One extremal principle is undisputed “the least-action principle (for conservative systems), which can be used to derive most physical theories, including Newton’s laws, Maxwell’s equations, and quantum mechanics28,29. Recently, the stochastic least-action principle was also established for dissipative systems30,31,32. Information theory and the stochastic least-action principle are important corner stones of modern stochastic thermodynamics32.

Here, with an interest in the emergence of protocells and life, we focus on stochastic biochemical systems and ask whether MaxEPP provides a mechanism for selecting states in a multistable system. We demonstrate that previous attempts to disprove MaxEPP suffered from misinterpretations of unintuitive aspects of stochastic systems, and that with modern approaches in stochastic thermodynamics, MaxEPP can be proven. Initially, we focus on the single chemical species, one-dimensional (1D) bistable Schlögl model, but then generalize to stochastic multistable systems. In particular, similar to33, we distinguish between two types of MaxEPPs: the ‘state selection’ principle, addressing which steady state is selected in a multistable system, and the ‘gradient response’ principle, focusing on the response of the average entropy production of a stochastic system to changes in a parameter. We find that if multiple steady states exist, then the first MaxEPP predicts that the steady state with the highest entropy production is the most likely to occur. Furthermore, we demonstrate that MinEPP simply corresponds to the second MaxEPP near equilibrium. These findings should clarify the role of thermodynamics in selecting nonequilibrium states in biological, chemical, and physical systems.

A short primer on entropy production

Imagine a system undergoing state changes due to external driving as shown in Fig. 1a. If this is done reversibly, the total change in entropy is \({\rm{\Delta }}S=\int dQ/T\), where T is the temperature and Q is the added heat. Hence, \(\int dQ/T\) is called the entropy flux (\({\rm{\Delta }}{S}_{e}\)) from the environment into the system. If done irreversibly, \({\rm{\Delta }}S\ge \int dQ/T\). The positive difference is the entropy production \({\rm{\Delta }}{S}_{i}\) 34

which after division by \({\rm{\Delta }}t\) becomes in the infinitesimal limit

where \(d{S}_{i}/dt\ge 0\) is the non-negative entropy production rate and dS e /dt is the entropy flow rate, which can be positive or negative. The entropy flow rate \(d{S}_{e}/dt={T}^{-1}(dQ/dt+{\sum }_{r}d{n}_{r}/dt\,{\rm{\Delta }}{\mu }_{r})\) can have contributions from heat flow (\(dQ/dt\)) at temperature T (not part of Schlögl model) and material flow (due to current \(d{n}_{r}/dt\) and and chemical potential difference \({\rm{\Delta }}{\mu }_{r}\) for reaction r). However, at a nonequilibrium steady state, the time-averaged rate of entropy change is zero, i.e. \(\overline{dS/dt}=0\), and the entropy production rate

is positive in line with the second law of thermodynamics. Put differently, the time-averaged entropy production rate is the negative of the entropy flow rate. Since entropy flow is often easier to calculate, it can be used in place of the entropy production at steady state. At thermodynamic equilibrium, both quantities are zero. Now, we need a microscopic model to investigate this further.

Illustration of a driven system with sources of entropy production and flow. (a) Picture of a driven system (similar to Schlögl model) where fluxes of molecular species A and B drive the concentration of species X out of equilibrium. A system is called closed if only energy is exchanged with the surroundings, and open if also matter is exchanged. (For completeness, in an isolated system, there is no exchange at all with the surroundings. In a special case of the latter, everything is included, i.e. the subsystem and its surroundings.) Entropy flow rate dS e /d t across boundary of reaction volume Ω can have contributions from heat and material flow. Entropy production rate dS i /d t is due to processes inside the volume. At steady state, both time-averaged contributions are equal in magnitude but of opposite sign (see text for details). (b) Illustration of the dynamics of a bistable system with a ‘low’ and a ‘high’ molecule-concentration steady state (left). The high state produces high amounts of entropy as indicated by heat radiation, warm glow, directedness and oscillatory fluxes (projected onto the concentration axis), while the low state is cold and close to equilibrium with little entropy production. The hypothesis of MaxEPP is that the state with high entropy production also is the more likely to occur (right).

Consider a system characterized by a molecular species with molecule number given by X (this can easily be generalized to higher dimensions or more chemical species), where reaction events produce random jumps in molecule number \(X\to X+{\rm{\Delta }}{X}_{r}\) with transition rate \({W}_{r}(X|X+{\rm{\Delta }}{X}_{r})\) (using notation from35). The backward (time-reversed) reaction −r can also occur, producing random jumps \(X+{\rm{\Delta }}{X}_{r}\to X\) with transition rate \({W}_{-r}(X+{\rm{\Delta }}{X}_{r}|X)\). How do we obtain the probability distribution \(P(X,t)\) based on these transition rates? The master equation describing the exact time evolution of \(P(X,t)\) is given by35,36,37

with flux

where the first (second) term on the right-hand side corresponds to an increase (decrease) in probability P(X, t) by jumps towards (away from) the state with X molecules. Generally, for nonequilibrium systems detailed balance \({W}_{r}(X-{\rm{\Delta }}{X}_{r}|X)P(X-{\rm{\Delta }}{X}_{r})={W}_{-r}(X|X+{\rm{\Delta }}{X}_{r})P(X)\) is not fulfilled. Hence, apart from a transient, P(X, t) will relax in time towards a nonequilibrium steady state (from now on simply called ‘state’) with time-independent P(X). For a nonequilibrium system, the Gibbs-Boltzmann entropy of statistical mechanics for probabilities P(X, t) is given by35,36

in units of Boltzmann’s constant \({k}_{B}\) and P(X, 0) the initial condition (prior). The first term on the right-hand side of Eq. (6) describes the average entropy \({S}^{0}(X)\) of a fixed number of molecules due to internal degrees of freedom, while the second term describes the average entropy due to probability distribution P(X, t) itself. When differentiating with respect to time, we obtain for entropy flow and entropy production rates

respectively. The passive contribution in Eq. (7a) is due to advection of molecules of a certain complexity (entropy), while the active contribution is due to changes in number X of molecules. The nonnegativity of \(d{S}_{i}/dt\) is due to inequality \(({R}_{+}-{R}_{-})\,\mathrm{ln}\,({R}_{+}/{R}_{-})\ge 0\) with R + and R − generic forward and backward reaction rates, and demonstrates the second law of thermodynamics (see Supplementary Information for further explanations). Note, that the active part of Eq. (7a) can also be written more compactly37

which again can be used to conveniently calculate the rate of entropy production at steady state upon change of the overall sign. Furthermore, Eq. (7b) represents a lower bound of the entropy production, assuming infinitely fast mixing38,39.

Another way to look at stochastic systems and entropy production is through trajectories Γ, defined by temporally ordered numbers of molecules \({\rm{\Gamma }}(t)={X}_{0}\to {X}_{1}\to {X}_{2}\to \ldots \to {X}_{n}\) for times \(0 < {t}_{1} < {t}_{2} < \ldots < {t}_{n} < t\). The backward trajectory is then given by \(-{\rm{\Gamma }}(t)={X}_{n}\to \ldots \to {X}_{2}\to {X}_{1}\to {X}_{0}\). According to the Evans-Searles fluctuation theorem32, the entropy change along the trajectory Γ is given by the log-ratio of their individual constituent probabilities

where we neglected \(\mathrm{ln}\,\frac{P({X}_{0})}{P({X}_{n})}\) with prior \(P({X}_{0})\) on the right-hand side, valid for long trajectories. This final expression is sometimes called the medium entropy32 or action functional35,37 but in our terminology corresponds to the entropy flow. Equation (9) reflects our intuition that the more the entropy increases during the forward process (along Γ), the less likely is the backward process (−Γ), reflecting the breaking of time-reversal symmetry. (However, this also shows that small stochastic systems can violate the second law of thermodynamics!) At steady state, we again obtain the entropy production, and the ensemble averaged \(\langle {\rm{\Delta }}{S}_{{\rm{\Gamma }}}(t)\rangle \), averaged over trajectories \({\rm{\Gamma }}({t})\) of duration t, is given by the time-integrated entropy production rate35

valid in the long-time limit, and where we defined \(\langle {\rm{\Delta }}{S}_{{\rm{\Gamma }}}(t)\rangle ={\sum }_{{\rm{\Gamma }}}{P}_{{\rm{\Gamma }}}{S}_{{\rm{\Gamma }}}(t)\). In the following, we use both the molecule number and trajectory-based pictures.

Minimal nonequilibrium bistable model

To understand the validity of the MaxEPP, why not investigate it with a simple exactly solvable model? This was indeed attempted using the well-known chemical Schlögl model (of the second kind)40,41. The results were used as an argument against MaxEPP, but, as we highlight later, there are issues with this argument due to Keizer’s paradox. This paradox highlights the fact that microscopic (master equation) and macroscopic mean-field descriptions can yield very different results42,43,44. Here, we describe in more detail the Schögl model, followed by an explanation of Keizer’s paradox in the subsequent section.

The Schlögl model only depends on one chemical species X with interesting features such as bistability (two different stable steady states), first-order phase transition (energy-assisted jumps between states), and front propagation in spatially extended systems40,45. Biochemically, the model converts species A to B and vice versa via intermediate species X

with rate constants as shown (note we use same capital letter symbols for species names and molecule numbers.) The model recently attracted renewed interest due to its mapping onto biologically relevant models with bistability, e.g.45,46,47, with the caveat that Michaelis-Menten enzyme kinetics need to be replaced by mass-action kinetics45 or appropriately Taylor expanded (see Supplementary Information for an example and48 for other minimal biological models based on mass-action kinetics.) Nevertheless, the Schlögl model (with spatial dependence and diffusion) is believed to describe front propagation in CO oxidation on Pt single crystals surfaces, and the nonlinear generation and recombination processes in semiconductors49.

In terms of the master equation, the transition rates are35,40,45

where the molecule numbers of species A and B are fixed. Such a chemical system can be simulated by the Gillespie algorithm, which is a dynamic Monte Carlo method and reproduces the exact probability distribution from the master equation upon long enough simulations (sampling) (Fig. 2a,b)50.

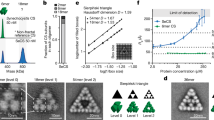

Appearance of Keizer’s paradox in Schlögl model. (a) Gillespie simulations of Schlögl model for b = 4 and Ω = 10. (b) Histogram of concentration levels for simulation from (a). (c) Bifurcation diagram (steady states) from ODE model (black). Average concentration from master equation for Ω = 10 (red solid line) and Ω = 100 (red dashed line). Quantity \({b}_{c}\approx 3.65\) represents the critical value (green arrow). (d) Corresponding entropy production rates. Equilibrium \(d{S}_{i}/dt=0\) occurs for \({b}_{0}=\mathrm{1/6}\) (blue arrow). (e) Curvature (second spatial derivative) of effective potential from ODE model evaluated at steady state. Vertical grey dashed lines for guiding the eye. Parameters: \({k}_{+1}a=\mathrm{0.5,}\,{k}_{-1}=3\), and \({k}_{+2}={k}_{-2}=1\).

For large (but finite) volumes Ω, an analytical formula for the probability distribution can be derived, i.e. \(p(x)=N(x)\exp [-{\rm{\Omega }}{\rm{\Phi }}(x)]\), with lengthy expressions for normalization N(x) and nonequilibrium potential \({\rm{\Phi }}(x)\) (see Supplementary Information for details on the large-Ω limit of the master equation51; Supplementary Fig. S1 compares this potential with other potentials used later.) Note, while the prefactor N(x) has a weak x-dependence, the main x-dependence comes from the potential in the exponential, which dominates for large Ω (\(p(x)=\exp [-{\rm{\Omega }}{\rm{\Phi }}(x)+\,\mathrm{ln}\,N(x)]\approx N({x}^{\ast })\,\exp [-{\rm{\Omega }}{\rm{\Phi }}(x)]\), where \({x}^{\ast }\) is a steady-state value, which minimizes \({\rm{\Phi }}(x)\); see51). While the expression for \({\rm{\Phi }}(x)\) is lengthy, its spatial derivative

is simple (and will be used later), with \({w}_{\pm r}={W}_{\pm r}/{\rm{\Omega }}\) for r = 1, 2.

However, for easier analytical calculations, there are a number of possible simplifying assumptions to the master equations. In the macroscopic (infinite volume) limit, the dynamics of the concentration

(written in small letter symbols) is described by the deterministic ordinary differential equation (ODE)

Since \(dx/dt\) resembles the velocity of an overdamped particle at position x, the right-hand side of Eq. (15) is written in terms of the gradient of an effective potential \({{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(x)=d{{\rm{\Phi }}}_{{\rm{ODE}}}(x)/dx\), where \({{\rm{\Phi }}^{\prime} }_{{\rm{O}}DE}(x)\) does not have units of energy over length but concentration over time. Parameters \(a=A/{\rm{\Omega }}\) and \(b=B/{\rm{\Omega }}\) describe the concentrations of the externally fixed reservoir species, used for driving the system out of equilibrium. Equation (15) can be used to produce the steady-state (\(dx/dt=0\)) bifurcation diagram, e.g. as a function of b (Fig. 2c, black line). This demonstrates bistability in a regime of intermediate concentrations of b (two stable steady states and one intermediate unstable state). However, the deterministic model does not predict the weights of the steady states, i.e. the probabilities of the steady states, which requires solving the corresponding stochastic master equation.

To quantify the entropy production rate for maintaining the steady state, the limit \({\rm{\Omega }}\to \infty \) of Eq. (7b) can be taken, leading to35

with \(d{s}_{i}/dt={{\rm{\Omega }}}^{-1}d{S}_{i}/dt\) and units of k B (where we omitted the asterix from Eq. (3) for indicating steady state). In Eq. (16), the sum is over reaction types, and the factor (\({w}_{+r}-{w}_{-r}\)) and the log term represent the flux and the chemical-potential (or Gibbs free-energy) difference (divided by temperature T) of each reaction, respectively (see Supplementary Information for a derivation). This entropy production is illustrated in Fig. 1b, which shows both a low molecule-number state dissipating little, as well as a high-molecule number state dissipating a lot. At equilibrium for \({b}_{0}=1/6\) all subreactions fulfill detailed balance, i.e. \({w}_{+r\mathrm{,0}}={w}_{-r\mathrm{,0}}\) with subscript 0 indicating equilibrium rates, and the entropy production rate is zero. As a consequence, near equilibrium MinEPP is valid: expanding \({w}_{\pm r}={w}_{\pm r\mathrm{,0}}+\delta {w}_{\pm r}\), using \(\delta {\rm{\Delta }}{w}_{r}=\delta {w}_{+r}-\delta {w}_{-r}\) and \(\mathrm{ln}\,\mathrm{(1}+\delta x)\approx \delta x\) for small \(\delta x\), we obtain \(d{s}_{i}/dt\approx {w}_{-r\mathrm{,0}}^{-1}\delta {\rm{\Delta }}{w}_{r}^{2}\), i.e. a quadratic form with positive prefactor. Hence, near equilibrium the entropy production rate is minimized with respect to changes in the rates (or their parameters).

It is important to note that Eq. (15) is indistinguishable from an equilibrium system, e.g. as the equation is equivalent to an overdamped particle in an anharmonic potential, and the entropy production could mistakenly be written like \(d{s}_{i}/dt=({w}_{+1}+{w}_{-2}-{w}_{-1}-{w}_{+2}){\rm{l}}{\rm{n}}[({w}_{+1}+{w}_{-2})/({w}_{-1}+{w}_{+2})]=0\), which may appear like an extreme version of MinEPP! To remedy this problem, we can rewrite Eq. (15) by moving away from concentration constraints (forces) to flux constraints

where Eq. (17b) and (17c) represent explicitly the dynamics of the reservoir species a and b. Imposed flux F ensures that the molecule concentrations a and b are maintained and that the dynamics of species \(x\) are driven out of equilibrium. At steady state (given by \({x}^{\ast }\), \({a}^{\ast }\), and \({b}^{\ast }\)), the flux \(F={w}_{+1}-{w}_{-1}={w}_{+2}-{w}_{-2}\) produces entropy at a rate given by \(d{s}_{i}/dt=F[\mathrm{ln}({w}_{+1}/{w}_{-1})+\,\mathrm{ln}({w}_{+2}/{w}_{-2})]\), and hence Eq. (16) follows naturally as the sum of entropy production rates of the individual reactions, Eq. (17a–17c). The entropy production rate is plotted in Fig. 2d.

Issues with previous attempts to disprove MaxEPP

The Schlögl model was used in the past to ‘disprove’ MaxEPP52 (reviewed in40). The argument goes as follows: In the ODE model, the high state always has the higher entropy production (see Fig. 2c,d). This can easily be understood since overall in the Schlögl model species A is converted to species B (and vice versa). Hence, \(d{s}_{i}/dt=da/dt\cdot {\rm{\Delta }}\mu /T{\rm{\Delta }}x\) with Δμ the chemical potential difference for the overall reaction, T the temperature of the bath and \(da/dt\) a linear function of x (see Eq. (11a)). As a result, if MinEPP is the rule, then the low state should be selected, while MaxEPP would dictate that the high state is more stable.

This argument can be made sharper when we consider the results from the master equation in the large volume limit. The average concentration x, as we discussed before, switches from the low to the high state at a critical value \({b}_{c}\sim 3.65\), with the switch becoming progressively sharper with increasing volume45,53. This indicates a first-order phase transition and loss of bistability (Fig. 2c, red curves). Hence, for \(b < {b}_{c}\) the low state is selected, while for \(b > {b}_{c}\) the high state is selected. Since these correspond respectively to the low and high entropy-production rates (Fig. 2d, black curve), MinEPP (MaxEPP) would apply below (above) b c , and hence neither extremal principle would apply throughout.

What is the issue with this conclusion? Because this and other models with large fluctuations suffer from Keizer’s paradox42,43,44, which says that microscopic (master equation) and macroscopic (ODE) descriptions can yield very different results, in particular when fluctuations play an important role (bistable systems, systems with possibility of extinction etc.). Ultimately, this paradox is caused by the switching of the order of the limits40. In the macroscopic description, the infinite volume limit is taken first (to derive the ODE), and then the infinite time limit is taken (for obtaining the steady states), while in the microscopic description the opposite order is applied. Since the above argument combines the entropy production from the bistable ODE model with the weights from the master equation, which is mono-stable in the infinite volume limit, this mixing of models may have led to the wrong conclusion regarding MaxEPP.

MaxEPP in a simple bistable model

Having identified an inconsistency in the argument to disprove MaxEPP in the Schlögl model, we now proceed to rescue MaxEPP as a valid principle for determining the weights of steady states. Specifically, we would first like to demonstrate that the order of the weights of the two stable steady states (Fig. 3a) matches the order of their entropy production rates so that the state with the larger weight also has the larger entropy production rate. This would confirm the MaxEPP in this particular case. To obtain these rates, we Taylor-expanded the exact potentials around the steady-state values to obtain two Gaussian peaks for the weights of the two states for b near b c and large Ω (so that the analytical solution of the master equation is valid)

with k = 1, 2 for the two Gaussian peaks centered around steady-state values \({x}_{k}^{\ast }\), the inverse of \({\rm{\Phi }}^{\prime\prime} (x)={d}^{2}{\rm{\Phi }}(x)/d{x}^{2}\) proportional to the width of the peak, and \(N={[p({x}_{1}^{\ast })+p({x}_{2}^{\ast })]}^{-1}\) a normalization factor. Indeed, Fig. 3b shows that the exact numerical solution of the master equation, the analytical large-Ω limit, and the Gaussian approximation match reasonably well. Now, using the expression for the average stochastic entropy production rate at steady state (Eq. (8))35,37,54

where the second line is valid for large Ω, shows that the order of the rates indeed matches the order of the weights (Fig. 3c). This can be easily understood by approximating the \({k}^{{\rm{th}}}\) peak by \(\delta \)-function \({p}_{k}(x)=Np({x}_{k}^{\ast })\delta (x-{x}_{k}^{\ast })\), resulting in \(d{S}_{i,k}/dt=Np({x}_{k}^{\ast }){\rm{\Omega }}{\sum }_{r}^{\pm 2}{w}_{-r}({x}_{k}^{\ast }){\rm{l}}{\rm{n}}[{w}_{-r}({x}_{k}^{\ast })/{w}_{r}({x}_{k}^{\ast })]=Np({x}_{k}^{\ast }){\rm{\Omega }}d{s}_{i,k}/dt\) with \(d{s}_{i,k}/dt\) the rate of macroscopic entropy production for state k from Eq. (16). Hence, when the weights of the states change from \(p({x}_{1}^{\ast })\gg p({x}_{2}^{\ast })\) to \(p({x}_{1}^{\ast })\ll p({x}_{2}^{\ast })\) for increased driving, then \(d{S}_{i\mathrm{,1}}/dt > d{S}_{i\mathrm{,2}}/dt\) changes to \(d{S}_{i\mathrm{,2}}/dt > d{S}_{i\mathrm{,1}}/dt\) (even though \(d{s}_{i\mathrm{,1}}/dt < d{s}_{i\mathrm{,2}}/dt\) always applies after dividing by Ω, see Fig. 2d). Consequently, the entropy production rate \(d{S}_{i,k}/dt\), when correctly written as an extensive variable, can indeed be a proxy for the weight of a state \(p({x}_{k}^{\ast })\).

MaxEPP in Schlögl model. (a) Values of \(p({x}^{\ast })\) from large-Ω limit of master equation evaluated at the low (solid curve) and high (dashed curve) steady states \({x}^{\ast }\) for different \(b\) values. (inset) Weight of states from exact master equation by summing up probabilities for each peak in probability distribution (local minimum between low and high state is separatrix). (b) Distribution \(p(x)\) from the exact master equation (red curve), large-Ω limit of the master equation (red dotted curve), and Gaussian approximation (black curve) for low and high states. (c) Entropy production rates calculated for each Gaussian peak. (inset) Entropy productions from exact master equation for each state by summing up contributions for each peak in probability distribution (local minimum between low and high state is separatrix). (a–c) \({\rm{\Omega }}=100\). Remaining parameters as in Fig. 3.

Even without the Gaussian approximation, we can obtain the weights of the states and their entropy production rates using the master equation. We can split up the contributing x values into two parts (corresponding to the two states) by using the separatrix as the natural attractor boundary (local minimum \({x}_{{\rm{\min }}}\approx 0.9\) of probability distribution in Fig. 3b). This produces the same qualitative result that the entropy production rates and weights of the two states are correlated (cf. insets of Fig. 3a,c). Nevertheless, there are quantitative differences between the two approaches as the curves of the weights and the entropy production rates do not cross exactly at the same b value. This is because the order of the weights shown in Fig. 3a also depends on the curvature \({\rm{\Phi }}^{\prime\prime} (x)\) of the potential in a nontrivial way. Consider the ratio of the transition rates between low (1) and high (2) states, given by40,45

(see Supplementary Information for details). The prefactor alone suggests that the lower the curvature the higher the weight of a state but this weak curvature dependence (outside exponential) only reflects the attempt frequency to escape the attractor. In contrast, Fig. 2e suggests that the crossing of the curvature marks the transition (cf. Fig. 2c). In particular, the more stable state (i.e. the low state below \({b}_{c}\) and the high state above \({b}_{c}\)) appears to have the higher curvature. A higher curvature may imply a larger depth of the potential \({{\rm{\Phi }}}_{{\rm{O}}DE}({x}_{k})\) and hence increased stability. However, a proper treatment requires the inclusion of noise, which is done next.

General MaxEPP for nonequilibrium steady states

Can we establish a formal link between the weight of a state and its entropy production and curvature in general? In the following, we approach the problem using the Jaynes’ maximum caliber method19,55, which, put simply, is just an inference method similar to maximum entropy methods for equilibrium systems26. Basically, we wish to find the probability of a certain configuration for a system in a way that neither assumes something we do not know, nor contradicts something we do know. For this purpose, we define the caliber for the probability \({p}_{\Gamma }\) of observing a trajectory of duration t

where the first term on the right-hand side is the Shannon information entropy and the second term is a constraint. Our constraint is designed to implement that the action \({A}_{\Gamma }={\int }_{0}^{t}L(\tilde{t})d\tilde{t}\) is minimized with λ a (positive) Lagrange multiplyer (reflecting our expectation that the observed average action and hence average difference in kinetic and potential energy are finite) and \(L(t)\) the Lagrangian. Indeed, maximizing the entropy with respect to the probability of observing a trajectory

leads to a Boltzmann-like probability distribution (with action in units of λ −1), with \({P}_{{\rm{\Gamma }}}\) the larger the smaller the action, representing the stochastic least-action principle31,32. Now, using the Evans-Searles fluctuation theorem37,56

where the ratio of the probabilities of forward and backward (time-reversed) trajectories corresponds to the exponential of the entropy produced along trajectory Γ at steady state. Hence, the entropy production

is the difference between the backward and forward actions at steady state (see also32). While \({A}_{{\rm{\Gamma }}}\) is minimal by construction, we have no information about \({A}_{-{\rm{\Gamma }}}\) (MaxEPP would be proven if \({A}_{-{\rm{\Gamma }}}\) is maximal). To gain insight into the problem we derive in the following the entropy production for steady states explicitly.

To combine the best of ODEs and master equations, we extend Eq. (17a–17c) by the following set of Langevin equations (stochastic differential equations)

with short notations \({{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(x)=\partial {{\rm{\Phi }}}_{{\rm{ODE}}}(a,b,x)/\partial x\), \({{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(a)=\partial {{\rm{\Phi }}}_{{\rm{ODE}}}(a,b,x)/\partial a\) and \({{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(b)=\) \(\partial {{\rm{\Phi }}}_{{\rm{ODE}}}(a,b,x)/\partial b\) (although a unique \({{\rm{\Phi }}}_{{\rm{ODE}}}(a,b,x)\) may not exist57). In Eq. (25a–25c), the η’s represent noise terms. In the presence of noise, the steady state is now defined by the vanishing time derivatives of the averages \(d{\langle x\rangle }^{\ast }/dt=d{\langle a\rangle }^{\ast }/dt=d{\langle b\rangle }^{\ast }/dt=0\), where notations are related by \({\langle a\rangle }^{\ast }={a}^{\ast }\), \({\langle b\rangle }^{\ast }={b}^{\ast }\), and \({\langle x\rangle }^{\ast }={x}^{\ast }\).

There are a number of different ways of how to model the noise. In chemical reactions, noise is attributed to independent stochastic birth and death processes of the chemical species58, leading to multiplicative noise of the form \({\eta }_{a}(t)=\sqrt{\varepsilon }{g}_{a}{\xi }_{a}(t)\) and \({\eta }_{b}(t)=\sqrt{\varepsilon }{g}_{b}{\xi }_{b}(t)\) with small parameter \(\varepsilon =\mathrm{1/}{\rm{\Omega }}\) and effective temperatures \({g}_{a}={g}_{a}(a,b,x)={w}_{+1}+{w}_{-1}\) and \({g}_{b}={g}_{b}(a,b,x)={w}_{+2}+{w}_{-2}\) (and \({g}_{x}={g}_{a}+{g}_{b}\) for completeness). The fluctuations themselves (\(\xi \)’s) are assumed to be ‘white’ with correlations \(\langle {\xi }_{k}(t){\xi }_{k^{\prime} }(t^{\prime} )\rangle ={\delta }_{k,k^{\prime} }\delta (t-t^{\prime} )\) and \(k=a,b\). The noise in X is just a reflection of the noises in A and B with \({\eta }_{x}(t)=-\sqrt{\varepsilon }[{g}_{a}{\xi }_{a}(t)+{g}_{b}{\xi }_{b}(t)]\), thus avoiding double counting of noise contributions. For simplicity, we assume additive noise from now on, characterized by constant effective temperatures \({g}_{k}^{\ast }={g}_{k}({a}^{\ast },{b}^{\ast },{x}^{\ast })\) as evaluated at the steady state. The usage of a constant effective temperature is a suitable approximation when the noise (or ε) is small and the system is settled into its steady state (rare switching only; however, these effective temperatures can be different for the different states in a multistable system)59.

To reconnect with Eq. (24), the stochastic action \({A}_{{\rm{\Gamma }}}=A[{\bf{q}}(t)]\) of the combined dynamics of \({\bf{q}}(t)=\) \(\{x(t),a(t),b(t)\}\) is required, where we now introduce short notations \(\dot{{\bf{q}}}=\{\dot{x},\dot{a},\dot{b}\}=\{dx/dt,da/dt,db/dt\}\) for the time derivatives. According to60,61 the action for the system of Langevin Eq. (25a–25c) with additive noise is

with \({\sigma }_{a}=1\), \({\sigma }_{b}=-1\), and \({\sigma }_{x}=0\) for a trajectory of duration t (related expressions can be derived for multiplicative noise, see62,63,64,65). Note that the integral (or time average) over \(F\dot{q}\) is zero and hence this term does not appear in Eq. (26). Furthermore, Eq. (26) shows that the curvature \({{\rm{\Phi }}^{\prime\prime} }_{{\rm{O}}DE}\) of the potential does affect the probability of a trajectory, reflecting the disfavoring of high noise32. Now, combining Eqs (24) and (26), the entropy production is given by

with \(\overline{\mathrm{[...]}}\) indicating time averaging for duration \(t\) 66,67. Two comments are in order. First, for the backward action, all time derivatives need reversing in sign, such as for \(\dot{a}\), \(\dot{b}\) and \(\dot{x}\). (The change in sign of \(d\tilde{t}\) in the integral is canceled by the change in order of integration.) Second, the term \(\overline{[\dot{x}{{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(x)]}\) is zero as there is no net flux in \(x\) (see Supplementary Information)68,69.

In summary, the action in Eq. (26) contains a classical part (i.e. difference of kinetic and potential energies), a dissipative part (entropy production), and a stochastic part (curvature). Hence, trajectories do not only minimize the classical and stochastic actions (equivalent to solving the dynamical equations) but also maximize the entropy production (due to negative sign in front of the entropy production term). Eq. (26) thus results in the MaxEPP for trajectories in a multistable dynamical system, and is the main result of this paper.

Simplified MaxEPP for nonequilibrium steady states

The entropy production appearing in the action can be brought to a more familiar form, at least heuristically. The entropy production for reservoir species A can be rewritten as

and accordingly for species B. We need to be careful with the last term of Eq. (28). Discretizing the Langevin equation, we obtain

(see Supplementary Information)68,69,70, where we replaced time averages \(\overline{\mathrm{[...]}}=\mathrm{1/}t{\int }_{0}^{t}dt\mathrm{...}\) by ensemble averages \(\langle \ldots \rangle =\int da\,p(a,b,x\mathrm{)...}\) at steady state, valid for sufficiently long trajectories. (However, this ensemble average is technically restricted to sampling from a particular steady state, as we do consider switching between states here). According to Eq. (29) the last term in Eq. (28) is smaller than the first two by a factor ε. Now, introducing ensemble averages throughout, we can, at least heuristically, introduce the potential from the master equation

using the detour of the Fokker-Planck potential as another approximation to the master equation (see Supplementary Information for details). Next, using Jensen’s inequality for convex functions, we obtain the approximate steady-state weight \(p({x}^{\ast })\approx {\langle {p}_{{\rm{\Gamma }}}\rangle }_{{x}^{\ast }}=\langle {e}^{-{A}_{{\rm{\Gamma }}}}\rangle /Q\ge {e}^{\langle {A}_{{\rm{\Gamma }}}\rangle }/Q\), which becomes an exact equality for small noise. Keeping only the highest order terms in Ω (or lowest order terms in ε) and using Eq. (26), the steady-state weight becomes

up to normalization and valid for durations t much smaller than the time scale for switching states. In Eq. (16), the entropy production has now the familiar form given by Eq. (16) (also known as Schnakenber’s formula54). Typically, at steady state we have \(\langle {\dot{a}}^{2}\rangle \approx \langle {\dot{b}}^{2}\rangle \approx \langle {\dot{x}}^{2}\rangle \approx \langle {{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}{(x)}^{2}\rangle \approx 0\), as well as \(\langle {{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}{(a)}^{2}\rangle \approx -\langle {{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}{(b)}^{2}\rangle \approx F\), although tradeoffs among the different terms can occur. Hence, the main difference between the different steady states in a multistable system is the entropy production term, which depends on the steady-state value \({x}^{\ast }\).

Taken together, Eq. (31) demonstrates once more the roles of both the classical and the dissipative action in determining the probability of a steady state. Hence, MaxEPP is a principle for multistable systems in which the entropy production biases the evolution of the system towards the highest-entropy producing state.

Discussion

We showed that MaxEPP is applicable when comparing the two states in the simple bistable Schlögl model (using the master equation; Fig. 3a,c) and when considering trajectories of a multistable system at steady state in the large-volume limit (using the Langevin approximation; Eq. (31)). MaxEPP applies in the former because the weights of the low and high states shift in the exact stochastic approach due to a first-order phase transition. MaxEPP applies in the latter because trajectories minimize the action two-fold: First, the classical action is minimized, meaning that the dynamic system takes on its appropriate solution, i.e. \(\dot{x}=-{{\rm{\Phi }}^{\prime} }_{{\rm{ODE}}}(x)\). Second, the entropy production from the fluxes between the reservoir and the reaction volume is maximized. Hence, in a multistable system, the steady state with the highest entropy production is naturally selected (similar to the ‘state selection’ principle proposed in33). Our analytical derivations show that MaxEPP is a consequence of the least-action principle applied to dissipative systems (stochastic least-action principle). Note however the discrepancy in how the MaxEPP is achieved in the two approaches: using the master equation we observe a first-order phase transition and state switching at the critical point, while using the Langevin approximation, the high state is selected.

In addition to this local MaxEPP for states of a multistable system for a fixed driving force, there is also a trivial global MaxEPP principle, which simply says that the more a system is driven away from equilibrium the more it produces entropy (‘gradient response’ principle)33,71. This statement is simply a result of the average of Eq. (9) (or Eq. (24)) given by the Kullback-Leibler divergence between the forward and backward trajectory distributions \({P}_{\Gamma }\) and \({P}_{-\Gamma }\), respectively,

which has been mentioned before72. Equation (32) is minimally zero (at equilibrium due to detailed balance) and is the larger the more the forward and backward trajectories differ. Hence, the earlier discussed MinEPP is not really a separate principle, but simply a different perspective of the global MaxEPP. Figure 4 summarizes the two MaxEPPs and the MinEPP for a bistable system at steady state.

Min- and MaxEPPs. Illustration of MinEPP and two different MaxEPP in a semi-log plot of entropy production rate \(dS/dt\) versus control parameter b. MinEPP is valid near equilibrium, where \(dS/dt\gtrsim 0\). MaxEPP 1 simply states that the more a system is driven away from equilibrium the more entropy is produced. MaxEPP 2 is more subtle, describing how states are selected in a multistable system.

Our results can be connected to recent results in fluid systems. Similar to the Schlögl model with a nonequilibrium first-order phase transition, flow systems undergo a laminar-turbulent flow transition as the Reynolds number (Re) increases17. In both systems, MaxEPP applies and can be used to predict the critical transition point (Fig. 3a,c in the former and Fig. 2 of17 in the latter). What are the weights of the states in the fluid system? As a macroscopic system, the system is largely monostable - below the critical Re, laminar flow is dominant, while above it, turbulent flow is the result. This is analogous to the macroscopic Schlögl model, where the bistable region disappears for increased system size and a first-order phase transition results (Fig. 2c). However, even in the fluid system, the laminar state can be metastable even for relatively large Re if unperturbed. This is a sign of hysteresis and hence bistability, and so both laminar and turbulent flows may coexist with the turbulent flow the more stable state (turbulent flow never switches back to laminar flow when Re is above the critical value).

Another previously investigated physical system is the fusion plasma, where a thin layer of fluid is heated from one side. Models of heat transport in the boundary layer predict that the MaxEPP (MinEPP) applies when the heat flux (temperature gradient) is fixed3,21. Similarly in the Schlögl model, when the concentrations of species A and B are fixed (like the temperature in the fusion plasma), the system becomes indistinguishable from an equilibrium system (see comments above Eq. (17a)) and the entropy production is zero (extreme version of MinEPP). However, once fluxes are fixed (Eq. (17b) and (17c), or Eq. (25b) and (25c)), the MaxEPP results (Eq. (26)). We believe that flux constraints correspond to the more physically correct scenario as now the entropy production of the macroscopic (ODE) model matches the entropy production of the microscopic model as described by the exact master equation (cf. Eqs (7a) and (16)). In both above described flow systems, dissipative structures form when strongly driven. In the former fluid system, turbulent swirl structures appear while in the latter plasma system a shear flow is induced. What do such dissipative structures correspond to in the Schlögl model? There are large fluctuations and inhomogeneities in the spatial Schlögl model with diffusion for increasing system size, although these may represent the approach of the critical point and less actual dissipative structures45.

Paradoxically, work in the fluid system raised the possibility that both MinEPP and MaxEPP apply simultaneously. MinEPP appears to predict the flow rates in parallel pipes while MaxEPP seems to predict the flow regime (laminar versus turbulent)20. This can potentially be explained by our Eq. (31) as follows: The first term, which represents the classical action, may lead to a reduced entropy production (and potentially MinEPP), if the effective temperature \({g}_{x}^{\ast }\) (noise) is small. In this case, the entropy production (second term) is less crucial to fulfill. In contrast, if the noise is large, the classical action becomes a less important constraint, and the entropy production becomes important, leading necessarily to MaxEPP.

The MaxEPPs was previously also applied to ecosystem functioning, which aims to predict the evolution of large-scale living systems in terms of thermodynamics (also called ecological thermodynamics). Considering simple food-web models of predators, preys, and other resources, the state-selection and gradient-response principles were found to break down in more complicated models with multiple trophic (hierarchical) levels33. However, the stability of the steady states was assessed with linear stability analysis, i.e. through the response to small perturbations around the macroscopic steady state. However, as we showed, the macroscopic Schlögl model predicts the wrong stability and only in the thermodynamic limit of the microscopic master-equation model the MaxEPP is predicted correctly.

Our interpretation of MaxEPP is in line with the recent finding that the entropy production, by itself, is not a unique descriptor of the steady-state probability distribution73. According to Eq. (26), other terms matter for the probability of a trajectory, such as the classical action and terms disappearing in the limit of large Ω. In fact, far-from-equilibrium physics has many pitfalls. While Eq. (32) leads to safe predictions about the expected entropy production, the fluctuation theorem for individual trajectories given by Eq. (23), i.e. \({P}_{{\rm{\Gamma }}}/{P}_{-{\rm{\Gamma }}}=\exp ({\rm{\Delta }}{S}_{{\rm{\Gamma }}})\), has to be treated with caution. A trajectory Γ with a large ratio \({P}_{{\rm{\Gamma }}}/{P}_{-{\rm{\Gamma }}}\) is not necessarily selected because it has a large entropy production; \({P}_{{\rm{\Gamma }}}\) might still be tiny (and \({P}_{-{\rm{\Gamma }}}\) even tinier) so that Γ is extremely unlikely to occur. Whether a trajectory is actually selected depends on the underlying chemical rules or physical laws (see classic action in Eq. (26) and Supplementary Information with Fig. S3 for an explicit example).

Future work may investigate applications of MaxEPP in models of nonequilibrium self-assembly, climate, and the emergence of molecular complexity (or life). Imagine there are two stable steady states, one with high complexity and high entropy production, and another one with low complexity and low entropy production. We speculate that the high complexity state is more likely as long as the extra cost from the entropy reduction due to complexity is offset by a significantly larger entropy production. Another issue to keep in mind is that evolution of our biosphere may not be at steady state, and so transient behavior may need to be investigated.

References

Schopf, J. W. (ed.) Life’s Origin (University of California Press, 2002).

Schrödinger. E., What is Life? (Cambridge University Press, 1944).

Yoshida, Z. & Mahajan, S. M. “Maximum” entropy production in self-organized plasma boundary layer: A thermodynamic discussion about turbulent heat transport. Phys Plasmas 15, 032307 (2008).

Kawazura, Y. & Yoshida, Z. Comparison of entropy production rates in two different types of s elf-organized flows: Bénard convection and zonal flow. Phys Plasmas 19, 012305 (2012).

Helmholtz, H. Zur Theorie der stationären Ströme in reibenden Flüssigkeiten. Wiss. Abh. 1, 223–230 (1968).

Rayleigh., L. On the motion of viscous fluid. Phil. Mag. 26, 776–786 (1913).

Onsager, L. Reciprocal relations in irreversible processes I & II. Phys. Rev. 37, 405–426 & 38, 2265–2279 (1931).

Paltridge, G. W. The steady-state format of global climate. Quart. J. Royal Meteorol. Soc. 104, 927–945 (1978).

H. Ziegler, An Introduction to Thermomechanics (North-Holland Publ. Co., New York, 1977).

Whitfield, J. Complex systems: order out of chaos. Nature 436, 905–907 (2005).

Dewar, R. C., Lineweaver, C., Niven, R. K. & Regenauer-Lieb, K. Beyond the second law - entropy production and non-equilibrium systems, chapters 1 and 7 (Springer, 2014).

Kleidon, A. Beyond Gaia: thermodynamics of life and earth system functioning. Clim. Change 66, 271–319 (2004).

England, J. L. Statistical physics of self-replication. J. Chem. Phys. 139, 121923 (8pp) (2013).

England, J. L. Dissipative adaptation in driven self-assembly. Nature Nanotech 10, 919–923 (2015).

Lorenz, R. D., Lunine, J. I. & Withers, P. G. Titan, Mars and Earth: entropy production by latitudinal heat transport. Geophys. Res. Lett. 28, 415–418 (2001).

del Jesus, M., Foti, R., Rinaldo, A. & Rodriguez-Iturbe, I. Maximum entropy production, carbon assimilation, and the spatial organization of vegetation in river basins. Proc. Natl. Acad. Sci. USA 109, 20837–20841 (2012).

Martyushev, L. M. Some interesting consequences of the maximum entropy production principle. J. Exp. Theor. Phys. 104, 651–654 (2007).

Prigogine, I. Introduction to Thermodynamics of Irreversible Processes (Intersci. Publ., New York, 1967, 3rd edition).

Jaynes, E. T. The Minimum entropy production principle. Annu. Rev. Phys. Chem. 31, 579–601 (1980).

Niven, R. K. Simultaneous extrema in the entropy production for steady-state fluid flow in parallel pipes. J. Non-Equil. Thermodyn. 35, 347–378 (2010).

Kawazura, Y. & Yoshida, Z. Entropy production rate in a flux-driven self-organizing system. Phys. Rev. E 82, 066403 (2010).

Martyushev, L. M. The maximum entropy production principle: two basic questions. Phil. Trans. R. Soc. B 365, 1333 (2010).

Dewar, R. C. Information theory explanation of the fluctuation theorem, maximum entropy production and self-organized criticality in non-equilibrium stationary states. J. Phys. A: Math. Gen. 36, 631–641 (2003).

Dewar, R. C. Maximum entropy production and the fluctuation theorem. J. Phys. A: Math. Gen. 38, L371–L381 (2005).

Bruers, S. A discussion on maximum entropy production and information theory. J. Phys. A: Math. Theor. 40, 7441–7450 (2007).

Dewar, R. C. Maximum entropy production as an inference algorithm that translates physical assumptions into Mmacroscopic predictions: don’t shoot the messenger. Entropy 11, 931–944 (2009).

Ross, J., Corlan, A. D. & Müller, S. C. Proposed principles of maximum local entropy production. J. Phys. Chem. B 116, 7858–7865 (2012).

Feynman, R. P., Leighton, R. B. & Sands, M. L., Feynman Lectures on Physics, Vol. 2, Lecture 19 (San FranciscoCA: Pearson/Addison-Wesley, 2006).

Doi, M. Onsager’s variational principle in soft matter. J. Phys.: Cond. Matt. 23, 284118 (8pp) (2011).

Bialek, W. Stability and noise in biochemical switches. arXiv:cond-mat/0005235v1 (2000).

Wang, Q. A. Maximum entropy change and least action principle for nonequilibrium systems. Astrophys. Space Sci. 305, 273–281 (2006).

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (58pp) (2012).

Meysman, F. J. & Bruers, S. Ecosystem functioning and maximum entropy production: a quantitative test of hypotheses. Phil. Trans. R. Soc. B 365, 1405–1416 (2010).

Landi, G. T., Tomé, T. & de Oliveira, M. J. Entropy production in linear Langevin systems. J. Phys. A: Math. Theor. 46, 395001 (2013).

Gaspard, P. Fluctuation theorem for nonequilibrium reactions. J. Chem. Phys. 120, 8898–8905 (2004).

Jiu-Li, L., Van der Broeck, C. & Nicolis, G. Stability criteria and fluctuations around nonequilibrium states. Z. Phys. B 56, 165–170 (1984).

Lebowitz, J. L. & Spohn, D. J. A Gallavotti-Cohen-type symmetry in the large deviation functional for stochastic dynamics. J. Stat. Phys. 95, 333–343 (1999).

Esposito, M. Stochastic thermodynamics under coarse-graining. Phys. Rev. E 85, 041124 (2012).

Ziener, R., Maritan, A. & Hinrichsen, H. On entropy production in nonequilibrium systems. J. Stat. Mech.: Theor. Exp. 2015, P08014 (2015).

Vellela, M. & Qian, H. Stochastic dynamics and non-equilibrium thermodynamics of a bistable chemical system: the Schlögl model revisited. J. Roy. Soc. Interface 6, 925–940 (2009).

Schlögl, F. Chemical reaction models for non-equilibrium phase transition. Z. Physik. 253, 147–161 (1972).

Kurtz, T. G. Limit theorems for sequences of jump Markov processes approximating ordinary differential equations. J. Appl. Prob. 8, 344–356 (1971).

Kurtz, T. G. The relationship between stochastic and deterministic models for chemical reactions. J. Chem. Phys. 57, 2976–2978 (1972).

Vellela, M. & Qian, H. A quasi stationary analysis of a stochastic chemical reaction: Keizer’s paradox. Bull. Math. Biol. 69, 1727–1746 (2007).

Endres, R. G. Bistability: requirements on cell-volume, protein diffusion, and thermodynamics. PLoS One 10, e0121681 (2015). (22pp).

Scheffer, M., Carpenter, S., Foley, J. A., Folke, C. & Walker, B. Catastrophic shifts in ecosystems. Nature 413, 591–6 (2001).

Ozbudak, E. M., Thattai, M., Lim, H. N., Shraiman, B. I. & van Oudenaarden, A. Multistability in the lactose utilization network of Escherichia coli. Nature 427, 737–740 (2004).

Wilhelm, T. The smallest chemical reaction system with bistability. BMC Syst. Biol. 3, 90 (2009).

Ertl, G. Engineering of Chemical Complexity II (World Scientific, 2015).

Gillespie, D. T. Exact stochastic simulation of coupled chemical reactions. J. Phys. Chem. 81, 2340–2361 (1977).

Hanggi, P., Grabert, H., Talkner, P. & Thomas, H. Bistable systems: master equation versus Fokker-Planck modelling. Phys. Rev. A 29, 371–378 (1984).

Nicolis, G. & Lefever, R. Comment on the kinetic potential and the Maxwell construction in non-equilibrium chemical phase transitions. Phys. Lett. A 62, 469–471 (1977).

Ge, H. & Qian, H. Thermodynamic limit of a nonequilibrium steady state: Maxwell-type construction for a bistable biochemical system. Phys. Rev. Lett. 103, 148103 (2009).

Schnakenberg, J. Network theory of microscopic and macroscopic behavior of master equation systems. Rev. Mod. Phys. 48, 571–585 (1976).

Presse, S., Ghosh, K., Lee, J. & Dill, K. A. Principles of maximum entropy and maximum caliber in statistical physics. Rev. Mod. Phys. 85, 1115–1141 (2013).

Kurchan, J. Fluctuation theorem for stochastic dynamics. J. Phys. A: Math. Gen. 31, 3719–3729 (1998).

Zhou, J. X., Aliyu, M. D. S., Aurell, E. & Huang, S. Quasi-potential landscape in complex multi-stable systems. J. R. Soc. Interface 9, 3539–3553 (2012).

Kampen, N. G. V. Stochastic Processes in Physics and Chemistry (North Holland, 3rd Edition, 2007).

Hasty, J., Pradines, J., Dolnik, M. & Collins, J. J. Noise-based switches and amplifiers for gene expression. Proc. Natl. Acad. Sci. USA 97, 2075–2080 (2000).

Arnold, P. Symmetric path integrals for stochastic equations with multiplicative noise. Phys. Rev. E 61, 6099–7102 (2000).

Navarra, A., Tribbia, J., Conti, G. The path integral formulation of climate dynamics. PLoS One 8, e67022 (16pp) (2013).

Tang, Y., Yuan, R. & Ao, P. Summing over trajectories of stochastic dynamics with multiplicative noise. J. Chem. Phys. 141, 044125 (8pp) (2014).

Zinn-Justin, J. Quantum Field Theory and Critical phenomena (Claredon Press, Oxford, 1996).

Hänggi, P. Path integral solutions for non-Markovian processes. Z. Phys. B: Cond. Matt. 75, 275–281 (1989).

Wio, H. S., Colet, P., San Miguel, M., Pesquera, L. & Rodriguez, M. A. Path-integral formulation for stochastic processes driven by colored noise. Phys. Rev. A 40, 7312–7324 (1989).

Hatano, T. & Sasa, S. Steady-state thermodynamics of Langevin systems. Phys. Rev. Lett. 86, 3463–3466 (2001).

Seifert, U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 95, 040602 (4pp) (2005).

Tomé, T. & De Oliveira, M. J. Stochastic mechanics of nonequilibrium systems. Braz. J. Phys. 27, 525–532 (1997).

Tomé, T. Entropy production in nonequilibrium systems described by a Fokker-Planck equation. Braz. J. Phys. 36, 1285–1289 (2006).

Xiao, T., Hou, Z. & Xin, H. Stochastic thermodynamics in mesoscopic chemical oscillation systems. J. Phys. Chem. B 113, 9316–9320 (2009).

Schneider, E. D. & Kay, J. J. Life as a manifestation of the second law of thermodynamics. Math. Comput. Model. 19, 25–48 (1994).

Searles, D. J. & Evans, D. J. Fluctuation relations, free energy calculations and irreversibility. Roy. Soc. Chem. 5, 182–207 (2008).

Zia, R. K. P. & Schmittmann, B. A possible classification of nonequilibrium steady states. J. Phys. A: Math. Gen. 39, L407–L413 (2006).

Acknowledgements

R.G.E. thanks Audrey Yurika Marvin for help with the simulations, Tânia Tomé for numerous helpful discussions, and Linus Schumacher for a critical reading of the manuscript. R.G.E. also thankfully acknowledges financial support from the European Research Council Starting Grant N. 280492-PPHPI and Biotechnological and Biological Sciences Research Council grant BB/G000131/1.

Author information

Authors and Affiliations

Contributions

R.G.E. conceived the study, developed the models and simulations, and wrote the paper.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Endres, R.G. Entropy production selects nonequilibrium states in multistable systems. Sci Rep 7, 14437 (2017). https://doi.org/10.1038/s41598-017-14485-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-14485-8

This article is cited by

-

Synchronization and Random Attractors in Reaction Jump Processes

Journal of Dynamics and Differential Equations (2024)

-

Microbial entropy change and external dissipation process of urban sewer ecosystem

Environmental Monitoring and Assessment (2024)

-

Is the maximum entropy production just a heuristic principle? Metaphysics on natural determination

Synthese (2023)

-

Equilibrium and non-equilibrium furanose selection in the ribose isomerisation network

Nature Communications (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.