Abstract

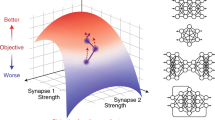

Finding sensory stimuli that drive neurons optimally is central to understanding information processing in the brain. However, optimizing sensory input is difficult due to the predominantly nonlinear nature of sensory processing and high dimensionality of the input. We developed ‘inception loops’, a closed-loop experimental paradigm combining in vivo recordings from thousands of neurons with in silico nonlinear response modeling. Our end-to-end trained, deep-learning-based model predicted thousands of neuronal responses to arbitrary, new natural input with high accuracy and was used to synthesize optimal stimuli—most exciting inputs (MEIs). For mouse primary visual cortex (V1), MEIs exhibited complex spatial features that occurred frequently in natural scenes but deviated strikingly from the common notion that Gabor-like stimuli are optimal for V1. When presented back to the same neurons in vivo, MEIs drove responses significantly better than control stimuli. Inception loops represent a widely applicable technique for dissecting the neural mechanisms of sensation.

Data availability

All figures were generated from raw or processed data. The data generated and/or analyzed during the current study are available from the corresponding author upon request. No publicly available data was used in this study.

Code availability

Experiments and analyses were performed using custom software developed using the following tools: ScanImage 2018a (ref. 60), CaImAn v.1.0 (ref. 61), DataJoint v.0.11.1 (ref. 62), PyTorch v.0.4.1 (ref. 63), NumPy v.1.16.4 (ref. 64), SciPy v.1.3.0 (ref. 65), Docker v.18.09.7 (ref. 66), Matplotlib v.3.0.3 (ref. 67), seaborn v.0.9.0 (ref. 68), pandas v.0.24.2 (ref. 69) and Jupyter v.1.0.0 (ref. 70). The code for carrying out the data collection and preprocessing is available at https://github.com/cajal/pipeline; the code to perform MEI generation and analysis is available at https://github.com/cajal/inception_loop2019.

References

Adrian, E. D. & Bronk, D. W. The discharge of impulses in motor nerve fibres: Part I. Impulses in single fibres of the phrenic nerve. J. Physiol. 66, 81–101 (1928).

Hartline, H. K. The response of single optic nerve fibers of the vertebrate eye to illumination of the retina. Am. J. Physiol. 121, 400–415 (1938).

Chichilnisky, E. J. A simple white noise analysis of neuronal light responses. Network 12, 199–213 (2001).

Olshausen, B. A. & Field, D. J. in Problems in Systems Neuroscience (eds Sejnowski, T. J. & van Hemmen, L.) 182–211 (Oxford Univ. Press, 2004).

Antolík, J., Hofer, S. B., Bednar, J. A. & Mrsic-Flogel, T. D. Model constrained by visual hierarchy improves prediction of neural responses to natural scenes. PLoS Comput. Biol. 12, e1004927 (2016).

Sinz, F. et al. Stimulus domain transfer in recurrent models for large scale cortical population prediction on video. In Proc. Advances in Neural Information Processing Systems 31 (eds Bengio, S. et al.) 7199–7210 (Curran Associates, 2018).

Harth, E. & Tzanakou, E. ALOPEX: a stochastic method for determining visual receptive fields. Vision Res. 14, 1475–1482 (1974).

Földiák, P. Stimulus optimisation in primary visual cortex. Neurocomputing 38–40, 1217–1222 (2001).

Paninski, L., Pillow, J. & Lewi, J. in Computational Neuroscience: Theoretical Insights into Brain Function (eds Cisek, P. et al.) 493–507 (Elsevier, 2007).

Benda, J., Gollisch, T., Machens, C. K. & Herz, A. V. From response to stimulus: adaptive sampling in sensory physiology. Curr. Opin. Neurobiol. 17, 430–436 (2007).

Yamins, D. L. K. & DiCarlo, J. J. Using goal-driven deep learning models to understand sensory cortex. Nat. Neurosci. 19, 356–365 (2016).

Cadieu, C. F. et al. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput. Biol. 10, e1003963 (2014).

Klindt, D., Ecker, A. S., Euler, T. & Bethge, M. Neural system identification for large populations separating “what” and “where”. Adv. Neural Inf. Process. Syst. 30, 3506–3516 (2017).

McIntosh, L. T., Maheswaranathan, N., Nayebi, A., Ganguli, S. & Baccus, S. A. Deep learning models of the retinal response to natural scenes. Adv. Neural Inf. Process. Syst. 29, 1369–1377 (2016).

Erhan, D., Bengio, Y., Courville, A. & Vincent, P. Visualizing higher-layer features of a deep network. Technical Report 1341 (University of Montreal, 2009).

Sofroniew, N. J., Flickinger, D., King, J. & Svoboda, K. A large field of view two-photon mesoscope with subcellular resolution for in vivo imaging. eLife 5, e14472 (2016).

Cadena, S. A. et al. Deep convolutional models improve predictions of macaque V1 responses to natural images. PLoS Comput. Biol. 15, e1006897 (2019).

Kindel, W. F., Christensen, E. D. & Zylberberg, J. Using deep learning to probe the neural code for images in primary visual cortex. J. Vis. 19, 29 (2019).

Zhang, Y., Lee, T. S., Li, M., Liu, F. & Tang, S. Convolutional neural network models of V1 responses to complex patterns. J. Comput. Neurosci. 46, 33–54 (2019).

Adelson, E. H. & Bergen, J. R. Spatiotemporal energy models for the perception of motion. J. Opt. Soc. Am. A 2, 284–299 (1985).

Hubel, D. H. & Wiesel, T. N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 148, 574–591 (1959).

Simoncelli, E. P. & Olshausen, B. A. Natural image statistics and neural representation. Annu. Rev. Neurosci. 24, 1193–1216 (2001).

Lindsey, J., Ocko, S. A., Ganguli, S. & Deny, S. A unified theory of early visual representations from retina to cortex through anatomically constrained deep CNNs. Preprint at bioRxiv https://www.biorxiv.org/content/10.1101/511535v1.full (2019).

DiCarlo, J. J. & Cox, D. D. Untangling invariant object recognition. Trends Cogn. Sci. 11, 333–341 (2007).

Sabour, S., Frosst, N. & Hinton, G. E. Dynamic routing between capsules. In Proc. Advances in Neural Information Processing Systems 30 (eds Guyon, I. et al.) 3856–3866 (2017).

Lehky, S. R., Sejnowski, T. J. & Desimone, R. Predicting responses of nonlinear neurons in monkey striate cortex to complex patterns. J. Neurosci. 12, 3568–3581 (1992).

Ecker, A. S. et al. A rotation-equivariant convolutional neural network model of primary visual cortex. International Conference on Learning Representations (ICLR) 2019 Conference Poster https://openreview.net/forum?id=H1fU8iAqKX (2018).

Pasupathy, A. & Connor, C. E. Population coding of shape in area V4. Nat. Neurosci. 5, 1332–1338 (2002).

Abbasi-Asl, R. et al. The DeepTune framework for modeling and characterizing neurons in visual cortex area V4. Preprint at bioRxiv https://www.biorxiv.org/content/biorxiv/early/2018/11/09/465534.full.pdf (2018).

Bashivan, P., Kar, K. & DiCarlo, J. J. Neural population control via deep image synthesis. Science 364, eaav9436 (2019).

Ponce, C. R. et al. Evolving images for visual neurons using a deep generative network reveals coding principles and neuronal preferences. Cell 177, 999–1009.e10 (2019).

Reimer, J. et al. Pupil fluctuations track fast switching of cortical states during quiet wakefulness. Neuron 84, 355–362 (2014).

Froudarakis, E. et al. Population code in mouse V1 facilitates readout of natural scenes through increased sparseness. Nat. Neurosci. 17, 851–857 (2014).

Garrett, M. E., Nauhaus, I., Marshel, J. H. & Callaway, E. M. Topography and areal organization of mouse visual cortex. J. Neurosci. 34, 12587–12600 (2014).

Pnevmatikakis, E. A. et al. Simultaneous denoising, deconvolution, and demixing of calcium imaging data. Neuron 89, 285–299 (2016).

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

Ioffe, S. & Szegedy, C. Batch normalization: accelerating deep network training by reducing internal covariate shift. In Proc. 32nd International Conference on Machine Learning, Lille, France 37, 448–456 (2015).

Clevert, D.-A., Unterthiner, T. & Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). Preprint at arXiv https://arxiv.org/pdf/1511.07289.pdf (2015).

Jaderberg, M., Simonyan, K., Zisserman, A. & Kavukcuoglu, K. Spatial transformer networks. In Proc. Advances in Neural Information Processing Systems 28 (eds Cortes, C. et al.) 2017–2025 (Curran Associates, 2015).

McGinley, M. J. et al. Waking state: rapid variations modulate neural and behavioral responses. Neuron 87, 1143–1161 (2015).

Fu, Y. et al. A cortical circuit for gain control by behavioral state. Cell 156, 1139–1152 (2014).

Zoccolan, D., Graham, B. & Cox, D. A self-calibrating, camera-based eye tracker for the recording of rodent eye movements. Front. Neurosci. 4, 193 (2010).

Stahl, J. S., van Alphen, A. M. & De Zeeuw, C. I. A comparison of video and magnetic search coil recordings of mouse eye movements. J. Neurosci. Methods 99, 101–110 (2000).

van Alphen, B., Winkelman, B. H. & Frens, M. A. Three-dimensional optokinetic eye movements in the C57BL/6J mouse. Invest. Ophthalmol. Vis. Sci. 51, 623–630 (2010).

Prechelt, L. Early stopping — but when? in Neural Networks: Tricks of the Trade (eds Montavon, G., Orr, G., & Müller, K.-R.) 53–67 (Springer, 1998).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at arXiv https://arxiv.org/pdf/1412.6980.pdf (2017).

Nguyen, A., Dosovitskiy, A., Yosinski, J., Brox, T. & Clune, J. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. Adv. Neural Inf. Process. Syst. 29, 3387–3395 (2016).

Nguyen, A. M., Yosinski, J. & Clune, J. Multifaceted feature visualization: uncovering the different types of features learned by each neuron in deep neural networks. Preprint at arXiv https://arxiv.org/pdf/1602.03616.pdf (2016).

Wei, D., Zhou, B., Torralba, A. & Freeman, W. T. Understanding intra-class knowledge inside CNN. Preprint at arXiv https://arxiv.org/pdf/1507.02379.pdf (2015).

Olah, C., Mordvintsev, A. & Schubert, L. Feature visualization: how neural networks build up their understanding of images. Distill https://distill.pub/2017/feature-visualization (2017).

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: visualising image classification models and saliency maps. International Conference on Learning Representations (ICLR) Workshop Paper https://arxiv.org/abs/1312.6034 (2014).

Kindermans, P.-J., Schütt, K. T., Alber, M., Müller, K.-R. & Dähne, S. Learning how to explain neural networks: PatternNet and PatternAttribution. Preprint at arXiv https://arxiv.org/pdf/1705.05598.pdf (2017).

Yosinski, J., Clune, J., Nguyen, A., Fuchs, T. & Lipson, H. Understanding neural networks through deep visualization. Preprint at arXiv https://arxiv.org/pdf/1506.06579.pdf (2015).

Gatys, L. A., Ecker, A. S. & Bethge, M. A neural algorithm of artistic style. Preprint at arXiv https://arxiv.org/pdf/1508.06576.pdf (2015).

Mahendran, A. & Vedaldi, A. Understanding deep image representations by inverting them. Preprint at arXiv https://arxiv.org/pdf/1412.0035.pdf (2015).

Lenc, K. & Vedaldi, A. Understanding image representations by measuring their equivariance and equivalence. Preprint at arXiv https://arxiv.org/pdf/1411.5908.pdf (2015).

Tsai, C.-Y. & Cox, D. D. Characterizing visual representations within convolutional neural networks: toward a quantitative approach. In Proc. Workshop on Visualization for Deep Learning, 33rd International Conference on Machine Learning (2016).

Øygard, A. Visualizing GoogLeNet classes. Audun M. Øygard Blog https://www.auduno.com/2015/07/29/visualizing-googlenet-classes/ (2015).

Sreedhar, K. & Panlal, B. Enhancement of images using morphological transformations. Int. J. Comput. Sci. Inf. Technol. 4, 33–50 (2012).

Pologruto, T. A., Sabatini, B. L. & Svoboda, K. ScanImage: flexible software for operating laser scanning microscopes. Biomed. Eng. Online 2, 13 (2003).

Giovannucci, A. et al. CaImAn: an open source tool for scalable calcium imaging data analysis. eLife 8, e38173 (2019).

Yatsenko, D., Walker, E. Y. & Tolias, A. S. DataJoint: a simpler relational data model. Preprint at arXiv https://arxiv.org/pdf/1807.11104.pdf (2018).

Paszke, A. et al. Automatic differentiation in PyTorch. In Proc. Advances in Neural Information Processing Systems (NIPS) 31 Workshop Autodiff Submission (2017).

van der Walt, S., Colbert, S. C. & Varoquaux, G. The NumPy array: a structure for efficient numerical computation. Comput. Sci. Eng. 13, 22–30 (2011).

Jones, E. et al. SciPy: Open Source Scientific Tools for Python http://www.scipy.org (SciPy.org, accessed 3 October 2019).

Merkel, D. Docker: lightweight Linux containers for consistent development and deployment. Linux J. 239, 2 (2014).

Hunter, J. D. Matplotlib: a 2D graphics environment. Comput. Sci. Eng. 9, 90–95 (2007).

Waskom, M. et al. mwaskom/seaborn: v.0.8.1 (September 2017). Zenodo https://zenodo.org/record/883859#.XZXIjUZKguV (2017).

McKinney, W. Data structures for statistical computing in Python. In Proc. 9th Python in Science Conference (eds van der Walt, S. & Millman, J.) 51–56 (2010).

Kluyver, T. et al. Jupyter notebooks: a publishing format for reproducible computational workflows. In Proc. 20th International Conference on Electronic Publishing. Positioning and Power in Academic Publishing: Players, Agents and Agendas (eds Loizides, F. & Schmidt, B.) 87–90 (IOS Press, 2016).

Acknowledgements

We thank G. Denfield for comments on the manuscript. This research was supported by the Intelligence Advanced Research Projects Activity (IARPA) via Department of Interior/Interior Business Center (DoI/IBC) contract no. D16PC00003. The US Government is authorized to reproduce and distribute reprints for governmental purposes notwithstanding any copyright annotation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of IARPA, DoI/IBC or the US Government. This research was also supported by grant no. R01 EY026927 to A.S.T., National Eye Institute/National Institutes of Health Core Grant for Vision Research (no. T32-EY-002520-37), National Science Foundation NeuroNex grant no. 1707400 to X.P. and A.S.T., and grant no. F30EY025510 to E.Y.W. F.H.S. is supported by the Institutional Strategy of the University of Tübingen (ZUK 63) and the Carl-Zeiss-Stiftung. F.H.S. acknowledges the support from the German Federal Ministry of Education and Research (BMBF) through the Tübingen AI Center (FKZ: 01IS18039A), the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – EXC-Number 2064/1 – Project number 390727645, and Amazon AWS through a Machine Learning Research Award. P.G.F. received support from the BCM Medical Scientist Training Program, no. F30-MH112312. The name of the authors’ approach, inception loops, was inspired by the movie Inception directed by Christopher Nolan.

Author information

Contributions

All authors designed the experiments and developed the theoretical framework. E.Y.W. designed and implemented the inception loop framework with contributions from F.H.S. and E.C. T.M. performed the surgeries and conducted the recordings with contributions from E.F., P.G.F. and J.R. E.Y.W. performed data analyses on mice 1 and 2. E.Y.W. and E.C. performed the data analyses on mice 3–5. E.Y.W., F.H.S., A.S.E., X.P. and A.S.T. wrote the manuscript, with contributions from all authors. A.S.T. supervised all stages of the project.

Ethics declarations

Competing interests

E.Y.W., J.R. and A.S.T. hold equity ownership in Vathes LLC, which provides development and consulting for the framework (DataJoint) used to develop and operate the data analysis pipeline for this publication.

Additional information

Peer review information Nature Neuroscience thanks Bruno Olshausen, Joel Zylberberg, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Integrated supplementary information

Supplementary Fig. 1 Stability of MEIs.

a: MEIs are stable across initializations. MEIs for six neurons from Mouse 4 generated from four different images as the initial guess. b: Cells were reliably matched between days (left versus right) by aligning the recording planes into each stack (shown for Mouse 1). The two panels show example recording planes on separate days with a subset of the cells used to generate MEIs (colored masks). Cells with identical numbers were matched. c: MEIs are stable across days. Each block shows the MEIs of matched cells computed from models trained to predict natural image responses from scans from three separate days for Mouse 1.

Supplementary Fig. 2 Matching of cells across days.

Pearson cross-correlation of our target cells’ responses (day 1, rows in each matrix) to those of their matched cells (day N, columns in each matrix) over the test set images presented in every scan. From the five mice, a total of 2, 3, 3, 2, and 4 scans were obtained and reliably matched to the cells recorded from day 1 in that mouse. High correlations on the diagonal of the matrices suggest we were able to match cells reliably across days.
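
The matrix described in this caption can be illustrated with a minimal NumPy sketch. It is not the authors' analysis code: the function name `cross_day_correlation`, the array layout (cells × test images) and the synthetic responses are all assumptions made for illustration.

```python
import numpy as np

def cross_day_correlation(day1, day_n):
    """Pearson correlation between every day-1 cell (rows) and every
    day-N cell (columns), computed over the shared test images.

    Both inputs are assumed to have shape (n_cells, n_images).
    """
    z1 = (day1 - day1.mean(axis=1, keepdims=True)) / day1.std(axis=1, keepdims=True)
    zn = (day_n - day_n.mean(axis=1, keepdims=True)) / day_n.std(axis=1, keepdims=True)
    return z1 @ zn.T / day1.shape[1]

# Synthetic stand-in data: each cell has a fixed tuning across 100 test
# images, observed with independent noise on the two days.
rng = np.random.default_rng(0)
tuning = rng.normal(size=(5, 100))
day1 = tuning + 0.3 * rng.normal(size=tuning.shape)
day_n = tuning + 0.3 * rng.normal(size=tuning.shape)

corr = cross_day_correlation(day1, day_n)
diagonal = np.diag(corr)                      # matched pairs
off_diagonal = corr[~np.eye(5, dtype=bool)]   # mismatched pairs
```

In this toy setting, correctly matched cells give a high-valued diagonal while mismatched pairs stay near zero, which is the pattern the caption interprets as reliable matching.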

Supplementary Fig. 3 CNN models are nonlinear in non-trivial ways.

The two plots show the first ten eigenvalues of the covariance matrix of the gradients of the CNN model (blue) and the linear-nonlinear (LN) model (red) on the entire image set. Different spectra correspond to different neurons (thin lines), each normalized to its largest eigenvalue. The average normalized spectra across neurons are indicated by the thick colored lines. As expected, the LN model has a one-dimensional gradient spectrum; however, the CNN model has several eigenvalues greater than zero, demonstrating that it is nonlinear in a non-trivial way.
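
The reasoning behind this comparison can be sketched with toy models. For an LN model f(x) = g(w·x), every gradient is a scalar multiple of w, so the gradient covariance has a single nonzero eigenvalue. The example below contrasts this with a two-filter energy model, used here only as a hypothetical stand-in for a nonlinear model. It is not the CNN from the study, and all names and parameters are illustrative assumptions.

```python
import numpy as np

def normalized_gradient_spectrum(gradients, n_eigs=10):
    """Largest eigenvalues of the gradient covariance matrix,
    scaled so that the largest eigenvalue equals 1."""
    cov = np.cov(gradients, rowvar=False)
    eigvals = np.linalg.eigvalsh(cov)[::-1][:n_eigs]  # descending order
    return eigvals / eigvals[0]

rng = np.random.default_rng(0)
n_images, n_pixels = 2000, 64
images = rng.normal(size=(n_images, n_pixels))

# Two orthonormal toy filters (assumption: chosen only for illustration).
q, _ = np.linalg.qr(rng.normal(size=(n_pixels, 2)))
w1, w2 = q[:, 0], q[:, 1]

# LN model f(x) = exp(w1.x): gradient is f(x) * w1, always parallel to w1.
ln_grads = np.exp(images @ w1)[:, None] * w1

# Energy model f(x) = (w1.x)^2 + (w2.x)^2: gradient spans w1 and w2.
energy_grads = 2 * (images @ w1)[:, None] * w1 + 2 * (images @ w2)[:, None] * w2

ln_spectrum = normalized_gradient_spectrum(ln_grads)
energy_spectrum = normalized_gradient_spectrum(energy_grads)
```

Here `ln_spectrum` has one nonzero entry, whereas `energy_spectrum` has two. A spectrum with several nonzero eigenvalues therefore indicates that the model's gradient direction changes with the input.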

Supplementary Fig. 4 All MEIs.

Most Exciting Inputs (MEI) for all 150 target cells in each of the five mice as they were presented back to the mouse on day 2 and beyond. Each image represents an MEI image of a distinct neuron computed from the CNN models fitted on all neurons from the same scan.

Supplementary Fig. 5 Stability of MEIs across initializations.

Stability of MEI optimization across random starting initializations for 150 target cells in Mouse 5. Left: Average pairwise Pearson correlation (μ = 0.99) across five MEIs started from different random images; correlation was restricted to pixels inside the MEI mask. Right: Highest/lowest MEI activation across five MEIs created from different random starting images (ρ = 0.99).
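
A stability check of this kind can be sketched as follows. This is a toy illustration, not the authors' MEI pipeline: the linear-exponential `toy_response` stands in for a trained model, and the fixed-norm constraint, step size, number of steps and mask threshold are all assumptions.

```python
import numpy as np

def toy_response(image, weights):
    """Hypothetical stand-in for a trained model: a linear-exponential neuron."""
    return np.exp(np.sum(image * weights))

def toy_gradient(image, weights):
    """Analytic gradient of toy_response with respect to the image."""
    return toy_response(image, weights) * weights

def synthesize_mei(weights, init, norm_budget=1.0, step=0.1, n_steps=500):
    """Gradient ascent on the image, rescaled to a fixed norm after each step."""
    image = init * norm_budget / np.linalg.norm(init)
    for _ in range(n_steps):
        grad = toy_gradient(image, weights)
        image = image + step * grad / (np.linalg.norm(grad) + 1e-12)
        image = image * norm_budget / np.linalg.norm(image)
    return image

def mean_pairwise_correlation(images, mask):
    """Average Pearson correlation over all image pairs, masked pixels only."""
    flat = np.stack([img[mask] for img in images])
    corr = np.corrcoef(flat)
    upper = np.triu_indices(len(images), k=1)
    return corr[upper].mean()

rng = np.random.default_rng(0)
weights = rng.normal(size=(16, 16))
mask = np.abs(weights) > 0.5  # hypothetical mask over strongly weighted pixels

# Five optimizations from different random starting images.
meis = [synthesize_mei(weights, rng.normal(size=(16, 16))) for _ in range(5)]
stability = mean_pairwise_correlation(meis, mask)
activations = [toy_response(m, weights) for m in meis]
```

For this toy neuron the optimum under a norm constraint is unique, so `stability` is close to 1 and the entries of `activations` nearly coincide. For a real nonlinear model, high agreement across starting images is an empirical finding and not a guarantee.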

Supplementary Fig. 6 MEIs activate neurons with high specificity across all mice.

The confusion matrix shows responses of each neuron to the MEIs of all 150 target neurons. Responses of each neuron were normalized and pooled across days, and each row was scaled so the maximum response across all images equals 1.
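
The row scaling mentioned in this caption can be written in a few lines. The sketch below is illustrative only. It assumes a matrix with neurons as rows and MEIs as columns, and strictly positive row maxima. The response values are made up, and `fraction_peaking_on_own_mei` is a hypothetical summary that does not appear in the paper.

```python
import numpy as np

def scale_rows_to_unit_max(responses):
    """Scale each row (one neuron) so that its largest response equals 1."""
    responses = np.asarray(responses, dtype=float)
    return responses / responses.max(axis=1, keepdims=True)

def fraction_peaking_on_own_mei(scaled):
    """Fraction of neurons whose strongest response is to their own MEI,
    i.e. whose row maximum lies on the diagonal."""
    return np.mean(np.argmax(scaled, axis=1) == np.arange(scaled.shape[0]))

# Made-up responses: rows are neurons, columns are the MEIs of each neuron.
responses = [[4.0, 1.0, 2.0],
             [1.0, 5.0, 1.0],
             [3.0, 2.0, 2.0]]

scaled = scale_rows_to_unit_max(responses)
specificity = fraction_peaking_on_own_mei(scaled)
```

In this toy matrix the first two neurons respond most strongly to their own MEI and the third does not, so two of the three row maxima lie on the diagonal.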

Supplementary Fig. 7 MEIs have higher spatial frequency content than RFs.

The average difference in the amplitude of the spatial frequency spectrum between MEIs and RFs for each of the five mice. Positive values (red) indicate spatial frequency content that is, on average, stronger in the MEIs.
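
One way to compute such a difference map is sketched below. It is an assumption-laden illustration and not the authors' code: the images are synthetic gratings, no contrast normalization or windowing is applied, and the function names are hypothetical.

```python
import numpy as np

def amplitude_spectrum(image):
    """Centered 2D Fourier amplitude spectrum of a single image."""
    return np.abs(np.fft.fftshift(np.fft.fft2(image)))

def mean_spectrum_difference(meis, rfs):
    """Average MEI amplitude spectrum minus average RF amplitude spectrum.
    Positive entries mark frequencies that are stronger in the MEIs."""
    mei_amp = np.mean([amplitude_spectrum(im) for im in meis], axis=0)
    rf_amp = np.mean([amplitude_spectrum(im) for im in rfs], axis=0)
    return mei_amp - rf_amp

# Synthetic stand-ins: a 6-cycle grating plays the MEI, a 2-cycle grating the RF.
size = 32
x = np.arange(size)
low = np.tile(np.sin(2 * np.pi * 2 * x / size), (size, 1))
high = np.tile(np.sin(2 * np.pi * 6 * x / size), (size, 1))

difference = mean_spectrum_difference(meis=[high], rfs=[low])
center = size // 2  # index of zero frequency after fftshift
```

After `fftshift`, horizontal frequency k sits at column `center + k`. In this example `difference[center, center + 6]` is positive and `difference[center, center + 2]` is negative.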

Supplementary Fig. 8 All RFs.

Linear receptive fields (RF) for all 150 target cells in each of the five mice as they were presented back to the mouse on day 2 and beyond. Each image represents an RF image of a distinct neuron computed from the LN models fitted on all neurons from the same scan.

Supplementary Fig. 9 MEIs as linear filters.

Scatter plot of predictive performance of the RF used as a linear filter against the MEI used as a linear filter for the 150 target cells of Mouse 5. Performance is computed as Spearman’s rank correlation over the responses to the 100 test set images. RF consistently outperforms MEI when used as a linear filter (two-sided Wilcoxon Signed-Rank test, W = 92, \(P < 10^{ - 9}\)).
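
The performance measure in this caption, the rank correlation between the output of a linear filter and the observed responses, can be sketched with NumPy alone. The filter, images and responses below are synthetic. The helper names are hypothetical. The Wilcoxon signed-rank test reported in the caption is not reproduced here.

```python
import numpy as np

def rankdata_average(values):
    """Ranks starting at 1; tied values share their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman(a, b):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return np.corrcoef(rankdata_average(a), rankdata_average(b))[0, 1]

def linear_filter_score(filter_image, test_images, observed):
    """Use an image (RF or MEI) as a linear filter on the test images and
    score its predictions against observed responses by rank correlation."""
    predictions = test_images.reshape(len(test_images), -1) @ filter_image.ravel()
    return spearman(predictions, observed)

# Synthetic example: 100 test images and a neuron whose response is a
# monotonic (exponential) function of the filter output.
rng = np.random.default_rng(0)
true_filter = rng.normal(size=(8, 8))
test_images = rng.normal(size=(100, 8, 8))
observed = np.exp(0.1 * (test_images.reshape(100, -1) @ true_filter.ravel()))

score_true = linear_filter_score(true_filter, test_images, observed)
score_random = linear_filter_score(rng.normal(size=(8, 8)), test_images, observed)
```

Because rank correlation is invariant to monotonic output nonlinearities, the true filter scores 1 here even though the response is not linear in the filter output. This property makes it a reasonable measure for comparing filters without fitting an output nonlinearity.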

Supplementary Fig. 10 Linearized CNN model approximates LN model.

Each pair of images represents the RF from the trained LN model (left) versus the RFs from a linearized CNN model (right) for all 150 target cells in Mouse 5. The high degree of similarity between the two versions of RFs suggests that the linear component of the CNN closely approximates the linear component of neuronal population responses extracted by fitting the LN model to the responses.

Supplementary Fig. 11 MEIs and control stimuli.

The remaining MEIs and other control stimuli for Mouse 5 that were not reported in Fig. 3b. MEIs, RFs, best Gabor filters (Gabor), best masked natural images (mNI), and full natural images (fNI, ‘unmasked’ version of the best masked natural image) are shown.

Cite this article

Walker, E.Y., Sinz, F.H., Cobos, E. et al. Inception loops discover what excites neurons most using deep predictive models. Nat Neurosci 22, 2060–2065 (2019). https://doi.org/10.1038/s41593-019-0517-x

DOI: https://doi.org/10.1038/s41593-019-0517-x

This article is cited by

- Dynamical flexible inference of nonlinear latent factors and structures in neural population activity. Nature Biomedical Engineering (2023)

- Detailed characterization of neural selectivity in free viewing primates. Nature Communications (2023)

- FIOLA: an accelerated pipeline for fluorescence imaging online analysis. Nature Methods (2023)

- Context-dependent selectivity to natural images in the retina. Nature Communications (2022)

- State-dependent pupil dilation rapidly shifts visual feature selectivity. Nature (2022)