Abstract

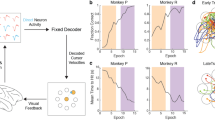

Behavior is driven by coordinated activity across a population of neurons. Learning requires the brain to change the neural population activity produced to achieve a given behavioral goal. How does population activity reorganize during learning? We studied intracortical population activity in the primary motor cortex of rhesus macaques during short-term learning in a brain–computer interface (BCI) task. In a BCI, the mapping between neural activity and behavior is exactly known, enabling us to rigorously define hypotheses about neural reorganization during learning. We found that changes in population activity followed a suboptimal neural strategy of reassociation: animals relied on a fixed repertoire of activity patterns and associated those patterns with different movements after learning. These results indicate that the activity patterns that a neural population can generate are even more constrained than previously thought and might explain why it is often difficult to quickly learn to a high level of proficiency.

Change history

05 July 2018

In the version of this article initially published, equation (10) contained cos Θ instead of sin Θ as the bottom element of the right-hand vector. The error has been corrected in the HTML and PDF versions of the article.

Acknowledgements

This work was supported by NIH R01 HD071686 (A.P.B., B.M.Y. and S.M.C.), NSF NCS BCS1533672 (S.M.C., B.M.Y. and A.P.B.), NSF CAREER award IOS1553252 (S.M.C.), NIH CRCNS R01 NS105318 (B.M.Y. and A.P.B.), Craig H. Neilsen Foundation 280028 (B.M.Y., S.M.C. and A.P.B.), Pennsylvania Department of Health Research Formula Grant SAP 4100077048 under the Commonwealth Universal Research Enhancement program (S.M.C. and B.M.Y.) and Simons Foundation 364994 (B.M.Y.).

Author information

Contributions

M.D.G., B.M.Y., S.M.C. and A.P.B. designed the analyses and discussed the results. M.D.G. performed all analyses and wrote the paper. P.T.S., K.M.Q., M.D.G., S.M.C., B.M.Y. and A.P.B. designed the animal experiments. P.T.S. and E.R.O. performed the animal experiments. S.I.R., E.C.T.-K. and E.R.O. performed the animal surgeries. All authors commented on the manuscript. B.M.Y. and S.M.C. contributed equally to this work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Supplementary information

Supplementary Text and Figures

Supplementary Figures 1–11 and Supplementary Math Note

About this article

Cite this article

Golub, M.D., Sadtler, P.T., Oby, E.R. et al. Learning by neural reassociation. Nat Neurosci 21, 607–616 (2018). https://doi.org/10.1038/s41593-018-0095-3

DOI: https://doi.org/10.1038/s41593-018-0095-3
