Abstract

Characterizing multifaceted individual differences in brain function using neuroimaging is central to biomarker discovery in neuroscience. We provide an integrative toolbox, Reliability eXplorer (ReX), to facilitate the examination of individual variation and reliability, and to identify the most effective direction for optimizing the measurement of individual differences in biomarker discovery. We also illustrate gradient flows, a two-dimensional field-map-based approach for identifying and representing that direction, which is implemented in ReX.

Data availability

Data used in application examples are available from public repositories. HCP data (ref. 10) are available on ConnectomeDB (https://www.humanconnectome.org/study/hcp-young-adult). Self-regulation data (ref. 11) are available on GitHub (https://github.com/IanEisenberg/Self_Regulation_Ontology). HNU data (ref. 19) are available from the Consortium for Reliability and Reproducibility (https://fcon_1000.projects.nitrc.org/indi/CoRR/html/index.html). Application data and code are available on GitHub (https://github.com/TingsterX/Reliability_Explorer/tree/main/application_examples). Source data are provided with this paper.

Code availability

ReX is implemented using multiple R packages (lme4, dplyr, ggplot2, scales, stats, reshape2, shinybusy, colorspace, RColorBrewer). The toolbox is available under a GNU version 3 license on GitHub (https://github.com/tingsterx/reliability_explorer), with a web-based R–Shiny application on Docker Hub (tingsterx:reliability_explorer) and shinyapps.io: https://tingsterx.shinyapps.io/ReliabilityExplorer. Docker images of the command line version (tingsterx:rex) used in this paper are available on Docker Hub.

References

1. Seghier, M. L. & Price, C. J. Interpreting and utilising intersubject variability in brain function. Trends Cogn. Sci. 22, 517–530 (2018).

2. Dubois, J. & Adolphs, R. Building a science of individual differences from fMRI. Trends Cogn. Sci. 20, 425–443 (2016).

3. Barch, D. M. et al. Function in the human connectome: task-fMRI and individual differences in behavior. NeuroImage 80, 169–189 (2013).

4. Finn, E. S. et al. Can brain state be manipulated to emphasize individual differences in functional connectivity? NeuroImage 160, 140–151 (2017).

5. Lebreton, M., Bavard, S., Daunizeau, J. & Palminteri, S. Assessing inter-individual differences with task-related functional neuroimaging. Nat. Hum. Behav. 3, 897–905 (2019).

6. Van Horn, J. D., Grafton, S. T. & Miller, M. B. Individual variability in brain activity: a nuisance or an opportunity? Brain Imaging Behav. 2, 327–334 (2008).

7. Palminteri, S. & Chevallier, C. Can we infer inter-individual differences in risk-taking from behavioral tasks? Front. Psychol. 9, 2307 (2018).

8. Genon, S., Eickhoff, S. B. & Kharabian, S. Linking interindividual variability in brain structure to behaviour. Nat. Rev. Neurosci. 23, 307–318 (2022).

9. Hsu, S., Poldrack, R., Ram, N. & Wagner, A. D. Observed correlations from cross-sectional individual differences research reflect both between-person and within-person correlations. Preprint at PsyArXiv https://doi.org/10.31234/osf.io/zq37h (2022).

10. Van Essen, D. C. et al. The WU-Minn Human Connectome Project: an overview. NeuroImage 80, 62–79 (2013).

11. Enkavi, A. Z. et al. Large-scale analysis of test–retest reliabilities of self-regulation measures. Proc. Natl Acad. Sci. USA 116, 5472–5477 (2019).

12. Chen, G. et al. Intraclass correlation: improved modeling approaches and applications for neuroimaging. Hum. Brain Mapp. 39, 1187–1206 (2018).

13. Koo, T. K. & Li, M. Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163 (2016).

14. Xu, M., Reiss, P. T. & Cribben, I. Generalized reliability based on distances. Biometrics 77, 258–270 (2021).

15. Shou, H. et al. Quantifying the reliability of image replication studies: the image intraclass correlation coefficient (I2C2). Cogn. Affect. Behav. Neurosci. 13, 714–724 (2013).

16. Bridgeford, E. W. et al. Eliminating accidental deviations to minimize generalization error and maximize replicability: applications in connectomics and genomics. PLoS Comput. Biol. 17, e1009279 (2021).

17. Finn, E. S. et al. Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nat. Neurosci. 18, 1664–1671 (2015).

18. Zuo, X.-N., Xu, T. & Milham, M. P. Harnessing reliability for neuroscience research. Nat. Hum. Behav. 3, 768–771 (2019).

19. Cho, J. W., Korchmaros, A., Vogelstein, J. T., Milham, M. P. & Xu, T. Impact of concatenating fMRI data on reliability for functional connectomics. NeuroImage 226, 117549 (2021).

20. Noble, S., Scheinost, D. & Constable, R. T. A guide to the measurement and interpretation of fMRI test–retest reliability. Curr. Opin. Behav. Sci. 40, 27–32 (2021).

21. Steyer, R., Smelser, N. J. & Jena, D. Classical (psychometric) test theory. In International Encyclopedia of the Social & Behavioral Sciences Vol. 3, 1955–1962 (2001).

22. Kline, T. J. B. Psychological Testing: a Practical Approach to Design and Evaluation (SAGE, 2005).

23. Noble, S., Scheinost, D. & Constable, R. T. A decade of test–retest reliability of functional connectivity: a systematic review and meta-analysis. NeuroImage 203, 116157 (2019).

Acknowledgements

We thank X. Li for organizing the preprocessed HNU data from different pipelines. This work is supported by gifts from J.P. Healey, P. Green and R. Cowen to the Child Mind Institute and National Institutes of Health funding (RF1MH128696 to T.X., R24MH114806 and 5R01MH124045 to M.P.M.). Additional grant support for J.T.V. comes from R01MH120482 (to T.D. Satterthwaite, M.P.M.), and he has funding from Microsoft Research.

Author information

Contributions

T.X. conceptualized and developed the software. T.X. and J.W.C. prepared the data. T.X. wrote the original draft with input from M.P.M., G.K. and J.T.V. All authors reviewed, edited and approved the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Methods thanks Ye Tian and the other, anonymous, reviewers for their contribution to the peer review of this work. Primary Handling Editor: Nina Vogt, in collaboration with the Nature Methods team. Peer reviewer reports are available.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Results for Application 4 to compare the impact of global signal regression in multiple fMRI preprocessing pipelines at the parcel level.

a) The within- and between-individual variance of GSR and No-GSR results from four pipelines. b) The change of within- and between-individual variance comparing GSR versus No-GSR results of the fMRIprep pipeline. c) The normalized change of the within- and between-individual variance comparing GSR versus No-GSR results of the fMRIprep pipeline.
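
One way to read these panels is through the link between the two variance components and reliability. The following is a minimal sketch, assuming a one-way random-effects intraclass correlation, ICC = between / (between + within); it is not ReX code. The function names (`icc`, `icc_gradient`, `variance_change`) and the numeric values are hypothetical and chosen only for illustration.

```python
def icc(within_var, between_var):
    """Assumed one-way ICC: share of total variance that lies between individuals."""
    total = within_var + between_var
    if total <= 0:
        raise ValueError("total variance must be positive")
    return between_var / total


def icc_gradient(within_var, between_var):
    """Partial derivatives of the assumed ICC with respect to (within, between) variance.

    The first component is never positive and the second is never negative, so
    ICC rises fastest when within-individual variance falls and
    between-individual variance grows.
    """
    total_sq = (within_var + between_var) ** 2
    if total_sq == 0:
        raise ValueError("total variance must be positive")
    return (-between_var / total_sq, within_var / total_sq)


def variance_change(baseline, alternative):
    """Change in (within, between) variance and in ICC between two conditions.

    Each argument is a (within_var, between_var) pair, for example a
    No-GSR result (baseline) and a GSR result (alternative).
    """
    d_within = alternative[0] - baseline[0]
    d_between = alternative[1] - baseline[1]
    d_icc = icc(*alternative) - icc(*baseline)
    return d_within, d_between, d_icc


# Made-up variances for one parcel under two preprocessing choices.
no_gsr = (0.5, 0.5)
gsr = (0.25, 0.75)
print(icc(*no_gsr), icc(*gsr))
print(icc_gradient(*no_gsr))
print(variance_change(no_gsr, gsr))
```

Plotting each parcel at its (within, between) coordinates and drawing the gradient vector at that point gives a two-dimensional picture in the spirit of the gradient flows described in the abstract; how ReX normalizes changes is not reproduced here.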

Supplementary information

Supplementary Information

Supplementary Note and Figs. 1–5

Supplementary Video 1

The theoretical relationship between reliability and validity. Validity is determined by the proportion of variation for the trait of interest to the total variation of the observed score. If there is a signal but it is not related to the trait (that is, contaminator relative to the trait), validity is lower than reliability (https://github.com/TingsterX/Reliability_Explorer/blob/main/reliability_and_validity/reliability_and_validity.md).
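
The relationship stated in this caption can be written out under a simple additive model. The decomposition and symbols below are assumptions made for illustration rather than notation taken from the paper: the observed score $X$ is the sum of a trait component $T$, a stable contaminator $C$ unrelated to the trait, and measurement error $E$, all mutually uncorrelated.

```latex
\[
\begin{aligned}
X &= T + C + E, \qquad
\sigma_X^2 = \sigma_T^2 + \sigma_C^2 + \sigma_E^2, \\
\text{reliability} &= \frac{\sigma_T^2 + \sigma_C^2}{\sigma_X^2}, \qquad
\text{validity} = \frac{\sigma_T^2}{\sigma_X^2} \le \text{reliability}.
\end{aligned}
\]
```

Under these assumptions the two quantities coincide only when $\sigma_C^2 = 0$; any repeatable signal that is unrelated to the trait raises reliability without raising validity.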

Source data

Source Data Fig. 1

Reliability of the demo data calculated in ReX.

Source Data Fig. 2

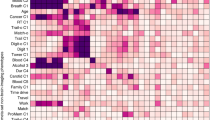

Reliability of the National Institutes of Health Toolbox and self-regulation measures.

Source Data Extended Data Fig. 1

Reliability (dbICC) of functional connectivity at the parcel level for multiple functional magnetic resonance imaging preprocessing pipelines.
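
For readers unfamiliar with dbICC (ref. 14), a minimal sketch follows. It assumes the distance-based ICC takes the form 1 − MSD_within / MSD_between, where MSD is the mean squared distance over pairs of measurements from the same individual or from different individuals. The function name `dbicc`, the data layout, and the toy values are hypothetical; this is not the ReX implementation.

```python
import math
from itertools import combinations


def dbicc(measurements, distance=math.dist):
    """Distance-based ICC under the assumed form 1 - MSD_within / MSD_between.

    measurements: dict mapping an individual's ID to a list of repeated
    measurements (each a sequence of floats, e.g. a connectivity vector).
    distance: function returning the distance between two measurements.
    """
    # Flatten to (individual, measurement) pairs so every pair is visited once.
    items = [(sid, m) for sid, reps in measurements.items() for m in reps]
    within, between = [], []
    for (sid_a, a), (sid_b, b) in combinations(items, 2):
        squared = distance(a, b) ** 2
        (within if sid_a == sid_b else between).append(squared)
    if not within or not between:
        raise ValueError("need repeated measurements and at least two individuals")
    msd_within = sum(within) / len(within)
    msd_between = sum(between) / len(between)
    if msd_between == 0:
        raise ValueError("between-individual distances are all zero")
    return 1.0 - msd_within / msd_between


# Toy data: two individuals, two sessions each, two features per session.
toy = {
    "sub-01": [(0.0, 0.0), (0.0, 1.0)],
    "sub-02": [(3.0, 0.0), (3.0, 1.0)],
}
print(dbicc(toy))
```

Because only pairwise distances are needed, the same function accepts any distance that suits the data type, which is the motivation for using a distance-based measure with multivariate features such as parcel-level connectivity profiles.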

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, T., Kiar, G., Cho, J.W. et al. ReX: an integrative tool for quantifying and optimizing measurement reliability for the study of individual differences. Nat Methods 20, 1025–1028 (2023). https://doi.org/10.1038/s41592-023-01901-3

DOI: https://doi.org/10.1038/s41592-023-01901-3