Abstract

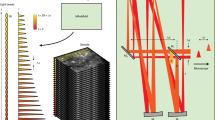

Various implementations of mesoscopes provide optical access for calcium imaging across multi-millimeter fields of view in the mammalian brain. However, capturing the activity of the neuronal population within such fields of view near-simultaneously and volumetrically has remained challenging, because approaches for imaging scattering brain tissue are typically based on sequential acquisition. Here we present a modular, mesoscale light-field (MesoLF) imaging hardware and software solution that allows recording from thousands of neurons within volumes of ⌀ 4 × 0.2 mm, located at up to 350 µm depth in the mouse cortex, at 18 volumes per second and an effective voxel rate of ~40 megavoxels per second. Using our optical design and computational approach, we show recording of ~10,000 neurons across multiple cortical areas in mice using workstation-grade computing resources.

Data availability

A comprehensive demo dataset, complete expected outputs from the demo, as well as all required auxiliary files (pre-trained classifier models, pre-computed point-spread function) are available for download from Zenodo (ref. 22). A demo script is contained in Supplementary Software 1. The demo script automatically downloads the demo data. Due to the very large and diversely structured data, not all data are currently available in annotated format, but they can be obtained from the corresponding author upon reasonable request.

Code availability

The custom code that contains the MesoLF pipeline is available in Supplementary Software 1 under an open-source license permitting not-for-profit research use (see file LICENSE.txt). Future updates to the code will be published at http://github.com/vazirilab, together with optical and optomechanical designs and custom C# data acquisition software.

References

Broussard, G. J. et al. In vivo measurement of afferent activity with axon-specific calcium imaging. Nat. Neurosci. 21, 1272–1280 (2018).

Dana, H. et al. Sensitive red protein calcium indicators for imaging neural activity. eLife 5, e12727 (2016).

Dana, H. et al. High-performance calcium sensors for imaging activity in neuronal populations and microcompartments. Nat. Methods 16, 649 (2019).

Demas, J. et al. High-speed, cortex-wide volumetric recording of neuroactivity at cellular resolution using light beads microscopy. Nat. Methods 18, 1103–1111 (2021).

Levoy, M., Ng, R., Adams, A., Footer, M. & Horowitz, M. Light field microscopy. ACM Trans. Graph. 25, 924 (2006).

Broxton, M. et al. Wave optics theory and 3-D deconvolution for the light field microscope. Opt. Express 21, 25418–25439 (2013).

Prevedel, R. et al. Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat. Methods 11, 727–730 (2014).

Pégard, N. C. et al. Compressive light-field microscopy for 3D neural activity recording. Optica 3, 517 (2016).

Cong, L. et al. Rapid whole brain imaging of neural activity in freely behaving larval zebrafish (Danio rerio). eLife 6, e28158 (2017).

Scrofani, G. et al. FIMic: design for ultimate 3D-integral microscopy of in-vivo biological samples. Biomed. Opt. Express 9, 335 (2018).

Lu, Z. et al. Phase-space deconvolution for light field microscopy. Opt. Express 27, 18131–18145 (2019).

Chen, Y. et al. Design of a high-resolution light field miniscope for volumetric imaging in scattering tissue. Biomed. Opt. Express 11, 1662–1678 (2020).

Adams, J. K. et al. Single-frame 3D fluorescence microscopy with ultraminiature lensless FlatScope. Sci. Adv. 3, e1701548 (2017).

Fan, J. et al. Video-rate imaging of biological dynamics at centimetre scale and micrometre resolution. Nat. Photonics 13, 809–816 (2019).

Xue, Y., Davison, I. G., Boas, D. A. & Tian, L. Single-shot 3D wide-field fluorescence imaging with a computational miniature mesoscope. Sci. Adv. 6, eabb7508 (2020).

Xiao, S. et al. High-contrast multifocus microscopy with a single camera and z-splitter prism. Optica 7, 1477–1486 (2020).

Kauvar, I. V. et al. Cortical observation by synchronous multifocal optical sampling reveals widespread population encoding of actions. Neuron 107, 351–367 (2020).

Nöbauer, T. et al. Video rate volumetric Ca2+ imaging across cortex using seeded iterative demixing (SID) microscopy. Nat. Methods 14, 811–818 (2017).

Skocek, O. et al. High-speed volumetric imaging of neuronal activity in freely moving rodents. Nat. Methods 15, 429–432 (2018).

Sofroniew, N. J., Flickinger, D., King, J. & Svoboda, K. A large field of view two-photon mesoscope with subcellular resolution for in vivo imaging. eLife 5, e14472 (2016).

Bentley, J. & Olson, C. Field Guide to Lens Design (SPIE, 2012).

Nöbauer, T., Zhang, Y., Kim, H. & Vaziri, A. MesoLF demo data and auxiliary files. https://doi.org/10.5281/zenodo.7306113 (2022).

Shemesh, O. A. et al. Precision calcium imaging of dense neural populations via a cell-body-targeted calcium indicator. Neuron 107, 470–486 (2020).

Mukamel, E. A., Nimmerjahn, A. & Schnitzer, M. J. Automated analysis of cellular signals from large-scale calcium imaging data. Neuron 63, 747–760 (2009).

Zhou, P. et al. Efficient and accurate extraction of in vivo calcium signals from microendoscopic video data. eLife 7, e28728 (2018).

Song, A., Gauthier, J. L., Pillow, J. W., Tank, D. W. & Charles, A. S. Neural anatomy and optical microscopy (NAOMi) simulation for evaluating calcium imaging methods. J. Neurosci. Methods 358, 109173 (2021).

Tibshirani, R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B Methodol. 58, 267–288 (1996).

Azzopardi, G., Strisciuglio, N., Vento, M. & Petkov, N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med. Image Anal. 19, 46–57 (2015).

Giovannucci, A. et al. CaImAn an open source tool for scalable calcium imaging data analysis. eLife 8, e38173 (2019).

Pachitariu, M. et al. Suite2p: beyond 10,000 neurons with standard two-photon microscopy. Preprint at bioRxiv https://doi.org/10.1101/061507 (2017).

Soltanian-Zadeh, S., Sahingur, K., Blau, S., Gong, Y. & Farsiu, S. Fast and robust active neuron segmentation in two-photon calcium imaging using spatiotemporal deep learning. Proc. Natl Acad. Sci. USA https://doi.org/10.1073/pnas.1812995116 (2019).

Bando, Y., Sakamoto, M., Kim, S., Ayzenshtat, I. & Yuste, R. Comparative evaluation of genetically encoded voltage indicators. Cell Rep. 26, 802–813 (2019).

Knöpfel, T. & Song, C. Optical voltage imaging in neurons: moving from technology development to practical tool. Nat. Rev. Neurosci. 20, 719–727 (2019).

Stringer, C. et al. Spontaneous behaviors drive multidimensional, brainwide activity. Science 364, 255 (2019).

Ng, R. Digital Light Field Photography. PhD thesis, Stanford Univ. (2006).

Acknowledgements

We thank P. Strogies and J. Petrillo at the Rockefeller University’s Precision Instrumentation Technology (PIT) for fabrication of mechanical components, D. Hillebrand (Rockefeller University) for sharing reagents, J. Gottweis (Google) for an initial GPU-implementation approach of a light-field-related operation and F. Martínez-Traub (Rockefeller University) for surgeries. Research reported in this publication was supported by the National Institute of Neurological Disorders and Stroke of the National Institutes of Health under award nos. 5U01NS103488, 1RF1NS110501 and 1RF1NS113251 (all to A.V.), the National Science Foundation under award no. NSF-DBI-1707408 (A.V.) and the Kavli Foundation through the Kavli Neural Systems Institute (A.V.), in particular through a Kavli Neural Systems Institute postdoctoral fellowship (T.N.).

Author information

Contributions

T.N. designed and implemented the MesoLF optical path under the guidance of A.V., performed experiments, conceptualized and contributed to implementation of the MesoLF computational pipeline, analyzed data and wrote the paper. Y.Z. contributed to conceptualization and implementation of the MesoLF computational pipeline, performed simulations, analyzed data and wrote the paper. H.K. performed cranial window surgeries and viral injections. A.V. conceived and led the project, conceptualized and guided the imaging, signal extraction and data analysis approach, designed in vivo mouse experiments and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Methods thanks Hillel Adesnik and the other, anonymous, reviewers for their contribution to the peer review of this work. Primary Handling Editor: Nina Vogt, in collaboration with the Nature Methods team.

Extended data

Extended Data Fig. 1 Motion correction in MesoLF.

(a) Illustrated motion patterns in raw LFM data. The lenslet aperture shadows (black areas) will not move, only patterns within those apertures (red squares), which prohibits motion correction using established algorithms without prior rearrangement of the data. (b) Left panel: LFM image formed behind one microlens, sampled by 15 × 15 pixels. Number pairs in brackets indicate pixel coordinates (i,j). Right panel: Correlation matrix for motion vectors extracted from all 15 × 15 sub-aperture images. Sub-aperture image (i,j) consists of all pixels with coordinates (i,j) relative to the nearest microlens. Consistently high values of correlation across all pairs (i,j) indicate that all sub-aperture images experience similar motion vectors, justifying the use of the same motion correcting transformation across all sub-aperture images. (c) Illustration of the motion correction pipeline in MesoLF. Scale bar: 100 µm. (d) Correlation coefficients between successive frames of the central sub-aperture image in an LFM recording of mouse cortical calcium activity, pre- and post-motion correction (blue and red traces, respectively), for a patch size of 660 × 690 µm. (e) Magnitude of sample motion along lateral axes (x and y, blue and red solid traces, respectively) versus time, for same recording as (d), as extracted using the MesoLF motion correction algorithm. Solid line indicates mean; shaded area indicates s.d. of motion in different patches across the field of view. Data identical to Fig. 4b, reproduced here for convenience. (f) Illustration of motion vectors (arrow size indicates magnitude of motion) for different patches across the 4-mm MesoLF FOV. Scale bar: 500 µm. (g) Correlation coefficients between successive frames of the central sub-aperture movie from the whole MesoLF FOV before motion correction (blue), after global correction (red) and after patch-based correction (yellow).
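The rearrangement described in panels (a) and (b) can be sketched in a few lines of NumPy. This is a minimal illustration and not the MesoLF implementation: it assumes an idealized microlens grid that is perfectly aligned with the pixel grid, 15 × 15 pixels per microlens, and integer shifts measured in units of one microlens. The function names are hypothetical.

```python
import numpy as np

def to_subaperture_stack(raw, n_pix=15):
    """Rearrange a raw light-field image into sub-aperture images.

    raw: 2D array whose height and width are multiples of n_pix
    (assumption: the microlens grid is aligned with the pixel grid).
    Returns an array of shape (n_pix, n_pix, n_lens_y, n_lens_x);
    element [i, j] is the sub-aperture image built from pixel (i, j)
    behind every microlens.
    """
    h, w = raw.shape
    if h % n_pix or w % n_pix:
        raise ValueError("image size must be a multiple of n_pix")
    tiles = raw.reshape(h // n_pix, n_pix, w // n_pix, n_pix)
    return tiles.transpose(1, 3, 0, 2)

def from_subaperture_stack(stack):
    """Inverse of to_subaperture_stack: rebuild the raw lenslet layout."""
    n_i, n_j, n_ly, n_lx = stack.shape
    return stack.transpose(2, 0, 3, 1).reshape(n_ly * n_i, n_lx * n_j)

def apply_shared_shift(raw, dy, dx, n_pix=15):
    """Apply one integer shift (in microlens units) to all sub-aperture images.

    np.roll wraps around at the borders, which is acceptable for a sketch
    but would need proper padding or cropping in practice.
    """
    stack = to_subaperture_stack(raw, n_pix)
    shifted = np.roll(stack, shift=(dy, dx), axis=(2, 3))
    return from_subaperture_stack(shifted)
```

Because the same shift is applied to every sub-aperture image, the lenslet aperture pattern itself stays fixed in the rebuilt raw frame, which matches the behavior illustrated in panel (a).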

Extended Data Fig. 2 Temporal summary image generation.

(a) Top panel: Standard deviation (SD) image along time calculated directly from uncorrected raw data. Bottom left panel: Zoom into area indicated by green box. Bottom right panel: Intensity histogram of SD image shown in top panel. Orange arrow indicates the mean value of the background in the SD image. (b) Illustration of the effect of the three preprocessing steps used in MesoLF on the standard deviation image: In the first step, a large-window smoothed version of the data is subtracted from the raw data to flatten the baselines. In the second step, a small-window smoothing operation is additionally applied to reduce high-frequency noise in the SD image. In the third step, the smoothed data are raised to the third power to enhance contrast. Panels in bottom row are analogous to bottom panels in (a). (c) Illustration of the effects of the three preprocessing steps in the MesoLF pipeline on the time series of a single pixel in a calcium activity recording. (d) Examples of simulated photon trajectories obtained from Monte-Carlo simulation of two neurons separated by 200 µm along the z axis. The focal plane for data shown in (e)–(f) is chosen to be the x–y plane containing neuron 2. (e) Intensity in focal plane obtained from Monte-Carlo simulation as in (d). Left panel: raw intensity. Right panel: Third power of left panel. (f) Intensity profile along the dashed yellow line in the two panels shown in (e).
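The three preprocessing steps named in panel (b) can be sketched as follows. This is a hedged illustration, not the MesoLF code: the smoothing is assumed to be a plain moving average along time, and the window lengths (`baseline_window`, `noise_window`) are placeholder values chosen for the example, not values taken from the paper.

```python
import numpy as np

def moving_average(x, window):
    """Centered moving average along axis 0 with edge padding."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    x = np.asarray(x, dtype=float)
    pad = window // 2
    padded = np.pad(x, [(pad, pad)] + [(0, 0)] * (x.ndim - 1), mode="edge")
    csum = np.cumsum(padded, axis=0)
    csum = np.concatenate([np.zeros((1,) + x.shape[1:]), csum], axis=0)
    return (csum[window:] - csum[:-window]) / window

def summary_image(movie, baseline_window=201, noise_window=5):
    """Temporal summary image of a movie with shape (T, H, W).

    Step 1: subtract a large-window smoothed copy to flatten baselines.
    Step 2: apply small-window smoothing to reduce high-frequency noise.
    Step 3: raise the result to the third power to enhance contrast.
    The SD along time of the processed movie is returned.
    """
    movie = np.asarray(movie, dtype=float)
    flattened = movie - moving_average(movie, baseline_window)
    smoothed = moving_average(flattened, noise_window)
    enhanced = smoothed ** 3
    return enhanced.std(axis=0)
```

Cubing suppresses small fluctuations much more strongly than large transients, which is why the background level in the SD image drops relative to active neurons.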

Extended Data Fig. 3 Statistical comparison of pixel-space Richardson-Lucy and MesoLF phase-space reconstructions.

(a) Comparison of root-mean-square error (RMSE) between ground truth and reconstructions obtained using pixel-space Richardson-Lucy (RL) reconstruction and MesoLF phase-space reconstructions, for the low- and high-scattering scenarios. n = 8 data points represent runs with different simulated raw data. (b) Comparison of the structural similarity index (SSIM) values between ground truth and reconstructions, same underlying data as in (a), n = 8 different simulation runs. (c) Violin plots of the 3D localization errors between ground truth neurons and neurons extracted from phase space (magenta) and RL (green) reconstructions, in the low- and high-scattering scenarios (depths 100 µm and 300 µm, respectively). White circle: median. Thick gray vertical line: Interquartile range. Thin vertical lines: Upper and lower proximal values. Solid disks: data points. Transparent violin-shaped area: Kernel density estimate of data distribution. n = 580 for phase space, n = 427 for RL, z = 100 µm; n = 541 for phase space, n = 258 for RL, z = 300 µm. (d) As in (c), but lateral localization error only. Violin plot elements as in (c). n = 580 for phase space, n = 427 for RL, z = 100 µm; n = 541 for phase space, n = 258 for RL, z = 300 µm. (e) As in (c), but axial localization errors only. Violin plot elements as in (c). n = 580 for phase space, n = 427 for RL, z = 100 µm; n = 541 for phase space, n = 258 for RL, z = 300 µm. (f) Example plane from reconstructions obtained using RL (left panel) and MesoLF phase space reconstruction (right panel). Magenta circles in the left panel show the segmentation result obtained using the segmentation approach in the SID package (Nöbauer et al., Nat. Methods 14, 2017). Magenta circles in the right panel are segments obtained using MesoLF segmentation. Blue arrows mark the true neurons that appear only in the phase space reconstruction. Yellow arrows mark the true neurons that only appear in the RL reconstruction. Green arrows mark false neuron segments found in reconstructions using both methods. (g) Comparison of segmentation precision and sensitivity values obtained using phase space (magenta) and RL (green) reconstructions, for the low- and high-scattering scenarios. Central measure: Mean. Error bars: s.d. Black circles: n = 5 different simulation runs.
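For readers who want to reproduce metrics of the kind shown in panels (a) and (c)–(e), the following sketch computes an RMSE and splits localization error into lateral and axial parts. It assumes that reconstructions and ground truth are already on the same grid and intensity scale, and that extracted and true neuron centroids have already been paired; any normalization or pairing procedure used in the paper is not reproduced here. Function names are hypothetical.

```python
import numpy as np

def rmse(reconstruction, ground_truth):
    """Root-mean-square error between two arrays of identical shape."""
    diff = np.asarray(reconstruction, float) - np.asarray(ground_truth, float)
    return float(np.sqrt(np.mean(diff ** 2)))

def localization_errors(found_xyz, true_xyz):
    """Per-neuron 3D, lateral and axial errors for paired centroids.

    Both inputs have shape (N, 3) with columns x, y, z, and row k of
    found_xyz is assumed to be paired with row k of true_xyz.
    """
    d = np.asarray(found_xyz, float) - np.asarray(true_xyz, float)
    lateral = np.hypot(d[:, 0], d[:, 1])   # error within the x-y plane
    axial = np.abs(d[:, 2])                # error along z
    total = np.sqrt(lateral ** 2 + axial ** 2)
    return total, lateral, axial
```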

Extended Data Fig. 4 Neuron segmentation performance.

(a) Comparison of segmentation performance of MesoLF versus CNMF-E (template matching and shape-based selection steps) in a 2D slice from a MesoLF recording in mouse cortex, depth 100 µm. Green circles: segments that strongly match with the ground truth. Blue circles: segments that only appear in the ground truth. Magenta circles: segments that are not consistent with ground truth. (b) Comparison of precision, sensitivity, and F1-scores for neuron detection performance in CNMF-E (template matching and shape-based selection steps) and MesoLF segmentation. Same data as in main Fig. 3h, reproduced here for convenience. Height of bars: Mean. Error bars: s.d. Black circles: n = 5 simulation runs. (c) Top panel: Illustration of 3D volume containing neurons and exhibiting scattering, as used for volumetric segmentation comparisons in remainder of figure. Schematic illustration of segmentation pipelines in CNMF-E (middle box) and MesoLF (bottom box). (d) 3D rendering of segmentation results from CNMF-E (left) and MesoLF (right). Magenta: Ground-truth neurons, green: segments. (e) Zooms into areas indicated by dashed rectangles in (d). (f) Comparison of the spatial similarity index of neurons paired between ground truth and output of CNMF-E (template matching and shape-based selection steps) versus MesoLF segmentation. p = 2.0e-9, paired one-sided Wilcoxon signed rank test. n = 63 neuron pairs. ** p < 0.01. (g) Histogram of spatial similarity indices of segmented neurons compared to ground truth by both methods (same data as in (f)).
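Precision, sensitivity and F1-score as reported in panel (b) follow from the number of detected segments that match a ground-truth neuron. The sketch below uses a greedy nearest-centroid matching with a distance threshold, which is an assumption made for illustration; the matching criterion actually used for the figure is not specified in this caption.

```python
import numpy as np

def count_matches(found, truth, max_dist):
    """Greedy one-to-one matching of centroids; returns the match count."""
    found = np.asarray(found, float)
    truth = np.asarray(truth, float)
    if len(found) == 0 or len(truth) == 0:
        return 0
    dist = np.linalg.norm(found[:, None, :] - truth[None, :, :], axis=2)
    matches = 0
    while True:
        i, j = np.unravel_index(np.argmin(dist), dist.shape)
        if dist[i, j] > max_dist:      # also true once every entry is inf
            break
        matches += 1
        dist[i, :] = np.inf            # each segment is used at most once
        dist[:, j] = np.inf            # each true neuron is used at most once
    return matches

def detection_scores(n_found, n_true, n_matched):
    """Return (precision, sensitivity, F1) from detection counts."""
    precision = n_matched / n_found if n_found else 0.0
    sensitivity = n_matched / n_true if n_true else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return precision, sensitivity, f1
```

In this convention, precision penalizes false segments (magenta circles in panel (a)) and sensitivity penalizes missed neurons (blue circles).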

Extended Data Fig. 5 Enhancing detection of weakly active neurons by combining morphological segmentation with NMF.

(a) Left column: Standard deviation (SD) images of simulated LFM movie containing temporally active neurons (top) and a single plane from reconstructed SD image (bottom). The brightness values (that is, the magnitude of the SD of their activity) of neurons in the SD image are chosen such that the dimmest (least active) neuron is 8 times dimmer than the brightest (most active) one (indicated by yellow double arrow). Right column: Same as left column with brightness scaled 5× for clarity. (b) Result of morphological segmentation applied to simulated data shown in (a). Individual segments highlighted in different colors. Dimmest neuron (yellow arrow) is not segmented successfully and is missed. (c) Result of NMF-based segmentation applied to simulated data shown in (a). Individual segments highlighted in different colors. Dimmest neuron (yellow arrow) is segmented successfully and detected. (d) Runtime comparison of NMF- and morphology-based methods. (e) Comparison of ‘ground truth’ CaImAn-based segmentation of two-photon microscopy data (first column) with segmentation results obtained from morphological segmentation (second column), NMF-based segmentation (third column), and the combination of both (fourth column), for two different depths (170 µm, top row; 300 µm, bottom row). Very dim neurons in the temporal summary image are highlighted with pink arrows. Mis-segmentation of close-by neurons is indicated with yellow arrows.

Extended Data Fig. 6 Core-shell demixing.

(a) Illustration of core-shell model used for demixing neuronal signals from local background. (b) Left part: Illustration of the MesoLF main demixing stage, during which the spatial footprints and temporal traces of both core (neuron) and shell (background, neuropil) components are updated alternately and iteratively. Right part: After the main demixing step, a second optimization is run to infer the amplitude mixing coefficient, which subsequently allows shell contamination to be subtracted from the core region.
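The idea behind the second step in panel (b) can be illustrated with a toy model in which the measured core trace equals the neuronal signal plus a fraction `alpha` of the shell trace. The sketch below estimates `alpha` by ordinary least squares and clips it to [0, 1]; this estimator is an assumption chosen for simplicity and is not claimed to be the optimization used in MesoLF. It also assumes that neuronal activity is uncorrelated with the shell trace, which real data may violate.

```python
import numpy as np

def estimate_mixing_coefficient(core, shell):
    """Least-squares slope of the core trace on the shell trace, in [0, 1]."""
    core = np.asarray(core, float)
    shell = np.asarray(shell, float)
    s = shell - shell.mean()
    var = np.dot(s, s)
    if var == 0:
        return 0.0                      # flat shell: nothing to subtract
    alpha = np.dot(core - core.mean(), s) / var
    return float(np.clip(alpha, 0.0, 1.0))

def subtract_shell(core, shell):
    """Return (decontaminated core trace, estimated mixing coefficient)."""
    alpha = estimate_mixing_coefficient(core, shell)
    cleaned = np.asarray(core, float) - alpha * np.asarray(shell, float)
    return cleaned, alpha
```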

Supplementary information

Supplementary Information

Supplementary Figs. 1–12, Supplementary Notes 1–12, Supplementary Table 1 and Supplementary References.

Supplementary Software

MesoLF computational pipeline. Custom code that contains the complete MesoLF pipeline, including pre-trained convolutional neural network parameters for activity trace classification, inline documentation and demo scripts. See github.com/vazirilab for future updates.

All panels: MesoLF recording from mouse cortex, depth range 200–400 µm, labeling construct: AAV9-TRE3-2xsomaGCaMP7f, recording frame rate: 18 Hz. Playback in real time. Recording duration 72 s. FOV ~650 × 650 µm. Top row, first panel: raw data. Bottom row, first panel: spatial background, extracted using rank-1 matrix factorization of raw data. Top row, second panel: background-subtracted raw data, with brightness scaled up 40× compared to previous panel for clarity. Bottom row, second panel: denoising result of data in previous panel, obtained using MesoLF iterative demixing algorithm. Top row, third panel: residual after subtracting denoised data from background-subtracted raw data. Bottom row, third panel: demixed, denoised spatial components, with each component colored in different random color. Top row, fourth panel: conventional frame-by-frame LFM reconstruction of the raw data, rendered as semi-transparent volume. Bottom row, fourth panel: perspective rendering of neuron positions and Ca2+ activity extracted using MesoLF pipeline. Temporal activity is the same as in panel ‘Demixed’. Spatial positions obtained by reconstructing the spatial components shown in panel ‘Demixed’ and finding centroid positions.
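A rank-1 factorization of the kind mentioned for the spatial background panel can be obtained in several ways. The sketch below uses a truncated singular value decomposition, which is one standard choice and an assumption here; the caption does not state which factorization the MesoLF pipeline uses. The function name and the (T, H, W) array layout are hypothetical.

```python
import numpy as np

def rank1_background(movie):
    """Best rank-1 approximation of a movie with shape (T, H, W).

    Returns (spatial, temporal, background), where
    background[t] = temporal[t] * spatial.
    """
    t, h, w = movie.shape
    m = np.asarray(movie, float).reshape(t, h * w)   # rows: frames
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    temporal = u[:, 0] * s[0]
    spatial = vt[0]
    if spatial.sum() < 0:               # resolve the SVD sign ambiguity
        spatial, temporal = -spatial, -temporal
    background = np.outer(temporal, spatial).reshape(t, h, w)
    return spatial.reshape(h, w), temporal, background
```

Subtracting `background` from the movie leaves the residual in which activity stands out. A full SVD is costly for long recordings, so an iterative or randomized method may be preferable for data of realistic size.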

Animated perspective rendering of neuron positions and Ca2+ activity recorded using the MesoLF optical system in mouse cortex and extracted using the MesoLF computational pipeline. FOV 4,000 × 200 µm. Depth range 0–200 µm. Recording frame rate 18 Hz. Real-time recording duration 405 s. Playback speed-up 25×. Labeling construct: AAV9-TRE3-2xsomaGCaMP7f. Imaged volume contains all or the majority of the posterior parietal, primary somatosensory, primary visual, anteromedial visual and retrosplenial cortical areas. Same data as shown in Fig. 2. Neurons are rendered as spheres placed at their MesoLF-extracted locations. Color indicates relative change in neuron brightness, normalized to noise level.

About this article

Cite this article

Nöbauer, T., Zhang, Y., Kim, H. et al. Mesoscale volumetric light-field (MesoLF) imaging of neuroactivity across cortical areas at 18 Hz. Nat Methods 20, 600–609 (2023). https://doi.org/10.1038/s41592-023-01789-z

DOI: https://doi.org/10.1038/s41592-023-01789-z
