Abstract

Progress in many scientific disciplines is hindered by the presence of independent noise. Technologies for measuring neural activity (calcium imaging, extracellular electrophysiology and functional magnetic resonance imaging (fMRI)) operate in domains in which independent noise (shot noise and/or thermal noise) can overwhelm physiological signals. Here, we introduce DeepInterpolation, a general-purpose denoising algorithm that trains a spatiotemporal nonlinear interpolation model using only raw noisy samples. Applying DeepInterpolation to two-photon calcium imaging data yielded up to six times more neuronal segments than those computed from raw data with a 15-fold increase in the single-pixel signal-to-noise ratio (SNR), uncovering single-trial network dynamics that were previously obscured by noise. Extracellular electrophysiology recordings processed with DeepInterpolation yielded 25% more high-quality spiking units than those computed from raw data, while DeepInterpolation produced a 1.6-fold increase in the SNR of individual voxels in fMRI datasets. Denoising was attained without sacrificing spatial or temporal resolution and without access to ground truth training data. We anticipate that DeepInterpolation will provide similar benefits in other domains in which independent noise contaminates spatiotemporally structured datasets.

Data availability

Two-photon imaging and Neuropixels raw data can be downloaded from the following S3 bucket: arn:aws:s3:::allen-brain-observatory. Two-photon imaging files can be accessed according to experiment ID using the following path: visual-coding-2p/ophys_movies/ophys_experiment_<Experiment ID>.h5. We used a random subset of 1,144 experiments for training the denoising network for Ai93, and 397 experiments were used for training the denoising network for Ai148. The list of experiment IDs used is available in JSON files (in deepinterpolation/examples/json_data/) on the DeepInterpolation GitHub repository. The majority of these experiment IDs are available in the S3 bucket; some experimental data had not been released to S3 at the time of publication. Neuropixels raw data can be accessed by experiment ID and probe ID using the following path: visual-coding-neuropixels/raw-data/<Experiment ID>/<Probe ID>/spike_band.dat. Median subtraction was applied to the DAT files after processing, but the DAT files do not include an offline high-pass filter. Before applying DeepInterpolation, we extracted 3,600 s of data from each of the recordings listed in Supplementary Table 1. To train the fMRI denoiser, we used datasets that can be downloaded from an S3 bucket: arn:aws:s3:::openneuro.org/ds001246. We trained our denoiser on all ‘ses-perceptionTraining’ sessions across five individuals (three perception training sessions, ten runs each).
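As an illustration only, the object keys described above could be assembled as follows. This is a minimal sketch and not part of the authors' tooling: the helper names are hypothetical, the IDs in the usage line are made up, and the two-photon file name assumes that the placeholder in the path stands for the experiment ID, as the text indicates.

```python
# Bucket name taken from the ARN quoted in the text.
BUCKET = "allen-brain-observatory"


def two_photon_key(experiment_id: int) -> str:
    """Key of a raw two-photon movie (assumes the file name embeds the experiment ID)."""
    return f"visual-coding-2p/ophys_movies/ophys_experiment_{experiment_id}.h5"


def neuropixels_key(experiment_id: int, probe_id: int) -> str:
    """Key of the raw spike-band file for one probe of one experiment."""
    return (
        "visual-coding-neuropixels/raw-data/"
        f"{experiment_id}/{probe_id}/spike_band.dat"
    )


def s3_uri(key: str, bucket: str = BUCKET) -> str:
    """Full s3:// URI for a key in the bucket."""
    return f"s3://{bucket}/{key}"


# Hypothetical IDs, for illustration only.
print(s3_uri(two_photon_key(123456)))
print(s3_uri(neuropixels_key(111, 222)))
```

A URI built this way could then be passed to a standard S3 client, for example `aws s3 cp <uri> .` with the AWS command-line interface, adding `--no-sign-request` if the bucket permits anonymous access.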

Code availability

Code for DeepInterpolation and all other steps in our algorithm is available online through a GitHub repository (ref. 40). Example training and inference tutorial code is available in the repository. Code and data to regenerate all figures presented in this study are available online through a separate GitHub repository (ref. 41).

References

1. Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313, 504–507 (2006).

2. Weigert, M. et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097 (2018).

3. Belthangady, C. & Royer, L. A. Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nat. Methods 16, 1215–1225 (2019).

4. Lehtinen, J. et al. Noise2Noise: Learning image restoration without clean data. In International Conference on Machine Learning 2965–2974 (PMLR, 2018).

5. Batson, J. & Royer, L. Noise2Self: blind denoising by self-supervision. In International Conference on Machine Learning 524–533 (PMLR, 2019).

6. Krull, A., Buchholz, T.-O. & Jug, F. Noise2Void—learning denoising from single noisy images. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition 2124–2132 (IEEE, 2019).

7. Chen, T.-W. et al. Ultrasensitive fluorescent proteins for imaging neuronal activity. Nature 499, 295–300 (2013).

8. Badrinarayanan, V., Kendall, A. & Cipolla, R. SegNet: a deep convolutional encoder–decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495 (2017).

9. Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition 2261–2269 (IEEE, 2017).

10. de Vries, S. E. J. et al. A large-scale standardized physiological survey reveals functional organization of the mouse visual cortex. Nat. Neurosci. 23, 138–151 (2020).

11. Buchanan, E. K. et al. Penalized matrix decomposition for denoising, compression, and improved demixing of functional imaging data. Preprint at bioRxiv https://doi.org/10.1101/334706 (2019).

12. Song, A., Gauthier, J. L., Pillow, J. W., Tank, D. W. & Charles, A. S. Neural anatomy and optical microscopy (NAOMi) simulation for evaluating calcium imaging methods. J. Neurosci. Methods 358, 109173 (2021).

13. Hoffman, D. P., Slavitt, I. & Fitzpatrick, C. A. The promise and peril of deep learning in microscopy. Nat. Methods 18, 131–132 (2021).

14. Huang, L. et al. Relationship between simultaneously recorded spiking activity and fluorescence signal in GCaMP6 transgenic mice. eLife 10, e51675 (2021).

15. Mukamel, E. A., Nimmerjahn, A. & Schnitzer, M. J. Automated analysis of cellular signals from large-scale calcium imaging data. Neuron 63, 747–760 (2009).

16. Pachitariu, M. et al. Suite2p: beyond 10,000 neurons with standard two-photon microscopy. Preprint at bioRxiv https://doi.org/10.1101/061507 (2017).

17. Giovannucci, A. et al. CaImAn an open source tool for scalable calcium imaging data analysis. eLife 8, e38173 (2019).

18. Pnevmatikakis, E. A. et al. Simultaneous denoising, deconvolution, and demixing of calcium imaging data. Neuron 89, 285–299 (2016).

19. Siegle, J. H. et al. Reconciling functional differences in populations of neurons recorded with two-photon imaging and electrophysiology. eLife 10, e69068 (2021).

20. Rumyantsev, O. I. et al. Fundamental bounds on the fidelity of sensory cortical coding. Nature 580, 100–105 (2020).

21. Cohen, M. R. & Kohn, A. Measuring and interpreting neuronal correlations. Nat. Neurosci. 14, 811–819 (2011).

22. Stringer, C., Pachitariu, M., Steinmetz, N., Carandini, M. & Harris, K. D. High-dimensional geometry of population responses in visual cortex. Nature 571, 361–365 (2019).

23. Siegle, J. H. et al. Survey of spiking in the mouse visual system reveals functional hierarchy. Nature 592, 86–92 (2021).

24. Lima, S. Q., Hromádka, T., Znamenskiy, P. & Zador, A. M. PINP: a new method of tagging neuronal populations for identification during in vivo electrophysiological recording. PLoS ONE 4, e6099 (2009).

25. Caballero-Gaudes, C. & Reynolds, R. C. Methods for cleaning the BOLD fMRI signal. NeuroImage 154, 128–149 (2017).

26. Park, B.-Y., Byeon, K. & Park, H. FuNP (Fusion of Neuroimaging Preprocessing) pipelines: a fully automated preprocessing software for functional magnetic resonance imaging. Front. Neuroinform. 13, 5 (2019).

27. Esteban, O. et al. fMRIPrep: a robust preprocessing pipeline for functional MRI. Nat. Methods 16, 111–116 (2019).

28. Zhang, T. et al. Kilohertz two-photon brain imaging in awake mice. Nat. Methods 16, 1119–1122 (2019).

29. Wu, J. et al. Kilohertz two-photon fluorescence microscopy imaging of neural activity in vivo. Nat. Methods 17, 287–290 (2020).

30. Madisen, L. et al. Transgenic mice for intersectional targeting of neural sensors and effectors with high specificity and performance. Neuron 85, 942–958 (2015).

31. Daigle, T. L. et al. A suite of transgenic driver and reporter mouse lines with enhanced brain-cell-type targeting and functionality. Cell 174, 465–480 (2018).

32. Jun, J. J. et al. Fully integrated silicon probes for high-density recording of neural activity. Nature 551, 232–236 (2017).

33. Horikawa, T. & Kamitani, Y. Generic decoding of seen and imagined objects using hierarchical visual features. Nat. Commun. 8, 15037 (2017).

34. Siegle, J. H. et al. Open Ephys: an open-source, plugin-based platform for multichannel electrophysiology. J. Neural Eng. 14, 045003 (2017).

35. Pachitariu, M., Steinmetz, N., Kadir, S., Carandini, M. & Harris, K. D. Kilosort: realtime spike-sorting for extracellular electrophysiology with hundreds of channels. Preprint at bioRxiv https://doi.org/10.1101/061481 (2016).

36. Hill, D. N., Mehta, S. B. & Kleinfeld, D. Quality metrics to accompany spike sorting of extracellular signals. J. Neurosci. 31, 8699–8705 (2011).

37. Magland, J. et al. SpikeForest, reproducible web-facing ground-truth validation of automated neural spike sorters. eLife 9, e55167 (2020).

38. Schindelin, J. et al. Fiji: an open-source platform for biological-image analysis. Nat. Methods 9, 676–682 (2012).

39. Friedrich, J., Zhou, P. & Paninski, L. Fast online deconvolution of calcium imaging data. PLoS Comput. Biol. 13, e1005423 (2017).

40. Lecoq, J., Kapner, D., Amster, A. & Siegle, J. AllenInstitute/deepinterpolation: peer-reviewed publication. Zenodo https://doi.org/10.5281/zenodo.5165320 (2021).

41. Lecoq, J. AllenInstitute/deepinterpolation_paper: reviewed publication. Zenodo https://doi.org/10.5281/zenodo.5212734 (2021).

Acknowledgements

We thank the Allen Institute founder P.G. Allen for his vision, encouragement and support. We thank A. Song (Princeton University) for help with NAOMi and for providing early access to the codebase that he created with A. Charles. We thank L. Paninski, S. Yusufov and I.A. Kinsella (Columbia University) for sharing code and for support in applying PMD to comparative datasets. We thank D. Kapner for help with two-photon segmentation as well as for supportive discussions. We thank J.P. Thomson for discussions related to denoising two-photon movies. We thank A. Arkhipov and N. Gouwens for fruitful discussions and for supporting early use of DeepInterpolation toward analyzing two-photon datasets. We thank M. Schnitzer and B. Ahanonu (Stanford University) for continuous support and for evaluating an early application of DeepInterpolation to one-photon datasets. We thank T. Peterson, M. Imanaka, S. Lawrenz, S. Naylor, G. Ocker, M. Buice, J. Waters and H. Zeng for encouragement and support. We thank D. Ollerenshaw and N. Gouwens (Allen Institute) for sharing code to standardize figure generation. Funding for this project was provided by the Allen Institute (C.K., J.L., M.O., J.H.S. and N.O.) as well as by the National Institute of Neurological Disorders and Stroke of the National Institutes of Health under award number UF1NS107610 (J.L. and N.O.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Contributions

J.L. initiated, conceptualized and directed the project. J.L. and M.O. conceptualized the DeepInterpolation framework. J.L. wrote all training and inference code with guidance from M.O. J.L. performed all two-photon and fMRI analyses and ran DeepInterpolation on electrophysiological data. J.H.S. and J.L. iterated on the electrophysiological denoising process. P.L. wrote code to analyze simultaneous optical and electrophysiological recordings. J.H.S. performed all analysis of electrophysiological data. C.K. guided the characterization of denoising performance. N.O. ran the two-photon ground truth simulation. J.L., M.O., J.H.S., P.L. and C.K. wrote the paper.

Ethics declarations

Competing interests

The Allen Institute has applied for a patent related to the content of this study.

Additional information

Peer review information: Nature Methods thanks Adam Packer and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Nina Vogt was the primary editor on this article and managed its editorial process and peer review in collaboration with the rest of the editorial team.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Comparison of DeepInterpolation, Noise2Void (ref. 6), a Gaussian kernel and penalized matrix decomposition (PMD; ref. 11) on simulated calcium movies with ground truth.

(a) A simulated two-photon calcium movie generated using an in silico Neural Anatomy and Optical Microscopy (NAOMi) simulation (ref. 12). Left: A few ROIs were manually drawn to extract the associated traces (middle) of the simulated movie with (blue trace) and without (ground truth, orange) sources of noise. Right: The Fourier transform of each trace was calculated and averaged across ROIs. (b) The same movie and traces after PMD denoising. (c) The same movie and traces after DeepInterpolation. Scale bar is 100 μm. (d) Quantification of the average mean squared error (L2) between ground truth and the noisy traces, as well as between ground truth and the traces after Noise2Void, PMD, a Gaussian kernel (σ = 3) and DeepInterpolation. (e) Quantification of the improvement in L2 norm relative to the error of the original noisy traces.
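The error measures in panels (d) and (e) can be sketched as follows. This is a minimal illustration with toy values, not the authors' analysis code: the function names are hypothetical, and expressing the improvement as the ratio of the noisy error to the denoised error is an assumption, because the legend does not define it.

```python
from statistics import fmean


def mse(trace, truth):
    """Mean squared error between one trace and its ground truth."""
    if len(trace) != len(truth):
        raise ValueError("trace and ground truth must have the same length")
    return fmean((x - t) ** 2 for x, t in zip(trace, truth))


def mean_mse_across_rois(traces, truths):
    """Average the per-ROI mean squared error over all ROIs."""
    return fmean(mse(x, t) for x, t in zip(traces, truths))


def improvement(noisy, denoised, truths):
    """Assumed definition: noisy error divided by denoised error (>1 is better)."""
    return mean_mse_across_rois(noisy, truths) / mean_mse_across_rois(denoised, truths)


# Toy data: one ROI, four time points.
truth = [[0.0, 1.0, 0.0, 1.0]]
noisy = [[0.5, 1.5, -0.5, 0.5]]
denoised = [[0.1, 0.9, 0.1, 0.9]]
print(improvement(noisy, denoised, truth))  # roughly 25 for these toy values
```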

Extended Data Fig. 2 Analysis of spike-evoked calcium responses in simulated calcium movies with ground truth.

(a) For the simulated movie in Supplementary Fig. 2, spike-triggered traces were generated at different levels of activity. Spikes were counted within 272 ms windows and used to sort calcium events. Left column corresponds to a single trial associated with one spiking event. Right column is the average of all associated trials. Traces were extracted from (b). For all neuronal compartments created in the field of view, the peak calcium spike amplitude was measured in ground truth data as well as in movies processed with DeepInterpolation or a Gaussian kernel. Red line is the identity line. (c) Same plot as (b) except that the area under all calcium events was measured.

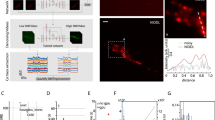

Extended Data Fig. 3 Quantification of DeepInterpolation impact on spike detection performance using high-quality simultaneous optical and cell-attached electrophysiological recording.

(a) Example high-magnification single two-photon frame acquired during a dual calcium imaging and cell-attached cellular recording in vivo in a Camk2a-tTA; tetO-GCaMP6s transgenic mouse. Cell IDs throughout the figure refer to those of the publicly available dataset (ref. 14). (b) Example simultaneous cellular voltage recording, detected action potentials (APs), original fluorescence recording, trace denoised with DeepInterpolation and events detected using fast non-negative deconvolution (ref. 39) based on either the original or the denoised data. (c) Violin plots of the ΔF/F amplitude associated with the number of recorded action potentials in 100 ms bins. Raw traces (black) and traces after DeepInterpolation (red) are shown for 4 different recorded cells. (d) Receiver operating characteristic (ROC) curves showing the detection probability for true APs against the probability of false positives as the detection threshold is changed, for one or more spikes. ROC curves were calculated for 4 different temporal bin sizes. False positives were calculated from time windows with no spikes. Each ROC curve represents a different cell ID. Red curves were obtained after DeepInterpolation. Inset plots zoom in on lower false positive rates.
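The threshold sweep behind an ROC curve such as those in panel (d) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: it assumes the detection statistic is one amplitude per time bin, split into bins with one or more spikes and bins with no spikes, and the function names and toy values are hypothetical.

```python
def roc_curve(amps_with_spikes, amps_without_spikes):
    """Return (false positive rate, true positive rate) points for all thresholds."""
    if not amps_with_spikes or not amps_without_spikes:
        raise ValueError("both groups of bins must be non-empty")
    # Sweep the threshold from the largest observed amplitude downwards.
    thresholds = sorted(set(amps_with_spikes) | set(amps_without_spikes), reverse=True)
    points = [(0.0, 0.0)]
    for th in thresholds:
        tpr = sum(a >= th for a in amps_with_spikes) / len(amps_with_spikes)
        fpr = sum(a >= th for a in amps_without_spikes) / len(amps_without_spikes)
        points.append((fpr, tpr))
    return points


def area_under_curve(points):
    """Trapezoidal area under the ROC points."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Toy amplitudes: bins with spikes tend to have larger values.
with_spikes = [0.8, 0.9, 0.4]
without_spikes = [0.1, 0.2, 0.5]
print(area_under_curve(roc_curve(with_spikes, without_spikes)))
```

An area of 1 corresponds to perfect separation of bins with and without spikes, and 0.5 to chance.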

Extended Data Fig. 4 Quantification of DeepInterpolation impact on segmentation performance of Suite2p with synthetic data from NAOMi.

(a) The noisy raw movie (first row) was pre-processed with a fine-tuned DeepInterpolation model (second row), a Gaussian kernel (third row) or penalized matrix decomposition (PMD) (fourth row). The first column shows the ROIs or cell filters identified by Suite2p that were matched with corresponding ideal ROIs in the ground truth synthetic data (cross-correlation > 0.7). The second column shows ROIs that could not be matched (cross-correlation < 0.7) with any ideal ROI in the synthetic data. The third column shows the projection of the ideal ROIs in the synthetic data that were matched with a segmented filter (that is, not the ROI profiles found by Suite2p but the expected ROIs from the simulation). Two sets of segmentations are displayed for two values of threshold_scaling, a key parameter in Suite2p that controls the detection sensitivity. Scale bar is 100 μm. (b) True positive rate, that is, the proportion of ROIs in the simulation that were found by Suite2p. threshold_scaling was varied from 0.25 to 4 (default is 1) for every dataset. N = 4 simulated movies. Shaded area represents the standard deviation across 4 simulations. (c) False positive rate, that is, the proportion of found ROIs that could not be matched to any ROI in the simulated set of ideal ROIs (cross-correlation < 0.7). N = 4 simulated movies. Shaded area represents the standard deviation across 4 simulations. (d) True positive rate against false positive rate for each pre-processing method.
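The matching criterion and the two rates in panels (b) and (c) can be sketched as follows. This is a hedged illustration, not the authors' procedure: it assumes a precomputed matrix of cross-correlations between each found ROI (rows) and each ideal ROI (columns), and it assumes that each found ROI is compared only with its best-correlated ideal ROI. The function names and the toy matrix are hypothetical.

```python
def match_rois(corr, threshold=0.7):
    """Return the sets of matched found-ROI and ideal-ROI indices."""
    matched_found, matched_ideal = set(), set()
    for i, row in enumerate(corr):
        if not row:
            continue
        # Best-correlated ideal ROI for this found ROI.
        j = max(range(len(row)), key=row.__getitem__)
        if row[j] > threshold:
            matched_found.add(i)
            matched_ideal.add(j)
    return matched_found, matched_ideal


def detection_rates(corr, n_ideal, threshold=0.7):
    """True positive rate over ideal ROIs and false positive rate over found ROIs."""
    found, ideal = match_rois(corr, threshold)
    n_found = len(corr)
    tpr = len(ideal) / n_ideal
    fpr = (n_found - len(found)) / n_found if n_found else 0.0
    return tpr, fpr


# Toy example: 3 found ROIs (rows) against 3 ideal ROIs (columns).
corr = [
    [0.9, 0.1, 0.0],
    [0.2, 0.8, 0.1],
    [0.3, 0.4, 0.2],
]
print(detection_rates(corr, n_ideal=3))
```

In this toy case two of three ideal ROIs are recovered and one of three found ROIs has no match.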

Extended Data Fig. 5 Natural movie responses extracted from somatic and non-somatic compartments after DeepInterpolation.

(a) Example calcium responses to 10 repeats of a natural movie for 4 example ROIs, both somatic and non-somatic. (b–d) Top: Analysis of the sensory response to 10 repeats of a natural movie visual stimulus. Each ROI detected after DeepInterpolation was colored by the preferred movie frame time (b), the amplitude of the calcium response at the preferred frame (c) and the response reliability (d). Scale bar is 100 μm. (b) Bottom: Distribution of the preferred frame of the natural movie for both somatic and non-somatic ROIs in the same field of view. (c) Bottom: Same as (b) for the response amplitude at the preferred time. (d) Bottom: Reliability of the responses of all ROIs to the natural movie. Reliability was computed by averaging the pairwise cross-correlations between individual trials.
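The reliability measure described for panel (d) can be sketched as follows. This is a minimal sketch that interprets "cross-correlation" as the zero-lag Pearson correlation between two trials, which is an assumption. The function names are hypothetical, and treating a flat trial as uncorrelated is a convention chosen here.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean


def pearson(x, y):
    """Zero-lag Pearson correlation between two equally long trials."""
    mx, my = fmean(x), fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumption: a flat trial counts as uncorrelated
    return cov / sqrt(var_x * var_y)


def reliability(trials):
    """Average correlation over all pairs of trials of one ROI."""
    pairs = list(combinations(trials, 2))
    if not pairs:
        raise ValueError("at least two trials are required")
    return fmean(pearson(a, b) for a, b in pairs)


# Toy example: two identical trials and one reversed trial.
trials = [[0.0, 1.0, 2.0], [0.0, 1.0, 2.0], [2.0, 1.0, 0.0]]
print(reliability(trials))
```

With 10 repeats, as in the legend, the average runs over 45 trial pairs.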

Extended Data Fig. 6 Fine tuning DeepInterpolation models with an L2 loss yields better reconstruction when photon count is very low.

(a) Comparison of an example image from a two-photon movie of VIP cells expressing GCaMP6s: raw data, after DeepInterpolation with a broadly trained model (Ai93), after DeepInterpolation with a model trained on VIP data with an L1 loss and after DeepInterpolation with a model trained on VIP data with an L2 loss. Scale bar is 100 μm. (b) Simulation of the reconstruction error when using either the L1 loss or the L2 loss for various levels of photon count and corrupted frames.
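One general statistical reason why the two losses can differ at low photon counts is that the constant minimizing an L2 loss over a set of samples is their mean, whereas the constant minimizing an L1 loss is their median. For Poisson-distributed counts with a small mean, the median is zero. The sketch below illustrates this property only; it is not the authors' simulation from panel (b), and the function names and parameter values are hypothetical.

```python
import math
import random
from statistics import fmean, median


def poisson_sample(lam, rng):
    """Draw one Poisson-distributed count with Knuth's multiplication method."""
    limit = math.exp(-lam)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def best_constants(lam, n=10_000, seed=0):
    """Return the L2-optimal (mean) and L1-optimal (median) constant predictions."""
    rng = random.Random(seed)
    counts = [poisson_sample(lam, rng) for _ in range(n)]
    return fmean(counts), median(counts)


# Low photon count: the median collapses to zero while the mean tracks the rate.
print(best_constants(0.3))
# Higher photon count: mean and median are close to each other.
print(best_constants(5.0))
```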

Extended Data Fig. 7 Extended data for Neuropixels recordings.

(a) Left: Heatmap of the residual (denoised subtracted from the original data), for the same data shown in Fig. 3b. Right: Power spectra for the original (gray), denoised (red) and residual (yellow) data. (b) Number of units passing a range of quality control thresholds, for three different unit quality metrics. QC thresholds used in this work are indicated with dashed lines. (c) Physiological plots for one example unit from V1 that was only detectable after denoising (<20% of spike times were included in the original spike sorting results). The P value was calculated using the Kolmogorov–Smirnov test between the distributions of spikes per trial for the original and shuffled responses. (d) Number of units with substantial response modulation to a repeated natural movie stimulus, for all cortical units detected before and after denoising. (e) Raster plots for 10 high-reliability example units detected only after denoising, aligned to the 30 s natural movie clip. Each color represents a different unit.

Extended Data Fig. 8 Comparing DeepInterpolation with traditional denoising methods.

(a) Heatmap of 100 ms of Neuropixels data before and after applying three denoising methods: Gaussian filter, 2D boxcar and DeepInterpolation. (b) Distribution of RMS noise levels across the channels shown in (a), computed over the same 10 s interval. The parameters for the Gaussian and boxcar filters were chosen such that the resulting RMS noise distributions roughly matched the distribution seen with DeepInterpolation. (c) Example voltage time series for one channel, before and after applying the three denoising methods shown in (a). (d) Relationship between original peak amplitude and denoised peak amplitude for all spikes in a 10 s window, from the same example channel shown in (c).
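A per-channel RMS noise level such as the one summarized in panel (b) can be sketched as follows. This is an illustration under assumptions: the legend does not say how the RMS was computed, so subtracting each channel's mean before taking the root mean square is a choice made here, and the function names and toy voltages are hypothetical.

```python
from math import sqrt
from statistics import fmean


def rms(samples):
    """Root mean square of one channel after removing its mean (assumed)."""
    offset = fmean(samples)
    return sqrt(fmean((s - offset) ** 2 for s in samples))


def rms_per_channel(data):
    """RMS level of every channel; data is a list of per-channel sample lists."""
    return [rms(channel) for channel in data]


# Toy recording with two channels (values in arbitrary voltage units).
recording = [
    [1.0, -1.0, 1.0, -1.0],
    [5.0, 5.0, 5.0, 5.0],
]
print(rms_per_channel(recording))
```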

Extended Data Fig. 9 Results of simulated ground truth electrophysiology experiments.

(a) Right: Heatmap of original data (black outline), data with superimposed spike waveforms (gray outline), and superimposed data after denoising (red outline). Left: Spike waveform outlined in heatmaps, for three different conditions. (b) Mean peak waveforms for each of three units superimposed on the original data at 8 different amplitudes (N = 100 spikes per unit per amplitude). (c) Mean cosine similarity between individual denoised waveforms and the original superimposed waveform. Colors represent different units. (d) Relationship between denoised amplitude and waveform full width at half maximum (FWHM), for all 100 µV waveforms. Colors represent different units.
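The two waveform measures named in panels (c) and (d) can be sketched as follows. This is a minimal sketch and not the authors' implementation: the function names are hypothetical, and measuring the width around the largest absolute deflection with linear interpolation between samples is an assumption.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two waveforms of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for an all-zero waveform")
    return dot / norm


def fwhm(waveform, dt=1.0):
    """Full width at half maximum around the largest absolute deflection."""
    peak = max(range(len(waveform)), key=lambda i: abs(waveform[i]))
    if waveform[peak] == 0:
        raise ValueError("waveform has no peak")
    sign = 1.0 if waveform[peak] > 0 else -1.0
    w = [sign * v for v in waveform]  # flip so that the peak is positive
    half = w[peak] / 2.0
    left = peak
    while left > 0 and w[left - 1] > half:
        left -= 1
    right = peak
    while right < len(w) - 1 and w[right + 1] > half:
        right += 1
    # Interpolate the half-height crossings; fall back to the waveform edges.
    left_cross = float(left)
    if left > 0:
        left_cross = (left - 1) + (half - w[left - 1]) / (w[left] - w[left - 1])
    right_cross = float(right)
    if right < len(w) - 1:
        right_cross = right + (w[right] - half) / (w[right] - w[right + 1])
    return (right_cross - left_cross) * dt


# Toy negative-going spike sampled at unit intervals.
spike = [0.0, -1.0, -2.0, -1.0, 0.0]
print(cosine_similarity(spike, [0.0, -2.0, -4.0, -2.0, 0.0]), fwhm(spike))
```

Passing the sampling interval as `dt` converts the width from samples to time units.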

Supplementary information

Supplementary Information

Supplementary Figs. 1–6, Note and Table 1

Supplementary Video 1

Left, example two-photon movie before and after applying DeepInterpolation. Right, the average movie was subtracted to highlight uncovered fluorescence signals.

Supplementary Video 2

Four examples of two-photon movies before and after applying DeepInterpolation. The same DeepInterpolation model was used for all four examples.

Supplementary Video 3

Example two-photon movie processed with PMD and DeepInterpolation. The white arrow highlights the impact of motion artifacts on PMD.

Supplementary Video 4

Simulated two-photon data without motion artifacts with NAOMi. The first column is ground truth data in the absence of shot noise. The second column is the same movie with shot noise. The third column is the output of PMD after processing the movie in the second column. The fourth column is the output of DeepInterpolation after processing the movie in the second column. The third row is the reconstruction error from the ground truth movie.

Supplementary Video 5

Simulated two-photon data without motion artifacts with NAOMi. The first column is ground truth data in the absence of shot noise. The second column is the same movie with shot noise. The third column is the output of Noise2Void after processing the movie in the second column. The fourth column is the output of DeepInterpolation after processing the movie in the second column.

Supplementary Video 6

Example layer 6 data before and after fine tuning (transfer training) of a pre-trained DeepInterpolation network. The second row is mean subtracted to highlight small differences.

Supplementary Video 7

Comparison of fine tuning with an L1 loss versus an L2 loss with a very low photon flux calcium imaging experiment.

Supplementary Video 8

Example fMRI data with and without DeepInterpolation. The three columns on the right are horizontal, sagittal and coronal sections of the same movie in the first column. On the second row, the temporal mean was subtracted to better highlight small changes in signal.

Supplementary Video 9

Example fMRI data to compare DeepInterpolation with a spatial Gaussian kernel. On the second row, the temporal mean was subtracted to better highlight small changes in signal.

Supplementary Video 10

Example fMRI data with and without DeepInterpolation. The residual was calculated to illustrate the type of signal that was removed by DeepInterpolation.

About this article

Cite this article

Lecoq, J., Oliver, M., Siegle, J.H. et al. Removing independent noise in systems neuroscience data using DeepInterpolation. Nat Methods 18, 1401–1408 (2021). https://doi.org/10.1038/s41592-021-01285-2

DOI: https://doi.org/10.1038/s41592-021-01285-2
