Abstract

New computing technologies inspired by the brain promise fundamentally different ways to process information with extreme energy efficiency and the ability to handle the avalanche of unstructured and noisy data that we are generating at an ever-increasing rate. To realize this promise requires a brave and coordinated plan to bring together disparate research communities and to provide them with the funding, focus and support needed. We have done this in the past with digital technologies; we are in the process of doing it with quantum technologies; can we now do it for brain-inspired computing?

Main

Modern computing systems consume far too much energy. They are not sustainable platforms for the complex artificial intelligence (AI) applications that are increasingly a part of our lives. We usually do not see this, particularly in the case of cloud-based systems, as we focus on functionality: how fast are they; how accurate; how many parallel operations per second? We are so accustomed to accessing information near-instantaneously that we neglect the energy, and therefore environmental, consequences of the computing systems giving us this access. Nevertheless, each Google search has a cost: data centres currently use around 200 terawatt hours of energy per year, forecast to grow by around an order of magnitude by 2030 (ref. 1). Similarly, the astonishing achievements of high-end AI systems such as DeepMind’s AlphaGo and AlphaZero, which can beat human experts at complex strategy games, require thousands of parallel processing units, each of which can consume around 200 watts (ref. 2).

Although not all data-intensive computing requires AI or deep learning, deep learning is deployed so widely that we must worry about its environmental cost. We should also consider applications including the Internet of Things (IoT) and autonomous robotic agents that may not always need to be operated by computationally intense deep learning algorithms but must still reduce their energy consumption. The vision of the IoT cannot be achieved if the energy requirements of the myriad connected devices are too high. Recent analysis shows that increasing demand for computing power vastly outpaces improvements made through Moore’s law scaling (ref. 3). Computing power demands now double every two months (Fig. 1a). Remarkable improvements have been made through a combination of smart architecture and software–hardware co-design. For example, the performance of NVIDIA GPUs (graphics processing units) has improved by a factor of 317 since 2012, far beyond what would be expected from Moore’s law alone (Fig. 1b), although the power consumption of units has increased from approximately 25 W to around 320 W in the same period. Further impressive performance improvements have been demonstrated at the research and development stage (Fig. 1b, red) and it is likely that we can achieve more (refs. 4,5). Unfortunately, it is unlikely that conventional computing solutions alone will cope with demand over an extended period. This is especially apparent when we consider the shockingly high cost of training required for the most complex deep learning models (Fig. 1c). We need alternative approaches.
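As a rough check on the figures quoted above, the short Python sketch below converts a doubling time into an annual growth factor and estimates the net gain in performance per watt for the GPU example. It is illustrative arithmetic only: the function and variable names are ours, and dividing the performance gain by the power increase assumes that the quoted 25 W and 320 W figures are representative of the units being compared.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Factor by which demand multiplies over 12 months for a given doubling time."""
    return 2.0 ** (12.0 / doubling_months)


# Doubling times quoted in the text and in the caption of Fig. 1a.
growth_before_2012 = annual_growth_factor(24.0)  # doubling every 24 months
growth_recent = annual_growth_factor(2.0)        # doubling every 2 months

# GPU example: performance gain divided by the increase in power draw.
# Assumption: the two power figures describe comparable units.
performance_gain = 317.0
power_increase = 320.0 / 25.0
perf_per_watt_gain = performance_gain / power_increase

print(f"Annual growth, 24-month doubling: {growth_before_2012:.2f}x")
print(f"Annual growth, 2-month doubling:  {growth_recent:.0f}x")
print(f"Performance-per-watt gain:        {perf_per_watt_gain:.1f}x")
```

On these assumptions, a 2-month doubling time corresponds to a 64-fold increase in demand per year, against roughly 1.4-fold for a 24-month doubling time, and the 317-fold performance gain corresponds to about a 25-fold gain in performance per watt.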

a, The increase in computing power demands over the past four decades expressed in petaFLOPS days. Until 2012, computing power demand doubled every 24 months; recently this has shortened to approximately every 2 months. The colour legend indicates different application domains. Data are from ref. 3. b, Improvements in AI hardware efficiency over the past five years. State-of-the-art solutions have driven increases in computing efficiency of more than 300 times. Solutions in research and development promise further improvements (refs. 22–24). c, Increase since 2011 of the costs of training AI models. Such an exponential increase is clearly unsustainable. Data are from ref. 25.

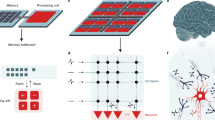

The energy problem is largely a consequence of digital computing systems storing data separately from where they are processed. This is the classical von Neumann architecture underpinning digital computing systems. Processors spend most of their time and energy moving data. Fortunately, we can improve the situation by taking inspiration from biology, which takes a different approach entirely—co-locating memory and processing, encoding information in a wholly different way or operating directly on signals, and employing massive parallelism, for example (Box 1). There is a system that achieves both energy efficiency and advanced functionality remarkably well: the brain. Recognizing that we still have much to learn about how the brain operates and that our aim is not simply to emulate biological systems, we can nevertheless learn from the substantial progress in neuroscience and computational neuroscience in the last few decades. We know just enough about the brain to use it as an inspiration.

Biological inspiration

In biology, data storage is not separate from processing. The same elements (principally neurons and synapses) perform both functions in massively parallel and adaptable structures. The 10¹¹ neurons and 10¹⁵ synapses contained in the typical human brain expend approximately 20 W of power, whereas a digital simulation of an artificial neural network of approximately the same size consumes 7.9 MW (ref. 6). That six-order-of-magnitude gap poses us a challenge. The brain processes noisy signals directly and with extreme efficiency. This contrasts with the signal-to-data conversion and high-precision computing of conventional computer systems, which incur huge costs in energy and time even on the most powerful digital supercomputers. Brain-inspired, or ‘neuromorphic’, computing systems could therefore transform the way we process signals and data, both in terms of energy efficiency and of their capacity to handle real-world uncertainty.
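The size of the gap can be checked directly from the two power figures above. A minimal Python sketch (the variable names are ours):

```python
import math

BRAIN_POWER_W = 20.0        # approximate power of the human brain, from the text
SIMULATION_POWER_W = 7.9e6  # 7.9 MW for the digital simulation (ref. 6)

# Ratio of the two power figures and its base-10 logarithm.
power_ratio = SIMULATION_POWER_W / BRAIN_POWER_W
orders_of_magnitude = math.log10(power_ratio)

print(f"Power ratio: {power_ratio:.3g}")
print(f"Orders of magnitude: {orders_of_magnitude:.1f}")
```

The ratio is about 4 × 10⁵, or roughly 5.6 orders of magnitude, which rounds to the six quoted above.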

This is not a new idea. The term neuromorphic, describing devices and systems that mimic some functions of biological neural systems, was coined in the late 1980s by Carver Mead at the California Institute of Technology (refs. 7,8). The inspiration came from work undertaken over previous decades to model the nervous system as equivalent electrical circuits (ref. 9) and to build analogue electronic devices and systems to provide similar functionality (Box 1).

A word about ‘data’. We use the term to describe information encoded in, say, an analogue signal or the physical response of a sensor, as well as the more standard computing-focused sense of digital data. When we refer to the brain “processing data” we describe an integrated set of signal-processing tasks that do not rely on digitization of signals in any conventional sense. We can think of brain-inspired systems operating at different levels: from analogue signal processing to working with large digital datasets. In the former case, we can avoid generating large datasets in the first place; in the latter we can greatly increase the efficiency of processing by moving away from the von Neumann model. Of course, there are good reasons why we represent data digitally for many applications: we want high precision, reliability and determinacy. However, digital abstraction discards massive amounts of information, found in the physics of transistors, for the minimum information quantum: a single bit. And we pay a considerable energy cost by trading efficiency for reliability. AI applications are often probabilistic at heart, and so we must consider if this trade-off makes sense. The computational tasks underpinning AI applications are very compute-intensive (and therefore energy-hungry) when performed by conventional von Neumann computers. However, we might perform similar tasks much more energy-efficiently on analogue or mixed systems that use a spike-based representation of information. There has therefore been a recent resurgence in interest in neuromorphic computing, driven by the growth in AI systems and by the emergence of new devices that offer new and exciting ways to mimic some of the capabilities of biological neural systems (Box 1).
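To make the idea of a spike-based representation concrete, the sketch below simulates a leaky integrate-and-fire neuron, a standard textbook abstraction that is not taken from this article or from any of the chips discussed here. All parameter values (time step, time constant, threshold) are arbitrary illustrative assumptions. The point is only that a continuous input is encoded as the timing and rate of discrete events, with no output at all when the input is too weak.

```python
from typing import List


def lif_spike_times(
    input_current: List[float],
    dt: float = 1e-3,          # simulation time step in seconds (assumed)
    tau: float = 20e-3,        # membrane time constant in seconds (assumed)
    v_rest: float = 0.0,       # resting potential, arbitrary units
    v_threshold: float = 1.0,  # firing threshold, arbitrary units
    v_reset: float = 0.0,      # potential immediately after a spike
    resistance: float = 1.0,   # membrane resistance, arbitrary units
) -> List[float]:
    """Return the times at which a leaky integrate-and-fire neuron spikes."""
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # Forward-Euler step of dv/dt = (-(v - v_rest) + R * I) / tau.
        v += dt * (-(v - v_rest) + resistance * current) / tau
        if v >= v_threshold:
            spike_times.append(step * dt)
            v = v_reset  # reset the membrane potential after firing
    return spike_times


# One second of constant input at three amplitudes.
weak = lif_spike_times([0.5] * 1000)      # settles below threshold
moderate = lif_spike_times([2.0] * 1000)  # fires regularly
strong = lif_spike_times([4.0] * 1000)    # fires at a higher rate

print(len(weak), len(moderate), len(strong))
```

In this toy model a stronger input produces more spikes per second, while a sub-threshold input produces none, so no events need to be communicated or processed when nothing of interest is happening.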

Definitions of ‘neuromorphic’ vary considerably. Loosely speaking, the story is a hardware one: neuromorphic chips aim to integrate and utilize various useful features of the brain, including in-memory computing, spike-based information processing, fine-grained parallelism, signal processing resilient to noise and stochasticity, adaptability, learning in hardware, asynchronous communication, and analogue processing. Although it is debatable how many of these need to be implemented for something to be classified as neuromorphic, this is clearly a different approach from AI implemented on mainstream computing systems. Nevertheless, we should not be lost in terminology; the main question is whether this approach is useful.

Approaches to neuromorphic technologies lie on a spectrum between reverse-engineering the structure and function of the brain (analysis) and living with our current lack of knowledge of the brain but taking inspiration from what we do know (synthesis). Perhaps foremost among the former approaches is the Human Brain Project, a high-profile and hugely ambitious ten-year programme funded by the European Union from 2013. The programme supported the adoption and further development of two existing neuromorphic hardware platforms, SpiNNaker (at The University of Manchester) and BrainScaleS (at Heidelberg University), as openly accessible neuromorphic platforms. Both systems implement highly complex silicon models of brain architectures to better understand the operation of the biological brain. At the other end of the spectrum, numerous groups augment the performance of digital or analogue electronics using selected biologically inspired methods. Figure 2 summarizes the range of existing neuromorphic chips, divided into four categories depending on their position on the analysis–synthesis spectrum and their technology platform. It is important to remember that neuromorphic engineering is not just about high-level cognitive systems, but also about offering energy, speed and security gains (at least by removing the need for constant communication to the cloud) in small-scale edge devices with limited cognitive abilities.

Neuromorphic chips can be classified as either modelling biological systems or applying brain-inspired principles to novel computing applications. They may be further subdivided into those based on digital CMOS with novel architecture (for example, spikes may be simulated in the digital domain rather than implemented as analogue voltages) and those implemented using some degree of analogue circuitry. In all cases, however, they share at least some of the properties listed on the right-hand side, which distinguish them from conventional CMOS chips. Here we classify examples of recently developed neuromorphic chips. Further details of each can be found in the relevant reference: Neurogrid (ref. 26), BrainScaleS (ref. 27), MNIFAT (ref. 28), DYNAP (ref. 29), DYNAP-SEL (ref. 30), ROLLS (ref. 31), Spirit (ref. 32), ReASOn (ref. 33), DeepSouth (ref. 34), SpiNNaker (ref. 35), IBM TrueNorth (ref. 36), Intel Loihi (ref. 37), Tianjic (ref. 38), ODIN (ref. 39) and the Intel SNN chip (ref. 40). ᵃ Implemented with memristors.

Prospects

We do not propose that neuromorphic systems will, or should, replace conventional computing platforms. Instead, precision calculations should remain the preserve of digital computation, whereas neuromorphic systems can process unstructured data, perform image recognition, classification of noisy and uncertain datasets, and underpin novel learning and inference systems. In autonomous and IoT-connected systems, they can provide huge energy savings over their conventional counterparts. Quantum computing is also part of this vision. A practical quantum computer, although still several years away by any estimation, would certainly revolutionize many computing tasks. However, it is unlikely that IoT-connected smart sensors, edge computing devices, or autonomous robotic systems will adopt quantum computing without depending on cloud computing. There will remain a need for low-power computing elements capable of dealing with uncertain and noisy data. We can imagine a three-way synergy between digital, neuromorphic and quantum systems.

Just as the development of semiconductor microelectronics relied on many different disciplines, including solid-state physics, electronic engineering, computer science and materials science, neuromorphic computing is profoundly cross- and inter-disciplinary. Physicists, chemists, engineers, computer scientists, biologists and neuroscientists all have key roles. Simply getting researchers from such a diverse set of disciplines to speak a common language is challenging. In our own work we spend considerable time and effort ensuring that everyone in the room understands terminology and concepts in the same way. The case for bridging the communities of computer science (specifically AI) and neuroscience (initially computational neuroscience) is clear. After all, many concepts found in today’s state-of-the-art AI systems arose in the 1970s and 1980s in neuroscience, although, of course, AI systems need not be completely bio-realistic. We must include other disciplines, recognizing that many of the strides we have made in AI or neuroscience have been enabled by different communities, for example through innovations in materials science, nanotechnology or electronic engineering. Further, conventional CMOS (complementary metal–oxide–semiconductor) technology may not be the best fabric with which to efficiently implement new brain-inspired algorithms; innovations across the board are needed. Engaging these communities early reduces the risk of wasting effort on directions that have already been explored and failed, or of reinventing the wheel.

Further, we should not neglect the challenges of integrating new neuromorphic technologies at the system level. Beyond the development of brain-inspired devices and algorithms there are pressing questions around how existing, mainstream, AI systems can be replaced with functionally equivalent neuromorphic alternatives. This further emphasizes the need for a fully integrated approach to brain-inspired computation.

We should point out that, despite the potential outlined above, there is as yet no compelling demonstration of a commercial neuromorphic technology. Existing systems and platforms are primarily research tools. However, this is equally true of quantum computing, which remains a longer-term prospect. We should not let this delay the development of brain-inspired computing; the need for lower-power computing systems is pressing and we are tantalizingly close to achieving this with all the added functionality that comes from a radically different approach to computation. Commercial systems will surely emerge.

Seizing the opportunity

If neuromorphic computing is needed, how can it be achieved? First, the technical requirements. Bringing together diverse research communities is necessary but not sufficient. Incentives, opportunities and infrastructure are needed. The neuromorphic community is a disparate one lacking the focus of quantum computing, or the clear roadmap of the semiconductor industry. Initiatives around the globe are starting to gather the required expertise, and early-stage momentum is building. To foster this, funding is key. Investment in neuromorphic research is nowhere near the scale of that in digital AI or quantum technologies (Box 2). Although that is not surprising given the maturity of digital semiconductor technology, it is a missed opportunity. There are a few examples of medium-scale investment in neuromorphic research and development, such as the IBM AI Hardware Centre’s range of brain-inspired projects (including the TrueNorth chip), Intel’s development of the Loihi processor, and the US Brain Initiative project, but the sums committed are well below what they should be given the promise of the technology to disrupt digital AI.

The neuromorphic community is a large and growing one, but one that lacks a focus. Although there are numerous conferences, symposia and journals emerging in this space there remains much work to be done to bring the disparate communities together and to corral their efforts to persuade funding bodies and governments of the importance of this field.

The time is ripe for bold initiatives. At a national level, governments need to work with academic researchers and industry to create mission-oriented research centres to accelerate the development of neuromorphic technologies. This has worked well in areas such as quantum technologies and nanotechnology; the US National Nanotechnology Initiative demonstrates this very well (ref. 10), and provides focus and stimulus. Such centres may be physical or virtual but must bring together the best researchers across diverse fields. Their approach must be different from that of conventional electronic technologies in which every level of abstraction (materials, devices, circuits, systems, algorithms and applications) belongs to a different community. We need holistic and concurrent design across the whole stack. It is not enough for circuit designers to consult computational neuroscientists before designing systems; engineers and neuroscientists must work together throughout the process to ensure as full an integration of bio-inspired principles into hardware as possible. Interdisciplinary co-creation must be at the heart of our approach. Research centres must house a broad constituency of researchers.

Alongside the required physical and financial infrastructure, we need a trained workforce. Electronic engineers are rarely exposed to ideas from neuroscience, and vice versa. Circuit designers and physicists may have a passing knowledge of neurons and synapses but are unlikely to be familiar with cutting-edge computational neuroscience. There is a strong case to set up Masters courses and doctoral training programmes to develop neuromorphic engineers. UK research councils sponsor Centres for Doctoral Training (CDTs), which are focused programmes supporting areas with an identified need for trained researchers. CDTs can be single- or multi-institution; there are substantial benefits to institutions collaborating on these programmes by creating complementary teams across institutional boundaries. Programmes generally work closely with industry and build cohorts of highly skilled researchers in ways that more traditional doctoral programmes often do not. There is a good case to be made to develop something similar, to stimulate interaction between nascent neuromorphic engineering communities and provide the next generation of researchers and research leaders. Pioneering examples include the Groningen Cognitive Systems and Materials research programme, which aims to train tens of doctoral students specifically in materials for cognitive (AI) systems (ref. 11); the Masters programme in neuroengineering at the Technical University of Munich (ref. 12); ETH Zurich courses on analogue circuit design for neuromorphic engineering (ref. 13); large-scale neural modelling at Stanford University (ref. 14); and development of visual neuromorphic systems at the Instituto de Microelectrónica de Sevilla (ref. 15). There is scope to do much more.

Similar approaches could work at the trans-national level. As always in research, collaboration is most successful when it is the best working with the best, irrespective of borders. In such an interdisciplinary endeavour as neuromorphic computing this is critical, so international research networks and projects undoubtedly have a part to play. Early examples include the European Neurotech consortium (ref. 16), focusing on neuromorphic computing technologies, as well as the Chua Memristor Centre at the University of Dresden (ref. 17), which brings together many of the leading memristor researchers across materials, devices and algorithms. Again, much more can and must be done.

How can this be made attractive to governments? Government commitment to more energy-efficient bio-inspired computing can be part of a broader large-scale decarbonization push. This will not only address climate change but also will accelerate the emergence of new, low-carbon, industries around big data, IoT, healthcare analytics, modelling for drug and vaccine discovery, and robotics, amongst others. If existing industries rely on ever more large-scale conventional digital data analysis, they increase their energy cost while offering sub-optimal performance. We can instead create a virtuous circle in which we greatly reduce the carbon footprint of the knowledge technologies that will drive the next generation of disruptive industries and, in doing so, seed a host of new neuromorphic industries.

If this sounds a tall order, consider quantum technologies. In the UK the government has so far committed around £1 billion to a range of quantum initiatives, largely under the umbrella of the National Quantum Technologies Programme. A series of research hubs, bringing together industry and academia, translate quantum science into technologies targeted at sensors and metrology, imaging, communications and computing. A separate National Quantum Computing Centre builds on the work of the hubs and other researchers to deliver demonstrator hardware and software to develop a general-purpose quantum computer. China has established a multi-billion (US) dollar Chinese National Laboratory for Quantum Information Sciences, and the USA in 2018 commissioned a National Strategic Overview for Quantum Information Science (ref. 18), which resulted in a five-year US$1.2 billion investment, on top of supporting a range of national quantum research centres (ref. 19). Thanks to this research work there has been a global rush to start up quantum technology companies. One analysis found that in 2017 and 2018 funding for private companies reached $450 million (ref. 20). No such joined-up support exists for neuromorphic computing, despite the technology being more established than quantum, and despite its potential to disrupt existing AI technologies on a much shorter time horizon. Of the three strands of future computing in our vision, neuromorphic is woefully under-invested.

Finally, some words about what bearing the COVID-19 pandemic might have on our arguments. There is a growing consensus that the crisis has accelerated many developments already under way: for example, the move to more homeworking. Although reducing commuting and travel has direct benefits (some estimates put the reduction in global CO₂ emissions as a result of the crisis at up to 17%; ref. 21), new ways of working have a cost. To what extent will carbon savings from reduced travel be offset by increased data centre emissions? If anything, the COVID-19 pandemic further emphasizes the need to develop low-carbon computing technologies such as neuromorphic systems.

Our message about how to realize the potential of neuromorphic systems is clear: provide targeted support for collaborative research through the establishment of research centres of excellence; provide agile funding mechanisms to enable rapid progress; provide mechanisms for close collaboration with industry to bring in commercial funding and generate new spin-outs and start-ups, similar to schemes already in place for quantum technology; develop training programmes for the next generation of neuromorphic researchers and entrepreneurs; and do all of this quickly and at scale.

Neuromorphic computing has the potential to transform our approach to AI. Thanks to the conjunction of new technologies and a massive, growing demand for efficient AI we have a timely opportunity. Bold thinking is needed, and bold initiatives to support this thinking. Will we seize the opportunity?

References

Jones, N. How to stop data centres from gobbling up the world’s electricity. Nature https://doi.org/10.1038/d41586-018-06610-y (12 September 2018).

Wu, K. J. Google’s new AI is a master of games, but how does it compare to the human mind? Smithsonian https://www.smithsonianmag.com/innovation/google-ai-deepminds-alphazero-games-chess-and-go-180970981/ (10 December 2018).

Amodei, D. & Hernandez, D. AI and compute. OpenAI Blog https://openai.com/blog/ai-and-compute/ (16 May 2018).

Venkatesan, R. et al. in 2019 IEEE Hot Chips 31 Symp. (HCS) https://doi.org/10.1109/HOTCHIPS.2019.8875657 (IEEE, 2019).

Venkatesan, R. et al. in 2019 IEEE/ACM Intl Conf. Computer-Aided Design (ICCAD) https://doi.org/10.1109/ICCAD45719.2019.8942127 (IEEE, 2019).

Wong, T. M. et al. 10¹⁴. Report no. RJ10502 (ALM1211-004) (IBM, 2012). The power consumption of this simulation of the brain puts that of conventional digital systems into context.

Mead, C. Analog VLSI and Neural Systems (Addison-Wesley, 1989).

Mead, C. A. Author Correction: How we created neuromorphic engineering. Nat. Electron. 3, 579–579 (2020).

Hodgkin, A. L. & Huxley, A. F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500–544 (1952). More complex models followed, but this seminal work remains the clearest and an excellent starting point, developing equivalent electrical circuits and circuit models for the neural membrane.

National Nanotechnology Initiative. US National Nanotechnology Initiative https://www.nano.gov (National Nanotechnology Coordination Office, accessed 18 August 2021).

About Groningen Cognitive Systems and Materials. University of Groningen https://www.rug.nl/research/fse/cognitive-systems-and-materials/about/ (accessed 9 November 2020).

Degree programs: Neuroengineering. Technical University of Munich https://www.tum.de/en/studies/degree-programs/detail/detail/StudyCourse/neuroengineering-master-of-science-msc/ (accessed 18 August 2021).

Course catalogue: 227-1033-00L Neuromorphic Engineering I. ETH Zürich http://www.vvz.ethz.ch/Vorlesungsverzeichnis/lerneinheit.view?lerneinheitId=132789&semkez=2019W&ansicht=KATALOGDATEN&lang=en (accessed 9 November 2020).

Brains in Silicon. http://web.stanford.edu/group/brainsinsilicon/ (accessed 16 March 2022).

Neuromorphs. Instituto de Microelectrónica de Sevilla http://www2.imse-cnm.csic.es/neuromorphs (accessed 9 November 2020).

Neurotech. https://neurotechai.eu (accessed 18 August 2021).

Chua Memristor Center: Members. Technische Universität Dresden https://cmc-dresden.org/members (accessed 9 November 2020).

Subcommittee on Quantum Information Science. National Strategic Overview for Quantum Information Science. https://web.archive.org/web/20201109201659/https://www.whitehouse.gov/wp-content/uploads/2018/09/National-Strategic-Overview-for-Quantum-Information-Science.pdf (US Government, 2018; accessed 17 March 2022).

Smith-Goodson, P. Quantum USA vs. quantum China: the world’s most important technology race. Forbes https://www.forbes.com/sites/moorinsights/2019/10/10/quantum-usa-vs-quantum-china-the-worlds-most-important-technology-race/#371aad5172de (10 October 2019).

Gibney, E. Quantum gold rush: the private funding pouring into quantum start-ups. Nature https://doi.org/10.1038/d41586-019-02935-4 (2 October 2019).

Le Quéré, C. et al. Temporary reduction in daily global CO₂ emissions during the COVID-19 forced confinement. Nat. Clim. Change 10, 647–653 (2020).

Gokmen, T. & Vlasov, Y. Acceleration of deep neural network training with resistive cross-point devices: design considerations. Front. Neurosci. 10, https://doi.org/10.3389/fnins.2016.00333 (2016).

Marinella, M. J. et al. Multiscale co-design analysis of energy latency area and accuracy of a ReRAM analog neural training accelerator. IEEE J. Emerg. Selected Topics Circuits Systems 8, 86–101 (2018).

Chang, H.-Y. et al. AI hardware acceleration with analog memory: microarchitectures for low energy at high speed. IBM J. Res. Dev. 63, 8:1–8:14 (2019).

ARK Invest. Big Ideas 2021 https://research.ark-invest.com/hubfs/1_Download_Files_ARK-Invest/White_Papers/ARK–Invest_BigIdeas_2021.pdf (ARK Investment Management, 2021; accessed 27 April 2021).

Benjamin, B. V. et al. Neurogrid: a mixed-analog–digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).

Schmitt, S. et al. Neuromorphic hardware in the loop: training a deep spiking network on the BrainScaleS wafer-scale system. In 2017 Intl Joint Conf. Neural Networks (IJCNN) https://doi.org/10.1109/ijcnn.2017.7966125 (IEEE, 2017).

Lichtsteiner, P., Posch, C. & Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008).

Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 12, 106–122 (2018).

Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: a quest to mimic the brain. Front. Neurosci. 12, 891 (2018).

Qiao, N. et al. A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 9, 141 (2015).

Valentian, A. et al. in 2019 IEEE Intl Electron Devices Meeting (IEDM) 14.3.1–14.3.4 https://doi.org/10.1109/IEDM19573.2019.8993431 (IEEE, 2019).

Resistive Array of Synapses with ONline Learning (ReASOn) Developed by NeuRAM3 Project https://cordis.europa.eu/project/id/687299/reporting (2021).

Wang, R. et al. Neuromorphic hardware architecture using the neural engineering framework for pattern recognition. IEEE Trans. Biomed. Circuits Syst. 11, 574–584 (2017).

Furber, S. B., Galluppi, F., Temple, S. & Plana, L. A. The SpiNNaker Project. Proc. IEEE 102, 652–665 (2014). An example of a large-scale neuromorphic system as a model for the brain.

Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

Frenkel, C., Lefebvre, M., Legat, J.-D. & Bol, D. A 0.086-mm² 12.7-pJ/SOP 64k-synapse 256-neuron online-learning digital spiking neuromorphic processor in 28-nm CMOS. IEEE Trans. Biomed. Circuits Syst. 13, 145–158 (2018).

Chen, G. K., Kumar, R., Sumbul, H. E., Knag, P. C. & Krishnamurthy, R. K. A 4096-neuron 1M-synapse 3.8-pJ/SOP spiking neural network with on-chip STDP learning and sparse weights in 10-nm FinFET CMOS. IEEE J. Solid-State Circuits 54, 992–1002 (2019).

Indiveri, G. et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 73 (2011).

Mehonic, A. et al. Memristors—from in‐memory computing, deep learning acceleration, and spiking neural networks to the future of neuromorphic and bio‐inspired computing. Adv. Intell Syst. 2, 2000085 (2020). A review of the promise of memristors across a range of applications, including spike-based neuromorphic systems.

Li, X. et al. Power-efficient neural network with artificial dendrites. Nat. Nanotechnol. 15, 776–782 (2020).

Chua, L. Memristor, Hodgkin–Huxley, and edge of chaos. Nanotechnology 24, 383001 (2013).

Kumar, S., Strachan, J. P. & Williams, R. S. Chaotic dynamics in nanoscale NbO₂ Mott memristors for analogue computing. Nature 548, 318–321 (2017).

Serb, A. et al. Memristive synapses connect brain and silicon spiking neurons. Sci. Rep. 10, 2590 (2020).

Rosemain, M. & Rose, M. France to spend $1.8 billion on AI to compete with U.S., China. Reuters https://www.reuters.com/article/us-france-tech-idUSKBN1H51XP (29 March 2018).

Castellanos, S. Executives say $1 billion for AI research isn’t enough. Wall Street J. https://www.wsj.com/articles/executives-say-1-billion-for-ai-research-isnt-enough-11568153863 (10 September 2019).

Larson, C. China’s AI imperative. Science 359, 628–630 (2018).

European Commission. A European approach to artificial intelligence. https://ec.europa.eu/digital-single-market/en/artificial-intelligence (accessed 9 November 2020).

Artificial intelligence (AI) funding investment in the United States from 2011 to 2019. Statista https://www.statista.com/statistics/672712/ai-funding-united-states (accessed 9 November 2020).

Worldwide artificial intelligence spending guide. IDC Trackers https://www.idc.com/getdoc.jsp?containerId=IDC_P33198 (accessed 9 November 2020).

Markets and Markets.com. Neuromorphic Computing Market https://www.marketsandmarkets.com/Market-Reports/neuromorphic-chip-market-227703024.html?gclid=CjwKCAjwlcaRBhBYEiwAK341jS3mzHf9nSlOEcj3MxSj27HVewqXDR2v4TlsZYaH1RWC4qdM0fKdlxoC3NYQAvD_BwE. (accessed 17 March 2022).

Acknowledgements

A.J.K. thanks the Engineering and Physical Sciences Research Council for financial support from grants EP/K01739X/1 and EP/P013503/1. A.M. acknowledges financial support from the Royal Academy of Engineering in the form of a Research Fellowship (RF201617\16\9).

Author information

Contributions

Both authors contributed equally to the manuscript and revisions.

Ethics declarations

Competing interests

The authors are founders and directors of Intrinsic Semiconductor Technologies Ltd (www.intrinsicst.com), a spin-out company commercializing silicon oxide RRAM.

Peer review

Peer review information

Nature thanks Steve Furber and Yulia Sandamirskaya for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Mehonic, A., Kenyon, A.J. Brain-inspired computing needs a master plan. Nature 604, 255–260 (2022). https://doi.org/10.1038/s41586-021-04362-w

DOI: https://doi.org/10.1038/s41586-021-04362-w
