Abstract

Implementations of artificial neural networks that borrow analogue techniques could potentially offer low-power alternatives to fully digital approaches [1–3]. One notable example is in-memory computing based on crossbar arrays of non-volatile memories [4–7] that execute, in an analogue manner, multiply–accumulate operations prevalent in artificial neural networks. Various non-volatile memories, including resistive memory [8–13], phase-change memory [14,15] and flash memory [16–19], have been used for such approaches. However, it remains challenging to develop a crossbar array of spin-transfer-torque magnetoresistive random-access memory (MRAM) [20–22], despite the technology’s practical advantages such as endurance and large-scale commercialization [5]. The difficulty stems from the low resistance of MRAM, which would result in large power consumption in a conventional crossbar array that uses current summation for analogue multiply–accumulate operations. Here we report a 64 × 64 crossbar array based on MRAM cells that overcomes the low-resistance issue with an architecture that uses resistance summation for analogue multiply–accumulate operations. The array is integrated with readout electronics in 28-nanometre complementary metal–oxide–semiconductor technology. Using this array, a two-layer perceptron is implemented to classify 10,000 Modified National Institute of Standards and Technology digits with an accuracy of 93.23 per cent (software baseline: 95.24 per cent). In an emulation of a deeper, eight-layer Visual Geometry Group-8 neural network with measured errors, the classification accuracy improves to 98.86 per cent (software baseline: 99.28 per cent). We also use the array to implement a single layer in a ten-layer neural network to realize face detection with an accuracy of 93.4 per cent.
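
To make the contrast between current summation and resistance summation concrete, the following is a minimal Python sketch, not the authors' circuit. It assumes binary (+1/−1) inputs and weights, and assumes that each cell in a column presents a high resistance when input and weight agree and a low resistance otherwise, so that the series resistance of the column encodes the dot product. The resistance values, the read voltage and all function names are hypothetical and chosen only for illustration.

```python
# Illustrative values only (assumptions, not taken from the paper).
R_LOW = 10e3    # ohms, assumed low-resistance state of a cell
R_HIGH = 20e3   # ohms, assumed high-resistance state of a cell
V_READ = 0.1    # volts, assumed read voltage

def dot_from_series_resistance(inputs, weights, r_low=R_LOW, r_high=R_HIGH):
    """Recover a +1/-1 dot product from the summed resistance of one column.

    Assumption: a cell contributes r_high when input * weight == +1 and
    r_low when input * weight == -1; the cells of a column are in series.
    Returns (dot_product, total_resistance).
    """
    n = len(inputs)
    total = sum(r_high if x * w == 1 else r_low
                for x, w in zip(inputs, weights))
    # total = n * r_low + matches * (r_high - r_low)
    matches = round((total - n * r_low) / (r_high - r_low))
    return 2 * matches - n, total

def parallel_power(n, r, v=V_READ):
    """Power of n equal cells in parallel at voltage v (current summation)."""
    return n * v * v / r

def series_power(n, r, v=V_READ):
    """Power of n equal cells in series across voltage v (resistance summation)."""
    return v * v / (n * r)

if __name__ == "__main__":
    dot, total = dot_from_series_resistance([1, -1, 1, 1], [1, 1, -1, 1])
    print("dot product:", dot, "column resistance (ohm):", total)
    ratio = parallel_power(64, R_LOW) / series_power(64, R_LOW)
    print("parallel / series power for 64 cells:", ratio)
```

Under these simplifying assumptions (the same voltage applied in both cases and identical cells), a parallel column of n low-resistance cells dissipates n² times the power of the same cells in series, which illustrates why low cell resistance penalizes current summation far more than resistance summation.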

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

Code availability

Computer codes are available from the corresponding authors on reasonable request.

Change history

14 January 2022

In the version of this article initially published online, the posted article PDF was an earlier, incorrect version of the final article. The correct PDF has been posted as of 14 January 2022.

References

Horowitz, M. Computing’s energy problem (and what we can do about it). In Proc. International Solid-State Circuits Conference (ISSCC) 10−14 (IEEE, 2014).

Keckler, S. W., Dally, W. J., Khailany, B., Garland, M. & Glasco, D. GPUs and the future of parallel computing. IEEE Micro 31, 7–17 (2011).

Song, J. et al. An 11.5TOPS/W 1024-MAC butterfly structure dual-core sparsity-aware neural processing unit in 8nm flagship mobile SoC. In 2019 IEEE Int. Solid-State Circuits Conference Digest of Technical Papers (ISSCC) 130−131 (IEEE, 2019).

Sebastian, A. et al. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Wang, Z. et al. Resistive switching materials for information processing. Nat. Rev. Mater. 5, 173–195 (2020).

Ielmini, D. & Wong, H. P. In-memory computing with resistive switching devices. Nat. Electron. 1, 333–343 (2018).

Verma, N. et al. In-memory computing: advances and prospects. IEEE Solid-State Circuits Mag. 11, 43–55 (2019).

Woo, J. et al. Improved synaptic behavior under identical pulses using AlOx/HfO2 bilayer RRAM array for neuromorphic systems. IEEE Electron Device Lett. 37, 994–997 (2016).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Wu, H. et al. Device and circuit optimization of RRAM for neuromorphic computing. In 2017 IEEE International Electron Devices Meeting 11.5.1−11.5.4 (IEEE, 2017).

Li, C. et al. Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 9, 2385 (2018).

Chen, W. et al. CMOS-integrated memristive non-volatile computing-in-memory for AI edge processors. Nat. Electron. 2, 420–428 (2019).

Yao, P. et al. Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020).

Le Gallo, M. et al. Mixed-precision in-memory computing. Nat. Electron. 1, 246–253 (2018).

Ambrogio, S. et al. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67 (2018).

Merrikh-Bayat, F. et al. High-performance mixed-signal neurocomputing with nanoscale floating-gate memory cell arrays. IEEE Trans Neural Netw. Learn. Syst. 29, 4782–4790 (2018).

Wang, P. et al. Three-dimensional NAND flash for vector-matrix multiplication. IEEE Trans. VLSI Syst. 27, 988–991 (2019).

Xiang, Y. et al. Efficient and robust spike-driven deep convolutional neural networks based on NOR flash computing array. IEEE Trans. Electron Dev. 67, 2329–2335 (2020).

Lin, Y.-Y. et al. A novel voltage-accumulation vector-matrix multiplication architecture using resistor-shunted floating gate flash memory device for low-power and high-density neural network applications. In 2018 IEEE International Electron Devices Meeting 2.4.1−2.4.4 (IEEE, 2018).

Song, Y. J. et al. Demonstration of highly manufacturable STT-MRAM embedded in 28nm logic. In 2018 IEEE International Electron Devices Meeting 18.2.1−18.2.4 (IEEE, 2018).

Lee, Y. K. et al. Embedded STT-MRAM in 28-nm FDSOI logic process for industrial MCU/IoT application. In 2018 IEEE Symposium on VLSI Technology 181−182 (IEEE, 2018).

Wei, L. et al. A 7Mb STT-MRAM in 22FFL FinFET technology with 4ns read sensing time at 0.9V using write-verify-write scheme and offset-cancellation sensing technique. In 2019 IEEE Int. Solid-State Circuits Conference Digest of Technical Papers 214−216 (IEEE, 2019).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Yu, S. Neuro-inspired computing with emerging nonvolatile memory. Proc. IEEE 106, 260–285 (2018).

Patil, A. D. et al. An MRAM-based deep in-memory architecture for deep neural networks. In 2019 IEEE International Symposium on Circuits and Systems (IEEE, 2019).

Zabihi, M. et al. In-memory processing on the spintronic CRAM: from hardware design to application mapping. IEEE Trans. Comput. 68, 1159–1173 (2019).

Kang, S. H. Embedded STT-MRAM for energy-efficient and cost-effective mobile systems. In 2014 IEEE Symposium on VLSI Technology (IEEE, 2014).

Zeng, Z. M. et al. Effect of resistance-area product on spin-transfer switching in MgO-based magnetic tunnel junction memory cells. Appl. Phys. Lett. 98, 072512 (2011).

Kim, H. & Kwon, S.-W. Full-precision neural networks approximation based on temporal domain binary MAC operations. US patent 17/085,300.

Hung, J.-M. et al. Challenges and trends in developing nonvolatile memory-enabled computing chips for intelligent edge devices. IEEE Trans. Electron Dev. 67, 1444–1453 (2020).

Jiang, Z., Yin, S., Seo, J. & Seok, M. C3SRAM: an in-memory-computing SRAM macro based on robust capacitive coupling computing mechanism. IEEE J. Solid-State Circuits 55, 1888–1897 (2020).

Hubara, I. et al. Binarized neural networks. In Advances in Neural Information Processing Systems 4107−4115 (NeurIPS, 2016).

Rastegari, M., Ordonez, V., Redmon, J. & Farhadi, A. XNOR-Net: ImageNet classification using binary convolutional neural networks. In 2016 European Conference on Computer Vision 525−542 (2016).

Lin, X., Zhao, C. & Pan, W. Towards accurate binary convolutional neural network. In Advances in Neural Information Processing Systems 345−353 (NeurIPS, 2017).

Zhuang, B. et al. Structured binary neural networks for accurate image classification and semantic segmentation. In 2019 IEEE Conference on Computer Vision and Pattern Recognition 413−422 (IEEE, 2019).

Shafiee, A. et al. ISAAC: a convolutional neural network accelerator with in-situ analog arithmetic in crossbars. In 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture 14−26 (IEEE, 2016).

Liu, B. et al. Digital-assisted noise-eliminating training for memristor crossbar-based analog neuromorphic computing engine. In 2013 50th ACM/EDAC/IEEE Design Automation Conference 1−6 (IEEE, 2013).

Wu, B., Iandola, F., Jin, P. H. & Keutzer, K. SqueezeDet: unified, small, low power fully convolutional neural networks for real-time object detection for autonomous driving. In 2017 IEEE Conference on Computer Vision and Pattern Recognition 129−137 (IEEE, 2017).

Ham, D., Park, H., Hwang, S. & Kim, K. Neuromorphic electronics based on copying and pasting the brain. Nat. Electron. 4, 635–644 (2021).

Wang, P. et al. Two-step quantization for low-bit neural networks. In 2018 IEEE Conference on Computer Vision and Pattern Recognition 4376−4384 (IEEE, 2018).

Acknowledgements

We thank G. Jin (Corporate President of Samsung Electronics), S. Hwang (CEO of Samsung SDS), E. Shim (Corporate EVP of Samsung Electronics) and G. Jeong (Corporate EVP of Samsung Electronics) for technical discussions and support.

Author information

Contributions

S.J., G.-H.K., Y.S., D.H. and S.J.K. devised this work. S.J., H.L., S.M. and Y.J. designed the analogue circuits, and S.-W.K. and M.K. designed the digital circuits for the CMOS chips. S.H., B.K., B.S., Kilho Lee, Kangho Lee, G.-H.K. and Y.S. characterized the MTJs and optimized the fabrication steps for the MRAM crossbar array. S.J. and Y.J. developed evaluation boards. S.J., H.L., S.M. and W.Y. evaluated the MRAM crossbar array. H.K. and C.C. designed and trained the two-layer, eight-layer and ten-layer neural networks. S.J., S.K.Y. and W.Y. performed the MNIST classification experiments. S.J., S.K.Y., S.J.K. and D.H. analysed dot product errors. S.K.Y. and H.K. developed the emulator. S.-W.K. and M.K. developed the face detection system and performed the face detection experiments. S.J., H.L., S.K.Y., S.J.K. and D.H. wrote the paper. G.-H.K., Y.S., C.C., D.H. and S.J.K. supervised this work. All authors read and discussed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature thanks the anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Conventional memory crossbar array for ANN computing.

a, Conventional memory crossbar array to perform analogue vector–matrix multiplication. b, Vector–matrix multiplication is prevalent in ANN computing: it is used to transfer data from a layer to the next.

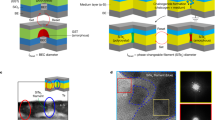
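
The conventional current-summation scheme in panel a can be sketched as follows. By Ohm's law each cell passes a current equal to its row voltage times its conductance, and by Kirchhoff's current law the currents of all cells on a column add, so column j carries I_j = Σ_i V_i·G_ij. The function name and the numerical values below are hypothetical, and the sketch ignores wire resistance and other non-idealities.

```python
def crossbar_column_currents(voltages, conductances):
    """Ideal analogue vector-matrix multiplication in a crossbar.

    voltages:      list of row voltages V_i (volts)
    conductances:  conductances[i][j] is the cell conductance G_ij (siemens)
                   at row i, column j
    Returns the list of column currents I_j = sum_i V_i * G_ij (amperes).
    """
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]

if __name__ == "__main__":
    # Assumed example values: two rows, two columns.
    v = [0.1, 0.2]
    g = [[1e-4, 2e-4],
         [3e-4, 4e-4]]
    print(crossbar_column_currents(v, g))
```

Because every cell conducts in parallel, the total current grows with both the number of rows and the cell conductance, which is the power concern the abstract raises for low-resistance MRAM.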

Extended Data Fig. 2 MTJ write/read operation.

a, For each column, two write/read data lines were added with access switches. b, Example of write operation. c, Example of read operation.

Extended Data Fig. 4 Error distribution after digital offsets.

Error distribution modified from Fig. 2d after applying digital offsets to select columns.
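
One way to picture a digital offset correction of this kind is sketched below. The caption does not say how the offsets were chosen, so the rule used here (subtract the rounded mean error of a column, and only for columns whose mean error exceeds a threshold) is an assumption for illustration, as are the function names and the toy data. The sketch also computes the mean absolute error of the dot products before and after correction.

```python
def column_offsets(measured, ideal, threshold=0.5):
    """Estimate one integer offset per column from calibration data.

    measured, ideal: lists of rows; each row holds one dot product per column.
    Assumed rule: offset = rounded mean error of the column, applied only
    when |mean error| >= threshold; other columns get offset 0.
    """
    n_cols = len(ideal[0])
    offsets = []
    for c in range(n_cols):
        errors = [m[c] - i[c] for m, i in zip(measured, ideal)]
        mean_error = sum(errors) / len(errors)
        offsets.append(round(mean_error) if abs(mean_error) >= threshold else 0)
    return offsets

def apply_offsets(measured, offsets):
    """Subtract the per-column offsets from every measured row."""
    return [[v - o for v, o in zip(row, offsets)] for row in measured]

def mean_absolute_error(measured, ideal):
    """Mean absolute error over all dot products."""
    errors = [abs(m - i)
              for m_row, i_row in zip(measured, ideal)
              for m, i in zip(m_row, i_row)]
    return sum(errors) / len(errors)

if __name__ == "__main__":
    # Toy data (assumed): column 1 reads consistently too high.
    ideal = [[0, 4], [2, -2], [-4, 6]]
    measured = [[0, 6], [3, 0], [-4, 8]]
    offsets = column_offsets(measured, ideal)
    corrected = apply_offsets(measured, offsets)
    print("offsets:", offsets)
    print("MAE before:", mean_absolute_error(measured, ideal))
    print("MAE after:", mean_absolute_error(corrected, ideal))
```

A fixed per-column offset of this form can only remove systematic error; the random, input-dependent part of the error distribution is left unchanged.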

Extended Data Fig. 5 Performance with varying conditions.

a, Measured power efficiency and mean absolute error of the dot products as a function of the supply voltage of the TDC readout electronics for an 11.1 MHz operating frequency. b, Measured power efficiency and mean absolute error of the dot products as a function of the operating frequency for a 1.0 V supply voltage for the TDC readout electronics. For both a and b, each mean absolute error is obtained from 1,600 dot products as in Option 1 in Extended Data Table 1, except for the mean absolute error in the case of the 1.0 V TDC readout supply and the 11.1 MHz operating frequency, for which the error is calculated from ~4 million dot products (this is Option 2 in Extended Data Table 1).

Extended Data Fig. 6 Measurement set-up.

a, Evaluation board containing voltage regulators, clock generators, an MCU and the MRAM crossbar array chip. b, The MCU communicates with the PC via USB.

Extended Data Fig. 7 Distribution of dot product errors.

a, NC = 1 and NΔ = −16 data group. b, NC = 1 and NΔ = 16 data group.

Supplementary information

Supplementary Video 1

This video demonstrates real-time face detection using the system of Fig. 4c, including a webcam. The stream of images captured by the webcam is shown on the bottom left. When a face appears, the neural network detects it and a smile icon shows on the top left. As the face disappears, the neural network recognizes it and the smile icon disappears. The graph on the bottom right shows the dissipated power in the four MRAM crossbar arrays combined. The 10-layer VGG-like neural network model used here is shown on the top right, where the 7th convolutional layer is realized by using 4 MRAM crossbar arrays.

About this article

Cite this article

Jung, S., Lee, H., Myung, S. et al. A crossbar array of magnetoresistive memory devices for in-memory computing. Nature 601, 211–216 (2022). https://doi.org/10.1038/s41586-021-04196-6

DOI: https://doi.org/10.1038/s41586-021-04196-6
