Abstract

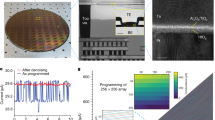

Memristor-enabled neuromorphic computing systems provide a fast and energy-efficient approach to training neural networks1,2,3,4. However, convolutional neural networks (CNNs)—one of the most important models for image recognition5—have not yet been fully hardware-implemented using memristor crossbars, which are cross-point arrays with a memristor device at each intersection. Moreover, achieving software-comparable results is highly challenging owing to the poor yield, large variation and other non-ideal characteristics of devices6,7,8,9. Here we report the fabrication of high-yield, high-performance and uniform memristor crossbar arrays for the implementation of CNNs, which integrate eight 2,048-cell memristor arrays to improve parallel-computing efficiency. In addition, we propose an effective hybrid-training method to adapt to device imperfections and improve the overall system performance. We built a five-layer memristor-based CNN to perform MNIST10 image recognition, and achieved a high accuracy of more than 96 per cent. In addition to parallel convolutions using different kernels with shared inputs, replication of multiple identical kernels in memristor arrays was demonstrated for processing different inputs in parallel. The memristor-based CNN neuromorphic system has an energy efficiency more than two orders of magnitude greater than that of state-of-the-art graphics-processing units, and is shown to be scalable to larger networks, such as residual neural networks. Our results are expected to enable a viable memristor-based non-von Neumann hardware solution for deep neural networks and edge computing.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$29.99 / 30 days

cancel any time

Subscribe to this journal

Receive 51 print issues and online access

$199.00 per year

only $3.90 per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

Code availability

The simulator XPEsim used here is publicly available39. The codes used for the simulations described in Methods are available from the corresponding author upon reasonable request.

References

Ielmini, D. & Wong, H.-S. P. In-memory computing with resistive switching devices. Nat. Electron. 1, 333–343 (2018).

Wong, H.-S. P. & Salahuddin, S. Memory leads the way to better computing. Nat. Nanotechnol. 10, 191–194 (2015); correction 10, 660 (2015).

Williams, R. S. What’s next? Comput. Sci. Eng. 19, 7–13 (2017).

Li, C. et al. Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 9, 2385 (2018).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Wu, H. et al. Device and circuit optimization of RRAM for neuromorphic computing. In 2017 IEEE Int. Electron Devices Meeting (IEDM) 11.5.1–11.5.4 (IEEE, 2017).

Xia, Q. & Yang, J. J. Memristive crossbar arrays for brain-inspired computing. Nat. Mater. 18, 309–323 (2019); correction 18, 518 (2019).

Ding, K. et al. Phase-change heterostructure enables ultralow noise and drift for memory operation. Science 366, 210–215 (2019).

Welser, J., Pitera, J. & Goldberg, C. Future computing hardware for AI. In 2018 IEEE Int. Electron Devices Meeting (IEDM) 1.3.1–1.3.6 (IEEE, 2018).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems 91–99 (NIPS, 2015).

Coates, A. et al. Deep learning with COTS HPC systems. In Proc. 30th Int. Conference on Machine Learning 1337–1345 (PMLR, 2013).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In Proc. 44th Int. Symposium on Computer Architecture (ISCA) 1–12 (IEEE, 2017).

Chen, Y.-H., Krishna, T., Emer, J. S. & Sze, V. Eyeriss: an energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 52, 127–138 (2017).

Horowitz, M. Computing’s energy problem (and what we can do about it). In 2014 IEEE Int. Solid-State Circuits Conference Digest of Technical Papers (ISSCC) 10–14 (IEEE, 2014).

Woo, J. et al. Improved synaptic behavior under identical pulses using AlOx/HfO2 bilayer RRAM array for neuromorphic systems. IEEE Electron Device Lett. 37, 994–997 (2016).

Burr, G. W. et al. Neuromorphic computing using non-volatile memory. Adv. Phys. X 3, 89–124 (2017).

Yu, S. Neuro-inspired computing with emerging nonvolatile memorys. Proc. IEEE 106, 260–285 (2018).

Choi, S. et al. SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018).

Burr, G. W. et al. Experimental demonstration and tolerancing of a large-scale neural network (165 000 synapses) using phase-change memory as the synaptic weight element. IEEE Trans. Electron Dev. 62, 3498–3507 (2015).

Gao, L., Chen, P.-Y. & Yu, S. Demonstration of convolution kernel operation on resistive cross-point array. IEEE Electron Device Lett. 37, 870–873 (2016).

Kumar, S., Strachan, J. P. & Williams, R. S. Chaotic dynamics in nanoscale NbO2 Mott memristors for analogue computing. Nature 548, 318–321 (2017).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Ambrogio, S. et al. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67 (2018).

Serb, A. et al. Unsupervised learning in probabilistic neural networks with multi-state metal-oxide memristive synapses. Nat. Commun. 7, 12611 (2016).

Gao, B. et al. Modeling disorder effect of the oxygen vacancy distribution in filamentary analog RRAM for neuromorphic computing. In 2017 IEEE Int. Electron Devices Meeting (IEDM) 4.4.1–4.4.4 (IEEE, 2017).

Donahue, J. et al. DeCAF: a deep convolutional activation feature for generic visual recognition. In 2014 Int. Conference on Machine Learning 647–655 (ACM, 2014).

Han, S., Mao, H. & Dally, W. J. Deep compression: compressing deep neural networks with pruning, trained quantization and huffman coding. In 2016 International Conference on Learning Representations (ICLR) (2016).

Xu, X. et al. Fully CMOS-compatible 3D vertical RRAM with self-aligned self-selective cell enabling sub-5-nm scaling. In 2016 IEEE Symposium on VLSI Technology 84–85 (IEEE, 2016).

Pi, S. et al. Memristor crossbar arrays with 6-nm half-pitch and 2-nm critical dimension. Nat. Nanotechnol. 14, 35–39 (2019).

Wu, W. et al. A methodology to improve linearity of analog RRAM for neuromorphic computing. In 2018 IEEE Symposium on VLSI Technology 103–104 (IEEE, 2018).

Cai, Y. et al. Training low bitwidth convolutional neural network on RRAM. In Proc. 23rd Asia and South Pacific Design Automation Conference 117–122 (IEEE, 2018).

Zhang, Q. et al. Sign backpropagation: an on-chip learning algorithm for analog RRAM neuromorphic computing systems. Neural Netw. 108 217–223 (2018).

Zhao, M. et al. Investigation of statistical retention of filamentary analog RRAM for neuromophic computing. In 2017 IEEE Int. Electron Devices Meeting (IEDM) 39.34.31–39.34.34 (IEEE, 2017).

Kim, W. et al. Confined PCM-based analog synaptic devices offering low resistance-drift and 1000 programmable states for deep learning. In 2019 Symposium on VLSI Technology T66–T67 (IEEE, 2019).

Zhang, W. et al. Design guidelines of RRAM-based neural-processing unit: a joint device–circuit–algorithm analysis. In 2019 56th ACM/IEEE Design Automation Conference (DAC) 63.1 (IEEE, 2019).

O’Halloran, M. & Sarpeshkar, R. A 10-nW 12-bit accurate analog storage cell with 10-aA leakage. IEEE J. Solid-State Circuits 39, 1985–1996 (2004).

Kull, L. et al. A 3.1 mW 8b 1.2 GS/s single-channel asynchronous SAR ADC with alternate comparators for enhanced speed in 32 nm digital SOI CMOS. IEEE J. Solid-State Circuits 48, 3049–3058 (2013).

Krizhevsky, A. & Hinton, G. Learning Multiple Layers of Features From Tiny Images. Technical report (University of Toronto, 2009); https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf.

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (61851404), the Beijing Municipal Science and Technology Project (Z191100007519008), the National Key R&D Program of China (2016YFA0201801), the Huawei Project (YBN2019075015) and the National Young Thousand Talents Plan.

Author information

Authors and Affiliations

Contributions

P.Y., H.W. and B.G. conceived and designed the experiments. P.Y. set up the hardware platform and conducted the experiments. Q.Z. performed the simulation work. W.Z. benchmarked the system performance. All authors discussed the results. P.Y., H.W., B.G., J.T. and J.J.Y. contributed to the writing and editing of the manuscript. H.W. and H.Q. supervised the project.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature thanks Darsen Lu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

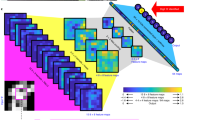

Extended Data Fig. 1 Cumulative probability distribution of memristor conductance for the remaining seven 2,048-cell arrays.

The red circles highlight abnormal data points, which deviate from their target conductance ranges owing to device variations. a, The 32-level conductance distribution on an entire 2,048-cell array. b–g, Conductance distributions for the first 32 rows of memristors (namely, 512 devices) for each of the remaining 2,048-cell arrays. A small number of writing errors were observed during the programming procedure (red circles), which could be attributed to device variations. These results show good consistency with Fig. 1c.

Extended Data Fig. 2 Input patch set generated during the sliding process and input waveforms during the convolution.

a, Input nine-dimensional vectors unrolled from the input 3 × 3 patch set. xm_n indicates the relevant pixel at the crossing of row m and column n. The input patches are generated during the sliding convolutional process over the input feature planes and are subsequently injected into the memristor weight array. For a specific input vector, each element is encoded as the corresponding input pulse applied on the associated bit line. The red box indicates the current input vector, in agreement with the case illustrated in b. b, Input waveform sample in a memristor-based convolutional operation. Each element (an 8-bit binary number) in the input vector is encoded as sequential pulses over eight time intervals (t1, t2, …, t8). For a particular period tk, bit k determines whether a 0-V pulse or a 0.2-V pulse is used. Each ‘1’ at a certain bit location implies the existence of a 0.2-V read pulse in the corresponding time interval, and a ‘0’ indicates a 0-V read pulse. The corresponding output current Ik is sensed, and this quantized value is then left-shifted by k – 1. Finally, the quantized and shifted results with respect to the same source line over the eight time intervals are summed together (ISL in the inset equation). The difference between every two ISL values from a pair of differential source lines is considered to be the expected weighted-sum result.

Extended Data Fig. 3 Drift of conductance weights in time and associated degradation in system accuracy.

a, Changes in the conductance weights with time, over 30 days after the transfer. The grey lines present the changing traces of all the cell weights, and the three coloured lines depict representative evolution trends. b, Mean weight value for the cells that belong to each of the 15 levels according to a. The 15 coloured traces show the 15 mean-value evolution traces as a function of time. c, Profile of accuracy loss during the experiment. The overall trend of the accuracy loss indicates how the conductance weight drifts deteriorate the recognition accuracy over time after hybrid training. Compared with the initial state, the recognition accuracy increases by 0.37% at t = 10 min, owing to random device-state fluctuations. d, Evolution of the weights of the weight cells considered in c over 2 h. t0 denotes the moment when the hybrid training is completed. The grey lines show the changing traces of the states of the cells, and the three coloured lines depict representative evolution trends.

Extended Data Fig. 4 Experimental accuracy of parallel memristor convolvers after hybrid training, and simulated training efficiency of different combinations of tuning layers.

a, The error rate on the entire training set after hybrid training drops substantially compared with that achieved after weight transfer for any individual convolver group. The error rates with respect to the G1, G2 and G3 groups decrease from 4.82%, 6.43% and 5.85% to 2.89%, 4.22% and 3.40%, respectively, after hybrid training. b, Simulation results for all combinations of tuning weights for different layers using hybrid training and the five-layer CNN.

Extended Data Fig. 5

Architecture of the simulated memristor-based neural processing unit and relevant circuit modules in the macro core.

Extended Data Fig. 6 Scalability of the joint strategy.

The joint strategy combines the hybrid training method and the parallel computing technique of replicating the same kernels. We show that a small subset of training data is sufficient for hybrid training. a, Recognition accuracies at different stages of the simulation process. During the simulation with ResNET-56, the kernel weights of the first convolutional layer are replicated to four groups of memristor arrays. b, After hybrid training the error rate on the test set drops substantially compared with that obtained immediately after weight transfer using each convolver group. c, The error rates drop considerably after hybrid training using 10% of the training data in the experiment with the five-layer CNN.The three experimental results show good consistency. d, Recognition accuracies at different stages of the simulation with ResNET-56. A high level of accuracy is achieved even when using 3% of the training data (1,500 training images) to update the weights of the FC layer. The mean accuracy for 10 trials is 92.00% after hybrid training, and the standard deviation is 0.8%.

Extended Data Fig. 7 Effects of read disturbance.

To investigate this effect, we set up this experiment by writing all the convolutional kernel weights to two memristor PEs. After programming all the conductance weights smoothly, we physically apply 1,000,000 read pulses (0.2 V) on all weight cells to see how the read operations disturb the weight states. a, Changes in the states of the 936 conductance weightswhile cycling read operations. The grey lines give the changing traces of the states of all cells, and the three coloured lines depict representative evolution trends. b, Conductance evolution of eight memristor states during 106 read cycles. c, Distributions of weight states after 1, 105, 5 × 105 and 106 read cycles.

Extended Data Fig. 8 Test results of the required programming pulse number and programming currents.

a, Average pulse number required to reach each target conductance state. All the initial states were programmed to >4.0 μA. b, Stacked histogram distribution corresponding to the data in a. c, Current–voltage curve obtained during a d.c. voltage sweep. RESET and SET currents are measured at points #1 and #3, respectively. The conditions of RESET and SET pulses in this study are marked by points #2 and #4, respectively. Point #5 labels the read current at the low-resistance state (LRS) and point #6 labels the read current at the high-resistance state (HRS). d, Typical programming parameters. The programming current is 60 μA at 1.5 V during the SET process and 45 μA at −1.2 V during RESET.

Supplementary information

Supplementary Information

This file contains Supplementary Notes 1 and 2 and Supplementary Figures 1–7.

Rights and permissions

About this article

Cite this article

Yao, P., Wu, H., Gao, B. et al. Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020). https://doi.org/10.1038/s41586-020-1942-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1038/s41586-020-1942-4

This article is cited by

-

Electrochemical random-access memory: recent advances in materials, devices, and systems towards neuromorphic computing

Nano Convergence (2024)

-

Purely self-rectifying memristor-based passive crossbar array for artificial neural network accelerators

Nature Communications (2024)

-

A ferroelectric fin diode for robust non-volatile memory

Nature Communications (2024)

-

Powering AI at the edge: A robust, memristor-based binarized neural network with near-memory computing and miniaturized solar cell

Nature Communications (2024)

-

Physical reservoir computing with emerging electronics

Nature Electronics (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.