Abstract

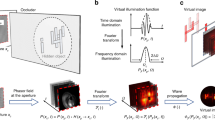

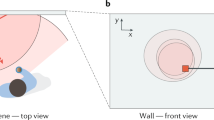

Computing the amounts of light arriving from different directions enables a diffusely reflecting surface to play the part of a mirror in a periscope, that is, to perform non-line-of-sight imaging around an obstruction. Because computational periscopy has so far depended on light-travel distances being proportional to the times of flight, it has mostly been performed with expensive, specialized ultrafast optical systems (refs 1–12). Here we introduce a two-dimensional computational periscopy technique that requires only a single photograph captured with an ordinary digital camera. Our technique recovers the position of an opaque object and the scene behind (but not completely obscured by) the object, when both the object and scene are outside the line of sight of the camera, without requiring controlled or time-varying illumination. Such recovery is based on the visible penumbra of the opaque object having a linear dependence on the hidden scene that can be modelled through ray optics. Non-line-of-sight imaging using inexpensive, ubiquitous equipment may have considerable value in monitoring hazardous environments, navigation and detecting hidden adversaries.
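The linear dependence of the penumbra on the hidden scene can be illustrated with a toy one-dimensional ("flatland") model. The sketch below is not the authors' implementation: the geometry, the function names `light_transport`, `reconstruct` and `estimate_occluder`, the inverse-square falloff, the ridge regularizer and the grid search over occluder positions are all assumptions chosen for illustration. It builds a matrix `A` whose entries are zero wherever the occluder blocks the ray from a scene patch to a wall pixel, simulates a noiseless measurement `y = A f`, and then inverts the linear model for each candidate occluder position.

```python
import numpy as np


def light_transport(n_wall, n_scene, occ_center, occ_halfwidth,
                    depth_occ=0.5, depth_scene=1.0):
    """Toy flatland light-transport matrix A (n_wall x n_scene).

    A[i, j] is the contribution of hidden-scene patch j to visible-wall
    pixel i: zero if the straight ray between them hits the occluder,
    otherwise an inverse-square falloff. All geometry is hypothetical.
    """
    wall = np.linspace(0.0, 1.0, n_wall)     # wall pixel positions (depth 0)
    scene = np.linspace(0.0, 1.0, n_scene)   # scene patch positions (depth depth_scene)
    # Lateral position at which each wall-to-scene ray crosses the occluder plane.
    frac = depth_occ / depth_scene
    crossing = wall[:, None] + frac * (scene[None, :] - wall[:, None])
    unblocked = np.abs(crossing - occ_center) > occ_halfwidth
    dist_sq = (scene[None, :] - wall[:, None]) ** 2 + depth_scene ** 2
    return unblocked / (dist_sq * n_scene)


def reconstruct(A, y, lam=1e-6):
    """Ridge-regularized least squares: argmin_f ||A f - y||^2 + lam ||f||^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y)


def estimate_occluder(y, candidates, n_scene, occ_halfwidth, lam=1e-6):
    """Pick the candidate occluder centre whose linear model best explains y."""
    def residual(c):
        A = light_transport(len(y), n_scene, c, occ_halfwidth)
        return np.linalg.norm(A @ reconstruct(A, y, lam) - y)
    return min(candidates, key=residual)


# Simulate a noiseless "photograph" of the wall for a random hidden scene.
rng = np.random.default_rng(0)
f_true = rng.uniform(0.2, 1.0, size=20)
A_true = light_transport(200, 20, occ_center=0.5, occ_halfwidth=0.1)
y = A_true @ f_true

# Jointly estimate the occluder position (grid search) and the scene (ridge).
candidates = [0.3, 0.4, 0.5, 0.6, 0.7]
c_hat = estimate_occluder(y, candidates, n_scene=20, occ_halfwidth=0.1)
f_hat = reconstruct(light_transport(200, 20, c_hat, 0.1), y)
print("estimated occluder centre:", c_hat)
print("relative scene error:",
      np.linalg.norm(f_hat - f_true) / np.linalg.norm(f_true))
```

Without an occluder, every column of `A` would be a smooth falloff profile and the inversion would be far more poorly conditioned; the sharp zero pattern introduced by the occluder is what makes the columns distinguishable in this toy setting.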


Data availability

Raw data captured with our digital camera during the experiments presented here are available on GitHub at https://github.com/Computational-Periscopy/Ordinary-Camera.

References

Kirmani, A., Hutchison, T., Davis, J. & Raskar, R. Looking around the corner using transient imaging. In Proc. 2009 IEEE 12th Int. Conf. Computer Vision 159–166 (IEEE, 2009).

Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 745 (2012).

Gupta, O., Willwacher, T., Velten, A., Veeraraghavan, A. & Raskar, R. Reconstruction of hidden 3D shapes using diffuse reflections. Opt. Express 20, 19096–19108 (2012).

Xu, K. et al. Image contrast model of non-line-of-sight imaging based on laser range-gated imaging. Opt. Eng. 53, 061610 (2013).

Laurenzis, M. & Velten, A. Nonline-of-sight laser gated viewing of scattered photons. Opt. Eng. 53, 023102 (2014).

Buttafava, M., Zeman, J., Tosi, A., Eliceiri, K. & Velten, A. Non-line-of-sight imaging using a time-gated single photon avalanche diode. Opt. Express 23, 20997–21011 (2015).

Gariepy, G., Tonolini, F., Henderson, R., Leach, J. & Faccio, D. Detection and tracking of moving objects hidden from view. Nat. Photon. 10, 23–26 (2016).

Klein, J., Laurenzis, M. & Hullin, M. Transient imaging for real-time tracking around a corner. In Proc. SPIE Electro-Optical Remote Sensing X 998802 (International Society for Optics and Photonics, 2016).

Chan, S., Warburton, R. E., Gariepy, G., Leach, J. & Faccio, D. Non-line-of-sight tracking of people at long range. Opt. Express 25, 10109–10117 (2017).

Tsai, C.-y., Kutulakos, K. N., Narasimhan, S. G. & Sankaranarayanan, A. C. The geometry of first-returning photons for non-line-of-sight imaging. In Proc. 2017 IEEE Conf. Computer Vision and Pattern Recognition 7216–7224 (IEEE, 2017).

Heide, F. et al. Non-line-of-sight imaging with partial occluders and surface normals. Preprint at https://arxiv.org/abs/1711.07134 (2018).

O’Toole, M., Lindell, D. B. & Wetzstein, G. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 338–341 (2018).

Kirmani, A., Jeelani, H., Montazerhodjat, V. & Goyal, V. K. Diffuse imaging: creating optical images with unfocused time-resolved illumination and sensing. IEEE Signal Process. Lett. 19, 31–34 (2012).

Heide, F., Hullin, M. B., Gregson, J. & Heidrich, W. Low-budget transient imaging using photonic mixer devices. ACM Trans. Graph. 32, 45 (2013).

Heide, F., Xiao, L., Heidrich, W. & Hullin, M. B. Diffuse mirrors: 3D reconstruction from diffuse indirect illumination using inexpensive time-of-flight sensors. In Proc. 2014 IEEE Conf. Computer Vision and Pattern Recognition 3222–3229 (IEEE, 2014).

Kadambi, A., Zhao, H., Shi, B. & Raskar, R. Occluded imaging with time-of-flight sensors. ACM Trans. Graph. 35, 15 (2016).

Pawlikowska, A. M., Halimi, A., Lamb, R. A. & Buller, G. S. Single-photon three-dimensional imaging at up to 10 kilometers range. Opt. Express 25, 11919–11931 (2017).

Kirmani, A. et al. First-photon imaging. Science 343, 58–61 (2014).

Shin, D., Kirmani, A., Goyal, V. K. & Shapiro, J. H. Photon-efficient computational 3D and reflectivity imaging with single-photon detectors. IEEE Trans. Comput. Imaging 1, 112–125 (2015).

Altmann, Y., Ren, X., McCarthy, A., Buller, G. S. & McLaughlin, S. Lidar waveform-based analysis of depth images constructed using sparse single-photon data. IEEE Trans. Image Process. 25, 1935–1946 (2016).

Rapp, J. & Goyal, V. K. A few photons among many: unmixing signal and noise for photon-efficient active imaging. IEEE Trans. Comput. Imaging 3, 445–459 (2017).

Pediredla, A. K., Buttafava, M., Tosi, A., Cossairt, O. & Veeraraghavan, A. Reconstructing rooms using photon echoes: a plane based model and reconstruction algorithm for looking around the corner. In Proc. 2017 IEEE Int. Conf. Computational Photography 1–12 (IEEE, 2017).

Pandharkar, R. et al. Estimating motion and size of moving non-line-of-sight objects in cluttered environments. In Proc. 2011 IEEE Conf. Computer Vision and Pattern Recognition 265–272 (IEEE, 2011).

Naik, N., Zhao, S., Velten, A., Raskar, R. & Bala, K. Single view reflectance capture using multiplexed scattering and time-of-flight imaging. ACM Trans. Graph. 30, 171 (2011).

Thrampoulidis, C. et al. Exploiting occlusion in non-line-of-sight active imaging. IEEE Trans. Comput. Imaging 4, 419–431 (2018).

Xu, F. et al. Revealing hidden scenes by photon-efficient occlusion-based opportunistic active imaging. Opt. Express 26, 9945–9962 (2018).

Torralba, A. & Freeman, W. T. Accidental pinhole and pinspeck cameras: revealing the scene outside the picture. Int. J. Comput. Vis. 110, 92–112 (2014).

Bouman, K. L. et al. Turning corners into cameras: principles and methods. In Proc. 23rd IEEE Int. Conf. Computer Vision 2270–2278 (IEEE, 2017).

Baradad, M. et al. Inferring light fields from shadows. In Proc. IEEE Conf. Computer Vision and Pattern Recognition 6267–6275 (IEEE, 2018).

Klein, J., Peters, C., Martín, J., Laurenzis, M. & Hullin, M. B. Tracking objects outside the line of sight using 2D intensity images. Sci. Rep. 6, 32491 (2016).

Kajiya, J. T. The rendering equation. In Proc. 13th Conf. Computer Graphics and Interactive Techniques Vol. 20 143–150 (ACM, 1986).

Beck, A. & Teboulle, M. A fast iterative shrinkage-thresholding algorithm. SIAM J. Imaging Sci. 2, 183–202 (2009).

Vetterli, M., Kovačević, J. & Goyal, V. K. Foundations of Signal Processing (Cambridge Univ. Press, Cambridge, 2014).

Golub, G. H. & Van Loan, C. F. Matrix Computations 3rd edn (Johns Hopkins Univ. Press, Baltimore, 1989).

Bednar, J. B. & Watt, T. L. Alpha-trimmed means and their relationship to median filters. IEEE Trans. Acoust. Speech Signal Process. 32, 145–153 (1984).

Acknowledgements

We thank F. Durand, W. T. Freeman, Y. Ma, J. Rapp, J. H. Shapiro, A. Torralba, F. N. C. Wong and G. W. Wornell for discussions. This work was supported by the Defense Advanced Research Projects Agency (DARPA) REVEAL Program contract number HR0011-16-C-0030.

Reviewer information

Nature thanks M. Laurenzis, M. O’Toole and A. Velten for their contribution to the peer review of this work.

Author information

Contributions

V.K.G. conceptualized the project, obtained funding and supervised the research. C.S. and J.M.-B. developed the methodology, performed the experiments, wrote the software and validated the results. C.S. produced the visualizations. J.M.-B. wrote the original draft. C.S., J.M.-B. and V.K.G. reviewed and edited the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

This file contains supplementary methods, supplementary text, supplementary references (36–45), figures S1–S19, tables S1 and S2, and a caption for the supplementary video.

Video 1

Supplementary Video describes the experimental setup, the computational field of view of the system, and each of the steps involved in the computational estimation of the non-line-of-sight occluder position and scene. Also shown is a moving scene measured at 1 frame per second and reconstructed frame-by-frame, without exploiting assumptions of temporal continuity of the scene.

About this article

Cite this article

Saunders, C., Murray-Bruce, J. & Goyal, V.K. Computational periscopy with an ordinary digital camera. Nature 565, 472–475 (2019). https://doi.org/10.1038/s41586-018-0868-6

DOI: https://doi.org/10.1038/s41586-018-0868-6

This article is cited by

- Two-edge-resolved three-dimensional non-line-of-sight imaging with an ordinary camera. Nature Communications (2024)

- Research Advances on Non-Line-of-Sight Imaging Technology. Journal of Shanghai Jiaotong University (Science) (2024)

- Attention-based network for passive non-light-of-sight reconstruction in complex scenes. The Visual Computer (2024)

- Non-line-of-sight snapshots and background mapping with an active corner camera. Nature Communications (2023)

- Learning diffractive optical communication around arbitrary opaque occlusions. Nature Communications (2023)
