Abstract

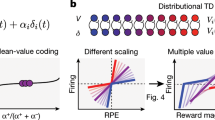

Midbrain dopamine signals are widely thought to report reward prediction errors that drive learning in the basal ganglia. However, dopamine has also been implicated in various probabilistic computations, such as encoding uncertainty and controlling exploration. Here, we show how these different facets of dopamine signalling can be brought together under a common reinforcement learning framework. The key idea is that multiple sources of uncertainty impinge on reinforcement learning computations: uncertainty about the state of the environment, the parameters of the value function and the optimal action policy. Each of these sources plays a distinct role in the prefrontal cortex–basal ganglia circuit for reinforcement learning and is ultimately reflected in dopamine activity. The view that dopamine plays a central role in the encoding and updating of beliefs brings the classical prediction error theory into alignment with more recent theories of Bayesian reinforcement learning.

Author contributions

The authors contributed equally to all aspects of the article.

Reviewer information

Nature Reviews Neuroscience thanks J. Pearson and the other, anonymous, reviewers for their contribution to the peer review of this work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Glossary

- Active inference: The hypothesis that biological agents will take actions to reduce expected surprise.

- Free-energy principle: The hypothesis that the objective of brain function is to minimize expected (average) surprise.

- Posterior probability distribution: The conditional probability of latent variables (for example, hidden states) conditional on observed variables (for example, sensory data).

- Sufficient statistic: A function of a data sample that completely summarizes the information contained in the data about the parameters of a probability distribution.

- Value function: The mapping from states to long-term expected future rewards (typically discounted to reflect a preference for sooner over later rewards).
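
As a rough formal gloss on the value function and posterior entries in this glossary, the definitions can be written in standard textbook notation. This is a sketch, not notation taken from the article: the symbols $s$ (state), $r_t$ (reward at time $t$), $\gamma$ (discount factor), $z$ (latent variables) and $x$ (observed variables) are assumptions introduced here for illustration.

```latex
% Value function: expected discounted sum of future rewards starting from state s,
% with an assumed discount factor 0 <= \gamma < 1.
V(s) = \mathbb{E}\left[ \sum_{t=0}^{\infty} \gamma^{t} r_{t} \,\middle|\, s_{0} = s \right]

% Posterior over (discrete) latent variables z given observations x, via Bayes' rule.
p(z \mid x) = \frac{p(x \mid z)\, p(z)}{\sum_{z'} p(x \mid z')\, p(z')}
```

In the first expression, a smaller $\gamma$ weights sooner rewards more heavily, which corresponds to the glossary's "preference for sooner over later rewards".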

About this article

Cite this article

Gershman, S.J., Uchida, N. Believing in dopamine. Nat Rev Neurosci 20, 703–714 (2019). https://doi.org/10.1038/s41583-019-0220-7

DOI: https://doi.org/10.1038/s41583-019-0220-7
