Abstract

Wearable devices provide an alternative pathway to clinical diagnostics by exploiting various physical, chemical and biological sensors to mine physiological (biophysical and/or biochemical) information in real time (preferably, continuously) and in a non-invasive or minimally invasive manner. These sensors can be worn in the form of glasses, jewellery, face masks, wristwatches, fitness bands, tattoo-like devices, bandages or other patches, and textiles. Wearables such as smartwatches have already proved their capability for the early detection and monitoring of the progression and treatment of various diseases, such as COVID-19 and Parkinson disease, through biophysical signals. Next-generation wearable sensors that enable the multimodal and/or multiplexed measurement of physical parameters and biochemical markers in real time and continuously could be a transformative technology for diagnostics, allowing for high-resolution and time-resolved historical recording of the health status of an individual. In this Review, we examine the building blocks of such wearable sensors, including the substrate materials, sensing mechanisms, power modules and decision-making units, by reflecting on the recent developments in the materials, engineering and data science of these components. Finally, we synthesize current trends in the field to provide predictions for the future trajectory of wearable sensors.

Introduction

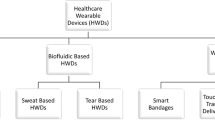

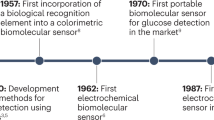

Wearable sensors are integrated analytical devices that combine typical characteristics of point-of-care systems with mobile connectivity in autonomously operating, self-contained units. Such devices allow for the continuous monitoring of the biometrics of an individual in a non-invasive or minimally invasive manner, enabling the detection of small physiological changes from baseline values over time1. Wearables have existed for decades (Fig. 1a); for example, the Holter monitor, a medical sensor used for measuring the electrical activity of the heart, dates back to the 1960s2. Although the total number of components might vary depending on the specific application, the common building blocks (Fig. 1b) of wearable devices are the substrate and electrode materials, sensing units (elements for interfacing, sampling, biorecognition, signal transduction and amplification), decision-making units (components for data collection, processing and transmission) and power units3.

a | Major commercial and research-stage milestones in the development of wearable devices for health-care monitoring10,12,13,28,99,244,283,284,285,286,287,288. Advances in telecommunication technologies, materials science, bioengineering, electronics and data analysis, together with the rapidly increasing interest in monitoring health and well-being, have been the primary drivers of innovation in modern wearable sensors148. More recently, the considerable reductions in cost have enabled the penetration of modern wearable sensors into many segments of the (consumer) population and geographical regions of the world, unlocking continuous monitoring at a scale never seen before. In addition, advances in fabrication methods have enabled greater sophistication at increasingly smaller dimensions, enabling sensor platforms to reach scales amenable to integration into personal technologies. b | Building blocks of wearable devices, including the substrate and electrode materials and the components of the sensing, decision-making and power units. ISF, interstitial fluid. Panel b (on-tooth sensor) adapted from ref.285, Springer Nature Limited.

Modern wearables can perform high-quality measurements comparable to those of regulated medical instruments. Hence, the divide between consumer and medical wearable devices is increasingly blurred. First-generation wearables, in the form of watches, shoes or headsets, have mainly focused on biophysical monitoring by tracking the physical activity, heart rate or body temperature of an individual1,4,5. With the wide adoption and success of first-generation wearables, the focus has been slowly shifting towards non-invasive or minimally invasive biochemical and multimodal monitoring, which is the next step in realizing truly individualized health care6,7,8. These second-generation wearables encompass form factors such as on-skin patches, tattoos, tooth-mounted films, contact lenses and textiles, as well as more invasive microneedles and injectable devices9,10,11. A key characteristic of second-generation wearables is the use of biofluids, whereby biorecognition elements are used to convert the presence of a specific analyte into a detectable signal. Most of these examples are laboratory prototypes, but there are some commercial exceptions (including the FreeStyle Libre glucose monitoring system and the Gx Sweat Patch)1. Wearable biochemical and biophysical sensors have been used to detect and manage diseases1,12,13,14,15 and for wellness applications16,17,18. The use of wearable devices, however, extends beyond human-centred health and well-being as their applications have also proliferated in animal health monitoring for the pet and animal husbandry markets19.

This Review details the recent developments in the field of wearable sensors with a particular focus on the sensing, decision-making and power units to establish a framework for the design and implementation of wearable devices. As we examine the various building blocks of wearable sensors, we also analyse the current trends, discuss the challenges and provide recommendations to establish a vision for how this field might evolve in the next decade to transform health care.

Assembling wearable devices

As first-generation wearables that primarily use physical sensors are mature, with many commercial examples, we place more emphasis on the ongoing development of second-generation wearables by highlighting key aspects of the sampled biofluids as well as the biorecognition elements used for analyte sensing.

Substrate materials

The unique operating constraints of a wearable sensor require the careful selection of substrate materials with key properties. The materials in a device must have not only the properties necessary for the functioning of the device components but also the range of mechanical properties requisite for any wearable garment or accessory: flexibility, elasticity and toughness. We focus on the four most widely used classes in wearables development: natural materials, synthetic polymers, hydrogels and inorganic materials (Table 1).

Natural materials have been used in making clothing for millennia and are thus the foundational wearable material class, providing a combination of flexibility and mechanical robustness. These materials are derived from biological sources20 and include cotton, wool, silk, hemp, linen and chitin. One benefit of using natural materials in wearables is that the attendant fabrication methods, such as weaving and knitting21, for creating textiles with the mechanical properties required for clothing, have been extensively explored. Furthermore, these materials have already been selected to have the necessary mechanical strength, flexibility and user comfort required of a wearable substrate. Owing to the extensive supply chains of the textile industry, there is a considerable variety of materials and the cost is low. Being biological in nature, they are biocompatible and sustainable, which are key advantages for wearable materials. However, natural materials inherently lack certain desirable physical properties, including conductivity and optical attributes that are of interest for smart wearable components, although there are ongoing attempts to modify them to acquire these properties22,23. Owing to this limitation, natural materials are often used as a substrate for wearables on which other functional materials are incorporated. Incorporation can occur through alteration of the material itself before higher-order assembly, as illustrated by doping of a cotton thread with nanotubes24, coating of wool fibres with silver nanowires25, decorating nanocellulose with optically active nanoparticles26 or modifying silk fabrics with graphene27. Alternatively, natural materials can be combined with other materials during the fabrication process to create a mosaic material, such as the incorporation of optical fibres into the weft of a fabric for probing material-integrated reactions28 or large-scale digital knitting of multi-material textiles29.

Synthetic polymers are the functional materials most widely used in creating wearable sensors, owing to two factors. First, polymers have a wide range of fabrication methods available to them, including methods such as weaving that have traditionally been the province of natural materials. Synthetic polymers can also be fabricated using scalable methods such as moulding, extrusion, lamination, deposition, photolithography, milling or newer additive techniques such as 3D printing30,31. This versatility readily enables access to diverse form factors for creating wearable sensor components with the desired mechanical properties, such as stretchable substrates and textiles or layer-by-layer assembled semi-flexible circuits. Second, the properties of polymeric systems can be modified through an expansive range of physical and chemical functionalizations. For decades, polymers have been used to tune the mechanical and/or hydrophobic properties of commercial fabrics to achieve tough, flexible, waterproof and/or breathable clothing. There are industrial polymers with inherent strength, high heat resistance, conductivity and optical activity, among other properties. With such a varied palette of functional materials, complex devices composed of synthetic polymers enable the development of a panoply of flexible and shape-conforming circuits32, sensors33, energy harvesters34, waveguides35, light-emitting displays36 and antennas37 for wearables. Although there are synthetic polymers that inherently possess the aforementioned properties, most wearable devices have used polymer–inorganic composites, in which the synthetic polymer serves as the bulk flexible substrate, to achieve the greatest functionality. Wearable sensors can thus consist of multiple ultrathin layers of different synthetic polymer and composite materials assembled in a complex but low-cost manner.
Many polymers are inert and biocompatible, including polydimethylsiloxane (PDMS), polylactic acid, polyvinylidene fluoride, polytetrafluoroethylene, polyimide and silicone, whereas others may not be skin safe for long-term direct exposure and would require careful determination of potential hazards. Most wearable devices fabricated from synthetic polymers are designed as single use or with a limited lifetime, which, combined with the difficulty in recycling advanced polymers, makes polymers poorly sustainable. In response, researchers are pushing the frontiers of green polymer chemistry to create a new generation of soft functional materials38.

Although hydrogels can be considered a subset of natural materials or synthetic polymers, their distinctive properties and unique applications in wearables warrant a discussion of them as a separate class of materials. The development of hydrogels has largely occurred in the biomedical engineering field owing to their high biocompatibility39, with a focus on their use as implantable materials or ex vivo cellular scaffolds. Hydrogels are soft, deformable and transparent materials, and their hydrophilic properties and porous networks allow for a high water content that makes them especially biologically friendly. Many natural and synthetic polymers can be used to form hydrogels, including polyethylene glycol, polyacrylamide, alginate, polyvinyl alcohol and gelatine. Facile polymerization processes enable moulding, additive manufacturing and even in situ formation. The porous nature of hydrogels provides a scaffold for creating soft electrodes40, microneedle arrays41, wicking structures for the collection of bodily fluids42,43, or even transparent batteries44. For wearables, the biocompatibility of hydrogels makes them suitable for applications that interface with the skin, wounds or other parts of the body. Hydrogels have been used in wearable devices for mechanical and chemical sensing45,46, as a depot for drug delivery47 and in the maintenance of cell-based living sensors48. The properties of zwitterionic hydrogels make them ideal materials for use as protective barriers to prevent biofouling, which can occur from interactions between a sensor and complex biofluids49. Nevertheless, although there are exceptions50, many hydrogels lack the desired mechanical properties, such as flexibility and toughness, for continuous robust operation. Moreover, hydrogels tend to cost more than other polymer systems, which has confined their use to specialty applications.

The last material class we consider is inorganic materials, which encompasses metals, semiconductors and nanomaterials. These materials have desirable properties, such as high conductivity, that are not achievable with other material classes. In addition, many nanomaterials have outstanding mechanical characteristics in terms of the flexibility and elasticity required for wearable devices. With the increasing interest in flexible electronics, there has been rapid development of advanced fabrication techniques for these materials, such as printing of metal51 or nanomaterial inks52 in serpentine patterns53 or even weaving of metal threads54, that allow for their incorporation into deformable wearable substrates. The use of this class is indispensable for wearables in which the general approach is the miniaturization and conversion of traditional electrical devices (namely, circuits, sensors55, antennas56 and integrated power systems57) into a wearable format. Integration of inorganic materials such as metals or semiconductors can be achieved through layer-by-layer strategies, with a popular approach being spin coating of thin metal foils onto a polymeric substrate such as polyimide or PDMS to create flexible, complex, multilayer electronics, such as ultrasound transducers58. Conductive metal–synthetic polymer blends as inks have even been used to assemble highly conformal ultrathin devices directly on the skin59. As the most electrically conductive metal, silver has been used extensively in wearable circuits42, although other conductive and semiconductive metals have been explored, including copper43, titanium carbide60 and various alloys61. Graphene, owing to its excellent conductive and mechanical properties, has been used to realize wearable strain sensors, printed circuit paths, transistors62 and capacitors63. 
There are also accessible protocols for the routine functionalization of graphene to develop highly sensitive and lightweight sensors for measuring proteins64, metabolites65 and gases66. Furthermore, thin films of graphene are transparent and extremely flexible, allowing for lightweight and ultrathin wearables such as electronic tattoos67. Cheaper inorganic materials, such as carbon fibres, have also been used to create durable wearable motion sensors68. The incorporation of inorganic materials in wearables is typically limited to key functional components, which limits the costs. The biocompatibility of inorganic materials is one area of concern, with nanomaterials in particular posing potential biohazard risks69. Hence, these materials are typically restricted to parts of wearables that are not intimately in contact with the user. Another consideration is the poor sustainability of inorganic materials, as their minute presence in complex wearable devices does not make extraction and recycling feasible.

With such a diverse assortment of available materials, several factors must be considered when contemplating the design of a wearable device, including the specific application of interest, the desired level of performance, the target form factor, ease of fabrication during prototyping and scale-up manufacturing, cost and sustainability.

Sensing unit

The core of the sensing unit of second-generation wearables is the sampling of the biofluid that contains the analyte. The molecular interaction between the target and biorecognition element is then converted to a sensor output and amplified with the signal transduction and amplification unit.

Biofluids and sampling

In this section, we review different types of biofluid targeted by second-generation wearables, with a focus on the considerations and challenges for biosampling with wearable sensors, depending on the analyte of interest, target application and other device components (Table 2).

Interstitial fluid (ISF) fills the extracellular space between cells and tissue structures. This bodily fluid mainly seeps from capillaries into tissues and then drains through the lymphatic system back to vascular circulation. Thus, ISF can be considered a filtered cell-free fraction of blood plasma. ISF contains similar proteomic and metabolomic profiles to blood, and is thus a rich source of biomarkers. Moreover, accessing ISF is considerably less invasive than drawing blood, making it an ideal biofluid for wearable sensing. In addition, ISF might contain disease biomarkers that are absent in blood70.

There are two main approaches for wearable ISF sampling: microneedles and iontophoretic extraction. Both sampling technologies are relatively mature, with decades of development and various commercial products using them. Microneedles consist of a single microscopic structure or an array of such structures, usually fabricated from biocompatible synthetic polymers or hydrogels71, that are designed to puncture through the stratum corneum and epidermis to access the dermis72. Initially developed for drug delivery, microneedles have become a common approach for minimally invasive biofluid sampling in wearables. The architecture of microneedles can vary. Hollow microneedles sample ISF by extraction, whereas microneedles constructed of a porous material absorb the surrounding fluid. Alternatively, the microneedles can serve as solid penetration structures, with the analysis occurring on the surface of the needles by optical or electrochemical means73. Depending on the application, microneedle devices can be designed for continual ISF sampling, although the total time of use is typically hours to a day. Many of the challenges of microneedle patches relate to the optimization of mechanical strength to prevent buckling or fracturing of the microneedles, skin resealing of the puncture wounds after removal of the arrays and local pain responses during use74.

Iontophoresis is the application of a low-voltage electric current to a region of the skin, which causes the electromotive migration of charged molecules75. For wearable sensing, the electrode arrangement is adjusted to extract ISF out of the body and into an external sensor, which is known as reverse iontophoresis76. This process is minimally invasive, making it a convenient ISF sampling method. One of the first commercial wearable sensing devices, a wrist-worn glucose monitor, used iontophoresis to extract ISF for analysis77. More recent designs of iontophoresis-based wearables are fabricated by layer-by-layer printing to create extremely thin tattoo sensors78. The on-demand sampling by electronic circuit control makes iontophoresis an ideal approach for exploring continuous wearable sensing platforms. Moreover, the electronic nature of this method enables integration of the sensing and sampling functions into the same electrode system79. One challenge for prolonged continuous use is that the amount of fluid extracted through reverse iontophoresis is limited by the amount of current applied to the skin, with higher currents causing irritation and pain. An alternative magnetohydrodynamic approach has been used to extract ISF in a non-invasive manner, allowing for faster extraction with reduced irritation when compared with reverse iontophoresis80.

Most sensors that target sweat have focused on metabolite detection for fitness applications, with electrolytes, nutrients and lactate being common targets81,82. Beyond personal fitness, sweat has been explored in personal medical monitoring applications for glucose83, cortisol84 and alcohol85. Sweat might also provide a useful avenue for wearable monitoring of pathogenic states such as viral infections86, cystic fibrosis87 or chronic inflammatory diseases, including gout8 or inflammatory bowel disease88. Neuropeptide biomarkers in sweat can be used as potential assessors of neurological disorders89. Although it is a highly convenient biofluid, analysis of sweat has several sampling challenges (Table 2).

Sweat can be collected by capillary wicking into microfluidic pores fabricated into a synthetic polymer membrane that is directly in contact with the skin surface90,91,92,93. Although this approach is straightforward to implement, it suffers from low sample volumes. An alternative strategy involves active sweat induction and uses iontophoresis11,94 to locally deliver sweat-stimulating compounds; the sweat sample is then extracted by reverse iontophoresis. Using reverse iontophoresis has the added advantage of coupling sample collection of sweat and ISF into a single system to broaden the available biomarkers and enable cross-correlation of different biofluids95. These active strategies share the same challenges as described for ISF extraction.

A healthy person respires at a resting rate of 12–16 breaths per minute, with each breath containing a distribution of aerosols of different sizes. These breath aerosols are generated by shear forces in the lower respiratory system and can greatly increase during activities such as talking, coughing or sneezing96. These aerosols act as transmission vectors and are, therefore, a notable source of respiratory pathogen biomarkers97,98. Wearable sampling and analysis of breath aerosols can be non-invasively achieved using a face mask. This concept was demonstrated for the detection of COVID-19 viral nucleic acids in aerosols through the use of face mask-integrated biosensors28. Another example is the detection of hydrogen peroxide, a biomarker for respiratory illnesses, using a face mask-integrated electrochemical sensor99. Given the range of diseases that can be assessed using breath components and the increasing use of face masks for limiting the spread of respiratory diseases, this wearable format presents a relatively underexplored modality for widespread health monitoring. For aerosol-based analyses, optimizing the automated collection of deposited or condensed breath samples and integration into a biosensor (which typically operates in an aqueous environment) is a technical challenge. In addition, more than 3,500 volatile organic compounds are expelled during breathing3. Miniaturization of volatile organic compound sensors for face mask integration is a barrier that, if surmounted, would enable wearable monitoring of this important biomarker source.

Other biofluids of interest for wearable sensors, namely, saliva, tear fluid and urine, are discussed in the Supplementary information and their characteristics are compared in Table 2.

Signal transduction and amplification

For wearable sensors, the method of signal transduction must provide a stream of data over a period (days, weeks or longer) for continuous monitoring, which has limited the types of sensing method that can be used. The preferred detection modalities in wearable sensors have therefore been electromechanical, electrical, optical and electrochemical techniques for quantifying biochemical and biophysical signals6,100,101. These signal transduction methods can be implemented with low-cost materials and electronics, are low power and can directly access the signal under study. Whereas electrical and electromechanical transduction have been mostly used for the continuous acquisition of biophysical signals, such as electrocardiography, motion or posture analysis, and breathing (first-generation wearables), optical and electrochemical measurements are more widely used for biochemical analysis (second-generation devices). Biocompatibility constraints of the device materials, poor signal-to-noise ratio (SNR) and complex integration of the transduction elements with other structures or electronics within the wearable have so far limited the number of detectable biologically relevant analytes, resulting in the relatively slow development of wearable sensors for biochemical analysis1,3,4. Transduction modes can also be combined for multimodal analysis to improve the performance or range of capabilities available for continuous physiological monitoring8,10,102,103.

Electromechanical sensors transduce mechanical deformation or movement into electrical signals, mostly through changes in capacitance or resistance of the sensing structures under stimulus. Microelectromechanical systems accelerometers, popularized by the first iPhone, are probably the most widely used electromechanical transducers in wearable sensing104. These sensors, microfabricated on a silicon substrate in a cleanroom, can be produced at low cost and in high volumes, with integration of the interface electronics in the same package, which makes them easy to use by non-specialists. In addition to commercially available microelectromechanical systems accelerometers, stretchable electromechanical transducers made from a composite of a polymer matrix with nanoscale or microscale inorganic fillers105,106,107,108,109,110,111,112 have also been developed by academic laboratories. These materials conform to the body or skin when worn and typically sense strain due to the changes in the conduction paths within the matrix of the material. Although affordable and easy to manufacture through printing113,114 or moulding115,116, composite transducers do not contain integrated interface electronics. These transducers are also susceptible to drift and hysteresis, mostly caused by the polymer matrix, and hence require frequent calibration. An emerging area within electromechanical transducers involves acoustic and ultrasonic sensors. These sensors rely on piezoelectric and composite materials with skin-like mechanical properties to convert subtle acoustic vibrations produced by vasoconstriction and vasodilation events into electrical signals for the continuous monitoring of cardiovascular events19,58,117. Analogue and digital signal processing methods are often applied to the captured waveforms to remove motion artefacts and improve the SNR; multisensor configurations can also be used for active noise-cancelling and localization by beamforming118,119. 
Other emerging approaches are focused on the use of fabrics such as cotton and silk, both as substrates and transducers in pressure-sensitive wearables. These devices are not required to be tight fitting to the body, enabling their integration into loose garments, which are more comfortable to wear on a regular basis. These fabric transducers can detect pressures in the range 10–100 kPa and can be used to monitor physiological pulse, respiration and phonation120,121,122.
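
The resistive strain transduction described above can be illustrated with a short Python sketch. It assumes a linear gauge-factor model, strain = (ΔR/R0)/GF, which is a common simplification and not a model taken from the cited devices; the function names, the gauge factor and the resistance values are hypothetical. Re-estimating the baseline resistance from readings taken at rest is shown as one simple way to handle the drift noted for composite transducers.

```python
from statistics import mean


def strain_from_resistance(r_ohm, r0_ohm, gauge_factor):
    """Estimate strain from resistance, assuming strain = (dR / R0) / GF."""
    if r0_ohm <= 0 or gauge_factor == 0:
        raise ValueError("r0_ohm must be positive and gauge_factor non-zero")
    return (r_ohm - r0_ohm) / r0_ohm / gauge_factor


def rebaseline(rest_readings_ohm):
    """Re-estimate the unstrained resistance R0 from readings taken at rest.

    This is a crude form of recalibration that compensates for slow drift.
    """
    if not rest_readings_ohm:
        raise ValueError("need at least one rest reading")
    return mean(rest_readings_ohm)


# Hypothetical composite sensor: nominal R0 near 1000 ohm, gauge factor of 5.
r0 = rebaseline([1001.0, 999.0, 1000.0])
strain = strain_from_resistance(1100.0, r0, gauge_factor=5.0)
print(f"R0 = {r0:.1f} ohm, estimated strain = {strain:.3f}")
```

A linear model of this kind ignores hysteresis, so a real composite transducer would likely need separate calibration curves for loading and unloading.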

Electrical transducers are used in wearable sensors to monitor biopotentials, such as electroencephalography123, electrocardiography124,125 and electromyography1,126,127. Additional applications include the monitoring of sweat production, skin hydration levels, electrolyte concentrations and respiratory rates128,129. Maintaining a stable, electrically robust and conformal connection between the skin and device is still one of the main challenges in this type of sensor, which generally requires a conductive gel for operation to reduce the electrical impedance at the contact point130. Advanced materials such as ultrathin functionalized hydrogels129 and improved structural layouts100 are some of the solutions for increasing the skin–device conformity and the quality of the signals acquired. These advances have led to the reintroduction of dry electrodes as an option for first-generation wearables. Dry electrodes were initially withdrawn because of skin irritation, noise propensity and high impedance that masked signals; improved combinations of conductive and biocompatible electrode materials (such as poly(3,4-ethylenedioxythiophene):polystyrene sulfonate (PEDOT:PSS) or polyurethane131) and circuit designs59 have since made them a viable alternative to gel electrodes.

Optical transduction includes colorimetric, plasmonic, fluorometric and absorption-based or reflection-based methods for the quantification of both biophysical and biochemical signals28,132,133. Colorimetric wearable sensors can be produced on flexible substrates, are inexpensive and are easily read by the naked eye or with the help of a smartphone camera, but are semi-quantitative at best93,134. Recent improvements towards fully quantitative colorimetric systems include the integration of image analysis and predictive algorithms into smartphone software; for example, the Gx Sweat Patch is the first commercial personalized performance tracking device that provides individual recommendations of hydration based on sweat rate and sodium levels in real time by utilizing such a custom algorithm for predictive colorimetric analysis135. More recent approaches for wearable biochemical sensing have explored methods developed in the field of synthetic biology28. The incorporation of freeze-dried, cell-free synthetic biological circuits into flexible substrates enabled the detection of molecular targets (drugs, metabolites or viruses) in breath and the environment by colorimetry, fluorescence and bioluminescence. Of course, non-colorimetric methods would require additional instrumentation to perform the measurement, increasing the cost and complexity. Optical methods can also be used to non-invasively measure body temperature, heart rate, blood oxygen saturation and respiration132,136,137. These methods exploit the absorption or reflection of light, which is generally produced by a low-cost light-emitting diode, to monitor physiology. Because optical biophysical analysis is inexpensive and non-invasive, it is commonly integrated into commercial wearable consumer electronics138,139. 
High power consumption, the overall size of active systems and the instability of chemical reagents (owing to photobleaching) are some of the critical challenges that limit the expansion of optical wearable sensors4.
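
As an illustration of how a reflected-light trace can yield a biophysical reading, the following Python sketch estimates a pulse rate by counting upward crossings of the mean signal level. It is a minimal sketch under strong assumptions: the trace is synthetic, clean and free of motion artefacts, and the function name `heart_rate_bpm`, the sampling rate and the waveform are hypothetical rather than taken from any commercial device.

```python
import math


def heart_rate_bpm(samples, fs_hz):
    """Estimate pulse rate from upward crossings of the mean level.

    Returns None if fewer than two crossings are found.
    """
    baseline = sum(samples) / len(samples)
    centred = [s - baseline for s in samples]
    # Index of each sample at which the trace rises through the baseline.
    crossings = [
        i for i in range(1, len(centred))
        if centred[i - 1] < 0.0 <= centred[i]
    ]
    if len(crossings) < 2:
        return None
    beats = len(crossings) - 1
    duration_s = (crossings[-1] - crossings[0]) / fs_hz
    return 60.0 * beats / duration_s


# Synthetic trace: 1.25 Hz pulsation (75 beats per minute) on a DC offset,
# sampled at 50 Hz for 8 s. All values are illustrative.
fs = 50.0
trace = [2.0 + 0.1 * math.sin(2 * math.pi * 1.25 * n / fs + 0.7)
         for n in range(400)]
print(heart_rate_bpm(trace, fs))
```

Real optical traces would normally be band-pass filtered first, because baseline wander and motion can introduce spurious crossings.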

Electrochemical transducers relate an electrical signal (current, potential or conductance) obtained from a biofluid sample to the analyte concentration in it. On the basis of the electrical parameter evaluated at the electrode–biofluid interface, electrochemical transducers can be divided into four categories: potentiometric (measuring potential against a reference), amperometric (measuring current at a constant potential), voltammetric (measuring current over a potential scan) or conductometric (measuring the capacity to transport electric current)4,140. Given their simplicity and direct output, potentiometric and amperometric systems are the dominant electrochemical modalities used in wearable systems but are still in their infancy because of the difficulty in implementing the regeneration chemistries that are necessary to perform continuous measurements in biofluids. Other factors hindering their transition are the variability in analyte diffusion in biofluids and biofouling of sensing surfaces4. Advances in electrochemical wearable sensors, including multilayered reference electrodes with supporting electrolytes141, biocompatible coatings and microfluidic integration for uniform sampling or biofluid transport142, have enabled early demonstrations of the continuous measurement of biochemical and biophysical signals in sweat, breath, tears and saliva6,99,142,143,144.
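
To make the potentiometric and amperometric read-outs concrete, the sketch below converts a measured potential or current into an analyte level. It assumes an ideal Nernstian electrode response, E = E0 + slope × log10(a), and a linear amperometric calibration; neither model is taken from the devices cited here. The function names, the calibration intercept `e0_mv` and the sensitivity values are hypothetical.

```python
import math

R = 8.314462618      # molar gas constant, J mol^-1 K^-1
F = 96485.33212      # Faraday constant, C mol^-1


def nernst_slope_mv(charge, temp_c=25.0):
    """Ideal Nernstian slope in mV per decade of ion activity."""
    temp_k = temp_c + 273.15
    return 1000.0 * R * temp_k * math.log(10) / (charge * F)


def activity_from_potential(e_mv, e0_mv, charge, temp_c=25.0):
    """Invert E = E0 + slope * log10(a) for a potentiometric electrode."""
    return 10 ** ((e_mv - e0_mv) / nernst_slope_mv(charge, temp_c))


def concentration_from_current(i_na, sensitivity_na_per_mm, background_na=0.0):
    """Invert a linear amperometric calibration i = background + sensitivity * c."""
    return (i_na - background_na) / sensitivity_na_per_mm


# Hypothetical monovalent cation electrode with an assumed intercept of 100 mV.
slope = nernst_slope_mv(charge=1)
print(f"slope at 25 C: {slope:.2f} mV per decade")
print(activity_from_potential(e_mv=100.0 - 2 * slope, e0_mv=100.0, charge=1))

# Hypothetical enzymatic sensor: 10 nA per mM sensitivity, 5 nA background.
print(concentration_from_current(55.0, 10.0, background_na=5.0))
```

Because the Nernstian slope depends on temperature, a skin-worn potentiometric sensor would plausibly need a temperature reading alongside the potential.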

The analogue signals generated by the transducer are digitized using an analogue-to-digital converter for digital processing, communication and storage. Conversion of the analogue signals to digital is ‘lossy’ as some of the information contained in the analogue signal is lost during digitization; this is also known as the quantization error. It is therefore crucial to choose the correct analogue-to-digital converter resolution to minimize conversion losses. The sampling frequency must also be greater than twice the highest frequency of the analogue signal being converted to satisfy the Nyquist–Shannon sampling criterion.
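
The two constraints described above, converter resolution and sampling rate, can be illustrated with a minimal Python sketch. It assumes an ideal unipolar converter with a hypothetical reference voltage `v_ref`; the function names and example values are illustrative assumptions and are not taken from any specific device.

```python
def quantization_step(v_ref: float, n_bits: int) -> float:
    """Width of one code bin (one least significant bit) of an ideal ADC."""
    return v_ref / (2 ** n_bits)


def quantize(voltage: float, v_ref: float, n_bits: int) -> float:
    """Return the voltage at the centre of the code bin that contains `voltage`."""
    step = quantization_step(v_ref, n_bits)
    code = int(voltage / step)
    code = max(0, min(code, 2 ** n_bits - 1))  # clamp to the valid code range
    return (code + 0.5) * step


def satisfies_nyquist(sampling_hz: float, highest_signal_hz: float) -> bool:
    """True if the sampling frequency exceeds twice the highest signal frequency."""
    return sampling_hz > 2.0 * highest_signal_hz


# Hypothetical example: a 1.0 V sample digitized against a 3.3 V reference.
for bits in (8, 10, 16):
    error = abs(quantize(1.0, 3.3, bits) - 1.0)
    print(f"{bits}-bit: step = {quantization_step(3.3, bits):.6f} V, error = {error:.6f} V")
```

For an in-range input, the quantization error of this idealized converter never exceeds half a step, so each additional bit of resolution halves the worst-case error.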

To compete with the gold-standard techniques and sensing devices used in clinical analysis, the analytical performance of wearable sensors might require enhancement through signal amplification4,7. Target signal amplification improves the sensitivity and specificity by increasing the SNR. Signal amplification can be accomplished through various strategies, including chemical, electrical and digital approaches. Chemical amplification can be achieved using catalysts, nanoparticles, conductive polymers and/or genetic circuits, which produce a higher output signal or concentration of a detectable analyte4,28,99,100,142. An example of the potential of chemical amplification in wearables is a fully integrated sensor array for perspiration analysis, in which several enzymes and mediators were used to enable the simultaneous monitoring of glucose and lactate as well as sodium and potassium ions10. Electrical amplification can be easily achieved using operational amplifiers or other electronic components, which can be combined with analogue filters to further improve the signal quality10,145. For example, the combination of ultraflexible organic differential amplifiers and post-mismatch compensation of organic thin-film transistor sensors enabled monitoring of weak electrocardiography signals by simultaneously amplifying the target biosignal and reducing the noise, improving the SNR by 200-fold145. The subtraction of signals registered by two sensors closely located on skin119 or the use of analogue or digital improvements (such as impedance bootstrapping or the control of amplifier gain)146 are some approaches adopted to reduce motion artefacts. Digital signal amplification can take the form of digital filters or more advanced machine learning (ML) techniques to improve sensor data quality. 
The additional intelligence provided by digital techniques can establish optimal sample collection times or identify superior sample analysis approaches, while enabling sensitive recognition of disease data patterns, which are all key factors for early diagnosis5,147,148.
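
Two of the strategies mentioned above, subtracting the signal of a nearby reference sensor and applying a simple digital filter, can be sketched as follows. This is a toy illustration under strong assumptions: both sensors are taken to record exactly the same motion artefact, and a moving average stands in for the digital filter. The function names and the synthetic signals are hypothetical.

```python
import math


def subtract_reference(primary, reference):
    """Remove the component shared by two closely located sensors."""
    return [p - r for p, r in zip(primary, reference)]


def moving_average(samples, window):
    """Smooth a sequence with a sliding mean; output has len(samples) - window + 1 points."""
    return [
        sum(samples[i:i + window]) / window
        for i in range(len(samples) - window + 1)
    ]


# Synthetic data: a small periodic biosignal plus a large, slow motion artefact.
signal = [0.1 * math.sin(2 * math.pi * k / 20) for k in range(100)]
artefact = [1.0 * math.sin(2 * math.pi * k / 100) for k in range(100)]
primary = [s + a for s, a in zip(signal, artefact)]  # sensor over the target site
reference = artefact                                  # nearby sensor, artefact only

cleaned = subtract_reference(primary, reference)
smoothed = moving_average(cleaned, window=3)
```

In practice the two sensors never see an identical artefact, so the subtraction only attenuates the shared component rather than removing it.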

Biorecognition elements

Biorecognition elements mediate the key molecular interaction that links the presence of a biomarker to a sensor output and are key elements of second-generation wearable sensors. These components directly participate in sensitive and specific detection of a target analyte, but must also be compatible with the desired operating mode of the sensor and the target application. Biorecognition elements can be naturally occurring or synthetically selected proteins, peptides, nucleic acids or a combination thereof (Table 3). As with many of the other components of wearable sensors, biorecognition elements have been directly adapted from laboratory-based diagnostic assays. All elements share similar challenges for their adaptation into wearable sensors: operation in a flexible format at room or skin surface temperature, chemistries for immobilization onto a flexible electrode, automation of biofluid sampling and sensor exposure, prevention of electrode fouling and passivation, and regeneration of the bioreceptors for continuous use. Various factors must be considered when selecting a biorecognition element for use in a wearable sensor (Box 1).

Enzymes were one of the earliest biorecognition elements used in wearables; in particular, redox enzymes such as glucose oxidase have been used for glucose-sensing applications149. Enzymes are particularly well suited for the sensitive detection of small molecules, such as metabolites. Moreover, owing to their catalytic turnover, enzymes enable signal amplification. As metabolites are generated by enzymatic processes, there is a wealth of natural enzymes to select from when creating biosensors. Most enzyme-based sensors couple a redox event generated during a catalytic event with the detection of direct150 or mediator-based151 electron transfer to an electrode. If needed, multiple enzymes can be used in coupled reaction cascades to assemble a desired input–substrate and output–product pathway152. An advantage of enzyme-based wearable sensors is that, owing to the catalytic turnover, they are well suited for continuous monitoring, provided that product inhibition effects are addressed. Care must be taken to select an enzyme that lacks broad substrate specificity, as promiscuous binding of similar substrates could lead to confounding results and is a particular concern for heterogeneous biofluids. Other considerations include the stability of the enzyme and ease of immobilization, depending on the application. Moreover, the byproducts from redox reactions can result in self-inactivation of enzymatic systems, and another challenge is that it can be difficult to chemically modify enzymes and proteins for immobilization153,154.

Affinity proteins bind to a target biomarker, most commonly other proteins and peptides, although they might also recognize smaller molecules, such as drugs, metabolites or carbohydrates. Natural affinity proteins are typically antibodies, whereas synthetic affinity proteins are based on antibody derivatives or other protein scaffolds. As protein-based biomarkers are widely used in clinical laboratory assays for the detection of physiological changes or pathological states, there is a large body of knowledge regarding the structure, function and engineering of affinity proteins. Their sensitivity and specificity can be exceptional, and further improvements can be made through rational design155 or directed evolution156. Another advantage is the ability of affinity proteins to operate robustly in a complex mixture, which can be problematic for other bioreceptors. Careful thought must be given to how the binding event between the affinity protein and target biomarker is converted into an output by the wearable platform. Similar to enzymes, a major challenge is the integration of the chemical modifications required for protein immobilization for use in a sensor. Regeneration of saturated antibody-based sensors for continuous mobile sensing applications is a notable obstacle, with demonstrated strategies requiring additional auxiliary microfluidic systems, harsh regeneration steps or the engineering of variants with fast dissociation kinetics157,158. To date, integrated regeneration of an affinity protein has not been demonstrated for a wearable device. Hence, most studies that use affinity proteins in wearable sensors are demonstrations of single-use devices. Another barrier is the considerable effort required to generate a suitable affinity protein for a novel target and to establish an economical production system. 
In addition, although some antibodies are highly stable, with the ability to be stored in a lyophilized format, most are not, which affects the storage lifetimes of antibody-based sensors.

Peptide-based recognition elements are short polypeptides, of fewer than 50 amino acids, with limited tertiary structure. Their small size and limited folding make them more stable than the larger affinity proteins. In addition, peptides can be assembled through chemical synthesis, enabling scalable production and chemical modification for a wide range of immobilization chemistries. Selection technologies such as phage display are well established, enabling rapid isolation of binding peptides against a particular molecular target. A drawback of affinity peptides is that owing to the limited molecular recognition surface available, the binding affinity tends to be lower than that of affinity proteins, which could be problematic, especially for complex samples.

Aptamers are affinity molecules that can be constructed from RNA, single-stranded DNA or non-natural (xenobiotic) nucleic acid scaffolds. Selection and enrichment methods have been established for the rapid generation of binding aptamers that can rival antibodies in terms of binding affinity and specificity. Furthermore, aptamers can be chemically synthesized, allowing for various chemical modifications and, thus, integration into existing electronic sensor platforms159. In general, aptamers are more stable than antibodies and can be refolded after exposure to denaturing solvents or heat, allowing for various regeneration strategies for continuous wearable monitoring. However, a particular obstacle to using aptamers is their rapid degradation owing to the high levels of nucleases present in biofluids. This issue can be ameliorated by the use of non-natural nucleoside analogues that are nuclease resistant160.

CRISPR-based sensing systems enable the precise discrimination of nucleic acid signatures, an application that is relatively unexplored for wearable sensors. Nucleic acid sensors would enable wearable detection of external pathogen exposure161, local cellular damage or even cancer surveillance162. Using the highly specific nucleic acid targeting activity of CRISPR ribonucleoproteins and the unique collateral cleavage activity of some variants, robust field-deployable platforms (non-wearable) have been developed with detection sensitivities that exceed that of laboratory-based PCR with reverse transcription (RT–PCR)163. The target-activated nonspecific nuclease activity enables signal amplification for extremely sensitive detection. Cas13a and Cas12a platforms have been developed for RNA and DNA target detection, respectively164,165. Moreover, CRISPR-based sensors can be easily reprogrammed by replacement of the guide RNA, which is the target-determining element. To date, CRISPR-based systems have been used only in wearable sensors for exposure detection and breath-based face mask detection of viruses28.

An overarching challenge for any biorecognition sensing element is sustaining continual operation for long-term longitudinal monitoring. One aspect of this difficulty is the integration of efficient regeneration schemes into wearables to reset the sensors to their initial state. The required regeneration chemistry is unique to the kind of sensor being used. Another problem is biofouling or surface passivation, which can generate false-positive or false-negative signals, or erode the sensitivity of the sensor over time. These issues will be of particular concern as non-invasive wearable sensors advance towards long-term continuous monitoring, requiring constant sensor exposure to highly heterogeneous biofluids.

The literature is replete with wearable device prototypes that reuse established biorecognition elements. However, wearables also provide the opportunity for the development of new biosensors — with wearable device considerations taken into account from the outset — which could enable new specific functions. Validating new sensing modalities and exploring regeneration strategies would unlock devices for new biomarkers and enable more avenues for continuous monitoring, respectively. Furthermore, implementation of multimodal or multiplexed analysis would reduce false positives and provide multiple outputs that correlate to a physiological state for active calibration and correction. One interesting approach is to integrate multiple inputs from different biofluids for a comprehensive approach to a health or disease target3.

Decision-making unit

Wearables enable access to physiological information through distributed arrays of sensing units, creating a diverse database that spans from the individual to larger populations. In this high-dimensional multilayered ‘data landscape’, the role of the decision-making unit is to convert raw data into a human-readable format. Conventional strategies can only be applied within a restricted point of view, using selected features for a predefined task under human supervision. By contrast, data-driven methods have the potential to augment our capabilities in extracting patterns and relationships without squandering the potential of data fusion166,167,168,169,170. These data can be exchanged over the body area network (that is, a network of multiple, interconnected sensing units worn on or implanted in the body) and analysed with the help of data-driven methods to reduce environmental artefacts by using correlations between sensory inputs and the physiological state of the body (Table 4).

For a given hardware configuration, sensing units translate physiological data into digital signals, which initiates the ‘data pipeline’ (Fig. 2a). Raw data collected from the sensors first go into a data conversion unit, where the digital signals (for example, current or voltage) are transformed into secondary data (such as heart rate, pH or metabolite concentration) using the corresponding algorithms. This process can be expressed as a conversion function, which extracts the assumed correlation between the digital signal and the biomarker or quantity measured for each sensing unit. These conversion functions are determined by regression analysis, relying on human supervision. This step might involve additional assumptions that are hidden from the downstream application, as well as data filtering, smoothing, denoising or downsampling, depending on the application. Therefore, the data conversion unit can act as a ‘black box’ and complicate secondary data interpretation.
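
A conversion function of this kind can be sketched as an ordinary least-squares calibration line. The example below assumes a linear relationship between sensor signal and concentration, which real sensors follow only over a limited range; the calibration values and the names `fit_line` and `make_conversion` are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def make_conversion(slope, intercept):
    """Build the conversion function that maps a digital signal to secondary data."""
    return lambda signal: slope * signal + intercept


# Hypothetical calibration run: sensor current (uA) at known concentrations (mM).
currents_ua = [0.5, 1.0, 1.5, 2.0]
concentrations_mm = [0.0, 0.1, 0.2, 0.3]

slope, intercept = fit_line(currents_ua, concentrations_mm)
to_concentration = make_conversion(slope, intercept)
print(to_concentration(1.25))  # estimated concentration for a new reading
```

The fitted slope and intercept are exactly the kind of hidden assumption the text warns about: a downstream model that only sees the concentration has no way of knowing how the calibration was obtained.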

a | Conceptualization of the data pipeline. The combination and processing of multiple wearables with multiple sensing strategies provides access to physiologically relevant parameters and biomarkers to better explain the non-linearity in human physiology. The black and red lines indicate the data processing and model training pathways, respectively. b | Overview of data-driven methods. Post-processing of big data to explore the complex links between the measured signals and physiological status of individuals is possible with machine learning algorithms. ANN, artificial neural network; DT, decision tree; GDBSCAN, generalized density-based spatial clustering of applications with noise; GM, Gaussian means; HC, hierarchical clustering; kNN, k-nearest neighbours; RF, random forest; SVM, support-vector machines. Panel a (top part) adapted from ref.14, Springer Nature Limited.

Substitution of human supervision with ML algorithms would automate this conversion process, making it possible to connect to the downstream models and establish a process that is ‘end-to-end learnable’. Furthermore, ML methods can help to extract highly nonlinear patterns between the obtained signals and desired output, with high accuracy and computational efficiency. Data-driven methods can be particularly useful when the measured variable is a product of complex physiological events, for which one digital signal (one feature of the model) might not contain sufficient information for quantitative analysis. In such cases, digital signals collected from various sensors could be processed together as multiple features to identify these complex patterns — a task for which ML algorithms excel over conventional methods.

At the next step, secondary data are prepared for the downstream model (Fig. 2a). These steps might include outlier and anomaly detection, clustering of the input data, noise reduction, handling of missing features, data normalization, dimensionality reduction and baseline correction. A combination of these tools should be selected according to the model requirements. In the case of similarity-based learning, for example, normalization is not an option, but rather a necessity. Typically, each feature is individually scaled to a common mean and standard deviation (for example, zero mean and unit standard deviation), so that the distance between any two data points is not dictated by the feature that has the largest absolute value. By contrast, statistical methods assume the data come from a steady process; hence, any trends or seasonality in the data must be handled through baseline correction171,172. Manipulation of the secondary data using such tools is commonly referred to as feature engineering.
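
The effect of normalization on similarity-based learning can be shown with a short sketch. It assumes z-score standardization (zero mean, unit standard deviation) and uses two hypothetical features, heart rate and sweat pH, whose values are invented for illustration.

```python
import math
import statistics


def standardize(column):
    """Scale one feature to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(column)
    sd = statistics.pstdev(column)
    if sd == 0:
        return [0.0 for _ in column]  # constant feature carries no information
    return [(value - mean) / sd for value in column]


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical secondary data for four recordings.
heart_rate_bpm = [60.0, 70.0, 80.0, 90.0]
sweat_ph = [4.5, 5.5, 6.5, 7.5]

raw = list(zip(heart_rate_bpm, sweat_ph))
scaled = list(zip(standardize(heart_rate_bpm), standardize(sweat_ph)))

# Before scaling, the heart-rate difference dominates the distance;
# after scaling, both features contribute on the same footing.
print(euclidean(raw[0], raw[1]), euclidean(scaled[0], scaled[1]))
```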

In feature engineering, the objective is to maximize the relevant information density within the high-dimensional data for the given task. The essential idea is to discard a less useful fraction of the feature space, as any additional information with marginal effect on the outcome creates a burden for the learning process. Feature engineering practices include combining secondary data features into new variables, appending data statistics as additional features, dimensionality reduction while conserving the data variance (for example, reducing 20 secondary features into 10 new features), and coordinate transformation (for instance, transforming 20 secondary features into 20 new features)173. Interpretation of the secondary data after feature engineering can also be concatenated with alternative forms of the same information (that is, the digital signal and/or secondary data) along the data pipeline (Fig. 2a). Such skip connections have enabled the solution of complex problems in image or video processing174 by ensuring information flow within the model. The same strategy can also be applied for sensory data management in wearable networks to ensure that crucial information is still accessible after two consecutive data transformations. Although feature engineering can be managed by human supervision, artificial intelligence-driven methods can also be applied to discover alternative combinations of the original feature space (Fig. 2b).
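
Two of the practices listed above, appending data statistics as features and concatenating engineered features with an earlier form of the same information, can be sketched in a few lines. The window length, the choice of statistics and the function names are assumptions made for illustration only.

```python
import statistics


def window_features(samples, window):
    """Summarize non-overlapping windows as [mean, standard deviation, min, max]."""
    features = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        features.append([
            statistics.fmean(chunk),
            statistics.pstdev(chunk),
            min(chunk),
            max(chunk),
        ])
    return features


def with_skip_connection(engineered, earlier_form):
    """Concatenate engineered features with an earlier representation of the data."""
    return list(engineered) + list(earlier_form)


# Hypothetical secondary data (for example, six consecutive readings).
readings = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
engineered = window_features(readings, window=3)

# Keep the raw window alongside its summary so the model can still access it.
model_input = with_skip_connection(engineered[0], readings[:3])
print(model_input)
```

Concatenation makes the feature vector longer, so this trades the compactness sought by feature engineering against the guarantee that the original information remains available downstream.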

Subsequently, the high-dimensional data augmented with engineered features are fed to a (preferably data-driven) model. The model can be interpreted as an automated process that extracts patterns from the data given a particular objective. The functionality of this process is typically interpreted within the context of classification, regression, clustering and dimensionality reduction tasks173, which in turn depend on whether the data are labelled. The labels explain the hidden physiological state of the body related to the high-level objective and are either categorical or numerical information assigned by human supervision. In supervised learning, the model is trained to predict the hidden state of the body by using these labelled examples. Therefore, predictive capabilities of the model are bounded by the biases and accuracy of the human-supervised labelling process. In this regard, training examples should be representative for the whole population of interest, the number of examples should be high enough to alleviate sampling noise, training and evaluation strategies should consider the inherent class imbalances in the problem (such as disease prevalence14), and the level of confidence in the ‘ground truth’ must be increased through multiple expert opinions168. Furthermore, data-driven learning is an ill-posed problem; that is, several models with different complexities can be used to solve the same problem. Hence, the model complexity should match the volume and dimensionality of the data to minimize generalization error. Another remedy is to use ensemble learning, in which multiple models that rely on different learning theories (for example, example-based, error-based or similarity-based learning) are used together to make a decision. In health-care monitoring, model complexity is particularly crucial, as it is linked to the individualization of the detection process. 
In cases in which the data are likely to exhibit unique individual patterns, such as the detection of epileptic seizures from electroencephalographic signals, the training data become limited such that an ensemble of weak learners (that is, ML models with low model complexity), such as support-vector machines and random forests, is typically more successful than deep neural networks. With such non-representative, small datasets, a deep neural network could memorize the patterns instead of learning from them, which leads to inaccurate predictions when a new and unknown case is introduced. If the symptoms are stereotypical, as in the case of arrhythmia detection, a large volume of data can be collected and used in the training with more complex models. In addition, the core algorithm of the data-driven model should be built by considering the physical nature of the problem. For example, in the case of COVID-19, building blocks of the model as well as the underlying mathematics were tailored to leverage multiple physiologically relevant acoustic markers, such as muscular degradation, respiratory tract alterations and changes in vocal cord sounds, to increase sensitivity175.
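
The idea of combining several simple models into one decision can be illustrated with a majority vote. In this sketch the three 'weak learners' are hand-written threshold rules rather than trained models, and the thresholds, feature names and labels are hypothetical placeholders with no clinical meaning.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label predicted by the largest number of models."""
    return Counter(predictions).most_common(1)[0][0]


def ensemble_predict(models, sample):
    """Ask every model for a label and combine the answers by majority vote."""
    return majority_vote([model(sample) for model in models])


# Three deliberately simple rules, each looking at a different feature.
def heart_rate_rule(sample):
    return "alert" if sample["heart_rate_bpm"] > 100 else "normal"


def temperature_rule(sample):
    return "alert" if sample["skin_temp_c"] > 37.5 else "normal"


def respiration_rule(sample):
    return "alert" if sample["resp_rate_bpm"] > 20 else "normal"


models = [heart_rate_rule, temperature_rule, respiration_rule]
sample = {"heart_rate_bpm": 110, "skin_temp_c": 36.5, "resp_rate_bpm": 22}
print(ensemble_predict(models, sample))
```

Using an odd number of models with two possible labels avoids ties. A trained ensemble would replace the fixed rules with learners fitted to labelled data.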

An alternative approach to extract the hidden state within the data is to use artificial intelligence, rather than human supervision. In unsupervised learning, examples are either discretely clustered into similar groups (based on a similarity score) or analysed as a whole (Fig. 2b). Clustering of examples into subgroups can be performed even when the structural hierarchy is unknown. The strength of such algorithms lies in their ability to detect patterns within high-dimensional data and identify relationships between input variables. In wearables, unsupervised learning can be deployed to mine the high-dimensional data stream from a body area network, which is difficult to analyse by human supervision. Unsupervised learning can also facilitate the interpretation of the collected data by identifying the most informative features using noise reduction and outlier detection.
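
Clustering examples by similarity without labels can be sketched with a small k-means routine. k-means is used here only as a familiar stand-in and is not named in the text; the two-feature readings and the fixed starting centroids are hypothetical, and a fixed start is chosen so that the result is reproducible.

```python
def squared_distance(a, b):
    """Squared Euclidean distance, used as the (dis)similarity score."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)),
               key=lambda i: squared_distance(point, centroids[i]))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, initial_centroids, iterations=10):
    """Alternate between assigning points to centroids and updating the centroids."""
    centroids = [tuple(c) for c in initial_centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            clusters[nearest(point, centroids)].append(point)
        # An empty cluster keeps its previous centroid.
        centroids = [mean_point(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    labels = [nearest(point, centroids) for point in points]
    return centroids, labels


# Hypothetical normalized readings from two sensing units.
readings = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2),
            (5.0, 5.0), (5.2, 4.8), (4.8, 5.2)]
centroids, labels = kmeans(readings, initial_centroids=[(0.0, 0.0), (6.0, 6.0)])
print(labels)
```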

Depending on the design objective, the model output can be generated discretely or continuously. The next decision to be made in the design process is the data management protocol173. Model outputs can be exchanged between wearable sensors and their software, as well as external smart devices (such as smartwatches or smartphones) within the body area network, which makes it possible to record and wirelessly transfer physiologically relevant data in real time. The selection of the data transmission mode relies on the power consumption expectations and the application (real-time or single-point analysis). In the long term, wireless data transmission through Bluetooth and LoRa-based solutions might enable fast, short-range and long-range transmission without compromising power consumption. At present, however, the power consumption of radio transmission is much higher than the power needs of the local sensing–amplification–data conversion process. Therefore, in the near future, data transmission should be minimized by localizing either the whole decision-making unit or the data compression component on the sensors.
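
One simple way to reduce radio traffic from the sensor is to transmit a value only when it has changed appreciably since the last transmission. The sketch below shows this 'send-on-delta' idea as an assumed example of on-sensor data reduction; it is not a scheme described in the text, and the threshold and temperature values are hypothetical.

```python
def send_on_delta(samples, threshold):
    """Return (index, value) pairs that would be transmitted.

    The first sample is always sent; later samples are sent only when they
    differ from the last transmitted value by at least `threshold`.
    """
    if not samples:
        return []
    transmitted = [(0, samples[0])]
    last_sent = samples[0]
    for index, value in enumerate(samples[1:], start=1):
        if abs(value - last_sent) >= threshold:
            transmitted.append((index, value))
            last_sent = value
    return transmitted


# Hypothetical skin-temperature stream (degrees Celsius).
stream = [36.5, 36.5, 36.6, 36.6, 37.2, 37.2, 37.3, 36.4]
packets = send_on_delta(stream, threshold=0.5)
print(f"{len(packets)} of {len(stream)} samples transmitted: {packets}")
```

The receiver reconstructs the stream by holding the last received value, so the threshold sets the largest change that can go unreported.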

Depending on the nature and complexity of the task, the data storage requirements of wearables vary. Data storage units can be classified as volatile or non-volatile memory176. Although volatile storage provides high-speed data fetching, storage is restricted to active periods; that is, the stored data are deleted when the system is turned off. Volatile data storage has a huge effect on the system performance and power unit, as it needs frequent refreshing of the data to retain content. By contrast, non-volatile storage enables relatively low-power storage of high-density data, but the data transfer is much slower. It is also possible to integrate a cloud-based service that oversees the whole process and sends the physiological data to emergency services, health-care providers and/or administrative authorities as needed. Such an infrastructure would rely heavily on local and long-distance communications between different components, which is accompanied by intrinsic challenges, including optimization of the data collection frequency, the degree of sensor circuitry integration and power management as well as ethical concerns relating to the collection of sensitive information, access to regulated medical data, user compliance, and data safety and encryption177 (Supplementary information).

Power unit

The power requirement of wearable sensors depends on the application and the building blocks used1,3. Most wearables require a power unit to provide the supply voltage (either battery-powered or based on a specific harvesting source) and, in the case of energy harvesting, to extract energy from the environment or body. As wearable sensors are designed to monitor bodily activities, the materials used in their power units are also expected to meet essential characteristics of wearables. Ideally, such power units should be non-toxic, miniature, recyclable and either harvest energy or offer a high energy density for a long lifetime.

Energy harvesting can be accomplished through different phenomena: piezoelectricity, triboelectricity, thermoelectricity, optoelectronics, electromagnetic radiation, catalytic reactions or a combination thereof. Each approach takes advantage of specific energy sources in the human body or the external environment. These sources can be used to enable self-powered wearables driven by biomechanical (motion or heat), electromagnetic (light or radio frequency), biochemical (metabolites in bodily fluids) or a combination of processes (such as a hybrid system that combines triboelectric generators with biofuel cells)178,179.

Piezoelectric and triboelectric phenomena convert slight and uneven mechanical energy (including walking, heartbeats and respiration movements) into electricity. Mechanical stress or strain generates an internal electric field in piezoelectric materials (Fig. 3a) such as zinc oxide nanorods or nanostructured piezoelectric harvesters, including lead zirconate titanate and barium titanate180. In triboelectric harvesters, motion provokes the physical contact and separation of two materials with different electronegativities, which triggers electron flow, thereby producing a voltage181 (Fig. 3b). In this context, triboelectric power units use an electron acceptor material that attracts electrons from an electron donor material. The electron acceptors most commonly used in triboelectric nanogenerators are polytetrafluoroethylene, PDMS, fluorinated ethylene propylene and Kapton, whereas aluminium, copper, skin and nylon are the most common electron donors in this field182. Piezoelectric and triboelectric generators with stretchable electrodes and size flexibility (ranging from tens of square millimetres to tens of square centimetres) can be worn on the skin or incorporated into textile materials. These generators also offer higher power densities than other types of generator (up to 810 mW m–2 (ref.183) for piezoelectric and 230 mW m–2 (ref.184) for triboelectric harvesters) and have proved stable across high numbers of operating cycles. However, the integration of piezoelectric generators into wearables is challenging, as their output is an alternating current with an instantaneous pulse wave nature, which requires transformation into direct current. Triboelectric generators cannot meet the real-time energy consumption of portable electronics, although they can provide relatively high output voltage. 
In addition, the longevity of wearable triboelectric generators remains an issue, as most use metallic organic polymers, which have inherent stability and durability limitations185. As wearable piezoelectric and triboelectric generators depend on biomechanical energy, they have a low-frequency excitation source; hence, it is hard for these generators to serve as the sole energy supply for wearable devices, especially those containing multiplexing functions, intended for continuous monitoring or connected with other power-hungry elements such as displays. Antijamming capability is another consideration, as a jamming signal can be triggered during complex bodily activities (such as walking, running or jumping), thereby interrupting the desired capture of target signals. Moreover, the design, miniaturization, encapsulation and manufacture of highly deformable and fatigue-free electrodes are crucial for the development of piezoelectric and triboelectric harvesters that are stable in wearable sensors and can, for example, withstand high or low temperatures, high humidity and washing conditions.

a | Piezoelectricity is generated by mechanical motion, which activates a piezoelectric material. b | Triboelectricity is produced by motion that results in the physical contact and separation of two materials with different electronegativities. c | Thermoelectricity is generated when the surface of conductor A is heated and this energy is then transferred to conductor B, which triggers the motion of charge carriers (such as electrons and holes) and generates a voltage. d | Photovoltaic energy is generated when a photovoltaic material is irradiated with light. e | Electromagnetic radiation is managed by antennas that transform electromagnetic waves into a voltage or current. f | Wearable biofuel cells create energy from a catalytic reaction, which occurs between the fuel provided by a biofluid (such as sweat) and an enzyme; the reaction is generally enhanced by a mediator that boosts the electron transfer process between the enzymes and the electrodes.

Thermoelectric generators convert tiny amounts of heat into electricity (Fig. 3c). Wearable thermoelectric generators can thus take advantage of the heat generated from human metabolic activities, thereby producing electricity to power wearable sensors in a virtually perpetual manner. Generally, thermoelectric harvesters are rigid and heavy; however, composite versions based on conductive polymers, hybrid organic–inorganic materials, continuous inorganic films186 and liquid metals are well suited for flexible thermoelectric generators187. The thermoelectric harvesters that are integrated into wearables mostly have heatsink-like shapes, making them difficult to clean and not particularly aesthetic. In comparison with piezoelectric and triboelectric generators, thermoelectric harvesters are much larger (around tens of square centimetres) but the power densities achieved are lower (up to 200 mW m–2)188. Major challenges in the development of highly stable wearable thermoelectric generators include low energy conversion rates, biocompatibility issues, maintaining reliable contact with the heat source and adjusting to body heat temperature changes in different environments189.

Photovoltaic materials are a common power source and can be used to develop solar-powered wearable sensors (Fig. 3d). Similarly to thermoelectric generators, photovoltaic harvesters are usually rigid. However, stretchable, twistable and bendable photovoltaic harvesters have been developed based on transparent electrodes190 or smart textiles made from deformable hybrid thin films and/or soft composite materials. Smart textiles have enabled photorechargeable power sources191, some of which are even washable192. However, miniaturization is still a major challenge in photovoltaic harvesters as they are mostly bulky (tens of square millimetres to square centimetres in size) and not particularly lightweight for tasks that require a higher energy density, with the energy storage elements occupying most of the space of the resulting wearable device193.

As a promising alternative, flexible antennas enable the use of electromagnetic radiation as a power source and endow wearables with the capability to transfer not only power but also data between devices194 (Fig. 3e) in a wireless and battery-free manner195. Several materials, including polymers, textiles, graphene-related materials, neoprene rubber, wool, cellulose and silk, as well as composites incorporating materials such as ceramics or MXenes, have been used to fabricate wearable antennas196. The printability of the substrates is a key issue in designing flexible antennas; different conductive materials can be printed, commonly copper, but also other ink formulations197. Beyond these materials, new physical effects and materials are being investigated198,199 to advance the field of wireless power transfer.

Ideally, the size of a wearable antenna should be less than 25 cm2 (ref.200) and its operating frequency should lie between 900 MHz and 38 GHz (ref.196). Wearable antennas have proved useful in the monitoring of body motion or position, including the monitoring of walking and fall states201 and the detection of bending positions202. The main challenges for developing wearable antennas include accounting for the coupling between the antenna geometry and the human body, which affects the behaviour of the antenna and its performance during high electromagnetic exposure; managing changes in the antenna when it is constantly deformed during complex bodily activities; ensuring stability over time; reducing signal fade due to human body shadowing effects203; and enhancing the performance of the antenna during motion or rotation of the wearer, and under different external conditions (such as temperature, humidity, proximity to the body and other clothes, and washing frequency).
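To put the quoted frequency range next to the size target, the following minimal sketch computes the free-space wavelength at both ends of the band. It assumes ideal free-space propagation and uses the half-wavelength only as a rough length scale for a simple resonant element; the function names are illustrative, and on-body dielectric loading, which shortens the effective wavelength, is ignored.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by SI definition


def free_space_wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength in metres for a frequency in hertz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


def half_wave_length_cm(frequency_hz: float) -> float:
    """Half of the free-space wavelength, in centimetres."""
    return 100.0 * free_space_wavelength_m(frequency_hz) / 2.0


# Band edges quoted in the text.
for label, frequency_hz in (("900 MHz", 900e6), ("38 GHz", 38e9)):
    wavelength_cm = 100.0 * free_space_wavelength_m(frequency_hz)
    print(f"{label}: wavelength ~{wavelength_cm:.2f} cm, "
          f"half-wave ~{half_wave_length_cm(frequency_hz):.2f} cm")
```

Under these assumptions, the wavelength is roughly 33 cm at 900 MHz and roughly 8 mm at 38 GHz. A 25 cm2 footprint corresponds to a square of 5 cm per side, so a straight half-wave element fits easily at the upper end of the band but not at the lower end, which suggests why compact low-frequency designs tend to rely on folded or otherwise miniaturized geometries.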

Biofuel cells, in which enzymes are used as catalysts to convert chemical energy into electricity, are another power source for wearable sensors (Fig. 3f). For example, lactate has the potential to be an outstanding fuel for self-powered wearable devices because it is easily oxidized by enzymes (lactate oxidase or lactate dehydrogenase) and is relatively abundant in sweat (in the millimolar range)204,205. In addition, sweat contains myriad analytes, allowing for sweat-activated biofuel cells for wearable sensors that target pH and multiple analytes, including glucose, urea and NH4+, even in a multiplexed manner206. The current challenges of wearable biofuel cells include increasing the energy density, improving the stability and longevity of the catalyst, coping with limited fuel availability, and achieving miniaturization and proper system integration206. Moreover, enzymes can degrade when they operate in a non-ideal environment. To address this, nanozymes, which are catalytically active nanoparticles with enzyme-like kinetics, can replace enzymes as catalysts in biofuel cells204,207. In addition, the incorporation of nanomaterials such as carbon nanotubes and electrodes with a high surface area can lead to highly efficient self-chargeable biofuel cells208. Current on-skin biofuel cells for wearables have a size on the order of square centimetres and deliver promising power densities of up to 3.5 mW cm−2.

Wearables that require long-term operation and a high energy density to power multiplexed sensors and other components can incorporate an energy storage element209. To this end, low-cost, comfortable and safe batteries or supercapacitors that are deformable are highly desired. However, most of the available wearable energy storage devices have risks associated with toxicity and flammability210,211. To overcome this issue, fibre-like electrodes made of carbon nanotube yarn can act as supercapacitors with an energy density of up to 2.6 μWh cm–2 (ref.212). Textile-like electrodes made of 2D heterostructures have also led to innovative supercapacitors with a maximum energy density of 167.05 mWh cm–3 and excellent cycling stability. Using these textile-like electrodes, a wearable energy-sensor system has been shown to monitor physiological signals in real time, including wrist pulse, heartbeat and body-bending signals213.
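As a rough illustration of what a volumetric energy density means for operating time, the sketch below converts the 167.05 mWh cm–3 figure quoted above into an ideal runtime. The storage volume, the load and the `usable_fraction` parameter are hypothetical values chosen for illustration, and applying the quoted maximum to a whole device volume is an optimistic assumption.

```python
def runtime_hours(energy_density_mwh_per_cm3: float,
                  volume_cm3: float,
                  load_mw: float,
                  usable_fraction: float = 1.0) -> float:
    """Ideal runtime in hours of a storage element under a constant load.

    usable_fraction (hypothetical) scales the stored energy to account for
    losses or for the share of the volume that actually stores energy.
    """
    if load_mw <= 0:
        raise ValueError("load must be positive")
    stored_mwh = energy_density_mwh_per_cm3 * volume_cm3 * usable_fraction
    return stored_mwh / load_mw


# Hypothetical case: 0.1 cm^3 of storage feeding a constant 1 mW load.
hours = runtime_hours(167.05, volume_cm3=0.1, load_mw=1.0)
print(f"ideal runtime: {hours:.1f} h")
```

With these illustrative inputs, the ideal runtime is about 17 hours; halving `usable_fraction` halves the result, which shows how strongly such estimates depend on the assumptions behind the quoted density.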

The power demand of wearables depends on the complexity of the measurement (for example, single analyte or multianalyte, continuous or single, and quantitative or qualitative), and is typically determined by the decision-making unit3. Therefore, the power demand can be estimated from the desired measurement output, and a suitable power supply strategy chosen accordingly.
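One way to make this estimate concrete is a simple duty-cycle power budget. The sketch below computes a time-averaged demand and compares it with the upper-bound power densities quoted in this section for thermoelectric generators (200 mW m–2) and biofuel cells (3.5 mW cm–2). The component list, its power figures and duty cycles, and the 10 cm2 area limit are hypothetical placeholders rather than measured values.

```python
from dataclasses import dataclass


@dataclass
class Load:
    """One power consumer in the wearable (all values are hypothetical)."""
    name: str
    active_mw: float  # power drawn while active, in mW
    sleep_mw: float   # power drawn while idle, in mW
    duty: float       # fraction of time spent active, between 0 and 1

    def average_mw(self) -> float:
        # Time-weighted mean of the active and idle power draw.
        return self.duty * self.active_mw + (1.0 - self.duty) * self.sleep_mw


# Upper-bound areal power densities quoted in this section, in mW per cm^2.
HARVESTER_MW_PER_CM2 = {
    "thermoelectric": 200.0 / 1e4,  # 200 mW m^-2; 1 m^2 = 10^4 cm^2
    "biofuel cell": 3.5,            # 3.5 mW cm^-2
}


def total_average_mw(loads) -> float:
    """Sum of the time-averaged power of all loads, in mW."""
    return sum(load.average_mw() for load in loads)


def required_area_cm2(demand_mw: float, density_mw_per_cm2: float) -> float:
    """Harvester area needed to cover the demand at a given power density."""
    return demand_mw / density_mw_per_cm2


def feasible_harvesters(demand_mw: float, max_area_cm2: float) -> dict:
    """Map each harvester that fits within max_area_cm2 to the area it needs."""
    areas = {name: required_area_cm2(demand_mw, density)
             for name, density in HARVESTER_MW_PER_CM2.items()}
    return {name: area for name, area in areas.items() if area <= max_area_cm2}


loads = [
    Load("sensor front-end", active_mw=0.5, sleep_mw=0.001, duty=0.10),
    Load("microcontroller", active_mw=3.0, sleep_mw=0.005, duty=0.05),
    Load("radio", active_mw=10.0, sleep_mw=0.002, duty=0.01),
]
demand = total_average_mw(loads)
print(f"average demand: {demand:.3f} mW")
for name, area in feasible_harvesters(demand, max_area_cm2=10.0).items():
    print(f"{name}: needs about {area:.2f} cm^2")
```

For this illustrative budget of roughly 0.3 mW, only the biofuel cell fits within the assumed 10 cm2, whereas a thermoelectric harvester would need about 15 cm2. Because the quoted densities are best-case values, areas required in practice would be larger.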

Outlook

With continued innovation and development, together with the widespread use of wearables, we are now many steps closer to fulfilling the prerequisite for proactive health care by monitoring the time-resolved variation in the physiological state of the body. Yet, there remain numerous challenges and areas for development to realize the full potential of wearable sensing devices.

From the materials perspective, the development of breathable, flexible and stretchable materials (such as superflexible wood214) remains an important challenge in satisfying the rigorous requirements of wearable applications (such as adaptation to electronic skins, smart patches or textiles). Furthermore, transient and recyclable (even compostable) substrate materials are desired for the sustainable and low-cost mass production of wearable sensors. Another challenge is to develop self-powered wearables, including ‘green’ power units (such as disposable solar panels or biofuel cells) or battery-free options powered through near-field communication. These advances could lead to the evolution of standalone, fully integrated wearable sensors, or a biosensing unit that operates in combination with other ubiquitous personal devices (such as smartphones).

To enable robust long-term (days to weeks) continuous measurement in a wearable format, sensing and sampling technologies should be further matured. In this regard, future trends for advanced sensing units include the use of microneedles, nanoneedles or unconventional sample collection methods (such as face masks) for easy and continuous or on-demand sampling as well as the further integration of micromaterials or nanomaterials and stabilized synthetic biology reactions for signal amplification. Moreover, novel biorecognition elements or assay technologies (such as aptamers, molecularly imprinted polymers, nanozymes, DNAzymes or CRISPR–Cas-powered assays) could be applied to increase sensitivity and facilitate long-term use.

The accuracy of wearables could be improved through multimodal and/or multiplexed sensing by mounting different transducer types and/or simultaneously measuring different analytes and/or samples on the same platform. In addition, increased use of cloud or fog computing, data mining and ML for the extremely large datasets produced by wearable sensors would also help to enable more accurate predictions of the physiological status of users. In this respect, the first prerequisite is to conduct larger prospective cohort studies using wearable sensors to validate their clinical applicability for diagnosis and population-level studies. Before starting these trials, data collection and use should be planned with their implementation in ML models in mind, covering the training, validation and testing of these models. Moreover, social acceptance of wearable sensors must be ensured by informing users about the advantages and disadvantages and by integrating them into existing wearable devices or application ecosystems (such as smartwatches or wearable glucometers) and health-care services (such as health insurance policies; see Supplementary information for further discussion). In addition, the integration of wearables into Internet-of-Things applications and the adoption of protocols for data safety and handling (such as blockchain177), together with the establishment of an ethical regulatory framework for wearable data networks, could further promote their use.

In the foreseeable future, extension of the capabilities of wearables beyond diagnostic sensing through the integration of feedback loops would pave the way for (third-generation) wearable devices for theranostic applications. Smart bandages, for example, could allow for real-time monitoring of wound healing through pH measurement and, in the case of an infection, treatment by on-demand delivery of antibiotics or anti-inflammatory drugs. Another trend is to enhance the capabilities of current wearable continuous glucose-monitoring systems to release insulin to the patient in a closed-loop manner.
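The feedback loop described for a smart bandage can be sketched as a threshold controller with hysteresis, so that release does not toggle on every small fluctuation of the reading. This is an illustrative sketch only: the class name, the pH thresholds and the sample readings are hypothetical placeholders, not clinical values or a description of any published device.

```python
from dataclasses import dataclass


@dataclass
class HysteresisController:
    """Switches drug release on and off from a stream of pH readings."""
    release_above: float    # hypothetical pH at which release starts
    stop_below: float       # hypothetical pH at which release stops
    releasing: bool = False

    def update(self, ph: float) -> bool:
        """Process one reading and return whether release is active."""
        if not self.releasing and ph >= self.release_above:
            self.releasing = True
        elif self.releasing and ph <= self.stop_below:
            self.releasing = False
        return self.releasing


# Hypothetical thresholds and readings, for illustration only.
controller = HysteresisController(release_above=7.8, stop_below=7.0)
readings = [6.5, 7.2, 7.9, 7.6, 7.1, 6.8]
states = [controller.update(ph) for ph in readings]
print(states)  # [False, False, True, True, True, False]
```

Using two thresholds rather than one keeps release active while the reading drifts between them, a common design choice for noisy sensor signals. A real theranostic device would additionally need dosing limits and safety interlocks, which are outside the scope of this sketch.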

References

Iqbal, S. M. A., Mahgoub, I., Du, E., Leavitt, M. A. & Asghar, W. Advances in healthcare wearable devices. npj Flex. Electron. 5, 9 (2021).

Brophy, K. et al. The future of wearable technologies. Brief. Pap. 8, 1–20 (2021).

Ates, H. C. et al. Integrated devices for non-invasive diagnostics. Adv. Funct. Mater. 31, 2010388 (2021).

Heikenfeld, J. et al. Wearable sensors: modalities, challenges, and prospects. Lab Chip 18, 217–248 (2018).

Gambhir, S. S., Ge, T. J., Vermesh, O., Spitler, R. & Gold, G. E. Continuous health monitoring: an opportunity for precision health. Sci. Transl. Med. 13, eabe5383 (2021).

Kim, J., Campbell, A. S., de Ávila, B. E.-F. & Wang, J. Wearable biosensors for healthcare monitoring. Nat. Biotechnol. 37, 389–406 (2019).

Wang, L., Lou, Z., Jiang, K. & Shen, G. Bio-multifunctional smart wearable sensors for medical devices. Adv. Intell. Syst. 1, 1900040 (2019).

Yang, Y. et al. A laser-engraved wearable sensor for sensitive detection of uric acid and tyrosine in sweat. Nat. Biotechnol. 38, 217–224 (2020).

Guo, S. et al. Integrated contact lens sensor system based on multifunctional ultrathin MoS2 transistors. Matter 4, 969–985 (2020).

Gao, W. et al. Fully integrated wearable sensor arrays for multiplexed in situ perspiration analysis. Nature 529, 509–514 (2016).

Nyein, H. Y. Y. et al. A wearable microfluidic sensing patch for dynamic sweat secretion analysis. ACS Sens. 3, 944–952 (2018).

Quer, G. et al. Wearable sensor data and self-reported symptoms for COVID-19 detection. Nat. Med. 27, 73–77 (2021).

Mishra, T. et al. Pre-symptomatic detection of COVID-19 from smartwatch data. Nat. Biomed. Eng. 4, 1208–1220 (2020).

Ates, H. C., Yetisen, A. K., Güder, F. & Dincer, C. Wearable devices for the detection of COVID-19. Nat. Electron. 4, 13–14 (2021).

Powers, R. et al. Smartwatch inertial sensors continuously monitor real-world motor fluctuations in Parkinson’s disease. Sci. Transl. Med. 13, eabd7865 (2021).

Sempionatto, J. R., Montiel, V. R. V., Vargas, E., Teymourian, H. & Wang, J. Wearable and mobile sensors for personalized nutrition. ACS Sens. 6, 1745–1760 (2021).

Hong, W. & Lee, W. G. Wearable sensors for continuous oral cavity and dietary monitoring toward personalized healthcare and digital medicine. Analyst 145, 7796–7808 (2021).

Ates, H. C. et al. On-site therapeutic drug monitoring. Trends Biotechnol. 38, 1262–1277 (2020).

Cotur, Y. et al. Stretchable composite acoustic transducer for wearable monitoring of vital signs. Adv. Funct. Mater. 30, 1910288 (2020).

Kozlowski, R. M. & Muzyczek, M. Natural Fibers (Nova Science Publishers, 2017).

Shaker, K., Umair, M., Ashraf, W. & Nawab, Y. Fabric manufacturing. Phys. Sci. Rev. 1, 20160024 (2016).

Applegate, M. B., Perotto, G., Kaplan, D. L. & Omenetto, F. G. Biocompatible silk step-index optical waveguides. Biomed. Opt. Express 6, 4221–4227 (2015).

Guidetti, G., Atifi, S., Vignolini, S. & Hamad, W. Y. Flexible photonic cellulose nanocrystal films. Adv. Mater. 28, 10042–10047 (2016).

Kim, S. J. et al. Wearable UV sensor based on carbon nanotube-coated cotton thread. ACS Appl. Mater. Interfaces 10, 40198–40202 (2018).

Gurarslan, A., Özdemir, B., Bayat, İ. H., Yelten, M. B. & Karabulut Kurt, G. Silver nanowire coated knitted wool fabrics for wearable electronic applications. J. Eng. Fibers Fabr. https://doi.org/10.1177/1558925019856222 (2019).

Morales-Narváez, E. et al. Nanopaper as an optical sensing platform. ACS Nano 9, 7296–7305 (2015).

Cao, J. & Wang, C. Multifunctional surface modification of silk fabric via graphene oxide repeatedly coating and chemical reduction method. Appl. Surf. Sci. 405, 380–388 (2017).

Nguyen, P. Q. et al. Wearable materials with embedded synthetic biology sensors for biomolecule detection. Nat. Biotechnol. 39, 1366–1374 (2021).

Song, Y. et al. Design framework for a seamless smart glove using a digital knitting system. Fash. Text. 8, 6 (2021).

Loke, G. et al. Structured multimaterial filaments for 3D printing of optoelectronics. Nat. Commun. 10, 4010 (2019).

Valentine, A. D. et al. Hybrid 3D printing of soft electronics. Adv. Mater. 29, 1703817 (2017).

Kraft, U., Molina-Lopez, F., Son, D., Bao, Z. & Murmann, B. Ink development and printing of conducting polymers for intrinsically stretchable interconnects and circuits. Adv. Electron. Mater. 6, 1900681 (2020).

Geng, W., Cuthbert, T. J. & Menon, C. Conductive thermoplastic elastomer composite capacitive strain sensors and their application in a wearable device for quantitative joint angle prediction. ACS Appl. Polym. Mater. 3, 122–129 (2021).

Sala de Medeiros, M., Chanci, D., Moreno, C., Goswami, D. & Martinez, R. V. Waterproof, breathable, and antibacterial self-powered e-textiles based on omniphobic triboelectric nanogenerators. Adv. Funct. Mater. 29, 1904350 (2019).

Wu, C., Liu, X. & Ying, Y. Soft and stretchable optical waveguide: light delivery and manipulation at complex biointerfaces creating unique windows for on-body sensing. ACS Sens. 6, 1446–1460 (2021).

Choi, S. et al. Highly flexible and efficient fabric-based organic light-emitting devices for clothing-shaped wearable displays. Sci. Rep. 7, 6424 (2017).

Xu, G. et al. Design of non-dimensional parameters in stretchable microstrip antennas with coupled mechanics-electromagnetics. Mater. Des. 205, 109721 (2021).

Mota-Morales, J. D. & Morales-Narváez, E. Transforming nature into the next generation of bio-based flexible devices: new avenues using deep eutectic systems. Matter 4, 2141–2162 (2021).

Correa, S. et al. Translational applications of hydrogels. Chem. Rev. 121, 11385–11457 (2021).

Homayounfar, S. Z. et al. Multimodal smart eyewear for longitudinal eye movement tracking. Matter 3, 1275–1293 (2020).

Turner, J. G., White, L. R., Estrela, P. & Leese, H. S. Hydrogel-forming microneedles: current advancements and future trends. Macromol. Biosci. 21, 2000307 (2021).

Matsuhisa, N. et al. Printable elastic conductors with a high conductivity for electronic textile applications. Nat. Commun. 6, 7461 (2015).

Wang, B. et al. Flexible and stretchable metal oxide nanofiber networks for multimodal and monolithically integrated wearable electronics. Nat. Commun. 11, 2405 (2020).

Schroeder, T. B. H. et al. An electric-eel-inspired soft power source from stacked hydrogels. Nature 552, 214–218 (2017).

Scarpa, E. et al. Wearable piezoelectric mass sensor based on pH sensitive hydrogels for sweat pH monitoring. Sci. Rep. 10, 10854 (2020).

Xu, J., Wang, G., Wu, Y., Ren, X. & Gao, G. Ultrastretchable wearable strain and pressure sensors based on adhesive, tough, and self-healing hydrogels for human motion monitoring. ACS Appl. Mater. Interfaces 11, 25613–25623 (2019).

Di, J. et al. Stretch-triggered drug delivery from wearable elastomer films containing therapeutic depots. ACS Nano 9, 9407–9415 (2015).

Liu, X. et al. 3D printing of living responsive materials and devices. Adv. Mater. 30, 1704821 (2018).