Abstract

The scale of genetic, epigenomic, transcriptomic, cheminformatic and proteomic data available today, coupled with easy-to-use machine learning (ML) toolkits, has propelled the application of supervised learning in genomics research. However, the assumptions behind the statistical models and performance evaluations in ML software are frequently not met in biological systems. In this Review, we illustrate the impact of several common pitfalls encountered when applying supervised ML in genomics. We explore how the structure of genomics data can bias performance evaluations and predictions. To address the challenges associated with applying cutting-edge ML methods to genomics, we describe solutions and appropriate use cases where ML modelling shows great potential.
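One way in which a biased performance evaluation arises is the pitfall described in the Ambroise and McLachlan reference listed below: supervised feature selection performed on the full dataset before cross-validation. The following is a minimal sketch, not code from this Review. It assumes NumPy and scikit-learn are installed and uses synthetic data in which the labels are pure noise, so any cross-validated accuracy well above 0.5 is an artefact of information leaking from the test folds into feature selection.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)

# Synthetic "omics" matrix: few examples, many features (assumed sizes).
n_samples, n_features = 100, 5000
X = rng.normal(size=(n_samples, n_features))
y = rng.integers(0, 2, size=n_samples)  # labels are independent of X

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Leaky: the 20 "best" features are chosen using ALL labels,
# including those that will later sit in each test fold.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=cv).mean()

# Honest: selection is part of the pipeline, so it is refit
# on the training folds only within each split.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=cv).mean()

print(f"leaky CV accuracy:  {leaky:.2f}")
print(f"honest CV accuracy: {honest:.2f}")
```

Exact numbers depend on the random seed; the leaky estimate is typically far above chance, whereas the pipeline estimate stays near 0.5, which is the correct answer for noise labels.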

References

Teschendorff, A. E. Avoiding common pitfalls in machine learning omic data science. Nat. Mater. 18, 422–427 (2019). This Comment article discusses cross-validation and independent test sets as solutions to two pitfalls encountered when applying supervised ML in genomics: the ‘curse of dimensionality’ and confounding.

Minhas, F., Asif, A. & Ben-Hur, A. Ten ways to fool the masses with machine learning. Preprint at arXiv https://arxiv.org/abs/1901.01686 (2019).

Eraslan, G., Avsec, Ž., Gagneur, J. & Theis, F. J. Deep learning: new computational modelling techniques for genomics. Nat. Rev. Genet. 20, 389–403 (2019).

Ching, T. et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 15, 20170387 (2018).

Zou, J. et al. A primer on deep learning in genomics. Nat. Genet. 51, 12–18 (2019).

Flagel, L., Brandvain, Y. & Schrider, D. R. The unreasonable effectiveness of convolutional neural networks in population genetic inference. Mol. Biol. Evol. 36, 220–238 (2019).

Liu, J., Lewinger, J. P., Gilliland, F. D., Gauderman, W. J. & Conti, D. V. Confounding and heterogeneity in genetic association studies with admixed populations. Am. J. Epidemiol. 177, 351–360 (2013).

Vilhjálmsson, B. J. & Nordborg, M. The nature of confounding in genome-wide association studies. Nat. Rev. Genet. 14, 1–2 (2013).

Hellwege, J. N. et al. Population stratification in genetic association studies. Curr. Protoc. Hum. Genet. 95, 1.22.1–1.22.23 (2017).

Sul, J. H., Martin, L. S. & Eskin, E. Population structure in genetic studies: confounding factors and mixed models. PLoS Genet. 14, e1007309 (2018).

Weirauch, M. T. et al. Evaluation of methods for modeling transcription factor sequence specificity. Nat. Biotechnol. 31, 126–134 (2013).

Leek, J. T. et al. Tackling the widespread and critical impact of batch effects in high-throughput data. Nat. Rev. Genet. 11, 733–739 (2010). This Review documents the prevalence of batch effects in genomic data and shows how these can confound statistical inferences.

Tran, H. T. N. et al. A benchmark of batch-effect correction methods for single-cell RNA sequencing data. Genome Biol. 21, 12 (2020).

Rabanser, S., Günnemann, S. & Lipton, Z. Failing loudly: an empirical study of methods for detecting dataset shift. in Advances in Neural Information Processing Systems (NeurIPS 2019) (eds Wallach, H. et al.) Vol. 32, 1396–1408 (Curran Associates, Inc., 2019).

Gretton, A., Borgwardt, K. M., Rasch, M. J., Schölkopf, B. & Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 13, 723–773 (2012).

Ren, J. et al. in Advances in Neural Information Processing Systems (NeurIPS 2019) (eds Wallach, H. et al.) Vol. 32, 14707–14718 (Curran Associates, Inc., 2019).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. Preprint at arXiv https://arxiv.org/abs/1312.6114 (2013).

Liu, F. T., Ting, K. M. & Zhou, Z. in IEEE International Conference on Data Mining 413–422 (IEEE, 2008).

Johnson, W. E., Li, C. & Rabinovic, A. Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics 8, 118–127 (2007).

Leek, J. T. & Storey, J. D. Capturing heterogeneity in gene expression studies by surrogate variable analysis. PLoS Genet. 3, 1724–1735 (2007).

Butler, A., Hoffman, P., Smibert, P., Papalexi, E. & Satija, R. Integrating single-cell transcriptomic data across different conditions, technologies, and species. Nat. Biotechnol. 36, 411–420 (2018).

Stuart, T. et al. Comprehensive integration of single-cell data. Cell 177, 1888–1902.e21 (2019).

Wang, T. et al. BERMUDA: a novel deep transfer learning method for single-cell RNA sequencing batch correction reveals hidden high-resolution cellular subtypes. Genome Biol. 20, 165 (2019).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2010).

Kouw, W. M. & Loog, M. A review of domain adaptation without target labels. IEEE Trans. Pattern Anal. Mach. Intell. 43, 766–785 (2019).

Shimodaira, H. Improving predictive inference under covariate shift by weighting the log-likelihood function. J. Stat. Plan. Inference 90, 227–244 (2000). This paper discusses differences between the feature distributions of training and test data, known as covariate shift, and proposes several weighting schemes for adjusting for this pitfall.

Bickel, S., Brückner, M. & Scheffer, T. Discriminative learning under covariate shift. J. Mach. Learn. Res. 10, 2137–2155 (2009).

Orenstein, Y. & Shamir, R. Modeling protein-DNA binding via high-throughput in vitro technologies. Brief. Funct. Genomics 16, 171–180 (2017).

Alipanahi, B., Delong, A., Weirauch, M. T. & Frey, B. J. Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 33, 831–838 (2015).

Berger, M. F. & Bulyk, M. L. Universal protein-binding microarrays for the comprehensive characterization of the DNA-binding specificities of transcription factors. Nat. Protoc. 4, 393–411 (2009).

Annala, M., Laurila, K., Lähdesmäki, H. & Nykter, M. A linear model for transcription factor binding affinity prediction in protein binding microarrays. PLoS ONE 6, e20059 (2011).

Agius, P., Arvey, A., Chang, W., Noble, W. S. & Leslie, C. High resolution models of transcription factor-DNA affinities improve in vitro and in vivo binding predictions. PLoS Comput. Biol. 6, e1000916 (2010).

Riley, T. R., Lazarovici, A., Mann, R. S. & Bussemaker, H. J. Building accurate sequence-to-affinity models from high-throughput in vitro protein-DNA binding data using FeatureREDUCE. Elife 4, e06397 (2015).

Wong, K.-C., Li, Y., Peng, C. & Wong, H.-S. A comparison study for DNA motif modeling on protein binding microarray. IEEE/ACM Trans. Comput. Biol. Bioinform. 13, 261–271 (2016).

Rastogi, C. et al. Accurate and sensitive quantification of protein-DNA binding affinity. Proc. Natl Acad. Sci. USA 115, E3692–E3701 (2018).

Im, J., Park, B. & Han, K. A generative model for constructing nucleic acid sequences binding to a protein. BMC Genomics 20, 967 (2019).

Ishida, R. et al. RaptRanker: in silico RNA aptamer selection from HT-SELEX experiment based on local sequence and structure information. Nucleic Acids Res. 48, e82 (2020).

Nutiu, R. et al. Direct measurement of DNA affinity landscapes on a high-throughput sequencing instrument. Nat. Biotechnol. 29, 659–664 (2011).

Tabb, D. L. et al. Repeatability and reproducibility in proteomic identifications by liquid chromatography-tandem mass spectrometry. J. Proteome Res. 9, 761–776 (2010).

Pooch, E. H. P., Ballester, P. L. & Barros, R. C. Can we trust deep learning models diagnosis? The impact of domain shift in chest radiograph classification. Preprint at arXiv https://arxiv.org/abs/1909.01940 (2019).

Zech, J. R. et al. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: a cross-sectional study. PLoS Med. 15, e1002683 (2018).

Badgeley, M. A. et al. Deep learning predicts hip fracture using confounding patient and healthcare variables. NPJ Digit. Med. 2, 31 (2019).

Antun, V., Renna, F., Poon, C., Adcock, B. & Hansen, A. C. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc. Natl Acad. Sci. USA 117, 30088–30095 (2020).

Geis, J. R. et al. Ethics of artificial intelligence in radiology: summary of the joint European and North American multisociety statement. Radiology 293, 436–440 (2019).

Larrazabal, A. J., Nieto, N., Peterson, V., Milone, D. H. & Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl Acad. Sci. USA 117, 12592–12594 (2020).

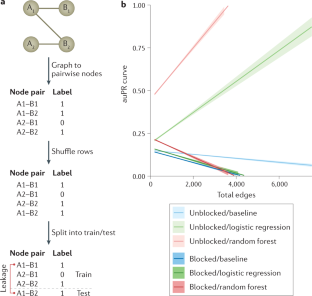

Guney, E. in Biocomputing 2017: Proceedings of the Pacific Symposium (eds Altman, R. B. et al.) 132–143 (World Scientific, 2016).

Xi, W. & Beer, M. A. Local epigenomic state cannot discriminate interacting and non-interacting enhancer-promoter pairs with high accuracy. PLoS Comput. Biol. 14, e1006625 (2018).

Cao, F. & Fullwood, M. J. Inflated performance measures in enhancer-promoter interaction-prediction methods. Nat. Genet. 51, 1196–1198 (2019).

Whalen, S. & Pollard, K. S. Reply to ‘Inflated performance measures in enhancer-promoter interaction-prediction methods’. Nat. Genet. 51, 1198–1200 (2019).

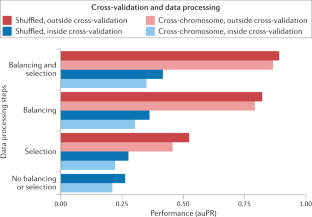

Eid, F.-E. et al. Systematic auditing is essential to debiasing machine learning in biology. Commun. Biol. 4, 183 (2021). This article proposes a set of data modifications that can be used to identify overestimated performance in supervised ML with paired-input data, such as protein–protein interactions, where the same example can occur in many pairs.

Roberts, D. R. et al. Cross-validation strategies for data with temporal, spatial, hierarchical, or phylogenetic structure. Ecography 40, 913–929 (2017). This study demonstrates blocking as an effective strategy for estimating the performance of ML models on data with complex dependency structures.

Korte, A. et al. A mixed-model approach for genome-wide association studies of correlated traits in structured populations. Nat. Genet. 44, 1066–1071 (2012).

Stucki, S. et al. High performance computation of landscape genomic models including local indicators of spatial association. Mol. Ecol. Resour. 17, 1072–1089 (2017).

Runcie, D. E. & Crawford, L. Fast and flexible linear mixed models for genome-wide genetics. PLoS Genet. 15, e1007978 (2019).

Jiang, L. et al. A resource-efficient tool for mixed model association analysis of large-scale data. Nat. Genet. 51, 1749–1755 (2019).

Whalen, S., Truty, R. M. & Pollard, K. S. Enhancer–promoter interactions are encoded by complex genomic signatures on looping chromatin. Nat. Genet. 48, 488–496 (2016).

Brzyski, D. et al. Controlling the rate of GWAS false discoveries. Genetics 205, 61–75 (2017).

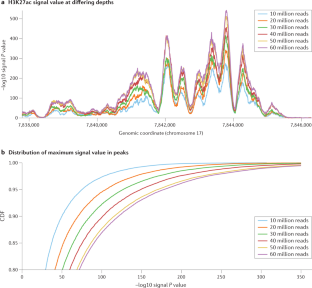

Schreiber, J., Singh, R., Bilmes, J. & Noble, W. S. A pitfall for machine learning methods aiming to predict across cell types. Genome Biol. 21, 282 (2020).

Lee, D., Redfern, O. & Orengo, C. Predicting protein function from sequence and structure. Nat. Rev. Mol. Cell Biol. 8, 995–1005 (2007).

Ribeiro, M. T., Singh, S. & Guestrin, C. in Proc. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 1135–1144 (Association for Computing Machinery, 2016).

Stegle, O., Parts, L., Piipari, M., Winn, J. & Durbin, R. Using probabilistic estimation of expression residuals (PEER) to obtain increased power and interpretability of gene expression analyses. Nat. Protoc. 7, 500–507 (2012).

Listgarten, J., Kadie, C., Schadt, E. E. & Heckerman, D. Correction for hidden confounders in the genetic analysis of gene expression. Proc. Natl Acad. Sci. USA 107, 16465–16470 (2010).

Parsana, P. et al. Addressing confounding artifacts in reconstruction of gene co-expression networks. Genome Biol. 20, 94 (2019).

Dinga, R., Schmaal, L., Penninx, B. W. J. H., Veltman, D. J. & Marquand, A. F. Controlling for effects of confounding variables on machine learning predictions. Preprint at bioRxiv https://doi.org/10.1101/2020.08.17.255034 (2020).

Dincer, A. B., Janizek, J. D. & Lee, S.-I. Adversarial deconfounding autoencoder for learning robust gene expression embeddings. Bioinformatics 36, i573–i582 (2020).

Skafidas, E. et al. Predicting the diagnosis of autism spectrum disorder using gene pathway analysis. Mol. Psychiatry 19, 504–510 (2014).

Robinson, E. B. et al. Response to ‘Predicting the diagnosis of autism spectrum disorder using gene pathway analysis’. Mol. Psychiatry 19, 859–861 (2014).

Keys, K. L. et al. On the cross-population generalizability of gene expression prediction models. PLoS Genet. 16, e1008927 (2020).

Belgard, T. G., Jankovic, I., Lowe, J. K. & Geschwind, D. H. Population structure confounds autism genetic classifier. Mol. Psychiatry 19, 405–407 (2014).

Chen, X. et al. Drug-target interaction prediction: databases, web servers and computational models. Brief. Bioinform. 17, 696–712 (2016).

Brookhart, M. A., Stürmer, T., Glynn, R. J., Rassen, J. & Schneeweiss, S. Confounding control in healthcare database research: challenges and potential approaches. Med. Care 48, S114–S120 (2010).

Zhang, J. M., Kamath, G. M. & Tse, D. N. Valid post-clustering differential analysis for single-cell RNA-seq. Cell Syst. 9, 383–392.e6 (2019).

Gao, L. L., Bien, J. & Witten, D. Selective inference for hierarchical clustering. Preprint at arXiv https://arxiv.org/abs/2012.02936 (2020).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Kuhn, M. Building predictive models in R using the caret package. J. Stat. Softw. 28, 1–26 (2008).

Vidaki, A. et al. DNA methylation-based forensic age prediction using artificial neural networks and next generation sequencing. Forensic Sci. Int. Genet. 28, 225–236 (2017).

Kimura, R. et al. An epigenetic biomarker for adult high-functioning autism spectrum disorder. Sci. Rep. 9, 13662 (2019).

Levy, J. J. et al. MethylNet: an automated and modular deep learning approach for DNA methylation analysis. BMC Bioinformatics 21, 108 (2020).

Rauschert, S., Raubenheimer, K., Melton, P. E. & Huang, R. C. Machine learning and clinical epigenetics: a review of challenges for diagnosis and classification. Clin. Epigenetics 12, 51 (2020).

Capper, D. et al. DNA methylation-based classification of central nervous system tumours. Nature 555, 469–474 (2018).

Bahado-Singh, R. O. et al. Deep learning/artificial intelligence and blood-based DNA epigenomic prediction of cerebral palsy. Int. J. Mol. Sci. 20, 2075 (2019).

Mohandas, N. et al. Epigenome-wide analysis in newborn blood spots from monozygotic twins discordant for cerebral palsy reveals consistent regional differences in DNA methylation. Clin. Epigenetics 10, 25 (2018).

Crowgey, E. L., Marsh, A. G., Robinson, K. G., Yeager, S. K. & Akins, R. E. Epigenetic machine learning: utilizing DNA methylation patterns to predict spastic cerebral palsy. BMC Bioinformatics 19, 225 (2018).

Aref-Eshghi, E. et al. Genomic DNA methylation-derived algorithm enables accurate detection of malignant prostate tissues. Front. Oncol. 8, 100 (2018).

Luo, R. et al. Identifying CpG methylation signature as a promising biomarker for recurrence and immunotherapy in non-small-cell lung carcinoma. Aging 12, 14649–14676 (2020).

Wilhelm-Benartzi, C. S. et al. Review of processing and analysis methods for DNA methylation array data. Br. J. Cancer 109, 1394–1402 (2013).

Peters, T. J. et al. De novo identification of differentially methylated regions in the human genome. Epigenetics Chromatin 8, 6 (2015).

Rocke, D. M., Ideker, T., Troyanskaya, O., Quackenbush, J. & Dopazo, J. Papers on normalization, variable selection, classification or clustering of microarray data. Bioinformatics 25, 701–702 (2009).

Pulini, A. A., Kerr, W. T., Loo, S. K. & Lenartowicz, A. Classification accuracy of neuroimaging biomarkers in attention-deficit/hyperactivity disorder: effects of sample size and circular analysis. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 4, 108–120 (2019).

Poldrack, R. A., Huckins, G. & Varoquaux, G. Establishment of best practices for evidence for prediction: a review. JAMA Psychiatry 77, 534–540 (2020).

Ambroise, C. & McLachlan, G. J. Selection bias in gene extraction on the basis of microarray gene-expression data. Proc. Natl Acad. Sci. USA 99, 6562–6566 (2002). The authors present prediction of cancer outcome from expression of a small number of genes as an example of how supervised feature selection performed before cross-validation leads to performance overestimation.

van Eyk, C. L. et al. Analysis of 182 cerebral palsy transcriptomes points to dysregulation of trophic signalling pathways and overlap with autism. Transl. Psychiatry 8, 88 (2018).

Alakwaa, F. M., Chaudhary, K. & Garmire, L. X. Deep learning accurately predicts estrogen receptor status in breast cancer metabolomics data. J. Proteome Res. 17, 337–347 (2018).

Yuan, Y., Guo, L., Shen, L. & Liu, J. S. Predicting gene expression from sequence: a reexamination. PLoS Comput. Biol. 3, e243 (2007).

Urban, G., Torrisi, M., Magnan, C. N., Pollastri, G. & Baldi, P. Protein profiles: biases and protocols. Comput. Struct. Biotechnol. J. 18, 2281–2289 (2020). This study demonstrates how protein profiles cause leakage of information between the training and test sets, and hence performance overestimation, in the context of protein structure prediction.

Khalilia, M., Chakraborty, S. & Popescu, M. Predicting disease risks from highly imbalanced data using random forest. BMC Med. Inform. Decis. Mak. 11, 51 (2011).

Schubach, M., Re, M., Robinson, P. N. & Valentini, G. Imbalance-aware machine learning for predicting rare and common disease-associated non-coding variants. Sci. Rep. 7, 2959 (2017).

Japkowicz, N. & Stephen, S. The class imbalance problem: a systematic study. Intell. Data Anal. 6, 429–449 (2002).

Barandela, R., Sánchez, J. S., García, V. & Rangel, E. Strategies for learning in class imbalance problems. Pattern Recognit. 36, 849–851 (2003). This work explores the negative consequences of imbalanced data as well as several common strategies for mitigating this pitfall.

Batista, G. E. A. P. A., Prati, R. C. & Monard, M. C. A study of the behavior of several methods for balancing machine learning training data. SIGKDD Explor. Newsl. 6, 20–29 (2004).

Buda, M., Maki, A. & Mazurowski, M. A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 106, 249–259 (2018). This article explores performance measures and mitigation strategies for class imbalance specifically in the context of prediction with convolutional neural networks.

Cui, Y., Jia, M., Lin, T.-Y., Song, Y. & Belongie, S. Class-balanced loss based on effective number of samples. in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (IEEE, 2019).

Nguyen, H. M., Cooper, E. W. & Kamei, K. Borderline over-sampling for imbalanced data classification. Int. J. Knowl. Eng. Soft Data Paradig. 3, 4 (2011).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002).

He, H., Bai, Y., Garcia, E. A. & Li, S. in 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence) 1322–1328 (IEEE, 2008).

Lemaître, G., Nogueira, F. & Aridas, C. K. Imbalanced-learn: a Python toolbox to tackle the curse of imbalanced datasets in machine learning. J. Mach. Learn. Res. 18, 559–563 (2017).

Davis, J. & Goadrich, M. in Proc. 23rd International Conference on Machine Learning 233–240 (Association for Computing Machinery, 2006).

Peña-Castillo, L. et al. A critical assessment of Mus musculus gene function prediction using integrated genomic evidence. Genome Biol. 9, S2 (2008).

Kaler, A. S. & Purcell, L. C. Estimation of a significance threshold for genome-wide association studies. BMC Genomics 20, 618 (2019).

Grant, C. E., Bailey, T. L. & Noble, W. S. FIMO: scanning for occurrences of a given motif. Bioinformatics 27, 1017–1018 (2011).

VanderWeele, T. J. & Shpitser, I. On the definition of a confounder. Ann. Stat. 41, 196–220 (2013).

Efron, B. Prediction, estimation, and attribution. J. Am. Stat. Assoc. 115, 636–655 (2020).

Yu, B. & Kumbier, K. Veridical data science. Proc. Natl Acad. Sci. USA 117, 3920–3929 (2020).

Acknowledgements

The authors thank P. Baldi, M. Beer, A. Ben-Hur, J. Ernst, E. Eskin, G. Haliburton, H. Huang, S.-I. Lee, M. Libbrecht, J. Majewski, Q. Morris, S. Mostafavi, J.-P. Vert, W. Wang, B. Yu and M. Zitnik for recommending examples and for helpful suggestions on how to review this topic.

Author information

Contributions

S.W. and J.S. researched data for the article. All authors contributed substantially to discussion of the content, wrote the article and reviewed and/or edited the manuscript before submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information

Nature Reviews Genetics thanks A. Gitter, J. Gagneur and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Related links

Interactive notebooks of the pitfalls discussed in this Review: https://github.com/shwhalen/ml-pitfalls

Glossary

- Examples: Also known as ‘samples’ or ‘observations’. The primary data objects being manipulated by a machine learning system. They are the basic units being measured.

- Training set: Examples and associated outcomes that are used to fit a supervised machine learning model.

- Test set: Examples and associated outcomes that are used to evaluate model performance. Training and test sets are disjoint.

- Independent: The value of one example does not depend on the value of others.

- Identically distributed: Generated by the same underlying distribution, with a particular mean, variance and shape.

- Generalization error: A measure of how accurately a model predicts outcomes in data it has never seen before.

- Prediction set: A third set of examples whose associated outcomes are truly not known, where a fitted model is applied to make predictions. Also known as a prospective validation set.

- True negatives: Negatives whose labels are correctly predicted.

- Features: Properties of a given example, for example, the gene expression values associated with a gene or the sequence patterns associated with a genomic window. Also known as ‘covariates’.

- Outcome: Outcomes are what we want to predict in supervised learning, for example, the functional class assigned to a gene or the binary classification of whether a given genomic window contains a promoter. Categorical outcomes are often referred to as ‘labels’. In regression settings, the outcome is a real number.

- Ascertainment bias: Examples in a study are not representative of the general population.

- Adversarial learning: Machine learning techniques for improving model robustness to distributional differences, such as those caused by batch effects or other confounders.

- Positive: Positives are examples with the outcome of interest in a binary classifier.

- Negative: Negatives are examples with the alternative outcome in a binary classifier. In genomics, negatives often outnumber positives.

- Collider: A variable causally influenced by two variables, for example, both a feature and the outcome in predictive modelling.

- Clustering: Unsupervised learning, where there is no measured outcome, although the cluster assignment is an estimate of an unobserved label. The goal is to organize examples on the basis of pairwise similarities of their features, for example, into groups (‘clusters’) or a hierarchical tree.

- False negatives: Positives whose labels are incorrectly predicted as negative.

- True positives: Positives whose labels are correctly predicted.

- False positives: Negatives whose labels are incorrectly predicted as positive.
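The glossary's definitions of true and false positives and negatives, together with its note that negatives often outnumber positives in genomics, can be made concrete with a small worked example. This is a hypothetical sketch in plain Python: the helper names `confusion_counts` and `summarize` are illustrative rather than taken from the Review, and the label vectors are made up, not real data.

```python
def confusion_counts(y_true, y_pred):
    """Count (TP, FP, TN, FN) for binary labels, where 1 = positive, 0 = negative."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # positives predicted positive
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # negatives predicted positive
    tn = sum(t == 0 and p == 0 for t, p in pairs)  # negatives predicted negative
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # positives predicted negative
    return tp, fp, tn, fn


def summarize(y_true, y_pred):
    """Accuracy, precision and recall derived from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical imbalanced data: 10 positives, 990 negatives.
y_true = [1] * 10 + [0] * 990

# Classifier A predicts "negative" for everything.
all_negative = [0] * 1000

# Classifier B finds 8 of the 10 positives but also flags 40 negatives.
some_hits = [1] * 8 + [0] * 2 + [1] * 40 + [0] * 950

print(summarize(y_true, all_negative))  # accuracy 0.99, precision 0.0, recall 0.0
print(summarize(y_true, some_hits))     # accuracy 0.958, recall 0.8, precision ~0.17
```

The trivial all-negative classifier has the higher accuracy yet recovers no positives, which is why precision and recall (and precision–recall curves, as discussed by Davis and Goadrich) are usually more informative than accuracy when negatives dominate.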

About this article

Cite this article

Whalen, S., Schreiber, J., Noble, W.S. et al. Navigating the pitfalls of applying machine learning in genomics. Nat Rev Genet 23, 169–181 (2022). https://doi.org/10.1038/s41576-021-00434-9

DOI: https://doi.org/10.1038/s41576-021-00434-9
