Abstract

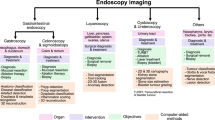

Modern endoscopy relies on digital technology, from high-resolution imaging sensors and displays to the electronics that connect configurable illumination and actuation systems for robotic articulation. In addition to enabling more effective diagnostic and therapeutic interventions, the digitization of the procedural toolset enables video capture of the internal human anatomy on an unprecedented scale. Interventional video data encapsulate functional and structural information about a patient’s anatomy, as well as events, activity and action logs relating to the surgical process. This detailed but difficult-to-interpret record of endoscopic procedures can be linked to preoperative and postoperative records or to patient imaging information. Rapid advances in artificial intelligence, especially in supervised deep learning, make it possible to use data from endoscopic procedures to develop computer-assisted interventions that can improve navigation during procedures, automate image interpretation and assist robotic tool manipulation. In this Perspective, we summarize state-of-the-art artificial intelligence for computer-assisted interventions in gastroenterology and surgery.

References

Darzi, A. & Munz, Y. The impact of minimally invasive surgical techniques. Annu. Rev. Med. 55, 223–237 (2004).

Clancy, N. T., Jones, G., Maier-Hein, L., Elson, D. S. & Stoyanov, D. Surgical spectral imaging. Med. Image Anal. 63, 101699 (2020).

Stoyanov, D. Surgical vision. Ann. Biomed. Eng. 40, 332–345 (2012).

Ahmad, O. F. et al. Artificial intelligence and computer-aided diagnosis in colonoscopy: current evidence and future directions. Lancet Gastroenterol. Hepatol. 4, 71–80 (2019).

Kaul, V., Enslin, S. & Gross, S. A. History of artificial intelligence in medicine. Gastrointest. Endosc. 92, 807–812 (2020).

Jin, Z. et al. Deep learning for gastroscopic images: computer-aided techniques for clinicians. Biomed. Eng. Online https://doi.org/10.1186/s12938-022-00979-8 (2022).

Maier-Hein, L. et al. Surgical data science-from concepts toward clinical translation. Med. Image Anal. 76, 102306 (2022).

Maier-Hein, L. et al. Surgical data science for next-generation interventions. Nat. Biomed. Eng. 1, 691–696 (2017).

Vercauteren, T., Unberath, M., Padoy, N. & Navab, N. CAI4CAI: the rise of contextual artificial intelligence in computer-assisted interventions. Proc. IEEE Inst. Electr. Electron. Eng. 108, 198–214 (2020).

Le Berre, C. et al. Application of artificial intelligence to gastroenterology and hepatology. Gastroenterology 158, 76–94 (2019).

Chadebecq, F., Vasconcelos, F., Mazomenos, E. & Stoyanov, D. Computer vision in the surgical operating room. Visc. Med. 36, 456–462 (2020).

Goodfellow, I.J. & Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Mori, Y. et al. Novel computer-aided diagnostic system for colorectal lesions by using endocytoscopy (with videos). Gastrointest. Endosc. 81, 621–629 (2015).

Bernal, J. et al. Comparative validation of polyp detection methods in video colonoscopy: results from the MICCAI 2015 endoscopic vision challenge. IEEE Trans. Med. Imaging 36, 1231–1249 (2017).

Singh, H. et al. The reduction in colorectal cancer mortality after colonoscopy varies by site of the cancer. Gastroenterology 139, 1128–1137 (2010).

Zhao, S. et al. Magnitude, risk factors, and factors associated with adenoma miss rate of tandem colonoscopy: a systematic review and meta-analysis. Gastroenterology 156, 1661–1674 (2019).

Castaneda, D., Popov, V. B., Verheyen, E., Wander, P. & Gross, S. A. New technologies improve adenoma detection rate, adenoma miss rate, and polyp detection rate: a systematic review and meta-analysis. Gastrointest. Endosc. 88, 209–222 (2018).

Sánchez-Peralta, L. F., Bote-Curiel, L., Picón, A., Sánchez-Margallo, F. M. & Pagador, J. B. Deep learning to find colorectal polyps in colonoscopy: a systematic literature review. Artif. Intell. Med. 108, 101823 (2020).

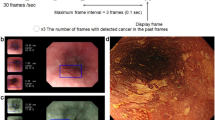

Wang, P. et al. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat. Biomed. Eng. 2, 741–748 (2018).

Areia, M. et al. Cost-effectiveness of artificial intelligence for screening colonoscopy: a modelling study. Lancet Digit. Health 4, 436–444 (2022).

Bernal, J., Sánchez, J. & Vilariño, F. Towards automatic polyp detection with a polyp appearance model. Pattern Recognit. 45, 3166–3182 (2012).

Vazquez, D. et al. A benchmark for endoluminal scene segmentation of colonoscopy images. J. Healthc. Eng. 2017, 1–9 (2017).

Yuan, Z. et al. Automatic polyp detection in colonoscopy videos. J. Med. Imaging 10133, 1–10 (2017).

Mo, X., Tao, K., Wang, Q. & Wang, G. An efficient approach for polyps detection in endoscopic videos based on faster R-CNN. Int. Conf. Pattern Recognit. 2018, 3929–3934 (2018).

Lee, J. Y. et al. Real-time detection of colon polyps during colonoscopy using deep learning: systematic validation with four independent datasets. Sci. Rep. 10, 8379 (2020).

Spadaccini, M. et al. Computer-aided detection versus advanced imaging for detection of colorectal neoplasia: a systematic review and network meta-analysis. Lancet Gastroenterol. Hepatol. 6, 794–802 (2021).

Hussein, M. et al. A new artificial intelligence system successfully detects and localises early neoplasia in Barrett’s esophagus by using convolutional neural networks. United European Gastroenterol. J. 10, 528–537 (2022).

Hou, W. et al. Early neoplasia identification in Barrett’s esophagus via attentive hierarchical aggregation and self-distillation. Med. Image Anal. 72, 102092 (2021).

Maier-Hein, L. et al. Why rankings of biomedical image analysis competitions should be interpreted with care. Nat. Commun. 9, 5217 (2018).

Wallace, M. B. et al. Impact of artificial intelligence on miss rate of colorectal neoplasia. Gastroenterology 163, 295–304 (2022).

Van Berkel, N. et al. Initial responses to false positives in AI-supported continuous interactions: a colonoscopy case study. ACM Trans. Interact. Intell. Syst. 12, 1–18 (2022).

Pannala, R. et al. Artificial intelligence in gastrointestinal endoscopy. Videogie 5, 598–613 (2020).

Singh, D. & Singh, B. Effective and efficient classification of gastrointestinal lesions: combining data preprocessing, feature weighting, and improved ant lion optimization. J. Ambient. Intell. Humaniz. Comput. 12, 8683–8698 (2021).

Mesejo, P. et al. Computer-aided classification of gastrointestinal lesions in regular colonoscopy. IEEE Trans. Med. Imaging 35, 2051–2063 (2016).

Byrne, M. F. et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut 68, 94–100 (2019).

Patel, K. et al. A comparative study on polyp classification using convolutional neural networks. PLoS ONE 15, 1–16 (2020).

Li, K. et al. Colonoscopy polyp detection and classification: dataset creation and comparative evaluations. PLoS ONE 16, 1–26 (2021).

Nogueira-Rodríguez, A. et al. Deep neural networks approaches for detecting and classifying colorectal polyps. Neurocomputing 423, 721–734 (2021).

Ozawa, T. Automated endoscopic detection and classification of colorectal polyps using convolutional neural networks. Ther. Adv. Gastroenterol. 13, 1–13 (2020).

Zorron Cheng Tao Pu, L. et al. Randomised controlled trial comparing modified Sano’s and narrow band imaging international colorectal endoscopic classifications for colorectal lesions. World J. Gastrointest. Endosc. 10, 210–218 (2018).

Zorron Cheng Tao Pu, L. et al. Computer-aided diagnosis for characterization of colorectal lesions: comprehensive software that includes differentiation of serrated lesions. Gastrointest. Endosc. 92, 891–899 (2020).

Takeda, K. et al. Accuracy of diagnosing invasive colorectal cancer using computer-aided endocytoscopy. Endoscopy 49, 798–802 (2017).

Ito, N. et al. Endoscopic diagnostic support system for cT1b colorectal cancer using deep learning. Oncology 96, 44–50 (2019).

Ahmad, O. F. et al. Performance of artificial intelligence for detection of subtle and advanced colorectal neoplasia. Dig. Endosc. 34, 862–869 (2022).

Endoscopic Classification Review Group. Update on the Paris classification of superficial neoplastic lesions in the digestive tract. Endoscopy 37, 570–578 (2005).

Kominami, Y. et al. Computer-aided diagnosis of colorectal polyp histology by using a real-time image recognition system and narrow-band imaging magnifying colonoscopy. Gastrointest. Endosc. 83, 643–649 (2016).

Jin, E. H. et al. Improved accuracy in optical diagnosis of colorectal polyps using convolutional neural networks with visual explanations. Gastroenterology 158, 2169–2179 (2020).

Cybernet. EndoBRAIN®-EYE, AI-equipped colorectal endoscopy diagnosis support software part 2; acquisition of approval under Pharmaceutical and Medical Device act (PMD act). Cybernet https://www.cybernet.jp/english/documents/pdf/news/press/2020/20200129.pdf (2020).

Münzer, B., Schoeffmann, K. & Böszörmenyi, L. Content-based processing and analysis of endoscopic images and videos: a survey. Multimed. Tools Appl. 77, 1323–1362 (2018).

Wu, H. et al. Semantic SLAM based on deep learning in endocavity environment. Symmetry 14, 614 (2022).

Freedman, D. et al. Detecting deficient coverage in colonoscopies. IEEE Trans. Med. Imaging 39, 3451–3462 (2020).

Hartley, R. & Zisserman, A. Multiple View Geometry in Computer Vision 2nd edn (Cambridge University Press, 2003).

Mur-Artal, R., Montiel, J. M. M. & Tardos, J. D. ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Trans. Robot. 31, 1147–1163 (2015).

Mahmoud, N. et al. in Computer-Assisted and Robotic Endoscopy CARE 2016 Vol. 10170, 72–83 (Springer, 2017).

Mahmoud, N. et al. SLAM based quasi dense reconstruction for minimally invasive surgery scenes. IEEE Int. Conf. Robot. Autom. Workshop C4 2017, 1–5 (2017).

Mahmoud, N. et al. Live tracking and dense reconstruction for handheld monocular endoscopy. IEEE Trans. Med. Imaging 38, 79–89 (2019).

Docea, R. et al. Simultaneous localisation and mapping for laparoscopic liver navigation: a comparative evaluation study. J. Med. Imaging 11598, 62–76 (2021).

Parashar, S., Pizarro, D. & Bartoli, A. Isometric non-rigid shape-from motion with Riemannian geometry solved in linear time. IEEE Trans. Pattern Anal. Mach. Intell. 40, 2442–2454 (2018).

Lamarca, J., Parashar, S., Bartoli, A. & Montiel, J. M. M. DefSLAM: tracking and mapping of deforming scenes from monocular sequences. IEEE Trans. Robot. 37, 291–303 (2020).

Rodriguez, J. J. G., Lamarca, J., Morlana, J., Tardos, J. D. & Montiel, J. M. M. Sd-defslam: semi-direct monocular slam for deformable and intracorporeal scenes. IEEE Int. Conf. Robot. Autom. 2021, 5170–5177 (2021).

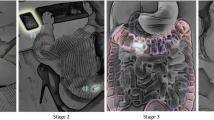

Sengupta, A. & Bartoli, A. Colonoscopic 3D reconstruction by tubular non-rigid structure-from-motion. Int. J. Comput. Assist. Radiol. Surg. 16, 1237–1241 (2021).

Lin, J. et al. Dual-modality endoscopic probe for tissue surface shape reconstruction and hyperspectral imaging enabled by deep neural networks. Med. Image Anal. 48, 162–176 (2018).

Ciuti, G. et al. Frontiers of robotic colonoscopy: a comprehensive review of robotic colonoscopes and technologies. J. Clin. Med. 9, 1648 (2020).

Ma, R. et al. RNNSLAM: reconstructing the 3D colon to visualize missing regions during a colonoscopy. Med. Image Anal. 72, 102100 (2021).

Recasens, D., Lamarca, J., Facil, J. M., Montiel, J. M. M. & Civera, J. Endo-depth-and-motion: reconstruction and tracking in endoscopic videos using depth networks and photometric constraints. IEEE Robot. Autom. Lett. 6, 7225–7232 (2021).

Zhang, S., Zhao, L., Huang, S., Ye, M. & Hao, Q. A template-based 3D reconstruction of colon structures and textures from stereo colonoscopic images. IEEE Trans. Med. Robot. Bionics. 3, 85–95 (2021).

Rau, A., Bhattarai, B., Agapito, L. & Stoyanov, D. Bimodal camera pose prediction for endoscopy. Preprint at https://doi.org/10.48550/arXiv.2204.04968 (2022).

Takiyama, H. et al. Automatic anatomical classification of esophagogastroduodenoscopy images using deep convolutional neural networks. Nat. Sci. Rep. 8, 7497 (2018).

Igarashi, S., Sasaki, Y., Mikami, T., Sakuraba, H. & Fukuda, S. Anatomical classification of upper gastrointestinal organs under various image capture conditions using AlexNet. Comput. Biol. Med. 124, 103950 (2020).

Beg, S. et al. Quality standards in upper gastrointestinal endoscopy: a position statement of the British Society of Gastroenterology (BSG) and Association of Upper Gastrointestinal Surgeons of Great Britain and Ireland (AUGIS). Gut 66, 1886–1899 (2017).

Rey, J. F. & Lambert, R. The ESGE Quality Assurance Committee: ESGE recommendations for quality control in gastrointestinal endoscopy: guidelines for image documentation in upper and lower GI endoscopy. Endoscopy 33, 901–903 (2001).

Yao, K. The endoscopic diagnosis of early gastric cancer. Ann. Gastroenterol. 26, 11–22 (2013).

He, Q. et al. Deep learning based anatomical site classification for upper gastrointestinal endoscopy. Int. J. Comput. Assist. Radiol. Surg. 15, 1085–1094 (2020).

Riegler, M. et al. Multimedia for medicine: the medico task at mediaeval 2017. CEUR Workshop Proceedings http://ceur-ws.org/Vol-1984/Mediaeval_2017_paper_3.pdf (2017).

Pogorelov, K. et al. Medico multimedia task at mediaeval 2018. CEUR Workshop Proceedings http://ceur-ws.org/Vol-2283/MediaEval_18_paper_6.pdf (2018).

Hicks, S. A. et al. ACM multimedia biomedia 2020 grand challenge overview. ACM Int. Conf. Multimed. 2020, 4655–4658 (2020).

Jha, D. et al. A comprehensive analysis of classification methods in gastrointestinal endoscopy imaging. Med. Image Anal. 70, 102007 (2021).

Pogorelov, K. et al. KVASIR: a multi-class image dataset for computer aided gastrointestinal disease detection. ACM Int. Conf. Multimed. 2017, 164–169 (2017).

Pogorelov, K. Nerthus: a bowel preparation quality video dataset. ACM Int. Conf. Multimed. 2017, 170–174 (2017).

Luo, Z., Wang, X., Xu, Z., Li, X. & Li, J. Adaptive ensemble: solution to the biomedia ACM MM grandchallenge 2019. ACM Int. Conf. Multimed. 2019, 2583–2587 (2019).

Saito, H. et al. Automatic anatomical classification of colonoscopic images using deep convolutional neural networks. Gastroenterol. Rep. 9, 226–233 (2021).

Sestini, L., Rosa, B., De Momi, E., Ferrigno, G. & Padoy, N. A kinematic bottleneck approach for pose regression of flexible surgical instruments directly from images. IEEE Robot. Autom. Lett. 6, 2938–2945 (2021).

Roß, T. et al. Comparative validation of multi-instance instrument segmentation in endoscopy: results of the ROBUST-MIS 2019 challenge. Med. Image Anal. 70, 101920 (2021).

Gonzalez, C., Bravo-Sanchez, L. & Arbelaez, P. ISINet: an instance-based approach for surgical instrument segmentation. Med. Image Comput. Comput. Assist. Interv. 12263, 595–605 (2020).

Xiaowen, K. et al. Accurate instance segmentation of surgical instruments in robotic surgery: model refinement and cross-dataset evaluation. Int. J. Comput. Assist. Radiol. Surg. 19, 1607–1614 (2021).

Colleoni, E., Edwards, P. & Stoyanov, D. Synthetic and real inputs for tool segmentation in robotic surgery. Med. Image Comput. Comput. Assist. Interv. 12263, 700–710 (2020).

Colleoni, E. & Stoyanov, D. Robotic instrument segmentation with image-to-image translation. IEEE Robot. Autom. Lett. 6, 935–942 (2021).

Pfeiffer, M. et al. Generating large labeled data sets for laparoscopic image processing tasks using unpaired image-to-image translation. Med. Image Comput. Comput. Assist. Interv. 11768, 119–127 (2019).

Sahu, M., Stromsdorfer, R., Mukhopadhyay, A. & Zachow, S. Endo-Sim2Real: consistency learning-based domain adaptation for instrument segmentation. Med. Image Comput. Comput. Assist. Interv. 12263, 784–794 (2020).

Zhang, Z., Rosa, B. & Nageotte, F. Surgical tool segmentation using generative adversarial networks with unpaired training data. IEEE Robot. Autom. Lett. 6, 6266–6273 (2021).

Zhao, Z. et al. One to many: adaptive instrument segmentation via meta learning and dynamic online adaptation in robotic surgical video. IEEE Int. Conf. Robot. Autom. 2021, 13553–13559 (2021).

Du, X. et al. Articulated multi-instrument 2-D pose estimation using fully convolutional networks. IEEE Trans. Med. Imaging 37, 1276–1287 (2018).

Kayhan, M. et al. Deep attention based semi-supervised 2D-pose estimation for surgical instruments. Pattern Recognit., ICPR Int. Workshops Chall. 2021, 444–460 (2021).

Rodrigues, M., Mayo, M. & Patros, P. Surgical tool datasets for machine learning research: a survey. Int. J. Comput. Vis. 130, 2222–2248 (2022).

Allan, M., Ourselin, S., Hawkes, D. J., Kelly, J. D. & Stoyanov, D. 3-D pose estimation of articulated instruments in robotic minimally invasive surgery. IEEE Trans. Med. Imaging 37, 1204–1213 (2018).

Hasan, K., Calvet, L., Rabbani, N. & Bartoli, A. Detection, segmentation, and 3D pose estimation of surgical tools using convolutional neural networks and algebraic geometry. Med. Image Anal. 70, 101994 (2021).

Ahmidi, N. et al. A dataset and benchmarks for segmentation and recognition of gestures in robotic surgery. IEEE Trans. Biomed. Eng. 64, 2025–2041 (2017).

Van Amsterdam, B., Clarkson, M. J. & Stoyanov, D. Gesture recognition in robotic surgery: a review. IEEE Trans. Biomed. Eng. 68, 2021–2035 (2021).

Dergachyova, O., Bouget, D., Huaulmé, A., Morandi, X. & Jannin, P. Automatic data-driven real-time segmentation and recognition of surgical workflow. Int. J. Comput. Assist. Radiol. Surg. 11, 1081–1090 (2016).

Lalys, F. & Jannin, P. Surgical process modelling: a review. Int. J. Comput. Assist. Radiol. Surg. 9, 495–511 (2014).

Oleari, E. et al. Enhancing surgical process modeling for artificial intelligence development in robotics: the saras case study for minimally invasive procedures. Int. Symp. Med. Inf. Commun. Technol. 2019, 1–6 (2019).

Gurcan, I. & Van Nguyen, H. Surgical activities recognition using multi-scale recurrent networks. IEEE Int. Conf. Acoust. Speech Signal. Proc. 2019, 2887–2891 (2019).

Funke, I. et al. Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 14, 1217–1225 (2019).

Qin, Y., Allan, M., Burdick, J. W. & Azizian, M. Autonomous hierarchical surgical state estimation during robot-assisted surgery through deep neural networks. IEEE Robot. Autom. Lett. 6, 6220–6227 (2021).

Park, J. & Park, C. H. Recognition and prediction of surgical actions based on online robotic tool detection. IEEE Robot. Autom. Lett. 6, 2365–2372 (2021).

Long, Y. et al. Relational graph learning on visual and kinematics embeddings for accurate gesture recognition in robotic surgery. IEEE Int. Conf. Robot. Autom. 2021, 13346–13353 (2021).

Van Amsterdam, B. et al. Gesture recognition in robotic surgery with multimodal attention. IEEE Trans. Med. Imaging 41, 1677–1687 (2022).

Stauder, R. et al. The TUM LapChole dataset for the M2CAI 2016 workflow challenge. Preprint at https://doi.org/10.48550/arXiv.1610.09278 (2016).

Twinanda, A.P. et al. Single-and multi-task architectures for surgical workflow challenge at M2CAI 2016. Preprint at https://doi.org/10.48550/arXiv.1610.08844 (2016).

Twinanda, A. P. et al. EndoNet: a deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 36, 86–97 (2017).

Jin, Y. et al. Multi-task recurrent convolutional network with correlation loss for surgical video analysis. Med. Image Anal. 59, 101572 (2020).

Bawa, V. S. et al. ESAD: endoscopic surgeon action detection dataset. Preprint at https://doi.org/10.48550/arXiv.2104.03178 (2021).

Bawa, V. S. et al. The SARAS endoscopic surgeon action detection (ESAD) dataset: challenges and methods. Preprint at https://doi.org/10.48550/arXiv.2104.03178 (2021).

Kitaguchi, D. et al. Automated laparoscopic colorectal surgery workflow recognition using artificial intelligence: Experimental research. Int. J. Surg. 79, 88–94 (2020).

Ban, Y. et al. SUPR-GAN: surgical prediction GAN for event anticipation in laparoscopic and robotic surgery. IEEE Robot. Autom. Lett. 7, 5741–5748 (2022).

Nwoye, C. I. et al. Rendezvous: attention mechanisms for the recognition of surgical action triplets in endoscopic videos. Med. Image Anal. 78, 102433 (2022).

Nwoye, C. I. et al. CholecTriplet2021: a benchmark challenge for surgical action triplet recognition. Preprint at https://doi.org/10.48550/arXiv.2204.04746 (2022).

Gibaud, B. Toward a standard ontology of surgical process models. Int. J. Comput. Assist. Radiol. Surg. 13, 1397–1408 (2018).

Katić, D. et al. LapOntoSPM: an ontology for laparoscopic surgeries and its application to surgical phase recognition. Int. J. Comput. Assist. Radiol. Surg. 10, 1427–1434 (2015).

Meireles, O. R. et al. SAGES Video Annotation for AI Working Groups. SAGES consensus recommendations on an annotation framework for surgical video. Surg. Endosc. 35, 4918–4929 (2021).

Mascagni, P. & Padoy, N. OR black box and surgical control tower: recording and streaming data and analytics to improve surgical care. J. Visc. Surg. 158, 18–25 (2021).

Funke, I., Mees, S. T., Weitz, J. & Speidel, S. Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 14, 1217–1225 (2019).

Wang, T., Wang, Y. & Li, M. Towards accurate and interpretable surgical skill assessment: a video-based method incorporating recognized surgical gestures and skill levels. Med. Image Comput. Comput. Assist. Interv. 12263, 668–678 (2020).

Collins, J. W. et al. Ethical implications of AI in robotic surgical training: a Delphi consensus statement. Eur. Urol. Focus. 8, 613–622 (2022).

Lavanchy, J. L. et al. Automation of surgical skill assessment using a three-stage machine learning algorithm. Sci. Rep. 11, 5197 (2021).

Liu, D. et al. Towards unified surgical skill assessment. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2021, 9522–9531 (2021).

Vedula, S. S. et al. Artificial intelligence methods and artificial intelligence-enabled metrics for surgical education: a multidisciplinary consensus. J. Am. Coll. Surg. 234, 1181–1192 (2022).

Zhu, Y., Xu, Y., Chen, W., Zhao, T. & Zheng, S. A CNN-based cleanliness evaluation for bowel preparation in colonoscopy. Int. Cong. Image Signal. Process., BioMed. Eng. Inf. 2019, 1–5 (2019).

Hutchinson, K., Li, Z., Cantrell, L. A., Schenkman, N. S. & Alemzadeh, H. Analysis of executional and procedural errors in dry-lab robotic surgery experiments. Int. J. Med. Robot. Comput. Assist. Surg. 18, 1–15 (2022).

Zia, A. et al. Surgical visual domain adaptation: results from the MICCAI 2020 SurgVisDom Challenge. Preprint at https://doi.org/10.48550/arXiv.2102.13644 (2021).

Shademan, A. et al. Supervised autonomous robotic soft tissue surgery. Sci. Trans. Med. 8, 1–8 (2016).

Saeidi, H. et al. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci. Robot. 7, 1–13 (2022).

Dehghani, H. & Kim, P. C. W. Robotic automation for surgery. Digit. Surg. 2021, 203–213 (2021).

Oberlin, J., Buharin, V. E., Dehghani, H. & Kim, P. C. W. Intelligence and autonomy in future robotic surgery. Robot. Surg. 2021, 183–195 (2021).

Kinross, J. M. et al. Next-generation robotics in gastrointestinal surgery. Nat. Rev. Gastroenterol. Hepatol. 17, 430–440 (2020).

Kassahun, Y. et al. Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int. J. Comput. Assist. Radiol. Surg. 11, 553–568 (2016).

Haidegger, T. Autonomy for surgical robots: concepts and paradigms. IEEE Trans. Med. Robot. Bionics 1, 65–76 (2019).

Attanasio, A., Scaglioni, B., De Momi, E., Fiorini, P. & Valdastri, P. Autonomy in surgical robotics. Annu. Rev. Control. Robot. Auton. Syst. 4, 651–679 (2021).

Houseago, C., Bloesch, M. & Leutenegger, S. KO-Fusion: dense visual SLAM with tightly-coupled kinematic and odometric tracking. Int. Conf. Robot. Autom. 2019, 4054–4060 (2019).

Li, Y. et al. SuPer: a surgical perception framework for endoscopic tissue manipulation with surgical robotics. IEEE Robot. Autom. Lett. 5, 2294–2301 (2020).

Varier, V. M. et al. Collaborative suturing: a reinforcement learning approach to automate hand-off task in suturing for surgical robots. IEEE Int. Conf. Robot. Hum. Interact. Commun. 2020, 1380–1386 (2020).

Nguyen, T., Nguyen, N. D., Bello, F. & Nahavandi, S. A new tensioning method using deep reinforcement learning for surgical pattern cutting. IEEE Int. Conf. Ind. Technol. 2019, 1339–1344 (2019).

Attanasio, A. et al. Autonomous tissue retraction in robotic assisted minimally invasive surgery – a feasibility study. IEEE Robot. Autom. Lett. 5, 6528–6535 (2020).

Gruijthuijsen, C. et al. Robotic endoscope control via autonomous instrument tracking. Front. Robot. AI 9, 832208 (2022).

Shin, C. Autonomous tissue manipulation via surgical robot using learning based model predictive control. Int. Conf. Robot. Autom. 2019, 3875–3881 (2019).

Omisore, O. M. et al. A review on flexible robotic systems for minimally invasive surgery. IEEE Trans. Syst. Man. Cybern. 52, 631–644 (2022).

Martin, J. W. et al. Enabling the future of colonoscopy with intelligent and autonomous magnetic manipulation. Nat. Mach. Intell. 2, 595–606 (2020).

Huang, H. E. et al. Autonomous navigation of a magnetic colonoscope using force sensing and a heuristic search algorithm. Sci. Rep. 11, 16491 (2021).

Loftus, T. J. et al. Intelligent, autonomous machines in surgery. J. Surg. Res. 253, 92–99 (2020).

Hung, A. J., Chen, J. & Gill, I. S. Automated performance metrics and machine learning algorithms to measure surgeon performance and anticipate clinical outcomes in robotic surgery. JAMA Surg. 153, 770–771 (2018).

Ahmad, O. F., Stoyanov, D. & Lovat, L. B. Barriers and pitfalls for artificial intelligence in gastroenterology: Ethical and regulatory issues. Tech. Innov. Gastrointest. Endosc. 22, 80–84 (2020).

Muehlematter, U. J., Daniore, P. & Vokinger, K. N. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015-20): a comparative analysis. Lancet Digit. Health 3, 195–203 (2021).

Taghiakbari, M., Mori, Y. & von Renteln, D. Artificial intelligence-assisted colonoscopy: a review of current state of practice and research. World J. Gastroenterol. 27, 8103–8122 (2021).

Vulpoi, R.-A. et al. Artificial intelligence in digestive endoscopy — where are we and where are we going? Diagnostics 12, 927 (2022).

Mori, Y., Bretthauer, M. & Kalager, M. Hopes and hypes for artificial intelligence in colorectal cancer screening. Gastroenterol 161, 774–777 (2021).

Aisu, N. et al. Regulatory-approved deep learning/machine learning-based medical devices in Japan as of 2020: a systematic review. PLoS Digit. Health 2022, 1–12 (2022).

Mori, Y., Neumann, H., Misawa, M., Kudo, S. & Bretthauer, M. Artificial intelligence in colonoscopy-Now on the market. What’s next? J. Gastroenterol. Hepatol. 36, 7–11 (2021).

Parikh, R. B. & Helmchen, L. A. Paying for artificial intelligence in medicine. NPJ Digit. Med. 5, 63 (2022).

Europa. Advanced Technologies for Industry – Product Watch. Artificial Intelligence-based software as a medical device. Europa https://ati.ec.europa.eu/sites/default/files/2020-07/ATI%20-%20Artificial%20Intelligence-based%20software%20as%20a%20medical%20device.pdf (2020).

MedTech Europe. Proposed Guiding Principles for Reimbursement of Digital Health Products and Solutions. MedTech Europe https://www.medtecheurope.org/wp-content/uploads/2019/04/30042019_eHSGSubGroupReimbursement.pdf (2019).

Zhou, X. Y. et al. Application of artificial intelligence in surgery. Front. Med. 14, 417–430 (2020).

Bayoudh, K. et al. A survey on deep multimodal learning for computer vision: advances, trends, applications, and datasets. Vis. Comput. 38, 2939–2970 (2022).

Luo, X., Mori, K. & Peters, T. M. Advanced endoscopic navigation: surgical big data, methodology, and applications. Annu. Rev. Biomed. Eng. 20, 221–251 (2018).

The MONAI Consortium. Project MONAI. MONAI https://docs.monai.io/en/stable/ (2020).

Rudiman, R. Minimally invasive gastrointestinal surgery: from past to the future. Ann. Med. Surg. 71, 102922 (2021).

Bogdanova, R., Boulanger, P. & Zheng, B. Depth perception of surgeons in minimally invasive surgery. Surg. Innov. 23, 515–524 (2016).

Acknowledgements

The authors are supported by the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (WEISS) at University College London (203145Z/16/Z), EPSRC (EP/P012841/1, EP/P027938/1 and EP/R004080/1) and the H2020 FET (GA 863146). D.S. is supported by a Royal Academy of Engineering Chair in Emerging Technologies (CiET1819\2\36) and an EPSRC Early Career Research Fellowship (EP/P012841/1).

Author information

Contributions

D.S. and F.C. researched data for the article and wrote the article. All authors contributed substantially to discussion of the content, and reviewed and/or edited the manuscript before submission.

Ethics declarations

Competing interests

D.S. is part of Digital Surgery from Medtronic and a shareholder in Odin Vision. L.B.L. is a shareholder in Odin Vision. F.C. declares no competing interests.

Peer review

Peer review information

Nature Reviews Gastroenterology & Hepatology thanks Pietro Valdastri and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chadebecq, F., Lovat, L.B. & Stoyanov, D. Artificial intelligence and automation in endoscopy and surgery. Nat Rev Gastroenterol Hepatol 20, 171–182 (2023). https://doi.org/10.1038/s41575-022-00701-y

DOI: https://doi.org/10.1038/s41575-022-00701-y