Abstract

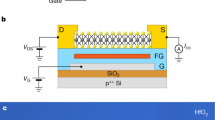

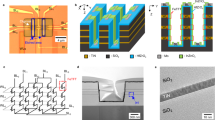

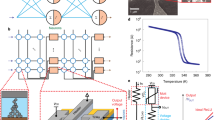

The growing computational demand in artificial intelligence calls for hardware solutions that are capable of in situ machine learning, where both training and inference are performed by edge computation. This not only requires extremely energy-efficient architecture (such as in-memory computing) but also memory hardware with tunable properties to simultaneously meet the demand for training and inference. Here we report a duplex device structure based on a ferroelectric field-effect transistor and an atomically thin MoS₂ channel, and realize a universal in-memory computing architecture for in situ learning. By exploiting the tunability of the ferroelectric energy landscape, the duplex building block demonstrates an overall excellent performance in endurance (>10¹³), retention (>10 years), speed (4.8 ns) and energy consumption (22.7 fJ bit⁻¹ μm⁻²). We implemented a hardware neural network using arrays of two-transistors-one-duplex ferroelectric field-effect transistor cells and achieved 99.86% accuracy in a nonlinear localization task with in situ trained weights. Simulations show that the proposed device architecture could achieve the same level of performance as a graphics processing unit under notably improved energy efficiency. Our device core can be combined with silicon circuitry through three-dimensional heterogeneous integration to give a hardware solution towards general edge intelligence.

Data availability

Source data are provided with this paper.

Acknowledgements

This work is supported by the National Key R&D Program of China (grant no. 2022YFB4400100 (X.W.), 2021YFA0715600 (H.Q.) and 2021YFA1202903 (W.L.)); the National Natural Science Foundation of China (grant no. T2221003 (X.W.), 61927808 (X.W.), 61734003 (X.W.), 61851401 (X.W.), 91964202 (Z.Y.), 62204124 (Z.Y.) and 51861145202 (X.W.)); the Leading-Edge Technology Program of Jiangsu Natural Science Foundation (grant no. BK20202005 (X.W.)); the Strategic Priority Research Program of the Chinese Academy of Sciences (grant no. XDB30000000 (X.W.)); the Research Grant Council of Hong Kong (no. 15205619 (Y.C.)); Key Laboratory of Advanced Photonic and Electronic Materials; Collaborative Innovation Center of Solid-State Lighting and Energy-Saving Electronics; and the Fundamental Research Funds for the Central Universities, China. In addition, we thank the NJU Micro-Fabrication and Integration Center for support during device fabrication and measurement, and thank the Beijing Advanced Innovation Center for Integrated Circuits for support on device modelling and simulations.

Author information

Authors and Affiliations

Contributions

Z.Y. and X.W. conceived and supervised the project. H.N. fabricated the device and TIIO array with assistance from Z.Y., H. Wen, W.M., W.L., Yating Li and Yuankun Li. H.N. performed the electrical measurements with assistance from Z.Y. and H. Wen. Y.M. and H.Q. performed the projections and simulations of the 22-nm-node FeFET. L.L., W.W. and T.L. performed the MoS₂ growth. Q.Z., Yuankun Li, Ying Zhou, Yue Zhou and J.C. contributed to the simulations of monocular depth estimation, with guidance from B.G., Y.C. and H. Wu. H.N., Z.Y. and X.W. co-wrote the manuscript with input from the other authors. All the authors contributed to discussions.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Nanotechnology thanks Suraj Cheema and Rehan Kapadia for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Three operational modes in TIIO cell by tuning the CFE/CDE ratio.

Extended Data Fig. 2 The AFE/ADE ratio dependence of performance for different channel lengths.

a, The dependence of memory window, endurance and retention on AFE/ADE for the device presented in Fig. 2, with Lch = 3 μm. b–d, Optical images (b), SEM images (c) and transfer characteristics (d) of the scaled device with a channel length of 85 nm. e, The extrapolated 10-year data retention at 85 °C of I-type FeFETs (AFE/ADE = 0.053) with three scaled channel lengths (480 nm, 180 nm and 85 nm), showing an on/off ratio of over 5 decades at 10 years. The off currents stayed below the measurement precision and showed no tendency to rise, so we treated them as approximately constant (~2 pA). f, The endurance of T-type FeFETs (AFE/ADE = 0.67) with three scaled channel lengths.

Extended Data Fig. 3 Endurance and high temperature retention characteristics of FeFET.

a, Schematic of the measurement set-up for endurance. b–e, Endurance performance of FeFETs with different AFE/ADE. The program and erase voltages are ±8 V (2.86 MV/cm), respectively, with a 20 ns period. f, The extrapolated 10-year data retention of an I-type FeFET (AFE/ADE = 0.053) at 85 °C and 125 °C, showing an on/off ratio of over 6 decades at 10 years. The off currents stayed below the measurement precision and showed no tendency to rise, so we treated them as approximately constant. g, 128-level potentiation/depression curves for a T-type device (AFE/ADE = 0.43) after 0 (pristine), 5 × 10⁷ and 1 × 10⁸ cycles, showing great uniformity. α(P) and α(D) stand for the fitted nonlinearity factors for potentiation and depression, respectively. h, 16-level (chosen from 128 states) data retention of an I-type device (AFE/ADE = 0.053) after 1 × 10⁵ endurance cycles under an 85 °C accelerated test.
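The 10-year retention figures in this caption come from extrapolating measured currents forward in time. The caption does not state the fitting procedure, so the following is only a minimal sketch under the assumption of a straight-line fit of log10(current) against log10(time); the function names, the sample data and the use of a constant ~2 pA off current (the value quoted in the Extended Data Fig. 2 caption) are illustrative, not the authors' method.

```python
import math

TEN_YEARS_S = 10 * 365 * 24 * 3600  # ten years expressed in seconds


def fit_log_log(times_s, currents_a):
    """Least-squares line through (log10 t, log10 I); returns (slope, intercept)."""
    xs = [math.log10(t) for t in times_s]
    ys = [math.log10(i) for i in currents_a]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def extrapolate_current(times_s, currents_a, target_s=TEN_YEARS_S):
    """Evaluate the fitted log-log line at target_s (assumed extrapolation model)."""
    slope, intercept = fit_log_log(times_s, currents_a)
    return 10 ** (intercept + slope * math.log10(target_s))


# Hypothetical on-state retention data: a slow power-law decay.
times = [1.0, 10.0, 100.0, 1000.0]
on_currents = [1e-6 * t ** -0.02 for t in times]
off_current = 2e-12  # treated as constant, as the caption describes

on_10y = extrapolate_current(times, on_currents)
decades = math.log10(on_10y / off_current)  # on/off ratio in decades at 10 years
```

With real data, `times` and `on_currents` would be the measured retention points, and `decades` corresponds to the on/off ratio at 10 years quoted in the caption.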

Extended Data Fig. 4 Ultra-fast switching of the FeFETs.

a, Schematic of the test of the T-type duplex FeFET. b, Schematic of the test of the I-type duplex FeFET. c, The waveform of the fastest output from the PXI-5433 pulse generator, measured by oscilloscope. The amplitude is 10 V and the pulse width is 4.8 ns. We applied a positive pulse and a negative pulse to the gate of the FeFET, with pulse widths from 10 μs down to the fastest 4.8 ns. The waveforms of the T-type FeFET are plotted in d and those of the I-type FeFET are plotted in e. For the shortest 4.8 ns pulse, there are still 2–3 decades of on/off ratio. The on/off ratio increases to 7–8 decades when the pulse width reaches 10 μs. f–j, The read-after-write characterization. f, Waveforms of the gate voltage for the read-after-write test. The amplitude of the programming pulses is 9 V. g,h, The pulsed memory window for the T-type FeFET (AFE/ADE = 0.67, AFE = 64 μm²). g, Pulsed transfer characteristics of the T-type device measured with a fast sweep time of 20 μs, with read-after-write delay times ranging from 1 s to 20 ns (the minimum time resolution of the instrument without reading current). h, The memory window as a function of read-after-write delay time, extracted from g. i,j, The pulsed memory window for the I-type FeFET (AFE/ADE = 0.053, AFE = 8 μm²). i, Pulsed transfer characteristics of the I-type device measured with a fast sweep time of 20 μs, with read-after-write delay times ranging from 1 s to 20 ns. j, The memory window as a function of read-after-write delay time, extracted from i. Note that the 20 μs sweep time used here is nearly the minimum time resolution of the instrument for reading current. Additionally, to avoid any destructive read on the FeFET, the sweep voltages started from 0 V and stopped (at ±6 V for T-type and ±10 V for I-type) when the FeFET was fully switched. Finally, the ID currents chosen for VTH extraction are plotted as gray horizontal lines. Because of the reduced precision in the 20 μs fast sweep, the ID currents for VTH extraction were set to 200 nA and 500 nA, respectively.

Extended Data Fig. 5 The switching energy of FeFET.

a, Transient gate voltage and current of a FeFET with AFE/ADE = 0.43. b, The gate charge by integration of gate current. An interval of one period is marked with a gray block. c, Benchmark of switching energy and speed with other memory technologies. SSD: solid-state drive; HDD: hard-disc drive. The switching energy is calculated as: \(E_{\mathrm{switch}} = \frac{\mathrm{Charge} \times \mathrm{Voltage}}{2} = \frac{Q_{\mathrm{MAX}} \cdot V}{2}\). The references for the data in c are summarized in Supplementary Table 5.
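The switching-energy calculation described in this caption (integrate the gate current to get charge, then take half the product of maximum charge and voltage) can be sketched as follows. This is an illustration only: the trapezoidal integration, the function names and the triangular test pulse are assumptions, and the sample numbers are not measured data.

```python
def cumulative_charge(times_s, currents_a):
    """Running trapezoidal integral of gate current over time, in coulombs."""
    charge = [0.0]
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        charge.append(charge[-1] + 0.5 * (currents_a[i] + currents_a[i - 1]) * dt)
    return charge


def switching_energy(times_s, currents_a, voltage_v):
    """E_switch = Q_MAX * V / 2, with Q_MAX taken as the peak integrated charge."""
    q_max = max(abs(q) for q in cumulative_charge(times_s, currents_a))
    return 0.5 * q_max * voltage_v


# Hypothetical triangular gate-current pulse: 1 uA peak, 20 ns wide, 8 V drive.
times = [0.0, 10e-9, 20e-9]
currents = [0.0, 1e-6, 0.0]

q_trace = cumulative_charge(times, currents)
energy_j = switching_energy(times, currents, 8.0)
```

Dividing `energy_j` by the device area would give an energy density in the units used in the abstract; the area normalization is left out here because the caption does not spell it out.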

Extended Data Fig. 6 The multi-bit storage capability of T-type synapse.

a, Scheme of the voltage sequence used to measure the multi-bit capability. b, The 128-state (7-bit) output characteristics of the FeFET, showing a good linear relationship. c, The symmetric and linear multi-state properties of the FeFET. The potentiation (red) and depression (blue) curves show nonlinearity factors of 0.876 (LTP) and 0.837 (LTD), respectively, and a symmetry factor of 0.039 (see fitting methods in Supplementary Note 1). d, All-analog computing. The ring datasets were normalized to two channels of voltages (Input-X and Input-Y), multiplied by different weights (conductances G1 and G2) and output as real-time currents (Output-X and Output-Y) in an all-analog manner. e–g, The evolution of the weights of layer 1 (e), the weights of layer 2 (f) and the bias (g) during the training process.
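The all-analog multiplication in panel d follows from Ohm's law: each input voltage applied across a programmed conductance yields a current equal to their product. The sketch below assumes ideal, linear conductances; the function names and numerical values are hypothetical, and the column summation is a general property of crossbar-style arrays (Kirchhoff's current law) rather than something this caption states.

```python
def analog_multiply(voltages_v, conductances_s):
    """Per-channel Ohm's-law product I = G * V, in amperes."""
    return [g * v for g, v in zip(conductances_s, voltages_v)]


def column_current(voltages_v, conductances_s):
    """Sum of channel currents on a shared line (assumed Kirchhoff summation)."""
    return sum(analog_multiply(voltages_v, conductances_s))


# Hypothetical normalized inputs (Input-X, Input-Y) and weights (G1, G2).
inputs_v = [0.10, 0.05]
weights_s = [2e-6, 4e-6]

outputs_a = analog_multiply(inputs_v, weights_s)  # Output-X, Output-Y
total_a = column_current(inputs_v, weights_s)
```

A real device would deviate from this ideal picture through nonlinearity and variation, which is why the caption reports nonlinearity and symmetry factors for the conductance states.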

Extended Data Fig. 7 The data flow of training and the experimental setup.

a, The data flow of training. The blocks in blue are accomplished in software, whereas the orange ones are realized in the duplex FeFET array. In the data flow, the processed data are plotted in green, the weights of the ANN in yellow and the biases in gray. All the data are vectorized as matrices, the sizes of which are highlighted. There are 4 interfaces for data transmission between the software and hardware. b, The set of instruments assembled for semi-automatic measurement of the FeFET array. c,d, Zoomed photos of the modified components for array testing in vacuum (c) and of the customized probe cards and a chip under test (d). More details are included in Supplementary Note 4.

Extended Data Fig. 8 Simulation of non-linear localization task.

a, The evolution of the weights for X of the 1st layer. b, The evolution of the weights for Y of the 1st layer. c, The evolution of the weights of the 2nd layer. d, The evolution of the bias. e, The cost and f, the accuracy curves of the entire training process. Classification accuracy first reached 100% at the 12th epoch. g, Original 300-point dataset for the training process; 210 points were used for training and the remaining 90 for testing. Red points are labeled as “inside”, whereas blue points are labeled as “outside”. h, The final classification result at the 16th epoch, drawn as a heatmap. The training data and test data are drawn with white and black borders, respectively.
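To make the dataset description in panel g concrete, the sketch below builds a 300-point two-class set and splits it 210/90. Only the point counts and the two label names come from the caption. The ring geometry, the radii, the uniform sampling, the random seed and the function names are all hypothetical stand-ins, since the actual dataset is provided separately as source data.

```python
import math
import random


def make_ring_dataset(n_points=300, inner=0.4, outer=0.8, seed=0):
    """Sample points in [-1, 1]^2 and label them by an assumed ring region."""
    rng = random.Random(seed)
    points = []
    for _ in range(n_points):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        radius = math.hypot(x, y)
        label = "inside" if inner <= radius <= outer else "outside"
        points.append((x, y, label))
    return points


def split_dataset(points, n_train=210, seed=0):
    """Shuffle a copy of the points and split into training and test subsets."""
    rng = random.Random(seed)
    shuffled = list(points)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


dataset = make_ring_dataset()
train_set, test_set = split_dataset(dataset)
```

The x and y coordinates here play the role of the two normalized input channels fed to the network.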

Extended Data Fig. 9 Statistics of 93 FeFETs in the array.

a, Transfer curves of 93 FeFETs (yield = 86%, 93 out of 108) at VDS = 0.1 V. These FeFETs have a median current of 3.6 μA and a coefficient of variation of only 0.19 (standard deviation divided by the median value), showing excellent uniformity. b, Cumulative plot of the program and erase voltages, with symmetric median values of +3.9 V and −3.9 V and small variations. The memory window is strictly symmetric about 0 V.
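The two array statistics quoted in panel a can be reproduced with a few lines of standard-library code. The yield figures (93 working devices out of 108) and the definition of the variation coefficient (standard deviation over median) come from the caption. The sample currents are hypothetical, and the choice of the sample standard deviation is an assumption, as the caption does not say which estimator was used.

```python
import statistics


def yield_fraction(working_devices, total_devices):
    """Fraction of devices in the array that function."""
    return working_devices / total_devices


def variation_coefficient(values):
    """Standard deviation divided by the median, as defined in the caption."""
    return statistics.stdev(values) / statistics.median(values)


array_yield = yield_fraction(93, 108)

# Hypothetical on-currents in microamperes, for illustration only.
sample_currents_ua = [3.0, 3.6, 4.2]
sample_cv = variation_coefficient(sample_currents_ua)
```

Rounded to a whole percentage, `array_yield` matches the 86% stated in the caption.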

Extended Data Fig. 10 Results of depth estimation on TIIO architecture.

a, Accuracy with 3 levels of threshold. b, Absolute relative error. c, Root mean square error. d, Log mean absolute error. e, Typical depth estimation of a subset of the KITTI dataset. The compared zones are marked with white blocks (for ground truth and TIIO results) and yellow blocks (for the original scene). More details are included in the Methods.
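The four metrics named in panels a–d are standard in monocular depth estimation. The sketch below uses their conventional definitions and should be read as an assumption: the caption does not give formulas, so the 1.25, 1.25² and 1.25³ thresholds and the base-10 logarithm follow common practice in the depth-estimation literature rather than anything stated here. The function names and sample depths are hypothetical.

```python
import math


def threshold_accuracy(pred, truth, threshold):
    """Fraction of pixels whose ratio max(p/t, t/p) is below the threshold."""
    hits = sum(1 for p, t in zip(pred, truth) if max(p / t, t / p) < threshold)
    return hits / len(pred)


def abs_rel_error(pred, truth):
    """Mean of |p - t| / t."""
    return sum(abs(p - t) / t for p, t in zip(pred, truth)) / len(pred)


def rmse(pred, truth):
    """Root mean square error."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred))


def log10_mae(pred, truth):
    """Mean of |log10 p - log10 t| (assumed form of the log mean absolute error)."""
    return sum(abs(math.log10(p) - math.log10(t)) for p, t in zip(pred, truth)) / len(pred)


# Hypothetical depths in metres for three pixels.
truth_m = [10.0, 20.0, 40.0]
pred_m = [10.0, 25.0, 20.0]

accuracies = [threshold_accuracy(pred_m, truth_m, 1.25 ** k) for k in (1, 2, 3)]
rel = abs_rel_error(pred_m, truth_m)
rms = rmse(pred_m, truth_m)
log_err = log10_mae(pred_m, truth_m)
```

Higher threshold accuracy is better, while the three error metrics are better when lower.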

Supplementary information

Supplementary Information

Supplementary Notes 1–6, Figs. 1–8, Tables 1–15 and references.

Training process for the 2D location task using a TIIO matrix. In every frame of this video, all of the important parameters and results of each epoch were plotted. The top row shows the weights for X of layer 1, weights for Y of layer 1, weights of layer 2 and bias. The bottom row shows the training and testing cost, training and testing accuracy, dataset, and the output heat map of the neural network. Once the weights and bias were calculated, the output heat map was subsequently determined, from which we could know how the network classifies a data point. A 100% accuracy case is that all the ‘outside’ points are coloured in blue, whereas the ‘inside’ points are in red (same as the labels in the original dataset). This case was realized in epoch 17.

Source data

Source Data Fig. 2

Statistical source data.

Source Data Fig. 3

Statistical source data.

Source Data Fig. 4

Statistical source data.

Source Data Extended Data Fig. 2

Statistical source data.

Source Data Extended Data Fig. 3

Statistical source data.

Source Data Extended Data Fig. 4

Statistical source data.

Source Data Extended Data Fig. 5

Statistical source data.

Source Data Extended Data Fig. 6

Statistical source data.

Source Data Extended Data Fig. 8

Statistical source data.

Source Data Extended Data Fig. 9

Statistical source data.

Source Data Extended Data Fig. 10

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ning, H., Yu, Z., Zhang, Q. et al. An in-memory computing architecture based on a duplex two-dimensional material structure for in situ machine learning. Nat. Nanotechnol. 18, 493–500 (2023). https://doi.org/10.1038/s41565-023-01343-0

DOI: https://doi.org/10.1038/s41565-023-01343-0
