Abstract

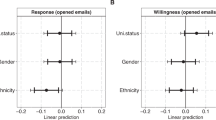

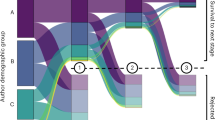

Academics and companies increasingly draw on large datasets to understand the social world, and name-based demographic ascription tools are widely used to impute information that is often missing from these datasets. These approaches have drawn criticism on ethical, empirical and theoretical grounds. Using a survey of all authors listed on articles in sociology, economics and communication journals in Web of Science between 2015 and 2020, we compared self-identified demographics with name-based imputations of gender and race/ethnicity for 19,924 scholars across four gender ascription tools and four race/ethnicity ascription tools. We found substantial inequalities in how these tools misgender authors and misrecognize their race/ethnicity, with erroneous ascriptions distributed unevenly across other demographic traits. Because of the empirical and ethical consequences of these errors, scholars need to be cautious when using demographic imputation. We recommend five principles for the responsible use of name-based demographic inference.
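At its simplest, the comparison summarized above reduces to error rates computed within groups. The Python sketch below is a minimal illustration, not the authors' code (which is in the OSF repository listed under Code availability). The records, the group labels ("group A", "group B") and the helper `error_rate_by` are all hypothetical, and none of the values reflect the study's data or findings. The sketch assumes that each respondent's self-identified gender and the gender one tool ascribed to their name have already been paired.

```python
from collections import defaultdict

# Hypothetical survey records for ONE gender ascription tool.
# Each tuple: (self-identified gender, a second self-identified trait,
#              gender ascribed from the name). Illustrative values only.
records = [
    ("woman", "group A", "man"),
    ("woman", "group A", "woman"),
    ("woman", "group B", "woman"),
    ("woman", "group B", "woman"),
    ("man", "group A", "woman"),
    ("man", "group B", "man"),
]


def error_rate_by(records, group_of):
    """Share of records whose ascribed gender differs from the
    self-identified gender, broken down by the group that
    `group_of(record)` returns."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        self_gender, _, ascribed = record
        group = group_of(record)
        totals[group] += 1
        if ascribed != self_gender:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Misgendering rate within each self-identified gender...
by_gender = error_rate_by(records, lambda r: r[0])
# ...and across a second demographic trait.
by_trait = error_rate_by(records, lambda r: r[1])
print(by_gender)
print(by_trait)
```

Repeating this breakdown for each tool, or comparing race/ethnicity ascriptions instead of gender, yields a tool-by-group comparison of the kind the abstract describes. The sketch treats every record as classified. Whether names a tool cannot classify should count as errors or be dropped is a separate design choice that it leaves out.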

Data availability

The Web of Science data are available from Clarivate Analytics, but restrictions apply to the availability of these data, which were used under licence for the current study and so are not publicly available. The survey data that support the findings of this study are not publicly available because they contain information that could compromise research participant privacy or consent. Non-identifying aggregate data are available upon reasonable request to the corresponding author. Reasonable requests should come from researchers with an active institutional affiliation, be for research purposes only and have ethical approval from their institutional review board or appropriate oversight body. Requests would be subject to a data sharing agreement. The authors commit to maintaining the raw data associated with this study for a minimum of 5 years. Source data for all figures are available with the supplementary materials in an Open Science Framework repository: https://doi.org/10.17605/OSF.IO/AVZPK.

Code availability

While the results we present are simple statistics, the code to generate our results and figures is available with the supplementary materials in an Open Science Framework repository at https://doi.org/10.17605/OSF.IO/AVZPK.

Acknowledgements

We thank M. Thompson-Brusstar for his insights. G. Azzara, G. Cash, J. A. Galvan, K. Lelapinyokul, S. Martinez and B. Rose provided excellent research assistance. We received no funding specifically for this work. Financial support for research assistants was in part provided by a College of Arts and Sciences Dean’s Grant to M.M.K. from Santa Clara University. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the paper.

Author information

Contributions

J.W.L. designed and executed the analyses. J.W.L. and M.M.K. wrote the paper. All authors contributed to designing the survey and revising the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Human Behaviour thanks Thomas Billard and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–5, Table 1 and Appendix A (survey questions).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lockhart, J.W., King, M.M. & Munsch, C. Name-based demographic inference and the unequal distribution of misrecognition. Nat Hum Behav 7, 1084–1095 (2023). https://doi.org/10.1038/s41562-023-01587-9

DOI: https://doi.org/10.1038/s41562-023-01587-9