Abstract

Medical artificial intelligence is cost-effective and scalable and often outperforms human providers, yet people are reluctant to use it. We show that resistance to the utilization of medical artificial intelligence is driven by both the subjective difficulty of understanding algorithms (the perception that they are a ‘black box’) and by an illusory subjective understanding of human medical decision-making. In five pre-registered experiments (1–3B: N = 2,699), we find that people exhibit an illusory understanding of human medical decision-making (study 1). This leads people to believe they better understand decisions made by human than algorithmic healthcare providers (studies 2A,B), which makes them more reluctant to utilize algorithmic than human providers (studies 3A,B). Fortunately, brief interventions that increase subjective understanding of algorithmic decision processes increase willingness to utilize algorithmic healthcare providers (studies 3A,B). A sixth study on Google Ads for an algorithmic skin cancer detection app finds that the effectiveness of such interventions generalizes to field settings (study 4: N = 14,013).

Main

Algorithms are rapidly diffusing through healthcare systems1, providing support for outpatient services (for example, telehealth) and supply to match demand for inpatient care services2,3,4. Algorithmic-based healthcare services (medical ‘artificial intelligence’ (AI)) are cost-effective and scalable and provide expert-level accuracy in applications ranging from the detection of skin cancer5 and emergency department triage6,7 to diagnoses of COVID-19 from chest X-rays8. Adoption of medical AI is critical for providing affordable, high-quality healthcare in both the developed and developing world9. However, large-scale adoption of AI hinges not only on adoption by healthcare systems and providers but also on patient utilization, and patients are reluctant to utilize medical AI10,11,12. Patients view medical AI as unable to meet their unique needs10 and as performing more poorly than comparable human providers12 and feel it is harder to hold AI providers accountable for mistakes than comparable human providers11.

We propose that another important barrier to adoption of medical AI is its perceived opacity, that it is a ‘black box’13,14. People do not subjectively understand how algorithms make medical decisions, and this impairs their utilization. Moreover, we suggest that this barrier does not rest solely on the perceived opacity of AI. It is also grounded in an illusory understanding of the medical decisions made by human providers. We theorize that people erroneously believe they better understand the decision-making of human than AI healthcare providers, and this erroneous belief contributes to algorithm aversion in healthcare utilization (greater reluctance to utilize healthcare delivered by AI providers than by comparable human providers).

Our theory is grounded in a reality (objective knowledge: what people actually know) and an illusion (subjective knowledge: what people believe they know). Decision processes used by algorithms are perceived to be opaque by patients, because they are often actually opaque to medical professionals and even their creators, who cannot explain them15,16. In other words, subjective and objective knowledge of algorithmic decision processes are similarly poor. We argue that decisions made by human providers appear more transparent, but this perception is an illusion; subjective knowledge of human decision processes is greater than objective knowledge. The perceived transparency of human decision-making stems from a belief that introspection provides direct access into the psychological processes by which people make decisions17. But people actually lack access to their own associative machinery18,19, and rely on heuristics to understand the decision-making of other people20. Human decision-making is often as much a black box as decisions made by algorithms.

We suggest that these coupled effects make people aware of their limited understanding of medical decisions made by algorithmic providers, but also lead them to overestimate their understanding of (similarly opaque) decisions made by human providers. Indeed, we find that people claim greater subjective understanding for medical decisions made by human than AI providers (people feel that they know more), but they exhibit similar objective understanding of decisions made by both providers (people actually know as little about both decision processes). We propose that, in turn, poorer subjective understanding of decisions made by AI than human healthcare providers underlies reluctance to utilize medical AI. Subjective understanding plays a critical role in the adoption of innovations, from new policies to consumer products, and facilitates adoption even when people have little objective understanding of their benefits or how innovations work21,22.

We tested our predictions in a set of five pre-registered online experiments with nationally representative and convenience samples (studies 1, 2A,B and 3A,B: N = 2,699), and a sixth pre-registered online field study on Google Ads (study 4: N = 14,013). We find that subjective understanding drives healthcare provider utilization. Moreover, we find that greater subjective understanding of medical decisions made by human than AI healthcare providers contributes to algorithm aversion, but it can be remediated with cost-effective interventions that increase utilization of algorithmic providers without reducing the utilization of human providers. All experiments were approved for use with human subjects by institutional review boards. All conditions, measures and exclusions are reported; data and pre-registrations are available at https://osf.io/taqp8/.

Results

Study 1

Our theory proposes that the greater subjective understanding people claim for medical decisions made by human than algorithmic healthcare providers is due to illusory understanding of decisions made by human providers. Making people explain a medical decision made by a human or algorithmic provider should then reduce subjective understanding of that decision more when the provider is human.

We recruited a nationally representative online sample of 297 US residents (mean age 45.37 years, 53% female) from Lucid. We used a 2 (provider: human, algorithm) × 2 (rating order: pre-intervention, post-intervention) mixed design. Participants were randomly assigned to one of the two provider conditions between subjects, and ratings of subjective understanding were repeated within subjects.

We first described triage of a potentially cancerous skin lesion with visual inspection and referred to the healthcare provider as a “doctor” or “algorithm” depending on provider condition, for example, “A doctor [algorithm] will examine the scans of your skin to identify cancerous skin lesions.” If risk was assessed to be high, the provider would refer the patient to a dermatologist to determine the appropriate course of action (see Supplementary Appendix 1 for introductory stimuli used in all studies).

Next, all participants provided a first rating of their subjective understanding of the provider’s decision-making with a measure adapted from relevant prior research22,23 (“To what extent do you understand how a doctor [algorithm] examines the scans of your skin to identify cancerous skin lesions?” with 1 for not at all and 7 for completely). To test the degree to which this rating was illusory, participants then generated a mechanistic explanation of the provider’s decision process (see Supplementary Appendix 2 for instructions). Similar interventions have been successfully implemented to shatter the illusion of understanding in other domains23. After completing the intervention, participants provided a second rating of their subjective understanding of the provider’s decision process. Greater reductions from pre-intervention to post-intervention ratings indicate a larger illusion of understanding.

To test whether participants exhibited a greater illusion of explanatory depth for the decision processes used by human than algorithmic providers, we regressed subjective understanding on provider (0 for algorithm, 1 for doctor), rating order (0 for pre-intervention, 1 for post-intervention) and their interaction. The analyses revealed the predicted significant interaction (ΔH − ΔA = −0.40, z = −2.61, P = 0.009; Fig. 1). Participants claimed greater subjective understanding of human than of algorithmic decision processes (pre-intervention: β = 1.08, z = 5.02, P < 0.001; post-intervention: β = 0.69, z = 3.19, P = 0.001). More importantly, the intervention significantly reduced reported subjective understanding of decisions made by human providers (Mpre = 5.08 versus Mpost = 4.52, ΔH = −0.56, z = −5.27, P < 0.001), but not of decisions made by algorithmic providers (Mpre = 3.99 versus Mpost = 3.83, ΔA = −0.16, z = −1.47, P = 0.14). These results hold when including all control variables in the regression (Supplementary Appendix 3).
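
The interaction contrast reported above is the difference between the two pre-to-post changes. As a minimal arithmetic sketch (using only the cell means reported in the text; the variable names are illustrative and not taken from the authors' Stata files), the contrast can be recovered as follows:

```python
# Cell means of subjective understanding reported for study 1 (7-point scale).
means = {
    "human": {"pre": 5.08, "post": 4.52},
    "algorithm": {"pre": 3.99, "post": 3.83},
}

# Pre-to-post change within each provider condition (negative = drop in understanding).
delta = {provider: round(m["post"] - m["pre"], 2) for provider, m in means.items()}

# Interaction contrast: how much larger the drop is for human providers.
interaction = round(delta["human"] - delta["algorithm"], 2)

print(delta, interaction)
```

A more negative change for human providers than for algorithmic providers is what the text interprets as a larger illusion of understanding.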

Studies 2A,B

In studies 2A,B, we directly test our theory against an obvious alternative explanation, that greater subjective understanding for human than algorithmic healthcare providers is due to differences in objective understanding. We predicted that people claim greater subjective understanding of decisions made by human than algorithmic providers, but possess a similar objective understanding of decisions made by human and algorithmic providers.

We recruited nationally representative samples of US residents (2A: N = 400, Mage = 45.36, 52% female; 2B: N = 403, Mage = 45.16, 52% female) from Lucid. Participants were randomly assigned to one of four conditions in a 2 (provider: human, algorithm) × 2 (understanding type: subjective, objective) between-subject design.

In the same skin cancer detection context as study 1, we manipulated whether the provider was a “primary care physician” or “machine learning algorithm”. Next, we measured either objective or subjective understanding of that medical decision.

In objective understanding assessment conditions, we measured participants’ actual understanding of the process by which providers identify cancerous skin lesions. To do this, we developed a quiz in consultation with experts in the relevant medical domain (preliminary visual inspection of moles): a team of dermatologists at a medical school in the Netherlands, and a team of developers of a popular skin cancer detection application in Europe. We identified at least three objective differences in the approach used by primary care physicians versus machine learning algorithms to perform a visual inspection. First, primary care physicians generally consider a predetermined set of features such as the lesion’s asymmetry, border, colour and diameter24, whereas machine learning algorithms do not need to extract these visual features. They use raw pixels to determine visual similarity between the lesion and other lesions5. Second, primary care physicians generally use a decision tree in which mole features are considered separately and often sequentially25,26, whereas machine learning algorithms consider all features simultaneously. Third, primary care physicians make a binary decision, assigning the combination of visual symptoms to a low-risk or a high-risk category, whereas machine learning algorithms compute a more granular probability of malignancy5, although medical AI services may communicate a dichotomized low/high risk result to patients.

Building on these three differences, we created a three-item multiple-choice test intended to assess objective understanding. Each test item had one correct answer for human providers, one (different) correct answer for machine learning algorithms and one incorrect distractor answer (Cronbach’s α = 0.65 in the human condition and α = 0.67 in the algorithm condition). We scored objective understanding by summing the correct answers; thus, scores ranged from 0 to 3 (algorithm condition: M = 1.33, s.e. = 0.09; human condition: M = 1.34, s.e. = 0.09).
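
The scoring rule can be sketched as follows. This is an illustration only: the item names and option labels are hypothetical shorthand for the three differences described above, not the wording of the actual quiz, which is in the supplementary materials.

```python
# Hypothetical answer key: one correct option per item for each provider condition.
# The labels paraphrase the three differences in the text; they are NOT the real quiz options.
ANSWER_KEY = {
    "human": {
        "criteria": "predetermined_features",
        "process": "sequential_decision_tree",
        "output": "binary_risk_category",
    },
    "algorithm": {
        "criteria": "raw_pixels",
        "process": "all_features_simultaneously",
        "output": "probability_of_malignancy",
    },
}


def objective_score(provider: str, responses: dict) -> int:
    """Sum of correct answers (0 to 3) for the provider condition a participant saw."""
    key = ANSWER_KEY[provider]
    return sum(responses.get(item) == correct for item, correct in key.items())


# A participant in the algorithm condition who answers one item with the
# option that is correct only for human providers scores 2 out of 3.
example = {
    "criteria": "raw_pixels",
    "process": "sequential_decision_tree",
    "output": "probability_of_malignancy",
}
example_score = objective_score("algorithm", example)
print(example_score)
```

Because the same option is correct in one condition and incorrect in the other, the score reflects understanding of the specific provider rather than general test-taking skill.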

In subjective understanding assessment conditions in study 2A, participants reported their subjective understanding of the process by which a primary care physician (machine learning algorithm) identifies cancerous skin lesions on a scale similar to that used in study 1: “To what extent do you understand how a doctor [algorithm] examines the scans of your skin to identify cancerous skin lesions?” scored on a seven-point scale with endpoints 1 for not at all and 7 for completely understand.

In subjective understanding conditions in study 2B, we used a measure of subjective understanding more similar to the measure of objective understanding, a three-item binary choice measure using more concrete descriptions of each facet of the decision process, in which participants reported whether (1) or not (0) they subjectively understood the criteria, process and output of the decision process (Supplementary Appendix 4). We scored subjective understanding in study 2B by summing points for all three items; scores ranged from 0 to 3.

We transformed raw measures of subjective and objective understanding into separate z scores (Fig. 2). We then compared them by regressing the standardized understanding scores on provider (0 for algorithm, 1 for human), understanding type (0 for subjective, 1 for objective) and their interaction. Participants claimed greater subjective understanding of medical decisions made by human than algorithmic providers (2A: β = −0.82, t = −6.01, P < 0.001; 2B: β = −0.46, t = −3.33, P = 0.001), whereas their objective understanding did not differ by provider (2A: β = 0.02, t = 0.11, P = 0.91; 2B: β = 0.11, t = 0.79, P = 0.43), creating the predicted provider × understanding interaction (2A: β = −0.80, t = −4.19, P < 0.001; 2B: β = −0.35, t = −1.78, P = 0.08). Note that, in study 2B, excluding the participants who failed the manipulation check increased the interaction coefficient to β = −0.70, t = −2.99, P = 0.003 (Supplementary Appendix 3). Results from both studies 2A and 2B held when including all control variables in the regressions (Supplementary Appendix 3).
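
The logic of this analysis can be sketched with toy numbers. The data below are invented for illustration, and the use of the population standard deviation is an assumption (the text does not say which was used). In a 2 × 2 between-subject design with dummy-coded factors, the interaction coefficient from ordinary least squares equals the difference between the two provider gaps in cell means, which is what the sketch computes.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize one measure across all participants who completed it."""
    mu, sd = mean(values), pstdev(values)  # population SD: an assumption of this sketch
    return [(v - mu) / sd for v in values]


# Invented toy data: subjective ratings (1-7) and objective quiz scores (0-3).
raw = {
    "subjective": {"human": [6, 5, 6, 5], "algorithm": [3, 4, 3, 4]},
    "objective": {"human": [1, 2, 1, 2], "algorithm": [1, 2, 2, 1]},
}

gap = {}
for measure, cells in raw.items():
    # Standardize within each understanding type so the two scales are comparable.
    pooled = z_scores(cells["human"] + cells["algorithm"])
    n_human = len(cells["human"])
    gap[measure] = mean(pooled[:n_human]) - mean(pooled[n_human:])

# Provider x understanding-type interaction (objective coded 1, subjective coded 0).
interaction = gap["objective"] - gap["subjective"]
print(gap, interaction)
```

In the toy data the human-minus-algorithm gap is large for subjective understanding and zero for objective understanding, so the interaction is negative.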

Taken together with the results of study 1, medical decisions made by both human and algorithmic providers appear to objectively be a ‘black box’. However, people are more aware of their limited understanding of medical decisions made by algorithmic than human providers.

Studies 3A,B

Next, we examined whether algorithm aversion in healthcare utilization would be reduced by interventions that successfully reduced differences in the subjective understanding of medical decisions made by human and algorithmic providers. As two main decision processes are used to make mole malignancy risk assessments, and as human and algorithmic providers are each likely to favour one of these two decision processes, we again relied on a team of dermatological experts to develop two different interventions.

In study 3A, the intervention reduced differences in subjective understanding by explaining how all providers examine mole features (for example, asymmetry and colour) to make a malignancy risk assessment. This decision process better encapsulates how a human provider is likely to assess risk, although it is not inaccurate with respect to an algorithmic provider. In study 3B, the intervention reduced differences in subjective understanding by explaining how all providers make a malignancy risk assessment, that is, by examining the visual similarity between a target mole and other moles known to be malignant. This decision process better encapsulates how an algorithmic provider is likely to assess risk, although it is not inaccurate with respect to a human provider.

We recruited 1,599 US residents (3A: N = 801, Mage = 36.9 years, 50.0% female; 3B: N = 798, Mage = 45.3 years, 51.1% female) from Amazon Mechanical Turk (3A) and Lucid (3B). In both studies, participants were randomly assigned to one of four conditions in a 2 (provider: human, algorithm) × 2 (intervention: control, intervention) between-subject design.

We used the same skin cancer detection context as in studies 1 and 2A,B and manipulated whether the provider was a human doctor or an algorithm (Supplementary Appendix 1). Then, participants in the control condition reported their subjective understanding and healthcare utilization intentions for that provider. Subjective understanding was measured in study 3A with the item used in study 2A. In study 3B, it was measured with the item used in study 1. Healthcare utilization intentions were measured in study 3A with the item “How likely would you be to utilize a healthcare service that relies on a doctor [algorithm] to identify cancerous skin lesions?” scored on a seven-point scale with endpoints of 1 for not at all likely and 7 for very likely. In study 3B, they were measured with the item “How likely would you be to utilize a healthcare service that relies on a primary care physician [machine learning algorithm] to identify cancerous skin lesions?” scored on the same seven-point scale.

Participants in the intervention condition first rated their subjective understanding, and then read supplementary information that described how doctors [algorithms] diagnose skin cancer based on photographs of moles, in a single diagram (Fig. 3). Next, they reported their subjective understanding of the medical decision again. Finally, they reported their healthcare utilization intentions.

Fig. 3. a,b, Interventions in study 3A (a) and 3B (b) from the algorithm condition. In the human provider conditions, we used the same visuals and replaced “algorithm” with “doctor” (study 3A) or “machine learning algorithm” with “primary care physician” (study 3B). Mole images are in the public domain, source: National Cancer Institute (https://visualsonline.cancer.gov).

We first examined the effectiveness of the intervention for each kind of provider. It increased subjective understanding of the decision made by algorithmic providers (3A: Δ = 1.42, t = 12.51, P < 0.001; 3B: Δ = 0.56, t = 6.21, P < 0.001) and human providers (3A: Δ = 1.04, t = 11.08, P < 0.001; 3B: Δ = 1.39, t = 11.85, P < 0.001). Second, we compared the extent to which the intervention reduced differences in subjective understanding across providers by comparing ratings in the control condition versus post-intervention subjective understanding ratings in the intervention condition. To this end, we regressed subjective understanding ratings on provider (0 for algorithm, 1 for human), intervention (0 for control versus 1 for intervention) and their interaction. We found a significant provider type × intervention interaction (3A: β = −0.47, t = −2.60, P = 0.01; 3B: β = −1.02, t = −4.57, P < 0.001) (Fig. 4a,c). The difference in subjective understanding of the decision made by the human and algorithmic provider was smaller in the intervention condition (3A: β = −0.22, t = −1.74, P = 0.08; 3B: β = −0.08, t = −0.52, P = 0.60) than in the control condition (3A: β = −0.70, t = −5.41, P < 0.001; 3B: β = −1.10, t = −6.97, P < 0.001).

Fig. 4. a–d, Explanations reduce the difference in subjective understanding between algorithmic and human decision-making in study 3A (a) and 3B (c), with scale endpoints marked as 1 for not at all and 7 for completely understand, which increases utilization intentions for the algorithmic provider in study 3A (b) and 3B (d), with scale endpoints marked with 1 for not at all likely and 7 for very likely. N = 801 and N = 798 in studies 3A and 3B, respectively. Error bars indicate ±1 s.e.m.

We next tested the impact of the intervention on algorithm aversion in healthcare utilization. We regressed utilization intentions on provider type, intervention condition and their interaction. As predicted, this revealed a significant provider type × intervention interaction (3A: β = −0.49, t = −2.70, P = 0.007; 3B: β = −0.55, t = −2.31, P = 0.02) (Fig. 4b,d). Algorithm aversion in healthcare utilization (greater reluctance to utilize algorithmic than human providers) was smaller in the intervention condition (3A: β = −0.58, t = −4.50, P < 0.001; 3B: β = −0.01, t = −0.05, P = 0.96) than in the control condition (3A: β = −1.08, t = −8.32, P < 0.001; 3B: β = −0.57, t = −3.31, P = 0.001). In other words, the intervention increased utilization intentions to a greater extent for algorithmic providers (3A: β = 0.83, t = 6.46, P < 0.001; 3B: β = 0.70, t = 4.09, P < 0.001) than for human providers (3A: β = 0.34, t = 2.66, P = 0.008; 3B: β = 0.14, t = 0.82, P = 0.41).

Finally, we tested our full process account by examining whether (i) subjective understanding mediated the influence of healthcare provider on utilization intentions and (ii) whether the increase in subjective understanding due to the intervention reduced algorithm aversion. We estimated a moderated mediation model of healthcare utilization with healthcare provider as predictor, intervention condition as moderator and subjective understanding as mediator with 5,000 bootstrap samples27. Consistent with our prediction, the indirect effect of healthcare provider on utilization intentions through subjective understanding was significantly stronger (3A: Δ = 0.20, 95% CI [0.05, 0.37]; 3B: Δ = 0.60, 95% CI [0.35, 0.89]) in the control condition (3A: β = 0.29, 95% CI [0.16, 0.45]; 3B: β = 0.65, 95% CI [0.45, 0.86]) where differences in subjective understanding were larger, than in the intervention condition (3A: β = 0.09, 95% CI [0.02, 0.17]; 3B: β = 0.09, 95% CI [−0.12, 0.21]). These results hold when including all control variables in the regression (Supplementary Appendix 3). We acknowledge the limitations of this type of mediation analysis28 and invite future research to replicate this finding with alternative manipulations, designs and measures.
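
To make the structure of such an analysis concrete, the following is a minimal sketch of a first-stage moderated mediation with a percentile bootstrap. It is not the authors' procedure: the data are simulated, the variable names (x for provider, w for intervention, m for subjective understanding, y for utilization intentions) are illustrative, the exact model specification is an assumption, and 1,000 resamples are used instead of 5,000 to keep the example fast.

```python
import random
from statistics import mean


def a_path(rows, w):
    """Provider effect on the mediator within moderator level w (difference in cell means)."""
    m1 = [r["m"] for r in rows if r["w"] == w and r["x"] == 1]
    m0 = [r["m"] for r in rows if r["w"] == w and r["x"] == 0]
    return mean(m1) - mean(m0)


def b_path(rows):
    """OLS slope of the outcome on the mediator, controlling for provider."""
    xs = [r["x"] for r in rows]
    ms = [r["m"] for r in rows]
    ys = [r["y"] for r in rows]
    mx, mm, my = mean(xs), mean(ms), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    smm = sum((m - mm) ** 2 for m in ms)
    sxm = sum((x - mx) * (m - mm) for x, m in zip(xs, ms))
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    smy = sum((m - mm) * (y - my) for m, y in zip(ms, ys))
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def index_of_moderated_mediation(rows):
    """Indirect effect in control (w = 0) minus indirect effect under intervention (w = 1)."""
    return (a_path(rows, 0) - a_path(rows, 1)) * b_path(rows)


# Simulated data: the provider gap in understanding is large in control, small after intervention.
rng = random.Random(0)
data = []
for x in (0, 1):
    for w in (0, 1):
        provider_gap = 1.5 if w == 0 else 0.2
        for _ in range(100):
            m = 4.0 + provider_gap * x + rng.gauss(0, 0.5)
            y = 3.0 + 0.5 * m + rng.gauss(0, 0.5)
            data.append({"x": x, "w": w, "m": m, "y": y})

point_estimate = index_of_moderated_mediation(data)

# Percentile bootstrap: resample participants with replacement and re-estimate.
B = 1000
boot = sorted(
    index_of_moderated_mediation(rng.choices(data, k=len(data))) for _ in range(B)
)
ci_low, ci_high = boot[int(0.025 * B)], boot[int(0.975 * B) - 1]
print(point_estimate, (ci_low, ci_high))
```

A confidence interval for the difference in indirect effects that excludes zero corresponds to the pattern described in the text, in which the indirect effect is stronger in the control condition than in the intervention condition.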

To rule out that the effects of the interventions in studies 3A,B were due to differences in objective understanding between the control and intervention conditions, we ran an additional 3 (intervention: control, study 3A, study 3B) × 2 (understanding: subjective, objective) between-subject pre-registered experiment with 601 participants on Amazon Mechanical Turk. It compared both subjective and objective understanding between control conditions and two conditions that each tested one of the interventions. Results from this experiment show that, relative to a control condition, the interventions used in studies 3A and 3B significantly increased subjective understanding (respectively, β = 0.67, t = 4.90, P < 0.001; β = 0.82, t = 6.05, P < 0.001) but did not influence objective understanding (respectively, P = 0.78 and P = 0.89; all interactions, ts > 3.60, ps < 0.001). For detailed study descriptions and results, see Supplementary Appendix 5. We acknowledge that the results of this ancillary study may have been influenced by the sensitivity of our measure of objective understanding. Measures with a wider range of easy and hard questions might identify more subtle changes in objective understanding resulting from these or other interventions, and identify complementary effects of changes in objective understanding on utilization of medical AI.

Study 4

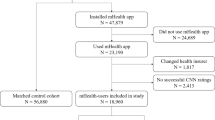

In study 4, using Google Ads, we tested whether subjective knowledge-based interventions would effectively reduce algorithm aversion in healthcare utilization in an online field setting. Following Winterich et al.29, we published sponsored search advertisements for an algorithm-based skin cancer detection application (Fig. 5). Whenever a user typed a prespecified search keyword (for example, “skin cancer picture”) they might see our sponsored search advertisement near or above the organic results on Google (see Supplementary Appendix 6 for details).

We ran our ad campaign for five days, from 15 to 19 November 2020. We specified a daily budget of 60 euros; on each day our advertisements stopped when the daily budget was reached. The advertisements generated 14,013 impressions (that is, the number of times the ad was viewed) and 698 clicks. Impressions were distributed across the following demographics: age: 18–24 years: 6.9%, 25–34 years: 5.7%, 35–44 years: 7.3%, 45–54 years: 15.1%, 55–64 years: 35.6%, 65+ years: 29.4%; gender: 78.8% female.

All participants were served one of two ads for a skin cancer detection application through Google Ads (Fig. 5). We manipulated whether the sponsored ad did or did not explain the process by which the algorithm triaged moles and tested the impact of that intervention on ad click-through rates. Subjective understanding was manipulated through these descriptions of the application, as pretested in a study reported in Supplementary Appendix 7. In the intervention condition, the advertisement read “Our algorithm checks how similar skin moles are in shape/size/color to cancerous moles.” In the control condition, the advertisement read “Our algorithm checks if skin moles are cancerous moles.” Clicking on the advertisement took participants to the Google Play Store page of SkinVision, an algorithmic skin cancer detection app (Supplementary Appendices 6 and 8).

The frequency of appearance of the different ads was not random, because the Google Ads platform serves ads according to the goal of the campaign (maximizing clicks, in our case). Hence, we cannot use the actual number of clicks as a dependent variable. Instead, we followed prior research29 and used the average percentage of clicks per impression or click-through rate. A logistic regression revealed that the click-through rate was higher in the intervention advertisement (6.36%) compared with the control advertisement (3.29%, β = 0.69, z = 8.17, P < 0.001, d = 0.38).
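
For a logistic regression with a single binary predictor, the coefficient equals the difference in log-odds between the two conditions, so the reported β can be checked directly from the two click-through rates. The sketch below does this and also converts the log odds ratio to Cohen's d with the common logit approximation d = ln(OR) × √3/π. That the authors used this particular conversion is an assumption; it is shown because it reproduces the reported value.

```python
from math import log, pi, sqrt

# Click-through rates reported in the text.
ctr_intervention = 0.0636
ctr_control = 0.0329


def log_odds(p: float) -> float:
    """Log-odds (logit) of a proportion."""
    return log(p / (1 - p))


# With one binary predictor, the logistic regression slope is the log odds ratio.
beta = log_odds(ctr_intervention) - log_odds(ctr_control)

# Logit-based approximation of Cohen's d (assumed conversion, see lead-in).
d = beta * sqrt(3) / pi

print(round(beta, 2), round(d, 2))
```

Working with rates rather than raw click counts is what allows the comparison even though the platform did not serve the two ads equally often.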

The results illustrate the efficacy of advertising interventions that enhance subjective knowledge in field settings. Because the assignment of users to ads was not random, however, ad optimization may have generated unintended variance in the set of users exposed to each ad30. Given the replications in studies 3A,B, which featured random assignment, we believe it is unlikely that the results from study 4 can be solely attributed to ad optimization. We invite future research to further replicate this result by using different methodologies and sampling procedures.

Discussion

Utilization of algorithmic-based healthcare services is becoming critical with the rise of telehealth services31, the current surge in healthcare demand2,3,4 and long-term goals of providing affordable and high-quality healthcare32 in developed and developing nations9. Our results yield practical insights for reducing reluctance to utilize medical AI. Because the technologies used in algorithmic-based medical applications are complex, providers tend to present AI provider decisions as a ‘black box’. Our results underscore the importance of recent policy recommendations to open this black box to patients and users16,33. A simple one-page visual or sentence that explains the criteria or process used to make medical decisions increased acceptance of an algorithm-based skin cancer diagnostic tool, which could be easily adapted to other domains and procedures.

Given the complexity of the process by which medical AI makes decisions, firms now tend to emphasize the outcomes that algorithms produce in their marketing to consumers, which feature benefits such as accuracy, convenience and rapidity (performance), while providing few details about how algorithms work (process). Indeed, in an ancillary study examining the marketing of skin cancer smartphone applications (Supplementary Appendix 8), we find that performance-related keywords were used to describe 57–64% of the applications, whereas process-related keywords were used to describe 21% of the applications. Improving subjective understanding of how medical AI works may then yield useful insights not only for increasing consumer adoption but also for firms seeking to improve their positioning. Indeed, we find increased advertising efficacy for SkinVision, a skin cancer detection app, when advertising included language explaining how it works.

More broadly, our findings make theoretical contributions to the literatures on algorithm aversion and human understanding of causal systems. The literature on algorithm aversion finds that people are generally averse to using algorithms for tasks that are usually done by humans34,35, on the grounds that algorithms are perceived as unable to learn and improve from their mistakes36, unsuitable for subjective or experiential tasks37,38, unable to adapt to unique or mutable circumstances10,39 and unable to carry responsibility for negative outcomes11. Our results illustrate the importance of understanding the causal process relating their inputs (for example, medical data) to their outputs (for example, diagnoses). We also identify interventions to reduce algorithm aversion in a domain where algorithms are already in widespread use1. We invite future research on medical AI adoption with alternative and complementary interventions of health literacy40 or digital literacy41. Finally, people do exhibit an illusion of explanatory depth of causal systems21,22,23,42 in many domains, claiming to better understand a variety of ideas, technologies and biological systems than they objectively do. Our results suggest an egocentric bias in this illusion of understanding, that the illusion may loom largest in assessments of people—the causal systems most similar to ourselves.

Methods

The present research involved no more than minimal risks, and all study participants were 18 years of age or older. Studies 1–3B were approved for use with human participants by the Institutional Review Board on the Charles River Campus at Boston University (protocol 3632E); informed consent was obtained for all participants. Study 4 used data collected from the Google Ads platform, which is aggregated and fully anonymous at the individual level. It is impossible to identify, interact with and obtain consent from individual participants. This study was approved by the Erasmus University Erasmus Research Institute of Management Internal Review Board.

All manipulations and measures are reported. Pre-registrations, raw data and Stata syntax files are available on the Open Science Framework at https://osf.io/taqp8/.

In studies 1–3B, we recruited participants from the online sample recruiting platforms Lucid and Amazon Mechanical Turk. Lucid provides a nationally representative sample with respect to age, gender, ethnicity and geography43. Amazon Mechanical Turk is an online sample recruiting platform that, although not nationally representative, yields high data quality through the use of attention checks44 and worker reputation scores45. Following prior research, we selected participants with an approval rating above 95% on Amazon Mechanical Turk45. Studies 1–3B were conducted on the Qualtrics survey platform. Condition assignments were random in all our studies, with randomization administered by Qualtrics software.

Following a general rule of thumb used in recent research46, we sought to obtain a minimum of 100 participants per cell. As a result, in studies 2A,B, we decided to target a sample of 100 per cell. In study 1 we decided to target a larger sample of 150 per cell to have sufficient power to detect an interaction in a 2 × 2 mixed design (one factor was manipulated between subject while the other was repeated within subjects). In studies 3A,B, we decided to target a sample of 200 per cell to have sufficient power to detect moderated mediation. With a small effect size of Cohen’s f = 0.20, a significance level of 0.05 and n = 100 in two between-subject conditions, the power to detect a significant effect was 80.36%. With sample sizes of n = 150 and n = 200, the power was 93.23% and 97.88%, respectively.
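
The reported power values can be approximated with a short calculation. The sketch below is a rough check, not the authors' computation: it assumes a test with one numerator degree of freedom, a total sample of twice the per-cell n, and a normal approximation in place of the noncentral F distribution, so it is expected to differ from the reported figures by a fraction of a percentage point.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(f: float, total_n: int, alpha: float = 0.05) -> float:
    """Normal approximation to the power of a two-sided test with 1 numerator df."""
    z = NormalDist()
    crit = z.inv_cdf(1 - alpha / 2)
    # Square root of the noncentrality parameter lambda = f^2 * N.
    root_lambda = f * sqrt(total_n)
    return z.cdf(root_lambda - crit) + z.cdf(-root_lambda - crit)


# Per-cell sample sizes from the text, in two between-subject conditions.
powers = {n: approx_power(0.20, 2 * n) for n in (100, 150, 200)}

for n, p in powers.items():
    print(f"n = {n} per cell: approximate power = {p:.3f}")
```

The approximation runs slightly above the exact values because it ignores the uncertainty in estimating the error variance, which matters less as the sample grows.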

In studies 1, 2A and 3A,B, as pre-registered, we programmed our surveys to automatically exclude participants who failed an attention check at the very beginning of the survey and prior to any manipulation. We have no data for these participants, and because these responses are recorded as incomplete, they did not affect our target sample size. Participants answered the following attention check question: “There is an artist named Frank that paints miniature figures. He usually buys these figures from a company, but the company has gone out of business. After this, Frank decides to hand-carve his own figures. However, Frank’s friend tells him that these new figures are significantly worse in quality. Did Frank’s friend think that Frank’s hand-carved figures were better than the company’s?” with answers of yes, no, maybe, cheese plates and movies (correct answer is “no”). We screened out N = 211 participants in study 1, N = 255 participants in study 2A, N = 260 participants in study 3A and N = 378 participants in study 3B.

In studies 1, 2A and 3A,B, the stimuli included an image of either a human provider or an algorithm: the first result in Google Images for the search term “Doctor” or “Artificial Intelligence” that did not include words or a face (see https://osf.io/taqp8/). Omitting these introductory images in study 2B yielded the same pattern of results.

Based on prior research on resistance to AI in the medical domain10, participants also reported three control variables related to skin cancer in studies 1–3B: their perceived susceptibility to skin cancer (“Relative to an average person of your same age and gender, to what extent do you consider yourself to be at risk of skin cancer (melanoma)?” with 1 for much lower and 5 for much higher), their self-examination frequency (“Skin self-examination is the careful and deliberate checking for changes in spots or moles on all areas of your skin, including those areas rarely exposed to the sun. How often do you practice skin self-examination?” with 1 for never and 5 for weekly) and their perceived self-efficacy (“In general, to what extent do you feel that you are confident in your ability to conduct skin self-examination?” with 1 for not at all confident and 5 for extremely confident).

In studies 1–3B, we also collected demographic variables at the end of the experiment (gender, age and employment by/as a healthcare provider) and a manipulation check question (“In the scenario you read, the data […] was analyzed by?” with answers of a doctor or an algorithm). Additional details about the manipulation check, and analyses including the control variables, are described in Supplementary Appendix 3. Effect sizes associated with main hypotheses are detailed in Supplementary Appendix 9.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

Data from all of the studies reported in this paper are publicly available at https://osf.io/taqp8/.

Code availability

Analyses were conducted with Stata 16.1, and code from all studies is publicly available at https://osf.io/taqp8/.

References

Topol, E. J. High-performance medicine: the convergence of human and artificial intelligence. Nat. Med. 25, 44–56 (2019).

Wosik, J. et al. Telehealth transformation: COVID-19 and the rise of virtual care. J. Am. Med. Inform. Assoc. 27, 957–962 (2020).

Hollander, J. E. & Carr, B. G. Virtually perfect? Telemedicine for COVID-19. N. Engl. J. Med. 382, 1679–1681 (2020).

Keesara, S., Jonas, A. & Schulman, K. Covid-19 and health care’s digital revolution. N. Engl. J. Med. 382, e82 (2020).

Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542, 115–118 (2017).

Laranjo, L. et al. Conversational agents in healthcare: a systematic review. J. Am. Med. Inform. Assoc. 25, 1248–1258 (2018).

Goto, T., Camargo, C. A. Jr, Faridi, M. K., Freishtat, R. J. & Hasegawa, K. Machine learning-based prediction of clinical outcomes for children during emergency department triage. JAMA Netw. Open 2, e186937 (2019).

Hao, K. Doctors are using AI to triage covid-19 patients. The tools may be here to stay. MIT Technology Review (23 April 2020).

Guo, J. & Li, B. The application of medical artificial intelligence technology in rural areas of developing countries. Health Equity 2, 174–181 (2018).

Longoni, C., Bonezzi, A. & Morewedge, C. K. Resistance to medical artificial intelligence. J. Cons. Res. 46, 629–650 (2019).

Promberger, M. & Baron, J. Do patients trust computers? J. Behav. Decis. Mak. 19, 455–468 (2006).

Eastwood, J., Snook, B. & Luther, K. What people want from their professionals: attitudes toward decision‐making strategies. J. Behav. Decis. Mak. 25, 458–468 (2012).

Price, W. N. Big data and black-box medical algorithms. Sci. Transl. Med. 10, eaao5333 (2018).

Burrell, J. How the machine ‘thinks’: understanding opacity in machine learning algorithms. Big Data Soc. 3, 2053951715622512 (2016).

Castelvecchi, D. Can we open the black box of AI? Nature 538, 20–23 (2016).

Kroll, J. A. et al. Accountable algorithms. Univ. Pa. Law Rev. 165, 633 (2016).

Nisbett, R. E. & Wilson, T. D. Telling more than we can know: verbal reports on mental processes. Psychol. Rev. 84, 231–259 (1977).

Kahneman, D. Maps of bounded rationality: psychology for behavioral economics. Am. Econ. Rev. 93, 1449–1475 (2003).

Morewedge, C. K. & Kahneman, D. Associative processes in intuitive judgment. Trends Cogn. Sci. 14, 435–440 (2010).

Pronin, E. & Kugler, M. B. Valuing thoughts, ignoring behavior: the introspection illusion as a source of the bias blind spot. J. Exp. Soc. Psychol. 43, 565–578 (2007).

Fernbach, P. M., Sloman, S. A., Louis, R. S. & Shube, J. N. Explanation fiends and foes: how mechanistic detail determines understanding and preference. J. Cons. Res. 39, 1115–1131 (2013).

Fernbach, P. M., Rogers, T., Fox, C. R. & Sloman, S. A. Political extremism is supported by an illusion of understanding. Psychol. Sci. 24, 939–946 (2013).

Rozenblit, L. & Keil, F. The misunderstood limits of folk science: an illusion of explanatory depth. Cogn. Sci. 26, 521–562 (2002).

Stolz, W. ABCD rule of dermatoscopy: a new practical method for early recognition of malignant melanoma. Eur. J. Dermatol. 4, 521–527 (1994).

Rogers, T. et al. A clinical aid for detecting skin cancer: the triage amalgamated dermoscopic algorithm (TADA). J. Am. Board Fam. Med. 29, 694–701 (2016).

Robinson, J. K. et al. A randomized trial on the efficacy of mastery learning for primary care provider melanoma opportunistic screening skills and practice. J. Gen. Intern. Med. 33, 855–862 (2018).

Hayes, A. F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach (Guilford Press, 2013).

Bullock, J. G., Green, D. P. & Ha, S. E. Yes, but what’s the mechanism? (don’t expect an easy answer). J. Pers. Soc. Psychol. 98, 550 (2010).

Winterich, K. P., Nenkov, G. Y. & Gonzales, G. E. Knowing what it makes: how product transformation salience increases recycling. J. Mark. 83, 21–37 (2019).

Eckles, D., Gordon, B. R. & Johnson, G. A. Field studies of psychologically targeted ads face threats to internal validity. Proc. Natl Acad. Sci. USA 115, E5254–E5255 (2018).

Tuckson, R. V., Edmunds, M. & Hodgkins, M. L. Telehealth. N. Engl. J. Med. 377, 1585–1592 (2017).

Reinhard, S. C., Kassner, E. & Houser, A. How the Affordable Care Act can help move states toward a high-performing system of long-term services and supports. Health Aff. 30, 447–453 (2011).

Watson, D. S. et al. Clinical applications of machine learning algorithms: beyond the black box. BMJ 364, l886 (2019).

Dawes, R., Faust, D. & Meehl, P. Clinical versus actuarial judgment. Science 243, 1668–1674 (1989).

Yeomans, M., Shah, A., Mullainathan, S. & Kleinberg, J. Making sense of recommendations. J. Behav. Decis. Mak. 32, 403–414 (2019).

Dietvorst, B. J., Simmons, J. P. & Massey, C. Algorithm aversion: people erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 144, 114 (2015).

Castelo, N., Bos, M. W. & Lehmann, D. R. Task-dependent algorithm aversion. J. Mark. Res. 56, 809–825 (2019).

Longoni, C. & Cian, L. Artificial intelligence in utilitarian vs. hedonic contexts: the “word-of-machine” effect. J. Mark. https://doi.org/10.1177/0022242920957347 (2020).

Dietvorst, B. J. & Bharti, S. People reject algorithms in uncertain decision domains because they have diminishing sensitivity to forecasting error. Psychol. Sci. 31, 1302–1314 (2020).

Ayre, J., Bonner, C., Cvejic, E. & McCaffery, K. Randomized trial of planning tools to reduce unhealthy snacking: implications for health literacy. PLoS ONE 14, e0209863 (2019).

Neter, E. & Brainin, E. eHealth literacy: extending the digital divide to the realm of health information. J. Med. Internet Res. 14, e19 (2012).

Alter, A. L., Oppenheimer, D. M. & Zemla, J. C. Missing the trees for the forest: a construal level account of the illusion of explanatory depth. J. Pers. Soc. Psychol. 99, 436 (2010).

Pennycook, G. & Rand, D. G. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proc. Natl Acad. Sci. USA 116, 2521–2526 (2019).

Paolacci, G., Chandler, J. & Ipeirotis, P. G. Running experiments on Amazon Mechanical Turk. Judgm. Decis. Mak. 5, 411–419 (2010).

Peer, E., Vosgerau, J. & Acquisti, A. Reputation as a sufficient condition for data quality on Amazon Mechanical Turk. Behav. Res. Methods 46, 1023–1031 (2014).

Hofstetter, R., Rüppell, R. & John, L. K. Temporary sharing prompts unrestrained disclosures that leave lasting negative impressions. Proc. Natl Acad. Sci. USA 114, 11902–11907 (2017).

Acknowledgements

Before joining Rotterdam School of Management, R.C. received funding from the Susilo Institute for Ethics in the Global Economy, Questrom School of Business, Boston University. R.C. thanks the Erasmus Research Institute of Management for providing funding for data collection. The authors thank T. Sangers, M. Wakkee, A. Udrea and SkinVision for their feedback on human and algorithmic decision processes.

Author information

Contributions

R.C., C.L. and C.K.M. designed research; R.C. and C.L. performed research; R.C. analysed data; R.C., C.L. and C.K.M. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Human Behaviour thanks Fiona Hamilton and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary appendices 1–8.

About this article

Cite this article

Cadario, R., Longoni, C. & Morewedge, C.K. Understanding, explaining, and utilizing medical artificial intelligence. Nat Hum Behav 5, 1636–1642 (2021). https://doi.org/10.1038/s41562-021-01146-0

DOI: https://doi.org/10.1038/s41562-021-01146-0
