Abstract

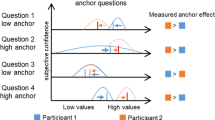

Behavioural researchers often seek to experimentally manipulate, measure and analyse latent psychological attributes, such as memory, confidence or attention. The best measurement strategy is often difficult to intuit. Classical psychometric theory, mostly focused on individual differences in stable attributes, offers little guidance. Hence, measurement methods in experimental research are often based on tradition and differ between communities. Here we propose a criterion, which we term ‘retrodictive validity’, that provides a relative numerical estimate of the accuracy of any given measurement approach. It is determined by performing calibration experiments to manipulate a latent attribute and assessing the correlation between intended and measured attribute values. Our approach facilitates optimising measurement strategies and quantifying uncertainty in the measurement. Thus, it allows power analyses to define minimally required sample sizes. Taken together, our approach provides a metrological perspective on measurement practice in experimental research that complements classical psychometrics.
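The two quantitative ideas in the abstract can be illustrated with a minimal Python sketch. It computes retrodictive validity as the correlation between intended and measured attribute values in a simulated calibration experiment. It then shows how lower validity would inflate the sample size that a power analysis demands.

Everything specific here is an illustrative assumption and not the authors' derivation: the simulated design (four intended levels, additive Gaussian noise), the hypothetical helper names `retrodictive_validity` and `required_n`, and the attenuation rule "observable correlation = true correlation × ω".

```python
import math
import random
from statistics import NormalDist


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def retrodictive_validity(intended, measured):
    """Hypothetical helper: correlation of intended (experimentally set) and measured values."""
    return pearson_r(intended, measured)


def required_n(true_r, omega, alpha=0.05, power=0.8):
    """Hypothetical helper: approximate N to detect true_r with a measure of validity omega.

    ASSUMPTION (illustrative): measurement error is additive and independent
    of the predictor, so the observable correlation shrinks to true_r * omega.
    N then follows the Fisher z approximation for a two-sided test of r = 0.
    """
    observed_r = true_r * omega
    if not 0 < abs(observed_r) < 1:
        raise ValueError("observed correlation must lie strictly between 0 and 1 in magnitude")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(abs(observed_r))) ** 2 + 3)


# Hypothetical calibration experiment: four intended attribute levels,
# 50 observations each, read out by two measurement methods of differing noise.
rng = random.Random(1)
intended = [level for level in (0, 1, 2, 3) for _ in range(50)]
method_a = [x + rng.gauss(0, 1.0) for x in intended]  # low-noise method
method_b = [x + rng.gauss(0, 3.0) for x in intended]  # high-noise method

omega_a = retrodictive_validity(intended, method_a)
omega_b = retrodictive_validity(intended, method_b)
print(f"omega A = {omega_a:.2f}, omega B = {omega_b:.2f}")

# Sample size needed to detect a true correlation of 0.3 with each method.
for name, omega in (("A", omega_a), ("B", omega_b), ("perfect", 1.0)):
    print(name, required_n(0.3, omega))
```

Under these assumptions the noisier method B yields the lower validity estimate. For small correlations N − 3 scales roughly with 1/(ρω)², so halving ω roughly quadruples the sample size required.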




Acknowledgements

D.R.B. is supported by funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant agreement No. ERC-2018 CoG-816564 ActionContraThreat). S.M.F. is supported by a Sir Henry Dale Fellowship from the Wellcome Trust and Royal Society (206648/Z/17/Z). The Wellcome Centre for Human Neuroimaging is funded by core funding from the Wellcome Trust (203147/Z/16/Z). The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information


Contributions

D.R.B., F.M., S.M.F. and M.C.V. contributed to the conception of the work. D.R.B. wrote the mathematical derivation, to which F.M. and M.C.V. contributed. D.R.B., F.M., S.M.F. and M.C.V. wrote and revised the paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: the primary handling editor was Jamie Horder.


Supplementary information


Supplementary Methods, Supplementary Results, Supplementary Discussion, Supplementary Fig. 1 and Supplementary References.


About this article

Cite this article

Bach, D.R., Melinščak, F., Fleming, S.M. et al. Calibrating the experimental measurement of psychological attributes. Nat Hum Behav 4, 1229–1235 (2020). https://doi.org/10.1038/s41562-020-00976-8


DOI: https://doi.org/10.1038/s41562-020-00976-8
