Abstract

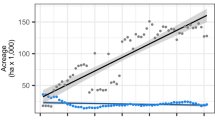

Many scientific disciplines are currently experiencing a 'reproducibility crisis' because numerous scientific findings cannot be repeated consistently. A novel but controversial hypothesis postulates that stringent levels of environmental and biotic standardization in experimental studies reduce reproducibility by amplifying the impacts of laboratory-specific environmental factors not accounted for in study designs. A corollary to this hypothesis is that a deliberate introduction of controlled systematic variability (CSV) in experimental designs may lead to increased reproducibility. To test this hypothesis, we had 14 European laboratories run a simple microcosm experiment using grass (Brachypodium distachyon L.) monocultures and grass and legume (Medicago truncatula Gaertn.) mixtures. Each laboratory introduced environmental and genotypic CSV within and among replicated microcosms established in either growth chambers (with stringent control of environmental conditions) or glasshouses (with more variable environmental conditions). The introduction of genotypic CSV led to 18% lower among-laboratory variability in growth chambers, indicating increased reproducibility, but had no significant effect in glasshouses where reproducibility was generally lower. Environmental CSV had little effect on reproducibility. Although there are multiple causes for the 'reproducibility crisis', deliberately including genetic variability may be a simple solution for increasing the reproducibility of ecological studies performed under stringently controlled environmental conditions.
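
As an illustration of what a change in among-laboratory variability means in practice, the following minimal sketch compares the spread of laboratory means between a standardized design and a design with CSV. It assumes that among-laboratory variability is summarized as the standard deviation of per-laboratory means, which may not match the exact metric used in the study. The function names, laboratory labels and biomass values are hypothetical and are not data from the experiment.

```python
from statistics import mean, stdev


def among_lab_sd(measurements_by_lab):
    """Standard deviation of per-laboratory means (assumed variability metric)."""
    lab_means = [mean(values) for values in measurements_by_lab.values()]
    return stdev(lab_means)


def percent_change(baseline, treatment):
    """Relative change of `treatment` with respect to `baseline`, in percent."""
    return 100.0 * (treatment - baseline) / baseline


# Hypothetical response values per laboratory (illustrative only, not study data).
standardized = {"lab_a": [1.0, 1.2], "lab_b": [2.0, 2.2], "lab_c": [3.0, 3.2]}
with_csv = {"lab_a": [1.5, 1.7], "lab_b": [2.0, 2.2], "lab_c": [2.5, 2.7]}

sd_standardized = among_lab_sd(standardized)
sd_with_csv = among_lab_sd(with_csv)
change = percent_change(sd_standardized, sd_with_csv)

print(f"Among-lab SD, standardized design: {sd_standardized:.2f}")
print(f"Among-lab SD, design with CSV:     {sd_with_csv:.2f}")
print(f"Change in among-lab variability:   {change:.0f}%")
```

A negative change indicates that laboratory means lie closer together under CSV, which is the sense in which lower among-laboratory variability is read as increased reproducibility.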

Acknowledgements

This study benefited from the Centre National de la Recherche Scientifique human and technical resources allocated to the Ecotrons research infrastructures, the state allocation ‘Investissement d’Avenir’ ANR-11-INBS-0001 and financial support from the ExpeER (grant 262060) consortium funded under the EU-FP7 research programme (FP2007–2013). Brachypodium seeds were provided by R. Sibout (Observatoire du Végétal, Institut Jean-Pierre Bourgin) and Medicago seeds were supplied by J.-M. Prosperi (Institut National de la Recherche Agronomique Biological Resource Centre). We further thank J. Varale, G. Hoffmann, P. Werthenbach, O. Ravel, C. Piel, D. Landais, D. Degueldre, T. Mathieu, P. Aury, N. Barthès, B. Buatois and R. Leclerc for assistance during the study. For additional acknowledgements, see the Supplementary Information.

Author information

Contributions

A.M. and J.R. designed the study with input from M. Blouin, S.B., M. Bonkowski and J.-C.L. Substantial methodological contributions were provided by S.S., T.G., L.R. and M.S.-L. Conceptual feedback on an early version was provided by G.T.F., N.E., J.R. and A.M.E. Data were analysed by A.M. with input from A.M.E. A.M. wrote the manuscript with input from all authors. All authors were involved in carrying out the experiments and/or analyses.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Tables 1–3; Supplementary Figures 1–5; Model outputs; Supplementary Acknowledgements.

About this article

Cite this article

Milcu, A., Puga-Freitas, R., Ellison, A.M. et al. Genotypic variability enhances the reproducibility of an ecological study. Nat Ecol Evol 2, 279–287 (2018). https://doi.org/10.1038/s41559-017-0434-x

DOI: https://doi.org/10.1038/s41559-017-0434-x
