Abstract

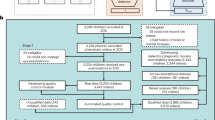

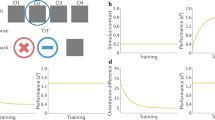

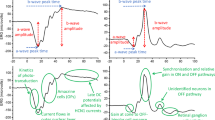

Sensory loss is associated with behavioural changes, but how behavioural dynamics change when a sensory modality is impaired remains unclear. Here, we recorded, under a designed standardized scenario, the behavioural phenotypes of 4,196 infants who experienced varying degrees of visual loss but retained high behavioural plasticity. Using these recordings, we show that behaviours with significantly higher occurrence in visually impaired infants can be identified, and that correlations between the frequency of specific behavioural patterns and visual-impairment severity, as well as variations in behavioural dynamics with age, can be quantified. We also show that a deep-learning algorithm (a temporal segment network) trained with the full-length videos can discriminate, for an independent dataset from 400 infants, mild visual impairment from healthy behaviour (area under the curve (AUC) of 85.2%), severe visual impairment from mild impairment (AUC of 81.9%), and various ophthalmological conditions from healthy vision (with AUCs ranging from 81.6% to 93.0%). The video dataset of behavioural phenotypes in response to visual loss and the trained machine-learning algorithm should help the study of visual function and behavioural plasticity in infants.

Data availability

The authors declare that the main data supporting the results in this study are available within the paper and its Supplementary Information. The datasets generated during the study and representative videos for each behaviour are available for research purposes from the corresponding authors on reasonable request. The raw videos are not publicly available due to restrictions of portrait rights and patient privacy.

Code availability

We used the temporal segment network framework (https://github.com/yjxiong/temporal-segment-networks). Our experimental protocol made use of proprietary libraries, so we are unable to publicly release this code. However, the experiments and their implementation are described in detail in the Methods and Supplementary Information.
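
Because the study code is not released, the following is only a minimal sketch of the general idea behind a temporal segment network: a video is split into equal-length segments, one snippet is sampled from each, and the per-snippet class scores are averaged into a video-level prediction. It is not the authors' implementation. The names `sample_segment_indices`, `segmental_consensus`, `predict_video` and `score_snippet` are hypothetical, the snippet scorer stands in for a trained network, and the choice of three segments with average consensus is an assumption rather than a setting reported here.

```python
import random
from typing import Callable, List, Sequence


def sample_segment_indices(num_frames: int, num_segments: int,
                           rng: random.Random) -> List[int]:
    """Pick one frame index at random from each of `num_segments` equal parts."""
    if num_segments < 1 or num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    indices = []
    for k in range(num_segments):
        # Integer arithmetic keeps segment boundaries exact and non-empty.
        start = k * num_frames // num_segments
        end = (k + 1) * num_frames // num_segments
        indices.append(rng.randrange(start, end))
    return indices


def segmental_consensus(snippet_scores: Sequence[Sequence[float]]) -> List[float]:
    """Average per-snippet class scores into one video-level score vector."""
    n = len(snippet_scores)
    num_classes = len(snippet_scores[0])
    return [sum(s[c] for s in snippet_scores) / n for c in range(num_classes)]


def predict_video(frames: Sequence,
                  score_snippet: Callable[[object], Sequence[float]],
                  num_segments: int = 3,  # assumed value, for illustration only
                  seed: int = 0) -> List[float]:
    """Score one sparsely sampled snippet per segment, then average the scores."""
    rng = random.Random(seed)
    indices = sample_segment_indices(len(frames), num_segments, rng)
    return segmental_consensus([score_snippet(frames[i]) for i in indices])
```

In this sketch the cost of a prediction depends on the number of segments rather than on the video length, which is what makes sparse sampling attractive for long recordings.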

Acknowledgements

We thank the National Supercomputer Center in Guangzhou for computing resources support. This study was funded by the National Key R&D Program of China (2018YFC0116500), the Key Research Plan for the National Natural Science Foundation of China in Cultivation Project (91846109), the Natural Science Foundation of China (81822010), Guangdong Science and Technology Innovation Leading Talents (2017TX04R031), and the Pearl River Scholar Program (2016).

Author information

Contributions

H.L., E.L. and Z.Liu designed the research; H.L., X.L., Z.Lin, Z.Liu, J.L., J.C., X.W., W.L., E.L., H.C., R.L., Y.Y., W.C. and Y.L. collected the data; Y.X., A.X., X.H., Y.Zhang, Z.Z., X.D. and E.L. conducted the study; E.L. and J.H. analysed the data; H.L. and E.L. co-wrote the manuscript; Z.Liu, Y.Zhu and C.C. critically revised the manuscript; W.Z. did the technical review; and all authors discussed the results and provided comments regarding the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary methods, figures, tables and references.

About this article

Cite this article

Long, E., Liu, Z., Xiang, Y. et al. Discrimination of the behavioural dynamics of visually impaired infants via deep learning. Nat Biomed Eng 3, 860–869 (2019). https://doi.org/10.1038/s41551-019-0461-9
